Autism self-assessment exercise 2018: introduction

Updated 20 June 2019

1. Autism strategy

The government has announced that it intends to review the 2014 Autism Strategy in 2019.

The 2009 Autism Act commits the Government to having a strategy for meeting the needs of adults in England with autistic spectrum conditions by ensuring that the local authority and NHS services they need are accessible for them.

The Government is required to produce guidance for local authorities and local health services on implementing the strategy and they are expected to keep both the strategy and the guidance up to date.

As part of the process of updating the guidance the Autism Act says that the government must take account of progress in meeting the strategy aims. The current strategy aims were set out in Think Autism in the form of 15 priority challenges reproduced at [Annex 1].

The Autism Self-Assessment is one of the mechanisms the government has for identifying what progress has been made. The Self-Assessment is a periodic survey of upper tier local authorities, the bodies responsible for co-ordinating local action on the strategy, asking about progress on a wide range of local health, social services and wider issues.

The 2018 exercise reported here is the fifth. The first, baseline self-assessment was done in 2011. Update self-assessments were done in 2013, in 2014 (shortly after the publication of ‘Think Autism’) and in 2016.

2. Details of the survey

The principal areas covered by the Self-Assessment are:

- local context and senior leadership

- planning

- training

- diagnosis

- care and support

- housing and accommodation

- employment

- criminal justice system

- local innovations

- completion of the survey

Excluding respondent details, and the details of agency involvement and sign-off in the completion section, the 2018 self-assessment comprised 98 principal questions. These are set out in full in Annex 2. Most asked for structured answers in a number of formats.

These included:

- ratings of red, amber or green (31 questions): these gave guidance about how local areas should measure their performance

- yes or no (29 questions): with 2 exceptions these were framed so that ‘Yes’ was the desirable response

- other options (3 questions)

- numbers (20 questions: 4 of which have been analysed as options questions as described below)

- date (1 question, analysed as an option question)

In addition to structured questions, 14 principal questions asked for a response in text format. These included names, web links and in some cases more detailed comments.

As well as questions specifically requesting free text answers, respondents could add free text comments alongside responses to the structured questions to fill out their response further. Where they had rated their performance as good, they were invited to say what had worked well. Where they had rated it as less satisfactory, they could describe the difficulties.

As far as possible, we avoided changing the questions from the 2016 self-assessment in order to give the clearest possible view of the progress made over the period. Eleven new questions were introduced and 19 modified, in most cases to a very limited extent. In several cases, the only modification was the addition of a reference to the paragraph in the statutory guidance to which the question related.

Table 1 shows the number of questions that were unchanged, modified or new. Where relevant, modifications are described in the results section.

Table 1. Numbers of questions by type and whether or not unchanged from the 2016 Self-Assessment

| Question type | Unchanged | Modified | New | Total |
|---|---|---|---|---|
| Green / Amber / Red | 25 | 5 | 1 | 31 |
| Yes / No | 18 | 5 | 6 | 29 |
| Other Option | 2 | 1 | 0 | 3 |
| Number | 13 | 5 | 2 | 20 |
| Comment | 9 | 3 | 2 | 14 |
| Date | 1 | 0 | 0 | 1 |
| Total | 68 | 19 | 11 | 98 |

Local authority autism lead officers led the process of collecting local responses. They were asked to work with a range of local partners:

- local authority Adult Social Services

- local authority Department of Children’s Services

- local education authority

- Health and Wellbeing Board

- local authority Public Health department

- clinical commissioning group

- primary healthcare providers

- secondary healthcare providers

- employment service

- business sector

- police

- probation service

- court service

- prisons located in the area

- local charitable, voluntary, self-advocacy or interest groups

- autistic adults

- informal carers, family, friends of autistic people

When the exercise was completed, respondents were asked to present the response to their local autism partnership board for discussion and sign-off. They were also asked to seek sign-off from their Director of Adult Social Services, Director of Public Health and the relevant CCG Chief Operating Officer.

The exercise was launched on 19 September with a letter to local authority directors of adult social services from Jonathan Marron, Director General for Community and Social Care at the Department of Health and Social Care and Glen Garrod, President of the Association of Directors of Adult Social Services.

This requested that responses should be completed by 10 December, just under 3 months later. In practice, submissions were accepted up to the end of December.

3. Presentation of the results

This website gives an overview of the results overall and for each section. More detailed results are available in an interactive spreadsheet/dashboard you can download using the link. This provides full details for all responding local authorities. In most cases, these are grouped in former Government Office Regions.

The results section starts with an initial overview of the response rate and the details of the extent to which the various stakeholder agencies participated in the consultation in different areas. This is followed by an initial overview of the pattern of movement in the responses from the 2016 to the 2018 self-assessment. After this, the results of each section are presented in outline detail.

For more extensive detail, and particularly for the patterns of responses by region or for individual local authorities, look at the interactive spreadsheet/dashboard. In both the tables on this website and the presentations in the interactive spreadsheet/dashboard, abbreviated versions of the questions are used. The full versions of both the questions and the rating criteria for green/amber/red questions are available or are in the individual questions worksheet of the interactive dashboard.

Slightly confusingly, there is a difference between the way proportions of responses are reported on this webpage and in the interactive dashboard.

This year, 141 out of the possible 152 upper tier authorities responded to the self-assessment. In 2016, 145 did. To allow simple narrative comparisons, on this webpage the numbers of local authorities assigning themselves particular ratings for particular questions have therefore been reported as percentages of responding authorities. We anticipate that the interactive spreadsheet/dashboard will be used by people wanting to look up details of individual places. For this reason, all 152 local authorities have been included there (although there are obviously blanks for non-responders) and percentages are of this total.
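The difference between the two reporting bases can be illustrated with a minimal sketch. The counts of 141 responders and 152 authorities come from the text above; the figure of 47 authorities rating themselves green is hypothetical and used only to show the arithmetic.

```python
RESPONDING_2018 = 141  # authorities that responded in 2018 (from the text)
ALL_AUTHORITIES = 152  # all upper tier authorities (from the text)


def percentage(count: int, denominator: int) -> float:
    """Return count as a percentage of denominator, rounded to 1 decimal place."""
    return round(100 * count / denominator, 1)


# Hypothetical example: 47 authorities rate themselves green on one question.
green_count = 47

webpage_pct = percentage(green_count, RESPONDING_2018)    # basis used on this webpage
dashboard_pct = percentage(green_count, ALL_AUTHORITIES)  # basis used in the dashboard

print(webpage_pct, dashboard_pct)
```

The same count therefore gives a slightly lower percentage in the dashboard than on this webpage, because non-responders are included in the dashboard denominator.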

The reporting of coded questions is relatively straightforward as respondents have a limited set of options in answering. Number questions raise more complex issues. We used 3 approaches to number questions. For 4 questions, the best approach was to report responses by collecting them into evidently common groups. These covered the number of:

- CCGs the local authority had to work with (question 4)

- whole-time equivalents (WTEs) autism leads and commissioners had allocated to their autism tasks (questions 11 and 13)

- people sent out-of-area for diagnostic assessments (question 62)

Results for these are shown as for other grouped response questions. One of the other number questions (average waiting time for a diagnostic assessment - question 63) was reasonably comparable between authorities irrespective of their size and geography. This was reported as a simple number.

The other numbers could not be compared between areas so directly because they were obviously partly dependent on the size of the local authorities’ populations. Examples are the number of people getting autism diagnoses and the numbers of autistic children in particular school years (questions 37 to 40).

We divided these numbers by a relevant local population measure (a ‘denominator’), such as the local authority’s total population or the population of children of the age for a specific school year. In a few cases we divided them by the response to another question. In most cases we applied data checking rules to these numbers and excluded zero responses or responses that seemed impossible. A list of the calculations and the data checking rules applied is in table 2. The charts in which these are displayed indicate the sum that is being shown.

Table 2. Number questions, choice of denominators and validation rules.

| Number | Question | Denominator | Validation rules |
|---|---|---|---|
| Q22 | How many autistic people are social care eligible? | Total population | Not < 1 |
| Q23 | How many autistic people with LD are social care eligible? | Q22 | Not < 1 AND <=Q22 |
| Q24 | How many autist peop with mental hlth probs soc care eligible? | Q22 | Not < 1 AND <=Q22 |
| Q37 | How many autistic children in school year 10? | Children of school year 10 age | Not < 1 |
| Q38 | How many autistic children in school year 11? | Children of school year 11 age | Not < 1 |
| Q39 | How many autistic children in school year 12? | Children of school year 12 age | Not < 1 |
| Q40 | How many autistic children in school year 13? | Children of school year 13 age | Not < 1 |
| Q41 | How many autistic people completed transition from school? | Children of school year 11 age | Not < 1 |
| Q51 | How many health/social care staff eligible for training? | Total population | Not < 1 |
| Q52 | How many health/social care staff up-to-date with training? | Q51 | Not < 1 AND <=Q51 |
| Q62 | How many were referred out of area for diagnosis last year? | Total population | Not < 1 |
| Q65 | How many people are waiting for diagnostic assessment? | Total population | Not < 1 |
| Q66 | How many people were diagnosed with autism last year? | Total population | Not < 1 |
| Q67 | Numbers: new diagnosees completed assessments and getting support? | Q66 | Not < 0 AND sum(Q67 to Q71) <=Q66 |
| Q68 | Numbers: new diagnosees completed assessments but awaiting support? | Q66 | Not < 0 AND sum(Q67 to Q71) <=Q66 |
| Q69 | Numbers: new diagnosees completed assessments don’t need support? | Q66 | Not < 0 AND sum(Q67 to Q71) <=Q66 |
| Q70 | Numbers: new diagnosees not yet completed all assessments? | Q66 | Not < 0 AND sum(Q67 to Q71) <=Q66 |
| Q71 | How many new diagnosees are not Care Act eligible? | Q66 | Not < 0 AND sum(Q67 to Q71) <=Q66 |
| Q83 | Number: autistic adults eligible for soc care have personal budget? | Total population | Not < 1 |
| Q84 | Number: autistic adults not LD eligible for soc care have personal budget? | Q83 | Not < 0 AND sum(Q84 to Q85) <=Q83 |
| Q85 | Number: autistic adults with LD eligible for soc care have personal budget? | Q83 | Not < 0 AND sum(Q84 to Q85) <=Q83 |
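The validation rules in table 2 can be sketched as follows. This is an illustration only, not the code used for the analysis: the function names and all response values are hypothetical, the rejection of a denominator answer that is itself below 1 is an assumption, and the use of a rate per 100,000 follows the way question 66 is presented in this report.

```python
from typing import Optional, Sequence


def rate_per_100k(count: Optional[float], population: float) -> Optional[float]:
    """Rate per 100,000 population, applying the 'Not < 1' rule."""
    if count is None or count < 1:
        return None  # blank, zero or impossible response is excluded
    return 100_000 * count / population


def share_of_parent(count: Optional[float], parent: Optional[float]) -> Optional[float]:
    """Proportion of another answer (for example Q23 / Q22).

    Applies 'Not < 1 AND <= parent'. Rejecting a parent below 1 is an
    assumption made for this sketch.
    """
    if count is None or parent is None or parent < 1:
        return None
    if count < 1 or count > parent:
        return None
    return count / parent


def breakdown_is_valid(parts: Sequence[float], total: float) -> bool:
    """Rule for Q67 to Q71: no part below 0 and the parts sum to at most Q66."""
    return all(p >= 0 for p in parts) and sum(parts) <= total


# Hypothetical responses from one authority with a population of 300,000.
print(rate_per_100k(120, 300_000))                   # Q66-style rate per 100,000
print(share_of_parent(45, 120))                      # Q23-style share of Q22
print(breakdown_is_valid([50, 20, 10, 30, 5], 120))  # Q67 to Q71 against Q66
```

Returning `None` for an excluded response keeps it distinct from a genuine zero, which matters when counting how many authorities supplied usable data.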

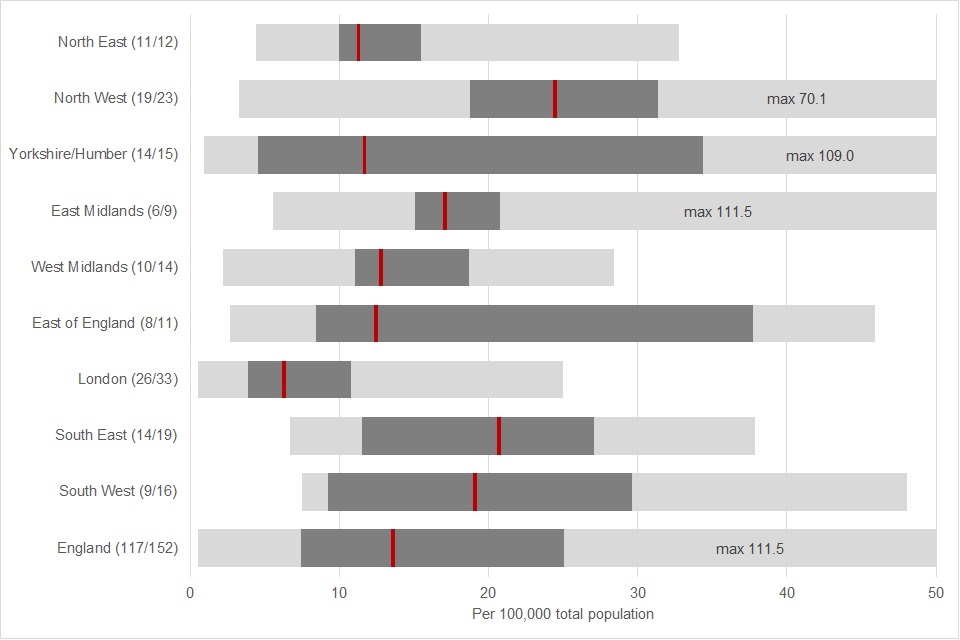

Apart from those translated into option format, the results from number questions are shown on ‘range charts’. Figure 1 shows an example of a range chart. This shows the range of responses to question 66: ‘In the year to the end of March 2018 how many people have received a diagnosis of an autistic spectrum condition?’.

Figure 1. Example chart. Rates per 100,000 population of people receiving a diagnosis of autism in the year to end March 2018


Responses have been turned into rates per 100,000 population of the local authorities to make the figures directly comparable. The chart shows the ranges for these figures. The lowest horizontal bar shows the range for all local authority areas in England; the higher bars show ranges for individual regions.

The numbers in brackets after the region name show first the number of local authorities that sent us usable data and that are therefore included in the chart and then the total number in the region.

The pale grey bar shows the full range of responses for England or the region. It stretches from the lowest reported value to the highest (unless cut off as described below). The darker grey bar shows the range for the middle half of included values (the ‘inter-quartile range’). The red line shows the value for the local authority in the middle (the ‘median’). Half of the other areas will have provided higher values and half lower.

Where the pale grey bar is very wide it suggests a small number of areas have sent extreme data. This may not be reliable. In these cases the bars are usually cut off to allow the chart scaling to show the detail for the majority of local authorities. Where this has been done the actual maximum value is printed on the bar. Where the dark grey section of the bar for some regions shows little or no overlap with the dark grey section for others, then as long as it is based on a reasonable proportion of local authorities, it suggests practice may differ between these regions.
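The values summarised in each bar of a range chart can be sketched as below. The rates are hypothetical, and the quartile method is an assumption: the report does not say which method was used, so this sketch uses Python's `inclusive` method.

```python
import statistics
from typing import Dict, Sequence


def range_summary(rates: Sequence[float]) -> Dict[str, float]:
    """Summarise usable rates for one bar of a range chart.

    Needs at least 2 values. The 'inclusive' quartile method is an
    assumption made for this sketch.
    """
    values = sorted(rates)
    lower_q, _middle, upper_q = statistics.quantiles(values, n=4, method="inclusive")
    return {
        "n": len(values),                     # authorities with usable data
        "min": values[0],                     # left end of the pale grey bar
        "lower_quartile": lower_q,            # left end of the darker grey bar
        "median": statistics.median(values),  # the red line
        "upper_quartile": upper_q,            # right end of the darker grey bar
        "max": values[-1],                    # right end of the pale grey bar
    }


# Hypothetical rates per 100,000 for five authorities in one region.
print(range_summary([10, 20, 30, 40, 50]))
```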

Five number questions (questions 67 to 71) seemed particularly difficult. Only 5 responding authorities provided figures for these. They have been reported in a way that reflects this later in this report.

4. Narrative questions

Three questions asked local areas about examples of innovative practice (questions 103 and 104 parts 1 and 2).

One was about providing local homes for people who have been in psychiatric hospitals for longer than is necessary, one about local care or support initiatives and one about encouraging local private sector organisations to improve accessibility or employment opportunities. Narrative summaries of the themes emerging in responses are given on this web page.

In most cases the full responses from local authorities can be found in the interactive spreadsheet. The exceptions are responses, or sections of responses, that we have redacted because they give details of individual cases which we considered could identify the individuals involved if published with details of the area in which they lived.

5. Movement scores

For red, amber or green questions, most yes or no questions, other option questions, and 1 numeric question (question 63 - diagnostic waiting times), we compared local authorities’ 2018 responses to their 2016 responses.

To summarise these movements we scored:

- +1 for any movement in a favourable direction (red to amber, red to green, no to yes)

- -1 for any movement in an unfavourable direction

- 0 for no movement.

Questions were left out of movement scoring if the desirable direction of change was ambiguous; a list of the questions included and excluded from movement scoring is available.

This year, the modifications made to a few questions were minimal and we considered they did not affect the comparability of answers. We calculated overall percentage movement scores for sections of the self-assessment, for regions and for England as a whole, by dividing the sum of the question movement scores by the possible maximum for the section or region. This approach was intended to make the section scores broadly comparable notwithstanding the different numbers of questions they included.
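The scoring described in this section can be sketched as follows. The responses are hypothetical, and the sketch assumes that the 'possible maximum' is +1 for every comparison scored (that is, the number of question and authority pairs included); the report does not spell out that detail.

```python
from typing import Dict, Sequence

# Orderings from least to most favourable. The yes/no ordering applies only
# to questions framed so that 'Yes' is the desirable response.
RAG_ORDER: Dict[str, int] = {"red": 0, "amber": 1, "green": 2}
YES_NO_ORDER: Dict[str, int] = {"no": 0, "yes": 1}


def movement(previous: str, current: str, order: Dict[str, int]) -> int:
    """Return +1 for favourable movement, -1 for unfavourable, 0 for none."""
    before, after = order[previous], order[current]
    return (after > before) - (after < before)


def percentage_movement(scores: Sequence[int]) -> float:
    """Sum of movement scores as a percentage of the possible maximum.

    Assumes the maximum is +1 per scored comparison.
    """
    return 100 * sum(scores) / len(scores)


# Hypothetical 2016 -> 2018 responses for one section in one authority.
scores = [
    movement("red", "green", RAG_ORDER),    # +1
    movement("amber", "amber", RAG_ORDER),  #  0
    movement("green", "amber", RAG_ORDER),  # -1
    movement("no", "yes", YES_NO_ORDER),    # +1
]
print(percentage_movement(scores))
```

Note that a move from red to green scores the same as a move from red to amber, as the scoring rules above state: only the direction of movement counts, not its size.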

The table presenting this analysis includes a summary row and a summary column. These indicate the number of sections in a region, or the number of regions for a section, that showed overall positive and negative movement scores.