OPSS monitoring and evaluation framework

Updated 31 January 2024

A practical approach to delivering monitoring and evaluation.

Executive summary

This framework sets out how monitoring and evaluation (M&E) is delivered across OPSS, demonstrating what OPSS is doing, and how those activities are delivering outcomes. The aim of this document is to set out the structure and tools needed to deliver evaluation in OPSS.

The framework should be read in the context of the Department for Business and Trade’s monitoring and evaluation strategy.

OPSS has a clearly articulated set of outcomes that focus on what OPSS is achieving. This framework ultimately seeks to measure progress towards these outcomes, which are:

- People are protected from product-related harm.

- Consumers can buy and use products with confidence.

- Businesses comply with their legal obligations.

- Responsible businesses can operate with confidence.

- Product regulation supports the transition to net zero.

- The environment is protected from harm.

Core features of the framework

Evaluation challenge 1 – Attribution to often distant outcomes

In line with other regulators, OPSS faces the challenge of demonstrating the achievement of outcomes that are often several degrees removed from the activities it delivers. This suggests that outcomes evidence should focus on identifying OPSS’s contribution to the outcomes, rather than direct causation.

Evaluation challenge 2 – Evaluating regulations, regulatory powers and policy making

OPSS has a unique mix of responsibilities and powers. The M&E framework accounts for outcomes arising from regulations, regulatory powers and policy making.

To support an understanding of the types of evaluation required, this framework sets out a ‘regulatory net’ metaphor. The metaphor states that OPSS casts a ‘regulatory net’ across the UK, introducing a stable system of regulations, standards, accreditation and metrology that manages the risk of harm in a relatively passive way. However, the net is not perfect (whether by design or through failure), so specific problems slip through it. OPSS is then required to take targeted action to resolve these problems. Examples range from specific cases of product non-compliance handled by enforcement teams to larger programmes covering a broader range of products and actors. This metaphor creates two types of evaluation that the framework accounts for:

- ‘Problem’ focused evaluations ask: What incidents of harm have occurred despite the regulatory system being in place, and why? How have OPSS activities supported the delivery of outcomes in these targeted areas?

- ‘Regulatory net’ focused evaluations ask: To what extent is the regulatory net, and specific elements of it, working to deliver OPSS outcomes? How have OPSS activities supported the delivery of outcomes through indirect means? Why are issues escaping through the net and what can be done to prevent this?

Evaluation challenge 3 – Embedding evaluation in a fast-growing organisation

OPSS was established in 2018 and has grown rapidly since then. OPSS’s strategy builds evidence into its guiding principles. The development of this M&E framework represents one of the ways in which OPSS is delivering against this principle. With this framework in place, there is work to be done to embed its principles and approaches into everyday practice.

Framework aims

In seeking to address the challenges identified, the framework sets out the following aims:

- Support identification and communication of ‘outcome stories’ to show how activity contributes towards outcomes

- Support delivery of detailed research and evaluation projects that evidence the extent to which OPSS is contributing to outcomes (i.e. causal impact evaluation)

- Support OPSS to aggregate evidence from across the teams to tell an overall outcome story

- Support OPSS to learn lessons which can inform decision making to improve outcomes

What does the framework look like in practice?

The core elements of the framework are:

- Outcome focused story telling – Story telling is at the heart of the M&E framework. It sets the structure around which evidence needs are planned. The framework puts responsibility on each team or programme to own its story and put plans in place to build the evidence to demonstrate it. These evidence needs will sit alongside wider OPSS strategic evidence needs.

- Metrics to monitor and manage progress – Teams and programmes are responsible for developing a suite of metrics that help demonstrate both the activities they deliver and the outcomes they contribute to. Organising metrics under an agreed set of OPSS headings ensures they can be aggregated across OPSS. Over time, these metrics can be drawn on to monitor progress at the organisational level.

- Research and analysis projects to build an evidence base for taking action – The tools in this framework support the existing OPSS research commissioning process, enabling teams to identify and articulate their research needs more robustly.

- Evaluation projects to demonstrate contribution to outcomes – Prioritised cross-cutting ‘regulatory net’ level evaluations will sit alongside ‘problem level’ outcome and process evaluations.

- Assessment of value for money – Central to OPSS’s value for money assessment is the development of better evidence on the benefits achieved. A benefits estimation model has been developed to support this, and the activities arising from the M&E framework itself will help fill the remaining evidence gaps.

Tools

A suite of tools puts in place the building blocks that enable teams and programmes to deliver effective M&E. These are summarised below under their functional headings.

- Defining programme stories – These tools support clear articulation of how activities contribute to outcomes. This makes outcome stories clear and identifies where evidence is needed to support each story. A logic map template is at the heart of this; when designing interventions, a problem statement toolkit also supports a ground-up consideration of the intervention options and the evidence needed to inform design decisions.

- Defining evidence needs and plans – These tools support confirmation of precise evidence needs and plans. A metrics mapping template enables logging of activity and outcome metrics within an agreed set of OPSS-level categories that support aggregation. Similarly, an additional template allows for logging of delivery assumptions that may need research to confirm or test them, in order to learn about the effectiveness of delivery. These two templates feed into an ‘M&E Plan On A Page’ for each team or programme.

- Supporting delivery of research and evidence – These tools support the practical collection and delivery of evidence, including guidance on producing case studies, commissioning research and building value for money assumptions into programme evidence.

- Organisational management and reporting – These tools ensure that evidence from across OPSS is brought together to meet strategic needs. In addition to a tool that aggregates metrics into a single database, there is an evaluation prioritisation matrix that supports decisions on prioritising programme and cross-cutting evaluations.

Monitoring and Evaluation framework

Introduction

This framework sets out how monitoring and evaluation (M&E) is delivered across OPSS, demonstrating what OPSS is doing, and how those activities are delivering outcomes. The aim of this document is to set out the structure and tools needed to deliver evaluation in OPSS.

The framework should be read in the context of the Department for Business and Trade’s monitoring and evaluation strategy, with the OPSS M&E framework providing further detail appropriate to OPSS’s unique context.

OPSS has a complex and wide-ranging portfolio of work, spanning actions that directly deliver benefits for consumers (e.g. border work to prevent non-compliant products reaching the UK market) and actions that set a longer-term framework to proactively prevent consumer harm.

To support an understanding of what OPSS is delivering, OPSS’s Product Regulation Strategy 2022-2025 sets out a clear purpose, set of objectives and outcomes.

OPSS’s purpose is defined as “to protect people and places from product-related harm, ensuring consumers and businesses can buy and sell products with confidence.”

The objectives, which focus on how OPSS works to achieve this purpose, are:

- To deliver protection through responsive policy and active enforcement.

- To apply policies and practices that reflect the needs of citizens.

- To enable responsible business to thrive.

- To co-ordinate local and national regulation.

- To inspire confidence as a trusted regulator.

The outcomes, which focus on what OPSS is achieving and against which OPSS aims to measure progress, are:

- People are protected from product-related harm.

- Consumers can buy and use products with confidence.

- Businesses comply with their legal obligations.

- Responsible businesses can operate with confidence.

- Product regulation supports the transition to net zero.

- The environment is protected from harm.

The recommendations set out in this framework are informed by, among other sources, the National Audit Office’s ‘Performance measurement by regulators’ guide. This guide includes a maturity assessment toolkit, allowing current and ideal practice to be benchmarked against suggested criteria. This assessment has been used to guide the development of this framework.

Core features of the M&E framework

Evaluation challenge 1 – Attribution to often distant outcomes

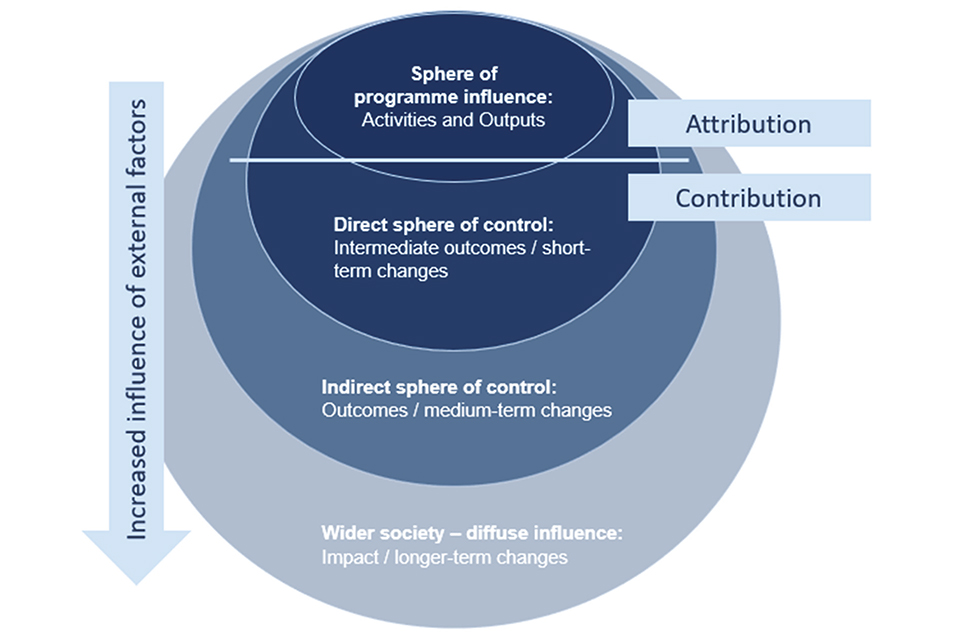

A key question in the evaluation of programmes and projects is that of attribution: to what extent are observed outcomes and impacts due to programme activities rather than other factors? Tracing attribution of OPSS’s work will be challenging due to the complex regulatory landscape in which it operates. Whilst it is possible to measure attribution within the direct sphere of control (activities and outputs), this becomes more difficult for longer-term outcomes and impacts, where external factors, actors and conditions become more influential, as visualised in Figure 1. In these latter situations, assessing ‘contribution’ to outcomes is more appropriate.

Figure 1 – Illustration of how attribution versus contribution varies along the results chain, depending on the level of influence

As outlined in the National Audit Office ‘Performance measurement by regulators’ guide, the situation is complex for various reasons:

- Intended outcomes (e.g. consumer protection) are generally delivered in practice by third party organisations.

- Several external factors lie outside the regulator’s immediate control.

- Outcomes and impacts are often only realised in the long-term.

Where outcomes are influenced by multiple factors, the contribution of each element can be difficult to distinguish; however, it remains important to monitor such outcomes in order to assess whether activities are making any positive contribution.

Whilst it may be possible to conduct randomised experiments at a specific policy or intervention level, this is not feasible for an overall strategy-level monitoring and evaluation plan. However, collecting monitoring data consistently over a period of time can help to identify trends and enable comparison with baseline periods, e.g. in levels of compliance.

The approach to monitoring and evaluation outlined in this plan has been informed by the concept of ‘contribution analysis’, aiming to measure plausible contributions to change through a logical ‘theory of change’ or ‘logic map’, underpinned by assumptions (such as cause and effect relationships). Contribution analysis can provide assessments of cause and effect when it is not practical to design an experiment to evaluate performance.

Evaluation challenge 2 – Evaluating regulations, regulatory powers and policy making

OPSS has a unique mix of responsibilities and powers. The M&E framework accounts for outcomes arising from regulations, regulatory powers and policy making.

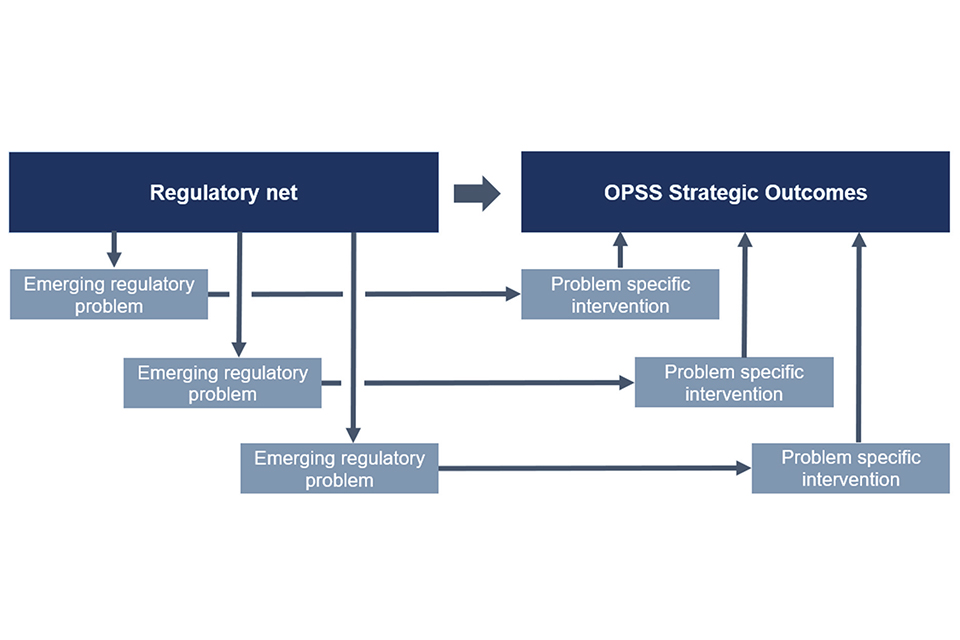

To maintain a focus on outcomes and to structure how evaluation is delivered across OPSS’s diverse portfolio, evaluation will be delivered through a two-tiered set of evaluation questions. This approach is based on the ‘regulatory net’ metaphor.

The Regulatory Net

OPSS is responsible for a ‘regulatory safety net’ made up of regulations, standards, accreditation, testing, metrology and perceived risk of enforcement.

This net is cast across the UK and is intended to hold down most of the harm in an indirect or routine way.

However, the net isn’t perfect and product safety issues slip through holes in the net, either as full incidents or smaller isolated instances of harm. OPSS is then required to take actions to tackle the priority issues that have made it through the net.

OPSS therefore has two spheres of action:

1. Addressing specific incidents of harm (regulatory powers to tackle the problems escaping the net).

2. Building a strong regulatory system (policy making and regulation to maintain the net).

Evaluation in OPSS therefore seeks to answer two key sets of questions:

1. What incidents of harm have occurred despite the regulatory system being in place, and why? How have OPSS activities supported the delivery of outcomes in these targeted areas?

a. This requires a ‘problem focused’ evaluation approach, for example looking at all of the regulatory, enforcement and policy actions that relate to the problem at hand and assessing how they have worked together as a regulatory system in the context of this product or issue.

2. To what extent is the regulatory net, and specific elements of it, working to deliver OPSS outcomes? How have OPSS activities supported the delivery of outcomes through indirect means?

Evaluation is also needed to understand the preventative effect of the net, potential negative/unintended outcomes that exist, and whether the system as a whole constitutes good value for money.

a. This requires assessing the outcomes that each work area of OPSS contributes to and how effectively each works to deliver them. Importantly, these evaluations look across the breadth of products or issues that OPSS tackles, rather than any specific one. Once a body of evidence has been developed, a cross-OPSS synthesis of evidence would help in drawing overall conclusions.

b. Practically, this will lead to evaluations of the individual work areas in OPSS, but these will take account of interactions or interdependencies between them where appropriate.

Figure 2 illustrates the relationship between these two types of evaluation and how they interact with outcomes. This demonstrates that to effectively assess OPSS’s contribution to outcomes, it is necessary to conduct both types of evaluation.

Figure 2 – Illustration of how the OPSS strategic outcomes result from both ‘regulatory net’ activities as well as ‘problem’ specific interventions

Evaluation challenge 3 – Embedding M&E in a fast-growing organisation

“We make decisions informed by science and evidence”

OPSS Product Regulation Strategy (guiding principle)

OPSS was established in 2018 and has grown rapidly since then. Significant progress has been made on establishing a strategic approach, guiding principles and operational procedures for regulatory activity. The OPSS Product Regulation Strategy 2022-2025 represents the outputs of this developmental work.

OPSS’s strategy builds evidence into its guiding principles; the development of this M&E framework represents one of the ways in which OPSS is delivering against this principle. With this framework now in place, there is work to be done to embed its principles and approaches into everyday practice. There is an opportunity to create cultural and structural practices that ensure the benefits of M&E are realised as OPSS continues to grow.

Framework aims

The M&E framework is required to deliver against four objectives for OPSS.

1. Support identification and communication of ‘outcome stories’ to show how activities contribute towards outcomes

- This is delivered through use of logic maps for all teams, projects or programmes. These maps tell the story on one page, while also identifying the metrics to be monitored and the further research needed to test assumptions and causation.

2. Support delivery of detailed research and evaluation projects that evidence the extent to which OPSS is contributing to outcomes (i.e. causal impact evaluation)

- This is delivered through a prioritised set of ‘problem’ level and ‘regulatory net’ level outcome evaluations. Theory-based evaluation approaches can build from the logic maps, evidencing the contribution OPSS makes along the causal chains from activity to outcomes.

3. Support OPSS to aggregate evidence from across the teams to tell an overall outcome story

- This is delivered using consistent activity and outcome metric definitions, allowing aggregation across OPSS to build an evidence-based story of outcomes. The use of case studies to illustrate real-world impacts also contributes here.

4. Support OPSS to learn lessons which can inform decision making to improve outcomes

- This is delivered by using process evaluations and value for money assessments that sit alongside the outcome evaluations and provide actionable conclusions on how OPSS’s activities can, if necessary, be amended to improve outcome delivery.

- Furthermore, prioritising evaluation resources for those areas where OPSS can effect change, combined with effective dissemination of findings, supports the application of findings to effective decision-making.

What does the framework look like in practice?

This M&E framework has so far set out the high-level challenges and the aims of the framework. This section describes the overarching structure of the framework and how it will be implemented, including where responsibilities lie.

There are five key elements that make up the framework. Together they establish a set of practices in OPSS that support delivery of the framework aims. These are effectively the building blocks, which together support an effective M&E system.

1. Outcome focused story telling

Teams and programmes tell the story of how activities lead to outcomes. Individual teams or programme teams are best placed to understand and explain how their work leads to the delivery of outcomes. Logic maps or theories of change are the tools through which outcome stories are developed and are the structure through which the remaining elements of this framework are made possible.

An ‘M&E Plan On A Page’ for each programme summarises the outputs from logic mapping, metrics identification and evaluation planning. This forms the basis on which further action can be taken.

2. Metrics to monitor and manage progress

An OPSS-wide set of monitoring indicators is not being proposed in this framework. Instead, this framework will set out the structure that monitoring indicators should take, laying the foundation for this to be developed across OPSS.

Metrics at the team or programme level are most effective at supporting outcome story telling. These are likely to be made up of internally collected data as well as data drawn from external sources, with some metrics requiring bespoke primary data collection.

The metrics fall into two broad categories:

- Activity and output metrics – These focus on what OPSS is doing and producing. As outcomes evidence develops, the metrics chosen should be those which have a known and direct relationship to the delivery of outcomes. These metrics support operational and strategic decisions regarding the nature of the work being delivered. Likely sources include project delivery data, the OPSS Electronic Case Management system, and website statistics.

- Outcome metrics – OPSS is often just one of many factors that will drive change in the core outcomes. Where an OPSS activity directly influences outcomes, tracking the change shows the impact achieved. However, even where OPSS is just a contributing factor to change, tracking trends or issues allows OPSS to make strategic decisions regarding prioritisation of work and resource.

Consistent metric definitions are used, which allow each team or programme to report into an OPSS level metric. This allows aggregation of activity, outputs and outcomes.
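To illustrate how consistent definitions make aggregation possible, the following is a minimal Python sketch, assuming each team logs its metrics against a shared OPSS-level category and a metric type (activity, output or outcome). The `MetricRecord` structure, the `aggregate` function, and the team and category names are hypothetical and are not taken from the framework's metrics mapping template; the figures are made up for illustration only.

```python
from collections import defaultdict
from dataclasses import dataclass

VALID_TYPES = {"activity", "output", "outcome"}


@dataclass(frozen=True)
class MetricRecord:
    """One team-level metric value, tagged with a shared OPSS-level heading (hypothetical)."""
    team: str          # reporting team or programme (illustrative)
    category: str      # agreed OPSS-level heading the metric maps to (illustrative)
    metric_type: str   # "activity", "output" or "outcome"
    value: float       # reported value for the period


def aggregate(records):
    """Sum team-level values into OPSS-level totals keyed by (category, metric_type).

    Assumes every metric is additive (e.g. a count); rates or scores would need
    a different rule, such as a weighted average.
    """
    totals = defaultdict(float)
    for r in records:
        if r.metric_type not in VALID_TYPES:
            raise ValueError(f"unknown metric type: {r.metric_type}")
        totals[(r.category, r.metric_type)] += r.value
    return dict(totals)


if __name__ == "__main__":
    # Made-up example records from two hypothetical teams
    records = [
        MetricRecord("Team A", "Products inspected", "activity", 120),
        MetricRecord("Team B", "Products inspected", "activity", 80),
        MetricRecord("Team B", "Non-compliant products removed", "output", 15),
    ]
    print(aggregate(records))
```

Because both teams report against the same "Products inspected" heading, their values combine into one OPSS-level figure. Summing only makes sense for additive metrics such as counts, which is one reason agreeing metric definitions up front matters.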

3. Research projects to build an evidence base for taking action

OPSS has processes in place to identify and commission research as needed to support delivery at the organisation level. Using the problem definition and logic mapping toolkits set out in this framework can support this process by improving identification of evidence needs.

As an example, the logic mapping process draws out a set of assumptions and uncertainties that sit within the causal chain set out in the logic map. Once identified, these naturally generate research ideas, which can be considered and commissioned via the existing process. This approach has the benefit of drawing out research needs at the start of a project, placing the responsibility with the team or programme, and using a systematic process to ensure research needs can be proactively resourced and managed.

4. Evaluation projects to demonstrate how actions contribute to outcomes

Dedicated evaluation projects are required to identify and assess the extent to which OPSS’s activities have contributed to the delivery of outcomes. The metrics and research projects will be a source of evidence within these evaluations, but alone do not generate causal conclusions. An analytical evaluation process is required to draw the causal conclusions.

Evaluation projects will fall into two broad groups:

- ‘Problem based’ evaluations – In line with the ‘regulatory net’ metaphor, these evaluations will assess activities that target specific ‘problems’ that escape through the regulatory net.

These evaluations are built on the logic maps specific to each ‘problem’, drawing on the metrics and assumptions identified there. Dedicated data collection, e.g. interviews with stakeholders, may be required depending on the needs of the evaluation project.

- ‘Regulatory net’ evaluations – These evaluation projects will assess how effectively the ‘regulatory net’ is functioning. The evaluations will require bespoke logic maps or theories of change to support the evaluation and will likely draw on evidence that is already collected across OPSS. New data collection is likely to be needed in order to assess how the regulations are working outside of OPSS’s immediate sphere of influence.

Problem-based and regulatory net evaluations are likely to draw on a range of established evaluation methods, including:

- Process evaluations – Including data collection and analysis to understand the extent to which project or programme activities are being delivered as intended. These might be conducted during the life of a project or programme, before evidence on outcomes is available. They usually rely on collecting feedback from stakeholders as well as analysis of activity and outcome metrics.

- Outcome evaluations – Providing OPSS with evidence of the effectiveness of its activities. This supports operational and strategic decision making. Evidence of outcomes achieved is essential to this type of evaluation, as is a methodology to assess the causal relationship between OPSS’s activities and the outcomes. Theory-based evaluations are most likely to be appropriate (although counterfactual methods will be considered), which creates a need to collect data from stakeholders to test causal hypotheses.

5. Assessment of Value for Money to demonstrate value and target use of limited resources

A value for money assessment sets out to demonstrate the financial value that OPSS’s activities deliver in comparison with the resources committed to delivering those activities. The assessment can also identify whether specific activities or ways of working offer better or worse value for money. This evidence supports strategic decisions regarding where limited resources should be targeted to deliver against OPSS outcomes most cost-effectively.

To conduct a value for money assessment, OPSS requires evidence of the cost of its activities and the value of the outcomes it delivers. While the cost of activities is available through internal financial reporting, identifying the value of outcomes presents a larger challenge.

Most of OPSS’s outcomes are not monetary; they relate to the avoidance of harm to consumers, businesses and the environment. Examples include reducing the number of injuries to consumers, reducing administrative burden on businesses and reducing the harmful waste disposed of in the environment.

To support a value for money assessment, OPSS has developed a Detriment Model, which delivers evidence-based monetary values for the types of detriment (i.e. harms) that OPSS seeks to reduce. Further work is underway to refine the assumptions and the model, and to validate the model for use in OPSS.

Alongside the Detriment Model, the wider M&E framework will support improved evidence of the outcomes delivered by OPSS. This evidence, combined with the Detriment Model, provides a robust foundation for further value for money analysis.
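As an illustration of how outcome evidence and monetary values per type of detriment might be combined, the following is a minimal Python sketch of a benefit-cost ratio. It assumes benefits are estimated as harm instances avoided multiplied by a monetary value per instance, optionally scaled by a hypothetical `contribution_share` reflecting that OPSS is often only one contributing factor. All names, parameters and figures are illustrative assumptions and do not reproduce the Detriment Model's actual structure or values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OutcomeEstimate:
    """Estimated harm avoided for one type of detriment (all fields hypothetical)."""
    detriment_type: str              # illustrative label, e.g. "consumer injury"
    instances_avoided: float         # estimated reduction in instances of harm
    value_per_instance: float        # monetary value per instance, e.g. from a detriment model
    contribution_share: float = 1.0  # assumed share of the change credited to OPSS (0 to 1)


def monetised_benefits(outcomes):
    """Sum instances avoided x value per instance x contribution share."""
    total = 0.0
    for o in outcomes:
        if not 0.0 <= o.contribution_share <= 1.0:
            raise ValueError("contribution_share must be between 0 and 1")
        total += o.instances_avoided * o.value_per_instance * o.contribution_share
    return total


def benefit_cost_ratio(outcomes, cost):
    """Monetised benefits divided by the cost of the activities delivering them."""
    if cost <= 0:
        raise ValueError("cost must be positive")
    return monetised_benefits(outcomes) / cost


if __name__ == "__main__":
    # Made-up figures for illustration only
    outcomes = [
        OutcomeEstimate("consumer injury", 10, 5000, contribution_share=0.5),
        OutcomeEstimate("harmful waste", 200, 50),
    ]
    print(benefit_cost_ratio(outcomes, cost=20000))  # prints 1.75
```

A ratio above 1 would indicate that the monetised benefits credited to OPSS exceed the cost of the activities. The contribution share is where evaluation evidence on contribution, rather than direct attribution, would feed in.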