Review into bias in algorithmic decision-making

Published 27 November 2020

Preface

Fairness is a highly prized human value. Societies in which individuals can flourish need to be held together by practices and institutions that are regarded as fair. What it means to be fair has been much debated throughout history, rarely more so than in recent months. Issues such as the global Black Lives Matter movement, the “levelling up” of regional inequalities within the UK, and the many complex questions of fairness raised by the COVID-19 pandemic have kept fairness and equality at the centre of public debate.

Inequality and unfairness have complex causes, but bias in the decisions that organisations make about individuals is often a key aspect. The impact of efforts to address unfair bias in decision-making has often either gone unmeasured or been painfully slow to take effect. However, decision-making is currently going through a period of change. Use of data and automation has existed in some sectors for many years, but it is currently expanding rapidly due to an explosion in the volumes of available data, and the increasing sophistication and accessibility of machine learning algorithms. Data gives us a powerful weapon to see where bias is occurring and measure whether our efforts to combat it are effective; if an organisation has hard data about differences in how it treats people, it can build insight into what is driving those differences, and seek to address them.

However, data can also make things worse. New forms of decision-making have surfaced numerous examples where algorithms have entrenched or amplified historic biases; or even created new forms of bias or unfairness. Active steps to anticipate risks and measure outcomes are required to avoid this.

Concern about algorithmic bias was the starting point for this policy review. When we began the work this was an issue of concern to a growing, but relatively small, number of people. As we publish this report, the issue has exploded into mainstream attention in the context of exam results, with a strong narrative that algorithms are inherently problematic. This highlights the urgent need for the world to do better in using algorithms in the right way: to promote fairness, not undermine it. Algorithms, like all technology, should work for people, and not against them.

This is true in all sectors, but especially key in the public sector. When the state is making life-affecting decisions about an individual, that individual often can’t go elsewhere. Society may reasonably conclude that justice requires decision-making processes to be designed so that human judgement can intervene where needed to achieve fair and reasonable outcomes for each person, informed by individual evidence.

As our work has progressed it has become clear that we cannot separate the question of algorithmic bias from the question of biased decision-making more broadly. The approach we take to tackling biased algorithms in recruitment, for example, must form part of, and be consistent with, the way we understand and tackle discrimination in recruitment more generally.

A core theme of this report is that we now have the opportunity to adopt a more rigorous and proactive approach to identifying and mitigating bias in key areas of life, such as policing, social services, finance and recruitment. Good use of data can enable organisations to shine a light on existing practices and identify what is driving bias. There is an ethical obligation to act wherever there is a risk that bias is causing harm and instead make fairer, better choices.

The risk is growing as algorithms, and the datasets that feed them, become increasingly complex. Organisations often find it challenging to build the skills and capacity to understand bias, or to determine the most appropriate means of addressing it in a data-driven world. A cohort of people is needed with the skills to navigate between the analytical techniques that expose bias and the ethical and legal considerations that inform best responses. Some organisations may be able to create this internally; others will want to be able to call on external experts to advise them. Senior decision-makers in organisations need to engage with understanding the trade-offs inherent in introducing an algorithm. They should expect and demand sufficient explainability of how an algorithm works so that they can make informed decisions on how to balance risks and opportunities as they deploy it into a decision-making process.

Regulators and industry bodies need to work together with wider society to agree best practice within their industry and establish appropriate regulatory standards. Bias and discrimination are harmful in any context. But the specific forms they take, and the precise mechanisms needed to root them out, vary greatly between contexts. We recommend that there should be clear standards for anticipating and monitoring bias, for auditing algorithms and for addressing problems. There are some overarching principles, but the details of these standards need to be determined within each sector and use case. We hope that CDEI can play a key role in supporting organisations, regulators and government in getting this right.

Lastly, society as a whole will need to be engaged in this process. In the world before AI there were many different concepts of fairness. Once we introduce complex algorithms to decision-making systems, that range of definitions multiplies rapidly. These definitions are often contradictory with no formula for deciding which is correct. Technical expertise is needed to navigate these choices, but the fundamental decisions about what is fair cannot be left to data scientists alone. They are decisions that can only be truly legitimate if society agrees and accepts them. Our report sets out how organisations might tackle this challenge.

Transparency is key to helping organisations build and maintain public trust. There is a clear, and understandable, nervousness about the use and consequences of algorithms, exacerbated by the events of this summer. Being open about how and why algorithms are being used, and the checks and balances in place, is the best way to deal with this. Organisational leaders need to be clear that they retain accountability for decisions made by their organisations, regardless of whether an algorithm or a team of humans is making those decisions on a day-to-day basis.

In this report we set out some key next steps for the government and regulators to support organisations to get their use of algorithms right, whilst ensuring that the UK ecosystem is set up to support good ethical innovation. Our recommendations are designed to produce a step change in the behaviour of all organisations making life-changing decisions on the basis of data, however limited, and regardless of whether they use complex algorithms or more traditional methods.

Enabling data to be used to drive better, fairer, more trusted decision-making is a challenge that countries face around the world. By taking a lead in this area, the UK, with its strong legal traditions and its centres of expertise in AI, can help to address bias and inequalities not only within our own borders but also across the globe.

The Board of the Centre for Data Ethics and Innovation

Executive summary

Unfair biases, whether conscious or unconscious, can be a problem in many decision-making processes. This review considers the impact that an increasing use of algorithmic tools is having on bias in decision-making, the steps that are required to manage risks, and the opportunities that better use of data offers to enhance fairness. We have focused on the use of algorithms in significant decisions about individuals, looking across four sectors (recruitment, financial services, policing and local government), and making cross-cutting recommendations that aim to help build the right systems so that algorithms improve, rather than worsen, decision-making.

It is well established that there is a risk that algorithmic systems can lead to biased decisions, with perhaps the largest underlying cause being the encoding of existing human biases into algorithmic systems. But the evidence is far less clear on whether algorithmic decision-making tools carry more or less risk of bias than previous human decision-making processes. Indeed, there are reasons to think that better use of data can have a role in making decisions fairer, if done with appropriate care.

When changing processes that make life-affecting decisions about individuals we should always proceed with caution. It is important to recognise that algorithms cannot do everything. There are some aspects of decision-making where human judgement, including the ability to be sensitive and flexible to the unique circumstances of an individual, will remain crucial.

Using data and algorithms in innovative ways can enable organisations to understand inequalities and to reduce bias in some aspects of decision-making. But there are also circumstances where using algorithms to make life-affecting decisions can be seen as unfair by failing to consider an individual’s circumstances, or depriving them of personal agency. We do not directly focus on this kind of unfairness in this report, but note that this argument can also apply to human decision-making, if the individual who is subject to the decision does not have a role in contributing to the decision.

History to date in the design and deployment of algorithmic tools has not been good enough. There are numerous examples worldwide of the introduction of algorithms perpetuating or amplifying historical biases, or introducing new ones. We must and can do better. Making fair and unbiased decisions is not only good for the individuals involved, but it is good for business and society. Successful and sustainable innovation is dependent on building and maintaining public trust. Polling undertaken for this review suggested that, prior to August’s controversy over exam results, 57% of people were aware of algorithmic systems being used to support decisions about them, with only 19% of those disagreeing in principle with the suggestion of a “fair and accurate” algorithm helping to make decisions about them. By October, we found that awareness had risen slightly (to 62%), as had disagreement in principle (to 23%). This doesn’t suggest a step change in public attitudes, but there is clearly still a long way to go to build trust in algorithmic systems. The obvious starting point for this is to ensure that algorithms are trustworthy.

The use of algorithms in decision-making is a complex area, with widely varying approaches and levels of maturity across different organisations and sectors. Ultimately, many of the steps needed to challenge bias will be context specific. But from our work, we have identified a number of concrete steps for industry, regulators and government to take that can support ethical innovation across a wide range of use cases. This report is not a guidance manual, but considers what guidance, support, regulation and incentives are needed to create the right conditions for fair innovation to flourish.

It is crucial to take a broad view of the whole decision-making process when considering the different ways bias can enter a system and how this might impact on fairness. The issue is not simply whether an algorithm is biased, but whether the overall decision-making processes are biased. Looking at algorithms in isolation cannot fully address this.

It is important to consider bias in algorithmic decision-making in the context of all decision-making systems. Even in human decision-making, there are differing views about what is and isn’t fair. But society has developed a range of standards and common practices for how to manage these issues, and legal frameworks to support this. Organisations have a level of understanding on what constitutes an appropriate level of due care for fairness. The challenge is to make sure that we can translate this understanding across to the algorithmic world, and apply a consistent bar of fairness whether decisions are made by humans, algorithms or a combination of the two. We must ensure decisions can be scrutinised, explained and challenged so that our current laws and frameworks do not lose effectiveness, and indeed can be made more effective over time.

Significant growth is happening both in data availability and use of algorithmic decision-making across many sectors; we have a window of opportunity to get this right and ensure that these changes serve to promote equality, not to entrench existing biases.

Sector reviews

The four sectors studied in Part II of this report are at different maturity levels in their use of algorithmic decision-making. Some of the issues they face are sector-specific, but we found common challenges that span these sectors and beyond.

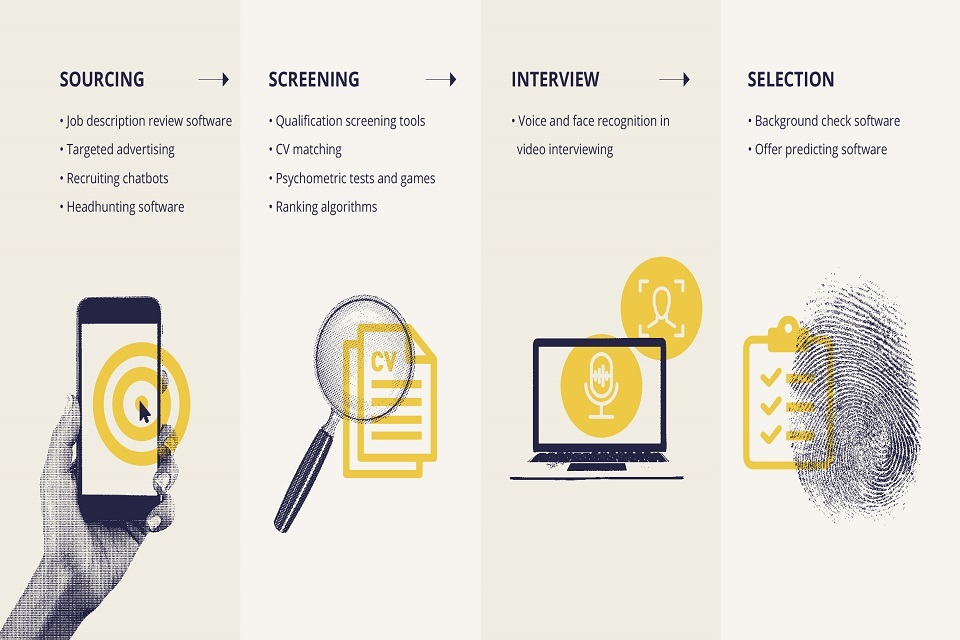

In recruitment we saw a sector that is experiencing rapid growth in the use of algorithmic tools at all stages of the recruitment process, but also one that is relatively mature in collecting data to monitor outcomes. Human bias in traditional recruitment is well evidenced and therefore there is potential for data-driven tools to improve matters by standardising processes and using data to inform areas of discretion where human biases can creep in.

However, we also found that a clear and consistent understanding of how to do this well is lacking, leading to a risk that algorithmic technologies will entrench inequalities. More guidance is needed on how to ensure that these tools do not unintentionally discriminate against groups of people, particularly when trained on historic or current employment data. Organisations must be particularly mindful to ensure they are meeting the appropriate legislative responsibilities around automated decision-making and reasonable adjustments for candidates with disabilities.

The innovation in this space has real potential for making recruitment fairer. However, given the potential risks, further scrutiny of how these tools work, how they are used and the impact they have on different groups, is required, along with higher and clearer standards of good governance to ensure that ethical and legal risks are anticipated and managed.

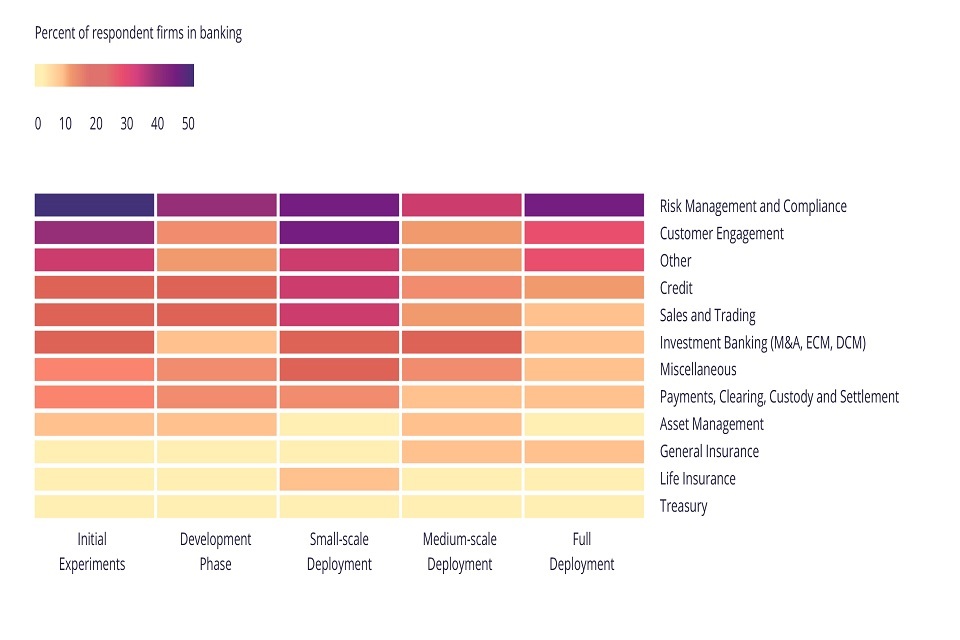

In financial services, we saw a much more mature sector that has long used data to support decision-making. Finance relies on making accurate predictions about people’s behaviours, for example how likely they are to repay debts. However, specific groups are historically underrepresented in the financial system, and there is a risk that these historic biases could be entrenched further through algorithmic systems. We found financial service organisations ranged from being highly innovative to more risk averse in their use of new algorithmic approaches. They are keen to test their systems for bias, but there are mixed views and approaches regarding how this should be done. This was particularly evident around the collection and use of protected characteristic data, and therefore organisations’ ability to monitor outcomes.

Our main focus within financial services was on credit scoring decisions made about individuals by traditional banks. Our work found that the key obstacles to further innovation in the sector included: data availability, data quality and how to source data ethically; the availability of techniques with sufficient explainability; a risk-averse culture in some parts of the sector, given the impacts of the financial crisis; and difficulty in gauging consumer and wider public acceptance. The regulatory picture is clearer in financial services than in the other sectors we have looked at. The Financial Conduct Authority (FCA) is the main regulator and is showing leadership in prioritising work to understand the impact and opportunities of innovative uses of data and AI in the sector.

The use of data from non-traditional sources could enable population groups who have historically found it difficult to access credit, due to lower availability of data about them from traditional sources, to gain better access in future. At the same time, more data and more complex algorithms could increase the potential for the introduction of indirect bias via proxy as well as the ability to detect and mitigate it.

Adoption of algorithmic decision-making in the public sector is generally at an early stage. In policing, we found very few tools currently in operation in the UK, with a varied picture across different police forces, both on usage and approaches to managing ethical risks.

There have been notable government reviews into the issue of bias in policing, which is important context when considering the risks and opportunities around the use of technology in this sector. Again, we found potential for algorithms to support decision-making, but this introduces new issues around the balance between security, privacy and fairness, and there is a clear requirement for strong democratic oversight.

Police forces have access to more digital material than ever before, and are expected to use this data to identify connections and manage future risks. The £63.7 million funding for police technology programmes announced in January 2020 demonstrates the government’s drive for innovation. But clearer national leadership is needed. Though there is strong momentum in data ethics in policing at a national level, the picture is fragmented with multiple governance and regulatory actors, and no single body fully empowered or resourced to take ownership. The use of data analytics tools in policing carries significant risk. Without sufficient care, processes can lead to outcomes that are biased against particular groups, or systematically unfair. In many scenarios where these tools are helpful, there is still an important balance to be struck between automated decision-making and the application of professional judgement and discretion. Given the sensitivities in this area it is not sufficient for care to be taken internally to consider these issues; it is also critical that police forces are transparent in how such tools are being used to maintain public trust.

In local government, we found an increased use of data to inform decision-making across a wide range of services. Whilst most tools are still in the early phase of deployment, there is an increasing demand for sophisticated predictive technologies to support more efficient and targeted services.

By bringing together multiple data sources, or representing existing data in new forms, data-driven technologies can guide decision-makers by providing a more contextualised picture of an individual’s needs. Beyond decisions about individuals, these tools can help predict and map future service demands to ensure there is sufficient and sustainable resourcing for delivering important services.

However, these technologies also come with significant risks. Evidence has shown that certain people are more likely to be overrepresented in data held by local authorities and this can then lead to biases in predictions and interventions. A related problem occurs when the number of people within a subgroup is small. Data used to make generalisations can result in disproportionately high error rates amongst minority groups.
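The small-subgroup problem can be illustrated with a short calculation. The sketch below is not drawn from the review's evidence: the group names and counts are invented for illustration, and the Wilson score interval is simply one common way to express uncertainty in a rate. It shows that an error rate measured on a small subgroup is known far less precisely than one measured on a large group, which is one reason problems affecting minority groups can go undetected.

```python
import math

def error_rate_with_interval(errors, total, z=1.96):
    """Return (rate, low, high) for an error rate, using the Wilson score interval.

    z=1.96 corresponds to an approximate 95% interval.
    """
    if total == 0:
        raise ValueError("group has no records")
    p = errors / total
    denom = 1 + z**2 / total
    centre = (p + z**2 / (2 * total)) / denom
    half = z * math.sqrt(p * (1 - p) / total + z**2 / (4 * total**2)) / denom
    return p, centre - half, centre + half

# Hypothetical counts of (wrong predictions, people in group); assumptions for illustration.
groups = {"larger group": (100, 2000), "smaller group": (6, 40)}

for name, (errors, total) in groups.items():
    rate, low, high = error_rate_with_interval(errors, total)
    print(f"{name}: error rate {rate:.1%}, approx. 95% interval {low:.1%} to {high:.1%}")
```

With these invented figures the smaller group has both a higher measured error rate and a much wider interval, so monitoring that reports only a single overall figure would hide the difference.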

Data-driven tools present genuine opportunities for local government. However, tools should not be considered a silver bullet for funding challenges and in some cases additional investment will be required to realise their potential. Moreover, we found that data infrastructure and data quality were significant barriers to developing and deploying data-driven tools effectively and responsibly. Investment in this area is needed before developing more advanced systems.

Sector-specific recommendations to regulators and government

Most of the recommendations in this report are cross-cutting, but we identified the following recommendations specific to individual sectors. More details are given in sector chapters below.

Recruitment

Recommendation 1: The Equality and Human Rights Commission should update its guidance on the application of the Equality Act 2010 to recruitment, to reflect issues associated with the use of algorithms, in collaboration with consumer and industry bodies.

Recommendation 2: The Information Commissioner’s Office should work with industry to understand why current guidance is not being consistently applied, and consider updates to guidance (e.g. in the Employment Practices Code), greater promotion of existing guidance, or other action as appropriate.

Policing

Recommendation 3: The Home Office should define clear roles and responsibilities for national policing bodies with regards to data analytics and ensure they have access to appropriate expertise and are empowered to set guidance and standards. As a first step, the Home Office should ensure that work underway by the National Police Chiefs’ Council and other policing stakeholders to develop guidance and ensure ethical oversight of data analytics tools is appropriately supported.

Local government

Recommendation 4: Government should develop national guidance to support local authorities to legally and ethically procure or develop algorithmic decision-making tools in areas where significant decisions are made about individuals, and consider how compliance with this guidance should be monitored.

Addressing the challenges

We found underlying challenges across the four sectors, and indeed other sectors where algorithmic decision-making is happening. In Part III of this report, we focus on understanding these challenges, where the ecosystem has got to on addressing them, and the key next steps for organisations, regulators and government. The main areas considered are:

- The enablers needed by organisations building and deploying algorithmic decision-making tools to help them do this in a fair way (see Chapter 7).

- The regulatory levers, both formal and informal, needed to incentivise organisations to do this, and create a level playing field for ethical innovation (see Chapter 8).

- How the public sector, as a major developer and user of data-driven technology, can show leadership in this area through transparency (see Chapter 9).

There are inherent links between these areas. Creating the right incentives can only succeed if the right enablers are in place to help organisations act fairly, but conversely, there is little incentive for organisations to invest in tools and approaches for fair decision-making if there is insufficient clarity on expected norms. We want a system that is fair and accountable; one that preserves, protects or improves fairness in decisions being made with the use of algorithms. We want to address the obstacles that organisations may face in innovating ethically, to ensure the same or increased levels of accountability for these decisions, and to consider how society can identify and respond to bias in algorithmic decision-making processes. We have considered the existing landscape of standards and laws in this area, and whether they are sufficient for our increasingly data-driven society.

To realise this vision we need clear mechanisms for safe access to data to test for bias; organisations that are able to make judgements based on data about bias; a skilled industry of third parties who can provide support and assurance; and regulators equipped to oversee and support their sectors and remits through this change.

Enabling fair innovation

We found that many organisations are aware of the risks of algorithmic bias, but are unsure how to address bias in practice.

There is no universal formulation or rule that can tell you an algorithm is fair. Organisations need to identify what fairness objectives they want to achieve and how they plan to do this. Sector bodies, regulators, standards bodies and the government have a key role in setting out clear guidelines on what is appropriate in different contexts; getting this right is essential not only for avoiding bad practice, but for giving the clarity that enables good innovation. However, all organisations need to be clear about their own accountability for getting it right. Whether an algorithm or a structured human process is being used to make a decision doesn’t change an organisation’s accountability.
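The point that no single rule settles whether an algorithm is fair can be shown with a small worked example. The sketch below uses invented records and two widely discussed criteria, chosen here as assumptions rather than taken from the review: equal selection rates across groups (often called demographic parity) and equal true positive rates across groups (often called equal opportunity). The function name `group_rates` and the group labels are hypothetical.

```python
from collections import defaultdict

def group_rates(records):
    """Compute per-group selection rate and true positive rate.

    records: iterable of (group, actual, predicted), where actual and
    predicted are 0 or 1. Returns {group: {"selection_rate": float,
    "true_positive_rate": float or None}}.
    """
    counts = defaultdict(lambda: {"n": 0, "selected": 0, "positives": 0, "true_pos": 0})
    for group, actual, predicted in records:
        c = counts[group]
        c["n"] += 1
        c["selected"] += predicted
        c["positives"] += actual
        c["true_pos"] += actual * predicted
    rates = {}
    for group, c in counts.items():
        tpr = c["true_pos"] / c["positives"] if c["positives"] else None
        rates[group] = {
            "selection_rate": c["selected"] / c["n"],
            "true_positive_rate": tpr,
        }
    return rates

# Invented data: the two groups have different underlying rates of positive outcomes.
records = (
    [("A", 1, 1)] * 4 + [("A", 1, 0)] * 2 + [("A", 0, 0)] * 4
    + [("B", 1, 1)] * 2 + [("B", 1, 0)] * 1 + [("B", 0, 0)] * 7
)

rates = group_rates(records)
for group in sorted(rates):
    r = rates[group]
    print(f"group {group}: selection rate {r['selection_rate']:.2f}, "
          f"true positive rate {r['true_positive_rate']:.2f}")
```

In this invented data the tool identifies the same share of genuinely positive cases in both groups, so it satisfies the second criterion, yet it selects group A at twice the rate of group B, so it fails the first. Which criterion matters more is a contextual judgement that the organisation has to make and remain accountable for.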

Improving diversity across a range of roles involved in the development and deployment of algorithmic decision-making tools is an important part of protecting against bias. Government and industry efforts to improve this must continue, and need to show results.

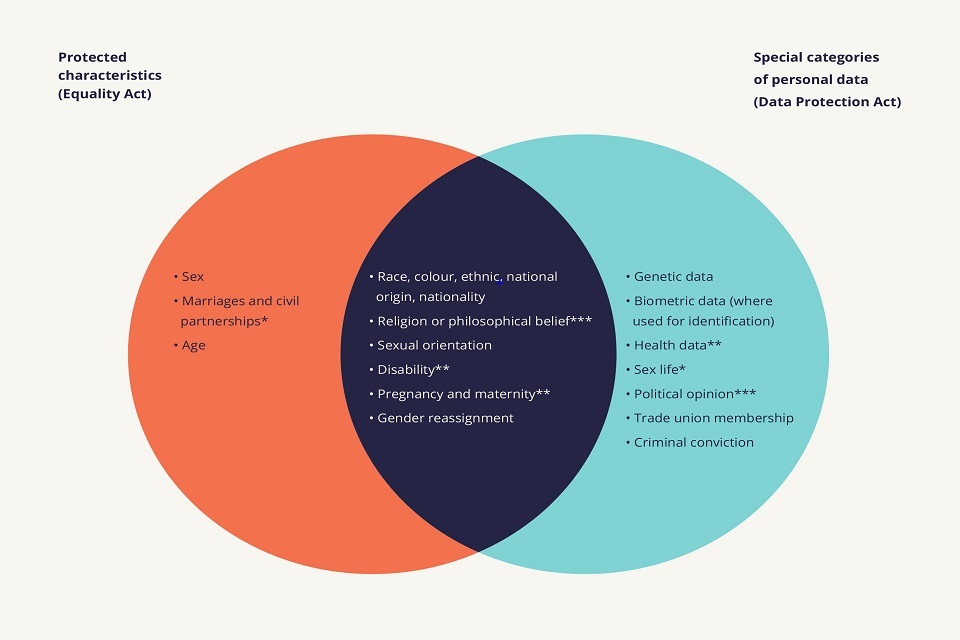

Data is needed to monitor outcomes and identify bias, but data on protected characteristics is not available often enough. One reason for this is an incorrect belief that data protection law prevents collection or usage of this data. In fact, there are a number of lawful bases in data protection legislation for using protected or special characteristic data when monitoring or addressing discrimination. But there are some other genuine challenges in collecting this data, and more innovative thinking is needed in this area; for example around the potential for trusted third party intermediaries.

The machine learning community has developed multiple techniques to measure and mitigate algorithmic bias. Organisations should be encouraged to deploy methods that address bias and discrimination. However, there is little guidance on how to choose the right methods, or how to embed them into development and operational processes. Bias mitigation cannot be treated as a purely technical issue; it requires careful consideration of the wider policy, operational and legal contexts. There is insufficient legal clarity concerning novel techniques in this area. Many can be used legitimately, but care is needed to ensure that the application of some techniques does not cross into unlawful positive discrimination.

Recommendations to government

Recommendation 5: Government should continue to support and invest in programmes that facilitate greater diversity within the technology sector, building on its current programmes and developing new initiatives where there are gaps.

Recommendation 6: Government should work with relevant regulators to provide clear guidance on the collection and use of protected characteristic data in outcome monitoring and decision-making processes. They should then encourage the use of that guidance and data to address current and historic bias in key sectors.

Recommendation 7: Government and the Office for National Statistics (ONS) should open the Secure Research Service more broadly, to a wider variety of organisations, for use in evaluation of bias and inequality across a greater range of activities.

Recommendation 8: Government should support the creation and development of data-focused public and private partnerships, especially those focused on the identification and reduction of biases and issues specific to under-represented groups. The Office for National Statistics (ONS) and Government Statistical Service should work with these partnerships and regulators to promote harmonised principles of data collection and use into the private sector, via shared data and standards development.

Recommendations to regulators

Recommendation 9: Sector regulators and industry bodies should help create oversight and technical guidance for responsible bias detection and mitigation in individual sectors, adding context-specific detail to the existing cross-cutting guidance on data protection, and any new cross-cutting guidance on the Equality Act.

Good, anticipatory governance is crucial here. Many of the high profile cases of algorithmic bias could have been anticipated with careful evaluation and mitigation of the potential risks. Organisations need to make sure that the right capabilities and structures are in place to ensure that this happens both before algorithms are introduced into decision-making processes, and through their life. Doing this well requires understanding of, and empathy for, the expectations of those who are affected by decisions, which can often only be achieved through the right engagement with groups. Given the complexity of this area, we expect to see a growing role for expert professional services supporting organisations. Although the ecosystem needs to develop further, there is already plenty that organisations can and should be doing to get this right. Data Protection Impact Assessments and Equality Impact Assessments can help with structuring thinking and documenting the steps taken.

Guidance to organisation leaders and boards

Those responsible for governance of organisations deploying or using algorithmic decision-making tools to support significant decisions about individuals should ensure that leaders are in place with accountability for:

- Understanding the capabilities and limits of those tools

- Considering carefully whether individuals will be fairly treated by the decision-making process that the tool forms part of

- Making a conscious decision on appropriate levels of human involvement in the decision-making process

- Putting structures in place to gather data and monitor outcomes for fairness

- Understanding their legal obligations and having carried out appropriate impact assessments

This especially applies in the public sector, where citizens often do not have a choice about whether to use a service, and decisions made about individuals can often be life-affecting.

The regulatory environment

Clear industry norms, and good, proportionate regulation, are key both for addressing risks of algorithmic bias, and for promoting a level playing field for ethical innovation to thrive.

The increased use of algorithmic decision-making presents genuinely new challenges for regulation, and brings into question whether existing legislation and regulatory approaches can address these challenges sufficiently well. There is currently limited case law or statutory guidance directly addressing discrimination in algorithmic decision-making, and the ecosystems of guidance and support are at different maturity levels in different sectors.

Though there is only a limited amount of case law, the recent judgement of the Court of Appeal in relation to the usage of live facial recognition technology by South Wales Police seems likely to be significant. One of the grounds for successful appeal was that South Wales Police failed to adequately consider whether their trial could have a discriminatory impact, and specifically that they did not take reasonable steps to establish whether their facial recognition software contained biases related to race or sex. In doing so, the court found that they did not meet their obligations under the Public Sector Equality Duty, even though there was no evidence that this specific algorithm was biased. This suggests a general duty for public sector organisations to take reasonable steps to consider any potential impact on equality upfront and to detect algorithmic bias on an ongoing basis.

The current regulatory landscape for algorithmic decision-making consists of the Equality and Human Rights Commission (EHRC), the Information Commissioner’s Office (ICO) and sector regulators. At this stage, we do not believe that there is a need for a new specialised regulator or primary legislation to address algorithmic bias.

However, algorithmic bias means the overlap between discrimination law, data protection law and sector regulations is becoming increasingly important. We see this overlap playing out in a number of contexts, including discussions around the use of protected characteristics data to measure and mitigate algorithmic bias, the lawful use of bias mitigation techniques, and the identification of new forms of bias beyond existing protected characteristics. The first step in resolving these challenges should be to clarify the interpretation of the law as it stands, particularly the Equality Act 2010, both to give certainty to organisations deploying algorithms and to ensure that existing individual rights are not eroded, and wider equality duties are met. However, as use of algorithmic decision-making grows further, we do foresee a future need to look again at the legislation itself, which should be kept under consideration as guidance is developed and case law evolves.

Existing regulators need to adapt their enforcement to algorithmic decision-making, and provide guidance on how regulated bodies can maintain and demonstrate compliance in an algorithmic age. Some regulators require new capabilities to enable them to respond effectively to the challenges of algorithmic decision-making. While larger regulators with a greater digital remit may be able to grow these capabilities in-house, others will need external support. Many regulators are working hard to do this, and the ICO has shown leadership in this area both by starting to build a skills base to address these new challenges, and in convening other regulators to consider issues arising from AI. Deeper collaboration across the regulatory ecosystem is likely to be needed in future.

Outside of the formal regulatory environment, there is increasing awareness within the private sector of the demand for a broader ecosystem of industry standards and professional services to help organisations address algorithmic bias. There are a number of reasons for this: it is a highly specialised skill that not all organisations will be able to support, it will be important to have consistency in how the problem is addressed, and regulatory standards in some sectors may require independent audit of systems. Elements of such an ecosystem might be licensed auditors or qualification standards for individuals with the necessary skills. Audit of bias is likely to form part of a broader approach to audit that might also cover issues such as robustness and explainability. Government, regulators, industry bodies and private industry will all play important roles in growing this ecosystem so that organisations are better equipped to make fair decisions.

Recommendations to government

Recommendation 10: Government should issue guidance that clarifies the Equality Act responsibilities of organisations using algorithmic decision-making. This should include guidance on the collection of protected characteristics data to measure bias and the lawfulness of bias mitigation techniques.

Recommendation 11: Through the development of this guidance and its implementation, government should assess whether it provides sufficient clarity for organisations on meeting their obligations, and leaves sufficient scope for organisations to take actions to mitigate algorithmic bias. If not, government should consider new regulations or amendments to the Equality Act to address this.

Recommendations to regulators

Recommendation 12: The EHRC should ensure that it has the capacity and capability to investigate algorithmic discrimination. This may include EHRC reprioritising resources to this area, EHRC supporting other regulators to address algorithmic discrimination in their sector, and additional technical support to the EHRC.

Recommendation 13: Regulators should consider algorithmic discrimination in their supervision and enforcement activities, as part of their responsibilities under the Public Sector Equality Duty.

Recommendation 14: Regulators should develop compliance and enforcement tools to address algorithmic bias, such as impact assessments, audit standards, certification and/or regulatory sandboxes.

Recommendation 15: Regulators should coordinate their compliance and enforcement efforts to address algorithmic bias, aligning standards and tools where possible. This could include jointly issued guidance, collaboration in regulatory sandboxes, and joint investigations.

Public sector transparency

Making decisions about individuals is a core responsibility of many parts of the public sector, and there is increasing recognition of the opportunities offered through the use of data and algorithms in decision-making. The use of technology should never reduce real or perceived accountability of public institutions to citizens. In fact, it offers opportunities to improve accountability and transparency, especially where algorithms have significant effects on significant decisions about individuals.

A range of transparency measures already exist around current public sector decision-making processes; both proactive sharing of information about how decisions are made, and reactive rights for citizens to request information on how decisions were made about them. The UK government has shown leadership in setting out guidance on AI usage in the public sector, including a focus on techniques for explainability and transparency. However, more is needed to make transparency about public sector use of algorithmic decision-making the norm. There is a window of opportunity to ensure that we get this right as adoption starts to increase, but it is sometimes hard for individual government departments or other public sector organisations to be first in being transparent; a strong central drive for this is needed.

The development and delivery of an algorithmic decision-making tool will often include one or more suppliers, whether acting as technology suppliers or business process outsourcing providers. While the ultimate accountability for fair decision-making always sits with the public body, there is limited maturity or consistency in contractual mechanisms to place responsibilities in the right place in the supply chain. Procurement processes should be updated in line with wider transparency commitments to ensure standards are not lost along the supply chain.

Recommendations to government

Recommendation 16: Government should place a mandatory transparency obligation on all public sector organisations using algorithms that have a significant influence on significant decisions affecting individuals. Government should conduct a project to scope this obligation more precisely, and to pilot an approach to implement it, but it should require the proactive publication of information on how the decision to use an algorithm was made, the type of algorithm, how it is used in the overall decision-making process, and steps taken to ensure fair treatment of individuals.

Recommendation 17: Cabinet Office and the Crown Commercial Service should update model contracts and framework agreements for public sector procurement to incorporate a set of minimum standards around ethical use of AI, with particular focus on expected levels of transparency and explainability, and ongoing testing for fairness.

Next steps and future challenges

This review has considered a complex and rapidly evolving field. There is plenty to do across industry, regulators and government to manage the risks and maximise the benefits of algorithmic decision-making. Some of the next steps fall within CDEI’s remit, and we are happy to support industry, regulators and government in taking forward the practical delivery work to address the issues we have identified and future challenges which may arise. Outside of specific activities, and noting the complexity and range of the work needed across multiple sectors, we see a key need for national leadership and coordination to ensure continued focus and pace in addressing these challenges across sectors. This is a rapidly moving area. A level of coordination and monitoring will be needed to assess how organisations building and using algorithmic decision-making tools are responding to the challenges highlighted in this report, and to the proposed new guidance from regulators and government. Government should be clear on where it wants this coordination to sit; for example in central government directly, in a specific regulator or in CDEI.

In this review we have concluded that there is significant scope to address the risks posed by bias in algorithmic decision-making within the law as it stands, but if this does not succeed then there is a clear possibility that future legislation may be required. We encourage organisations to respond to this challenge; to innovate responsibly and think through the implications for individuals and society at large as they do so.

Part I: Introduction

1. Background and scope

1.1 About CDEI

The adoption of data-driven technology affects every aspect of our society and its use is creating opportunities as well as new ethical challenges.

The Centre for Data Ethics and Innovation (CDEI) is an independent expert committee, led by a board of specialists, set up and tasked by the UK government to investigate and advise on how we maximise the benefits of these technologies.

Our goal is to create the conditions in which ethical innovation can thrive: an environment in which the public are confident their values are reflected in the way data-driven technology is developed and deployed; where we can trust that decisions informed by algorithms are fair; and where risks posed by innovation are identified and addressed.

More information about CDEI can be found at www.gov.uk/cdei.

1.2 About this review

In the October 2018 Budget, the Chancellor announced that we would investigate the potential bias in decisions made by algorithms. This review formed a key part of our 2019/2020 work programme, though completion was delayed by the onset of COVID-19. This is the final report of CDEI’s review and includes a set of formal recommendations to the government.

Government tasked us to draw on expertise and perspectives from stakeholders across society to provide recommendations on how they should address this issue. We also provide advice for regulators and industry, aiming to support responsible innovation and help build a strong, trustworthy system of governance. The government has committed to consider and respond publicly to our recommendations.

1.3 Our focus

The use of algorithms in decision-making is increasing across multiple sectors of our society. Bias in algorithmic decision-making is a broad topic, so in this review, we have prioritised the types of decisions where potential bias seems to represent a significant and imminent ethical risk.

This has led us to focus on:

- Areas where algorithms have the potential to make or inform a decision that directly affects an individual human being (as opposed to other entities, such as companies). The significance of decisions of course varies, and we have typically focused on areas where individual decisions could have a considerable impact on a person’s life, i.e. decisions that are significant in the sense of the Data Protection Act 2018.

- The extent to which algorithmic decision-making is being used now, or is likely to be soon, in different sectors.

- Decisions made or supported by algorithms, and not wider ethical issues in the use of artificial intelligence.

- The changes in ethical risk in an algorithmic world as compared to an analogue world.

- Circumstances where decisions are biased (see Chapter 2 for a discussion of what this means), rather than other forms of unfairness such as arbitrariness or unreasonableness.

This scope is broad, but it doesn’t cover all possible areas where algorithmic bias can be an issue. For example, the CDEI Review of online targeting, published earlier this year, highlighted the risk of harm through bias in targeting within online platforms. These are decisions which are individually very small, for example on targeting an advert or recommending content to a user, but the overall impact of bias across many small decisions can still be problematic. This review did touch on these issues, but they fell outside of our core focus on significant decisions about individuals.

It is worth highlighting that the main work of this review was carried out before a number of highly relevant events in mid 2020; the COVID-19 pandemic, Black Lives Matter, the awarding of exam results without exams, and (with less widespread attention, but very specific relevance) the judgement of the Court of Appeal in Bridges v South Wales Police. We have considered links to these issues in our review, but have not been able to treat them in full depth.[footnote 1]

1.4 Our approach

Sector approach

The ethical questions in relation to bias in algorithmic decision-making vary depending on the context and sector. We chose four initial areas of focus to illustrate the range of issues. These were recruitment, financial services, policing and local government. Our rationale for choosing these sectors is set out in the introduction to Part II.

Cross-sector themes

From the work we carried out on the four sectors, as well as our engagement across government, civil society, academia and interested parties in other sectors, we were able to identify themes, issues and opportunities that went beyond the individual sectors.

We set out three key cross-cutting questions in our interim report, which we have sought to address on a cross-sector basis:

1. Data: Do organisations and regulators have access to the data they require to adequately identify and mitigate bias?

2. Tools and techniques: What statistical and technical solutions are available now or will be required in future to identify and mitigate bias and which represent best practice?

3. Governance: Who should be responsible for governing, auditing and assuring these algorithmic decision-making systems?

These questions have guided the review. While we have made sector-specific recommendations where appropriate, our recommendations focus more heavily on opportunities to address these questions (and others) across multiple sectors.

Evidence

Our evidence base for this final report is informed by a variety of work including:

- A landscape summary led by Professor Michael Rovatsos of the University of Edinburgh, which assessed the current academic and policy literature.

- An open call for evidence which received responses from a wide cross section of academic institutions and individuals, civil society, industry and the public sector.

- A series of semi-structured interviews with companies in the financial services and recruitment sectors developing and using algorithmic tools.

- Work with the Behavioural Insights Team on attitudes to the use of algorithms in personal banking.[footnote 2]

- Commissioned research from the Royal United Services Institute (RUSI) on data analytics in policing in England and Wales.[footnote 3]

- Contracted work by Faculty on technical bias mitigation techniques.[footnote 4]

- Representative polling on public attitudes to a number of the issues raised in this report, conducted by Deltapoll as part of CDEI’s ongoing public engagement work.

- Meetings with a variety of stakeholders including regulators, industry groups, civil society organisations, academics and government departments, as well as desk-based research to understand the existing technical and policy landscape.

2. The issue

Summary

- Algorithms are structured processes, which have long been used to aid human decision-making. Recent developments in machine learning techniques and exponential growth in data have allowed for more sophisticated and complex algorithmic decisions, and there has been corresponding growth in usage of algorithm-supported decision-making across many areas of society.

- This growth has been accompanied by significant concerns about bias; that the use of algorithms can cause a systematic skew in decision-making that results in unfair outcomes. There is clear evidence that algorithmic bias can occur, whether through entrenching previous human biases or introducing new ones.

- Some forms of bias constitute discrimination under the Equality Act 2010, namely when bias leads to unfair treatment based on certain protected characteristics. There are also other kinds of algorithmic bias that are non-discriminatory, but still lead to unfair outcomes.

- There are multiple concepts of fairness, some of which are incompatible and many of which are ambiguous. In human decisions we can often accept this ambiguity and allow for human judgement to consider complex reasons for a decision. In contrast, algorithms are unambiguous.

- Fairness is about much more than the absence of bias: fair decisions also need to be non-arbitrary and reasonable, to consider equality implications, and to respect the circumstances and personal agency of the individuals concerned.

- Despite concerns about ‘black box’ algorithms, in some ways algorithms can be more transparent than human decisions; unlike with a human, it is possible to reliably test how an algorithm responds to changes in parts of the input. There are opportunities to deploy algorithmic decision-making transparently, and enable the identification and mitigation of systematic bias in ways that are challenging with humans. Human developers and users of algorithms must decide the concepts of fairness that apply to their context, and ensure that algorithms deliver fair outcomes.

- Fairness through unawareness is often not enough to prevent bias: ignoring protected characteristics is insufficient to prevent algorithmic bias and it can prevent organisations from identifying and addressing bias.

- The need to address algorithmic bias goes beyond regulatory requirements under equality and data protection law. It is also critical for innovation that algorithms are used in a way that is both fair, and seen by the public to be fair.

2.1 Introduction

Human decision-making has always been flawed, shaped by individual or societal biases that are often unconscious. Over the years, society has identified ways of improving it, often by building processes and structures that encourage us to make decisions in a fairer and more objective way, from agreed social norms to equality legislation. However, new technology is introducing new complexities. The growing use of algorithms in decision-making has raised concerns around bias and fairness.

Even in this data-driven context, the challenges are not new. In 1988, the UK Commission for Racial Equality found a British medical school guilty of algorithmic discrimination when inviting applicants to interview.[footnote 5] The computer program they had used was determined to be biased against both women and applicants with non-European names.

The growth in this area has been driven by the availability and volume of (often personal) data that can be used to train machine learning models, or as inputs into decisions, as well as cheaper and easier availability of computing power, and innovations in tools and techniques. As usage of algorithmic tools grows, so does their complexity. Understanding the risks is therefore crucial to ensure that these tools have a positive impact and improve decision-making.

Algorithms have different but related vulnerabilities to human decision-making processes. They can be more able to explain themselves statistically, but less able to explain themselves in human terms. They are more consistent than humans but are less able to take nuanced contextual factors into account. They can be highly scalable and efficient, but consequently capable of consistently applying errors to very large populations. They can also act to obscure the accountabilities and liabilities that individual people or organisations have for making fair decisions.

2.2 The use of algorithms in decision-making

In simple terms, an algorithm is a structured process. Using structured processes to aid human decision-making is much older than computation. Over time, the tools and approaches available to deploy such decision-making have become more sophisticated. Many organisations responsible for making large numbers of structured decisions (for example, whether an individual qualifies for a welfare benefits payment, or whether a bank should offer a customer a loan) make these processes scalable and consistent by giving their staff well-structured processes and rules to follow. Initial computerisation of such decisions took a similar path, with humans designing structured processes (or algorithms) to be followed by a computer handling an application.

However, technology has reached a point where the specifics of those decision-making processes are not always explicitly manually designed. Machine learning tools often seek to find patterns in data without requiring the developer to specify which factors to use or how exactly to link them, before formalising relationships or extracting information that could be useful to make decisions. The results of these tools can be simple and intuitive for humans to understand and interpret, but they can also be highly complex.

Some sectors, such as credit scoring and insurance, have a long history of using statistical techniques to inform the design of automated processes based on historical data. An ecosystem has evolved that helps to manage some of the potential risks; for example, credit reference agencies offer customers the ability to see their own credit history, and offer guidance on the factors that can affect credit scoring. In these cases, there is a range of UK regulations that govern the factors that can and cannot be used.

We are now seeing the application of data-driven decision-making in a much wider range of scenarios. There are a number of drivers for this increase, including:

- The exponential growth in the amount of data held by organisations, which makes more decision-making processes amenable to data-driven approaches.

- Improvements in the availability and cost of computing power and skills.

- Increased focus on cost saving, driven by fiscal constraints in the public sector, and competition from disruptive new entrants in many private sector markets.

- Advances in machine learning techniques, especially deep neural networks, that have rapidly brought many problems previously inaccessible to computers into routine everyday use (e.g. image and speech recognition).

In simple terms, an algorithm is a set of instructions designed to perform a specific task. In algorithmic decision-making, the word is applied in two different contexts:

- A machine learning algorithm takes data as an input to create a model. This can be a one-off process, or something that happens continually as new data is gathered.

- Algorithm can also be used to describe a structured process for making a decision, whether followed by a human or computer, and possibly incorporating a machine learning model.

The usage is usually clear from context. In this review we are focused mainly on decision-making processes involving machine learning algorithms, although some of the content is also relevant to other structured decision-making processes. Note that there is no hard definition of exactly which statistical techniques and algorithms constitute novel machine learning. We have observed that many recent developments involve applying existing statistical techniques more widely in new sectors, rather than developing novel techniques.

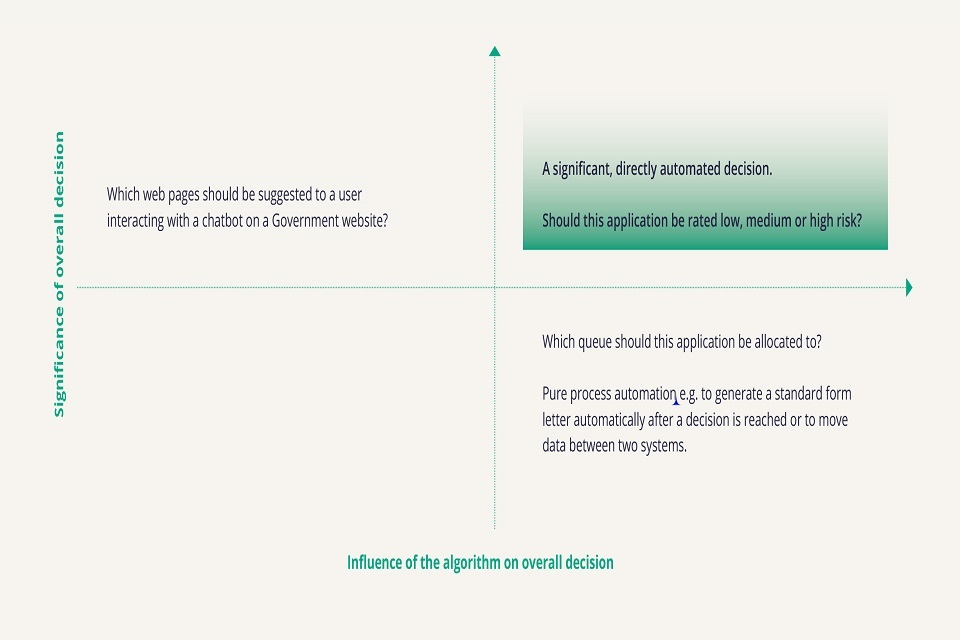

We interpret algorithmic decision-making to include any decision-making process where an algorithm makes, or meaningfully assists, the decision. This includes what is sometimes referred to as algorithmically-assisted decision-making. In this review we are focused mainly on decisions about individual people.

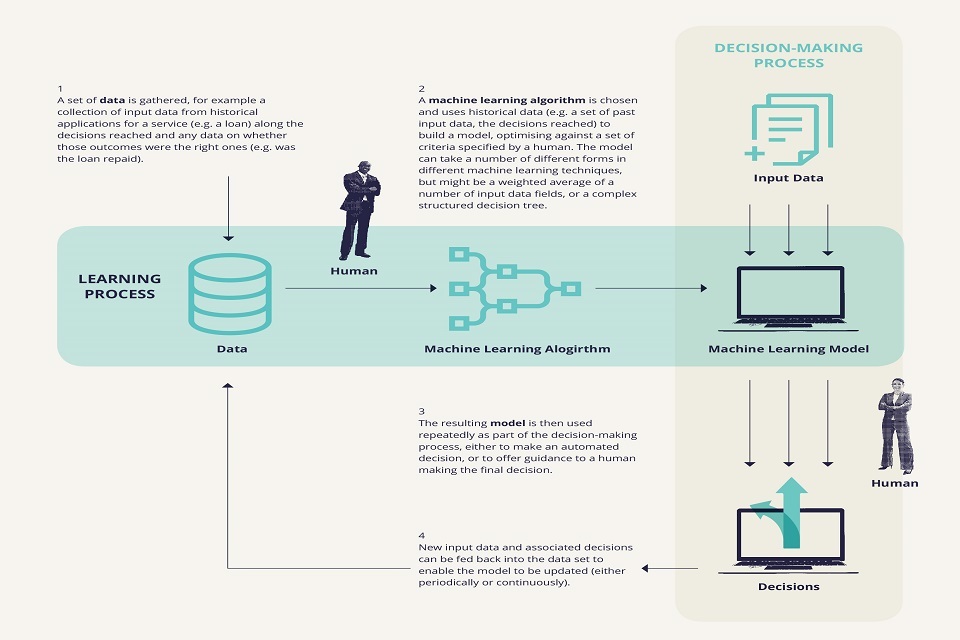

Figure 1 below shows an example of how a machine learning algorithm can be used within a decision-making process, such as a bank making a decision on whether to offer a loan to an individual.

Machine-learning algorithms can be used within decision-making processes. First, a set of data is gathered, for example a collection of input data from historical applications for a service (e.g. a loan) along with the decisions reached, and any data on whether those outcomes were the right ones (e.g. was the loan repaid). A human decides what data to make available to the model. Second, a machine learning algorithm is chosen, and uses historical data (e.g. a set of past input data and the decisions reached) to build a model, optimising against a set of criteria specified by a human. The model can take a number of different forms in different machine learning techniques, but might be a weighted average of a number of input data fields, or a complex structured decision tree. Third, the resulting model is then used repeatedly as part of the decision-making process, either to make an automated decision, or to offer guidance to a human making the final decision. A human can be involved at this stage to vet the machine-learning model’s outputs and make judgements about how to incorporate this information into a final decision. Fourth, new input data and associated decisions can be fed back into the data set to enable the model to be updated (either periodically or continuously).

Figure 1: How data and algorithms come together to support decision-making
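The four stages described for Figure 1 can be illustrated with a short sketch. This is not a method taken from the review: the loan records are invented, the model is a deliberately small logistic regression (a weighted sum of two input fields), and names such as `decide`, `approve_at` and `decline_at` are hypothetical.

```python
import math

# Stage 1: a human chooses which historical data to make available.
# Each record: (income in £10k, existing debt in £10k, loan repaid? 1/0).
# All figures are invented purely for illustration.
history = [
    (5.0, 0.5, 1), (4.2, 1.0, 1), (3.8, 0.2, 1), (6.1, 2.0, 1),
    (2.0, 2.5, 0), (1.5, 1.8, 0), (2.8, 3.0, 0), (3.0, 2.9, 0),
]


def predict(weights, income, debt):
    """Weighted sum of the input fields, squashed to a 0-1 repayment score."""
    z = weights[0] + weights[1] * income + weights[2] * debt
    z = max(-60.0, min(60.0, z))  # clamp to keep exp() numerically safe
    return 1.0 / (1.0 + math.exp(-z))


def train(records, epochs=2000, rate=0.1):
    """Stage 2: fit the weights to past outcomes by gradient descent,
    optimising a criterion (log loss) that a human has chosen."""
    weights = [0.0, 0.0, 0.0]
    for _ in range(epochs):
        for income, debt, repaid in records:
            error = predict(weights, income, debt) - repaid
            weights[0] -= rate * error
            weights[1] -= rate * error * income
            weights[2] -= rate * error * debt
    return weights


def decide(weights, income, debt, approve_at=0.8, decline_at=0.2):
    """Stage 3: use the model in the decision process.
    Borderline scores are referred to a human rather than automated."""
    score = predict(weights, income, debt)
    if score >= approve_at:
        return "approve"
    if score <= decline_at:
        return "decline"
    return "refer to human reviewer"


model = train(history)
print(decide(model, income=5.5, debt=0.4))
print(decide(model, income=1.8, debt=2.7))

# Stage 4: feed a new application and its eventual outcome back into the
# data set, then rebuild the model (here, a simple periodic retrain).
history.append((5.5, 0.4, 1))
model = train(history)
```

Even in a sketch this small, humans choose the input fields, the optimisation criterion and the referral thresholds, and any skew in the historical outcomes would carry straight through to the fitted weights.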

It is important to emphasise that algorithms often do not represent the complete decision-making process. There may be elements of human judgement, exceptions treated outside of the usual process and opportunities for appeal or reconsideration. In fact, for significant decisions, an appropriate provision for human review will usually be required to comply with data protection law. Even before an algorithm is deployed into a decision-making process, it is humans that decide on the objectives it is trying to meet, the data available to it, and how the output is used.

It is therefore critical to consider not only the algorithmic aspect, but the whole decision-making process that sits around it. Human intervention in these processes will vary, and in some cases may be absent entirely in fully automated systems. Ultimately the aim is not just to avoid bias in algorithmic aspects of a process, but that the process as a whole achieves fair decision-making.

2.3 Bias

As algorithmic decision-making grows in scale, increasing concerns are being raised around the risks of bias. Bias has a precise meaning in statistics, referring to a systematic skew in results, that is an output that is not correct on average with respect to the overall population being sampled.

However, in general usage, and in this review, bias is used to refer to an output that is not only skewed, but skewed in a way that is unfair (see below for a discussion on what unfair might mean in this context).

Bias can enter algorithmic decision-making systems in a number of ways, including:

- Historical bias: The data that the model is built, tested and operated on could introduce bias. This may be because of previously biased human decision-making or due to societal or historical inequalities. For example, if a company’s current workforce is predominantly male then the algorithm may reinforce this, whether the imbalance was originally caused by biased recruitment processes or other historical factors. If your criminal record is in part a result of how likely you are to be arrested (as compared to someone else with the same history of behaviour, but not arrests), an algorithm constructed to assess risk of reoffending risks reflecting not the true likelihood of reoffending, but the more biased likelihood of being caught reoffending.

- Data selection bias: How the data is collected and selected could mean it is not representative. For example, over- or under-recording of particular groups could mean the algorithm was less accurate for some people, or gave a skewed picture of particular groups. This has been the main cause of some of the widely reported problems with accuracy of some facial recognition algorithms across different ethnic groups, with attempts to address this focusing on ensuring a better balance in training data.[footnote 6]

- Algorithmic design bias: It may also be that the design of the algorithm leads to introduction of bias. For example, CDEI’s Review of online targeting noted examples of algorithms placing job advertisements online designed to optimise for engagement at a given cost, leading to such adverts being more frequently targeted at men because women are more costly to advertise to.

- Human oversight is widely considered to be a good thing when algorithms are making decisions, and mitigates the risk that purely algorithmic processes cannot apply human judgement to deal with unfamiliar situations. However, depending on how humans interpret or use the outputs of an algorithm, there is also a risk that bias re-enters the process as the human applies their own conscious or unconscious biases to the final decision.

There is also risk that bias can be amplified over time by feedback loops, as models are incrementally re-trained on new data generated, either fully or partly, via use of earlier versions of the model in decision-making. For example, if a model predicting crime rates based on historical arrest data is used to prioritise police resources, then arrests in high risk areas could increase further, reinforcing the imbalance. CDEI’s Landscape summary discusses this issue in more detail.
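The feedback loop described above can be made concrete with a toy simulation. It is a sketch under strong simplifying assumptions, not a model of real policing: the two areas, their identical underlying offending rate, the starting arrest counts and the `hot_share` allocation rule are all hypothetical.

```python
def simulate_feedback(rounds=5, patrols=100, hot_share=0.7):
    """Return area A's share of all recorded arrests after each round."""
    true_rate = {"A": 0.1, "B": 0.1}   # identical underlying offending rates
    arrests = {"A": 55.0, "B": 45.0}   # historical records start slightly skewed
    shares = []
    for _ in range(rounds):
        # The "model": whichever area has more recorded arrests is rated
        # higher risk and is given the larger share of patrols.
        hot = max(arrests, key=arrests.get)
        for area in arrests:
            share = hot_share if area == hot else 1 - hot_share
            # New arrests follow patrol presence, not any real difference
            # in offending between the two areas.
            arrests[area] += patrols * share * true_rate[area]
        shares.append(arrests["A"] / sum(arrests.values()))
    return shares


shares = simulate_feedback()
print([round(s, 3) for s in shares])
```

Although both areas have the same underlying rate, area A's share of recorded arrests rises every round, because each round's records are partly produced by the previous round's allocation.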

2.4 Discrimination and equality

In this report we use the word discrimination in the sense defined in the Equality Act 2010, meaning unfavourable treatment on the basis of a protected characteristic.[footnote 7]

The Equality Act 2010[footnote 8] makes it unlawful to discriminate against someone on the basis of certain protected characteristics (for example age, race, sex, disability) in public functions, employment and the provision of goods and services.

The choice of these characteristics is a recognition that they have been used to treat people unfairly in the past and that, as a society, we have deemed this unfairness unacceptable. Many, albeit not all, of the concerns about algorithmic bias relate to situations where that bias may lead to discrimination in the sense set out in the Equality Act 2010.

The Equality Act 2010[footnote 9] defines two main categories of discrimination:[footnote 10]

- Direct Discrimination: When a person is treated less favourably than another because of a protected characteristic.

- Indirect Discrimination: When a wider policy or practice, even if it applies to everyone, disadvantages a group of people who share a protected characteristic (and there is not a legitimate reason for doing so).

Where this discrimination is direct, the interpretation of the law in an algorithmic decision-making process seems relatively clear. If an algorithmic model explicitly leads to someone being treated less favourably on the basis of a protected characteristic, that would be unlawful. There are some very specific exceptions to this in the case of direct discrimination on the basis of age (where such discrimination could be lawful if it is a proportionate means of achieving a legitimate aim, e.g. services targeted at a particular age range) or limited positive actions in favour of those with disabilities.

However, the increased use of data-driven technology has created new possibilities for indirect discrimination. For example, a model might consider an individual’s postcode. This is not a protected characteristic, but there is some correlation between postcode and race. Such a model, used in a decision-making process (perhaps in financial services or policing) could in principle cause indirect racial discrimination. Whether that is the case or not depends on a judgement about the extent to which such selection methods are a proportionate means of achieving a legitimate aim.[footnote 11] For example, an insurer might be able to provide good reasons why postcode is a relevant risk factor in a type of insurance. The level of clarity about what is and is not acceptable practice varies by sector, reflecting in part the maturity in using data in complex ways. As algorithmic decision-making spreads into more use cases and sectors, clear context-specific norms will need to be established. Indeed as the ability of algorithms to deduce protected characteristics with certainty from proxies continues to improve, it could even be argued that some examples could potentially cross into direct discrimination.
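The postcode example can be sketched in a few lines. The applicants, postcode areas and group labels below are invented, and `model_decision` is a hypothetical stand-in for a model that never sees the protected characteristic. The resulting ratio is only a descriptive statistic: whether a disparity amounts to indirect discrimination depends on the proportionality judgement described above, not on any numeric threshold.

```python
from collections import defaultdict

# Hypothetical applicants: (postcode area, ethnic group).
# The group label is held for monitoring only and is never shown to the model.
applicants = [
    ("N1", "group_x"), ("N1", "group_x"), ("N1", "group_x"), ("N1", "group_y"),
    ("S2", "group_y"), ("S2", "group_y"), ("S2", "group_y"), ("S2", "group_x"),
]


def model_decision(postcode):
    """Stand-in model: it uses postcode alone and approves one area."""
    return postcode == "N1"


def approval_rates(records):
    """Approval rate per group, using the monitoring data."""
    approved = defaultdict(int)
    total = defaultdict(int)
    for postcode, group in records:
        total[group] += 1
        approved[group] += int(model_decision(postcode))
    return {group: approved[group] / total[group] for group in total}


rates = approval_rates(applicants)
ratio = min(rates.values()) / max(rates.values())
print(rates, round(ratio, 2))
```

The model is "unaware" of the protected characteristic, yet approval rates differ sharply between groups because postcode is correlated with group. The disparity is only visible because the group data was collected for monitoring.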

Unfair bias beyond discrimination

Discrimination is a narrower concept than bias. Protected characteristics have been included in law due to historical evidence of systematic unfair treatment, but individuals can also experience unfair treatment on the basis of other characteristics that are not protected.

There will always be grey areas where individuals experience systematic and unfair bias on the basis of characteristics that are not protected, for example accent, hairstyle, education or socio-economic status.[footnote 12] In some cases, these may be considered as indirect discrimination if they are connected with protected characteristics, but in other cases they may reflect unfair biases that are not protected by discrimination law.

However, the increased use of algorithms may exacerbate this difficulty. Algorithms that are trained on existing decisions can encode the biases present in those decisions. This can reinforce and amplify existing unfair bias, whether on the basis of protected characteristics or not.
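As a rough illustration of how training on existing decisions can carry a bias forward, the sketch below fits a deliberately simple rule to a small set of past decisions. Everything in it is a hypothetical assumption made for illustration: the postcode areas `AA1` and `BB2`, the groups `X` and `Y`, the records themselves, and the majority-vote "model". It is not a description of any real system.

```python
from collections import Counter, defaultdict

# Hypothetical past decisions: (postcode_area, group, approved).
# Group X was approved 3 times out of 4, group Y only 1 time out of 4.
history = [
    ("AA1", "X", True), ("AA1", "X", True), ("AA1", "X", True), ("AA1", "Y", False),
    ("BB2", "Y", False), ("BB2", "Y", False), ("BB2", "Y", True), ("BB2", "X", False),
]

def train(records):
    """Learn the majority past decision for each postcode area.

    The group label is never used as an input to the learned rule.
    """
    votes = defaultdict(Counter)
    for postcode, _group, approved in records:
        votes[postcode][approved] += 1
    return {pc: counts[True] > counts[False] for pc, counts in votes.items()}

def approval_rate(records, model, group):
    """Share of a group's members that the learned rule would approve."""
    decisions = [model[pc] for pc, g, _ in records if g == group]
    return sum(decisions) / len(decisions)

model = train(history)
rate_x = approval_rate(history, model, "X")
rate_y = approval_rate(history, model, "Y")
print(model, rate_x, rate_y)
```

In this toy data the learned rule approves everyone in one postcode area and no one in the other, so the gap between the two groups in the past decisions reappears in the new ones even though group membership was never an input.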

Algorithmic decision-making can also go beyond amplifying existing biases, to creating new biases that may be unfair, though difficult to address through discrimination law. This is because machine learning algorithms find new statistical relationships, without necessarily considering whether the basis for those relationships is fair, and then apply this systematically in large numbers of individual decisions.

2.5 Fairness

Overview

We defined bias as including an element of unfairness. This highlights challenges in defining what we mean by fairness, which is a complex and long-debated topic. Notions of fairness are neither universal nor unambiguous, and they are often inconsistent with one another.

In human decision-making systems, it is possible to leave a degree of ambiguity about how fairness is defined. Humans may make decisions for complex reasons, and are not always able to articulate their full reasoning for making a decision, even to themselves. There are pros and cons to this. It allows for good fair-minded decision-makers to consider the specific individual circumstances, and human understanding of the reasons for why these circumstances might not conform to typical patterns. This is especially important in some of the most critical life-affecting decisions, such as those in policing or social services, where decisions often need to be made on the basis of limited or uncertain information; or where wider circumstances, beyond the scope of the specific decision, need to be taken into account. It is hard to imagine that automated decisions could ever fully replace human judgement in such cases. But human decisions are also open to the conscious or unconscious biases of the decision-makers, as well as variations in their competence, concentration levels or mood when specific decisions are made.

Algorithms, by contrast, are unambiguous. If we want a model to comply with a definition of fairness, we must tell it explicitly what that definition is. How significant a challenge that is depends on context. Sometimes the meaning of fairness is very clearly defined; to take an extreme example, a chess playing AI achieves fairness by following the rules of the game. Often though, existing rules or processes require a human decision-maker to exercise discretion or judgement, or to account for data that is difficult to include in a model (e.g. context around the decision that cannot be readily quantified). Existing decision-making processes must be fully understood in context in order to decide whether algorithmic decision-making is likely to be appropriate. For example, police officers are charged with enforcing the criminal law, but it is often necessary for officers to apply discretion on whether a breach of the letter of the law warrants action. This is broadly a good thing, but such discretion also allows an individual’s personal biases, whether conscious or unconscious, to affect decisions.

Even in cases where fairness can be more precisely defined, it can still be challenging to capture all relevant aspects of fairness in a mathematical definition. In fact, the trade-offs between mathematical definitions demonstrate that a model cannot conform to all possible fairness definitions at the same time. Humans must choose which notions of fairness are appropriate for a particular algorithm, and they need to be willing to do so upfront when a model is built and a process is designed.

The General Data Protection Regulation (GDPR) and Data Protection Act 2018 contain a requirement that organisations should use personal data in a way that is fair. The legislation does not elaborate further on the meaning of fairness, but the ICO guides organisations that “In general, fairness means that you should only handle personal data in ways that people would reasonably expect and not use it in ways that have unjustified adverse effects on them.”[footnote 13] Note that the discussion in this section is wider than the notion in GDPR, and does not attempt to define how the word fair should be interpreted in that context.

Notions of fairness

Notions of fair decision-making (whether human or algorithmic) are typically gathered into two broad categories:

- Procedural fairness is concerned with ‘fair treatment’ of people, i.e. equal treatment within the process of how a decision is made. It might include, for example, defining an objective set of criteria for decisions, and enabling individuals to understand and challenge decisions about them.

- Outcome fairness is concerned with what decisions are made, i.e. measuring average outcomes of a decision-making process and assessing how they compare to an expected baseline. The concept of what a fair outcome means is of course highly subjective; there are multiple different definitions of outcome fairness.

Some of these definitions are complementary to each other, and none alone can capture all notions of fairness. A ‘fair’ process may still produce ‘unfair’ results, and vice versa, depending on your perspective. Even within outcome fairness there are many mutually incompatible definitions for a fair outcome. Consider for example a bank making a decision on whether an applicant should be eligible for a given loan, and the role of an applicant’s sex in this decision. Two possible definitions of outcome fairness in this example are:

A. The probability of getting a loan should be the same for men and women.

B. The probability of getting a loan should be the same for men and women who earn the same income.

Taken individually, either of these might seem like an acceptable definition of fair. But they are incompatible. In the real world sex and income are not independent of each other; the UK has a gender pay gap meaning that, on average, men earn more than women.[footnote 14] Given that gap, it is mathematically impossible to achieve both A and B simultaneously.
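The conflict between A and B can be seen in a small worked example. The sketch below uses invented numbers throughout: two income bands, the share of men and women in each band, and an approval probability that depends only on income band (so definition B holds by construction). It also assumes that approval chances genuinely differ between income bands, which is the situation the loan example has in mind.

```python
from fractions import Fraction

# Hypothetical population: share of each sex in each income band.
# The figures are illustrative only and simply encode "men earn more on average".
income_share = {
    "men":   {"low": Fraction(2, 5), "high": Fraction(3, 5)},
    "women": {"low": Fraction(3, 5), "high": Fraction(2, 5)},
}

# A policy satisfying definition B: the approval probability depends only on
# income band, so men and women with the same income are treated identically.
approval_by_income = {"low": Fraction(1, 5), "high": Fraction(4, 5)}

def overall_approval_rate(sex):
    """Overall probability of approval for one sex (the quantity in definition A)."""
    return sum(
        share * approval_by_income[band]
        for band, share in income_share[sex].items()
    )

rate_men = overall_approval_rate("men")
rate_women = overall_approval_rate("women")
print(rate_men, rate_women)  # 14/25 11/25
```

With these numbers the policy meets B but gives men an overall approval rate of 14/25 and women 11/25, so A fails. Equalising the overall rates would require approving men and women in the same income band at different rates, which would break B.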

This example is by no means exhaustive in highlighting the possible conflicting definitions that can be made, with a large collection of possible definitions identified in the machine learning literature.[footnote 15]

In human decision-making we can often accept ambiguity around this type of issue, but when determining if an algorithmic decision-making process is fair, we have to be able to explicitly determine what notion of fairness we are trying to optimise for. It is a human judgement call whether the variable (in this case salary) acting as a proxy for a protected characteristic (in this case sex) is seen as reasonable and proportionate in the context. We investigated public reactions to a similar example to this in work with the Behavioural Insights Team (see further detail in Chapter 4).

Addressing fairness

Even when we can agree what constitutes fairness, it is not always clear how to respond. Conflicting views about the value of fairness definitions arise when the application of a process intended to be fair produces outcomes regarded as unfair. This can be explained in several ways, for example:

- Differences in outcomes are evidence that the process is not fair. If in principle, there is no good reason why there should be differences on average in the ability of men and women to do a particular job, differences in the outcomes between male and female applicants may be evidence that a process is biased and failing to accurately identify those most able. By correcting this, the process is both fairer and more efficient.

- Differences in outcomes are the consequence of past injustices. For example, a particular set of previous experience might be regarded as a necessary requirement for a role, but might be more common among certain socio-economic backgrounds due to past differences in access to employment and educational opportunities. Sometimes it might be appropriate for an employer to be more flexible on requirements to enable them to get the benefits of a more diverse workforce (perhaps bearing a cost of additional training); but sometimes this may not be possible for an individual employer to resolve in their recruitment, especially for highly specialist roles.

The first argument implies greater outcome fairness is consistent with more accurate and fair decision-making. The second argues that different groups ought to be treated differently to correct for historical wrongs and is the argument associated with quota regimes. It is not possible to reach a general opinion on which argument is correct; this is highly dependent on the context (and there are also other possible explanations).

In decision-making processes based on human judgement it is rarely possible to fully separate the causes of differences in outcomes. Human recruiters may believe they are accurately assessing capabilities, but if the outcomes seem skewed it is not always possible to determine the extent to which this in fact reflects bias in methods of assessing capabilities.

How do we handle this in the human world? There are a variety of techniques. For example, steps to ensure fairness in an interview-based recruitment process might include:

- Training interviewers to recognise and challenge their own individual unconscious biases.

- Policies on the composition of interview panels.

- Designing assessment processes that score candidates against objective criteria.

- Applying formal or informal quotas (though a quota based on protected characteristics would usually be unlawful in the UK).

Why algorithms are different

The increased use of more complex algorithmic approaches in decision-making introduces a number of new challenges and opportunities.

The need for conscious decisions about fairness: In data-driven systems, organisations need to address more of these issues at the point a model is built, rather than relying on human decision-makers to interpret guidance appropriately (an algorithm can’t apply “common sense” on a case-by-case basis). Humans are able to balance things implicitly; machines will optimise without any balance if asked to do so.

Explainability: Data-driven systems allow for a degree of explainability about the factors causing variation in the outcomes of decision-making systems between different groups and to assess whether or not this is regarded as fair. For example, it is possible to examine more directly the degree to which relevant characteristics are acting as a proxy for other characteristics, and causing differences in outcomes between different groups. If a recruitment process included requirements for length of service and qualification, it would be possible to see whether, for example, length of service was generally lower for women due to career breaks and that this was causing an imbalance.

The extent to which this is possible depends on the complexity of the algorithm used. Dynamic algorithms drawing on large datasets may not allow for a precise attribution of the extent to which the outcome of the process for an individual woman was attributable to a particular characteristic and its association with gender. However, it is possible to assess the degree to which, over a time period, different characteristics are influencing recruitment decisions and how they correlate with protected characteristics during that time.
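The kind of check described in the length-of-service example might look something like the following minimal sketch. The applicant records, the `MIN_SERVICE` threshold and the function names are all hypothetical, chosen only to show the shape of the analysis: compare outcomes between groups, then compare them again among applicants who are alike on the suspected proxy.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical applicant records: (gender, years_of_service, shortlisted).
applicants = [
    ("F", 2, False), ("F", 3, False), ("F", 6, True), ("F", 4, False),
    ("M", 5, True), ("M", 7, True), ("M", 3, False), ("M", 8, True),
]

MIN_SERVICE = 5  # hypothetical length-of-service requirement, in years

def summarise(records):
    """Mean length of service and shortlisting rate for each gender."""
    by_group = defaultdict(list)
    for gender, years, shortlisted in records:
        by_group[gender].append((years, shortlisted))
    return {
        g: {
            "mean_years": mean(y for y, _ in rows),
            "shortlist_rate": mean(1 if s else 0 for _, s in rows),
        }
        for g, rows in by_group.items()
    }

def shortlist_rate_within_band(records, gender, meets_threshold):
    """Shortlisting rate for one gender among those who do (or do not) meet the threshold."""
    rows = [
        s for g, y, s in records
        if g == gender and (y >= MIN_SERVICE) == meets_threshold
    ]
    return mean(1 if s else 0 for s in rows) if rows else None

summary = summarise(applicants)
print(summary)
```

In this invented data the overall shortlisting rates differ between women and men, but the rates match once applicants are compared within the same length-of-service band. That pattern would suggest length of service is driving the imbalance; whether relying on it is fair remains a human judgement.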

The term ‘black box’ is often used to describe situations where, for a variety of different reasons, an explanation for a decision is unobtainable. This can include commercial issues (e.g. the decision-making organisation does not understand the details of the algorithm which their supplier considers their own intellectual property) or technical reasons (e.g. machine learning techniques that are less accessible for easy human explanation of individual decisions). The Information Commissioner’s Office and the Alan Turing Institute have recently published detailed joint advice on how organisations can overcome some of these challenges and provide a level of explanation of decisions.[footnote 16]

Scale of impact: The potential breadth of impact of an algorithm links to the market dynamics. Many algorithmic software tools are developed as platforms and sold across many companies. It is therefore possible, for example, that individuals applying to multiple jobs could be rejected at sift by the same algorithm (perhaps sold to a large number of companies recruiting for the same skill sets in the same industry). If the algorithm does this for reasons irrelevant to their actual performance, but on the basis of a set of characteristics that are not protected, then this feels very much like systematic discrimination against a group of individuals, but the Equality Act provides no obvious protection against this.

Algorithmic decision-making will inevitably increase over time; the aim should be to ensure that this happens in a way that acts to challenge bias, increase fairness and promote equality, rather than entrenching existing problems. The recommendations of this review are targeted at making this happen.

Case study: Exam results in August 2020