Measuring the economic social and environmental value of public sector location data

Updated 22 August 2022

A note from the Geospatial Commission

Location data is fundamentally embedded into our daily lives yet its true value is not well understood. It delivers significant benefits for people, organisations and wider society through the location-hungry services and products that underpin our digital society such as live traffic updates on our phones, tracking construction of new infrastructure, and providing key information for emergency resilience planning.

Location data is used by a diverse range of sectors across the UK economy including the public sector. It is increasingly central to policy development and delivery of key government priorities, such as Net Zero and Levelling Up, following its crucial role in supporting management of the COVID-19 pandemic.

Despite its clear importance as a strategic national asset, public sector projects to invest in the creation, improvement and sharing of location data have struggled to understand, assess and articulate the benefits of the investment – which is a necessity to unlock funding. Describing the value of location data is hard because:

- Value is often realised only when location data is combined with other datasets - Once a location dataset is linked with other subject-specific data, it provides new insights and opportunities that inform decisions and operations. This makes it difficult to fully value the location data at any given point in time, with a high likelihood of being underestimated.

- Value varies depending on the intended use – For example, mobile phone data could be considered more valuable for understanding total hourly footfall on high streets, but less valuable for understanding priority land preservation areas. There is also no one size fits all valuation method for data, which can make the decision about the best approach to estimating value difficult.

- Value can be difficult to foresee - Data may have limited use today but be very valuable in the future following changes in processing capability, technology and/or new needs unknown as of today. This makes it very challenging to predict future value with certainty. Furthermore, location data use can also spill over onto the rest of society and the economy, for example timely location data can provide better routing decisions that avoid congestion areas for drivers, resulting in faster journey times (direct impact). Fewer cars on popular roads will ease congestion leading to a reduction in emissions and pollution improving health outcomes (spill over impact). Such value is not routinely captured.

Existing guidance can provide general frameworks for valuing government interventions, however for the reasons outlined above valuing geospatial data requires a more tailored approach. The Geospatial Commission committed to publishing guidance for measuring the economic, social and environmental value of public sector location data investments as part of Mission 1 of the UK Geospatial Strategy to ‘promote and safeguard the use of location data’, reiterated in our Annual Plan 22/23.

Many of the principles set out in this guidance are also relevant for data investments more generally and support commitments to improve the use of digital and data, as set out in the National Data Strategy and the Roadmap to Digital and Data 2022-2025.

The guidance comprises a practical and proportional seven-step framework providing public sector organisations with the tools necessary to consistently and coherently understand and assess the value of location data such as best practice approaches and tangible case studies. Built on a foundation of existing research information and experiences, the framework aims to empower public sector organisations to more effectively drive the investment case for location data. However, it does not assume that government intervention is the only way to achieve impact in the ecosystem.

We hope the structured approach to considering investments and impacts will also be relevant and beneficial for private sector investment decisions relating to the geospatial ecosystem.

We envision this guidance being used alongside the Commission’s published works - namely the Q-FAIR Assessment and the ABC’s of ethical location data use, collectively working in harmony to form the UK’s national location data framework.

We would like to thank Frontier Economics and the diverse range of organisations and individuals who have contributed to the development of the guidance. We see this guidance as a crucial component of addressing the challenges of valuing location data in an accurate and consistent manner and look forward to working with the community to put it into action.

Geospatial Commission

Executive Summary

Geospatial data, otherwise known as location data, plays a significant role for business success, individual convenience and public sector delivery. It tells us where people and objects are and underpins key services used by organisations and individuals daily. If used effectively, location data drives economic, social and environmental value.

Geospatial data (and data more generally) does not always have an established market price: its full value may only be determined after it has been used or applied. This in turn makes it challenging to understand and appraise the value of new or improved geospatial data and can lead to underinvestment in the geospatial data ecosystem. To support organisations in addressing this challenge, the Geospatial Commission commissioned Frontier Economics to develop a practical framework for appraising benefits in this context.

This guidance provides a methodical, logical and consistent framework for measuring the economic, social and environmental benefits associated with geospatial data investments in the public sector. It is a practical resource to value improvements in location data and/or the wider geospatial ecosystem particularly for public sector bodies when building a business case for investment. These investments include creation or acquisition of new data assets, improvements to or maintenance of existing geospatial data assets, or efforts to support the wider ecosystem.

This guidance focuses primarily on public sector appraisals and is guided by and consistent with wider best practice in the public sector, particularly HM Treasury’s Green Book. This guidance tailors the key principles of the Green Book to geospatial data investments, providing a structured approach to understanding and more effectively articulating its benefits and value.

A number of complementary methods were deployed to build on existing work and address evidence gaps including a targeted evidence and literature review and semi-structured interviews with a range of key stakeholders.

The framework for appraising geospatial investments contains seven steps. These steps are illustrated in Figure 1.

Figure 1: Step-By-Step Framework

-

Articulate rationale and strategic fit of investment

-

Classify type of investment

-

Set out how the investment will affect data characteristics

-

Identify data uses or applications

-

Identify potential benefits

-

Prioritise high impact benefits for further in-depth analysis

-

Assess benefits associated with known & unknown uses

The first five steps of this seven step framework involve development of a conceptual theory of change. This theory of change articulates the rationale behind the intervention, classifies the investment, links it to specific data characteristics (if relevant) and identifies use cases and their benefits. In doing so it enables the user to map out the expected pathways to impact and value.

Steps 6 and 7 relate to the empirical assessment, quantification and monetisation of the benefits included in the theory of change. In general, the user should estimate the value of each use case in full and then consider the specific impact of geospatial data. This approach is broadly applicable and can cover different types of benefits. [footnote 1]

This guidance is intended to be as practically useful as possible, acknowledging the resource constraints that public sector organisations face. Some of the steps cover best practice when developing a business case (e.g. Step 1) whereas others are more novel and are specific to geospatial investments (e.g. Steps 2 and 3). Other steps (e.g. Steps 4, 5, 6 and 7) can be thought of as cross-cutting advice that are key elements of an impactful business case. The guidance can support both decision makers and assessors to better understand and compare the benefits of geospatial data investments.

Introduction

Context

Geospatial data, otherwise known as location data, [footnote 2] describes where places, objects and people are. It takes many different forms and can relate for example to specific building addresses, larger geographic areas, geology or the location of people. There are foundational datasets which relate to the underlying fixed geographic framework (such as addressing and topography), and dynamic datasets which cover spatial patterns of movement by subjects across a fixed geographic framework (such as GPS or mobile phone data).

It plays a significant role for business success, individual conveniences and the public sector delivery. Location data is strategic national asset and underpins a significant amount of activity within the UK economy, society and the environment [footnote 3] as well as existing services used by organisations and individuals daily. These include real-time information on travel disruptions, insurance policies informed by coastal erosion patterns and geo-fenced zones that can support sustainable urban planning.

Public sector organisations operate across multiple areas of the geospatial data ecosystem including the direct supply of geospatial data in some cases. There is a clear economic rationale for this involvement.[footnote 4] For example, compelling commercial motives to supply some forms of geospatial data across all of the UK in a consistent format are not always present, even if there is clear demand for such products. Previous attempts to value geospatial data investments across the public sector have used a variety of different methods and approaches, each with their own strengths, weaknesses and suitability given the context. Challenges in consistently and accurately articulating the value of geospatial data hampers public sector organisations in obtaining resources to fund location data projects. This in turn leads to underinvestment in the geospatial data ecosystem.[footnote 5]

Structure of the report

This report is structured as follows:

- Chapter 1 the introduction

- Chapter 2 sets out general principles and challenges of valuing investments in geospatial data.

- Chapter 3 then presents the entire recommended framework. This covers firstly the rationale for the intervention, broad categories of intervention and the role of data characteristics in determining value. Then potential use cases are set out and benefits associated with interventions are described. Finally, prioritisation of use cases and benefit appraisal methods are considered.

- Chapter 4 presents areas of potential future research which can build on the work undertaken to date.

Annexes:

Annex A presents additional detail on the evidence review and qualitative engagement.

Annex B describes characteristics of data and geospatial data in detail.

Annex C presents theories of change which relate to specific data characteristics.

Annex D sets out example data sources that may be helpful when considering the environmental benefits of an intervention.

Annex E sets out further detail on current methods for valuing location data investments.

Annex F outlines how and when to undertake direct valuation of data.

Accompanying this guidance is a series of case studies demonstrating the application of the guidance to existing investments and interventions by the public sector. Readers are encouraged to use these case studies as learning tools for demonstrating the value of geospatial data investment.

What does this guidance aim to do?

This guidance provides a practical step-by-step framework for appraising the benefits of public sector interventions in the geospatial ecosystem. It is focused on the evidence required for the public sector to make investment decisions. As such the framework that has been developed is consistent with wider best practice in the public sector, particularly HM Treasury’s Green Book. This guidance is a resource to support public sector assessments of value for investments in location data and interventions in the wider geospatial ecosystem when building relevant business cases. Appraisal guidance will also promote the use of location data, improve access to better location data and enable innovation.

What does this guidance not aim to do?

This guidance does not cover all areas of business case development. Nor is the framework intended to be a best practice guide for project evaluation.[footnote 6] This guidance does not aim to provide specific appraisal values or ranges for geospatial data. It is focused on providing a framework for undertaking robust and consistent analysis within a geospatial context. To access public sector funding, an organisation will have to provide a wide range of information. This includes content for each of the standard 5 cases (Strategic, Economic, Financial, Commercial and Management), as set out by HM Treasury’s Green Book. This guidance is not intended to cover all of these areas and should be read in conjunction with other best practice information.

Who is this guidance aimed at?

This framework is intended to be used by both public sector decision makers and assessors. However, the principles of geospatial data valuation will have wider applications beyond the public sector. Private sector organisations who are considering the value for money of geospatial data investments may find it useful to follow some or all of the framework steps, particularly when considering the added value of a particular geospatial solution. This guidance and the underlying framework have been developed with location data in mind. However, many of the challenges that apply to valuation of geospatial data will also apply to other forms of data (which do not contain a locational element). Therefore, the framework described in this document may also be useful when considering investments in data more generally.

When should this guidance be used?

This guidance should be used whenever a public sector body is considering intervening in the geospatial data ecosystem. These interventions could cover creation of data assets, improvements to existing data assets, efforts to support the wider ecosystem and maintenance of existing data assets. It could also cover interventions targeted at making efficiency savings (for example, replacing legacy data, tools and processes).[footnote 7]

This guidance should be used as part of a wider suite of guidance and principles which the Geospatial Commission is developing. This suite will help users to better understand and compare the merits of one investment over another. It includes the Q-FAIR Assessment report, as well as the guidance on Building Public Confidence in Location Data which seeks to support organisations unlock value from sensitive location data whilst mitigating security, ethical and privacy risks.[footnote 8]

How was this guidance developed?

This guidance was informed by evidence that was collected via a number of complementary methods. Frontier’s work was also informed by ongoing communication and feedback with the Geospatial Commission through a series of internal meetings and wider workshops. Overall, the guidance reflects three inputs:

-

A targeted but wide-ranging evidence and literature review. This covered the different types of geospatial data that exist and how to measure the value of data. A full list of sources reviewed are included in Annex A.

-

Qualitative engagement in the form of semi-structured interviews with a range of stakeholders. Topic guides were developed for each interview to ensure that key areas were covered, and interviewees could provide input which aligned with their expertise and experience. Representatives included the Geospatial Commission, public sector holders of geospatial data as well as private and public sector data users. During this phase the primary objective was to identify key geospatial data characteristics that drive value. Potential use cases were also discussed.

-

Case study engagement which consisted of four deep dive examinations into previous geospatial investments. These case studies allowed for further exploration of the different methodologies used to estimate the value of location data, supported by additional desk review using published documentation and material provided by stakeholders. The case studies explore a diverse range of interventions, their impacts and the valuation methods used. The methods and approaches were the key focus to inform development of the guidance. Readers may find it helpful to draw on specific elements of the methodologies used. The four case studies are:

-

National Underground Asset Register (NUAR): This investment involves developing a data-sharing platform to provide a combined, interactive, standardised digital view of the location and attributes of buried assets (such as pipes and cables). This investment addresses the legal, commercial, safety and security concerns expressed by owners of underground assets. These concerns have previously acted as a barrier to bringing together data in a consistent digital format.

-

Public Sector Geospatial Agreement (PSGA): This sets out how Ordnance Survey (OS) provides enhanced location data, services and expertise to the public sector, developers and OS Partners. This has involved significant investments including the provision of improved data sets and facilitating more flexible access to granular data attributes. PSGA members access this data through a customer engagement platform (OS Data Hub).

-

Transport for London (TfL) open data: This example relates to the decision made by TfL to release open information via APIs on timetables, service status and disruption which covers all modes of transport. This decision enables users of the transport network to easily access travel information both through TfL’s Go app, and other customer-facing products and applications created by multiple businesses who use TfL’s open data.

-

HM Land Registry (HMLR) data valuation: HMLR wanted to quantify and evidence the value of its datasets to direct data consumers. This was motivated by an interest in demonstrating the impact of existing datasets and potentially exploring how releasing additional HMLR datasets could drive future economic growth. This model has been applied to four HMLR datasets.

Geospatial Commission

The Geospatial Commission was established in 2018 as an independent, expert committee responsible for setting the UK’s geospatial strategy and coordinating public sector geospatial activity. Its aim is to unlock the significant economic, social and environmental opportunities offered by location data and to boost the UK’s global geospatial expertise. The Commission has a mandate and budget to drive and deliver changes by working in partnership with others.

The Geospatial Commission has a mandate and budget to drive and deliver changes by working in partnership with others. This means they:

- Provide strategic oversight of the geospatial ecosystem in the UK, setting geospatial strategy, policy and standards.

- Hold the budget for the public sector’s largest investment in geospatial data; and

- Make targeted investments in data projects that accelerate innovation and adoption of geospatial data applications.

Principles and challenges of valuing location data Investments

This chapter presents overarching principles which apply to geospatial investments and articulates some of the key challenges with carrying out robust and proportionate benefit appraisal.

Characteristics of Geospatial Data which make valuation challenging

Considering the value of location data investments has several complexities:

(1) Market prices for data might not reflect its full value

- Location data has a wide variety of applications and its benefits often spillover, generating economic, social and environmental benefits that accrue beyond the direct users of the data. Externalities (both positive and negative) occur when benefits or costs are felt by those not initially involved in the initial exchange or use of the data. For example, better vehicle routing based on live road congestion data can lead to less congestion and less air pollution. This results in fewer adverse health outcomes in citizens. These benefits are not captured by market prices, which could result in underinvestment. The full extent of the value generated by geospatial data needs to consider a wide range of potential use cases as well as the direct value, indirect value and spill-over effects for each use case, which can be difficult to assess in full. Some data has very widespread economic, social and environmental value, for example identifiers that provide a ‘golden thread’ to enable other datasets to be linked together.

(2) There are dependencies to extracting value from geospatial data

-

The value of geospatial data may only be fully realised when combined with another dataset. Location data is unique in that it offers spatial insights that can help answer and inform a vast array of potential policy questions. However, given the wide range of potential applications,[footnote 9] it is difficult to be comprehensive and foresee the full breadth of potential impacts. Furthermore, combining datasets may raise important ethical and privacy considerations which may act as a barrier to achieving the full economic, social and environmental value that location data can drive.

-

The value of geospatial data is often one of multiple inputs into enabling decision making. In order to unlock the full value of geospatial data complementary investments in software, hardware and skilled people will be required. The United Nations Initiative on Global Geospatial Information Management (IGIF) has defined nine strategic pathways which can enable the efficient use of geospatial information - reflected in the UK Geospatial Strategy’s 4 missions. One of these pathways is data. However, this needs to be supported by other investments, including: (i) governance, (ii) standards and (iii) capacity building programmes. Geospatial data appraisals should consider the extent to which these supporting factors are in place when assessing potential benefits.

(3) There are uncertainties around when or where benefits can arise

-

Geospatial data can have a large option value (value of retaining options for the future). When valuing location data, it is important to consider existing and potential use cases which may not fully materialise until after the investment has taken place. Some location data today may not seem useful. However, given the right advances in technology, processing capability or changes in societal challenges, it could be important for future applications [footnote 10]. This large option value increases the complexity of any geospatial investment appraisal relative to other forms of appraisal. This particular characteristic is likely to apply to geospatial data to a greater extent than other forms of data because geospatial data has a particularly wide range of potential uses (and does not have suitable substitutes). As a result, the need for a logical and clear articulation of benefits is especially important. This guidance will provide a common framework for this.

-

Geospatial data is an experience good. Its full value is usually determined after use as the suitability of a dataset will vary from one use case to the next. Specific datasets have known traits and characteristics that make them suitable in some situations but not others. For example, aggregated mobile phone data can inform hourly footfall into a city. However, this same dataset is less valuable for understanding habitat preservation areas (unlike SSSI [footnote 11] or AONB [footnote 12] datasets). This makes value appraisals inherently more difficult.

As a result, valuation approaches for location data interventions are not always straightforward. Methods are wide ranging, divergent and inconsistent. Some approaches are expensive and resource-intensive to deploy but highly specific. Others are not sufficiently detailed to inform an assessment of value but require less resources. Chapter 3 sets out the recommended framework that provides the best balance between resource requirements and specificity, enabling a consistent valuation of location data investments.

Importance of joining up across Government

When considering the value of geospatial data, it is vital that public sector organisations are coordinated. Additional value will be generated where different forms of data are brought together and where multiple worthwhile use cases can be identified.[footnote 13] The potential positive implications of this integration should be considered as part of any valuation exercise. The geospatial ecosystem is evolving rapidly and there are common themes and trends that affect multiple public holders of geospatial data simultaneously. These could include improvements in technology and changes in data users’ requirements.

Therefore, multiple public sector holders of geospatial data may face similar decisions about how to improve existing data assets. There may be opportunities to share learnings across different organisations, or if requirements are similar enough, opportunities to acquire data collectively and more efficiently. Likewise advances in technology may mean that certain types of geospatial data can now be provided in a cost-effective way by the private sector. Thus, the optimal role played by multiple public sector organisations in relation to geospatial data may need to evolve over time.

Step-by-Step Framework

The question of how best to value data assets has been considered in previous research. A detailed assessment of methods that have been used in the past is presented in Annex E covering their strengths, weaknesses and when the method is most appropriate. This assessment informed the development of the framework, which is now presented in this chapter. The methods are broadly categorised into three groups: cost-based methods, market-based methods and use-based methods. This chapter sets out our recommended use-based method to value the benefits of location data use - referred to as the “use case approach” from here onwards. This approach is intended to be a proportionate way of capturing value, maintaining analytical rigour and integrity.

Overview of Framework

The framework contains seven steps:

-

Articulate rationale and strategic fit of investment

-

Classify type of investment

-

Set out how the investment will affect data characteristics

-

Identify data uses or applications

-

Identify potential benefits

-

Prioritise high impact benefits for further in-depth analysis

-

Assess benefits associated with known & unknown uses

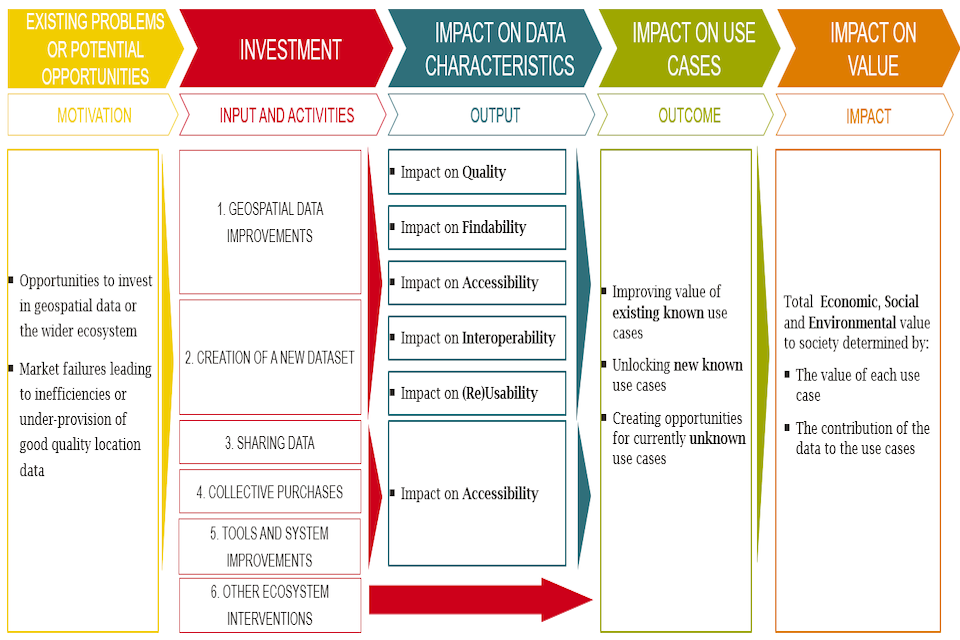

The first five steps involve the development of a conceptual theory of change (see Figure 3 below). Step 1 articulates the rationale behind the intervention, Step 2, classifies the investment to identify potential gaps or overlaps in intended impact, Step 3 links the investment to specific data characteristics (if relevant) to determine drivers of change to potential applications and uses, whilst Steps 4 and 5 identifies these use cases and potential benefits. Steps 6 and 7 relate to the empirical assessment, quantification and monetisation of the benefits included in the theory of change. Further detail on each step is contained in the following sections. Note that in some cases, Steps 3 and 4 may require more than one iteration in order to be as comprehensive as possible.

Figure 3: Stylised Theory of Change

Existing problems or potential opportunities> Investment> Impact on data characteristics> Impact on use cases> Impact on economic, social and environmental value

Source: Frontier based on desk review and qualitative engagement

Step 1: Strategic Rationale

Prior to the detailed appraisal of economic, social and environmental benefits, it is vital to articulate the underlying rationale for the specific investments in the geospatial data ecosystem and their Strategic Fit.[footnote 14] Strategic Fit may encompass exploration of the underlying problem or opportunity, links to other programmes as well as congruence with policy objectives (see figure below).

Figure 4 Framework Step:1

- Articulate rationale and strategic fit of investment

- Classify type of investment

- Set out how the investment will affect data characteristics

- Identify data uses or applications

- Identify potential benefits

- Prioritise high impact benefits for further in-depth analysis

- Assess benefits associated with known & unknown uses

Figure 5 Elements of strategic fit

- Opportunities or market failures

- Links to other similar projects/programmes

- Links to wider policy objectives

This assessment will generally be included within the Strategic Case of any business case. [footnote 15] Moreover, the impact of data investments will be maximised when supporting investments, such as in capabilities and infrastructure, are made.

Opportunities or Market Failures

The underlying rationale for specific investments will vary depending on the context. These could relate to addressing a specific problem or seeking to take advantage of an opportunity. Q-FAIR (Quality, Findable, Accessible, Interoperable and Reusable) is the Geospatial Commission’s initiative to establish systematic improvements to the UK geospatial data ecosystem. The first Q-FAIR report presents current approaches to using FAIR principles and how their application may benefit users, helping organisations think about problems and opportunities in a consistent way.

Links to Other Similar Projects/Programmes

It is recommended that organisations undertake the necessary due diligence on relevant programmes either existing, planned or underway across the public sector and note how they interact. Organisations should highlight why their proposed intervention either contributes to, enhances or, in some cases replaces existing programmes and projects. The types of programmes that will be considered are highly specific to the intervention under consideration.

Links to Wider Policy Objectives

In particular, organisations should think about how best an investment aligns to the UK Geospatial Strategy, which is aimed at enabling the government to unlock opportunities from location data. This will ensure the use of geospatial data helps to drive broader value by aligning with where the UK is focusing its collective effort, such as science and technology, levelling up and net zero.

Strategic Rationale

The Strategic rationale for the investment under consideration needs to be articulated by the responsible organisation. There are several questions that the organisation should answer to help to tease out this underlying rationale and identify the right investments:

- What is the current situation and why is it not optimal (i.e. what are the opportunities or issues)? [footnote 16]

- What would happen if no action were taken (i.e what is the counterfactual)? 3 What are the goals/objectives of any potential investment? 4 Who are the main stakeholders that any potential investment could impact? 5 Has the organisation making the case for investment engaged with these stakeholders to identify or validate the potential opportunities or issues? 6 Has the organisation thought about and set out a clear list of options for potential investments? 7 How will the investments contribute to the goals listed above? 8 Do the investments have anything in common with other recent investments made in this context by other public sector bodies? Were they successful or unsuccessful? Why? 9 Are there any ethical and privacy risks that need to be considered or mitigated? 10 What are the technical challenges associated with the investments?

The Counterfactual for Investment

Describing the rationale for investment will also require establishing and articulating the counterfactual against which any potential investments would be assessed. In many cases the appropriate counterfactual will be continuation of the status quo. This would be the case where for example a new data set under consideration would never be developed in the absence of public sector investment.

In other cases, the ongoing evolution of technology means that a new data set will be provided by the private sector at some point in the future, which can make the setting of the counterfactual challenging. In these cases, it is important for organisations to engage with stakeholders to understand the progress and scope of this evolution, to then recognise how public sector interventions may be accelerating its development or reducing associated risks.

Step 2: Identifying and Classifying the Type of Investment

There are many possible location data interventions to address a given situation. After determining the overall Strategic Fit of the potential investment (see Step 1) it is important to categorise the various investment options that are being considered to identify any gaps or overlaps in impacts. The specific opportunities and problems identified in Step 1 through Q-FAIR will require different types of solutions. The impact of investing in different categories of investment are described in detail in Step 3 and Annex C.

Table 1 shows a number of different investment categories that have been defined as part of this work. These have been informed by past investments [footnote 16] and potential future interventions identified across a range of stakeholders (see Annex 2) and aimed at making it easier for organisations to consider where their intervention is best classified. [footnote 17]

Figure 6 Framework Step:2

- Articulate rationale and strategic fit of investment

- Classify type of investment

- Set out how the investment will affect data characteristics

- Identify data uses or applications

- Identify potential benefits

- Prioritise high impact benefits for further in-depth analysis

- Assess benefits associated with known & unknown uses

Each investment will have its own: (i) underlying rationale which motivates the deployment of public resources; (ii) data characteristic groups most likely to be impacted (if any); (iii) common use cases that may be unlocked; and (iv) economic, social and/or environmental benefits that may occur as a result of the investment.

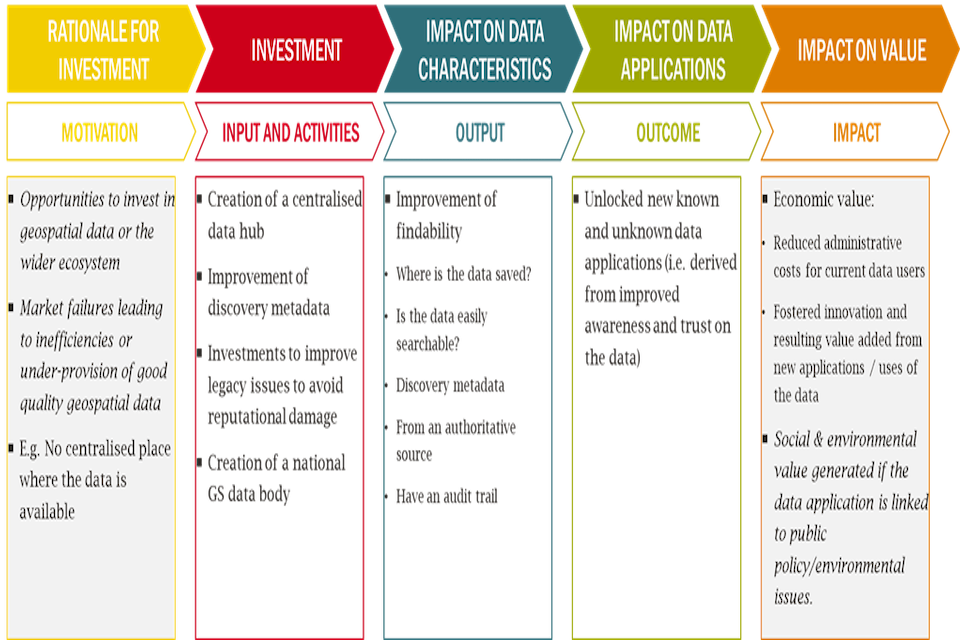

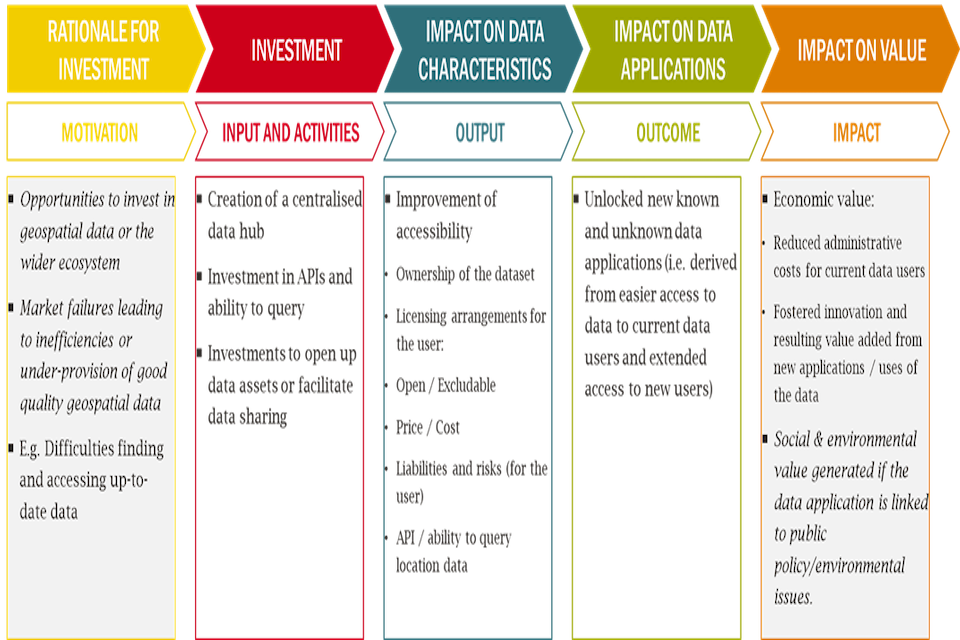

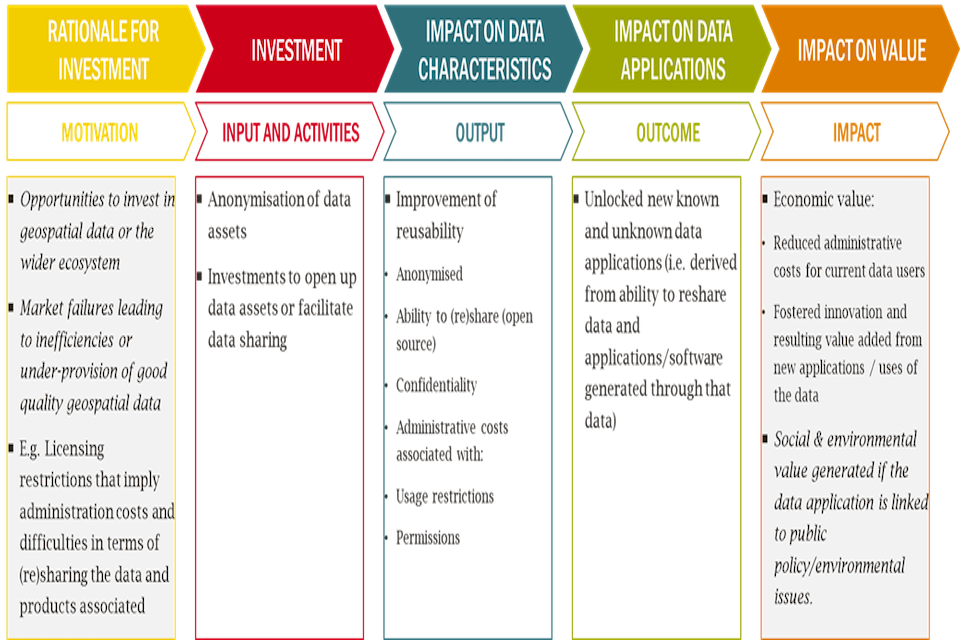

Annex C sets out examples of Theories of Change for different types of investments - broadened out to QFAIR categories. Responsible organisations may refer to these examples to help understand the likely impacts of their own investment.

Table 1: List of Geospatial data investment categories

| Investment Category | Explanation | Example |

|---|---|---|

| Improving or maintaining existing geospatial datasets | These are aimed at either maintaining the current contents of existing datasets or improving its quality and interoperability. For improvements, quality affects the specific contents of the dataset, whilst interoperability changes how the data can be used with other data. [footnote 19] These investments can ensure current applications can continue or improve current use cases as well as unlock future use cases to foster innovation, productivity and economic growth. | Maintaining: HM Land Registry have valued some existing datasets which require ongoing maintenance and curation. Future decisions can be guided by this valuation exercise. Improving: The Public Sector Geospatial Agreement (PSGA) replaced the previous Public Sector Mapping Agreement (PSMA) and improved the characteristics of a range of geospatial data assets. The PSGA built on the existing OS offer and facilitated the provision of new, richer data. The PSGA enabled the release of four new OS OpenData products improving data interoperability, including key unique identifiers such as Unique Property Reference Numbers (UPRNs) and Unique Street Reference Numbers (USRNs).[footnote 20] |

| Creation of new dataset | New datasets are typically created to address an identified problem or opportunity. However, a new dataset can have additional uses beyond its primary purpose. These additional uses may be unknown at the time of creation, thereby unlocking more value than initially anticipated. Coordination issues or the absence of commercial incentives mean that certain geospatial datasets do not exist nor are properly maintained by the private sector. This can occur at the national and regional level because the investment costs are high or because the data provider will not be able to capture resulting benefits. However, the investment could yield significant benefits to both users and wider society at large, meaning public sector intervention may be required. | The British Geological Society created the GeoCoast package of datasets to inform and support coastal management and adaptation. Use of this data can facilitate greater understanding of coastal erosion risk, can ensure that coastal defences are placed in the optimal locations and also improve the accuracy of insurance premia. |

| Data sharing policy | Improvements in geospatial data sharing policy seek to make data more available, usually at an affordable price (as set out in licensing terms and arrangements). These tend to affect the findability and accessibility of data, which can open up a wide range of use cases. These use cases can generate new markets, increase competition within existing markets and foster innovation. This sort of investment tends to improve the findability and accessibility characteristics of geospatial data and increase the potential pool of users. Interventions in this category may also reduce administrative and data handling costs amongst the current user base. The associated benefits often relate to efficiencies, productivity and economic growth. Access to affordable geospatial data reduces the barriers to entry for small companies and organisations that could not make use of the data before. The resulting commercialised products and applications can generate jobs and support economic growth. | Transport for London (TfL) made data on timetables, arrivals/departures, service status and disruption openly available to users in stages since 2009 [footnote 21]. Following this intervention, over 600 apps were released based on TfL data and analysis in 2017 suggested that annual economic benefits and savings of up to £130m for travellers, as well as 500 jobs generated in London as a result of this data sharing and subsequent commercial usage. |

| Collective purchases | Collective purchase of geospatial data involves centralised purchasing for a range of public bodies or centralising the licensing agreements for geospatial data. These investments may reduce duplication and lead to cost savings and public sector efficiencies. These investments also have a positive impact on the accessibility of data if new bodies gain access to the data for the first time. | The Geospatial Commission is exploring the use of centrally funded, collective purchase arrangements for geospatial data sets to deliver public sector wide access. For example, having already secured access to aerial photography data for all public sector bodies. |

| Tools and systems improvements | This category of geospatial investments covers developments of data platforms and other mechanisms to aid in the use and dissemination of geospatial data. Depending on the improvement, this can affect the findability, accessibility, interoperability and reusability of a dataset. | The National Underground Asset Register (NUAR) is a data-sharing platform providing a combined, interactive, standardised digital view of the location and attributes of buried assets (such as pipes and cables). |

| Other ecosystem interventions | Interventions outside of those categories above are also important. The United Nations initiative on Global Geospatial Information Management (UNGGIM) has defined nine strategic pathways which can enable the efficient use of geospatial information. One of these pathways is data. However, this data pathway needs to be supported by other investments in the other pathways which include governance, policy, standards and education. Therefore, these supporting investments aim to unlock the full value of existing geospatial assets. | The Geospatial Commission has previously convened a Skills Forum. This forum has a diverse and cross-section membership seeking to enhance the UK’s geospatial capabilities, skills and awareness |

Step 3: Data Characteristics and Links to Q-Fair

This step relates to how a specific intervention is likely to affect data characteristics. The value that can be extracted from a geospatial data asset will depend on its inherent characteristics. Investments to change the data may alter these traits and have knock-on effects on value. An existing data asset’s value will depend on how relevant current traits are for different uses and applications. Understanding how an intervention will affect data characteristics will also help identify relevant use cases and subsequent benefits that can then be valued. These latter stages are covered in the subsequent steps of this guidance.

Some geospatial interventions may not directly alter any characteristics. When appraising the value of investments which do not lead to any change in data characteristics Step 3 of the framework does not need to be followed.

Equally, when valuing whole datasets for the purposes of informing decisions about continued maintenance, we recommend responsible organisations follow a similar methodology to HM Land Registry (see accompanying case studies, Case Study 2 – HM Land Registry) by conducting a Q-FAIR assessment of the data and engaging closely with stakeholders before using the approaches set out in Annex E and Annex F to assess value.

Figure 7 Framework Step:3

- Articulate rationale and strategic fit of investment

- Classify type of investment

- Set out how the investment will affect data characteristics

- Identify data uses or applications

- Identify potential benefits

- Prioritise high impact benefits for further in-depth analysis

- Assess benefits associated with known & unknown uses

Previous work has identified a longlist of data characteristics which can impact on value [footnote 22] by affecting the demand or supply of a dataset. The full list is in Annex B. For the purposes of this guidance a subset of the most relevant geospatial data characteristics has been identified in Table 2.

Table 2: Short list of data characteristics

| Characteristic | Description |

|---|---|

| Where is the data (Location of storage and use) | Where is the data stored and to what jurisdiction is it subject to |

| Source | From where the data has been collected, purchased, obtained or transformed |

| Ownership | Who owns the data from a legal perspective |

| Licensing | Legal instrument specifying the terms and conditions around using and (re)sharing the data |

| Open/Excludable | How data can be accessed: is data freely available or is it part of a private market |

| Price / Cost | For excludable data, what is the price / cost of purchasing it |

| API / ability to query | Software that allows a user to obtain the data filtered and organised as requested by the user |

| Anonymised | Data where the details have been removed so a person cannot be identified by name, address, etc. |

| Ability to (re)share (open source) | Determined by the licensing agreements, open data that can be freely (re)shared |

| Liabilities and risks (from the supplier and passed on to the user) | Legal responsibilities that may trigger a financial loss for the data user, originated at the moment the data was collected (e.g. phone data, with names and addresses) |

| Confidentiality | Protection against disclosure of sensitive or personal information |

| Usage restrictions | Type, duration and source of the limitation to the use of the asset |

| Permissions | Authorisation that allows users to access the data |

| Support | Whether the data is in digital, analogue or mixed format |

| Format / Structure | Defined structure for the processing, storage, or display of data |

| Joinability / Linkability | Number and type of data assets with which it can be linked |

| Unique identifiers | Variable in a dataset that serves as a key or reference to pin down a particular observation |

| Standardised | Formatted in a way such that it conforms with common guidelines |

| Coordinates | Groups of numbers that indicate the position to a point, allowing different layers to come together |

| Authoritative / Reputable source | Trusted to being accurate and reliable |

| Audit trail / Lineage | A record that provides evidence on the sequence of activities and transactions that affected the data |

| Liabilities and risks (for the user) | Potential legal responsibilities that may trigger a financial loss and/or a reputational damage for the data user if they do not comply with the usage restrictions in the data |

| Completeness | Proportion of missing values |

| Consistent / Coherent | Proportion of data-points recorded in the same way |

| Representativeness / Generality | Size of the group/population to which the data refer |

| Interpretable / Good metadata | Ability to be understood and to derive insights, accounting for any potential caveats and limitations |

| Accuracy | Proportion of correct data-points |

| Timeliness | Whether the data asset is real-time, delayed or historic |

| Time Series | The data includes several data periods. Suitability for before and after analysis and to control for more factors. Key driver of the descriptive, analytical and predictive power of the data |

| Granularity / Precision / Resolution | How precise are the data-points in the asset (level of detail) |

| Relevant subject matter | What does the data asset refer to and is it useful for the given purpose |

Source: Frontier review of evidence. The Value of Data Assets. A report for the Department for Digital, Culture, Media and Sport. Frontier Economics. In press.

Note: The short list of data characteristics includes data characteristics that can be targets for investment and may determine fitness for purpose for one or more use cases.

Investments in data assets tend to impact a group of interrelated data characteristics. To acknowledge these interdependencies, relevant characteristics have been grouped into categories which are fully consistent with Q-FAIR. Thinking in terms of groups of characteristics rather than individual traits allows for more practical considerations of impacts, supporting the development of a clear Theory of Change model for decision makers, which maps out the pathway to impact and value.

Project leads considering location data investments should consider the impact on users when undertaking this exercise to identify how their intervention affects the characteristics of data. The figure below sets out groupings of relevant data characteristics that should be considered.

Figure 8: Geospatial data characteristics: Q-FAIR framework

Quality

Objective Quality

“more always better”

Completeness

Consistent/Coherent

Representativeness/Generality

Interpretable/Good metadata

Accuracy

Relevant subject matter

Subjective Quality

“more not always better”

Timeliness

Time Series

Granularity/precision/Resolution

Findability

Where is the data saved/published?

Is the data easily searchable?

Discovery metadata

From an authoritative/reputable source

Have an audit trail/lineage

Accessibility

Ownership of the dataset

Licensing arrangements for the user: Open/Excludable, Price/Cost

Liabilities and risks (for the user)

API/ability to query location data

Size

Interoperability

Processing requirements: Support, Format/Structure

Joinability/Linkability: Unique identifiers, Standardised, Coordinates

(Re)Usability

Anonymised

Ability to (re)share (open source)

Confidentiality

Administrative costs associated with: Usage restrictions, permissions

Source: Frontier

Note: It is important to consider some of the “accessibility categories” separately, since some investments may impact value in conflicting directions. For example, an investment to open data will make it available for more users and new use cases will be unlocked. However, additional administrative costs may come up in order to minimise liabilities and risks for the user to ensure that confidential information is not disclosed.

Quality characteristics can be classified into objective and subjective quality characteristics. Objective quality characteristics are likely to be relevant to most data applications whereas subjective quality traits will be key to determining value only for particular data applications. The quality of the information will be generally determined according to how relevant it is to the use case.

Findability characteristics determine whether the dataset is easily discovered, and the relevant data users are aware of the data’s existence. Certain traits such as the reputability of the source and the quality of the metadata are key to finding data and determining fitness for purpose.

Accessibility characteristics relate to how easy it is to obtain the data. This could include the existence of licensing requirements and whether or not the data is open. More accessible data will allow users to make personalised queries to better understand the data.

Interoperability characteristics determine how easy it is from a technical point of view to use a dataset and merge/link it with other data assets. The structure of data, the availability of unique identifiers and level of standardisation will determine whether the dataset can be used for certain use cases. These characteristics will also determine whether other use cases may be unlocked by being combined with other data.

Reusability characteristics determine whether and how the data can be used to generate value through its (potential) use cases. Reusability characteristics may be related to data security and data governance which impact how data can be used beyond its initial purpose. Certain characteristics such as the level of anonymisation may facilitate handling the data, whereas others such as confidentiality requirements may impose additional administrative costs.

Investments may impact multiple characteristic groups. For example, an investment focused on improving data access by opening up the data may simultaneously lead to the imposition of user restrictions in order to mitigate confidentiality risks, affecting both accessibility and reusability. Understanding what data traits are affected by an intervention is a key part of benefit appraisal.

Step 4: Identifying use cases

Use cases are the ways in which individuals and organisations use or apply location data assets. After determining how an investment option is likely to impact data characteristics (Step 3), organisations should consider any use cases that may be impacted by these changes. An intervention may add value to existing use cases and/or unlock new applications. In some cases, Steps 3 and 4 may require more than one iteration in order to be as comprehensive as possible. Only after these use cases have been considered that the benefits should be appraised (appraisal set out in subsequent steps).

Figure 9 Framework Step:4

- Articulate rationale and strategic fit of investment

- Classify type of investment

- Set out how the investment will affect data characteristics

- Identify data uses or applications

- Identify potential benefits

- Prioritise high impact benefits for further in-depth analysis

- Assess benefits associated with known & unknown uses

Improvements in data characteristics should be targeted at the likely application of the data. In some cases, increasing the frequency of data collection may add value. For example, a frequently updated dataset on the height of buildings may allow users to determine potential risks associated with drone flights in an area. However, at other times, more up-to-date data adds little extra value but bears additional costs.

Examples of use cases

The table below provides examples of use cases associated with various geospatial datasets. This is not intended to be an exhaustive list. Within these examples, location data can allow users to: improve measurement and monitoring (e.g. of how well current policies are working), uncover patterns and trends, make better decisions and deliver better services.

Table 3: Examples of use cases for datasets

| Use case category | Description |

|---|---|

| Local government – education | Local authorities use census data to plan resources such as school places |

| Retail location planning | This is an industry where location of stores in relation to customers is very important. Small area census data are used extensively to maximise returns on investment |

| Utilities | The main water and sewerage companies in England and Wales use census data to plan new investment and monitor leakage |

| Utilities | Excavators need to identify underground assets to dig safely without striking an underground asset |

| Marketing | Geospatial data can be used to facilitate the better adoption of location-based advertising. |

| On-site efficiency and project savings | Excavators need to identify underground assets data so that workers can efficiently orientate themselves on site. Geospatial data can also be used to facilitate digital surveying |

| Site planning and data exchange | Project planners need to identify the location and attributes of underground assets to avoid project delays. Data needs to be shared through a central platform so asset owners can efficiently respond to data requests |

| Construction | Geospatial data can be used to support the optimal route locations for new pipelines or power lines |

| Conveyancing and mortgage lending | Used in the transfer of the legal title to (of) property from one person to another |

| Tax collection | The administration and efficiency of property tax collection is reliant on accurate geospatial data on the location and status of buildings |

| Transport | Businesses such as Waze, Google, Apple and Citymapper can create customerfacing apps to improve the travel experience |

| Housing, land use and planning | Developers can use data to understand impact of construction process on local species |

| Planning | Planners use a range of data (including risk assessment information, environmental data, and land use data) to identify critical and vulnerable infrastructure assets. This allows for better understanding of risks and disaster response planning. Geo-demographic data can also be used by urban planners to forecast demand for public services for example |

| Emergency response | Emergency responders need to access up to date information about their environment (e.g. building access point locations and the locations of traffic congestion) to better understand an emergency and can take immediate informed action |

| Natural resource management | Data is used to ensure land and other natural resources are used sustainably and safeguarded in the long run. This will include data on soil quality, planning permission data and local area air quality data. Location data can also be used to facilitate precision agriculture and automation of farm equipment. Public bodies can use location data to manage the distribution of the support payments they provide |

| Enabling smart infrastructure | Data is needed to support the deployment and optimisation of smart infrastructure such as remote sensors which can enable better management of the road network |

| Managing heritage assets | Data on the location of points of interest can be used to identify sites of archaeological or cultural interest to better protect and safeguard their future |

| Flood risk planning | Geospatial data can be used to understand the likelihood of flooding in a particular location given historical patterns. This type of analysis can provide a holistic view of the entire urban environment and enable better planning for and mitigate of flood events |

| Alternative energy production and distribution | Geospatial data can ensure that organisations know the location of existing infrastructure (in particular electricity). This enables for strategic planning of new renewable energy production, network connections or consumption points (charging stations) |

Source: Frontier based on evidence review and qualitative engagement

Approaches for identifying and categorising use cases

Various evidence sources can be used to create a list of known existing and future potential use cases. The approach used should follow a proportionate approach to appraisal, considering the likely scale of the impact as well as time and resource constraints. Evidence sources could include:

-

Expert internal knowledge: public sector holders of geospatial data have a good understanding of how their datasets are used. They may have also proactively identified areas where potential improvements would add value

-

Previous appraisals or evaluations: previous appraisals or evaluation of similar investments may have focused on particular benefits or use cases.

-

Direct discussion with stakeholders: this could include data users, industry stakeholders, members of the six partner bodies to the Geospatial Commission, or the Geospatial Commission itself. This is an effective method, but care may need to be taken to ensure that the views presented are representative (readers may wish to consider more formal expert elicitation approaches to help ensure balanced views [footnote 23], but this is not a requirement). This is relevant here as this method will typically involve a small sample size and typically be more practical to undertake given resourcing constraints.

-

Focus groups/stakeholder workshops: this involves gathering together a panel of informed stakeholders (e.g. data users), industry bodies or a trade association. Care needs to be taken to ensure that the views presented within the group are likely to relate to the full population.

-

Questions in Government consultation documents or discussion papers: these publications present an opportunity to formally invite responses.

-

Surveys: conducted using a representative sample.

-

Qualitative interviews with specific stakeholders: this process typically involves targeted and open questions with specific stakeholders (including feedback from existing users) to understand key problem areas or opportunities in depth.

Engagement with stakeholders tends to be more effective when stakeholders are familiar with the data (given that data is an experience good). In contrast, where discussions relate to unfamiliar data, this can make it more challenging for users to conceptualise potential use cases. In these circumstances, appraisers should consider articulating these in a way that relates to the user’s experience of using similar types of data.

Known Vs Unknown use Cases

For an existing dataset, some use cases will already exist and be enhanced or changed following an investment. Other use cases will be new and enabled for the first time, with some more challenging to predict.

For the purposes of this guidance three categories of use case are defined:

-

Known use cases which either exist currently or can be predicted to develop in the future with a relatively high level of certainty and granular understanding of users and associated economic, social and environmental benefits. For example, improving or maintaining UKHO data will allow for continued use by the commercial shipping market for safe navigation.

-

Known Unknowns relate to specific future use cases which can be identified at some level (“known”) but are subject to some uncertainty (“unknown”). For example, investing in the collective purchase of aggregated mobile phone mobility data across the public sector may have applications in relation to planning decisions or infrastructure. However, predicting each of the precise applications ahead of time is challenging.

-

Unknown Unknowns are use cases which are not possible to predict (“unknown”) with any level of confidence ahead of time (“unknown”). These are more likely with investments which affect a wide range of stakeholders, such as accessibility, findability, and interoperability which can increase the potential user base of the data asset. For example, improvements in technology or the emergence of specific societal challenges or behaviour change may lead to applications for current data which are not currently foreseeable.

Step 5: Identification of benefits

Step 5 sets out the types of benefit an intervention could generate. Holders of location data should use this step to make informed decisions about data access policies based on the scale of public good it can generate.

Figure 10 Framework Step:5

- Articulate rationale and strategic fit of investment

- Classify type of investment

- Set out how the investment will affect data characteristics

- Identify data uses or applications

- Identify potential benefits

- Prioritise high impact benefits for further in-depth analysis

- Assess benefits associated with known & unknown uses

Benefit categories

Investments that improve existing use cases or create new use cases can ultimately lead to a range of benefits. Benefits created by geospatial data use cases will tend to fall into one of three broad categories:

-

Economic benefits affect the economy at a local or national level and capture changes to the location and volume of economic activity.[footnote 24]

-

Social benefits cover wider impacts on individuals from an investment and capture the extent to which people’s ability to live happy and healthy lives are impacted.

-

Environmental benefits cover any change to the natural environment and landscape, whether adverse or beneficial, resulting from an intervention.

Identification of benefits should cover all three categories. Examples of benefits that fall under each category are outlined in Figure 11 below. These examples are not intended to be comprehensive, and a specific intervention may generate different benefits.

Figure 11: Examples of Economic, Social and Environmental benefits

Economic Value

- Business creating more employment or becoming more productive (allowing for higher wage premia);

- Creation of international trade opportunities

- Reductions in business travel or commuting time;

- Governments delivering public services and policy making more efficiently;

- Interventions which increase human capital can have positive labour supply effects;

- Avoidance of economic disruption associated with negative outcomes (eg resilience planning)

- Appraisers could also consider changes in the structure of the economy, benefits from dynamic clustering or agglomeration

Environmental Value

- Impacts on air quality;

- Reductions in greenhouse emissions;

- Improved water quality;

- Reduced risk of flooding;

- Improvements in stock of natural capital (this includes both the living and non-living aspects of ecosystems). Stocks of natural capital provide flows of environmental or “ecosystem’ services over time;

- Better management of biodiversity and natural landscape

Social Value

- Impacts on physical activity;

- Reductions in accidents and mortality;

- Improved longevity and quality of life;

- Reductions in leisure travel time; and

- Reductions in crime

Source: Frontier based on review of evidence,

Notes: Useful data sources that can help inform assessments of economic value are included in Annex D

Central to the approach of this guidance is the notion of proportionality. In some instances it may be pragmatic to value specific high-priority benefits, whilst in other situations it may not be worthwhile. This is discussed further in the next subsection.

Appraisers should balance the level of effort and resources required to assess the direct, indirect and spillover effects of an intervention against the resources available. Thought should also be given to the practicality and feasibility of the benefits being realised. In particular, considering what processes, tools and other dependencies that might act as limiting factors.

Beneficiaries and other affected parties

Alongside identifying benefits, it is important to identify beneficiaries and all potential stakeholders who may be affected by the data intervention (both positively and negatively - where the latter is referred to as disbenefits), in-line with HM Treasury Green Book. Relevant stakeholders may be (i) upstream producers, gatherers or acquirers of information; (ii) data users, (iii) those who manage, transform, or store data, (iv) those who use the data to set policies or standards, and (v) wider members of society who may be impacted indirectly.

Like many other forms of data, the value of geospatial data will not solely accrue to the data user. Value can be subdivided into several different categories, based on who the value accrues to:

-

Direct use value: where value accrues to users of geospatial data or data assets. This could include the public sector using geospatial data to better manage public assets like roads and highways. These are typically more straightforward and common to assess as they are more immediate and certain.

-

Indirect use value: where value is also derived by indirect beneficiaries who interact with direct users. This could include users of the public assets who benefit from better public service provision. These are typically less straightforward as some dependencies that affect the behaviour and activities of affected parties, which are less certain

-

Spillover use value: value that accrues to others who are not a direct data user or indirect beneficiary. This could, for example, include lower levels of emissions due to improved management of the road network by the government. The benefits of lower emissions are felt by all of society, even those who do not use the road network. These are typically the most challenging to assess as there are further dependencies and factors that can affect behaviour, making them the most uncertain category.

Logic models and theories of change

The first five steps of the guidance can be summarised in a theory of change diagram. For the purpose of this guidance a logic model framework has been used to highlight the mechanisms by which geospatial data investments could produce value. Logic models are recognised as best practice within evaluation and appraisal guidance - see HM Treasury Magenta Book.

Theories of change prompt the appraiser to identify key aspects of an investment and highlight the causal link from inputs and activities to expected outputs, outcomes and impacts. These are helpful in theoretically stress-testing the relevance of potential investment options. In particular, they can:

- Articulate how various options are expected to work, setting out all the expected steps to achieve the desired outcome;

- Identify where the uncertainties and risks lie (i.e. what assumptions the investment relies on and why it may or may not work, including any supporting evidence);

- Draw any commonalities in outcomes and impacts from investments aimed at particular data characteristics or parts of the geospatial ecosystem; and

- Establish a clear narrative and link between types of investment, data characteristics, use cases and feasible valuation methods.

For the purpose of this guidance, the first five steps of the framework can be summarised in the general theory of change below. Annex C sets out more detailed theories of change associated with investments that impact data quality, findability, accessibility, interoperability and reusability.

Figure 12: Generic Theory of change for geospatial data investments

This figure sets out a theory of change for geospatial data investments, covering 5 stages: (1) Existing problems or potential opportunities (2) Investment (3 )Impact on data characteristics (4) Impact on use cases (5) Impact on value

Source: Frontier,

Note: Annex C sets out more detailed theories of change associated with investments that impact data quality, findability, accessibility, interoperability and reusability.

The accompanying case study report sets out how the logic model framework has been applied in previous geospatial data interventions. Only certain elements of each case study have been explored in detail as part of this work. More detailed explorations of each case study can also be found in the accompanying case study report.

Key takeaways from the case studies

Variation: the four case studies illustrate the significant variation in context that applies to individual data investments. This in turn leads to a wide variety of potential economic, environmental and social impacts. These diverse impacts are linked to geospatial investments in particular (relative to other forms of data investment). As described in the following subsection this has implications for the valuation methods used. In particular this reinforces the importance of tailoring approaches to specific investments.

Benefit appraisal: the case studies also reinforce some of the benefit valuation challenges that were discussed in Section 2. In particular externalities associated with geospatial data’s usage and the lack of established markets for geospatial data may require specific attention.

Stakeholder engagement: finally, the case studies all emphasise the importance of stakeholder and user engagement. This engagement is crucial to understand use cases and provide context for the investment’s rationale.

Step 6: Prioritisation of benefits

This step describes how to prioritise effort when considering the impact of a geospatial data investment on a wide range of potential benefits and use cases that were identified in previous steps of the framework.

Figure 13 Framework Step:6

- Articulate rationale and strategic fit of investment

- Classify type of investment

- Set out how the investment will affect data characteristics

- Identify data uses or applications

- Identify potential benefits

- Prioritise high impact benefits for further in-depth analysis

- Assess benefits associated with known & unknown uses

Certain investments may lead to a large number of potential use cases. As such, it may not be proportionate or possible to quantify and monetise all benefits that relate to each of these use cases.

Prioritisation criteria

In line with best practice included in HMT’s Green Book the appraiser should focus on uses cases / benefits which meet the following criteria:

- They are expected to generate the largest economic, social and environmental impacts;

- They are perceived to be most important to stakeholders; and

- They are quantifiable via proportionate analysis.

These criteria can be used to create a shortlist of high priority use cases.

Prioritisation Methods

There are multiple approaches that can be used to aid with prioritisation decisions.

-

Internal discussion:For relatively low-cost investments, prioritisation could rely on internal expertise and market experience within the appraising organisations to assess different use cases / benefits against the criteria listed above.

-

Stakeholder engagement: As noted above, understanding which use cases are likely to be most important from the point of view of data users is likely to be an important factor in deciding which elements are subject to detailed appraisal. Speaking directly to existing and potential customers could help to inform prioritisation decisions.

-

Multi-Criteria Decision Analysis (MCDA) could also help to identify the best performing use cases for further examination. MCDA is a set of techniques, with the goal of providing an overall ordering of options, from the most preferred to the least preferred option. The extent to which each option meets each criterion can be considered transparently and objectively using both quantitative and qualitative information side-by-side.[footnote 25]

Regardless of the method used, the prioritisation process should be documented and the underlying rationale behind the prioritisation decision should be clearly outlined (including reference to the three criteria above).

Step 7: Assessment of Benefits

Having identified use cases and potential benefits, it is important to understand their significance and where possible quantify and monetise them in a robust way. This is the focus of this step. Assessment should generally consider each use case separately, and consider the most appropriate methodology in each case.

This step outlines a spectrum of methods for assessing existing and potential future known use cases. These include approaches which are (i) fully quantitative, (ii) indicatively quantitative, and (iii) qualitative. While unknown use cases cannot be quantified to the same extent, some of the indicative quantification and qualitative approaches can equally be applied.

As set out in Annex E there are multiple ways that benefits of data can be valued - each of these approaches has their own strengths and weaknesses. Multiple approaches can be used in parallel to assess the benefits, however using different approaches in conjunction with each other does raise the possibility of doubling counting. Extra care should be taken to understand the theoretical and practical underpinnings of the methods in question and the suitability of the approaches for simultaneous use.

Figure 14 Framework Step:7

- Articulate rationale and strategic fit of investment

- Classify type of investment

- Set out how the investment will affect data characteristics

- Identify data uses or applications

- Identify potential benefits

- Prioritise high impact benefits for further in-depth analysis

- Assess benefits associated with known & unknown uses

The figure below outlines a summary of the valuation approaches that this guidance recommends for geospatial data interventions. These approaches are informed by previous work undertaken to value data (see Annex E) and by qualitative engagement undertaken as part of this project. Benefits should be monetised where possible to provide a common metric for comparison relative to the investment costs, however it is recognised that this is not always possible nor practical.

Figure 15: Valuation Approaches

This figures sets out the different methods that are appropriate to use when assessing the three types of use cases: known use cases, known unknowns and unknown unknowns.

Most of the approaches shown in the figure are collectively referred to as the “use-case approach” (barring the Direct Valuation of Data, which are recommended for use in exceptional circumstances). The focus of the use-case approach is to estimate and derive value based on the different applications affected by the geospatial investment. The first category of approaches (full quantification - except direct valuation) is likely only to be feasible when considering the benefits from known use cases. The second category of approaches (indicative quantification) may be most appropriate when considering known unknowns. Unknown unknowns are best tackled using a qualitative approach.

Known use cases: Full problem or opportunity valuation and data attribution

This is the recommended approach for most geospatial data investments assessing known use cases given its specificity, ability to capture indirect and spillover benefits and relatively low resource requirements. Appraisers should first estimate the full cost of a problem or opportunity that a geospatial investment can change (Part 1), covering the economic, social and environmental cost to society as a whole. Then the second step is to determine the contribution of the investment to resolving the problem or opportunity (Part 2). This approach aligns with what is known as “use-based methods” in the literature as set out in Annex E.[footnote 26]

Part 1 Full valuation of the problem/opportunity

The problems/opportunities for the prioritised use cases should first be valued in their entirety. A topdown approach is generally used to facilitate this – starting from a high-level benefit or cost. Examples from the case studies are below:

NUAR

-

Use case: NUAR could help reduce the risk of construction projects striking underground assets. These strikes lead to direct costs incurred by companies such as costs of repair and additional materials (economic costs). They also lead to wider indirect costs (including traffic delays and environmental damage - wider societal and environmental costs).

-