Learning during the pandemic: the context for assessments in summer 2021

Published 12 July 2021

Applies to England

Authors

Paul E. Newton, from Ofqual’s Strategy, Risk, and Research Directorate.

With thanks to colleagues from

- Department for Education

- Education Endowment Foundation

- Education Policy Institute

- FFT Education Datalab

- GL Assessment

- ImpactEd

- Institute for Fiscal Studies

- Juniper Education

- National Foundation for Educational Research

- No More Marking

- Ofsted

- Open Data Institute

- Renaissance

- RS Assessment from Hodder Education

- SchoolDash

- University College London

- University of Exeter

Thanks also to those who have very kindly provided feedback on report drafts, including colleagues from Ofqual and the Department for Education, and Tina Isaacs.

Executive summary

Since schools across the nation terminated whole-class in-school tuition, back in March 2020, a body of research and analysis devoted to learning during the pandemic has emerged. To support effective policy making in the run up to summer 2021 (and beyond) we have closely monitored this work, to provide an evidence base for the many decisions that needed to be taken.

We have now drawn this work together as a series of literature reviews. Alongside the present report – which provides an overview of the project and its conclusions – we are publishing 4 additional reports in our ‘Learning During the Pandemic’ series:

- Quantifying lost time (report 2).

- Quantifying lost learning (report 3).

- Review of research from England (report 4).

- Review of international research (report 5).

Our overarching objective was to understand the impact of the pandemic on levels of learning that were likely to be achieved by summer 2021, with a particular focus upon students in England from years 11 to 13. Our reports provide a context for understanding qualification results in summer 2021. In compiling these reports, we have drawn upon a wide range of research and analysis. This includes evidence from England, and from overseas, spanning both primary and secondary schooling.

Over the past year or so, media headlines have repeatedly expressed concerns over the weeks and months that students have lost since COVID-19 struck. Unfortunately, this idea of ‘lost time’ is a slippery one in the context of teaching and learning, because it can be interpreted in a variety of different ways. Report 2 helps to make sense of this idea. It begins by explaining the chronology of the pandemic in terms of its impacts on schooling, having broken down the two most recent academic years into five distinct phases:

- Phase 0 – September 2019 to late March 2020 (pre-pandemic)

- Phase 1 – late March 2020 to end summer term 2020 (mainly remote learning)

- Phase 2 – autumn term 2020 (mainly ‘new normal’ learning)

- Phase 3 – January 2021 to early March 2021 (mainly remote learning)

- Phase 4 – early March 2021 to mid May 2021 (mainly ‘new normal’ learning)

It then unpacks alternative meanings of ‘lost time’ before estimating how much students in different circumstances might actually have lost.

Report 3 attempts to quantify ‘lost learning’ directly, by reviewing research and analyses based upon large-scale attainment datasets collected during the pandemic. In theory, this represents an ideal source of evidence for the purpose of the present project. Unfortunately, in practice, these data are limited in a variety of ways, and can only tell a partial story.

Report 4 reviews the broader landscape of research and analysis into learning during the pandemic, providing a comprehensive overview of reports published in England since March 2020. We restricted our review to reports that focused upon impacts on learning, and factors that were likely to influence levels of learning. We did not focus upon longer term consequences of the pandemic, nor upon how those consequences might be addressed.

Finally, report 5 provides a more selective overview of insights from research and analysis conducted internationally since March 2020. We focused upon reports that appeared to be particularly important and influential, as it would not have been feasible to attempt a comprehensive review.

On the basis of the research and analysis that we reviewed, it seems safe to conclude that:

- during each of the phases of the pandemic, students in England have had to study under abnormal circumstances, typically less than ideal circumstances, and sometimes very unfavourable ones

- by summer 2021, the most widespread concern will have been less effective learning during periods that students were studying, rather than less time spent studying, because most students will have been studying for much of the time that was available to them, albeit under abnormal circumstances – this questions the relevance of the ‘summer holiday learning loss’ phenomenon to understanding learning during the pandemic

- as the pandemic progressed through a number of quite distinct phases, learners seem likely to have lost relatively less learning, as teachers, students, and parents or carers became more adept at managing circumstances

- by summer 2021, most students are likely to have experienced a net learning loss as a result of the pandemic

- the amount of learning loss that students will typically have experienced is hard to gauge, although it may not be as extreme as some of the earliest projections

- by summer 2021, a minority of students may have achieved a certain amount of learning gain, although how large a group this might be is unclear

- socioeconomically disadvantaged students are likely, on average, to have lost relatively more learning than their more advantaged peers – however, there will be many exceptions to this general conclusion, as many advantaged students will also have been extremely seriously affected, and many disadvantaged students will not have been

- students from certain areas are likely, on average, to have lost relatively more learning than students from other areas, although this is best understood locally rather than regionally, and not all students from even the worst affected areas will have been disproportionately affected

- learning loss is a phenomenon that needs to be understood from the perspective of each and every student, individually – impacts from the pandemic are too complex and nuanced to be described simply (or even primarily) in terms of group effects

- even students from very similar backgrounds, registered in the same class at the same school, might have experienced quite different levels of learning loss, depending on the particular circumstances of their learning during the pandemic

Beyond these general conclusions, little can be said with confidence, especially concerning the details of how students in particular circumstances might have been affected.

These conclusions provide a backdrop against which patterns of results from summer 2021 can be interpreted. This context is very important, because it enables us to develop hypotheses concerning underlying attainment patterns, which in turn will help us to make sense of the patterns that we will see in results this year. We always need to make assumptions concerning underlying attainment patterns when judging the validity and fairness of national result patterns. For instance, we typically assume that national subject cohorts are unlikely to change radically from one year to the next, in terms of their (average) overall attainment levels, and if national subject grade distributions were to change radically then serious questions would be raised. This year, in the wake of the pandemic, we could not assume consistency of this sort, and we turned to the literature on learning during the pandemic to help gauge the lay of the land. Our reviews have shed light on patterns of attainment that are likely to have occurred by the end of the 2020 to 2021 academic year, which provides a backdrop against which patterns of results from summer 2021 can be interpreted.

Attainment is shorthand for the learning that has occurred in a particular subject area, that is, the knowledge and skills that have been acquired. Roughly speaking, students with the same overall level of attainment in a subject area will have mastered the same body of knowledge and skills to the same extent. The function of a grade, during normal times, is to represent the overall level of attainment achieved by a student in a subject area: higher grades indicating higher overall levels of attainment. So, when we talk about results rising or falling over time at the national level, for instance, we are actually talking about (average) overall attainment levels rising or falling over time. In other words, during normal times, result trends directly mirror attainment trends – that is their job. However, this year, we have permitted a content coverage concession, which means that students were only assessed on course content that they had been taught. As such, we would not expect result trends to mirror attainment trends directly.

This is an important point of principle for assessment in summer 2021, which is worth reiterating before unpacking its implications, because of how significantly it differs from grading policy during normal times. During normal times, if teachers were to teach substantially less, and students were to learn substantially less, then we would expect results to fall substantially too. This is because – during normal times – results represent student learning directly, and result patterns mirror attainment patterns. This year, because the pandemic led to some students being taught less than others, students were only assessed on content that they had been taught. Because this breaks the direct link between attainment (that is, learning) and results, result patterns will not map straightforwardly onto attainment patterns this year. Therefore, to understand likely result patterns for summer 2021, we needed to interpret the best available evidence of attainment patterns – which is set out in our review of learning during the pandemic – in the context of the exceptional grading policy this year.

In terms of attainment trends by the end of the 2020 to 2021 academic year, we developed the following hypotheses. First, if most students in years 11 to 13 are likely to have been affected by a certain amount of learning loss, then (average) overall attainment levels are likely to be down, nationally, by the end of the 2020 to 2021 academic year, across subjects and across qualification types.

Second, if socioeconomically disadvantaged students are likely, on average, to have been worse affected by learning loss, then (average) overall attainment levels are likely to be correspondingly lower for disadvantaged students. Again, many disadvantaged students will not have been among the worst affected, so this only refers to the hypothesis that attainment will be disproportionately lower on average.

Third, if the previous hypotheses are correct, then we might also hypothesise that group membership categories that correlate with socioeconomic disadvantage – which might include ethnicity or qualification type – may also be associated with disproportionate drops in (average) overall attainment levels. However, we have no strong basis for estimating the likely size of any such effect.

Fourth, if (average) overall attainment levels are disproportionately lower for students from socioeconomically disadvantaged backgrounds, then it stands to reason that longstanding attainment differentials – known as disadvantage gaps – will also have widened. However, the extent to which gaps might widen is unclear.

In terms of result trends in summer 2021, we developed the following hypotheses. First, although attainment levels may fall, nationally, the content coverage concession should help to compensate for this, meaning that we should not expect to see an equivalent drop in results at the national level.

Second, although Ofqual’s guidance permits a small amount of compensation for individual students who have lost more study time than their classmates, this does not extend to compensating students for having learned less effectively as a result of unfavourable home learning circumstances. If so, then we might hypothesise that students who studied under extremely unfavourable circumstances would be more likely than their classmates who studied under extremely favourable circumstances to achieve grades that were lower than they might have achieved if the pandemic had not struck.

Third, extending this line of reasoning, if socioeconomically disadvantaged students are more likely, on average, to have experienced relatively more learning loss, even just among their classmates, then these effects will also be seen in aggregated results. The implication is that disadvantage gaps in results are likely to widen in summer 2021. However, the content coverage concession was introduced specifically to help mitigate differential impacts on learning. Consequently, any widening of disadvantage gaps in results should be less pronounced than any widening of disadvantage gaps in underlying attainment.

Introduction

When the potential scale and severity of the developing pandemic became apparent, in March 2020, the government decided that the impending exams season would have to be cancelled. This raised the thorny question of whether it might still be possible to award grades for high stakes assessments – such as GCSEs, A levels, BTECs, and so on – in summer 2020; and, if so, then exactly what those grades should represent. It was decided that a grade should represent the level of learning achieved by a student in the period running up to March 2020, projected forward as a prediction of the grade that they were on target to achieve in the summer.

With processes established for summer 2020, attention began to turn to the even thornier question of how to award grades for the same high stakes assessments in summer 2021. It was clear, even then, that the situation would be very different for this cohort of students. Although, at the time, we hoped that it would be possible to run an exam season, it was not at all clear that it would be appropriate to run exams in exactly the same way as normal. After all, this cohort of students had already experienced serious disruption to their learning by summer 2020, and this looked likely to continue for the foreseeable future. Bearing in mind the likely impact of the pandemic on teaching and learning in the run up to summer 2021, the fairness of assessing students in exactly the same way as normal seemed to be in doubt.

The policy position that was agreed during autumn of 2020 assumed that exams would be run in summer 2021. Specifications for teaching would remain largely unchanged, but various steps would be taken to ensure that exams could run fairly.[footnote 1][footnote 2] These steps included: delaying the start of the exam season, to allow for additional teaching and learning; freeing up teaching time via approaches intended to ease the assessment burden (for example, increased choice in GCSE English Literature and GCSE History); and modifying assessments to allow for more focused teaching and revision, as well as to make them a less daunting experience (for example, advance notice of topics, focuses and content areas that would be covered).

On 4 January 2021, the Prime Minister announced that it was no longer possible or fair for all exams to go ahead in the summer. A new approach to grading students was required, which would be based on teacher assessment. It was decided that a grade should represent the learning achieved by a student in the period running up to summer 2021.[footnote 3] However, recognising that teaching and learning had been seriously affected by the pandemic – and that some schools and colleges[footnote 4] seemed unlikely to cover the entire curriculum by the summer – it was decided that students should only be assessed on content that they had been taught.

Learning during the pandemic

To support effective policy making in the run up to summer 2021, Ofqual closely monitored the emerging body of research and analysis into learning during the pandemic. We attempted to keep track of all relevant reports published in England, from March 2020 onwards, as well as key reports from overseas. Our goal was to ensure that we understood as fully as possible the context for high stakes assessments in summer 2021 (and subsequent years).

We have now drawn this work together as a series of literature reviews. Alongside the present report – which provides an overview of the project and its conclusions – we are publishing four additional reports in our ‘Learning During the Pandemic’ series:

- Quantifying lost time (report 2).

- Quantifying lost learning (report 3).

- Review of research from England (report 4).

- Review of international research (report 5).

Our overarching objective was to understand the impact of the pandemic on levels of learning that were likely to be achieved by summer 2021, with a particular focus upon students in England from years 11 to 13.

The information that we gathered was not used to establish expectations for grade distributions in summer 2021. From the outset, we recognised that it would be impossible to derive plausible quantitative estimates of learning (or learning loss) based upon fragments of information produced in the year following the outbreak of the pandemic. Limitations of this evidence base are considered in more detail below. More importantly, the decision that students should only be assessed on content that they had been taught significantly changed the rules of the assessment game for summer 2021, making implications for results far more challenging to unpick. These implications are also considered in more detail below.

Because results from summer 2021 cannot be interpreted in exactly the same way as they would be during normal times – for a variety of reasons, including the fact that students are only being assessed on content that they have been taught – information from our reviews has an important role to play in helping to contextualise the interpretation of results from summer 2021. Our reviews help to shed light on patterns of attainment that are likely to have occurred by summer 2021, which provides a backdrop against which patterns of results from summer 2021 can be interpreted.

Over the past year or so, the anticipated impact of the pandemic on learning has often been discussed in terms of ‘lost time’ and media coverage along the following lines has become commonplace: ‘GCSE and A-level students have lost more than half of their teaching time over the course.’ Unfortunately, the idea of ‘lost time’ is a slippery one, because it can be interpreted in a variety of different ways. Report 2, ‘Learning during the pandemic: quantifying lost time’, helps to make sense of this idea. It begins by explaining the chronology of the pandemic in terms of its impacts on schooling. It then unpacks possible meanings of ‘lost time’ before estimating how much students in different circumstances might actually have lost. Our intention for this report, as for all of our reports, was to tell a more nuanced story than has sometimes been told.

Report 3, ‘Learning during the pandemic: quantifying lost learning’, attempts to quantify ‘lost learning’ directly, by reviewing research and analysis based upon large-scale attainment datasets collected during the pandemic. In theory, this represents an ideal source of evidence for the purpose of the present project. Unfortunately, in practice, these data are limited in a variety of ways, and can only tell a partial story.

Report 4, ‘Learning during the pandemic: review of research from England’, reviews the broader landscape of research and analysis into learning during the pandemic, providing a comprehensive overview of reports published in England since March 2020. We restricted our review to reports that focused upon impacts on learning, and factors that were likely to influence levels of learning. We did not focus upon longer term consequences of the pandemic, nor upon how those consequences might be addressed. Similarly, although we did consider a number of more distant factors that seemed likely to influence levels of learning – including mental health, for instance – we tended to touch upon them only occasionally, rather than reviewing them comprehensively.

Finally, report 5, ‘Learning during the pandemic: review of international research’, provides a more selective overview of insights from research and analysis conducted internationally since March 2020. We focused upon reports that appeared to be particularly important and influential, as it would not have been feasible to attempt a comprehensive review. We assumed that this international review might usefully complement our national one – either by supporting apparent trends, or by challenging them, or simply by providing different kinds of insights from types of research or analysis that had not been conducted in England.

The impact of the pandemic on learning

The normal function of a qualification is to certify that a specified level of learning has been achieved, and Ofqual has a statutory objective to ensure that the organisations that we regulate uphold this function effectively. This is why we focused our research specifically upon understanding the impact of the pandemic on likely levels of learning. We assumed (in line with the general zeitgeist) that the pandemic would typically result in lower levels of learning, whilst acknowledging that there might be some students for whom the reverse was true.

The concept of learning loss

The body of research and analysis that has emerged over the past year or so has come to be known as the ‘learning loss’ literature. Although there is no universally agreed definition of learning loss, we defined it like this:

Learning loss refers to a reduction in the overall level of attainment that a student achieves by the end of their course of study, for example, lower attainment in GCSE maths, which is attributable to both direct and indirect impacts from COVID-19.

Although the pandemic has affected attainment in a variety of different ways – including breadth of learning, depth of learning, gaps in learning, consolidation of learning, and so on – our operational interest in the certification of overall attainment levels led us to this generic, cumulative, holistic conception of learning loss, referenced to a specific point in time (the end of a course of study).

It is important to recognise that learning loss is a counterfactual concept, in the sense that it can only be defined relative to the level of attainment that a learner would have achieved if the pandemic had never occurred. The degree of learning loss experienced by any particular learner is therefore unknowable. Having said that, evidence from patterns of attainment across learners in different circumstances can certainly help to establish its nature and prevalence in a more general sense.

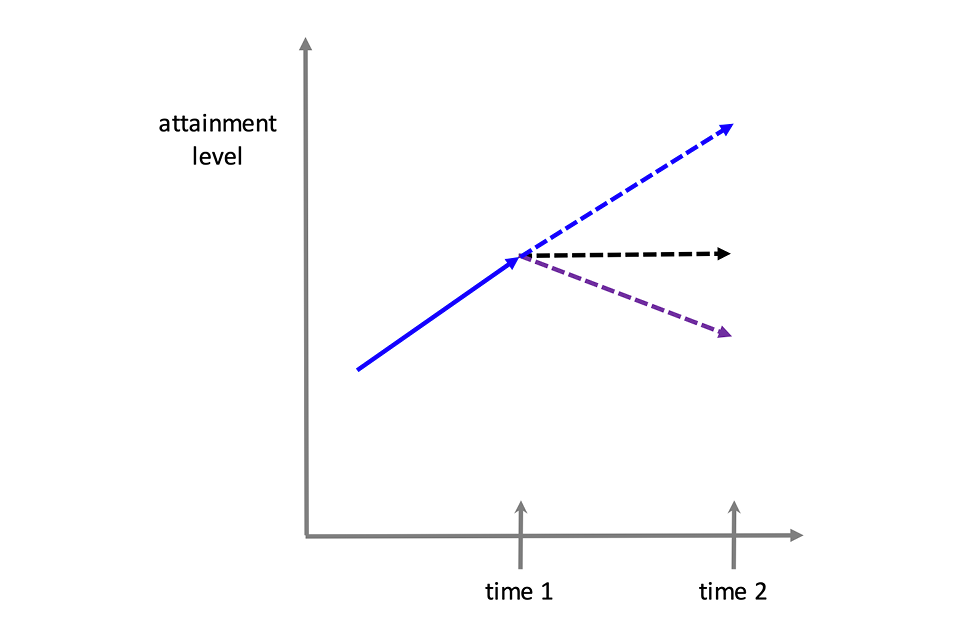

The concept of learning loss attributable to the pandemic is not well theorised. Where researchers have attempted to model it, this has typically involved extrapolating from the summer (holiday) learning loss literature, as the following example illustrates. Figure 1 is an adaptation of Figure 2 from Azevedo et al (2020), which used a framework derived from summer learning loss literature to model learning loss in the context of the COVID-19 pandemic. It represents:

- the normal trajectory of learning across the duration of a course (blue line)

- the anticipated impact on attainment when learning stops, at time 1 (black line)

- the anticipated additional impact on attainment during the period that learning remains stopped, from time 1 to time 2 (purple line)

The difference between the projection of the blue line at time 2 (top arrow) and the projection of the purple line at time 2 (bottom arrow) represents the anticipated amount of learning loss. This is judged in relation to the level of learning that could have been achieved by time 2, had the learning not stopped (at time 1).

Lines indicate level of attainment if learning does not stop, compared with level of attainment if learning simply stops with no new learning, compared with level of attainment if learning stops and prior learning is lost.
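The three lines in Figure 1 can be illustrated with a minimal numerical sketch. The straight-line trajectories, the class and parameter names, and every number below are assumptions made purely for illustration. They are not taken from Azevedo et al (2020), and they are not estimates of actual learning loss.

```python
from dataclasses import dataclass


@dataclass
class LossModel:
    """Hypothetical linear version of the three trajectories in Figure 1."""

    learning_rate: float    # attainment gained per week while learning (assumed constant)
    forgetting_rate: float  # attainment lost per week while learning is stopped (assumed constant)
    t1: float               # week at which learning stops (time 1)
    t2: float               # week at which the disruption ends (time 2)

    def normal(self) -> float:
        # Blue line: attainment at time 2 if learning never stops.
        return self.learning_rate * self.t2

    def stopped(self) -> float:
        # Black line: attainment frozen at the level reached by time 1.
        return self.learning_rate * self.t1

    def stopped_with_forgetting(self) -> float:
        # Purple line: attainment frozen at time 1, then eroded until time 2.
        return self.stopped() - self.forgetting_rate * (self.t2 - self.t1)

    def learning_loss(self) -> float:
        # Gap between the blue and purple projections at time 2.
        return self.normal() - self.stopped_with_forgetting()


# Illustrative values only: learning stops in week 20 and resumes in week 32.
model = LossModel(learning_rate=1.0, forgetting_rate=0.25, t1=20, t2=32)
print(model.normal(), model.stopped(), model.stopped_with_forgetting(), model.learning_loss())
# prints: 32.0 20.0 17.0 15.0
```

Setting `forgetting_rate` to 0 in this sketch leaves only the gap between the blue and black lines, that is, the new learning that did not take place between time 1 and time 2.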

In this model, the extrapolation of thinking from the summer learning loss context to the pandemic context is clear:

- time 1 – the onset of the pandemic – is analogous to the end of the summer term (beginning of the vacation)

- time 2 – the end of the period of disruption – is analogous to the beginning of the autumn term (the new school year)

In the summer learning loss context, the model assumes that students are not spending any time studying during the vacation. The implication is that we would not expect their attainment to increase (black line), but we might expect their attainment to decrease due to forgetting (purple line).

Needless to say, this is a very simple model, and it may not even represent the situation for summer learning loss very well. For instance, Wiliam (2020) noted a distinction that cognitive psychologists have drawn between retrieval strength and storage strength (Bjork & Bjork, 1992). He suggested that summer learning loss research may misrepresent students’ actual levels of learning, at the beginning of the autumn term, by tapping into retrieval strength (which will have declined over the summer) but not into storage strength (which will not have declined). In other words, the learning in question is not lost (no longer stored in memory), it is just harder to retrieve, and only temporarily so. This may help to explain conflicting evidence from the summer learning loss literature, which raises the question of whether summer learning loss is even really a thing (von Hippel, 2019).

Following Bjork & Bjork (1992), we will assume that prior learning is not simply lost during a period of disuse, although it will become harder to retrieve. However, what might genuinely be lost during a pandemic is the opportunity to acquire new learning. This invites us to think of learning loss as the difference between the learning that actually happened in the wake of the pandemic and the learning that could have happened if the pandemic had never struck.

In theory, learning loss (of this sort) could arise via two quite different routes:

- not studying at all for an extended period of time

- studying continuously yet learning less effectively

This distinction is important because it marks a key difference between the summer learning loss context and the pandemic learning loss context. During the summer holidays, we might expect students simply to ‘down tools’ and not to study at all. During the pandemic, however, it was generally assumed that students ought to continue studying, to the best of their ability. In fact, as we shall soon see, many students ended up spending somewhat less time studying than if the pandemic had never struck, and were also likely to have learnt somewhat less effectively when they did attempt to study.

Time spent ‘not studying at all’ is fairly straightforward to conceptualise. It is simply the difference between the total amount of time that a student would normally have committed to learning (if the pandemic had not struck) and the total amount of time that they actually committed to learning (in the wake of the pandemic). It would therefore be possible to quantify ‘lost study time’ as a proportion of the total amount of time that a student would normally have committed to learning throughout a particular course of study, for example, X weeks actually spent studying as opposed to the Y weeks that would normally have been spent over a 2-year course (so the time lost is Y minus X weeks). For example, a Swiss research group asked a panel of students how much time they spent in normal learning activities each week (school time, online lessons, homework, and so on) both before the pandemic and during a period of closure due to the pandemic (Gratz & Lipps, 2021). They found that students reduced their studying time, on average, from 35 to 23 hours per week. Clearly, if students were spending one third less time studying, then we might expect this to translate into substantial learning loss.
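The arithmetic behind the ‘one third’ figure can be checked with a short sketch. The function name and its parameters are hypothetical, introduced only for illustration. The 35 and 23 hours per week are the averages quoted above from the Swiss study.

```python
def lost_study_time(normal_hours: float, actual_hours: float) -> tuple[float, float]:
    """Return (hours lost, share of normal study time lost)."""
    if normal_hours <= 0:
        raise ValueError("normal_hours must be positive")
    lost = normal_hours - actual_hours
    return lost, lost / normal_hours


# Average weekly study time before and during closure, as quoted above.
lost, share = lost_study_time(normal_hours=35, actual_hours=23)
print(f"{lost:.0f} hours per week lost ({share:.0%} of normal study time)")
# prints: 12 hours per week lost (34% of normal study time)
```

The same calculation would apply to weeks over a whole course of study, although it says nothing about how effectively students learned during the time that they did spend studying.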

Learning effectiveness is harder to conceptualise, let alone to measure. Presumably, a key determinant would be the amount and quality of teaching that students received. Where this suffered as a consequence of the pandemic, it would contribute to learning loss. The extent to which a teacher was able to tailor their teaching to abnormalities in learning trajectories that resulted from the disruption would therefore also be likely to contribute to learning loss. The less effectively a teacher adapted their instruction (for example, for a class of students with idiosyncratic gaps in their learning) the more likely those learning losses would be compounded. This underscores the importance of formative assessment in identifying learning gaps and instructional needs, as a foundation for effective teaching and learning during the pandemic.

Another key determinant would be the circumstances that students found themselves in whilst attempting to study. During normal times, a large amount of studying occurs in school, where all students have access to the same resources. During the pandemic, however, a large amount of learning happened remotely, at home. It was clear that students did not have access to identical resources for remote learning when the pandemic struck. Many therefore assumed that students in the most socioeconomically disadvantaged of circumstances were likely to lose the most learning.

The distinction between spending less time studying and learning less effectively helps us to tell a more nuanced story of learning during the pandemic. However, it may also be important for interpreting attempts to measure learning loss. For instance, periods spent not studying at all might have different implications for the retrieval of prior learning than periods spent learning less effectively. We explore this possibility below, when considering how to interpret results from quantitative research into learning loss based upon large-scale attainment datasets.

Although this report is mainly concerned with learning loss, we should not overlook the possibility of learning gain. For some students, the net impact of the pandemic on their level of learning by summer 2021 might be positive. Presumably, this would be most likely for students who were able to commit more time to studying or who were able to learn more effectively. Admittedly, it seems unlikely that many students will have learned more in the wake of the pandemic than if it had never struck. Yet, the pandemic may have caused some students to knuckle down, and to engage with their learning far more assiduously than would otherwise have been the case, especially if closely supervised and supported by newly furloughed parents.[footnote 6]

Likely causes of learning loss

It is helpful to unpack the two principal causal determinants of learning loss – less time spent studying, and learning less effectively – by considering what might cause each of them to occur, and by considering how each of these subsidiary causes might be mediated by student-level, family-level, and school-level factors. Table 1 illustrates a variety of subsidiary causes and mediating factors, which cluster as follows:

- 1. amount of time spent studying

  - a. less compulsion to work

  - b. less opportunity to work

  - c. less inclination to work

- 2. effectiveness of learning

  - a. less ability to concentrate on learning tasks

  - b. less ability to engage with learning tasks

The suggestion, here, is that students might spend less time studying during the pandemic for a variety of reasons, which include: feeling less compelled, or obliged, to work; being unable to work; or simply feeling less inclined to work. Each of these subsidiary causes might be mediated by student-level factors, family-level factors, or school-level factors. School-level factors might differ depending upon whether the school is effectively closed (and has switched to ‘remote’ learning), or whether it has returned to whole-class in-school tuition (that is, to a ‘new normal’).

For instance, the act of closing a school, or sending a class home, frustrates a key mechanism by which students are compelled to learn. If a parent or carer is unable to facilitate studying effectively, then the general lack of compulsion might translate into a lack of commitment on the part of their child. Having said that, even when a parent or carer does attempt to facilitate engagement somehow, their child might still decide that they are simply not inclined to work, perhaps believing that the pandemic has somehow licensed them to skip schooling.

Some students, of course, will be very keen to commit the necessary time to their studying, even when learning remotely. However, they may find themselves unable to do so, because they lack something that is critical to their engagement. For example, their teacher might have failed to tell them what they needed to be studying, or failed to provide them with necessary resources for studying. And this may have prevented them from making any meaningful progress, at least in the short term. Or, they may simply have been unable to access resources that had been provided, for example, if they lacked an internet connection, or associated technology.

Table 1. Factors that might be associated with lower attainment (learning loss)

| Mechanism | Individual factors | Family factors (remote) | School factors (remote) | School factors (new normal) |

|---|---|---|---|---|

| Amount of time spent studying: Less compulsion to work | [Cell left blank] | Weak enforcement by parents or carers. | Termination of in-school education (national school closure). | Termination of in-school education (school or class closures, or self-isolation). |

| Amount of time spent studying: Less opportunity to work | Consequence of being ill with COVID-19. | Problems accessing critical study tools (laptop, internet, books, etc.). Having to take on caring responsibilities due to COVID-19. | School or teacher failure to provide critical home-learning materials. Reduced access to critical support services (teaching assistants, SEND support, etc.). | New burden of COVID-19-related activities (eg, sanitising, one-way systems, staggered starts). School emphasising wellbeing activities, and sacrificing curriculum coverage. |

| Amount of time spent studying: Less inclination to work | Consequence of COVID-19-related impacts (abuse, depression, bereavement, etc.). Use of COVID-19 as an excuse to slack off. | Parents or carers not appearing to care about learning loss. | School having limited engagement with students during lockdown. | [Cell left blank] |

| Effectiveness of learning: Less ability to concentrate on learning tasks | Consequence of COVID-19-related impacts (eg, depression, anxiety, abuse, eating disorders). Response to illness or death of close family member due to COVID-19. Response to fear of falling behind peers. | Home-learning distractions – family (eg, younger siblings, furloughed parents, working parents). Home-learning distractions – conditions (eg, cold, hunger, noise). Non-conducive home-learning workspace (eg, access to desk). | School grappling with online learning for the first time and not having adequate systems in place. | Heightened anxiety due to new regime of COVID-19-related practices (eg, distancing, masks). |

| Effectiveness of learning: Less ability to engage with learning tasks | Insufficient rehearsal of foundational knowledge and skills. | Home-learning disadvantages – technology (eg, slower internet speeds, no printer). | Instructional breakdown (failure to spot learning gaps, mis-sequenced teaching). Poorer quality of interaction with teachers or assistants (online). Poorer feedback on work (online). Poorer quality lessons or materials (online). Lack of direct access to own teacher due to COVID-19 absence (eg, ill, or isolating). Having to re-structure course content coverage to accommodate remote learning. | Instructional breakdown (failure to spot learning gaps, mis-sequenced teaching). Poorer quality interaction with teachers or assistants (eg, distancing, forward-facing rows, less group work or practicals). Poorer feedback on work (eg, less marking, less one-to-one interaction). Poorer quality lessons or materials (eg, competing demands on teacher time). Lack of direct access to own teacher due to COVID-19 absence (eg, ill, or isolating). |

Table 2. Factors that might be associated with higher attainment (learning gain)

| Mechanism | Individual factors | Family factors (remote) | School factors (remote) | School factors (new normal) |

|---|---|---|---|---|

| Amount of time spent studying: More compulsion to work | More opportunity to be independent and self-motivated. | Strong enforcement by parents or carers. | Strong oversight by teachers. | [Cell left blank] |

| Amount of time spent studying: More opportunity to work | Fewer social activities during evenings or weekends. Less time spent with friends. | Procurement of additional home-tutoring. | [Cell left blank] | Additional evening or weekend schooling. Fewer extra-curriculum activities. Fewer non-essential curriculum activities. |

| Amount of time spent studying: More inclination to work | Desire to make the most of a bad situation. Response to fear of falling behind peers. | Strong encouragement to keep on top of learning from parents or carers. | Strong encouragement to keep on top of learning from teachers or assistants. | Strong encouragement to keep on top of learning from teachers or assistants. |

| Effectiveness of learning: More ability to concentrate on learning tasks | Response to fear of falling behind peers. | Provision of new home-learning tools and resources. | Lack of distractions from classmates. | [Cell left blank] |

| Effectiveness of learning: More ability to engage with learning tasks | [Cell left blank] | High level of supervision and support from parents or carers (eg, furloughed parents). | School establishing highly effective systems for online learning (or honing effective systems already in place). | [Cell left blank] |

Table 1 is not intended to provide a definitive deconstruction of the potential mechanisms of learning loss, and some of these factors may well interact. It is simply intended to illustrate the variety of subsidiary causes of learning loss, even if we assume that they all (ultimately) operate either via less time spent studying or via less effective learning. It is also useful for illustrating how different schools, different families, and different students will mediate impacts from the pandemic in a variety of different ways. Table 2 develops essentially the same idea, to illustrate a variety of subsidiary causes and mediating factors associated with higher attainment as a result of the pandemic, that is, learning gain.

Likely correlates of learning loss

The variety of subsidiary causes and mediating factors illustrated in Tables 1 and 2 helps to explain why learners in different circumstances may have been affected quite differently by the pandemic. However, where certain groups of learners are affected in similar ways (and differently from other groups) this will lead to correlated effects: where certain kinds of impact, or a certain level of severity of impact, affects one group of students more than another. For example, if boys disengaged more from their learning than girls, even if just on average, then this might lead to disproportionately more learning loss overall for boys. Observations of differential impact have been a widespread feature of research and analysis into learning loss. Unfortunately, when we move beyond proximal causes to distal correlates, the story of learning loss becomes much more complicated, and often far more ambiguous.

As the autumn 2020 term progressed it became increasingly clear that some regions of England were being affected by the pandemic more seriously than others, leading to greater disruption for schools in those regions. The severity of this disruption, particularly in the north-west, led to a call for students in this region to be given special treatment when their exams were graded in summer 2021:

[He] said that some institutions had been turned into ‘zombie schools’ that were ‘minding teenagers rather than educating them.’ He said that exams must go ahead next summer but that Ofqual should vary its grading by geographical area.

(Hamid Patel, chief executive of Star Academies, quoted in The Times, 2020)

Had the government not decided to cancel exams in summer 2021, this proposal could, in theory, have been implemented. Students would have been examined in the normal way, but then split into separate groups for the purpose of grading. Lower grade boundaries could have been set for students in schools from the north-west of England, with higher grade boundaries set for students from all other regions.

Although superficially attractive, this proposal would not have been acceptable, in practice, because it would have glossed over huge amounts of variation in impact, both across regions and within them. In other words, just because one region is affected far more seriously than another on average, this does not mean that the same will be true for each and every school within that region, let alone each and every student.[footnote 7]

An FFT Education Datalab blog from October 2020 presented outcomes from analyses of attendance data, which focused upon regional effects such as these (Burgess et al, 2020). It concluded that students in the poorest areas of the country were missing out on the most schooling: including the north-west, the north-east, and Yorkshire and the Humber. It was clear that attendance was substantially lower in regions with substantially higher infection rates, at least, on average.

Critically, though, there were many exceptions. For instance, in line with the general conclusion, the East Midlands had a far lower test positivity rate than the north-east, and a higher attendance rate. Yet, attendance rates varied hugely across local authorities within both regions. Indeed, the worst affected authority in the north-east of England was not as badly affected as the worst affected authority in the East Midlands, and the least badly affected authority in the north-east of England was less badly affected than the least badly affected authority in the East Midlands. In other words, the trend that seemed clear on average was reversed for authorities at the extremes.

The problem that this example lays bare is fairly obvious, but important. Although disruption to schooling was genuinely correlated with region, this was merely a correlation, and there were many exceptions to be found. Indeed, just 2 months later, the correlation was quite different, with schools in the south-east of England now being particularly badly affected (Nye et al, 2020). Furthermore, this shift affected other conclusions from the FFT Education Datalab October analysis. The fairly strong negative correlation between attendance in secondary schools and disadvantage at the local authority level was very much weaker in the December analysis.
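The point about regional averages and within-region variation can be made concrete with a small numerical sketch. The two regions and all attendance figures below are hypothetical, invented purely for illustration, and are not drawn from the FFT Education Datalab analyses. They simply show that one region can have lower attendance on average while both its worst affected and its least affected authorities have higher attendance than their counterparts in the other region.

```python
from statistics import mean

# Hypothetical attendance rates (%) by local authority. All figures are
# invented for illustration and do not come from the analyses cited above.
attendance_by_region = {
    "Region X": [80, 81, 82, 83, 95],  # lower average attendance
    "Region Y": [78, 88, 89, 90, 91],  # higher average attendance
}


def summarise(rates):
    """Return the mean, lowest and highest attendance rate for one region."""
    return {"mean": mean(rates), "lowest": min(rates), "highest": max(rates)}


summary = {
    region: summarise(rates) for region, rates in attendance_by_region.items()
}

for region, stats in summary.items():
    print(
        f"{region}: mean={stats['mean']:.1f}, "
        f"lowest={stats['lowest']}, highest={stats['highest']}"
    )
```

In this made-up case, a grade adjustment applied to every school in Region X on the strength of its lower average would also benefit the authority with the highest attendance of all, which is the kind of variation that a region-level rule would gloss over.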

As we shall soon see, one of the most important conclusions from the research and analysis into learning loss in England is that some students appear to have been far more seriously affected than others. And, in a similar fashion, some groups of students appear to have been far more seriously affected than others. However, the reasons why this seems to be true are many and varied, which makes it hard to tell a straightforward story. Even when impact is strongly correlated with one or another group membership category – be that region, socioeconomic status, ethnicity, school type, and so on – there will still be many exceptions to ‘prove’ the rule, and multiple interactions to complicate any conclusion.

Researching impacts on learning

The body of work that we have reviewed comprises a new literature, produced by researchers who (in the main) have not previously worked in the area of learning loss. Their research and analysis has been produced rapidly, particularly in order to support policy making. Before considering what this new evidence seems to be telling us, it is important to consider the circumstances under which it has been produced, in order to properly contextualise our interpretation of emerging findings.

Some of the earliest reports that were published in England considered lessons that might be learned from previously published research into the impacts of school closures on learning – including holiday closures, volcano closures, snow closures, hurricane closures, as well as a small body of research on pandemic closures (see report 5). Subsequently, a variety of research teams began to produce empirical evidence on learning during the current pandemic, based largely upon surveys which targeted teachers, students, and their parents or carers (see report 4). Only more recently has it been possible to generate empirical evidence on attainment during the pandemic, based mainly upon results collated by commercial test publishers (see report 3).

Just as in many other fields, a tremendous amount has been learnt over the past year or so, from a fairly shallow baseline of knowledge and understanding. Yet, in many ways, we are still just scratching the surface as far as learning during the pandemic is concerned. We have assembled fragments of information, which do seem to be telling a plausible story. But there are many gaps in our knowledge and understanding, and it is hard to be absolutely certain about many of the claims that have been made.

Chronological challenges

Perhaps the biggest challenge in telling a coherent story about learning during the pandemic is that it is already clear that it is best presented as a collection of short stories. In other words, the pandemic can be broken down into distinct phases, and experiences of both teaching and learning will have differed radically across these phases (see report 2).

Because we are particularly interested in understanding how students from current year 11 to year 13 cohorts have learnt in the lead up to their assessments in summer 2021 – that is, across the full duration of a standard two-year course – we introduced the idea of a pre-pandemic phase. This spanned the period from early September 2019 until COVID-19 brought teaching and learning to a crisis point in late March 2020.

Whole-class in-school tuition was brought to an abrupt end from 23 March 2020 onwards. Schools in England were forced to implement what report 2 called a ‘remote instructional delivery mode’ with the vast majority of students having to study from home. This period tested the capacity, resilience and resourcefulness of schools, families and students. Much of the early survey research began to differentiate between relative ‘winners’ and ‘losers’ in this respect, with disadvantaged students appearing to be disproportionately negatively affected.

This first phase of the pandemic ran until the end of the summer term. A small number of students were allowed to attend school during this period, including vulnerable children, and children of critical workers. However, most students who fell into these categories ended up studying remotely. Schools began to reopen to certain groups of students during June 2020. Yet, even then, this was not on a full-time basis, so this was very much an extended period of remote learning for almost all students.

The second phase spanned the autumn term, from September to December 2020. This was meant to represent a return to a ‘new normal instructional delivery mode’ as far as schooling was concerned, with a return to whole-class in-school tuition. This initially felt closer to the truth in rural areas, in regions such as the south-west of England, where the pandemic remained most in abeyance. However, for certain schools in certain parts of the north of England, and later on in the south-east of England, the autumn term felt like a period of extended disruption. The research and analysis narrative shifted somewhat, with students from certain regions appearing to be disproportionately negatively affected. This focused attention on challenging interaction effects, whereby problems seemed to be greatest in regions where both infection rates and levels of disadvantage were highest – although some of these interaction effects became less evident towards the end of the year. This felt like a turbulent phase, where schools in different parts of the country found themselves in quite different circumstances. This was generally not true during the other phases, when national policies rolled out more consistently across regions. During this phase, even students within the same class sometimes found themselves in quite different circumstances, when year groups, bubbles, or individuals were forced to self-isolate at short notice.

The third phase included the majority of the spring term, from the beginning of January until early March 2021. Again, this was meant to represent a return to a ‘new normal’ for all schools, meaning a return to whole-class in-school tuition for all students. However, another national lockdown scuppered these plans, and schools were forced to teach the majority of their students remotely. We do not yet have a great deal of evidence concerning how schools and students have fared during this period. However, it does seem as though, on the whole, students fared considerably better during the second school lockdown than during the first.

At the time of writing this report, we have just entered the fourth phase, which has represented a more authentic return to a ‘new normal’ for the vast majority of students in England (except for occasional groups of students being sent home to isolate from time to time). Early signs suggest that things may remain fairly stable, for the vast majority of schools, until the end of the summer 2021 term.

Interpretational challenges

Beyond the chronological challenge of the pandemic, which has developed through quite different phases, we also need to consider interpretational challenges associated with the circumstances under which this new body of work has been produced.

One potential problem is that almost none of the research or analysis that we identified over the past year or so has been published in an academic journal, perhaps inevitably so, given the timespan in question. Instead, reports have tended to be published by the organisations responsible for producing them (or that sponsored their production) meaning that these reports may not always have gone through a rigorous external review process. The academic review process is far from perfect, but it is useful for helping authors to identify potential flaws in their research design, or potential limitations to the conclusions that have been drawn. It provides a degree of reassurance for the reader that tricky methodological questions have already been asked and answered satisfactorily. This makes it safer to take findings at face value. While many of the organisations that have published reports over the past year or so are likely to have implemented rigorous internal review processes, this may not always have been true, and questionable conclusions may occasionally have slipped through the net. In some cases, the ‘need for speed’ has resulted in minimally detailed reporting, so we found it useful to contact research teams directly to explore outstanding questions.

Much of the published research has been survey based, and there is always a risk with this approach that those who respond may not be representative of the population for whom conclusions are to be drawn. During the pandemic, a particular risk is that those who were the worst affected at the time of the research may have been least able to contribute, so the results might underestimate the severity of the situation. On the other hand, surveys are also susceptible to a bias that might work in the opposite direction, for example, if the general sense of fear, if not panic, induced by the pandemic led respondents to overestimate the severity of the situation. This is an important risk to bear in mind in relation to some of the more ‘ambitious’ questions that were posed in some of the surveys, for instance, where teachers were asked to judge how many months behind in their learning they believed students to be.
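The first of these risks, that the worst affected are the least likely to respond, can be sketched numerically. The groups, population shares, severity scores and response rates below are all hypothetical assumptions chosen for illustration. They are not estimates from any of the surveys reviewed. The sketch compares the true population average with the average that would be expected among respondents only.

```python
# Each hypothetical group: (label, share of population, severity score, response rate).
# Severity is on an arbitrary 0 to 10 scale; every value here is an assumption.
groups = [
    ("least affected", 0.5, 2.0, 0.6),
    ("moderately affected", 0.3, 5.0, 0.4),
    ("worst affected", 0.2, 9.0, 0.1),
]


def population_mean(groups):
    """Average severity across the whole population."""
    return sum(share * severity for _, share, severity, _ in groups)


def expected_respondent_mean(groups):
    """Average severity expected among those who actually respond."""
    # Each group's weight among respondents is its population share
    # multiplied by its response rate.
    weights = [share * rate for _, share, _, rate in groups]
    weighted_severity = sum(
        w * severity for w, (_, _, severity, _) in zip(weights, groups)
    )
    return weighted_severity / sum(weights)


true_mean = population_mean(groups)
observed_mean = expected_respondent_mean(groups)
print(f"population mean: {true_mean:.2f}, respondent mean: {observed_mean:.2f}")
```

Under these assumptions the respondent average sits below the population average, so an unweighted survey would understate severity. The opposite bias described in the text, where respondents overestimate severity, is a reporting effect and would not be captured by this kind of calculation.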

Questions of this sort were better answered by using attainment data, for example, where schools had administered progress monitoring tests during the autumn term (2020). Even for these studies, though, the data were largely opportunistic, and few research teams were able to exercise optimal levels of control over the samples that were being compared. These studies also faced the risk of under-representing results from the worst affected schools and students, who might have been amongst the least able to engage with the testing process.

A particular problem for this overview report was the relative lack of evidence related specifically to those students who are its main focus (current years 11 to 13). Almost all of the conclusions based upon attainment data, for example, concerned students in primary schools, which are the largest consumers of commercially published tests. What these studies reveal is that conclusions concerning younger primary students cannot necessarily be generalised to older primary students. Extrapolating results from primary school students to upper secondary school students would be equally precarious, if not far more so. Similar caveats would apply when attempting to extrapolate from schools and students overseas to schools and students in England.

A year or so into the pandemic, it seems fair to say that the research and analysis that has been produced so far is indicative of effects, yet often not definitive, and the following section should therefore be read with this caveat in mind.

Insights from the literature

Since schools across the nation terminated whole-class in-school tuition, back in March 2020, a body of research and analysis devoted to learning during the pandemic has emerged. To support effective policy making, we have closely monitored this work, providing an evidence base for the many decisions that needed to be taken.

We have now brought this review of research together in advance of the publication of results in summer 2021, to provide critical contextual information for understanding the patterns of results that will emerge. Our set of five reports – including the present one which provides an overview – is not intended to represent the last word on learning loss attributable to the pandemic. It is simply an account of what appears to be known at this particular point in time. We have drawn upon a wide range of research, including research from England and from overseas, and from across both primary and secondary schooling.

The following subsections integrate insights from the literature that we discuss in more detail in the other 4 reports (reports 2 to 5). Rather than straightforwardly summarising these reports, we draw upon them selectively to construct an account of the context for assessments in summer 2021. The analysis that follows makes considerable use of the distinction that was drawn in report 2 (and introduced earlier) between the various phases of the pandemic:

- Phase 0 – September 2019 to late March 2020 (pre-pandemic)

- Phase 1 – late March 2020 to end summer term 2020 (mainly remote #1)

- Phase 2 – autumn term 2020 (mainly new normal #1)

- Phase 3 – January 2021 to early March 2021 (mainly remote #2)

- Phase 4 – early March 2021 to mid May 2021 (mainly new normal #2)

Gauging the challenge

As soon as it became clear how seriously the pandemic would affect teaching and learning, work began to gauge the challenge that England would have to face. With no prior experience of this kind of disruption to draw upon, attention turned to what we might learn from parallel contexts (see report 5). This included disruptions and closures in the wake of hurricanes, volcanoes, strikes, and so on, including lessons from the summer learning loss literature (for example, Edge Foundation, 2020; Education Endowment Foundation, 2020; Eyles et al, 2020; Müller & Goldenberg, 2020).

This was closely followed by studies that attempted to estimate the potential scale of learning losses attributable to the pandemic, conducted both in England and overseas (for example, RS DELVE Initiative, 2020; Kuhfeld et al, 2020). Similar modelling exercises continued well into the autumn term, typically offering sobering warnings, such as:

The findings on learning losses support four general inferences. First, the findings are chilling – if .31 std roughly equals a full year of learning, then recovery of the 2019-2020 losses could take years.

(CREDO, 2020, p.6)[footnote 8]

There are limits to what can be achieved via modelling, especially when questions are raised about the research that underpins the assumptions that are used to build the models. Because there has been a lot of debate over the summer learning loss phenomenon (for example, Kuhfeld et al, 2020; von Hippel, 2019) – and because this research base has featured prominently in much of the pandemic impact modelling – this recommends a certain amount of caution when interpreting outcomes. Indeed, subsequent sections will cast further doubt upon the relevance of summer learning loss to understanding learning during the pandemic.

Painting a picture

Researchers soon turned to investigating teaching and learning impacts directly. During the first phase of the pandemic – following the termination of whole-class in-school tuition and the switch to remote learning for most students – evidence was gathered predominantly via questionnaire surveys (see report 4). These were typically conducted with teachers and parents or carers, generally online. This was very much research in the ‘rapid response’ mode, intended to characterise the state of education in England shortly after entering largely uncharted waters.

The earliest reports tended to paint a fairly negative picture, for example:

[This report] finds that the average amount of schoolwork being done at home, according to parents and family members, has been very low: Children locked down at home in the UK spent an average of only 2.5 hours each day doing schoolwork. This figure is about half that suggested by a previous survey, suggesting that learning losses are much greater than feared.

(Green, 2020, p.2)

The same report – based on a survey that was conducted after a month or so of remote learning – also emphasised a theme that was to characterise research conducted during this phase and subsequently: the wide variation in students’ experiences of learning remotely.

Remote learning #1: Phase 1 – late March 2020 to end summer term 2020

For the reasons identified in Table 1 it was fairly obvious that learning was likely to suffer during Phase 1 of the pandemic. Inevitably, the vast majority of schools were not prepared to deliver remote learning at the drop of a hat, the vast majority of parents or carers struggled to work out how to accommodate and support home learning, and students likewise struggled to make sense of their own role at the centre of this confusing scene. It soon became clear that it would be harder for those from disadvantaged backgrounds to respond optimally to this unanticipated educational challenge, and this became a key focus for research.

A survey by Andrew et al (2020) concluded that the amount of home learning was considerably lower than average hours spent on educational activities prior to lockdown. According to their study, primary and secondary students were spending an average of 4-and-a-half hours a day on home learning – including online classes, other school work, private tutoring and other educational activities – which represented a 30% reduction in pre-pandemic learning time for secondary school students, and a 25% reduction for primary.
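As a rough check on what these percentages imply, the pre-pandemic baseline can be backed out from the reported figures. This is a back-of-envelope sketch, not a calculation taken from Andrew et al (2020). It assumes that the 4-and-a-half hour average applies separately to primary and to secondary students, and the function name `implied_baseline` is our own.

```python
def implied_baseline(current_hours, reduction):
    """Hours per day before the reduction, given hours now and the fractional drop."""
    if not 0 <= reduction < 1:
        raise ValueError("reduction must be a fraction between 0 and 1")
    return current_hours / (1 - reduction)


# Assumption: the reported 4.5 hours applies to each phase of schooling.
secondary_baseline = implied_baseline(4.5, 0.30)
primary_baseline = implied_baseline(4.5, 0.25)

print(f"implied pre-pandemic hours, secondary: {secondary_baseline:.1f}")
print(f"implied pre-pandemic hours, primary: {primary_baseline:.1f}")
```

On that assumption, the figures would imply a pre-pandemic learning day of roughly 6 to 6-and-a-half hours, which gives a sense of scale for the reductions quoted.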

More importantly, there was plenty of evidence that students and parents or carers from more disadvantaged backgrounds often found themselves in less favourable remote learning circumstances. Findings included:

All studies report a significant gap in the amount of home learning by socio-economic background. Graduate parents were more likely to provide home-schooling at least 4 days per week (80 per cent) compared with non-graduates (60 per cent). Children eligible for free school meals were less likely to receive 4 hours per day (11 per cent) than other children (19 per cent).

(Sibieta & Cottell, 2020, p.34)

Around half of primary schools, and nearly 60% of secondary schools, offered some active learning materials, such as online classes or online chats. But these resources were 37% (24%) more likely to be provided to the richest third of primary (secondary) school children than to the poorest third.

(Andrew et al, 2020, p.10)

On average, disadvantaged pupils gave their home learning environment a score which was over 6% poorer compared to their peers. Students eligible for Pupil Premium were less likely to have developed a routine for learning, less likely to be able get help from their family, and less likely to understand the schoolwork that they had been set.

The availability of internet-enabled devices is clearly a factor here. But access to a workspace and the opportunity for regular exercise are also significant. Students eligible for Pupil Premium were likely to live in a household with access to a device (and only 2% less likely than their wealthier peers). However, the availability of a device was far more limited. It seems likely that this is due to wealthier households having multiple devices.

(ImpactEd, 2021, p.16)

By far the largest differences that emerge are between private school pupils and their state school counterparts. In the raw data alone, nearly three quarters (74 percent) of private school pupils were benefitting from full school days – nearly twice the proportion of state school pupils (39 percent). Descriptive data confirms that many elite schools effectively insulated themselves from learning losses during lockdown.

(Elliot Major et al, 2020, p.6)

Parents in the highest quartile of incomes were over four times as likely to pay for private tutoring during lockdown than those in the lowest quartile of incomes (15.7 percent compared with 3.8 percent). On average, 9.2 percent of parents report[ed] paying for private tutoring during lockdown.

(Elliot Major et al, 2020, p.7)

Even under the traditional instructional delivery mode – which normally involves compulsory whole-class in-school tuition – opportunities to learn are not evenly distributed. Students in some schools have better teachers than others or better resources for studying. And, even when considering students within the same school, some parents or carers are more proactive in providing support and encouragement than others. Yet, compulsory education does play an important role in helping to distribute opportunities more equally, and when this scaffolding is removed, the likelihood of opportunities being less equally distributed increases. Evidence from questionnaire surveys, such as those cited above, suggests that opportunities to learn were, indeed, less evenly distributed under the remote learning model of spring and summer 2020.

Having said that, we should bear in mind three important, interrelated observations. First, although differences were often observed when results were broken down by traditional indices and correlates of disadvantage – family income, Pupil Premium status, school type, and so on – these differences were not always major. Furthermore, overall differences in averages concealed considerable overlap between students across the groups being compared. In other words, it was not the case that all disadvantaged students fared worse under lockdown than all advantaged ones – they fared worse on average, with some disadvantaged students faring better than more advantaged peers, and vice versa.

Second, although it was obviously predictable that students from disadvantaged backgrounds would (on average) find it disproportionately more challenging to learn effectively at home, plenty of effects were observed that were far less obvious. For instance, when discussing outcomes from Ofsted’s interim visits in October, the Chief Inspector explained that learners could be divided into 3 broad groups:

There are those who have been, and still are, coping well in the face of restrictions; there is a group who have been hardest hit, largely because of the interplay between their circumstances and the impact of the pandemic; and there is the majority – a group who have slipped back in their learning to varying degrees since schools were closed to most children and movement restricted.

(Spielman, 2020)

Although she associated coping well with having good support structures during lockdown and having benefitted from quality time spent with families and carers, she was also quick to emphasise that this was not a simple message concerning privilege versus deprivation. Instead, the students who were coping well came from all backgrounds, including those within the care system who saw relationships with carers improve at the same time as the lockdown meant they were not dealing with wider pressures and challenges that might exist outside of the home.

Research from the National Foundation for Educational Research (NFER) added another layer of complexity related to the potential benefits for vulnerable students and children of critical workers who were studying in school, rather than remotely, during Phase 1. These students were “in many cases better supported and supervised” than their classmates who were studying at home (Julius & Sims, 2020, p.3).

Other research teams explored wider causes or correlates of more or less effective learning during lockdown. For instance, ImpactEd (2021) found that students who exercised regularly were more likely to say that they had developed a positive learning routine (58% compared to 33%).

Third, although this rapid response questionnaire survey research has been invaluable in characterising the state of education during Phase 1 of the pandemic, it is also important to acknowledge that research outcomes have not always been entirely consistent. For example, estimates of how much time students were spending on learning activities during lockdown varied substantially between Green (2020) and Andrew et al (2020). When we attempted to work out why such differences might have arisen, we began to unpick additional layers of complexity. We can illustrate this by interrogating a ‘lost time’ estimate from Green (2020), which was based upon a dataset that ended up being analysed by a variety of research teams.[footnote 9]

Green concluded that one-fifth of students were doing either no schoolwork or less than 1 hour of schoolwork a day during the first lockdown (late April 2020). This figure was widely quoted at the time (and subsequently) and spawned numerous national media headlines.[footnote 10] Later, Eivers et al (2020) published an analysis of responses to the same question, which was broken down by primary and secondary school students (see page 5 of their report). These results, however, seemed to suggest that only around 13% of primary school students, and 7% of secondary school students, were spending less than 1 hour studying – both substantially lower than the 19.6% reported by Green.[footnote 11]

In fact, scrutiny of the underlying questionnaire revealed that results from Eivers et al actually related to a follow-on question. In other words, it was only answered by parents whose child had actually been set work to do at home by their school. So, to understand what proportion of students did either no schoolwork or less than 1 hour of schoolwork a day, we would need to combine responses to the ‘routing’ question and to the main one, which is the approach that Green took.[footnote 12] Adopting an approach of this sort takes us back towards Green’s overall figure of 19.6%.
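The way a routing question and a follow-on question combine can be sketched as below. This is a minimal illustration, not a reconstruction of Green's actual calculation: the function name is hypothetical, the input values are invented purely to show the arithmetic (they are not survey results), and it assumes that students who were set no work did no schoolwork.

```python
def share_little_or_no_schoolwork(share_not_set_work: float,
                                  share_under_1h_if_set: float) -> float:
    """Combine a routing question with its follow-on question.

    share_not_set_work: proportion of all students whose school set no work
        (the 'routing' question). Assumed here to mean no schoolwork was done.
    share_under_1h_if_set: proportion doing less than 1 hour a day, among
        only those students who were set work (the follow-on question).
    """
    share_set_work = 1.0 - share_not_set_work
    return share_not_set_work + share_set_work * share_under_1h_if_set


# Hypothetical inputs, chosen only to illustrate the arithmetic.
illustrative = share_little_or_no_schoolwork(
    share_not_set_work=0.10,
    share_under_1h_if_set=0.10,
)
print(f"Overall share doing little or no schoolwork: {illustrative:.1%}")
```

The point of the sketch is that the follow-on percentage on its own will always understate the overall figure whenever some students were set no work at all.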

The more interesting point, however, concerns the context that the Eivers et al analysis provides for understanding Green’s overall figure. Green’s analysis included results for children from all phases of schooling, from Reception to key stage 5. Yet, data from Eivers et al (see page 2) indicated that students from year 11 and year 13 were outliers in the sense that about half of teachers had set these year groups no work at all – perhaps not surprisingly, as they no longer had any exams to revise for. In contrast, only 2% of students in years 7 to 9 had been set no schoolwork, and almost all year 10 students (now in year 11) had been set schoolwork. In other words, the percentage of secondary school students in years 7 to 10 who were doing either no schoolwork or less than 1 hour of schoolwork a day during the first lockdown was probably closer to the figure of 7% after all.[footnote 13]

Ambiguities and inconsistencies aside, we should certainly not dismiss estimates of disengagement during this phase of the pandemic. We may not be able to say exactly how many students completely disengaged from their learning during the first period of remote learning, but a small minority probably did. More importantly, it is clear from both Green and Eivers et al that some students were studying far less than others. The data (on page 5) from Eivers et al are particularly informative on this point. They revealed that:

- 15% of students in the highest income bracket were studying for less than 2 hours a day, while 65% were studying for more than 3 hours a day

- 30% of students in the lowest income bracket were studying for less than 2 hours a day, while 43% were studying for more than 3 hours a day

There are three important points to draw from this analysis. First, many secondary school students were spending quite a lot of time studying, a month or so into this period of remote learning (even though study time was down, on average, when compared with a normal school day). This is the ‘good news’. Second, on average, students from lower income brackets were reported to be studying less. This is the ‘bad news’. Third, even within the same income bracket, students were reported to be spending very different amounts of time studying. This is the ‘challenging news’ which reminds us that there will be many exceptions to the dominant finding from research into learning during the pandemic – that disadvantaged students are likely, on average, to have lost disproportionately more learning.

New normal #1: Phase 2 – autumn term 2020

The first point to note about the autumn return to a ‘new normal’ instructional delivery mode was that it was still a long way from business as usual, and a substantial proportion of students returned to a somewhat shorter school day. Only 59% of respondents to a survey conducted between mid-September and mid-October reported that students were attending for a full day (Elliot Major et al, 2020). The length of the school day may have been affected by COVID-19-related policies, such as staggered starts, or related social distancing measures. Having said that, students in this study were reported to be studying for far longer each day than during Phase 1.

It is also important to note that a sizeable proportion of students did not return to schooling during Phase 2, and attendance rates fluctuated across the autumn term. Sibieta & Cottell (2021) described the situation like this:

In secondary schools in England, attendance fell from over 85 per cent through most of September to 82 per cent by the end of October. Secondary school attendance rose back up to 87 per cent after half-term, before falling over the rest of term. A further dramatic fall in the last week of term led to a low of 72 per cent at the end of the autumn term.

(Sibieta & Cottell, 2021, p.25)

They also noted that, in previous years, secondary school attendance would normally be around 95%, meaning that attendance rates were down by around 10 percentage points from the outset. This would have been for a variety of reasons: some students were withdrawn from school and home educated, including for reasons of shielding; other students would have been self-isolating; some would have been ill; and so on. Sibieta (2020) suggested that about 4 to 5% of students in England were absent from school for COVID-19-related reasons during October, rising to 6 to 7% in the week before half-term. Roberts & Danechi (2020) noted that, as at 3 December 2020, around 7 to 8% of state-funded school students did not attend for COVID-19-related reasons, including:

- 0.2% with a confirmed case of COVID-19

- 0.3% with a suspected case of COVID-19

- 6.4 to 7.0% self-isolating due to potential contact with a case of COVID-19

- 0.5% in schools closed for COVID-19 related reasons
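As a quick consistency check, the 4 components listed above can be summed to see whether they match the overall figure of around 7 to 8%. The sketch below assumes the categories do not overlap, which the source does not state explicitly. The variable and function names are illustrative only.

```python
# COVID-19-related absence on 3 December 2020, in percent, as
# (lower, upper) bounds taken from the list above.
components = {
    "confirmed case": (0.2, 0.2),
    "suspected case": (0.3, 0.3),
    "self-isolating": (6.4, 7.0),
    "school closed": (0.5, 0.5),
}


def total_range(parts):
    """Sum lower and upper bounds, assuming non-overlapping categories."""
    lower = round(sum(low for low, _ in parts.values()), 1)
    upper = round(sum(high for _, high in parts.values()), 1)
    return lower, upper


lower, upper = total_range(components)
print(f"Total COVID-19-related absence: {lower}% to {upper}%")
```

Under that assumption, the components add up to a range that sits within the 'around 7 to 8%' quoted from Roberts & Danechi (2020).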

It is clear that many students were absent from school on any particular day during the autumn term. Equally, though, it is clear that on any particular day the vast majority of students were attending school, even at the very end of term when secondary school attendance rates were at their lowest (72%).

Bibby et al (2021) demonstrated that absence rates throughout the autumn term increased with each year group: from around 10% for year 7 students to around 16% for students in years 10 and 11. This reflected higher infection rates in older age groups.[footnote 14] Consistent with a dominant theme of this report, they also noted substantial variation: almost 40 schools had year 11 absence rates over 32%; and around 150 schools had year 11 absence rates of 8% or lower. Furthermore, consistent with another theme, they noted that disadvantaged students missed the most sessions: 21% of sessions for disadvantaged year 11 students, compared to 14% amongst other year 11 students.

Although the autumn term was clearly a period of extended disruption, it is quite hard to work out how the ‘typical class in the typical school’ might have experienced this disruption, and therefore the extent to which the ‘typical class in the typical school’ managed to return to the new normal of whole-class in-school tuition during this term. If we were to assume that 7% of students in any particular school were forced to study at home for COVID-19-related reasons on any particular day during the autumn term – and it was a different 7% of students each week – then that would amount to each student having to spend just 1 teaching week at home. However, students were required to self-isolate for 2 weeks at a time, so perhaps this is better characterised as the typical student self-isolating for either 0 weeks or 2 weeks. Either sounds like a fairly plausible new normal, with the vast majority of the term spent in school.
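The back-of-envelope estimate above can be reproduced with a short sketch. The term length of 14 teaching weeks is an assumption introduced here for illustration (the source does not state it), and the function names are hypothetical. The calculation also assumes that absence is spread evenly across students and across the term.

```python
def expected_weeks_at_home(daily_share_at_home: float,
                           term_weeks: float) -> float:
    """Average weeks per student spent at home, if absence is spread evenly."""
    return daily_share_at_home * term_weeks


def share_isolating_once(daily_share_at_home: float,
                         term_weeks: float,
                         isolation_weeks: float) -> float:
    """Share of students who would need to isolate once, for a fixed
    isolation period, to produce the same overall absence rate."""
    total = expected_weeks_at_home(daily_share_at_home, term_weeks)
    return total / isolation_weeks


TERM_WEEKS = 14  # assumed length of the autumn term, not from the source

average = expected_weeks_at_home(0.07, TERM_WEEKS)
share = share_isolating_once(0.07, TERM_WEEKS, isolation_weeks=2)
print(f"Average weeks at home per student: {average:.2f}")
print(f"Share isolating once for 2 weeks: {share:.0%}")
```

With these assumed inputs, the average comes out at close to 1 week per student, which is equivalent to roughly half of students self-isolating for 2 weeks and the other half not self-isolating at all.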

In a briefing on school attendance during the autumn term, the Children’s Commissioner commented on the widespread negative narrative that characterised this period, which seems far less consistent with the idea of a new normal:

Some have called for all schools to be closed on the basis that the virus is ‘running riot’ through schools and posing an unacceptable risk to staff.

[…] Others have argued that school attendance has ‘collapsed’ to such a point that the continued opening of schools to all children was not viable.

[…] The evidence we have analysed for this briefing does not support these conclusions. Instead, we find the reopening of schools to have been highly successful and that, given the increasing prevalence of Covid-19 in the community, schools have done a remarkable job in limiting transmission.

(Children’s Commissioner, 2020, p.4)

This report also suggested that students had missed, on average, about a week of whole-class in-school tuition during the term. Of course, even within a school, the average is going to conceal a considerable amount of variation. There were no doubt plenty of schools in which certain year groups, or certain class bubbles, had to self-isolate repeatedly, while others did not self-isolate at all. Unfortunately, at the time of writing this report, we did not have the data with which to explore this in detail.

An equally tricky narrative concerned the idea – which came to dominate media coverage as the term progressed – that schools in the north of England were in a far worse state than in the rest of England. By the middle of October, it was certainly true that many areas in the north of England were seriously affected. Burgess et al (2020) demonstrated how attendance tended to be lower in regions with higher infection rates. The north-east, north-west, and Yorkshire and the Humber appeared to be particularly badly affected. However, as noted earlier, there were many exceptions to this rule, and attendance rates varied hugely across local authorities, both within and across regions.

Roberts & Danechi (2021a) illustrated this variation graphically using a map of England – which cycled through each week of the autumn term – to classify local authorities in terms of proportions of students absent for COVID-19-related reasons, with categories ranging from light pink (0 to 5%) to dark red (more than 20%).[footnote 15] Most of the time, most local authorities were in the lowest prevalence categories. What is more interesting, though, is how the highest prevalence categories swept diagonally downwards, like a weather front, from the north-west down to the south-east as the term progressed. Indeed, attendance rates fell consistently in London, the south-east, and the east of England throughout the whole of the second half of the autumn term. Again, though, we should not forget that attendance rates were substantially down across all regions of England throughout this term, even in the least affected regions.