An evaluation of centre assessment grades from summer 2020

Updated 2 August 2021

Applies to England

Authors

- Tim Stratton

- Nadir Zanin

- Philip Noden

Acknowledgements

The authors would like to thank colleagues from Ofsted, the Department for Education and Ofqual for their invaluable input into this research project as part of the GRADE (Grading and Admissions Data for England) joint initiative.

Executive summary

In summer 2020, GCSE and A level exams were cancelled in England as part of the government’s response to the COVID-19 pandemic. Instead, schools and colleges (centres) were asked to allocate grades based on their best judgement regarding what grade they believed candidates would have achieved if exams had gone ahead. These were referred to as Centre Assessment Grades (CAGs). CAGs were intended to form only part of a process to produce standardised final grades. However, the standardisation process did not command public confidence, and a subsequent policy change in August 2020 meant that final grades were awarded to students based on the higher grade from their CAG or the standardised grade.

This has resulted in the largest dataset on teacher grading judgements available in the UK. This creates a unique opportunity to understand the factors that influence teacher-graded judgements and how these relate to grades awarded by exams under normal circumstances. Previous studies have indicated that teacher grades tend to be more generous than those achieved by candidates in exams. There is also some evidence that teacher judgements can be affected by various candidate or school level characteristics, although in most cases evidence across previous studies does not show a consistent pattern. With respect to summer 2020, although evidence suggests that students’ protected characteristics and socio-economic background did not influence pupil’s grades (Lee, et al., 2020), it is not known whether other factors, such as those related to centre characteristics or subject could have had an influence on CAGs.

The main aim of this study was not to measure the size of the overall change in grades in 2020, since this has been carried out in detail previously (He & Black, 2020). Instead, we aimed to identify if the factors related to grades in 2020 based on teacher judgement differed from the factors related to grades awarded in previous years in a consistent way, in terms of candidate, centre or subject characteristics.

Methodology

In this study, we use data held by Ofqual on CAGs in 2020 and exam results from the previous two years (2018 and 2019), combined with data on candidate characteristics from the National Pupil Database. This is to identify if there are any differences in the patterns of relationships between CAGs and other candidate, school and subject level features, when compared to those relationships in ‘normal years’.

The core analysis took the form of a series of nested multi-level models, run separately for each year (CAGs in 2020, awarded grades in 2018 and 2019). These models were used to identify how certain characteristics were related to grades after holding all other characteristics constant. We then compare the outputs from these models between years to identify where there are notable differences in 2020 from the previous two years. Nested models allowed us to identify which ‘level’ of features explained the most variation in grades in each year (prior attainment, candidate, centre, subject-within centre, subject) and how this changed between years. A series of additional analyses were used to provide confidence in the core model results as well as provide additional insight into certain features of interest.

Using this methodology we are only able to draw conclusions for the variables included in the analysis. Results indicated that there was still a substantial proportion of variation in grades unexplained by our modelling. We cannot say whether this unexplained variation follows a similar pattern to previous years.

Key findings

Grades from Centre Assessment Grades in 2020 were on average around half a grade higher than grades from previous years for both GCSE and A levels. However, reassuringly, analysis indicated that the majority of relationships between grades and other features studied had not substantially changed compared to previous years, once normal variation between years had been accounted for. This suggests that although teacher grades were moderately higher than previous years’ grades, they did not introduce any substantial bias or different patterns of grading. The strongest predictor by far of grade outcomes was a candidate’s prior attainment for both GCSE and A level. This relationship was slightly stronger in 2020 compared to previous years. This increase in predictive power of prior attainment may represent CAGs factoring out ‘unpredictable’ variation in student outcomes, seen in normal years due to factors such as exam anxiety, last minute revision or the combination of questions which come up on exam papers. Alternatively, it may represent teachers’ over-reliance on prior attainment as a source of data and not sufficiently taking into account individual candidate differences in performance.

Due to the increase in mean grade, there was some evidence that at the top of the grade distribution there was a plateauing of the relationship with prior attainment. Effectively in 2020 candidates with the highest prior attainment received on average slightly smaller increases in grades compared to previous years as they were already attaining the highest grades possible. This was reflected at centre level as schools and colleges which had lower previous performance and candidates with lower mean prior attainment were those with the largest increase in mean grades. Again, this was likely due to the fact that lower attaining schools had ‘greater headroom’ and therefore ‘further to travel’ up the grade range, whereas the level of increase for higher performing schools was limited due to the ceiling effect of the top of the available grade range.

Results about candidate level features reflected those already published from an analysis of the equalities impact of grading in 2020 by Ofqual (Lee et al., 2020). The most notable effect was a decrease in the attainment gap between male and female students at A level. Whereas previously boys have somewhat outperformed girls, this effect was slightly reduced in 2020. This may represent a genuine closing of the attainment gap, following a trend from previous years, although it could represent a small bias in favour of girls in A level CAGs. In a qualitative study by Ofqual (Holmes et al., 2021) teachers suggested that in preparation for exams boys are more likely to show a ‘last minute push’ and this was difficult to legitimately factor in to CAGs.

Other more marginal effects suggested that those candidates attending centres in areas with the highest deprivation indices received relatively higher grades than previously in 2020, closing the gap with candidates from less deprived areas. This could again be due to more deprived centres tending to be lower performing and so have ‘more headroom’ in the grade range. The grades of candidates from independent centres increased slightly more in 2020 compared to other centre types, and at A level the increase in grades of candidates at sixth form centres was somewhat lower than for candidates from other centre types. There was also some indication that small cohorts received marginally higher CAGs than larger cohorts in 2020 compared to previous years, after accounting for subject and centre type differences. We suggest this may be because teachers of small cohorts had less consistent data to anchor their judgements, as outcomes for small groups are naturally more variable between years. This may have led to some additional generosity for these groups due to this increased uncertainty.

However, all of these effects only explained a very small amount of the variation in grades. In fact, all centre and candidate level effects combined explained less than 1% of variation in grades at A level, where most of the differences were found. Most variation in grades was due to prior attainment, between candidate differences not explained by any of the variables included in the analysis, and additional unexplained variation in grades. This unexplained variation, both between candidates and between subjects taken by the same candidate, is likely to be due to factors such as motivation, exam preparation or teaching quality - measures of which were not available for this analysis.

There was evidence at both GCSE and A level that subjects with more non-exam assessment, which tend to be more applied and expressive subjects, tended to be those with the largest increase in grades in 2020. We hypothesise that this may be due to teachers using non-exam assessment grades, which are usually candidates’ highest graded element, to inform the allocation of CAGs. Data also suggests that ‘facilitating’ subjects at A level, those subjects sometimes considered to provide access to the widest range of university courses, tended to be more generously graded than non-facilitating subjects.

Overall, we conclude that although there are some minor differences in the relationships between the candidate and centre level features analysed and grades in 2020, the patterns of grading are remarkably similar to previous years, particularly for GCSE.

Introduction

In summer 2020, exams for GCSEs and A levels, among other qualifications, in England were cancelled as part of the government’s response to the coronavirus (COVID-19) pandemic. The decision to cancel exams was announced in March 2020. To continue to be able to award grades and ensure the ability of candidates to progress, centres were asked to submit to exam boards’ judgements of the grades they believed candidates would have been most likely to achieve if exams had gone ahead. Centres were asked to provide a grade for each candidate in each subject taken. These grades were referred to as Centre Assessment Grades (CAGs).

The aim of this study is to provide some insights into the factors which may have affected centre decisions around the allocation of CAGs and how these decisions may cause differences in the patterns of grades awarded in 2020 compared to grades received by candidates in previous years, when exams went ahead. The intent was not to define whether these differences were valid or justified but to highlight any notable changes, which may give insight into teacher grading judgements in future.

This study is produced as part of a series of research projects carried out on a preliminary version of a combined data set from the Department for Education (DfE) and Ofqual being made available to independent researchers, GRADE. The intention of this dataset is to allow further evaluation of the grading approach taken in summer 2020, and to facilitate future research and insights into assessment.

The utilisation of teacher judgements in 2020

When producing CAGs in 2020, there was no national pre-standardisation of centres’ grading judgements. Centres were asked to provide their best judgement of the ability of their candidates and, more precisely, to assign students the grade they believed they would have achieved if they had sat exams as usual. To support these grading decisions, Ofqual provided guidance on the best evidence centres should use and how to avoid any unconscious bias in their grading decisions (Ofqual, 2020a). It was emphasised to centres that judgements should be objective and not be influenced by any protected characteristics, such as gender or ethnic group, or any other student characteristics not related to their academic achievement, such as behaviour, appearance or social background. However, teachers were asked to take into consideration any reasonable adjustments usually provided to candidates with special educational needs or disabilities, such as the provision of a reader or scribe, or the allowance of extra time, when making their grading decisions. Centres were also provided with guidance on how data on previous performance of the centre could be used to ‘sense check’ judgements to avoid under or over predicting grades at a cohort level.

Centres were asked to not discuss or disclose the grades to students or parents before results were issued. This was intended to protect the integrity of teachers’ judgements so that teachers could make their judgements fairly and without external pressure, which may have distorted grades. Guidance stated that CAGs had to be signed off by two teachers in the assessed subject, one of whom should be the head of department or subject lead. In addition to this, the Head of Centre was required to submit a declaration that the grades submitted were accurate and represented the objective and professional judgement of staff at the centre (Ofqual, 2020a). An appeals process was put in place for centres in case they believed there had been an error in the allocation of grades or in the data used in the standardisation model (see below), and guidance was provided to students on how to progress if they believed they had evidence of bias or discrimination relating to their CAG. Students who were not able to be issued a CAG or who were not happy with their final grade were also offered the opportunity to take their exams in autumn 2020.

CAGs were initially intended to be included as part of a process to produce a standardised grade. However, the standardisation method failed to command public confidence. Following a subsequent policy change in August 2020, it was decided the higher of the CAG and the standardised grade would be awarded instead. The majority of students received the CAGs provided by the centre. Only a small number of candidates received their standardised grades where these were higher than the original CAG. Details of the standardisation process and how it was applied are discussed in an interim report published by Ofqual on A level results day 2020 (Ofqual, 2020b).

These CAG judgements provide the largest dataset of teacher judgements available in England’s education system and provide a unique case study for evaluation and for the comparison of factors affecting teacher judgement to those affecting performance in exams.[footnote 1] There have been previous studies of teacher judgements, both in the UK and elsewhere, but rarely have they utilised a dataset of this size at both A level and GCSE level.

Previous studies of teacher judgements in assessment

In 2020, centres provided grades using their professional judgement and expertise. Previous analysis produced by Ofqual has shown that there is no evidence that CAGs systematically disadvantaged candidates with protected characteristics or those from less advantaged socio-economic backgrounds when compared to previous years (Lee et al., 2020). Despite this reassuring finding, producing consistent judgements across students, centres and subjects is a highly challenging task. There is prior evidence that the accuracy of judgements provided by teachers in other contexts can be inconsistent across candidates (Campbell, 2015). Accuracy can vary between teachers and different centres, and previous studies have identified relationships between grading judgements and candidate or centre level demographic characteristics (see Lee & Walter, 2020; Lee & Newton, 2021).

Before further discussing previous studies of teacher judgement it is worth acknowledging that any time we are comparing exam-based assessments with teacher judgements, when differences are identified it does not necessarily imply that teacher judgements are ‘wrong’. One or both methods of assessment may include biases, although as teacher judgement is inherently more subjective, biases are potentially more likely.

However, it is also possible that differences may emerge if teachers are assessing a slightly different construct, consciously or unconsciously, from exam-based assessments. For example, attainment through exams inherently partially assesses candidates’ exam taking ability, alongside the intended knowledge or skills. Whereas, a more holistic view of a students’ overall performance is unlikely to include ‘exam skills’ but may include other qualitative ‘attitudinal’ factors or other features teachers deem important to success in a subject. Teacher judgements may therefore be legitimate representations of this construct, although it may differ from the construct measured by exams. A direct comparison is always likely to result in differences as the two constructs, and subsequently the grades awarded, may not be directly equivalent.

The majority of studies comparing exam results and teacher judgement have shown that grades provided by teachers tend to be on average more generous than grades received from exam-based assessments (Delap, 1995; Dhillon, 2005; Gill and Benton, 2015). Most studies of teacher judgement in the UK have been based on data on A level predictions produced by teachers for use in university application. At this level, Delap (1994) found that the difference between teacher predictions and exam grades to be on average around half a grade and Wyness (2016) found that 75% of applicants were over predicted across their best three A levels. Thiede et al. (2019) describe this type of over estimation as confidence bias, although there is little empirical research to evaluate the reason behind these types of over estimations.

One potential reason for over estimation at A level may be to avoid closing down students’ opportunities for which universities or university courses they are able to apply to. Wyness (2016) demonstrated that, although much fewer than those overpredicted, underpredicted candidates were more likely to attend universities for which they were overqualified, which may then negatively affect their future earnings (Walker and Zhu, 2013) - something which teachers are likely keen to avoid. In this situation there are also no negative consequences to teachers for over-prediction and aspirational grades may help to encourage students to work harder to meet their predicted grades. There is less evidence of the generosity of grading by teachers at GCSE level, but research suggests that teacher judgements at all levels tend to be somewhat over optimistic (Lee & Newton, 2021).

Previous studies have also aimed to identify if any characteristics of candidates may have led to grades awarded by teacher judgement that were more or less generous. For example, Burgess and Greaves (2009) looked at the difference between teacher assessments and externally marked test scores at age 11 and found that teachers gave lower grades to pupils in receipt of free school meals (FSM), pupils with special educational needs (SEN) and black pupils. Similarly, Campbell (2015) found potential biases in judgements of pupils’ reading ability at primary school, where pupils from low-income families, boys, pupils with SEN and pupils who have English as an additional language (EAL) were less likely to be judged ‘above average’, after controlling for other factors including ability on a related cognitive test. A study of UCAS data (UCAS, 2016) found that Asian, Black, ‘Mixed’ and ‘Other’ ethnic groups were more likely to be over predicted, along with those from more disadvantaged areas and female students.

Ofqual published a review of studies of teacher predictions in 2020 (Lee & Walter, 2020) and an update with a review of concurrent teacher judgements in 2021 (Lee & Newton, 2021), including those studies mentioned above. From these reviews it was concluded that, overall, the results of previous research were mixed, but suggest a slight bias in favour of female students in teacher assessments and some evidence for higher ratings for black and Asian students when teachers predict A level grades.

The largest and most consistent effect across previous studies of teacher judgement suggested a bias against those with special educational needs, although the size of this effect was still relatively small. Results for socioeconomic status were less clear, with studies of teacher predictions generally suggesting higher teacher grades for more disadvantaged students, whereas studies of concurrent teacher assessment suggest the opposite effect and a relative advantage for less disadvantaged students. Overall, evidence from the relatively small number of relevant studies available suggested that where differences between exam results and teacher judgements can be linked to student characteristics, the effects are usually small and results between studies are inconsistent and, in some cases, contradictory. Even within the same study different effects are sometimes found between subjects. This suggests that given current evidence, there is not a substantial reason to expect there to be additional bias in CAGs related to candidate characteristics, when compared to grading in a normal year.

However, previous research published by Ofqual on results from 2020 has shown that the generosity of teacher judgements differed between subjects, which had some impact on inter-subject comparability compared to previous years (He & Black, 2020). Analysis of both GCSE and A level results showed that, in general, subjects had been graded more leniently in 2020 compared to previous years, both from looking at weighted mean grades and by applying a Rasch difficulty model. For GCSE, this constituted an overall leniency of around three fifths of a grade, and, at A level, around half a grade. Analysis showed that this resulted in a narrowing of differentiation between grades in terms of difficulty. The study identified that there had also been substantial differences between subjects in the amount of leniency, which had an impact on the rank order of subject difficulties seen in previous years and represented significantly more change in subject rank order than is usually found between years. This study did not attempt to identify if there were any patterns across subjects in this leniency in terms of assessment structure or subject type.

Current study

In this study we use the grades awarded via teacher judgement in 2020 (CAGs) as a unique case study to evaluate the similarities and differences between the factors influencing CAGs in 2020 and those linked to grades awarded in previous years, when candidates took assessments as normal. For this we use results data for England in 2019 and 2018 as a benchmark for normal outcomes and changes between these two years as an indication of the size of normal fluctuations in the relationships between grades and other factors. Uniquely here we have access to a large dataset covering all results awarding in all three years as well as a large number of candidate and school level attributes taken from data held by Ofqual and linked to demographic data from the National Pupil Database (NPD) compiled by the Department for Education (DfE).

When assigning grades to students, teachers were asked to make an absolute judgement of students’ academic ability (Thiede et al., 2019). The accuracy of absolute judgement decisions is usually evaluated by looking at the difference between predicted and actual levels of achievement (Thiede et al., 2019). Unfortunately, in this case we have no ‘actual level of achievement’ to compare teacher judgements to, as no students took exams alongside receiving a teacher judgement. Instead, here we examine if the relationships between grade awarded and candidate, school and subject characteristics are substantially different to the patterns of those relationships in previous years. Identifying unique changes in these relationships in 2020 may help to shed light on the decision-making processes used by schools and colleges in allocating CAGs, and more broadly some features which may influence teacher judgement in general. This may also help to identify areas where bias, unconscious or otherwise, could have occurred. In any year, there will be differences in results between groups of candidates and these will have complex causes. So, we are not aiming to evaluate the reasons for these differences in a normal year, but to see if the relationships with candidate, school or subjects change between 2019 and 2020 in a way which cannot be attributed to normal fluctuations (as defined by the 2018 to 2019 change).

It is worth acknowledging upfront that any relationships identified through the following analysis cannot be said to be causative of grading differences. In this type of study, it is important to acknowledge that some of the relationships may be simple correlations and there may be other unmeasured causative factors involved, which were not available to the analyses, that may have had an influence on results. Also, due to the nature of the very large datasets used here, even very small differences between groups may be ‘statistically significant’, when in reality the effect on grades due to these differences may have very little real-world impact. Only where factors have a significant relationship with grades and the size of that effect is meaningful in one or more years, are changes in those relationships likely to be meaningful. Therefore, throughout we will attempt to put results into context by defining notable levels of change in the relationships between variables and candidates’ grades. However, we also acknowledge that even small effects may have an impact on some individuals.

Aim and research questions

The main aim of the study is to evaluate whether there were any observable patterns in the allocation of CAGs in 2020 that differ from patterns observable in grades awarded in normal years (represented by years 2018 and 2019). These grading patterns are defined by the relationships between grade awarded and other information known about candidates, schools and subjects.

Research questions

- Did features at the candidate, centre or subject level explain more or less of the variability in CAGs compared to the variability explained in grades in a normal year, suggesting those features had a greater or lesser impact on grades in 2020 than in a normal year?

- Did students with certain characteristics obtain higher or lower CAGs relative to other students, when compared to normal years, after controlling for other factors?

- Did certain types of centres award higher or lower CAGs relative to other centre types, when compared to normal years, after controlling for other factors?

- After controlling for centre and candidate features, were grades awarded in certain subjects or subject types higher or lower than other subjects, when compared to grades awarded in normal years?

Methods

Data

For the analysis, data on GCSE and A level results from years 2018-2020 held by Ofqual was combined with data on candidate characteristics from DfE and other additional publicly available sources of data on school attributes and subject details (Ofqual, 2017, 2018). Ofqual data was matched to the NPD using candidates’ first name, surname and date of birth and only NPD data for candidates with unique matches was retained. Candidates who could not be uniquely matched or who were matched but had no available data in the NPD were classed as having ‘missing NPD data’. An analysis and discussion of issues with missing data in the NPD can be seen in the previously published equalities analysis by Ofqual (Lee et al., 2020).

A key point highlighted is that candidates with missing NPD data are not randomly distributed across centres. Independent centres have particularly high rates of missing data (making up 69% of all missing data at GCSE and 36% at A level, in the sample used for analysis), as submitting this data to the NPD is not mandatory for non-state schools. Sixth form colleges also have a relatively high rate of missing data at A level (48% of all missing data in sample, see Appendix A for further details of patterns of missing data). However, as patterns of missing data are relatively stable between the years included in the analysis, particularly at A level where overall missing rates are higher, this should not substantially affect comparisons made over time, which is the focus of the analyses presented here.

Data was restricted to only ‘typical’ candidates to support making like-for-like comparisons between years. This helps make the interpretation of analyses easier and subsequent conclusions clearer for this core group of candidates. For A levels this consisted of 18-year-old students taking three A levels, and for GCSEs, 16-year-old candidates taking at least 3 GCSEs. Age was judged as on the 31st August in the year candidates took exams. For the core modelling, data was also restricted to only candidates who also had prior attainment data available, as this is a key predictor of exam results. Again, missing data for prior attainment is not distributed randomly, and is proportionally highest for candidates at independent schools at GCSE (55% of ‘typical candidate’ entries at independent centres have missing prior attainment, making up 22% of all entries with missing data). At A level, missing prior attainment was more evenly distributed although the majority came from sixth form centres (31%) followed by FE/Tertiary colleges (26%) and Independent centres (23%; see Appendix A for further details).

Candidates from ‘Missing’ and ‘Other’ centre types were also removed as there is evidence from the data that entry patterns for candidates from centres in the ‘Other’ category were different in 2020 compared to previous years, reducing the comparability of this group over time. Private candidates who are not taught at a centre, who would fall under this category in a normal year, were only able to receive CAGs in 2020 if Heads of Centres could be confident in ranking them alongside other students, which in many cases was not possible (Ofqual, 2020a). Students with ‘Other’ and ‘Missing’ centre types represent 2.1% of the GCSE data and 1.3% of all A level entries across all three years, but only 1.3% of GCSE ‘typical’ candidate entries and 0.7% of A level ‘typical’ candidate entries. Centre level variables were calculated before restricting samples, to give the most accurate indication of centre characteristics. See Appendix B for summary statistics of data before and after filtering.

For GCSE, only subjects which were awarded on the reformed 9 to 1 grading scale were included in analysis. This means that only subjects in phase 1 and phase 2 of GCSE reform – those with results first issued in 2017 and 2018 respectively - were included (Ofqual, 2017). Due to issues of comparability this also involved removing combined science awards from the analysis as this subject is awarded on a double grading scale, making conversion and comparison to other subjects difficult. Only subjects which were awarded in all three years were included in analyses, although it is possible that individual assessment specifications may not have been available across all three years, for example where specifications are available in the same subject but from different exam boards in different years.

The majority of GCSE modelling was carried out on a fully random sample of 1,500,000 results (approximately 500,000 from each year modelled, ~17% of entries before sampling), to create a more manageable dataset for computational reasons. The sample was checked to ensure it was representative of the total data. A comparison of the dataset before and after sampling is shown in Appendix B. Key models were also run on the full dataset, which indicated there were no substantial differences in model estimates, all results presented are those from models using the sampled data for consistency. Table 1 provides details of the candidates and results included in the final analysis, while a more detailed table and a table for the full dataset before filtering is included in Appendix B.

Table 1. Numbers and percentages of entries, centres and candidates in the sample used for analysis. Centre breakdowns represent number and percentage of total centres in each group, candidate breakdowns represent number and percentage of candidates in each group.

| GCSE 2018 | GCSE 2019 | GCSE 2020 | A level 2018 | A level 2019 | A level 2020 | |

|---|---|---|---|---|---|---|

| Total Entries | 481720 | 501001 | 517198 | 377447 | 387600 | 420129 |

| Total Centres | 3883 | 3990 | 4046 | 2513 | 2500 | 2475 |

| Centre Type: Mainstream secondary | 3099 (79.8%) | 3147 (78.9%) | 3179 (78.6%) | 1762 (70.5%) | 1779 (70.8%) | 1746 (70.5%) |

| Centre Type: College (FE/Tertiary) | 39 (1%) | 34 (0.9%) | 37 (0.9%) | 79 (3.2%) | 70 (2.8%) | 75 (3%) |

| Centre Type: Grammar | 73 (1.9%) | 73 (1.8%) | 73 (1.8%) | 70 (2.8%) | 70 (2.8%) | 72 (2.9%) |

| Centre Type: Independent | 662 (17%) | 725 (18.2%) | 750 (18.5%) | 494 (19.8%) | 493 (19.6%) | 485 (19.6%) |

| Centre Type: Sixth Form college | 10 (0.3%) | 11 (0.3%) | 7 (0.2%) | 95 (3.8%) | 101 (4%) | 97 (3.9%) |

| Total Students | 302110 | 315349 | 326896 | 129155 | 130944 | 141869 |

| Gender: Female | 152952 (50.6%) | 159682 (50.6%) | 165319 (50.6%) | 73700 (57.1%) | 73750 (56.3%) | 80431 (56.7%) |

| Gender: Male | 149158 (49.4%) | 155667 (49.4%) | 161577 (49.4%) | 55473 (42.9%) | 57204 (43.7%) | 61462 (43.3%) |

Analysis methodology

The core modelling approach used a series of multilevel models using grades converted to a numeric score as the dependent variable. For GCSE, grades 9 to U were converted to a 9 to 0 scale and for A level, grades A* to U were converted to a scale of 6 to 0, with the assumption that grades adequately reflect a linear scale, which is discussed further below. Models were nested by layering in variables from different levels to the analysis: prior attainment, candidate, centre, subject-within-centre and subject. This set of nested models was run separately for each year, on awarded grades in 2018 and 2019 and CAGs in 2020. The core models presented in the following sections included all subjects and a fixed effect variable to account for differences in mean subject grade. Random effects of candidate ID and centre number were included in all models to account for the hierarchical structure of the data and the non-independence of datapoints within these groups, that is students within the same centre being more similar to each other than to students in other centres.

This type of multivariate analysis allows the effect of individual variables to be assessed after holding other effects constant. We can also compare the coefficients produced by the three separate sets of models for each year to observe if there have been meaningful changes in these relationships between years, with the assumption that there have not been major shifts in the cohort not controlled for by the factors included in the model.

The use of a series of nested models allows us to identify how much variation in grades is explained by the variables included at each level - prior attainment, candidate, centre, subject-within-centre or subject - and crucially to the analysis presented here, whether and how the amount of variance explained changes between the years analysed. For example, an increase in the variance explained at a particular level in a particular year would suggest that characteristics included at that level have a stronger relationship with, and may be having a larger effect on, candidates’ grades in that year compared to other years.

The set of nested models comprised of:

- Model 0 - Null model only containing subject fixed effect (also included in all other models)

- Model 1 - just prior attainment

- Model 2a - prior attainment and other candidate level variables

- Model 2b - prior attainment and centre level variables

- Model 3 - prior attainment, centre and candidate level variables

- Model 4 - prior attainment, centre and candidate variables and subject-within-centre prior performance.

Additional models 5a and 5b were used to evaluate subject level effects. Model 5a included all the variables in model 4 and in addition the proportion of Non-Exam Assessment (NEA) included in each subject. Model 5b included variables indicating if the subject was a ‘facilitating subject’ for A level or an EBacc subject for GCSE and five broad subject groupings (STEM, Applied, Languages, Humanities, Expressive, as in Bramley 2014, see Appendix C for details). Facilitating subjects are those included in a list of subjects which historically were deemed valuable to a wide range of degree courses at selective universities, representing subjects which tend to be more academic in nature, although this list has been discontinued in recent years (Russell Group, 2016). EBacc subjects are those that are included in the English Baccalaureate, a designation which is used for school accountability to encourage students to take GCSEs that “keep young people’s options open” and again include some of the more academic subjects (DfE, 2019). 5a and 5b were modelled separately as subject type and amount of NEA are highly related and co-vary, creating difficulty in interpretation if included in the same model. Table 2 provides details of the variables included in each of the models.

Table 2. Details of variables included in core modelling. Reference groups are either the first category listed for unordered categories or the ‘medium’ group for ordered categories, shown in bold.

| Variable | Level | Details | Included in models |

|---|---|---|---|

| Prior attainment score | Candidate - Prior attainment | Normalised mean GCSE or KS2 score -Standardised to mean 0, SD of 1 | 1,2a,2b,3,4,5a,5b |

| Prior attainment category | Candidate - Prior attainment | Quintiles: Very low, low, medium, high, very high | 1,2a,2b,3,4,5a,5b |

| Prior attainment score by Prior attainment category interaction | Candidate - Prior attainment | 1,2a,2b,3,4,5a, 5b | |

| Gender | Candidate | Female, Male | 2a,3,4, 5a, 5b |

| DOB by quarter | Candidate | 1:Sep-Nov, 2:Dec-Feb, 3:March-May, 4:June-Aug | 2a,3,4, 5a, 5b |

| SEN Status | Candidate | No, Yes | 2a,3,4, 5a, 5b |

| Language Group | Candidate | English, Other, Unclassified | 2a,3,4, 5a, 5b |

| Ethnic Group | Candidate | White, Asian, Black, Chinese, Mixed Background, Unclassified, Any Other Ethnic Group | 2a,3,4, 5a, 5b |

| IDACI Score group | Candidate | Quintiles: Very low, low, medium, high, very high | 2a,3,4,5a, 5b |

| Free School Meal Eligible | Candidate | No, Yes | 2a,3,4, 5a, 5b |

| Missing NPD flag | Candidate | Flag to indicate if candidates had missing NPD data: No, Yes | 2a,3,4, 5a, 5b |

| Subject cohort size group | Centre | Number of students taking subject within centre. Split into three equal groups: Small, medium, large | 2b,3,4, 5a, 5b |

| Centre Type | Centre | Secondary (includes all mainstream state schools ie academies, free schools, comprehensive), College (FE and Tertiary), Independent, Grammar, Sixth Form (sixth form colleges) | 2b,3,4, 5a, 5b |

| Proportion SEN group | Centre | Three equal groups: Low, medium, high | 2b,3,4, 5a, 5b |

| Mean IDACI score group | Centre | Quintiles: Very low, low, medium, high, very high | 2b,3,4, 5a, 5b |

| Proportion EAL group | Centre | Three equal groups: Low, medium, high | 2b,3,4, 5a, 5b |

| Mean prior attainment group | Centre | Three equal groups: Low, medium, high | 2b,3,4, 5a, 5b |

| Region of country | Centre | London, East Midlands, East of England, North East, North West, South East, South West, West Midlands, Yorkshire and Humber, Missing | 2b,3,4,5a, 5b |

| Mean previous years grade group | Subject within Centre | Mean grade from the subject/centre in previous year in three equal groups: low, medium, high | 4, 5a, 5b |

| Mean previous year value added group | Subject within Centre | Mean VA score from previous year (scaled grade -scaled prior attainment) in three equal groups: low, medium, high | 4, 5a, 5b |

| Mean previous years grade group by Mean previous year value added group interaction | Subject within Centre | 4, 5a, 5b | |

| Subject | Subject | Subject groupings used to produce CAGs | 0,1,2a,2b,3,4,5a, 5b |

| NEA group | Subject | None, low (10-20%), medium (30-40%), high (50-60%), All | 5a |

| Subject type | Subject | Humanities, Applied, STEM, Expressive, Languages | 5b |

| Facilitating/EBacc subject | Subject | No, Yes | 5b |

Prior attainment scores for both Key Stage 2 results and mean GCSE scores were initially provided on a normalised scale, ranging from 0 to 100. For analysis these variables were standardised to a mean of 0 and a standard deviation of 1 across years. For the core modelling prior attainment was included as both five categorical quantiles, very low to very high, and a continuous linear variable, as well as the interaction between the two. This allows the relationship between prior attainment and grade to be non-linear and enables us to estimate the slope of the relationship between prior attainment and grade for each of the prior attainment quantiles. For each variable divided into quantiles, either three or five groups, groups were calculated based on percentile ranks across years to divide variables into roughly equal sized categories, meaning the same boundary scores were used in all three years.

Quantiles were calculated on candidates and centres included in the analysis rather than the whole population. Cut-offs were calculated after restricting to unique candidates for candidate level variables and unique centres for centre level variables. Details of the cut-off scores for each of these categories are provided in Appendix D. For special education needs, candidates who were either classified as SEN with a statement or without a statement were grouped as having SEN, all other candidates were classed as ‘not SEN’.

Alternative models

A series of alternative models were run to provide additional confirmation of the findings of the core models and to provide additional insight into some specific areas of interest.

The core models assume that grades represent a linear scale, therefore the interval between each grade is consistent and that the relationship between each variable and grade is consistent across the grade distribution, which may not always hold. To provide confidence in these results the models were rerun as binary logistic models evaluating the probability of candidates attaining at least specific key grades or above. The dependent variable was 0 for candidates that did not achieve at least the grade modelled and 1 if they achieved that grade or above. For GCSE, the key grades evaluated were grade 4 and grade 7 or above, and for A level the grades evaluated were grade C and grade A or above.

A second alternative set of models were produced with prior attainment as a nine-category quantile and an additional category for ‘missing prior attainment’ instead of the two prior attainment variables and interaction described above. This was to avoid excluding candidates based on missing prior attainment, allowing us to examine if their inclusion or exclusion has any substantial impact on model estimation. It also allows us to investigate the change in the relationship between prior attainment and grade at multiple steps along the distribution.

A final set of additional models were carried out to examine some interactions of interest. These models followed the specification for model 4 but added in interactions between prior attainment and socio-economic status (SES), between SES and ethnicity, and between ethnicity and proportion of non-white students in the centre.

Identifying changes between years

Models were evaluated in multiple stages to identify notable changes in relationships in 2020 compared to previous years. First, the variance explained by the variables included in each nested model was evaluated to identify the percentage of variance explained at each level - prior attainment, candidate, centre, subject-within-centre - and how this changed between 2020 and previous years. Percentage of total variance explained was calculated for each model using pseudo R-squared values, based on the method described by Nakagawa and Schielzeth (2012). This method provides a breakdown of the marginal R-squared, the percentage of variance explained by the fixed effect variables included in the model, and the conditional R-squared, the total percentage of variance explained by the model including both the fixed effects and the random effects included to account for the clustered nature of the data (student ID and centre number). This allows partitioning of the variance into ‘explained’ variance by the variables included in each model and additional ‘unexplained’ variance between candidates and between centres.

Next, we evaluated the meaningfulness of changes in model coefficients. Due to the fact that these models contain a large number of observations, variables may be statistically significant even if effect sizes are very small. We therefore cannot simply rely on significance, which may be misleading, we also need to consider the meaningfulness of the effect sizes. Variables which showed no significant relationship with grade in either 2020 or 2019 were subsequently not evaluated further. For model coefficients which were significantly different than 0 in 2019 or 2020, we compared the size of the coefficients between different years to evaluate how the relationship between each variable and grade awarded differed between years. Changes in 2020 were considered notable if there had been a larger change in the coefficients between 2019 and 2020 than between 2018 and 2019 and the change in coefficient between 2019 and 2020 was larger than 1/10th of a grade (±0.1). This is to ensure that coefficient changes were only discussed as being of substantive interest if they represented changes larger than ‘normal fluctuation’ between years and also represent an effect which is large enough to be meaningful in the interpretation of assessment results.

A second criterion was used to identify changes of borderline interest, by identifying coefficients which were at least 0.05 outside of the ‘normal’ range, defined by the coefficients from 2018 and 2019 as the maximum and minimum of this range. Highlighting coefficients in 2020 that were 0.05 higher than the highest previous coefficient from 2018 or 2019 or 0.05 lower than the lowest previous coefficient.

Results

Results are presented for A levels followed by those for GCSE. Within each of these sections we start by highlighting some descriptive analyses of some of the key variables. Next, we present the results of the core series of models for 2018, 2019 and 2020. To do this we follow the steps outlined above, first by looking at the variance explained at each level and how these differ between years to identify if there have been significant shifts in which groups of variables have the strongest relationship with grade in 2020. Next, we look at changes in regression coefficients with significant effects using the procedure discussed previously. Coefficients from these models represent the relative difference in mean grade between the group of interest and the reference category for that variable. Where these coefficients have changed between years it represents a relative increase or decrease in the mean grade for candidates in that category relative to the mean grade of those in the reference category. Changes in ‘Missing’ categories are only discussed where they are likely to be meaningful.

Following this evaluation of the core linear models, we present findings from the additional model specifications. For each of these additional models, results are only discussed in detail where they notably differ from the results of the core models (full model outputs can be found in Appendix E and F).

First, the binomial models, which include grade as a binary variable, whether candidates achieved a C or A or above for A level or 4 or 7 or above for GCSE, and evaluate the probability of candidates achieving each grade or above are discussed. These models provide an additional assurance of the results found in the core modelling. These binomial models also allow us to identify if the effects identified in the core models apply over the whole grade range or have more of an impact in the middle of the grade distribution (grade 4 or C) or only towards the top of the grade range (grades A or 7).

Second, the models including candidates with missing prior attainment and including prior attainment as a series of quantile categories are discussed. This model specification allows us to ensure that model results are not biased by the exclusion of candidates with missing prior attainment data. The prior attainment quantile modelling approach also allows additional evaluation of the relationship between prior attainment and grade across the prior attainment range.

Finally, we present the results of a series of models using the core modelling approach but layering in a few additional interactions between prior attainment, socio-economic status and ethnicity. These are effects that have been variously identified in previous studies of teacher judgement as having a possible impact, but their inclusion in the core models here would make the main effects difficult to interpret.

A level

Descriptive statistics

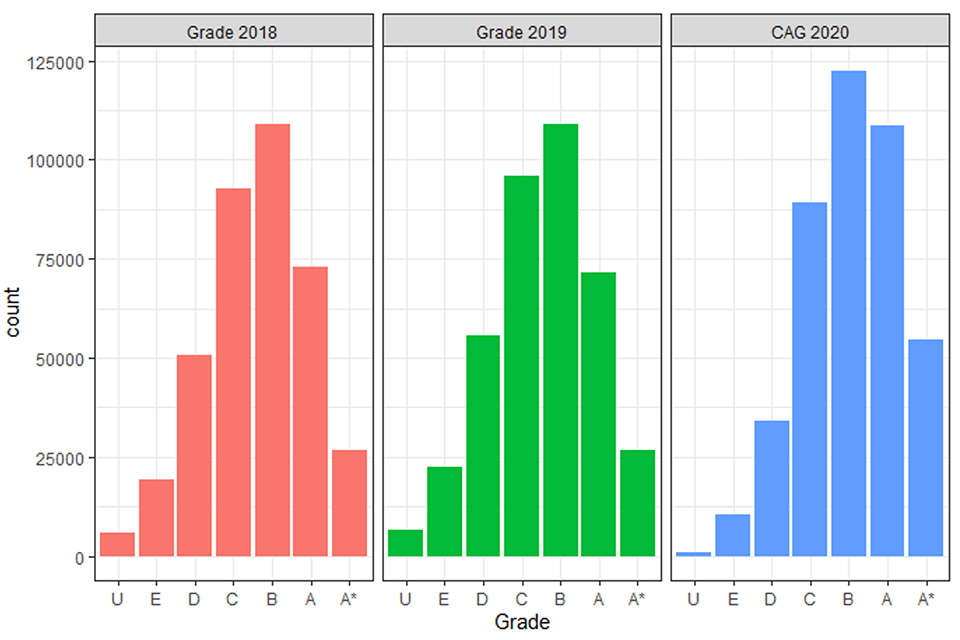

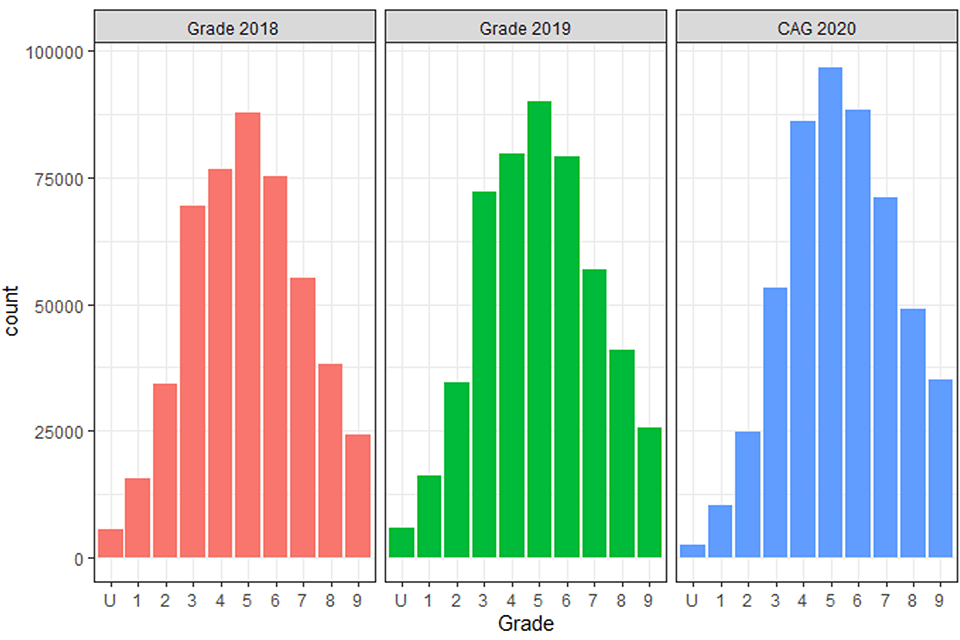

At A level there has been an average across subject increase in outcomes of around half a grade in 2020 compared to previous years (+0.49, Table 3). Figure 1 shows the grade distribution for results in 2018 and 2019 and CAGs in 2020 and it can be seen there is a marked shift of the distribution to the right, indicating grades have overall moved up the grade range.

Figure 1. Grade distributions in 2018, 2019 and CAG distribution in 2020.

Three histograms showing the number of each grade awarded in 2018, 2019 and CAGs awarded in 2020. The grade distribution for CAGs in 2020 is higher up the grade range compared to the other two histograms.

Table 3. Descriptive statistics for A level data used in analysis.

| 2018 | 2019 | 2020 | 2020 minus average of previous years | |

|---|---|---|---|---|

| Overall Mean Grade | 3.60 (1.35) | 3.55 (1.37) | 4.06 (1.25) | 0.49 |

| Correlation with prior attainment | 0.55 | 0.56 | 0.60 | 0.05 |

| Mean Grade – prior quintile 1 | 2.67 (1.19) | 2.58 (1.20) | 3.07 (1.07) | 0.45 |

| Mean Grade – prior quintile 2 | 3.12 (1.19) | 3.05 (1.20) | 3.57 (1.08) | 0.49 |

| Mean Grade – prior quintile 3 | 3.5 (1.18) | 3.45 (1.19) | 3.99 (1.06) | 0.52 |

| Mean Grade – prior quintile 4 | 3.97 (1.14) | 3.92 (1.14) | 4.49 (1.01) | 0.55 |

| Mean Grade – prior quintile 5 | 4.73 (1.01) | 4.71 (1.03) | 5.20 (0.84) | 0.48 |

Note: Standard deviations shown in brackets.

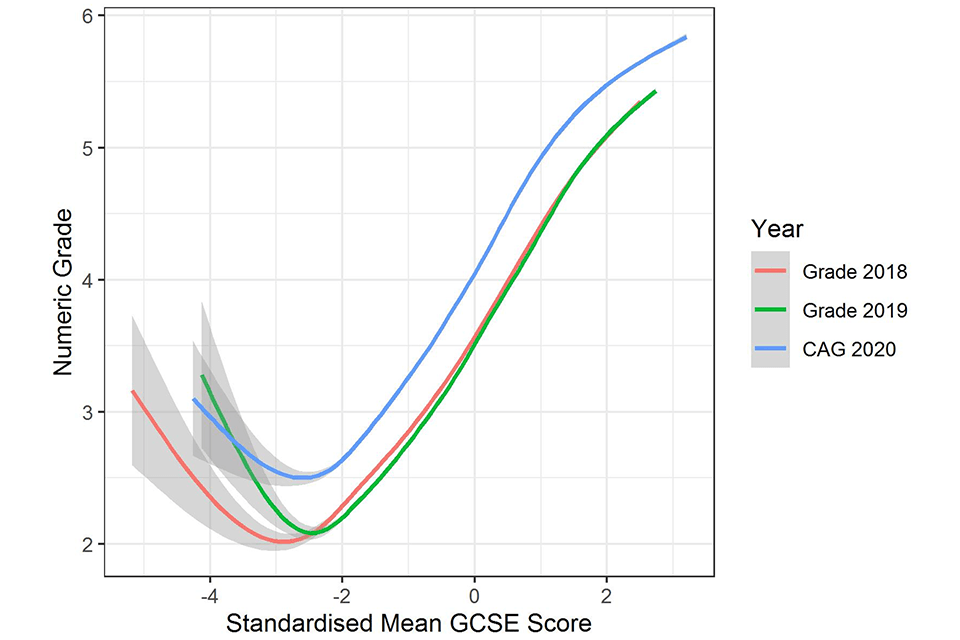

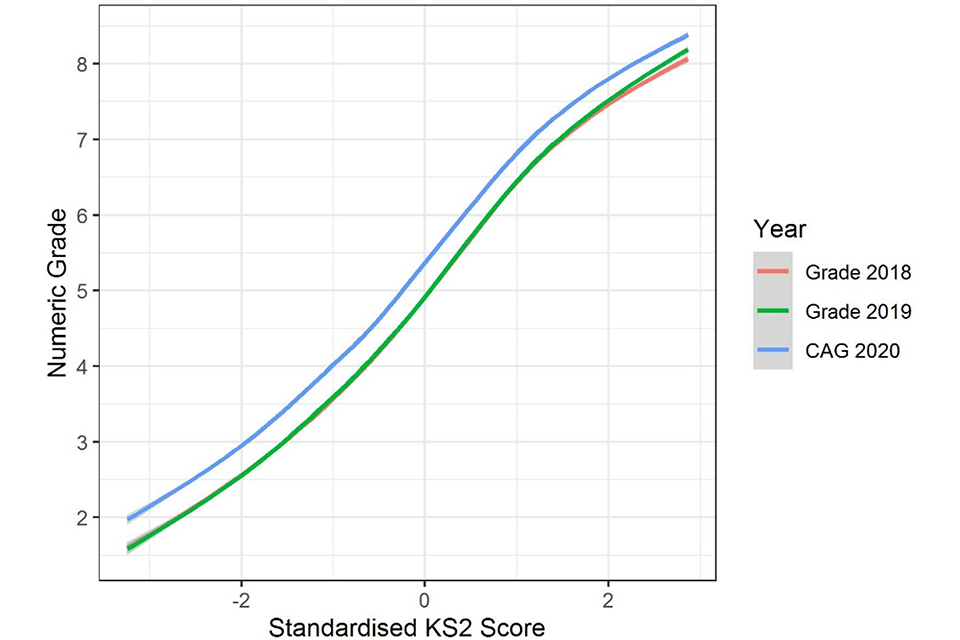

Figure 2 represents the relationship with prior attainment and indicates that this relationship has remained relatively consistent between years, as the lines are broadly parallel. However, data in Table 3 suggests in 2020 slightly more generous grades were awarded to candidates with higher prior attainment, except for those in the highest quintile group. This suggests a slight plateauing of the relationship with prior attainment at the top end of the distribution. This is likely to be due to the grade distribution reaching the top limits of the available grade range causing truncation at the top end through a ceiling effect. The reduced standard deviation in grades in 2020, particularly for the highest prior attainment quintile would support this (see Table 3).

Figure 2. Relationship between prior attainment and grade in all three years evaluated with confidence intervals.

A line graph showing the relationship between mean GCSE score and grades. Three curves show the relationship in 2018 and 2019 and for CAGs in 2020. The curve for CAGs is higher than the other two curves but has a similar shape.

Note: Smoothed curve fitted using a generalized additive model from R package ggplot2. Confidence intervals are wider towards the bottom end of the mean GCSE scale as the number of candidates is small.

Table 4 shows the mean grade in each year for each of the category breakdowns and the difference between 2020 and an average of the previous two years. The most notable difference in this descriptive data is that small cohorts appear to have greater increases in grades in 2020 than larger cohorts. Also, that colleges, grammar schools and sixth form centres have a slightly smaller increase in grades and independent centres the largest increase from previous years. At subject level, the biggest differences are the larger relative increase in grades in languages and expressive subjects compared to others.

Table 4. Mean grades in each year for breakdowns by subject cohort size, centre type and subject group.

| Subgroup | 2018 | 2019 | 2020 | 2020 minus average of previous years |

|---|---|---|---|---|

| Subject Cohort Size: Not-small | 3.61 | 3.55 | 4.05 | 0.47 |

| Subject Cohort Size: Small | 3.49 | 3.49 | 4.26 | 0.77 |

| Centre Type: College | 3.48 | 3.20 | 3.77 | 0.43 |

| Centre Type: Grammar | 3.82 | 3.80 | 4.21 | 0.40 |

| Centre Type: Independent | 4.04 | 4.03 | 4.58 | 0.55 |

| Centre Type: Secondary | 3.53 | 3.46 | 4.00 | 0.51 |

| Centre Type: Sixth form | 3.57 | 3.51 | 3.93 | 0.39 |

| Subject Group: Applied | 3.40 | 3.36 | 3.88 | 0.50 |

| Subject Group: Expressive | 3.84 | 3.86 | 4.40 | 0.55 |

| Subject Group: Humanities | 3.61 | 3.59 | 4.06 | 0.46 |

| Subject Group: Languages | 3.87 | 3.85 | 4.47 | 0.61 |

| Subject Group: STEM | 3.57 | 3.45 | 4.00 | 0.49 |

Note: Final column shows the difference between 2020 grades and an average of the previous two years.

Variance explained at each level

Total variance of results was smaller in 2020 compared to previous years (2018: 1.90, 2019: 1.94, 2020: 1.59), suggesting that the overall spread of grades was smaller. In model 0 (only including the subject fixed effect and random effects), there was both less between candidate variance (2018: 1.02, 2019: 1.08, 2020: 0.98) and between centre variance (2018: 0.33, 2019: 0.34, 2020: 0.2). This is likely in part due to the truncation of the grade range discussed above.

Table 5. Percentage of variance explained by fixed model effects (marginal pseudo – R squared) and full model including fixed and random effects (conditional pseudo R-squared)

| Model | Marg. 2018 | Marg. 2019 | Marg. 2020 | Cond. 2018 | Cond. 2019 | Cond. 2020 |

|---|---|---|---|---|---|---|

| Mod 0 (null) | 7% | 8% | 7% | 71% | 73% | 73% |

| Mod 1 (prior att.) | 38% | 40% | 45% | 67% | 69% | 70% |

| Mod 2a (cand.) | 38% | 40% | 45% | 67% | 69% | 70% |

| Mod 2b (centre) | 39% | 41% | 45% | 67% | 69% | 70% |

| Mod 3 (cand & centre) | 40% | 42% | 45% | 67% | 69% | 70% |

| Mod 4 (centre prior perf.) | 41% | 43% | 46% | 68% | 70% | 70% |

Table 6. Estimates of the variance explained at each level, calculated as the difference in marginal R-squared between nested models.

| Level | 2018 | 2019 | 2020 |

|---|---|---|---|

| Between Subjects | 7.0% | 8.0% | 7.1% |

| Prior Attainment | 30.6% | 31.6% | 38.1% |

| Candidate Variables | 0.7/0.8% | 0.6/0.4% | 0.2/0.1% |

| Centre Variables | 1.7%/1.8% | 1.8%/1.6% | 0.1%/0% |

| Subject-Centre Prior Performance | 0.7% | 1.1% | 1.0% |

| Unexplained Centre | 1.7% | 1.9% | 2.0% |

| Unexplained Candidate | 22.3% | 25.0% | 24.8% |

| Residual Variance | 29.6% | 30.2% | 32.4% |

Note: For candidate and centre level variables two estimates are provided. The first as the difference between model 1 and 2a/2b, the second as the difference between model 2a/2b and 3.

Tables 5 and 6 show the percentage of the total variance explained by each model and at each model level, as the total variance was slightly smaller in 2020 these proportions may not represent the same amount of variance in absolute terms. For the A level models prior attainment explained the largest proportion of variance of any of the fixed effect variables included in the models for all years. In addition, in 2020 the variance explained by prior attainment was notably higher than in previous years by 6-7% suggesting that prior attainment is a stronger predictor of grade in 2020 compared to previous years. Candidate level variables explained only a very small amount of variance in previous years (around 1%) and this was even smaller in 2020, explaining only around 0.1% of variance. In previous years centre level variables explained around 2% of the variance but they explained almost no variance in 2020. This suggests that both candidate and centre level variables are overall having a smaller impact on grades in 2020 than in a normal year. Finally, the addition of centre prior performance explained around 1% of the variance in all three years. In all cases, adding additional fixed effects variables did not improve the overall explanatory power of the models (conditional R-squared), due to random effects for candidate and centre already taking account of much of the variance. However, adding in additional variables did help account for some of the unexplained variation between centres and between candidates (see Table 5). Although even once all variables were included, a large proportion of the variance in grades in all years was due to unexplained differences between candidates (22-25%) and residual variance representing additional unexplained variation in grades (30-32%).

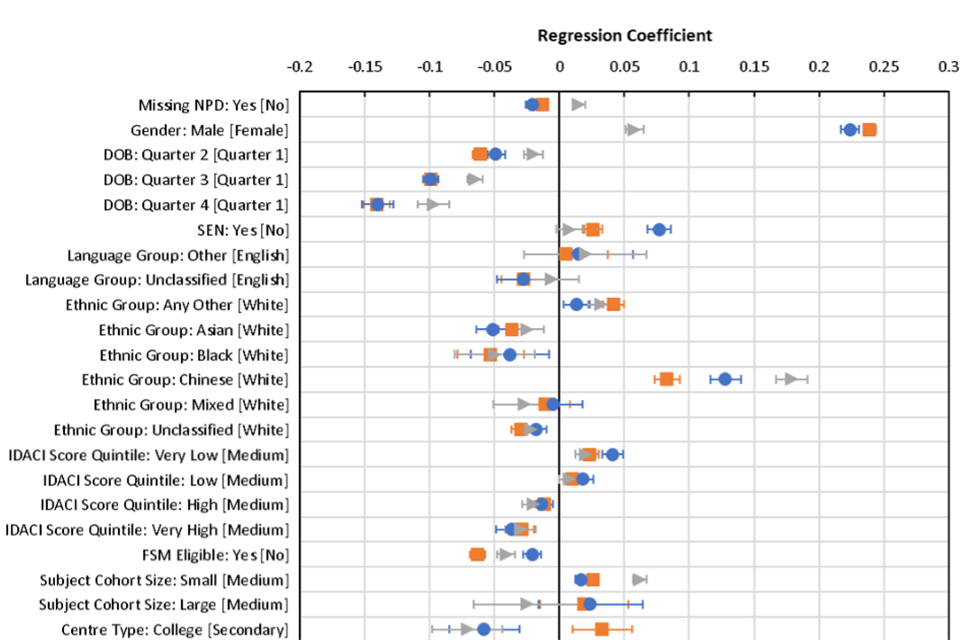

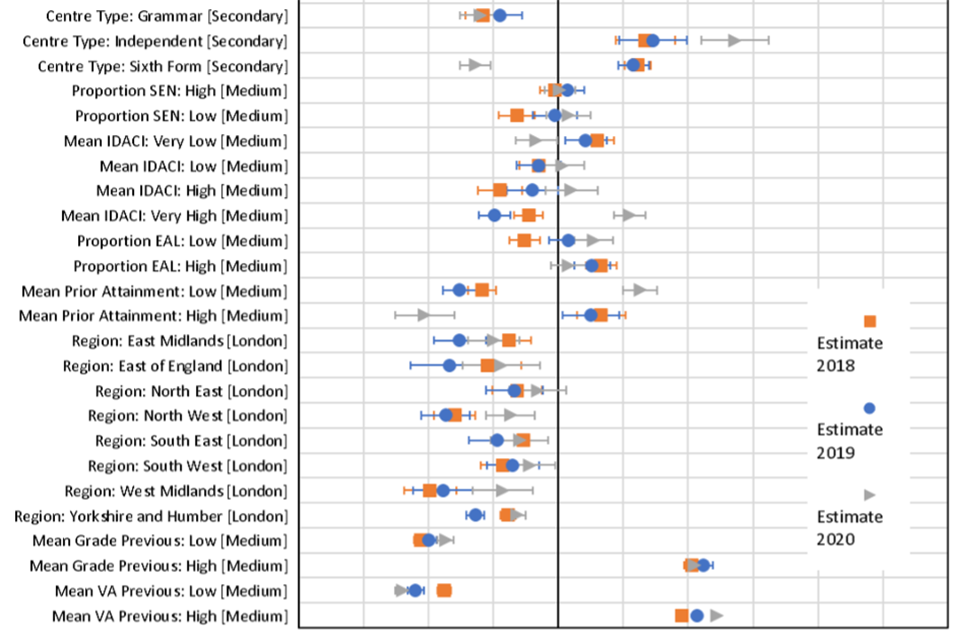

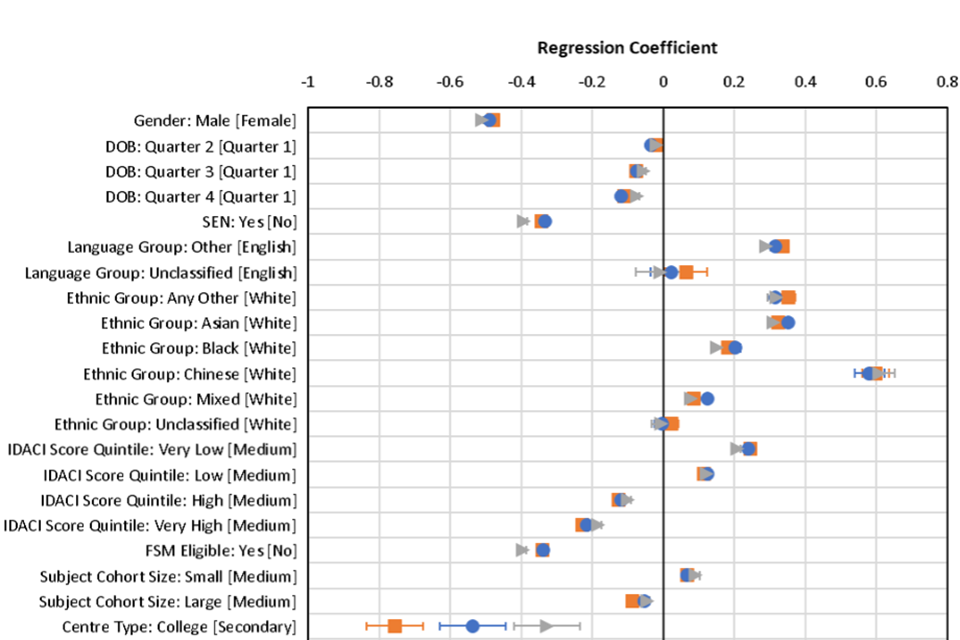

Changes in coefficients

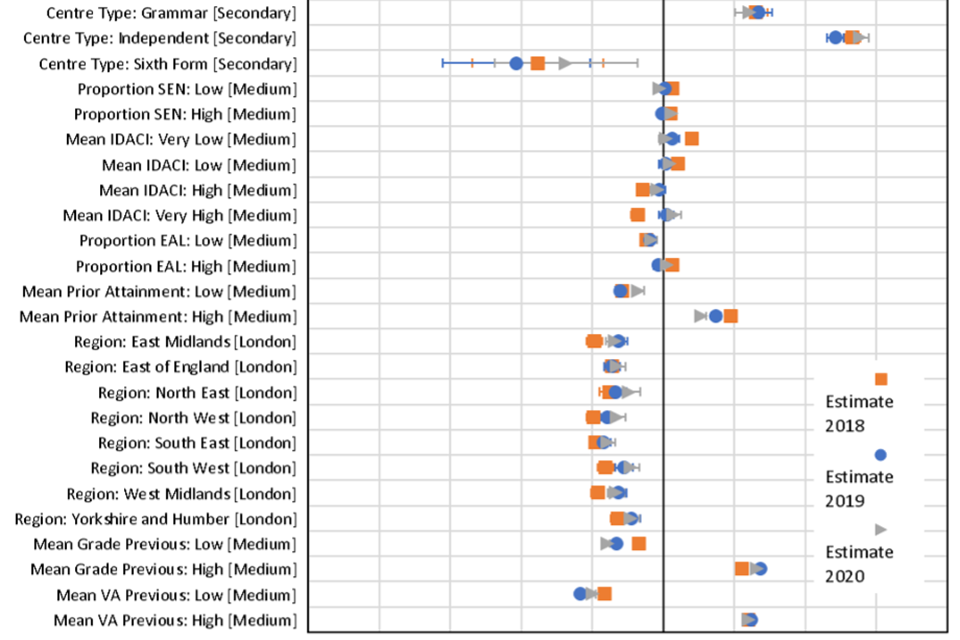

Following the main criteria set out above for interpretable changes in coefficients - that is, those with a change greater than 0.1 between 2019 and 2020 and a greater absolute change between 2019 and 2020 than between 2018 and 2019 - there were a small number of notable changes at A level (See Figure 3). After controlling for all other factors, male students outperformed female students in 2018 and 2019 by around 0.22-0.24 grades, however in 2020 this gap was reduced to 0.06 grades. This suggests a small relative decrease in outcomes for male students in 2020 compared to previous years.

Compared to mainstream secondary schools, sixth form colleges had relatively less generous grades in 2020 by around 0.12 grades on average than in 2019. In previous years candidates at sixth form centres usually outperformed secondaries by on average around 0.06 grades, whereas in 2020 their grades were on average 0.06 grades lower.

Centres with the highest average deprivation scores had relatively higher outcomes in 2020 compared to 2019 by 0.1 grades on average. Usually, candidates at these centres attain grades slightly lower on average than candidates at centres with average deprivation scores, but in 2020 had slightly higher grades by 0.06 grades.

Schools with high average prior attainment had lower outcomes in 2020 by 0.13 grades compared to medium prior attainment centres, whereas schools with low prior attainment grades increased on average by 0.14 grades. This is likely due to the effect noted previously of an overall increase in mean grade, resulting in a truncation of the grade distribution at the top end. Essentially, schools with previously lower performance had further to increase in 2020, whereas those at the top end were limited in the amount of increase possible.

Evaluating the effect of centre prior performance, suggests that centres with missing prior performance and value-added data, essentially those entering a subject for the first time, had relatively higher grades in 2020 than previous years. These centres in normal years tend to have outcomes lower than the reference group (2018: -0.18 grades, 2019: -0.12 grades), whereas in 2020 they had very similar outcomes to centres with average prior performance and value added.

Modelling also identified the plateauing effect of the relationship with prior attainment for candidates in the highest prior attainment group (See Appendix E). The slope of the relationship between prior attainment and grade for this group was around 0.72 in previous years but only 0.53 in 2020. The slopes for other prior attainment groups did not substantially change. This effect is more noticeable in the prior attainment quantile model, which shows that it is only those in the top prior attainment quantile (top 11%) which show this relative disadvantage (see details below). Again, this is likely due to candidates at the top end of the distribution not being able to increase their grades in 2020 by as much as those lower down the distribution as they are already attaining the maximum grade possible.

At subject level, modelling suggests that facilitating subjects were slightly more generously awarded than non-facilitating subjects by around 0.4 grades compared to 2019 (see Table 7). The order of subject generosity ranges from the Expressive subject group as the most generously awarded compared to 2019, followed by facilitating STEM, facilitating Humanities, Applied, not-facilitating STEM, not-facilitating Humanities and finally Languages. Breaking the subjects down separately by proportion of NEA showed that the most generous subjects were those with high proportions of NEA, the lowest increases in grades from 2019 were in subjects with low and medium levels of NEA, however those with all NEA or no NEA were in between (see Table 8).

Table 7. Model coefficients from Model 5b for facilitating and subject groups, with standard errors in brackets.

| Group | 2018 | 2019 | 2020 | 2020 minus average |

|---|---|---|---|---|

| Applied | -0.18 (0.02) | -0.24 (0.02) | 0.11 (0.01) | +0.33 |

| Expressive | -0.43 (0.04) | -0.47 (0.04) | 0.03 (0.03) | +0.48 |

| Languages | 1.77 (0.15) | 2.02 (0.16) | 1.64 (0.19) | -0.26 |

| STEM | 0.22 (0.07) | 0 (0.07) | 0.11 (0.05) | 0 |

| Facilitating | -0.52 (0.04) | -0.66 (0.04) | -0.29 (0.04) | +0.3 |

Note: Reference group is non-facilitating humanities. Final column shows difference between 2020 coefficient and the average of the previous years.

Table 8. Model coefficients from Model 5a for NEA groups, with standard errors in brackets.

| Group | 2018 | 2019 | 2020 | 2020 minus average |

|---|---|---|---|---|

| Low | 0.29 (0.06) | 0.66 (0.06) | 0.18 (0.04) | -0.3 |

| Medium | 1.55 (0.15) | 2.02 (0.16) | 1.53 (0.20) | -0.26 |

| High | -0.13 (0.07) | 0.19 (0.07) | 0.21 (0.05) | +0.18 |

| All | 0.30 (0.08) | 0.45 (0.09) | 0.35 (0.06) | -0.03 |

Note: Reference group is the no NEA group. Final column shows difference between 2020 coefficient and the average of the previous years.

Figure 3. Model coefficients from core model 4 for 2018, 2019 and 2020, with standard error bars.

Note: Reference category for coefficients shown in square brackets. Missing categories and interaction effects are not shown.

Borderline changes

Using the criteria previously outlined for changes of borderline interest - coefficients more than 0.05 grades outside the ‘normal’ range defined by 2018 and 2019 models - then a few additional changes are highlighted as potentially notable.

Students in the Chinese ethnic group did particularly well in 2020, following a trend where their grades were 0.05 grades higher in 2019 than 2018 and again 0.06 grades higher in 2020 than 2019. Looking at centre type, independent centres average grades increased in 2020 by around 0.06 grades compared to mainstream schools. Finally, we see that there is a significant change in the interaction between centre mean prior value added and centre mean prior performance.

The group with the largest relative increase in grades is those from schools with low previous mean grades and low previous value added. These schools saw a relative increase in grades by 0.08 grades from 2019. The absolute difference in outcomes between this group and the medium group became non-significant in 2020. This may again be due to the truncation effect as centres starting from a lower starting point have further to increase on average in the grade range.

Although not significant by the criteria laid out above, and therefore only relatively minor effects, there were a few other interesting patterns in variables with multiple levels. The overall effect of birth date, by quarter of the year, was reduced in 2020. Fairly consistently in 2018 and 2019, younger students tend to have a slightly lower grades by around 0.04-0.05 grades per quarter of the year, whereas in 2020 this effect was reduced to only around 0.02-0.03 grades. There also appears to be a small change in the effect of cohort size; in previous years there are minor differences with both large and small cohorts attaining grades on average around 0.02 grades higher than medium sized cohorts. Whereas in 2020 there seems to be a small cohort advantage, with small cohorts attaining grades on average 0.06 grades higher than previously compared to the medium group and large cohorts attaining grades 0.03 lower. Finally, there seems to be a very small change in the effect of region of the country, although the differences between regions are usually relatively minor; in 2020 the majority of coefficients were closer to 0 than previous years suggesting a weakening of this effect.

Binomial models

The A level binomial model for both C and above and A and above overall showed similar patterns to those discussed above in the core modelling. Table 9 shows the percentage of candidates reaching at least grade C and grade A increased in 2020, with the increase at grade C particularly in the lower prior performance groups and the increase at grade A particularly in the highest prior attainment groups. When identifying notable changes in coefficients again only coefficients which saw a larger change between 2019 and 2020 than between 2018 and 2019 were considered. We also used a cut-off of at least a change of 0.2 in the log-odds of the coefficient between 2019 and 2020 to be notable. However, coefficients are reported in the text using odds-ratios for ease of interpretation (full output in Appendix E). Odds ratios greater than 1 indicate an increase in the probability of that group attaining the key grade compared to the reference group, whereas odds-ratios below 1 indicate a lower probability relative to the reference group.

Table 9. Percentage of candidates achieving each key grade analysed or above, by prior attainment group. C or Above indicated by C+, A or Above indicated by A+.

| C+ 2018 | C+ 2019 | C+ 2020 | C+ 2020 -average | A+ 2018 | A+ 2019 | A+ 2020 | A+ 2020 -average | |

|---|---|---|---|---|---|---|---|---|

| Overall percentage attaining grade or above | 80% | 78% | 89% | 10% | 26% | 25% | 39% | 13% |

| % Grade – prior quintile 1 | 58% | 55% | 73% | 17% | 5% | 4% | 8% | 4% |

| % Grade – prior quintile 2 | 72% | 70% | 85% | 14% | 10% | 9% | 18% | 8% |

| % Grade – prior quintile 3 | 82% | 80% | 92% | 11% | 19% | 17% | 32% | 14% |

| % Grade – prior quintile 4 | 90% | 89% | 97% | 7% | 33% | 32% | 54% | 22% |

| % Grade – prior quintile 5 | 97% | 97% | 99% | 2% | 64% | 63% | 83% | 19% |

Note: Additional column shows the difference in percentage between 2020 and the average of the previous two years.

Modelling the probability of candidates gaining at least C, in addition to some of the factors discussed above, the probability for candidates in small subject cohorts showed a notable increase from an odds ratio of 1.05 (5% more likely than medium group to attain at least a C) compared to medium sized cohorts in 2019 to 1.36 (36% more likely to attain a C compared to the medium group) in 2020.

At A and above there was a slight decrease in the probability of candidates with SEN status achieving an A from a significant odds ratio of 1.21 in 2018 (p<0.001) and 1.07 in 2019 (p<0.05), to a non-significant odds ratio of 0.986 in 2020 (p=0.65). Although this appears to be following a trend of decreasing effect, which was only moderately significant in 2019.

The binomial models highlighted some additional insights into variables already discussed as having notable changes in the core linear modelling. The reduced effect of gender in 2020 seen in the linear models was evident in the A and above model (Odds Ratio (OR) – 2018:1.61, 2019:1.57, 2020:1.19), but the shift was slightly larger in the C and above model (OR – 2018:1.57, 2019:1.57, 2020:1.03). The effect of students in the Chinese ethnic group doing particularly well in 2020 was seen more notably in the A and above model (OR – 2018:1.18, 2019:1.16, 2020:1.47) than the C or above model where the effect was marginal. Candidates from independent centres had a notable increase in the probability of achieving an A or above (OR – 2018:1.24, 2019:1.29, 2020:1.98), but not C or above, whereas candidates from sixth form centres had relatively lower probability of achieving at least a C (OR – 2018:1.12, 2019:1.15, 2020:0.89) and A (OR – 2018:1.08, 2019:1.10, 2020:0.85). Candidates in centres with very high mean IDACI scores saw an increase in their probability of getting both a C (OR – 2018:0.97, 2019:0.95, 2020:1.17) and A or above (OR – 2018:0.96, 2019:0.90, 2020:1.10). Finally, candidates in centres with high mean prior attainment saw a decrease in their probability of getting at least an A (OR – 2018:1.02, 2019:1.02, 2020:0.77), but not C. Whereas candidates at centres with low mean prior attainment saw an increase in their probability of getting a C or above (OR – 2018:0.91, 2019:0.87, 2020:1.11), but not A. This is again likely due to the truncation effect discussed previously.

Quantile prior attainment model including candidates with missing prior attainment.

A version of the model was run to include candidates with missing prior attainment data. For this model prior attainment was split into nine quantile groups of approximately equal size with an additional ‘missing prior attainment’ category. Overall, the variance explained by the fixed effects in the model were lower than the core modelling (marginal R-squared- 2018: 35%, 2019: 37%, 2020: 40%), likely to be because the model was a poor predictor of outcomes for candidates with missing prior attainment. However, patterns in model coefficients did not substantially differ from those in the core modelling (full output in Appendix E).

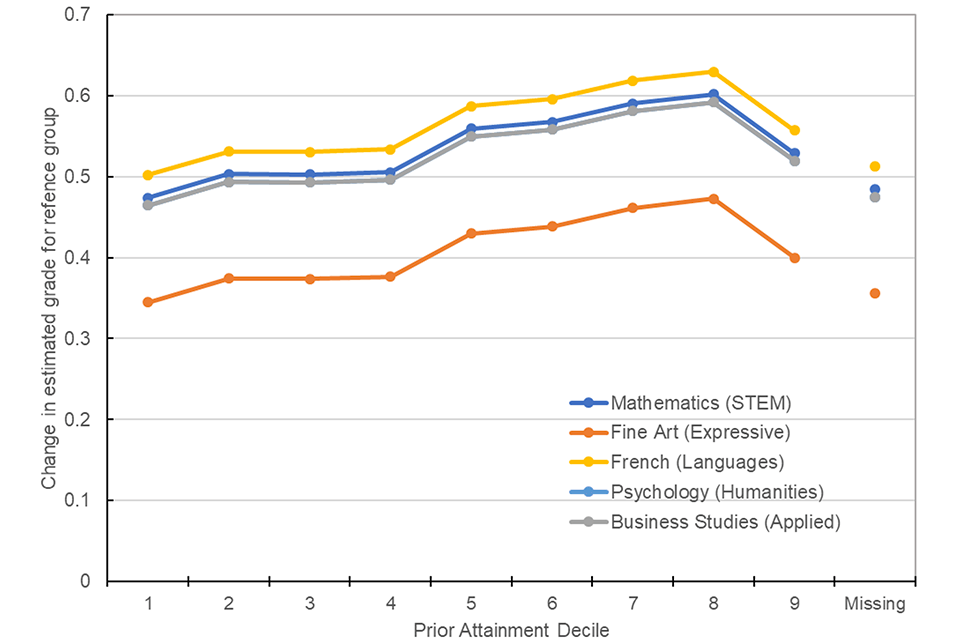

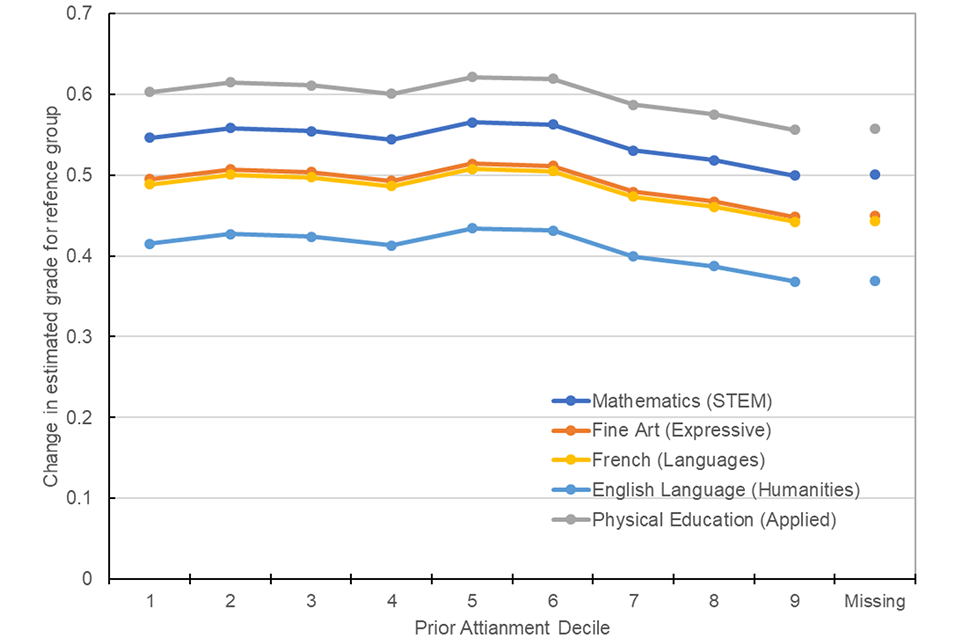

Modelling prior attainment as quantile groups helps to highlight the relative differences in generosity in grades in 2020 across the prior attainment distribution. Results indicate that there is a slight positive bias in generosity of grades in 2020, with candidates with higher prior attainment receiving more generous grades on average after all other variables are controlled for. Comparing model coefficients across years suggests the largest positive effects in 2020 when compared to an average of the previous two years were in quantiles 6, 7 and 8, whereas least generous grades were awarded to those in quantiles 1, 2 and 3. However, quantile 9 was less generously awarded, which is likely to again highlight the truncation effect at the top end of the grade range as these high performing candidates are not able to receive any higher grades. Candidates in the ‘missing’ category were also the least most generously awarded. Figure 4 shows the estimated change grades between from prior years to 2020 for each prior attainment quantile for the largest subjects in each subject group for candidates in the reference category (see Table 2 for details of reference group).

Figure 4. Difference in estimated grade between 2020 and average of 2018 and 2019 by prior attainment quantile for largest entry subjects in each subject group.

Mean change in estimated grades from the average of 2018 and 2019 to CAGs in 2020 for candidates in each prior attainment quantile. Discussed in the main text.

Note: Estimates calculated from coefficients in quantile prior attainment-based model 4. Business studies line overlaps psychology.

Additional interaction models

The first additional interaction effect evaluated was between prior attainment group and socio-economic status. The overall effect was significant in all three years (p<0.001), as candidates with low prior attainment but from a low deprivation area tend to not do as well as the main effects would predict. The effect of prior attainment is also more moderate for candidates with missing IDACI scores, who tend to perform closer to the average than other candidates. However, following the guidelines for interpreting coefficients set out above there were no notable changes for individual groups.

The second interaction investigated was between socio-economic status and ethnicity. This interaction was not significant in 2018 (p=0.71), but was significant in both 2019 (p<0.05) and 2020 (p<0.001). The key changes in coefficients were for candidates in the ‘Other’ ethnic group, where candidates in the high and very high IDACI score groups received relatively lower grades by 0.15 and 0.10 grades respectively. This was also the case for students in the Chinese ethnic group in the high and very high IDACI score groups, that gained relatively lower grades in 2020 by 0.33 and 0.12 grades respectively.

The final interaction effect evaluated was the relationship between the proportion of non-white students in a centre and candidates’ individual ethnicity. In this case the effect was significant in 2018 (p<0.01) and 2019 (p<0.001) but not 2020 (p=0.203). In previous years the only group significantly different from the reference group (White candidates at a centre with a medium proportion of non-white candidates), was from those with missing ethnicity variables at schools with low proportions of non-white candidates. These candidates in previous years attained grades significantly lower than other candidates by 0.45 grades in 2018 and 0.55 grades in 2019, but only non-significantly by 0.04 grades in 2020. The only other notable change in coefficients was for candidates in the Black ethnic group at schools with low proportions of non-white students who’s mean grades were higher in 2020 by 0.11 grades than in 2019. This suggests that candidates of unknown ethnicity and those in the Black ethnic group going to predominately white schools attained moderately higher grades than previously in 2020.

GCSE

Descriptive statistics

For GCSE the calculated average increase in grade in 2020 is slightly lower than A level at around 0.4 grades (Table 10). The grade distributions shown in Figure 5 again show a shift in the distribution up the grade range. As can be seen from Figure 6, the relationship with prior attainment has again remained relatively consistent. Although for GCSE, initial data suggests the plateauing of the relationship with prior attainment at the top end is slightly more severe, with the average increase being around 0.05-0.08 lower for the top quintile (Table 10). This again is likely due to the grade distribution approaching the top limits of the available grade distribution causing truncation of grades at the top end.

Figure 5. Grade distributions for awarded grades in 2018 and 2019 and CAGs in 2020.

Three histograms showing the number of each grade awarded in 2018, 2019 and CAGs awarded in 2020. The grade distribution for CAGs in 2020 is higher up the grade range compared to the other two histograms.

Table 10. Descriptive statistics for GCSE results.

| 2018 | 2019 | 2020 | 2020 minus average of previous years | |

|---|---|---|---|---|

| Overall Mean Grade | 4.98 (2.07) | 4.99 (2.08) | 5.38 (1.99) | 0.40 |

| Correlation with prior attainment | 0.63 | 0.64 | 0.65 | 0.02 |

| Mean Grade – prior quintile 1 | 3.03 (1.53) | 3.04 (1.54) | 3.45 (1.47) | 0.42 |

| Mean Grade – prior quintile 2 | 3.97 (1.61) | 3.99 (1.62) | 4.41 (1.53) | 0.43 |

| Mean Grade – prior quintile 3 | 4.71 (1.67) | 4.71 (1.67) | 5.16 (1.58) | 0.45 |

| Mean Grade – prior quintile 4 | 5.53 (1.69) | 5.55 (1.70) | 5.96 (1.59) | 0.42 |

| Mean Grade – prior quintile 5 | 6.70 (1.66) | 6.73 (1.66) | 7.08 (1.52) | 0.37 |

Note: Standard deviations shown in brackets.

Figure 6. Relationship between prior attainment and grade in all three years evaluated with confidence intervals.

A line graph showing the relationship between mean GCSE score and grades. Three curves show the relationship in 2018 and 2019 and for CAGs in 2020. The curve for CAGs is higher than the other two curves but has a similar shape.

Note: Smoothed curve fitted using a generalized additive model from R package ggplot2.^

Table 11 shows the mean grade in each year for each of the category breakdowns and the difference between 2020 and an average of the previous two years. The most notable differences in this descriptive data is that small cohorts saw on average greater grade increases than larger cohorts. Across centre types, colleges saw particularly large increases in mean grade. Out of the other centre types, independent centres and sixth form colleges were on average more generous. At GCSE applied subjects had the greatest increases in mean grade and humanities and STEM the smallest increases.

Table 11. Mean grades in each year with breakdowns, by subject cohort size, centre type and subject group.

| Subgroup | 2018 | 2019 | 2020 | 2020 minus average of previous years |

|---|---|---|---|---|

| Subject Cohort Size: Not-small | 4.98 | 4.99 | 5.37 | 0.39 |

| Subject Cohort Size: Small | 4.99 | 5.03 | 5.68 | 0.67 |

| Centre Type: Secondary | 4.90 | 4.91 | 5.30 | 0.40 |

| Centre Type: College | 3.73 | 4.05 | 4.56 | 0.67 |

| Centre Type: Grammar | 6.72 | 6.77 | 7.06 | 0.32 |

| Centre Type: Independent | 6.11 | 6.09 | 6.59 | 0.49 |

| Centre Type: Sixth form | 4.33 | 4.04 | 4.67 | 0.48 |

| Subject Group: Humanities | 4.81 | 4.83 | 5.19 | 0.37 |

| Subject Group: Applied | 4.72 | 4.83 | 5.40 | 0.63 |

| Subject Group: Expressive | 5.05 | 5.04 | 5.58 | 0.54 |

| Subject Group: Languages | 4.75 | 4.76 | 5.25 | 0.50 |

| Subject Group: STEM | 5.31 | 5.33 | 5.70 | 0.38 |

Note: Final column shows the difference between 2020 grades and an average of the previous two years.

Variance explained at each level