DLUHC evaluation strategy

Published 18 November 2022

Applies to England

Foreword

Permanent Secretary

The Department for Levelling Up, Housing and Communities (DLUHC) has a wide ranging and pivotal role in the government’s agenda of levelling up the whole of the United Kingdom. Our work includes investing in local areas to drive growth and jobs, delivering the homes our country needs, tackling homelessness, supporting our community and faith groups, and overseeing the local government, planning and building safety systems.

In July 2022, we published a refreshed Areas of Research Interest document, which set out our strong commitment to having robust evidence in place to enable the department to continue to deliver on its wide-ranging priorities. DLUHC has an active programme of data and evidence gathering, analysis, research and evaluation underway to underpin our key policy areas. We have a strong record for setting in place innovative and trail-blazing evaluations in many of our key policy areas.

This is our first overarching evaluation strategy document, which sets out our commitment to undertake and learn from evaluation activity across all our policy areas. It outlines evaluation activity that is already underway and future plans.

Our aim is to maintain and enhance the culture of evaluation within our department and put in place robust and proportionate evaluation activity for our key programmes, thus ensuring we have a wide range of evidence in place on impact, value for money, and what works.

I am therefore pleased to introduce this strategy which sets our direction on evaluation, and how we are strengthening our culture of evaluation for the future.

Analytical foreword – Stephen Aldridge, Chief Analyst/Chief Economist

Our department has a wide-ranging policy remit, a strong record in evidence-based policy making and remains firmly committed to better and more extensive policy and programme evaluation. There is great interest at all levels of government and across the Civil Service in getting a better handle on what works, and we continue to build on our links with various What Works centres that are closest to our interests.

Technology and the latest analytical methods give us opportunities to test, trial and evaluate on a scale and using data that was previously impossible or so costly that it just wasn’t feasible a few years ago.

The technology we now have means we can exploit, and are exploiting, administrative datasets held by government in ways that we have never done before to better establish what works and what does not and thus improve people’s lives.

At the same time, I have learnt that robust evaluation is or can be a tremendous challenge, whether it is accessing data, quality assuring it, identifying the appropriate method for analysing data once you have it, or interpreting results. Establishing policy or programme impact is hard enough. Going on to establish why a programme had an impact, if it did, is even harder.

High quality evaluation evidence enables us to better target our interventions; reduce delivery risks; and maximise the chance of achieving the desired objectives. Without robust, defensible evaluation evidence government cannot know whether interventions are effective or even if they deliver any value at all. More critically, it can help us understand all effects including unintended, adverse impacts. Evaluation of government’s largest, most innovative strategies, policies and interventions should be thorough, rigorous and routine.

My aim is to have in place impact and other evaluations of all the department’s key programmes. And we have made great progress towards this goal with evaluations recognised by the Evaluation Task Force as examples of best practice in public policy evaluation. This includes our Supporting Families Programme Evaluation and those on Homelessness and Rough Sleeping. On other programmes, we are establishing our track record through initiating scoping studies, such as that on the Housing Infrastructure Fund, or by publishing Monitoring and Evaluation Strategies such as that for the Levelling Up Fund evaluation.

I am keen that we build on our strong track record across all our policy areas and play a strong role in the leadership and promotion of monitoring and evaluation activity across all areas of government. We continue to engage in cross-government groups and are outward looking and open to learning best practice from other government departments, devolved governments, and internationally.

Do let me have your feedback on this Strategy: stephen.aldridge@levellingup.gov.uk

Introduction

1. The purpose of this document is to set out the department’s strategy for evaluation: the systematic assessment of the effectiveness of an intervention’s delivery, its impact and its value for money. The document provides an overarching summary of our current approach and highlights where we see our priority evaluations in this Spending Review period and beyond.

2. It is our first overarching evaluation strategy document for the department. We set out our current position and how we are seeking to build on our strong track record of evaluation in some of our key policy areas - extending across to other areas of our wide policy remit where we have a less established evaluation track record. The document also sets out how we use evaluation evidence to inform policy making. In setting out our aims, we intend to have a stronger evaluation evidence base in place across all our key areas within the next 3 years.

3. The department is committed to evaluating its key policies and to building better, more effective and efficient public policy interventions and public services. High quality evaluation of policy interventions allows for systematic learning of ‘what works’, ‘what doesn’t work’ and ‘why’. This evidence provides greater accountability for our spending decisions and enables evidence-based policy making.

4. This strategy demonstrates the department’s status as a learning and transformative organisation, with a culture of evaluation to support effective policy making. The department is open to learning what works and what does not and being transparent with our findings to promote public trust in our policy making.

5. In particular, the department wants to ensure that place is at the heart of decision-making and that policy is informed by spatial considerations. Departmental evaluations therefore have a strong focus on place and on using spatial data.

6. The strategy complements our recently published Areas of Research Interest (ARI) publication, which highlights our key evidence needs to the broader research community. A link to our ARI document is here: DLUHC Areas of Research Interest 2022.

7. The strategy will underpin departmental decision making and will be delivered within our robust and proportionate governance arrangements. In drafting this strategy document, we have considered how it complements the “Theory of Change” set out by the Evaluation Task Force in relation to its own activities. This document aims to make our own approach to evaluation and evaluation plans more transparent, leading to ongoing improvements in evaluation culture, better evidence-based policy making, and contributing to the increased effectiveness and efficiency of government spending.

Why evaluation is important for DLUHC

8. The Department for Levelling Up, Housing and Communities (DLUHC) has a wide ranging and pivotal role in the government’s agenda of levelling up the whole of the United Kingdom, ensuring that everyone across the country has the opportunity to flourish, regardless of their socio-economic background and where they live. Our work includes investing in local areas to drive growth and jobs, delivering the homes our country needs, supporting our community and faith groups, and overseeing the local government, planning and building safety systems.

9. Our priority outcomes are to:

- Level Up the United Kingdom;

- Regenerate and Level Up communities to improve places and ensure everyone has a high quality, secure and affordable home;

- Enable strong local leadership and increase transparency and accountability for the delivery of high quality local public services; and improve integration in communities;

- Ensure that buildings are safe and system interventions are proportionate; and

- Strengthen the Union to ensure that its benefits, and the impact of levelling up across all parts of the UK, are clear and visible to all citizens.

10. Across this ambitious agenda and broad policy portfolio, evaluation is necessary to understand which interventions work in achieving their outcomes and which do not. Evaluation can also help us to understand what works in different places from across the UK. Furthermore, evaluation can provide insights into how an intervention has been implemented and its impacts, who has been affected, how and why.

11. Doing robust evaluation can help us to make informed decisions on whether to launch, continue, expand, or stop an intervention and thus can help to ensure that we are investing public money wisely. Value-for-money evaluation considers whether the benefits of a policy outweigh the costs and whether an intervention is the most effective use of resources.
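As an illustration of the value-for-money comparison described above, the sketch below computes a benefit-cost ratio from discounted streams of benefits and costs. It is a minimal example rather than the department's method: the function names, the cash flows and the 3.5% discount rate used in the usage lines are illustrative assumptions.

```python
def present_value(flows, rate):
    """Discount a list of yearly amounts (year 0 first) back to year 0."""
    return sum(amount / (1 + rate) ** year for year, amount in enumerate(flows))


def benefit_cost_ratio(benefits, costs, rate):
    """Ratio of discounted benefits to discounted costs."""
    return present_value(benefits, rate) / present_value(costs, rate)


# Hypothetical intervention: a cost of 100 in year 0, benefits of 60 in years 1 and 2.
benefits = [0.0, 60.0, 60.0]
costs = [100.0, 0.0, 0.0]

undiscounted_bcr = benefit_cost_ratio(benefits, costs, rate=0.0)
discounted_bcr = benefit_cost_ratio(benefits, costs, rate=0.035)  # assumed rate
```

A ratio above 1 indicates that discounted benefits exceed discounted costs. Whether the intervention is the most effective use of resources also depends on how the ratio compares with those of alternative options.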

12. Adopting robust approaches to evaluation can help to:

- Provide accountability to investors and stakeholders as to the value for money of interventions;

- Enable local and national partners from across the UK to measure the economic and social impact of policy programmes;

- Inform decisions at all levels about the allocation of resources;

- Generate ideas for improving future policy development through better co-design, consultation, implementation and cost-effectiveness; and

- Develop knowledge of what works in different local areas and why to inform local roll out of interventions.

How evaluation fits into policy making

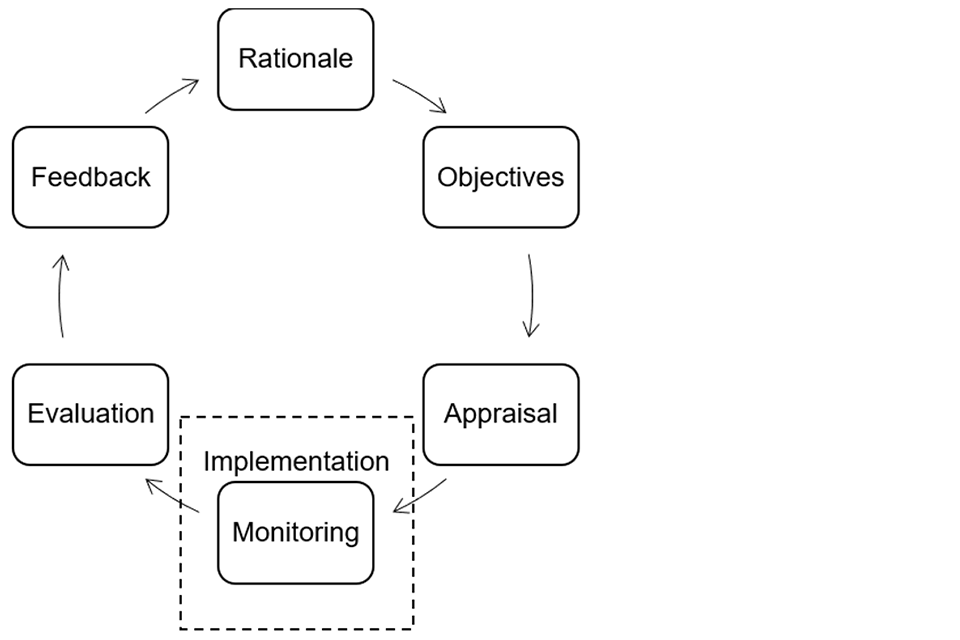

13. Central government guidance, including the HM Treasury Green Book[footnote 1] and Magenta Book[footnote 2], sets out how evaluation is integral to the policy making and delivery cycle. The ‘ROAMEF’ policy making cycle (Rationale, Objectives, Appraisal, Monitoring, Evaluation and Feedback) is depicted in Figure 1.

Figure 1 ROAMEF Policy making cycle

Source: HM Treasury Green Book

14. The department seeks to embed evaluation planning in all new interventions from their inception, to improve design and implementation as well as understanding outcomes. Table 1 outlines how evidence is used throughout the policy making cycle and the key departmental processes that govern evaluation and ensure it is embedded in the organisational culture. There is more information on organisational arrangements, governance, transparency, use of evidence and capabilities in later sections of this document.

Table 1 Departmental activities and processes which support policy making

| Policy stage | Activities | Departmental Processes |
| --- | --- | --- |
| Rationale | Using existing evidence and analysis to inform problem definition | Investment Sub Committee ensures appropriate rationale and objectives |
| Objectives | Define measurable success criteria and draft and iterate logic model and theory of change | Investment Sub Committee ensures appropriate rationale and objectives |
| Appraisal | Cost-benefit analysis of policy options informed by evidence | Investment Sub Committee ensures the economic case is sound |
| Monitoring | Routine data collection during policy implementation by policy teams | Responsibility lies with the senior reporting officer for the programme, reporting to portfolio boards. Portfolio offices integrate information on monitoring of performance and outcomes, provide constructive challenge and facilitate learning |
| Evaluation | Conduct evaluation ideally covering process, impact and value for money – making use of administrative data where possible | Investment Sub Committee ensures the evaluation plan is appropriate and costed. Research Gateway scrutinises the evaluation plan. Evaluations led by analysts working closely with policy colleagues and with specific governance arrangements including an Evaluation Steering Group. Evaluation evidence also reported up to Programme and Portfolio Boards for oversight and continuous learning and development |
| Feedback | Interpret, synthesise and use results to inform future policy decisions | Programme and portfolio boards discuss evaluation findings at key decision points, including ahead of HMT fiscal events. Monitoring and Evaluation Strategy Group and others share best practice, lessons learnt and feed into strategic evaluation approach |

15. Delivering evaluation requires collaborative working and expertise from policy and analytical professionals and finance colleagues. We also work alongside HM Treasury and the Evaluation Task Force to ensure our evaluations are fit for purpose. Some staff are members of the Trials Advisory Panel, and we also draw on this panel for support on particular evaluations. There are more details on our capabilities and how we work with other organisations in a later section.

16. There are opportunities for learning from evaluations throughout the policy making cycle. This includes what we learn works for how policies are designed, what works to improve outcomes overall and what works in individual places.

17. The next section outlines the departmental commitment to rigorous, robust policy and programme evaluation and provides an overview of some of the challenges associated with different methodologies for our policy areas.

Departmental context

Conducting evaluation

18. This strategy builds on the department’s track record for evaluation in a range of priority areas. Previously, we have set out our commitments to evaluating housing policy interventions in the Housing Monitoring and Evaluation Strategy. Work is currently underway to develop strategies for other areas such as Safer Greener Buildings. Evaluation was a key feature of the Levelling Up White Paper, in which we committed to working with a range of stakeholders to publish and visualise sub national data needed for our evaluations, working with academics and industry experts to test and trial how best to design evaluation of local interventions and to introduce more experimentation at the policy design stage.

19. The departmental evaluations of Supporting Families (previously Troubled Families), Homelessness and Rough Sleeping and the Community-Based English Language initiative have been recognised by the Evaluation Task Force as exemplar evaluations of best practice in policy evaluation.

20. Furthermore, our practices and processes have been recognised by the National Audit Office in their recent publications as actions which can strengthen the provision and use of evaluation in practice.[footnote 3] This includes our governance processes on evaluation, which are described in more detail in the subsequent section.

21. We also have a strong track record of transparency and are committed to publishing our evaluations promptly, in line with the Government Social Research Protocol. There is more detail on our transparency in a later section and a list of our recently published evaluations is at Annex B.

22. A recent NAO report concluded that the department has not consistently undertaken formal evaluations of the impacts of its past interventions for stimulating local economies. The department recognises our evidence base on what works for stimulating local economies is limited. We accept that there is more to do to evaluate new interventions from the start and have committed effort and money to do so. There is now an active programme of work underway and planned across this policy area.

Methods

23. Robust evaluation typically makes use of the three different types of evaluation: process, impact and economic (also known as value-for-money evaluation). We use these in conjunction to understand our policy interventions in the round.

- Process evaluation assesses activities and implementation and helps us learn from how an intervention was delivered;

- Impact evaluation assesses the change in outcomes directly attributable to an intervention and helps us learn the difference an intervention has made;

- Economic evaluation (or value for money evaluation) assesses the benefits and costs of an intervention to understand whether it was a good use of resources.

24. Data is an essential component of any evaluation and there is more detail on the departmental approach to data in a later section.

25. Evaluations are designed to test out theories of change, which set out how an intervention is intended to achieve its objectives. Evaluations are therefore designed to incrementally improve our understanding of the underlying causal mechanisms. Designing evaluations around a theory of change helps us to regularly test and question the assumptions behind interventions and maintain a clear line of sight on whether policies are delivering the intended results. There are more details on how the department uses theory of change in a later section.

26. There are several appropriate methodologies for evaluating our policy domains. Wherever possible we will consider randomised controlled trials and quasi-experimental methods. We can make use of trials in policy areas where interventions are targeted at individual citizens, as evaluators may have scope to assign, sometimes randomly, recipients to treatment or control conditions. An example of this approach is our randomised controlled trial of a Community-Based English Language intervention, which used a waiting-list design to demonstrate that the intervention can improve English proficiency and promote social integration of people with low levels of functional English.
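The random assignment step in a waiting-list design can be sketched as follows. This is a simplified illustration only: the function name, participant identifiers and seed are hypothetical, and a real trial would also need to consider stratification, sample size and ethical approval.

```python
import random


def assign_waiting_list(participants, seed):
    """Randomly split participants into an immediate-start group and a
    waiting-list (control) group that receives the intervention later."""
    rng = random.Random(seed)   # fixed seed so the allocation can be audited
    shuffled = list(participants)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


# Hypothetical participant identifiers.
participants = [f"P{i:03d}" for i in range(1, 11)]
immediate, waiting = assign_waiting_list(participants, seed=2022)
```

Outcomes for the two groups are then compared before the waiting-list group starts the intervention. Because allocation is random, differences in outcomes can be attributed to the intervention rather than to pre-existing differences between the groups.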

27. In other areas where programmes provide targeted support to individuals or families, it may be more appropriate or ethical to use quasi-experimental methods. One example is Propensity Score Matching, which allows evaluators to control for differences between the programme and comparison groups by accounting for a range of characteristics. It was used in the Supporting Families Programme Evaluation, where it helped to evidence that the programme delivered positive outcomes against key metrics, including rates of looked after children and juvenile convictions and custodial sentences. In other areas we may use “difference in differences” designs, as discussed below.
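The matching step in Propensity Score Matching can be sketched as below. The sketch assumes that propensity scores (the estimated probability of joining the programme, given observed characteristics) have already been estimated, for example with a logistic regression. The function name and all figures are hypothetical, and it does not reproduce the specification used in the Supporting Families Programme Evaluation.

```python
def att_nearest_neighbour(programme_group, comparison_group):
    """Average effect on programme participants using 1:1 nearest-neighbour
    matching (with replacement) on a pre-estimated propensity score.

    Each group is a list of (propensity_score, outcome) pairs.
    """
    differences = []
    for score, outcome in programme_group:
        # Find the comparison unit whose propensity score is closest.
        _, matched_outcome = min(comparison_group, key=lambda unit: abs(unit[0] - score))
        differences.append(outcome - matched_outcome)
    return sum(differences) / len(differences)


# Hypothetical scores and outcomes.
programme_group = [(0.80, 5.0), (0.60, 4.0)]
comparison_group = [(0.79, 3.0), (0.61, 3.0), (0.20, 1.0)]
att = att_nearest_neighbour(programme_group, comparison_group)
```

In practice evaluators would also check that matched groups are balanced on the observed characteristics, and would bear in mind that matching cannot adjust for differences that are not observed in the data.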

Place based methodological challenges

28. A lot of what the department does is intervene in place, which gives rise to specific challenges around evaluation. Randomised controlled trial approaches are difficult because of the ethics and practicalities of randomly allocating a place to receive or not receive funds, or to roll out or not roll out a particular policy programme or infrastructure project. Often the department seeks to empower local places to decide their own interventions, which rules out evaluators assigning an intervention to a local place.

29. Quasi-experimental designs (QED) can be a useful alternative. These may exploit natural randomness in a system or use statistical techniques to construct a comparison group. For example, in the Towns Fund evaluation we are exploring the option of using Difference in Differences to compare changes in ‘treated’ towns with changes in ‘untreated’ towns (i.e., those that have not received the funding).
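The basic two-group, two-period version of this comparison can be sketched as follows. It is an illustration only: the function name and the outcome figures are hypothetical, and the Towns Fund evaluation design is still being explored. The estimate is valid only if treated and untreated places would have followed parallel trends in the absence of funding.

```python
from statistics import mean


def did_estimate(records):
    """Two-group, two-period difference-in-differences estimate.

    records: iterable of (treated: bool, post: bool, outcome: float).
    Returns (change in treated places) minus (change in untreated places).
    """
    cells = {(t, p): [] for t in (True, False) for p in (True, False)}
    for treated, post, outcome in records:
        cells[(treated, post)].append(outcome)
    avg = {cell: mean(values) for cell, values in cells.items()}
    treated_change = avg[(True, True)] - avg[(True, False)]
    untreated_change = avg[(False, True)] - avg[(False, False)]
    return treated_change - untreated_change


# Hypothetical outcome data for four places, before and after funding.
records = [
    (True, False, 10.0), (True, False, 12.0),   # treated, before
    (True, True, 15.0), (True, True, 17.0),     # treated, after
    (False, False, 9.0), (False, False, 11.0),  # untreated, before
    (False, True, 11.0), (False, True, 13.0),   # untreated, after
]
effect = did_estimate(records)
```

Here the treated places improve by 5 on average and the untreated places by 2, so the estimated effect is 3. Subtracting the untreated change removes trends that affect all places alike.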

30. In some cases, there are also challenges in adopting a QED approach. For example, if a large number of places receive funding and policy interventions it may not be possible to sufficiently control for differences between places which will affect the outcomes. For the UK Shared Prosperity Fund, there may be lots of places receiving funding and policy interventions, which could limit our ability to find a credible counterfactual.

31. Another challenge is developing a meaningful and credible counterfactual. One aspect of this challenge is that the unique characteristics of a place can influence the outcome of a given intervention. Another issue is that for some interventions and funding it is not possible to identify an appropriate counterfactual. This can be due to all places receiving funding or the uncertainties around a counterfactual receiving funding at a later date.

32. Another option is to use theory-based evaluations which rigorously test causal chains which are thought to bring about change, without needing a comparator area. Such designs establish the extent to which hypothesised causal chains are supported by evidence and whether other explanations can be ruled out. Such evaluations centre on a well-defined theory of change and often use qualitative data. The Freeports evaluation is an example of using a Theory-based evaluation, as part of a mixed-methods evaluation, to test several assumptions underpinning the logic of the intervention.

33. Where there are multiple interacting interventions in local places, for example, if a place has been a recipient of multiple growth-related funds, it becomes complex to identify the contribution of particular interventions and whether there are cumulative impacts. In the example given, an alternative might be to compare the impact of different types of funded interventions. It will also be possible in some cases to conduct quasi-experimental evaluations to understand the impact of specific interventions rather than the fund as a whole.

34. Recognising these challenges, feasibility studies are being conducted to explore the options available to robustly measure the impact of the Levelling Up Fund and the UK Shared Prosperity Fund.

Other methodological challenges

35. There are other practical and methodological challenges that the department faces in its portfolio. One issue is timing: when evaluating large-scale investments, such as those in local growth or disadvantaged individuals and families, there is often a lag between providing support and impacts becoming apparent. It can be challenging within the life of a programme’s funding to evidence the impacts. We continue to work with HM Treasury to make the case for evaluation funds beyond the life of programmes which would enable the measurement of impacts which happen later.

36. Given the methodological challenges and complexities in evaluating many of our policy areas it is often necessary to conduct multiple strands of evaluation, combining process, impact and value for money evaluations and utilising multiple techniques within these. Often this will include qualitative evidence drawn from a variety of stakeholders. Qualitative evidence typically answers the ‘how’ and ‘why’ and can therefore provide more in-depth understanding. Often theory-based impact evaluations will use qualitative methods. The advantage of using multiple strands of evidence is that these can be triangulated, or combined, to increase the explanatory power and trustworthiness of our evaluations and give greater confidence in our findings.

37. The next section considers the guiding policies and organisational approach for prioritising evaluation and our organisational arrangements which facilitate it.

Scope of evaluation strategy

What we evaluate

38. The DLUHC ambition is to ensure we have evaluations for all key programmes in each policy area. We also commit to all new programme business cases containing a Theory of Change and evaluation plan set up from commencement. An initial canvass of evaluation activity mapped against our current major spend areas shows that we have a range of evaluation activity underway across our major spend areas and at different levels of development. We are mindful that given resource constraints, we must take a proportionate approach, to account for budgeting and staffing for example, and the feasibility and cost of evaluation activity. We will also ensure we take account of any demands that evaluation activity can place on our delivery partners and local areas.

39. We use a number of criteria to guide our decisions on the most appropriate approach – including evaluation priorities. In doing so we consider:

- Outputs, reach, and impact of the policy (including equalities impacts where appropriate);

- The extent of innovation / novelty inherent in the programme;

- Costs, financial commitments and liabilities incurred by the policy;

- The profile of the policy and likely level of scrutiny;

- Contribution to the evidence base and ability to fill key evidence gaps;

- Feasibility and cost of evaluation activity;

- Impact on delivery partners.

40. Because of these guiding principles, the department is confident that its efforts are focused on priority evaluations of its most influential policies and programmes, as well as those which are innovative or novel. We will continue to discuss progress and priorities at our quarterly internal Monitoring and Evaluation Strategy Group. This information is regularly reviewed by the Chief Analyst, senior analysts and also the Chief Scientific Advisor with any key gaps being highlighted through this process.

41. Our department has a strong culture of evidence based policy making, and is keen to share the evidence it collects to promote cross government decision making and the most efficient use of resources. Evaluation evidence is also central to making the case for future funding with HMT.

Organisational arrangements

42. To deliver good quality evaluation, evaluation is embedded into the key departmental decision-making processes. This section outlines the organisational capabilities and governance procedures that make up the assurance functions for our evaluations, as set out in Table 1.

43. The processes set out in this section are generally followed and the publication of this strategy will further help to embed these processes, to ensure evaluation is planned for at the outset of policy development.

Capabilities

44. Evaluations are typically developed alongside policy development and co-designed by analytical, policy and project delivery professions. We will continue to build on this. For example, there could be a new role for data delivery specialists, given the way that new technologies are developing and the potential these developments could bring to help with data collection.

45. Analysis and Data Directorate is led by the Chief Analyst, Stephen Aldridge, and is home to a range of multidisciplinary analytical experts who contribute to the overall evidence for the department.

46. Within the Analysis and Data Directorate there is a small central evaluation team, staffed by social researchers and economists, who provide advice and support to other analytical and policy teams and are also leading on some areas. Each policy area has its own dedicated analytical division and evaluation activity is taken forward by analysts in these policy facing teams. For example, there is a Housing and Planning Analysis Division and a Vulnerable People Data and Evaluation Division which respectively support policies on all housing and planning matters and policies on homelessness and interventions including Domestic Abuse, Changing Futures and Supporting Families.

47. In addition, there is a dedicated analytical division working alongside programme teams to support the range of evaluations on local growth and levelling up. There is also a Spatial Data Unit employing a range of data scientists and engineers tasked with materially improving how we collect, share and visualise sub-national data and use it within our evaluations. Over time, we hope to build on the way that new digital tools are allowing for the collection and analysis of data in more streamlined ways.

48. Where possible the department seeks to make data available, in useable formats, to transform its accessibility and give local partners or other interested parties the opportunities to conduct evaluations.

49. Within the Policy Profession, there is greater emphasis placed on evaluation skills, supported by the recent refresh of the Policy Profession Standards which are explicit about the requirement for using evaluation throughout the policy process to generate evidence and learning that informs decisions. These standards emphasise the need for routine mapping of policy outcomes, the importance of trial-based policy designs, generating baseline data to enable measurement and having proportionate and transparent evaluations and sharing the learning from these.

50. We have a strong track record of ongoing learning and development within the analytical community and across the department more generally. Looking forwards, we will review whether more can be done to support capability building on evaluation for analysts and non-analysts.

51. Evaluation approaches are routinely designed in tandem with refinements to the policy design. A theory of change is a necessary pre-requisite, co-designed by Policy and Analytical professionals. A theory of change must clearly articulate the process by which the policy intends to achieve its outcomes. The department has recently developed an internal theory of change template, with supporting guidance. The guidance clarifies how a theory of change differs from a logic model, which sets out what is expected to happen but does not detail the causal mechanisms or the relative contributions of different elements on the outcome.

52. One important aspect of developing a theory of change is setting out what metrics will be collected to provide evidence of the degree of success in achieving specified outcomes.

53. There are a variety of models for commissioning evaluations, with some teams having capacity for some in-house evaluation, others who commission independent evaluations and others still with a hybrid approach. Commissioning evaluations can ensure independence and gives confidence that the department is not ‘marking its own homework’. Recently, colleagues in Housing and Planning have developed a ‘call-off contract’ approach for their commissioning and we are piloting whether such an approach offers efficiencies compared to separate commissions for each research or evaluation project.

Data

54. The department uses a range of data within evaluations. Evaluations may utilise monitoring data, which comprises the regular data collection which provides oversight of how an intervention is delivered. For example, monitoring data may include how many houses are being built or how an area is spending its programme funds. Monitoring data is a key aspect of project and programme delivery. It can enable early identification of problems with delivery, so that changes can be made if necessary, and it contributes to process evaluations, which seek to learn lessons from programme delivery. The department is working to continuously improve the monitoring data it collects. For example, work is being undertaken, in conjunction with partners, to refine and develop the monitoring data collected on rough sleeping to ensure progress can be properly understood and areas where further improvements are needed can be appropriately prioritised.

55. The department has a strong track record of using administrative data to facilitate the delivery of evaluations. For example, the Supporting Families Evaluation was ground-breaking in linking administrative datasets held by a range of government departments to allow us to carry out analysis at the individual and family level. Using administrative data in this way can be cost-effective and limit the requirement for complex and expensive data collection activities.

56. The department is going further in this area, with a new project ‘Better Outcomes from Linked Data’ (BOLD) which will work across Whitehall to tackle policy challenges and develop quality evidence on what works in substance misuse, homelessness, victims of crime and reoffending. This work is funded by the HMT Shared Outcomes Fund.

57. The Local Data Accelerator Fund aims to improve the use of data to strengthen the evidence base on supporting vulnerable children and families. An evaluation of this project is underway to understand the processes of improving capability in data systems and data linkage and assess its usefulness and impact.

58. The department is also expanding its use of spatial data, with a dedicated new Spatial Data Unit to transform the use of data to help deliver levelling up across the UK, by improving our ability to map and track local areas’ progress on levelling up and to understand how government interventions interact with places across the UK at a local level. Specifically, this unit will focus on four key objectives: (1) to map and track public spending outcomes at local level, (2) to design the government’s Levelling Up Data Strategy, (3) to build DLUHC and cross-government data capabilities, and (4) to provide data and data science support to DLUHC teams.

59. The department has ambitions to use the Office for National Statistics Integrated Data Service to unlock the full potential of data and use it for better analysis and evaluation.

60. The department recognises that monitoring requirements across different funding streams can combine to form additional requirements for delivery partners. We are therefore working to streamline and simplify the requirements we put in place, whilst ensuring there are adequate opportunities to learn about what works. Related to this, there is further work going on within DLUHC to make better use of up to date technologies and standards, which should present opportunities to streamline and improve reporting.

Governance mechanisms including Research Gateway and programme boards

61. Common to each evaluation is the governance framework which demonstrates that Monitoring and Evaluation is embedded from the outset. The first step is within Business Cases for policy programmes which go to the Investment Sub Committee. Here the department has recently revised its templates to include a dedicated section for evaluation requirements, including requirements to ringfence finance and staff resource, and to justify the approach and costs. Where officials do not feel evaluation is warranted this must be justified.

62. Following the Investment Sub Committee, which ascertains that evaluation is appropriately described and costed within the policy business case, all evaluations must then be scrutinised by the internal DLUHC Research Gateway, which is responsible for approving all monitoring and evaluation activity in DLUHC. This is a panel of experts, chaired by the Chief Analyst, which includes senior analysts from each of the analytical professions and representatives from the Chief Scientific Advisor’s office, commercial, digital, data protection, cyber security and central finance teams. The panel assesses proposals to ascertain whether they have necessary approvals, appropriately robust methodology and follow the relevant ethical procedures.

63. The panel determines whether a proposal is in line with the departmental priorities and explores the efficiency of the proposed procurement and funding model. The Research Gateway panel ask for regular progress updates, feedback on interim findings and act as a further quality check on final reports.

64. The quality assurance function of Research Gateway has recently been recommended by the NAO as evidence of good practice to improve the quality of evaluations and make sure findings are valid.[footnote 4]

65. Further to the Research Gateway, evaluation activity is sponsored by the relevant programme and portfolio boards that oversee delivery for those policy areas. Such boards are critical for communicating evaluation findings to senior leaders at key decision points, with a view that this feeds into policy decisions. This is identified as good practice and our Supporting Families Evaluation was included in a recent NAO publication as an example of utilising this approach to inform the decision on the future of the policy.[footnote 4]

66. As well as quality assurance being an integral part of each evaluation, and further augmented by our own internal processes, additional quality assurance can be provided throughout the life cycle of an evaluation through advisory groups involving key stakeholders. Such groups may involve academics and analytical experts from other government departments or elsewhere. This type of assurance can provide broad oversight of project delivery and advice, expertise and quality assurance of publications. This can be supplemented by expert peer review, by evaluation or subject experts, who are unconnected to the evaluation. Such experts can offer high quality challenge and their expertise and independence can enhance the credibility of an evaluation.

67. In 2020 the department established a Monitoring and Evaluation Strategy Group to drive high standards of policy and programme evaluation in the department, ensure continuous learning reflecting on evaluation findings, share best practice and improve the strategic approach to and planning for evaluation. The group is chaired by the Chief Analyst, Stephen Aldridge, and includes representatives from the Policy Profession as well as Analysts from the range of professions. The group was recently used as a case study of good practice by the NAO.[footnote 4]

68. The department has also appointed a Chief Data Officer and is in the process of establishing a Data Board to oversee both its data strategy and the ongoing development of its data infrastructure. These will provide invaluable support for DLUHC’s evaluation efforts.

Transparency

69. It is important that our evaluative evidence is trustworthy, high quality and adds value so that it can effectively support open and transparent policy making, as set out in the UK Statistics Authority evaluation statement.

70. To facilitate trustworthiness the department is committed to sharing information responsibly and being open about our decisions, plans and progress on evaluation.

71. In line with the Government Social Research Publication Protocol, the department is committed to publishing the main finalised reports of all its evaluations. This includes the final reports to be published on gov.uk. The department seeks to publish any associated documentation (trial protocols, completed interim reports, data collection measures, data sets etc.) following appropriate data protection procedures. A list of recent published evaluations is at Annex B.

72. The department is committed to quality, including using appropriate quality data and methods and quality assurance processes including expert reviews. All evaluation publications include information on the quality and limitations of the project. By publishing the evaluation protocols, results and data we ensure others can scrutinise, replicate our analyses and undertake new analyses.

73. The department is currently reviewing its publication protocols, to ensure we are following cross-government protocols, and have an established and clearly understood process in place. The department works closely with colleagues in the Evaluation Task Force to ensure that we widely disseminate the findings from our top-rated interventions so that these can inform good practice in evaluation across Whitehall. We have an internal register of evaluations. In addition, we will make use of the Evaluation Task Force’s Evaluation Registry (when it is available) on gov.uk to improve visibility of our evaluations.

74. We have strong links with a number of the What Works Centres, who have contributed to the thinking and design of some of our evaluations, but also use our evaluation evidence as part of evidence reviews. In addition, we carry out our own internal evidence reviews and synthesis work, and make the best use of a wide range of data, including evaluation evidence, as we develop new policy proposals. “Meta evaluations”, which bring together a range of evaluation evidence from different programmes, can be useful in learning from past programmes and interventions for future initiatives.

75. We also have an active track record in promoting sharing of good practice examples and learning from each other across government. For example, we have shared examples of DLUHC evaluations on homelessness and also on High Streets/Towns Fund at Government Social Research conferences.

76. We have an active approach to academic engagement and have recently provided examples of this to support a cross-government review of academic engagement using examples for homelessness and also integration. Engagement varies according to different projects, but can include providing synthesis reviews, evidence reviews, advice on research design and possible methodological issues, acting as expert sounding boards on particular issues e.g. ethics, and providing an additional layer of quality assurance to our analysis.

Engagement with other organisations

77. The department recognises the delivery of many monitoring and evaluation activities will be in collaboration with, or led by, other organisations. The department has several strategic initiatives to ensure engagement with other organisations, increase transparency and share good practice in monitoring and evaluation. This includes a regular seminar series of external experts, bespoke roundtables and practitioner sessions. We collaborate with other departments, and are members of the cross-government evaluation group.

78. On housing, the department works with Homes England, an executive non-departmental public body, sponsored by DLUHC to accelerate housing provision. We collaborate with Homes England to determine appropriate responsibilities for evaluations, considering resourcing and the relative merit of the agency leading delivery of an evaluation versus DLUHC.

79. DLUHC and Homes England have an agreement as to whether evaluations of the interventions the agency delivers will be led by the agency itself or managed by the department. Annex A shows ongoing evaluations led by Homes England, alongside those led by DLUHC.

80. Regardless of the evaluation lead, DLUHC and Homes England work closely together on evaluating all housing interventions the agency is involved in delivering, through membership of each other’s study steering groups. All process, impact and value for money evaluations led by the agency are published, following sign off through formal governance channels, including approval by the Senior Responsible Officer for Homes England’s evaluation work: the agency’s Chief Economist.

81. The department also works collaboratively with the Greater London Authority on evaluating several housing interventions it helps deliver. Where appropriate we encourage third parties to monitor and evaluate the impact of locally driven approaches, for example on housing and planning, which we are able to use to enhance our understanding of local housing markets.

82. The department regularly engages with other government departments via advisory groups. For example, the Levelling Up Fund monitoring and evaluation group includes the Department for Transport (DfT) and the Department for Digital, Culture, Media and Sport (DCMS).

83. Further, the department has good links with the Cross Government Evaluation Group (CGEG) which brings together analysts from across Whitehall to share good practice and discuss emerging methodologies.

84. We meet regularly with the Evaluation Task Force to ensure our evaluation plans are fit for purpose and ensure we have the evidence needed to inform HM Treasury ahead of future fiscal events.

85. In addition, we have links with centres of academic excellence and What Works Centres that are closest to our interests including the What Works Centre for Local Economic Growth, the Centre for Homelessness Impact, the What Works Centre for Wellbeing and the ESRC funded UK Collaborative Centre for Housing Evidence (CaCHE). The What Works Centres routinely publish evidence syntheses and literature reviews on the latest state of the evidence. The department ensures that it learns from such evaluations and evidence reviews, including those that it does not directly commission.

86. In the Levelling Up White Paper we committed to working with local leaders from across the UK and devolved administrations to bring together evidence across a range of policy areas. Devolution in the UK enables different approaches to be taken to tackling common challenges, and therefore offers potential for all levels of government to learn from each other about “what works” in different policy areas.

87. The department works with local authorities to support their evaluation activities. This can include providing support for theory of change workshops, data explorations or ‘deep dives’. In addition, the What Works Centre for Local Economic Growth has a programme of practical workshops and training events which support local authorities with how to evaluate. We are considering how we can streamline and simplify monitoring requirements on local authorities and others, while at the same time supporting and encouraging rigorous evaluation.

88. The department is open to exploring innovative ways in which it can engage with wider academia to support more effective policy making. This might include knowledge sharing and networking events on particular themes or topics. To further facilitate the transparency of the department’s priorities for monitoring and evaluation the Areas of Research Interest document was recently published, and outlines a range of possible next steps in relation to external engagement.

Upcoming evaluation priorities

89. This section provides a review of progress to date in generating evidence for our priority outcome areas and outlines our plans to build on these.

Regenerate and Level Up communities to improve places and ensure everyone has a high quality, secure and affordable home

90. The department requires more evaluation evidence to understand how effective levelling up interventions, such as skills, employment support, business support and capital support are in changing outcomes for people across different local areas. We also have several evaluation priorities relating to housing.

Levelling Up and Local growth funds

91. To date, local growth funds such as the European Regional Development Fund (ERDF), Regional Growth Fund (RGF) and Coastal Communities Fund (CCF) have been evaluated.[footnote 5] This particular strand of evaluation is generating useful evidence, not just on ERDF, for example, but also for current and future area-based regeneration programmes. However, the department has not consistently evaluated other local growth funds and is therefore making plans to improve this.[footnote 6] We plan to evaluate the Towns Fund (Town Deals and Future High Streets Fund) using counterfactuals to estimate the extent to which economic, social and environmental outcomes have changed as a result of the fund.[footnote 7] This will explore whether local economies, quality of life and wellbeing, resident incomes, business profitability, employment, footfall and perceptions of place have improved. A process evaluation will ascertain how two different fund applications have worked and will share lessons which can be implemented throughout the life cycle of projects. A value for money evaluation will assess the impact of expenditure on economic regeneration.

92. The Monitoring and Evaluation Framework for the Levelling Up Fund sets out our approach to gathering evidence on what works in upgrading local transport, regenerating town centres and high streets, and investing in cultural and heritage assets. The programme-level theory of change sets out the overall logic underpinning the Levelling Up Fund, which the evaluation will seek to test. A forthcoming feasibility study will fully assess the options for impact and value for money evaluations, which will be methodologically challenging given the complexity of the delivery model and potential for successful places to be in receipt of other funds with similar aims and objectives.

93. Freeports are national hubs for global trade and investment across the UK designed to promote regeneration, skills and innovation. The plan is to deliver a process, impact, and value for money evaluation, guided by a mixed method, quasi-experimental and theory-based approach. This will enable maximum learning for a complex programme, in which traditional econometric evaluation methods may not always be feasible. We have publicly committed to publishing an annual report on Freeports’ progress based on the findings of M&E, which will be submitted to Parliament by 30 November each year, and we intend to publish our final evaluation in 2026.

94. We are devising the evaluation framework for the UK Shared Prosperity Fund (UKSPF), supported by the What Works Centre for Local Economic Growth and the Evaluation Task Force. Discussions are ongoing about how to balance evidence of the success and impact of individual interventions, such as business employment support programmes or public realm improvements, against evidence of the programme-wide impact of UKSPF. At the intervention level we want to understand, through impact evaluation, what works for local growth and local pride. At the programme level we are currently exploring the feasibility of causal impact evaluation, although given the design of the fund there are significant difficulties in establishing a suitable counterfactual. A key challenge across these funds is to understand the quantifiable and direct impacts of particular funds, where places receive multiple funds. This will be further explored in feasibility studies to inform our strategic approach.

95. Levelling up and local growth is a dynamic area with several interventions being introduced in a short space of time. We will continue to review and prioritise our evaluation activities for these programmes, in line with the criteria outlined in para 39.

Housing and planning

96. The department has a very active programme of work underway spanning housing and planning across the £65.5 billion of interventions it is currently delivering. We have previously published two evaluations of Help to Buy, to evidence the additionality of the scheme. These demonstrated Help to Buy as a significant policy for increasing home ownership, which was successful in boosting both demand and supply.[footnote 8]

97. One of the key improvements we are making within housing is to strengthen evaluation within the Affordable Homes Programme. The department has published a scoping study for one of the largest housing interventions, the Affordable Homes Programme 2021-2026, which outlines how to conduct a robust process, impact and economic evaluation. The full evaluation has been commissioned and will follow.[footnote 9]

98. The department has completed a scoping study to understand how best to evaluate the Housing Infrastructure Fund, a grant programme which funds infrastructure to unlock new housing sites. Following that, an initial process evaluation has now been completed; it is due to be published shortly and offers implementation and delivery lessons for future policy making. It details what happened in advance of bids being awarded as well as what happens in spending and delivering the infrastructure and housing outputs. An impact evaluation of the fund is currently being commissioned, with a further process evaluation planned.

99. Initial evidence has been published on the possible impacts of improving quality standards in supported housing and this will be followed by more robust evaluations as the Supported Housing Improvement Programme continues.[footnote 10] Future evaluations of any leasehold reform, Private Rented Sector Place-based Quality pilots, Social Housing Quality programme and Help to Buy are planned.

100. On planning reform there is considerable interest in understanding whether the intended outcomes of planning reform have been achieved. Long-term outcomes for the programme are that:

- New and existing developments are better designed (‘beautiful’) places that support better living.

- Built and natural environments have more sustainable environmental impacts and are in better environmental condition.

- Communities are more positive about development, which is better shaped to suit community needs.

- Development is accompanied by increased access to infrastructure and affordable housing (as locally required).

- More development, in the places and for the uses local economies & leaders need.

- The planning system has more capability and capacity, works faster and delivers outcomes more effectively.

101. Due to the complexity of planning reform and the challenges of designing a robust evaluation, a scoping study will take place in Winter 2022, before launching a full, multi-year evaluation of the system changes in quarter two of 2023. DLUHC is committed to assessing the holistic impact of previous and future planning reforms and evaluation plans are being developed. As part of successful implementation of full planning system change, DLUHC has undertaken a series of pilot projects, covering design codes, digital local plans, PropTech engagement, digital planning tools and neighbourhood plans. These are being individually evaluated, with the first evaluation published in November 2022 covering digital local plans. A further evaluation has recently been published in June, covering phase 1 of the design codes pilot.

Enable strong local leadership and increase transparency and accountability for the delivery of high quality local public services; and improve integration in communities

102. This priority outcome includes evaluations of initiatives for homelessness, families and individuals experiencing multiple disadvantage and integration in local communities. This is an ambitious area of monitoring and evaluation work with a range of evaluations in progress or set to begin, as well as plans to build on and improve existing data on rough sleeping. On Homelessness and Rough Sleeping, evaluations of the Next Steps Accommodation Programme and Housing First are in progress, with early findings from process evaluations feeding into planning a new programme of research.[footnote 11]

103. The proposed Homelessness and Rough Sleeping Research Programme will build on previous evaluation evidence, such as the London Homelessness Social Impact Bond and Rough Sleeping Initiative (RSI) evaluations. The research programme will include new research and evaluation, but will also take account of evaluations funded by other government departments and use data from existing data collections, such as the Homelessness Case Level Information Collection (H-CLIC) to measure outcomes. New research projects include: a system-wide evaluation to better understand and assess the interaction between and the contribution of core initiatives within the wider Homelessness and Rough Sleeping System to delivering outcomes and value for money; and a Test and Learn programme to build our evidence of ‘what works’ from a range of initiatives at strategic points within the system and build capabilities for evidence use and generation amongst partners. Feasibility studies will inform the programmes of work.

104. The department has a strong body of evidence on the impact of Supporting Families. This work has been highly praised for its innovative use of administrative data which have evidenced impact and enabled further funding to be secured. Future planned evaluations of Supporting Families will seek to test effective practice and service delivery models through efficacy trials. This is an example of the department’s commitment to continue building the evidence base, ensuring we are optimising policy delivery and making best use of public funds.

105. Changing Futures is a programme funded by the HMT Shared Outcomes Fund. The programme aims to improve outcomes for adults experiencing multiple disadvantage, including combinations of homelessness, substance misuse, mental health issues, domestic abuse and contact with the criminal justice system, by focussing on creating change at the individual, service and system levels. The programme has a robust evaluation in place to build the evidence base at all three levels and inform future work on multiple disadvantage in government. Qualitative and quantitative fieldwork is currently in progress and we are also establishing the feasibility of using quasi-experimental designs within our impact evaluation.

106. We are in the process of setting up an evaluation of the Domestic Abuse Duty which introduced a requirement for local authorities to provide support for victims of domestic abuse and their children in 2020. We have carried out a feasibility study to explore the options for an impact evaluation to inform the main evaluation. This includes an integrated process and theory-based impact evaluation alongside continued scoping for an additional quasi-experimental design. Data collection will focus on in-depth case study development at the Local Authority level, feedback from service providers and the lived experience of service users. An evaluation of the Respite Rooms pilot programme is in the early phase of delivery. The service provides short and intensive trauma-informed support and places a particular emphasis on women affected by domestic abuse with multiple complex needs who are at risk of homelessness or sleeping rough and unlikely to approach statutory services. The evaluation includes a process evaluation consisting of qualitative research interviews with local area stakeholders and service users, alongside in-depth case studies in a selected number of pilot areas. There is also scope for a quantitative impact evaluation with a comparison group formed from individual level service users either in Respite Room Local Authorities or matched comparators in other local authorities.

107. On the integration and communities agendas, the focus is on developing an evidence base to inform new policies and working across government to identify and develop suitable metrics to demonstrate the longer-term impacts of our policies on strengthening pride in place, integration, tackling extremism, boosting resident wellbeing and social capital. Currently, the Partnership for People and Places evaluation is exploring how flows of funding from central government to local authorities are used to tackle persistent social integration/community challenges. The insights from this research should help Whitehall departments organise place-based funded programmes more efficiently and effectively. An example of planned research is a commissioned process and impact evaluation of the UK-wide Community Ownership Fund, to better understand the social and economic impacts of different kinds of assets (as social infrastructure) on the lives of communities across the four nations.

108. To further develop our evidence on supporting local public services, an evaluation of the Local Resilience Forum (LRF) Funding Pilots (2021-22) is nearing completion. The aim of the funding pilot was to gather evidence on the potential efficacy, challenges and opportunities of HMG providing a degree of central funding to LRFs following the Integrated Review commitment to consider strengthening the role and responsibilities of LRFs in England. DLUHC’s Resilience and Recovery Directorate (RED) leads on the LRF commitment, working closely with the Cabinet Office (CO). Funding of LRFs has since been agreed for a further 3 years, until 2025. A 3-year evaluation will be commissioned that will cover process, economic and longer-term outcomes. Work is also underway on piloting, evidence and an evaluation strategy for wider work on this key Integrated Review commitment.

Ensure that buildings are safe and system interventions are proportionate

109. On building safety, the department has focused on setting up robust monitoring arrangements for key programmes including the Aluminium Composite Material (ACM) remediation and the Building Safety Fund. Work is underway to scope more detailed evaluation activity which could consider the effectiveness of funds, and the benefits, risks and transferable lessons from key funded building remediation programmes. In advance of confirming the final evaluation specification, the department will assess which of the distinct strands are viable options for evaluation, paying attention to the quality of the evidence that can be produced when the options for a robust counterfactual are limited. Work is underway to harmonise and improve the monitoring data that is collected from each strand so that robust, real-time performance monitoring can occur. Further scoping activity is underway, including agreeing overarching programme outcomes and objectives, and identifying evaluation priorities and evidence gaps in a series of Evaluation Team led activities and workshops. The department will also assess which of the complementary activities, such as the Waking Watch Relief Fund and recently updated advice on External Wall System assessments, can feasibly be evaluated either as part of, or separate from, remediation activities.

110. The other priority is to evaluate the implementation of the new Building Safety Regulator and National Regulator of Construction Products, both of which must be independently reviewed within 5 years of the Building Safety Act receiving Royal Assent (i.e. by April 2027). Work to define the indicators of success has started, and a theory of change has been developed. The department is working closely with evaluation colleagues at the Department for Business, Energy and Industrial Strategy and the Health and Safety Executive to coordinate evaluation activity as both intend to support or deliver evaluation in this space. The key challenge for this area is the limited availability of baseline data to assess the future impact of changes to the system, and the level of complexity that is likely to exist in radically changing a regulatory environment. This in turn creates challenges in how to measure success. The department will be running a consultation with policy stakeholders to assess what value can be derived from an evaluation to inform the ongoing development of the new regime, and to ensure the indicators devised are able to meaningfully demonstrate the successful implementation of the new regime.

Strengthen the Union to ensure that its benefits, and the impact of levelling up across all parts of the UK, are clear and visible to all citizens

111. Within this priority outcome, the key area for evaluation is to develop evidence on the safe, secure and effective running of elections including through the Modern Electoral Registration Programme and the Electoral Integrity Programme. The evaluation of the Modern Electoral Registration Programme is underway and is planned to run until 2023. The evaluation combines qualitative and quantitative data. In each year we are running a survey on the electoral service teams’ experience with canvass reform, which is baselined against a pre-reform survey, and conducting interviews and focus groups with electoral administrators and Electoral Registration Officers.

112. Plans are underway for the Electoral Integrity Programme evaluation, which aims to investigate implementation of the programme and its effectiveness against the stated strategic objectives. The evaluation aims to be proportionate and ensure that sufficient data is collected to understand impacts and implementation. Cost-benefit analysis will also be carried out as part of the evaluation, as well as in the Impact Assessments for the Statutory Instruments in the secondary legislation of the Elections Act.

113. We also have a monitoring programme that underpins our programme of work to build devolution knowledge and expertise across the UK Civil Service. This includes an annual capability survey and regular surveys of our learning activities, conducted in house, and qualitative and quantitative analysis to support the development of intergovernmental loans and secondments. We conduct quarterly analysis of intergovernmental relations and ministerial meetings.

Conclusions and next steps

114. This is our first departmental evaluation strategy document and sets out our current position and strong track record in some key policy areas, and how we are actively building on this and extending evaluation activity to all our key policy areas.

115. It provides an overarching summary of our current approach and highlights where we see our priority evaluations in this spending review period and beyond. It also covers the processes which will ensure that we have cross-cutting oversight of evaluation activity across DLUHC, that our evaluations are proportionate and well planned, and that we continue to innovate and make the best use of emerging technologies.

116. The overall aim of this is to further inform and influence better evidence-based policy making and decision making across the department. The strategy document will be a key tool for engaging with and learning from analyst and policy colleagues in the department, across government and with external stakeholders.

117. As priorities change and new methodologies become possible, the balance and focus of the evaluation programme may change. Consequently, the strategy will be reviewed internally on an annual basis, with the document updated as necessary within 3 years.

118. Collaboration with partner organisations will be important to support more effective evaluations and inform policy making. If you have views or any queries about this strategy document please contact Lesley.Smith@levellingup.gov.uk.

Annex A: Ongoing or planned evaluations

Levelling Up and Local Growth funds

The list below sets out the planned & resourced Local Growth programme evaluations and is accurate as of September 2022.

Underway

- Investment Funds Evaluation: 5 yearly independent Impact evaluation for each Area (no National Evaluation). A process evaluation will also take place.

- ERDF 2014-20 National Evaluation Phase 3: Impact and Economic evaluation (includes Welcome Back Fund)

- Freeports: Process, Impact and Economic evaluation

- Towns Fund (Town Deals and Future High Street Funds) Evaluation: Process, Impact and Value for Money evaluation

- UK Levelling Up Fund: Process, Impact and Economic evaluation

Planned

- Community Renewal Fund: Process, Impact and Value for Money evaluation

- Local Growth Fund: Process and Impact (depending on feasibility)

- Growth Deals in Devolved Administrations (Borderlands): Evaluation planning underway

- UK Shared Prosperity Fund: Process, Impact and Economic evaluation

Housing and Planning

Underway

- Affordable Homes Programme 2021-2026: process, impact and VFM evaluation

- Housing Infrastructure Fund (HIF): process and impact evaluation

- Supported Housing improvement programme evaluation

Planned

- Brownfield Housing impact evaluation

- Help to Buy: past and future process, impact and VFM evaluation

- Leasehold and Commonhold reform: Evaluation planning underway to include process, impact and value for money evaluation

- Planning reforms: Scoping study, followed by holistic process, impact and value for money evaluation

- Private Rented Sector Place-based Quality pilots: multi-year process and impact evaluation

- Social housing quality programme: Evaluation planning underway with full process, impact and VFM evaluation to follow

Homes England led projects

- First Homes - Phase 1 and Phase 2 Pilots process, impact and value for money evaluation

- Help to Build process, impact and value for money evaluation

- Land Assembly Fund, process, impact and value for money evaluation

- Levelling Up Home Building fund: process, impact and value for money evaluation

- Local Authority Accelerated Construction, process, impact and value for money evaluation

- Shared Ownership and Affordable Homes Programme 2016-21: Impact Evaluation

- Public Sector land acquisitions 2015-20, process, impact and value for money evaluation

Homelessness and Rough Sleeping

Underway

- Housing First Pilots: outcome evaluation

- Data Accelerator Fund: Process and Impact evaluation

- Changing Futures evaluation: system level process evaluation, quantitative impact evaluation and cost effectiveness evaluation planned

- Respite Rooms Programme process and impact evaluation, including a possible quantitative impact evaluation using an individual level matched comparison group

Planned

- Homelessness and Rough Sleeping system-wide evaluation: process and impact evaluation (feasibility being explored)

- Test and Learn Programme: impact evaluation (feasibility being explored)

- Supporting Families efficacy trials: impact evaluation using quasi-experimental methods

- Domestic Abuse Duty: Integrated process and theory-based impact evaluation with additional scoping for a quasi-experimental design

- Supported housing improvement programme: impact and process evaluation

Integration and Communities

Underway

- Partnership for People and Places Programme: Process and Impact Evaluation

- Community vaccine champion evaluation: Process and Impact Evaluation

Planned

- Community Ownership Fund: Evaluation planning underway for process and impact evaluation

Building Safety and Net Zero/ Future Homes Standard

Underway

- Programme outcome/objective setting is running across the portfolio of remediation strands, with the intention of bringing these together into a single, coherent programme for future evaluation.

- Review and development of remediation portfolio monitoring data to report on the performance of the strands individually, and with the aim of being able to collect and compare similar data across strands. This project is being developed for both real time reporting for the Secretary of State and with an eye on future evaluation activity.

- Desk review to develop our departmental position on what constitutes good regulation. This will help develop a Theory of Change for the Act.

- Finalising a Memorandum of Understanding between the Health and Safety Executive and the Department for Business, Energy and Industrial Strategy on agreeing and publishing research and evaluation related to the Building Safety Regulator and National Regulator of Construction Products.

- Developing and maintaining a cohort of industry contacts involved in Net Zero pilots where opportunity for data collection and/or evidence gathering may occur in the future. Projects have been identified in Birmingham and Walthamstow and links made with the relevant research and/or project leads.

Planned

- Submitting Evaluation Framework to internal Research Gateway review so that we can progress to a Feasibility Study phase

- Consultations with policy leads and partner departments (BEIS and HSE) on the evaluation needs for the Building Safety Regulator.

- Commissioning of external expert on regulatory systems to help propose methodological approaches to evaluating regulation where complexity is a factor.

Resilience and Recovery Directorate (RED)

Underway

- Local Resilience Forum (LRF) Funding pilots (2021-22): Process, Impact and Economic evaluation

Planned

- Local Resilience Forum Funding (2022-2025): Evaluation planning underway

Union and Constitution

Planned

- Common Frameworks: Evaluation planning underway to include process, impact and economic evaluation

- Electoral Integrity Programme: Evaluation planning underway

- Modern Electoral Registration Programme evaluation: Process evaluation

Annex B: Recent published evaluations and strategy documents

Levelling up and local growth

- UK Community Renewal Fund Monitoring and Evaluation Guidance (2021)

- Towns Fund Monitoring and Evaluation Strategy (2021)

- Levelling Up Fund Monitoring and Evaluation Strategy (2022)

- Freeports Monitoring and Evaluation Strategy (2022)

- Coastal Communities Fund Evaluation (2022)

Local Growth - European Regional Development Fund (ERDF)

- ERDF 2014-2020 Ex-Ante Evaluation (2014)

- ERDF 2007-2013 Economic Impact Report (2015)

- ERDF 2007-2013 Economic Efficiency and Policy Report (2015)

- ERDF 2007-2013 Localism Report (2015)

- Scoping of the National Evaluation of the 2014-2020 ERDF programme for England (2018) ERDF scoping report 2014-2020 (2018)

- ERDF 2014-20 Process Evaluation (2022)

Housing and Planning

All of our latest housing and planning evaluation reports can be found here.

- The value, impact and delivery of the Community Infrastructure Levy (2017)

- Evaluation of Help to Buy Equity Loans Scheme (2018)

- Housing Monitoring and Evaluation Strategy 2019

- Quality of homes delivered through change of use permitted development rights (2020)

- Incidence, Value and Delivery of Planning Obligations and the Community Infrastructure Levy in England in 2018-19 (2020)

- Evaluation of Midlands Voluntary Right to Buy Pilot (2021)

- Housing and Infrastructure Fund (HIF) evaluation scoping study (2021)

- Evaluation of the Supported Housing Oversight Pilots (2022)

- Affordable Homes 2021-2026 Scoping Study

Homelessness and Rough Sleeping

- London Homelessness Social Impact Bond Evaluation (2018)

- Fair Chance Fund Evaluation (2018)

- Skills, Training, Innovation and Employment (STRIVE) Evaluation (2018)

- Homeless Prevention Trailblazers Evaluation (2018)

- Rough Sleepers Initiative Evaluation (2019)

- Homelessness Reduction Act Review (2020)

- Housing First interim process evaluation (2020)

- Understanding the Multiple Vulnerabilities, Support Needs and Experiences of People who Sleep Rough in England (2020)

- Housing First Mobilisation Toolkit (2021)

- Housing First interim process evaluation (2021)

- Housing First Third Process Report (2022)

Supporting Families (previously Troubled Families)

- Troubled Families Evaluation Reports (2019)

- Troubled Families Evaluation Report (2020)

- Supporting Families Against Youth Crime Fund Evaluation (2021)

Integration and Communities

- Community Based English Language Randomised Control Trial (2018)

- Open Doors pilot programme evaluation (2020)

- Communities Fund Evaluation (2021)

Union and Constitution

- Voter identification pilots (2018)

- Voter Identification pilots (2019)

- Student Electoral Registration Condition Evaluation (July 2021)

- Modern Electoral Registration Programme Evaluation (2021)

- The Green Book: appraisal and evaluation in central government (HMT 2022)

- Magenta Book March 2020 Central Government guidance on evaluation (HMT 2020)

- National Audit Office: Evaluating government spending Report and Evaluating government spending: an audit framework

- National Audit Office: Evaluating government spending: an audit framework

- Evaluation of the European Regional Development Fund 2014 to 2020

- Supported housing oversight pilots: independent evaluation