FCDO annual evaluation report 2023 to 2024

Published 12 May 2025

1. Introduction

Evaluation is one of the tools used at the Foreign, Commonwealth and Development Office (FCDO) to help us understand what works, how, and why. This supports the FCDO to achieve its objectives, improve performance, and target resources on the activities that will have the most impact.

This report presents an overview of evaluation in the FCDO from April 2023 to March 2024 and builds on the previous annual report for the 2022 to 2023 financial year. The report also presents a forward look for the refreshed Evaluation Strategy, which covers the FCDO’s approach to evaluation up until March 2025.

Section 2 of this report outlines the work of the FCDO’s central Evaluation Unit (EvU), including thematic and portfolio evaluations. Section 3 provides an overview of evaluative products, including their sectors and geographical spread. Later sections cover procurement (section 4) and quality assurance (section 5), before the report ends with a forward look for evaluation in the FCDO.

2. Central evaluation service

Evaluation in the FCDO is largely decentralised, with decisions about when, where and why to evaluate distributed across teams in the UK and the global network. This system relies on a network of embedded evaluation specialists who develop, commission and manage independent evaluations of FCDO activities, supported by the central Evaluation Unit, and driven through the FCDO’s evaluation strategy and evaluation policy.

The Evaluation Unit supports evaluation across the FCDO. The Unit sits within the Analysis Directorate, led by the Chief Economist. As of March 2024, the Evaluation Unit consisted of 10 members, a mixture of evaluation specialists and programme managers. Central to the work of the Evaluation Unit is the continued delivery of the four pillars of the FCDO’s Evaluation Strategy, which applies across the FCDO:

- Strategic evaluation evidence is produced and used in strategy, policy and programming: relevant, timely, high-quality evidence is produced and used in areas of strategic importance.

- High-quality evaluation evidence is produced: with users having confidence in the findings generated from evaluations.

- Evaluation evidence is well communicated to support learning: findings are accessible and actively communicated in a timely and useful manner.

- FCDO has an evaluative culture, the right evaluative expertise and capability: sufficient resource with skilled advisors possessing up-to-date knowledge of evaluation, with a minimum standard of evaluation literacy mainstreamed across the FCDO.

The Evaluation Unit also works closely with the Head of Profession for Evaluation and Social Research, who leads and champions the evaluation community and evaluation within the FCDO.

Economics and evaluation modular learning offer

Working with colleagues across the Economics and Evaluation Directorate, the EvU delivered a pilot training event in Nairobi, Kenya to FCDO staff from across the region. The training, delivered by evaluation advisors and economists, aimed to improve evaluation and economic skills amongst participants, contributing to pillar 4 of the Evaluation Strategy which is focused on building the capability of FCDO staff. Sessions covered topics such as outcome harvesting and change stories, theory of change, and the monitoring and evaluation of influencing.

Evaluation Helpdesk

The Evaluation Unit also offered support across the FCDO through the Evaluation Helpdesk, an email resource that FCDO employees can use to request evaluation information or technical support. Requests included facilitating theory of change workshops, signposting evaluative resources and good practice, defining evaluative expectations and standards, and supporting the development of terms of reference.

2.1. Central evaluations

The Evaluation Unit manages a number of centrally run evaluation programmes which complement evaluations commissioned by programme and country teams.

Thematic and portfolio evaluations

Thematic and portfolio evaluations provide insight into the impact of work on a particular theme or across a portfolio, looking across a number of programmes and other activities. These evaluations aim to inform policymaking in line with the first strategy outcome: producing relevant, timely, and high-quality evidence in areas of strategic importance for the FCDO, HMG and international partners. Funding for thematic and portfolio evaluations is allocated through a demand-responsive programme, the Evidence Fund, managed jointly by the Evaluation Unit and the Research and Evidence Directorate (RED).

In the 2023 to 2024 financial year, a bidding round resulted in 9 new thematic evaluations, covering climate, development and diplomacy, elections, economic growth, health systems, and resilience. Work on these evaluations began in the 2023 to 2024 financial year, alongside the 6 evaluations ongoing from the previous financial year. Publication is expected in the 2024 to 2025 financial year.

Strategic Impact Evaluation and Learning

The Strategic Impact Evaluation and Learning (SIEL) programme will deliver experimental and quasi-experimental impact evaluations of FCDO activities in priority areas. In 2023 to 2024, the EvU concluded the design and procurement of the programme through a Call for Proposals, leading to a 6-year learning partnership between the FCDO’s EvU, Innovations for Poverty Action (IPA), and the Abdul Latif Jameel Poverty Action Lab (J-PAL).

The programme will focus on humanitarian assistance, growth, climate and nature, and conflict and fragility, with cross-cutting themes of women and girls, technology and innovation, and migration. It will provide funding, resources, and technical capacity for experimental and quasi-experimental impact evaluations that explore what has worked, for whom and why, supporting better value for money in FCDO programmes and policies.

Evaluation Quality Assurance and Learning Service

The Evaluation Quality Assurance and Learning Service 2 (EQUALS2) provides independent and responsive quality assurance for all evaluations across the FCDO, as well as call-down technical assistance that complements the FCDO’s internal capability. The FCDO’s evaluation policy mandates quality assurance for evaluation products at various stages[footnote 1]. This quality assurance is a core component in delivering evaluative rigour under the evaluation strategy (see section 5 for further information on quality assurance and EQUALS2 usage).

3. Evaluation across the FCDO

Published evaluations

The FCDO published 17 evaluation products in the 2023 to 2024 financial year, including inception reports, baseline and midline reports, and final evaluation reports (see Appendix A for a full list of published evaluative products). The 17 evaluation products were produced from 13 projects. Case study spotlights at the end of this section demonstrate a range of methods used within FCDO evaluations to provide evidence on wide-ranging policies and settings. The numbers in this section represent evaluations of FCDO programmes and do not include evaluations funded through Research and Evidence Directorate investments[footnote 2].

The 17 evaluative products published in the 2023 to 2024 financial year covered a total of 11 different primary sectors, as seen in Figure 1.

Figure 1: The number of evaluation products in the 2023 to 2024 financial year split by primary sector

| Sector | Number of evaluative products |
|---|---|
| Basic healthcare | 1 |
| Climate change and environment | 1 |
| Education policy and administrative management | 1 |
| Ending violence against women and girls | 1 |
| Food assistance | 1 |
| Humanitarian research | 1 |
| Material relief assistance and services | 1 |
| Primary education | 1 |
| Road transport | 1 |
| Economic and development policy and planning | 2 |
| Social protection, social infrastructure and services | 6 |
| Grand total | 17 |

The 17 evaluation products included process, impact, and value-for-money components, often in combination. A range of data collection and analysis approaches was used to answer process, impact and value-for-money questions. Figure 2 sets out the data collection methods used.

Figure 2: Overview of data collection methods used in evaluative products for the 2023 to 2024 financial year

Each number shows how frequently a data collection method was used for each type of evaluation question.

| Data collection method | Impact | Process | Value for money |
|---|---|---|---|
| Secondary data analysis | 3 | 2 | 1 |
| Case study | 3 | 3 | 0 |
| Financial reporting | 0 | 0 | 2 |
| Focus group | 5 | 1 | 0 |
| Document review | 6 | 4 | 0 |
| Observation | 2 | 1 | 0 |
| Interview | 9 | 6 | 0 |
| Survey | 11 | 6 | 0 |

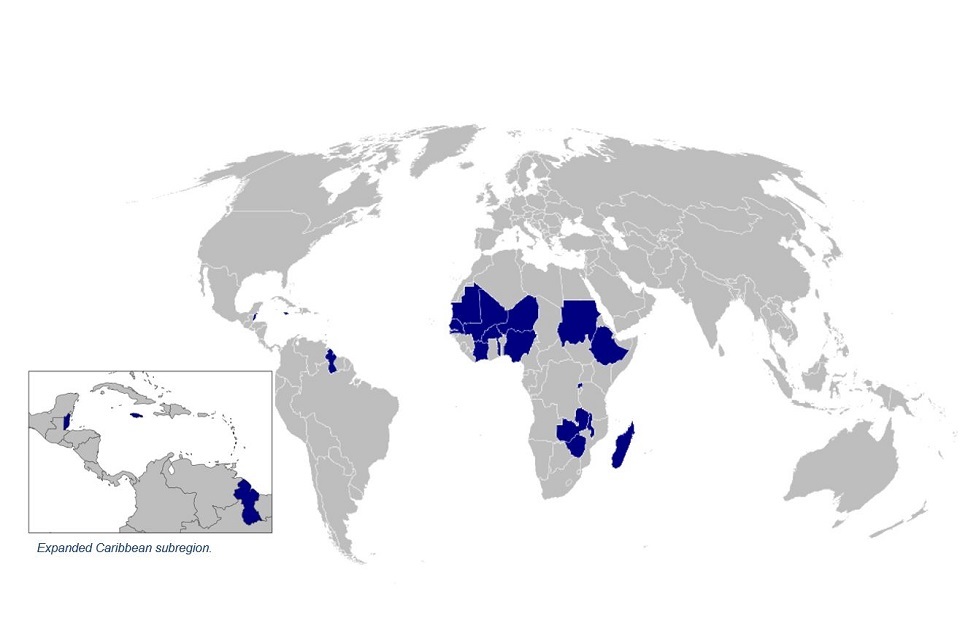

Evaluation products in 2023 to 2024 covered work in 25 countries, 13 of which were concentrated in Sub-Saharan Africa. Figure 3 shows the global coverage of evaluation products[footnote 4]. For a full list of countries, please see Appendix C.

Figure 3: The geographical spread of evaluation products in the 2023 to 2024 financial year. The focus box on the left highlights the Caribbean subregion.

- Antigua and Barbuda

- Belize

- Burkina Faso

- Dominica

- Ethiopia

- Gambia

- Grenada

- Guyana

- Ivory Coast

- Jamaica

- Madagascar

- Malawi

- Mali

- Mauritania

- Montserrat

- Niger

- Nigeria

- Rwanda

- Saint Lucia

- Saint Vincent and the Grenadines

- Senegal

- Sudan

- Togo

- Zambia

- Zimbabwe

Evaluation spotlights

This section highlights selected methodological components of evaluative products published by the FCDO in the 2023 to 2024 financial year. Full summaries of the evaluations, including their broader aims and objectives, can be found in Appendix B. The spotlights cover a range of methods, some considered traditional, such as randomised controlled trials, and others more innovative, such as resilience analysis.

Case study 1: Randomised Controlled Trial, The Livelihood Transfer Component of the Productive Safety Net Programme IV

The evaluation of the Livelihood Transfer (LT) Component of the Productive Safety Net Programme IV (PSNP4) used a cluster randomised controlled trial (RCT) design to assess the impact of the programme on ultra-poor households in Ethiopia. The LT component provided financial and mentoring support to help households with investment costs for inputs, assets, or job searches.

This method involved dividing participants into one control group and four treatment groups, each receiving a different level of intervention.

The RCT design allowed a rigorous comparison between the groups, so that observed differences in outcomes such as asset accumulation, agricultural production, and food security could be attributed to the programme interventions rather than to external factors. Using this approach, the evaluation provided robust evidence on the effectiveness of the programme’s various components, highlighting areas of success, such as a statistically significant increase in livestock assets owned by beneficiary households in terms of both physical size and value, and identifying aspects that require further improvement.

Case study 2: Community Based Resilience Analysis, Zimbabwe Resilience Building Fund

The Zimbabwe Resilience Building Fund (ZRBF) programme aims to enhance the resilience of communities in 18 districts experiencing chronic food insecurity due to recurring climatic shocks and underlying poverty. Its evaluation used the Community-Based Resilience Analysis (CoBRA) approach to understand and measure resilience at the community level. This method involved engaging community members in focus group discussions to define resilience in their context, identify key resilience characteristics, and prioritise these characteristics by importance.

The CoBRA approach also included key informant interviews with households identified as resilient by the community to explore their pathways to resilience and the factors contributing to their resilience capacities. Quantitative data was gathered through household surveys. This participatory method provided a comprehensive understanding of community resilience, highlighting where ZRBF interventions were effective and identifying areas for future improvement.

Case study 3: Longitudinal Design, Evaluation of Girls’ Education Project Phase 3 (GEP3) 2012 to 2022 in Northern Nigeria

The Girls’ Education Project Phase 3 (GEP3) aimed to improve basic education, increase social and economic opportunities for girls, and reduce disparities in learning outcomes between girls and boys in Northern Nigeria. Its evaluation, covering 2012 to 2022, employed a longitudinal design to track changes over time. This approach involved collecting data at multiple points from the same cohort of beneficiaries throughout the programme’s implementation, allowing the assessment of individual change over time and long-term impacts, with propensity score matching used to create a comparison group.

The design included baseline, midline, and endline evaluations to provide a comprehensive view of the programme’s effectiveness and impact on girls’ education. By comparing data across these time points, the evaluation was able to identify improvements in enrolment, retention, and learning outcomes, as well as the influence of interventions such as cash transfers and community engagement initiatives.

Case study 4: Contribution Analysis, Independent Evaluation of the Humanitarian Emergency Response Operations and Stabilisation Programme

The Humanitarian Emergency Response Operations and Stabilisation (HEROS) programme provides capacity, advisory, and operational support to the UK’s global humanitarian emergency response and stabilisation efforts. Its evaluation employed a contribution analysis approach to understand how the programme’s activities contributed to its intended outcomes. This method involved developing contribution stories that outlined the expected pathways through which the programme’s interventions would lead to desired results.

These initial stories were then validated through targeted interviews with stakeholders and refined based on the collected data. By systematically examining the evidence and considering alternative explanations, the evaluation put forward a number of recommendations, such as: developing a coherent theoretical framework for programme performance management, improving monitoring data quality, and ensuring strategic use of the HEROS contract.

4. Procurement

The Global Monitoring and Evaluation Framework Agreement (GEMFA) was launched in 2023. GEMFA gives users, both in the FCDO and in other government departments, access to qualified expert suppliers for the design and implementation of Monitoring, Evaluation and Learning (MEL) for programmes. The framework agreement has 23 unique lead suppliers, each able to draw on a consortium of specialist suppliers, and provides global coverage (including the UK). Evaluation and monitoring can be undertaken for Official Development Assistance (ODA) and non-ODA programmes across 4 lots covering a wide range of contexts within 7 thematic pillars.

Use of GEMFA was higher than anticipated, so re-procurement of the framework (GEMFA2) began in February 2024 with stakeholder consultation. Lessons from GEMFA will be incorporated into the new framework, including new regional lots to give regional suppliers opportunities to access the framework. The new framework structure will also reflect the increased need for specialist experience in emergency MEL and portfolio-level MEL.

5. Quality assurance

As set out in the FCDO evaluation policy, all evaluations must undergo independent quality assurance, either through the Evaluation Quality Assurance and Learning Service 2 (EQUALS2) or another equally rigorous independent Quality Assurance (QA) process. The EQUALS2 programme assesses evaluation products against a standardised checklist, covering areas such as independence, stakeholder engagement, ethics, safeguarding, and methodological rigour. The service is also open to other ODA spending government departments.

During the 2023 to 2024 financial year, the FCDO raised a total of 79 quality assurance requests, nearly double the number in the previous financial year (41 requests). Figure 4 outlines the nature of requests. Products are rated as excellent, good, fair or unsatisfactory and cannot progress until they meet minimum quality standards. Qualitative feedback is provided to improve products, and products rated as unsatisfactory must undergo re-review following improvements. Of the 70 products rated in Figure 4, 61 (87%) met the minimum quality criteria on first review. The products rated unsatisfactory consisted of terms of reference (5), inception reports (3), and an evaluation report (1). All were resubmitted for re-review after qualitative feedback.

Figure 4: Quality assurance requests by EQUALS for FCDO evaluative products in the 2023 to 2024 financial year, split by product type and rating given

| Report type | Excellent | Good | Fair | Unsatisfactory |
|---|---|---|---|---|
| Terms of reference | 7 | 21 | 10 | 5 |
| Inception report | 0 | 4 | 4 | 3 |
| Baseline report | 0 | 3 | 0 | 0 |
| Evaluation report | 5 | 4 | 3 | 1 |
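The first-review figure can be checked directly against Figure 4. The table covers 70 rated products in total, and 61 of these were rated fair or better, which gives the 87% first-review rate. The short Python sketch below reproduces that arithmetic; it assumes, consistent with the text, that any rating other than unsatisfactory meets the minimum standard.

```python
# Ratings per product type, transcribed from Figure 4:
# (excellent, good, fair, unsatisfactory)
FIGURE_4 = {
    "Terms of reference": (7, 21, 10, 5),
    "Inception report": (0, 4, 4, 3),
    "Baseline report": (0, 3, 0, 0),
    "Evaluation report": (5, 4, 3, 1),
}


def first_review_summary(table):
    """Return (products rated, products meeting the minimum, pass rate in %).

    Assumption: a product meets the minimum standard on first review if it is
    rated excellent, good or fair, i.e. anything other than unsatisfactory.
    """
    rated = sum(sum(row) for row in table.values())
    unsatisfactory = sum(row[3] for row in table.values())
    met = rated - unsatisfactory
    return rated, met, 100 * met / rated


rated, met, rate = first_review_summary(FIGURE_4)
print(f"{met} of {rated} products met the minimum standard ({rate:.0f}%)")
# prints "61 of 70 products met the minimum standard (87%)"
```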

In this period, the service was also used by the Department for Energy Security and Net Zero and the Department of Health and Social Care.

EQUALS2 also provides call-down evaluation technical assistance to complement internal capacity, and produces Evaluation Insights, deep-dive learning products on specified topics. There were 37 requests for technical assistance, the most common of which were requests for evaluability assessments and theory of change workshops. A single Evaluation Insights report was requested and delivered, covering lessons learnt from systems and management arrangements for data in a crisis.

6. Progress against the FCDO evaluation strategy

The 2023 to 2024 financial year represents the penultimate year of the 3-year Evaluation Strategy. The overarching goal of the strategy is to advance and strengthen the practice, quality, and use of evaluation so that the FCDO’s strategy, policy, and programming are more coherent, relevant, efficient, effective, and have greater impact.

Work to deliver the Evaluation Strategy is focused on four key outcomes, each with corresponding actions and milestones against which progress is monitored. These workplans and milestones are reviewed and updated annually, with progress reported to an internal Investment and Delivery Committee.

6.1. Strategic evaluation evidence is produced and used in strategy, policy, and programming

It is vital that relevant, timely, and high-quality evaluation evidence is produced and used in areas of strategic importance for the FCDO, HM Government and international partners. This includes consistently delivering thematic and portfolio evaluations to support the evidence needs of the FCDO across the international landscape. In addition to funding 9 new evaluations and the work on SIEL (see section 2.1), in the coming year the EvU will also develop and agree the new Evaluation Strategy for 2025.

6.2. High quality evaluation evidence is produced

Users must have confidence in the findings generated from evaluations of FCDO interventions, policies, and strategies. The continued implementation and embedding of the FCDO Evaluation Policy promoted a common understanding of evaluation principles and of the standards required. The increased use and output of the Evaluation Quality Assurance and Learning Service (EQUALS2) compared with the previous financial year indicates growing demand for high-quality evaluation evidence. The EvU will continue to promote the use of EQUALS2 across the FCDO to support the full breadth of development and diplomatic activities. In the coming year, the EvU will also look to update the Evaluation Programme Operating Framework[footnote 5] guide.

6.3. Evaluation evidence is well communicated to support learning

Evaluation findings need to be accessible and communicated in a timely and useful way to inform future strategy, programme, and policy design. The EvU continues to maintain a publicly available database of evaluations published by the FCDO, to support access to evidence and use of findings. The EvU also established a regular cycle of communication products to raise the visibility and awareness of evaluation. This included monthly spotlights providing high-level summaries of evidence from published evaluations, relevant internal events, and evaluation resources. In the coming year, the EvU will look to improve management information systems to strengthen its ability to monitor and track evaluative activity across the whole of the FCDO, in addition to continuing regular communication around products, events and organisational learning from evaluation.

6.4. FCDO has an evaluative culture, the right evaluation expertise and capability

It is important that the FCDO is sufficiently resourced with skilled advisors with up-to-date knowledge of evaluation, and minimum standards of evaluation literacy are maintained across the FCDO. To increase capacity, the EvU provided technical assistance to FCDO teams working on a range of topics, and launched an evaluative toolkit for technical experts and generalists, with drop-in and bespoke sessions delivered for overseas missions and directorates.

As previously mentioned in this report, the EvU will continue with the economics and evaluation modular learning offer, the Evaluation Helpdesk, and technical assistance. This will be supplemented by implementation of a workplan for promoting the use of innovative methods, including a library of resources, an external speaker series, and development of proof-of-concept case studies for specific evaluation techniques.

Appendix A: List of published evaluation products 2023 to 2024

Appendix B: Full case studies

1. The Livelihood Transfer Component of the Productive Safety Net Programme IV

Publication date: August 2023

Full report: The Livelihood Transfer Component of the Productive Safety Net Programme IV

The programme

The Productive Safety Net Programme (PSNP) is a social assistance programme of the Government of Ethiopia that aims to improve disaster risk management systems and provide livelihood services and nutritional support for food insecure households in rural Ethiopia. The programme provides conditional food or cash transfers, in addition to the livelihood component which is designed to facilitate opportunities through crop and livestock packages, self-employment success, and wage employment resources.

The evaluation

The evaluation of the Livelihood Transfer Component was conducted by the International Food Policy Research Institute and Cornell University. It aimed to assess the impact of the programme on ultra-poor households in Ethiopia, using a cluster randomised controlled trial design with one control arm and 4 treatment arms. The five arms were:

- a control group

- a group that received the livelihoods transfer only

- a group that received the livelihoods transfer plus training and follow-up support

- a group that received the livelihoods transfer plus training and follow-up support plus Digital Green-type videos

- a group that received the livelihoods transfer plus training and follow-up support plus Digital Green-type videos and aspiration videos
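To illustrate what cluster randomisation means in practice, the sketch below assigns whole clusters, rather than individual households, to the five arms described above, so that every household in a cluster receives the same arm. It is a minimal, hypothetical illustration: the cluster identifiers, the fixed seed and the round-robin allocation (which keeps arm sizes balanced) are assumptions, and the report does not describe the evaluation’s actual randomisation procedure.

```python
import random

# Hypothetical short labels for the five arms described above.
ARMS = [
    "control",
    "livelihoods transfer only",
    "transfer + training and follow-up support",
    "transfer + training + Digital Green-type videos",
    "transfer + training + Digital Green-type videos + aspiration videos",
]


def assign_clusters(cluster_ids, arms=ARMS, seed=2023):
    """Randomly assign whole clusters to arms.

    Clusters are shuffled with a seeded generator (so the allocation can be
    reproduced) and then dealt round-robin, so arm sizes differ by at most one.
    """
    rng = random.Random(seed)
    shuffled = list(cluster_ids)
    rng.shuffle(shuffled)
    return {cid: arms[i % len(arms)] for i, cid in enumerate(shuffled)}


def arm_for_household(cluster_id, assignment):
    """Every household inherits the arm of the cluster it belongs to."""
    return assignment[cluster_id]


if __name__ == "__main__":
    clusters = [f"cluster_{i:02d}" for i in range(1, 21)]  # 20 hypothetical clusters
    assignment = assign_clusters(clusters)
    for arm in ARMS:
        n = sum(1 for a in assignment.values() if a == arm)
        print(f"{arm}: {n} clusters")
```

Randomising at cluster level avoids spillovers between neighbouring households in different arms, at the cost of needing more households overall for the same statistical power.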

The impact and recommendations

The impact of the programme was assessed by tracking indicators of asset accumulation, improved agricultural production, enhanced aspirations, higher food security, and lower poverty. The results showed that the programme increased livestock assets owned by beneficiary households, with the most intensive intervention leading to the highest observed effect on the average size of livestock assets.

However, the programme did not lead to conclusive statistically significant increases in modern input use, off-farm employment, or food security. The evaluation also found that the programme did not produce discernible impacts on the aspirations and expectations of beneficiary households.

The recommendations from the evaluation include earmarking a budget for the administration of the programme, providing better training and incentives to development agents, initiating a matching loan scheme, and integrating the programme with Woreda/Region development plans and interventions more effectively.

The evaluation suggests that better outcomes would require improvements in several areas, including the size of the grant, the support provided by development agents, and the implementation of the livelihoods component. The findings also highlight the need for further exploration and refinements to address the challenges faced during the implementation of the programme.

2. Impact Evaluation Endline Study of UNDP Zimbabwe Resilience Building Fund Programme

Publication date: August 2023

- Full report: Impact Evaluation Endline Study of UNDP Zimbabwe Resilience Building Fund Programme

- Management response

The programme

The Zimbabwe Resilience Building Fund (ZRBF) is a 5-year government-integrated resilience programme implemented by the Ministry of Lands, Agriculture, Fisheries, Water, and Rural Development (MLAFWRD) and the United Nations Development Programme (UNDP).

The programme aims to enhance the resilience of communities in 18 districts experiencing chronic food insecurity due to recurring climatic shocks and underlying poverty. ZRBF is implemented through seven consortia led by various organisations, including Christian Aid, Care International, and ActionAid International.

The programme focuses on building the resilience of individuals, households, communities, and systems through 3 components: first, developing more effective institutional, legislative, and policy frameworks for resilience at regional and sub-regional levels; second, increasing adaptive and transformative capacities to face shocks and the effects of climate change; and third, implementing an early warning system for climate-induced shocks.

The evaluation

The evaluation of the ZRBF programme was conducted to assess the endline status of key indicators and understand what works in resilience programming in Zimbabwe. The evaluation aimed to achieve five interrelated objectives:

- conducting a robust final impact evaluation

- testing the programme’s theory of change

- investigating the relationships between household outcomes, shock exposure, and resilience capacities

- assessing the use of evidence generated by the programme

- evaluating the effectiveness of the crisis modifier

The evaluation used a mixed-methods design, integrating quantitative and qualitative approaches to data collection, analysis, and interpretation. Quantitative data was gathered through household surveys, while qualitative data was collected through Focus Group Discussions (FGDs) and Key Informant Interviews (KIIs) with community members and stakeholders.

The impact and recommendations

The evaluation found that ZRBF interventions significantly increased the resilience capacity of beneficiary households. The resilience index among beneficiary households increased by 30% from baseline, compared to a negligible 0.3% for control households.

The programme effectively supported asset accumulation, livelihood diversification, market development, and improved access to extension services and early warning information systems. However, transformative capacities were undermined by economic stressors such as currency depreciation and high inflation.

The evaluation also highlighted the importance of a sequenced and layered approach to resilience building, as households participating in multiple interventions experienced better outcomes.

The evaluation recommended continuing to invest in adaptive capacities while strengthening absorptive and transformative capacities through increased attention to informal savings groups, policy work, and local-level advocacy. It also emphasised the need to focus on water availability and access as a central component of agro-based resilience interventions.

The evaluation suggested adopting a long-term approach to resilience building, with a programme duration of at least ten years, and scaling up best practices identified in the current phase. Additionally, the crisis modifier mechanism should remain operational to help households recover from shocks but should be gradually withdrawn as household capacities improve.

3. Final Evaluation of Girls’ Education Project Phase 3 (GEP3) 2012 to 2022 in Northern Nigeria

Publication date: May 2023

Full report: Final Evaluation of Girls’ Education Project Phase 3 (GEP3) 2012 to 2022 in Northern Nigeria

The programme

The Girls’ Education Project Phase 3 (GEP3) 2012 to 2022 in Northern Nigeria was developed by the Oversee Advising Group and commissioned by UNICEF on behalf of the Federal and State Ministries of Education with financial support from the UK’s Foreign, Commonwealth and Development Office.

The programme aimed to improve basic education, increase social and economic opportunities for girls, and reduce disparities in learning outcomes between girls and boys in Northern Nigeria. It focused on addressing barriers to girls’ education, including socio-cultural norms, economic constraints, and governance issues.

The programme was implemented in 6 states: Bauchi, Katsina, Niger, Sokoto, Zamfara, and Kano, and involved various stakeholders, including government agencies, NGOs, and community-based organisations.

The evaluation

The evaluation of GEP3 aimed to assess the programme’s impact, effectiveness, efficiency, and sustainability. It used a mixed-methods approach, combining quantitative and qualitative data collection and analysis. The methodology included a quasi-experimental longitudinal panel design that tracked a cohort of schools over the life of the programme. The design included a before-and-after component, comparing baseline data (from previous evaluations) with new survey data.

The comparator group was created through propensity score matching, a non-experimental statistical method of matching individuals with similar characteristics. Causal effects were estimated using the difference-in-differences method. The evaluation included household surveys, school surveys, interviews with key stakeholders, and focus group discussions with community members.
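The combination of propensity score matching and difference-in-differences can be sketched in a few lines. This is a minimal, hypothetical illustration rather than the GEP3 specification, which is not described here: it assumes propensity scores have already been estimated (for example with a logistic regression on baseline characteristics), uses simple 1:1 nearest-neighbour matching with replacement, and runs on made-up schools and outcome values.

```python
from dataclasses import dataclass


@dataclass
class Unit:
    uid: str
    treated: bool
    pscore: float    # propensity score, assumed already estimated
    y_before: float  # outcome at baseline
    y_after: float   # outcome at endline


def nearest_neighbour_match(units):
    """Pair each treated unit with the comparison unit whose propensity
    score is closest (1:1 matching, with replacement)."""
    treated = [u for u in units if u.treated]
    comparison = [u for u in units if not u.treated]
    return [
        (t, min(comparison, key=lambda c: abs(c.pscore - t.pscore)))
        for t in treated
    ]


def did_estimate(pairs):
    """Difference-in-differences over matched pairs: the average of
    (change for treated unit) - (change for its matched comparison unit)."""
    diffs = [
        (t.y_after - t.y_before) - (c.y_after - c.y_before)
        for t, c in pairs
    ]
    return sum(diffs) / len(diffs)


# Hypothetical schools with made-up scores and outcomes (e.g. enrolment rate, %).
units = [
    Unit("T1", True, 0.62, 40.0, 55.0),
    Unit("T2", True, 0.35, 30.0, 42.0),
    Unit("C1", False, 0.60, 41.0, 47.0),
    Unit("C2", False, 0.33, 29.0, 33.0),
    Unit("C3", False, 0.90, 50.0, 52.0),
]
pairs = nearest_neighbour_match(units)
print([(t.uid, c.uid) for t, c in pairs])  # [('T1', 'C1'), ('T2', 'C2')]
print(did_estimate(pairs))                 # 8.5
```

Matching makes the comparison group resemble the treated group on observed characteristics, while differencing over time removes fixed differences between them; a real analysis would also check covariate balance and report standard errors.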

The evaluation also used secondary data sources, such as the Education Management Information System (EMIS) and national surveys, to assess changes in key indicators over time. The evaluation was conducted in 2 phases: a midline evaluation in 2016 and an endline evaluation in 2021.

The impact and recommendations

The findings of the evaluation showed that GEP3 had a significant positive impact on girls’ enrolment, retention, and learning outcomes in the target states, compared to the comparator group. The programme also increased the gross enrolment ratio of girls in primary education and improved the gender parity index.

The evaluation also found that the programme’s interventions, such as cash transfers, teacher training, and community mobilisation, were effective in addressing barriers to girls’ education. However, it identified some challenges, such as the need for more trained teachers, better infrastructure, and stronger governance mechanisms.

The evaluation’s recommendations include continuing to invest in girls’ education, scaling up successful interventions, and addressing the identified challenges. It suggests that future programmes should focus on improving the quality of education, increasing community involvement, and strengthening governance and accountability mechanisms.

The evaluation also recommends that the government and development partners continue to support and invest in girls’ education to ensure sustainable progress. Its findings and recommendations provide valuable insights for policymakers, practitioners, and other stakeholders involved in girls’ education in Northern Nigeria.

4. Independent Evaluation of the Humanitarian Emergency Response Operations and Stabilisation Programme

Publication date: May 2023

- full report: Independent Evaluation of the Humanitarian Emergency Response Operations and Stabilisation Programme

- management response

The programme

The Humanitarian Emergency Response Operations and Stabilisation (HEROS) programme provides capacity, advisory, and operational support to the UK’s global humanitarian emergency response and stabilisation efforts. Managed by the Humanitarian Response Group (HRG) and the Office for Conflict, Stabilisation and Mediation (OCSM) within the FCDO, the programme aims to support effective and timely responses to crises, build a robust humanitarian supply chain, and contribute to National Security Council (NSC) objectives.

The current iteration of the HEROS programme runs from November 2017 to October 2024 and is primarily delivered by Palladium International’s Humanitarian and Stabilisation Operations Team (HSOT).

The HEROS programme is designed to provide flexible and scalable operational capacity to respond to at least 6 new crises each year, including large-scale and protracted crises. It also aims to increase the FCDO’s ability to provide bespoke responses to rapid-onset disasters and chronic crises.

The programme involves direct service delivery functions, such as early warning analysis and 24-hour duty rosters, as well as indirect service delivery functions, including consultant management and warehouse stockpiles. Between 2018 and 2022, HSOT supported more than 102 different responses and worked in 150 countries, providing a wide range of services and products to clients across the UK government.

The evaluation

The evaluation of the HEROS programme was commissioned by the FCDO and conducted by Integrity Research and Consultancy. The evaluation aimed to assess the institutional set-up, processes, and performance of HSOT as a supplier, working with HRG and OCSM clients to achieve HEROS objectives.

The evaluation used a theory-based mixed-methods approach, including 132 key informant interviews, participatory workshops, secondary analysis of programmatic data, and a review of over 200 documents. The evaluation focused on understanding what has worked well, what has not, and identifying ways to improve the programme over its remaining life and future iterations.

The impact and recommendations

The evaluation found that HSOT has established effective systems and capabilities that enable timely and high-quality responses to crises. HSOT’s combination of standing and surge delivery capacity provides sufficient expertise and flexibility to respond to requests and adapt to changing demands.

The evaluation also identified challenges, such as the need for better performance management of deployees, clearer guidelines on Duty of Care, and improved monitoring systems for programme delivery. The evaluation highlighted the importance of strong relationships between HSOT, HRG, and OCSM for successful delivery.

The recommendations from the evaluation include developing a coherent theoretical framework for programme performance management, improving monitoring data quality, and ensuring strategic use of the HEROS contract. The evaluation also suggests exploring the feasibility of a scalable approach to security clearance for HEROS deployees and developing standardised advice on security clearance requirements.

Additionally, the evaluation recommends improving the performance management system for deployees and considering opportunities to learn and share technical lessons from deployments.

Appendix C: Geographical spread of evaluation products

List of countries

- Antigua and Barbuda

- Belize

- Burkina Faso

- Dominica

- Ethiopia

- Gambia

- Grenada

- Guyana

- Ivory Coast

- Jamaica

- Madagascar

- Malawi

- Mali

- Mauritania

- Montserrat

- Niger

- Nigeria

- Rwanda

- Saint Lucia

- Saint Vincent and the Grenadines

- Senegal

- Sudan

- Togo

- Zambia

- Zimbabwe

- The FCDO Evaluation Policy outlines evaluation principles and the minimum standards to support them, where robust quality assurance is required for any evaluation undertaken by the FCDO or external partners.

- Work is ongoing to integrate these figures for the next annual report.

- Counts are of individual evaluation products, not projects, therefore a single project within a sector can have multiple evaluation products. See Appendix A for a full list of evaluation products.

- 5 of the 18 evaluation products were not confined to specific countries and had a global benefit; these products are not represented in Figure 3.

- The Programme Operating Framework is a framework for excellence in programme delivery, created by the FCDO, which defines the mandatory rules and where there is space for judgement and flexibility.

- These summaries were created using AI software (MS Copilot), then edited by a researcher.