Evaluation Academy - Final Evaluation Report 2025 (HTML)

Published 29 October 2025

This evaluation was conducted by the Evaluation Task Force, a joint Cabinet Office and HM Treasury unit.

| Project Title | Evaluation Academy 2025 - Impact and Process Evaluation |

| Principal investigator(s) | Lydia Beckett, Dr. Jack Blumenau, Jake Dolby, Prof. Oliver Hauser, Miriam Styrnol |

| Design | - Impact evaluation - Two-armed waitlist randomised controlled trial - Implementation and Process evaluation |

| Evaluation setting | Hybrid (Intervention delivered in person, data collection conducted online) |

| Target group | Civil servants |

Executive Summary

- The Evaluation Academy is a capability building course, developed by the Evaluation Task Force (ETF), which uses a train-the-trainer model to improve civil service analysts’ ability to teach others about evaluation methods. The ETF conducted an impact evaluation (IE) and an implementation and process evaluation (IPE) of the first stage of the Evaluation Academy’s train-the-trainer model in July 2025.

- The impact evaluation employed a randomised controlled trial (RCT) which used a two-arm, individual-level waitlist design to investigate the impact of the Evaluation Academy on two primary outcomes – participants’ confidence in delivering evaluation training and the size of participants’ evaluation network – and one secondary outcome – participants’ knowledge of evaluation methods and processes.

- The impact evaluation demonstrated large and significant effects of the Evaluation Academy on both primary outcomes. Compared to those in the control group, participants reported substantially higher levels of confidence in delivering evaluation training (an average increase of approximately 1-point on a 5-point scale) as well as substantial increases in the size of their cross-government evaluation networks (an average increase of 8 people).

- By contrast, the impact evaluation found more limited evidence of impact on participants’ knowledge of evaluation methods and processes. Although treatment group participants performed better on average than the control group on a post-intervention set of evaluation knowledge questions, this difference was not statistically significant. The absence of large knowledge effects reflects the fact that civil servants participating in the first stage of the train-the-trainer model had reasonably high levels of pre-existing evaluation knowledge.

- The implementation and process evaluation aimed to understand participant and teacher experiences of the Evaluation Academy to inform future delivery. Data was collected from surveys, participant and teacher feedback, a focus group, and observations. Key findings revealed that the Academy was well-organised, and the in-person format, mixed groupings, and external expert support enabled networking and confidence building. Barriers included the intense pace, assumed prior knowledge, and text-heavy slide packs. Participants recommended refreshing materials, clearer expectation setting, and more support for online/hybrid delivery in the future.

- The IPE complemented the IE, broadly finding that it was delivered as intended which was key to delivering the key outcomes and obtaining positive feedback from participants. It also provided clear recommendations for improving future iterations of the Academy to maximise its impact and usefulness to participants in Phase 2 which is crucial for the overarching aim of the course to improve the quantity and quality of evaluation across government.

1. Introduction

1.1 Background

The Evaluation Academy is a training programme designed to increase analytical civil servants’ ability to teach policy evaluation skills, with the ultimate aim of improving the quantity and quality of evaluation across government. Conceived and delivered by the Evaluation Task Force (ETF), a joint Cabinet Office and HM Treasury unit, the Academy was created in response to a National Audit Office and Public Accounts Committee report (National Audit Office 2021) which identified skills shortages as a long-standing barrier to evidence-based policymaking.

The Evaluation Academy is a train-the-trainer model which follows a two-stage process:

- In stage 1, evaluation specialists train a group of analytical civil servants (“the participants”) to provide evaluation training. In this stage, the Academy focuses on improving the ability of participants to deliver training on a variety of topics, such as technical evaluation methods and planning and managing evaluations. This component of the Academy is delivered in person in a five-day workshop spread over two weeks.

- In stage 2, participants then use the materials and skills developed in stage 1 to provide training in evaluation methods and skills to others in their home departments. Departmental models of delivering this training differ, with some concentrating delivery in short periods of time, and others delivering the training over longer time periods.

The initial design of the Academy was developed to overcome a range of issues reported by participants of existing internal and external evaluation training. Interviews with participants on previous rounds of the Evaluation Academy revealed that these courses were prohibitively expensive for most departments; did not include relevant content that could be easily applied to departmental contexts; or were often too high level, only providing a short introduction to evaluation methods. As a result, the train-the-trainer model of the Evaluation Academy was designed to offer cost-effective, in-depth, customised training that can be rolled out across departments.

The ETF has delivered the Academy for two consecutive years, and has conducted research with these participants to understand the delivery and perceived impact of the training. This is the first impact evaluation of the Academy on participant outcomes. The ETF delivered stage 1 of the Academy for a third time in July 2025. This represented an opportunity to gather evidence on the train-the-trainer stage (stage 1) of the programme, which has matured over multiple rounds of delivery[footnote 1].

This report describes the impact and process evaluation conducted by members of the Evaluation Task Force for stage 1 of the Evaluation Academy. The impact evaluation employs a waitlist randomised controlled trial (RCT) design, measuring the effects of the Academy on participants’ confidence in delivering evaluation training, the size of participants’ evaluation networks across government, and on participants’ knowledge of evaluation methods. The process evaluation provides an understanding of participants’ experiences and insight into the implementation of the programme.

1.2 Intervention

Who

Stage 1 of the Evaluation Academy is aimed at upskilling UK government evaluation specialists to provide evaluation training in their home departments. Prospective recipients of the intervention were nominated by central evaluation teams across government departments, arm-length bodies, and non-departmental public bodies. Departments were only able to nominate individuals with prior experience in evaluation methods and delivery. This was necessary as this stage of the Academy focusses on upskilling participants in how to deliver evaluation training to others, rather than on developing their skills in the practice of evaluation itself. Participants were eligible if they had not participated in the stage 1 training before and if they were able to attend each day of the workshop.

What

Participants of the Academy were provided free, in-person training over the course of five days across a two-week period. The course material for the workshop was split across 10 modules: Introduction to evaluation; Theories of change; Scoping an evaluation; Process evaluations; Experimental designs; Quasi-experimental methods; Theory-based evaluations; Value for money evaluations; Planning and managing an evaluation; Communicating evidence[footnote 2].

The Evaluation Academy aims to ensure that participants will be able to deliver and explain the content of the course to a high level. The goal is for participants to be able to both understand and deliver the theoretical material on evaluation, as well as to be able to explain to participants how to apply the content in real-life evaluation scenarios.

The first week of training was delivered by evaluation experts from the Evaluation Task Force, the Evaluation and Trial Advice Panel[footnote 3] (ETAP), and by previous trainers trained through the Academy. Participants were split into three cohorts and each cohort received instruction of three modules from the list above, followed by coaching on how to deliver those modules. All cohorts were trained to deliver the final module, Communicating evidence.

In the second week, participants then taught the modules they studied in week one to participants in other cohorts. Participants received the associated slides, facilitator guide and technical guidance for the modules they were teaching, and they delivered training to other participants in a two-hour long session. These materials, alongside training from evaluation experts, were intended to provide participants with the confidence and ability to deliver training to their Academy peers, before going on to train others in their department in the future.

In addition, ‘extracurricular’ sessions that included presentations and speeches from senior stakeholders as well as previous trainers aimed to provide further motivation and to build participants’ confidence in promoting evaluation across the Civil Service.

All activities for the Academy took place in-person rather than online, as formative evaluations of the previous two cohorts of the Academy had highlighted the benefits of this delivery model for participants. The five-day in-person course allows participants to build professional connections with fellow attendees, and highlights common barriers/opportunities faced by attending analysts. When facilitating the training, a strong emphasis is therefore put on allowing participants to collaborate and independently discuss the material and how it applies to their individual contexts. A key rationale for in-person delivery is that the Academy aims to foster analysts’ cross-departmental professional networks.

When

The version of the Academy studied in this trial took place between 1st July and 9th July 2025. The first week of the Academy involved the delivery of training from the group of contributing evaluation experts. The second week included participants’ delivering training of the modules to their Academy peers.

1.3 Theory of Change and Participant Outcomes

A Theory of Change (ToC) was developed for the Evaluation Academy by members of the Evaluation Task Force (see Figure 1). The inputs column on the left hand side of the ToC outlines the resources, activities and materials that go into running stage 1 of the Academy. The outputs column details the deliverables that are produced and the data that is captured.

The figure separately presents outcomes for the Academy in short-term and long-term categories. The short-term outcomes relate to the types of changes that the Academy is expected to induce in participants in stage 1 (i.e. in the train-the-trainer phase of the Academy). The long-term outcomes are those which are expected to manifest after stage 2 of the academy (when participants go on to train colleagues in their departments). Finally, the impact column outlines the intended impact that the Evaluation Academy is expected to have on government evaluation capability, delivery and networks in the long run.

In this trial, we concentrate particularly on those short-term outcomes that are associated with stage 1 of the Academy. Due to the variation in delivery of stage 2 of the Academy, it was not practical to include the second stage of delivery within this study. This will require another phase of evaluation to consider the delivery and effect of training in departments. For stage 1, the most relevant outcomes are those that relate to participants’ ability to deliver evaluation training in their home departments.

The main purpose of the first stage of the Academy is to improve participants’ confidence and ability to deliver evaluation training, to build participants’ connections with other evaluation specialists across government, and to ensure that participants have a comprehensive baseline knowledge of evaluation before they deliver training in their home departments. While these all represent short-term outcomes, our Theory of Change reflects our working hypothesis that increased confidence, networks and knowledge will improve the evaluation skills of other civil servants who are subsequently trained by Academy participants. This is supported by existing research into the train the trainer model in other contexts (Nexo et al, 2024; Yarber et al, 2015), however, assumes that this holds to the specific context of government capability building.

Increased confidence in delivering training is expected to improve the quality and clarity of instruction received by other civil servants in participants’ home departments. Expanded evaluation networks enable participants to draw on peer support and shared learning, increasing the consistency and quality of evaluation practice across government. A strong foundational knowledge of evaluation methods ensures that participants are equipped to deliver accurate, credible, and methodologically sound guidance to others. While measuring long-term effects of the Academy will require further research on the second stage of delivery, demonstrating that the Academy’s first stage has positive effects on these short-term outcomes would therefore represent an important confirmation of key links in the Theory of Change for the programme as a whole.

We classify confidence in delivery and the size of participants’ evaluation networks as our primary outcomes, and evaluation knowledge as a secondary outcome, based on the Academy’s intended role in enabling participants to teach others and embed evaluation practice within their departments.

Confidence in delivering training and communicating evaluation findings is a direct mechanism through which the Academy supports a train-the-trainer model. Networks matter because effective evaluators rarely work in isolation – peer support and shared learning are critical enablers of high-quality evaluation work. These outcomes represent the clearest short-term pathways through which the Academy can influence broader evaluation capacity.

We treat evaluation knowledge as secondary because the training is not aimed at novices; participants are expected to enter the programme with a reasonable baseline of evaluation knowledge. While it is clearly a relevant measure of individual learning, the expected gains of the first stage of the Academy are therefore smaller and more variable, given the expected level of participants’ pre-existing understanding.

| Process: Inputs | Process: Outputs | Impact: Short-Term Outcomes | Impact: Long-term outcomes | Impact |

|---|---|---|---|---|

| Buy-in from key, senior stakeholders into the need for the Academy Budget required to procure a contractor to facilitate the course Budget required to enable all participants, facilitators, and trainers are able to attend in person Communication with departments to recruit attendance Lecture theatre and breakout rooms to host sessions Production of course material Co-design of content and provision of relevant case studies with departments Adapting materials to suit departmental needs based upon previous deliveries of the Academy Resource from ETF and current trainers to deliver the course materials Attendance from analysts across government departments Survey to capture relevant pre/post academy metrics |

Deliverables 5 days across a 2 week period 10 modules covering technical evaluation method training, soft skills, and project management Training on how to effectively present material back to team members after the course Data Monitoring of number of participants receiving training Pre and post survey data capturing understanding of evaluation methods/importance of evaluation Feedback from departments on what elements of the course worked well and what could be improved Departments/individuals 64 participants receive training Approximately 200 old and new trainers receive networking opportunities |

Primary outcomes Trainers… …increased confidence and ability in presenting the material …increased size of trainers’ evaluation networks across government Secondary outcomes Trainers… …increased technical knowledge of evaluation methods …increased ability to develop robust and proportionate evaluation plans for policies/programmes …increased planning and project management skills in order to deliver high quality evaluations …increased ability to advocate for why evaluation should be included across the policy cycle …increased ability to influence policy design to support evaluation |

Trainers… …upskill a broader cohort of analysts across government Government… …increased number of analysts trained in evaluation methods …increased number of policies and programmes with high quality evaluation plans The Academy… …monetary savings to HMG by delivering training through the train-the-trainer model …a sustainable and adaptable evaluation capabilities offer for government |

Departments build strong internal evaluation training capabilities to support development across the GSR and analytical professions. The importance of evaluation is recognised across government. Government has the resource and capability to deliver evidence-based policymaking across departments. Establishment of a sustained cross-departmental network of evaluation specialists |

Figure 1: Theory of Change diagram for the Evaluation Academy, from left to right: inputs, outputs, short-term outcomes, long-term outcomes, and impacts

1.4 Research Questions

1.4.1 Impact Evaluation

Using the participants’ outcomes identified in the Theory of Change, we developed two primary research questions, and one secondary research question to be tested in this study. The aim of the impact evaluation is to answer these research questions.

Primary research questions:

- What impact does the train-the-trainer stage (stage 1) of the Evaluation Academy have on participants’ confidence in delivering evaluation training?

- What impact does the train-the-trainer stage (stage 1) of the Evaluation Academy have on the size of participants’ evaluation networks?

Secondary research question:

- What impact does the train-the-trainer stage (stage 1) of the Evaluation Academy have on participants’ technical knowledge of evaluation processes and methods?

1.4.2 Implementation and Process Evaluation

Alongside the impact evaluation, we also conducted an implementation and process evaluation (IPE). The main aims of the IPE were:

- To understand what participants thought about the Academy;

- To identify factors that helped or hindered participants’ engagement with the Academy;

- To gather feedback from participants about how the delivery and benefits of the Academy could be maximised;

The research questions addressed in the IPE were:

- Was the Academy delivered in the way that was envisaged?

- What were the key opportunities and barriers to effective participation in the Academy?

- What were participants’ experiences of attending the Academy?

- What are participants’ recommendations for improvement of the training in the future?

1.5 Ethics

This study received ethical exemption from the UCL Department of Political Science research ethics committee. The study was deemed exempt from ethics approval because of the low-risk nature of the research and the fact that the research only involved the use of educational tests, survey and interview procedures on participants in the public arena.

2. Impact Evaluation

| Trial design, including number of arms | Two-arm, individual-level waitlist randomised controlled trial |

| Unit of randomisation | Individual civil servants |

| Stratification variables | Department |

| Primary Outcome(s): Variable | 1. Confidence in Delivering Evaluation Training 2. Size of Evaluation Network |

| Primary Outcome(s): Measurement (instrument, scale, source) | 1. Bespoke survey with two confidence questions using the mean response to form a single “Evaluation Confidence” scale 2. Bespoke survey with single question with integer outcome |

| Secondary Outcome: Variable | Evaluation Knowledge |

| Measurement | Evaluation Knowledge Scale (online survey, 27 item battery, see annex 6.1) |

2.1 Evaluation Design

The design and analysis strategy were pre-registered prior to the delivery of the Academy, and a pre-analysis plan was published on the Open Science Framework repository (Beckett et al. 2025)[footnote 4]. All design choices and analysis decisions described below were implemented as described in the pre-analysis plan.

This trial used a two-arm, waitlist RCT design. Randomisation was conducted at the level of individual civil servants. In total, 118 participants were recruited for participation in the trial, which represented a slight under-recruitment versus our target of 126. Individuals were recruited from central evaluation teams within departments, as detailed in section 2.4 below. The Academy had capacity for 63 training places, which led to a somewhat imbalanced allocation ratio with 63 treatment units and 55 control units. Participants were aware of their treatment status, as those assigned to the treatment group attended the July 2025 Academy training, while those in the control group were informed they would be offered a place in a future round.

Randomisation was blocked by department, such that participants were randomly assigned to treatment or control within their own departmental group. This ensured approximate treatment-control balance within each department. However, as the number of participants from each department was uneven with some odd numbers, treatment probabilities varied across departments. The maximum treatment probability was .66 and the minimum probability was .44. Randomisation was conducted after baseline data collection. We account for the unequal treatment probabilities within blocks in the analysis strategy described in section 3.

Treatment group respondents received the stage 1 of the Evaluation Academy training in July 2025. Control group respondents did not receive the training in July 2025, but were scheduled to receive the same training in November 2025.

2.2 Data Collection

The primary data for this study came from survey instruments designed by members of the Evaluation Task Force and distributed online. Baseline survey data which captured information relating to both the primary and secondary research questions for the impact evaluation (described in more detail below) was collected before randomisation into treatment and control groups, approximately 6 weeks before the intervention. The baseline survey was distributed through an email containing a link to a self-completed online survey hosted on Qualtrics.

Endline survey data was collected from both treatment and control groups immediately after the intervention ended (i.e. after the trainer-the-trainer training). For the treatment group, endline survey data was collected via a self-completed online survey that participants were instructed to complete in-person during the final session of the Academy. Participants were briefed before beginning the survey that they should not collaborate with each other or use anything other than their own knowledge to complete the survey. The survey was conducted in silence, with an invigilator in the room to prevent participants from discussing the survey with one another[footnote 5]. The control group participants were emailed the online survey to complete at the same time and were encouraged to complete it as soon as possible. The control group were sent two reminders to complete the endline survey and all control group responses were received within 28 days of the treatment-group data collection. The trial experienced very limited attrition, with endline data collected from 96.6% of participants (95.2% in the treatment group, and 98.2% in the control group).

Once data collection for each survey was complete, researchers in the Evaluation Task Force cleaned and validated the data. ETF researchers then implemented the analyses described below.

2.3 Outcomes

The evaluation team undertook a rapid review of outcome measures that might be used to measure the core concepts of interest in the primary and secondary research questions: confidence in teaching evaluation, the size of evaluation networks, and evaluation knowledge. We considered two survey instruments used at different stages of the Academy in previous years: a survey used in the 2024 delivery of stage 1 to capture technical evaluation knowledge; and a pre/post survey designed by Cabinet Office colleagues to measure confidence in evaluation methods and processes after stage 2. However, neither included measures suitable for the primary outcomes of this study. We also referred to a reasoning scale developed by Drummond and Fischhoff (2017) designed to capture the skills needed to read and evaluate scientific evidence. However, this scale is not well-tailored to measuring evaluation knowledge, particularly in the context of evaluation in government. This review therefore indicated that there are no existing tools or measures that are immediately suitable for measuring the outcomes of the Evaluation Academy.

We therefore developed a bespoke survey which aimed to measure the two primary outcomes of our study (confidence in communicating about evaluation and size of evaluation network) and the secondary outcome of our study (evaluation knowledge). In developing the survey, the research team drew on the Theory of Change for the Academy, as well as on the materials used in the delivery of the Academy. We pre-tested this survey on a small number of evaluation experts from the Evaluation Task Force. We also received input on the survey design from two expert reviewers from outside of HM Government. We made several changes to the design of the survey in response to feedback from these reviewers.

In addition, following our baseline data collection (but before the delivery of the Academy) we also conducted some additional analyses to determine the reliability of our survey items, particularly those designed to measure evaluation knowledge (our secondary outcome measure). In particular, we investigated the discrimination and difficulty of the battery of evaluation knowledge items using an item response theory (IRT) model. We used this model to identify any questions that were insufficiently difficult, such that almost all respondents answered them correctly in the baseline survey. In addition, we also used this model to identify questions which fail to differentiate sufficiently between participants of varying knowledge levels. As a result of this analysis, we decided to exclude 10 items of the knowledge battery from our analysis of the RCT. These items either were too easily answerable by the vast majority of our respondents or were ineffective at distinguishing between participants with higher/lower levels of evaluation knowledge. We made no other changes to the survey instrument between baseline and endline data collection. We provide more detail of this IRT analysis in annex 6.2 and we provide the full survey instrument for all of the questions that inform our outcome variables in annex 6.1.

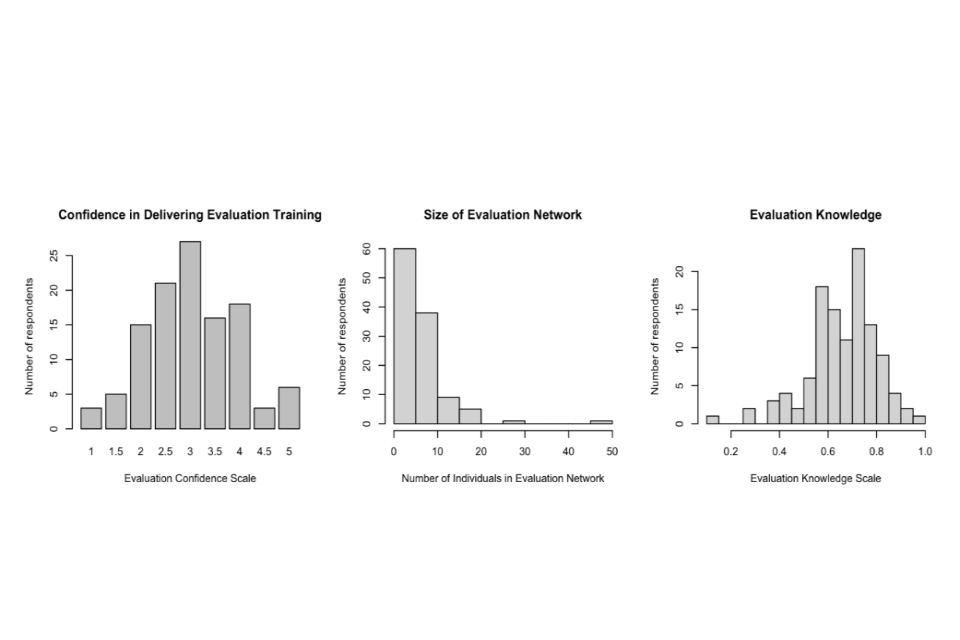

In the next sections, we describe the survey questions that we used to measure these concepts and the construction of the outcome variables derived from those measures. We present the distributions of both our primary outcomes and our secondary outcome in Figure 8 in annex 6.6.1.

2.3.1 Primary Outcomes

Confidence in Delivering Evaluation Training

We asked two questions aimed at assessing the confidence of participants in delivering evaluation training and communicating technical information to non specialists. These questions are presented in a grid format, with a common introduction text:

How would you rate your confidence in the following…

- Delivering evaluation training to members of your department

- Communicating technical evaluation methods to non-specialists

Each of the items in this battery were Likert scale questions, using a five-point scale ranging from “1 = Not at all confident” to “5 = Completely confident”.[footnote 6]

The outcome variable we use in our primary analysis is formed from the mean response of participants across these two questions[footnote 7]. We denote the score on this variable for a given respondent i in a given survey wave t as Confidenceit.

Size of Evaluation Network

We asked one question aimed at assessing the size of a participant’s evaluation network across government:

Approximately how many individuals across government would you feel comfortable approaching for advice on evaluation? Please insert a number below.

Participants then input a numerical response. We use this number in its raw form as our outcome variable, which we denote for respondent i in a given survey wave t as EvaluationNetworkit.

2.3.2 Secondary Outcome

Evaluation Knowledge

The secondary outcome variable we use for our analysis is based on 27 questions which cover a wide range of evaluation-related subjects, such as developing a Theory of Change, scoping an evaluation, and technical knowledge of process, impact and value for money (VfM) methodologies. The battery consists of questions asking participants to directly recall content from the 10 modules, in addition to questions asking them to apply this knowledge to real-world scenarios.

We calculated the proportion of correct responses across these questions and used this as our outcome variable[footnote 8]. We denote the score on this variable for a given respondent i in a given survey wave t as EvaluationKnowledgeit

2.4 Sample

The target population for this evaluation was civil servants working in evaluation roles across UK government departments, arm’s-length bodies (ALBs), and non-departmental public bodies. To recruit participants, central evaluation teams within these organisations were each invited to nominate up to 10 individuals to take part in the Academy. Nominees were required to meet the following eligibility criteria:

- Have prior experience in delivering evaluations in government (c. 2 years experience)

- Not have previously participated in stage 1 of the Evaluation Academy

- Be able and willing to deliver at least two Evaluation Academy sessions in their home department each year following the training

- Be available to attend all sessions of the July 2025 Academy

Nominations from departments were reviewed by a member of the Evaluation Task Force, and eligible individuals were enrolled in the study. In this review process, a small number of nominees were rejected based on the fact that they were unable to attend all five days of training. This sample formed the basis for randomisation into treatment and control groups as described in section 2.5 below. The final sample comprises 118 civil servants from across a broad set of departments and public bodies.

The sample is not likely to be representative of broader populations of civil servants. It is, in essence, a convenience sample of those departments and individuals who were interested in receiving training through the Academy and met the eligibility criteria. This represents a potential limitation to the external validity of the trial, as it means we cannot be confident that the effects that result from the trial population would replicate if the training was rolled out more broadly across government evaluators. That said, concerns about external validity may be limited in this context, as the Academy is a relatively standardised intervention with content designed to be broadly applicable, and there are no strong theoretical reasons to expect substantial variation in treatment effects across different types of analysts.

2.5 Randomisation

Randomisation was conducted using R on 19th May 2025. The randomization was conducted at the individual level, blocking on department. Using blocked-randomisation serves two purposes in this context. First, from a delivery perspective, blocking ensured that departments received roughly equal proportions of participants in the July 2025 delivery of the Academy[footnote 9]. Second, from an analytical perspective, blocking by department ensures that the treatment and control groups have similar distributions of departments across participants, something which tends to improve the precision of the estimates of the treatment effects of interest (Gerber and Green 2012, 110).

2.6 Analysis Strategy

We estimate the intention to treat (ITT) effect of participating in stage 1 of the Academy using a covariate-adjusted linear regression model.[footnote 10] The model we use estimates the degree to which participation in the Academy affected three key outcomes – Confidence in Delivering Evaluation Training (primary), Size of Evaluation Network (primary), and Evaluation Knowledge (secondary) – by comparing participants who received the training (treatment group) to those who didn’t (control group).

To maximize statistical power and precision, the analysis accounts for baseline measures of the outcomes, participants’ department, and their civil service grade. Because participants were randomly assigned to groups within their departments (blocked randomisation), the model includes department fixed effects to ensure that we compare treatment and control groups fairly within the same department block.

We pre-specified three directional hypotheses corresponding to our research questions described in above. Given the directional nature of these hypotheses – that the Evaluation Academy will improve trainer confidence, network size, and evaluation knowledge – we use one-tailed tests to assess each of these hypotheses.[footnote 11]

- H1: The train-the-trainer stage (stage 1) of the Evaluation Academy has a positive impact on participants’ confidence in delivering evaluation training

- H2: The train-the-trainer stage (stage 1) of the Evaluation Academy has a positive impact on the size of participants’ evaluation networks

- H3: The train-the-trainer stage (stage 1) of the Evaluation Academy has a positive impact on participants’ evaluation knowledge

To ensure valid inference despite the blocked design and modest sample size, we rely on a randomisation inference procedure for p-values and confidence intervals. Finally, we use the Benjamini-Hochberg (1995) procedure to control the False Discovery Rate and account for multiple hypothesis testing. A full description of the analysis strategy can be found in annex 6.3.

2.7 Participant Flow

The number of participants recruited into the panel was 118. After baseline survey collection, these individuals were block-randomised by department to treatment and control groups, with 63 allocated to the treatment group and 55 allocated to the control group. The trial experienced a very small amount of attrition due to some participants dropping out of the trial and not completing the endline survey. The attrition rates by treatment group are given in Table 1 below.

| Category 1 | Category 2 | Treatment | Control | Total |

|---|---|---|---|---|

| Number of individuals | Randomised | 63 | 55 | 118 |

| Included in endline analysis | 60 | 54 | 114 | |

| Attrition | Number | 3 | 1 | 4 |

| Percentage | 4.8% | 1.8% | 3.4% |

Table 1: Data table showing individual attrition from the participants recruited into the panel

In the analyses described below, our sample therefore includes 114 respondents in total, with 60 treatment group respondents and 54 control group respondents. In annex section 6.7, we assess whether the (very limited) attrition we observe is consequential for the results we present using an extreme-bounds analysis (Lee 2009) and find that our results are not affected by attrition. In addition, of the 60 treatment group respondents included in the endline analysis, seven respondents failed to attend the Academy (but did provide both baseline and endline data), resulting in one-sided non-compliance in our analysis sample.[footnote 12] Annex section 6.7 assesses the consequences of this non-compliance using a two-stage least-squares approach. We again find that non-compliance does not affect any of the substantive results that we report.

2.8 Balance in Baseline Characteristics

Table 2 presents individual level characteristics for the treatment and control groups at the point of analysis taken from the baseline survey. The figures presented here show that with just a small amount of attrition, randomisation resulted in two balanced groups in our trial. The average network and knowledge scores were slightly higher in the treatment group at baseline but these differences are not statistically significant. This coincides with there being a slightly higher percentage of participants from senior grades (G7 and above) in the treatment, however the difference relative to the control group is again not statistically significant. Blocking randomisation by department also ensures that the treatment and control groups have similar distributions of departments across participants. Taken together, Table 2 indicates that imbalance in baseline characteristics and outcomes between treatment and control groups is unlikely to be a threat to inference in this setting.

| Category | Treatment Group | Control Group | All |

|---|---|---|---|

| Mean confidence score | 2.95 | 3.12 | 3.03 |

| Mean network score | 7.58 | 7.24 | 7.42 |

| Mean knowledge score | 0.67 | 0.65 | 0.66 |

| Grade (%): | |||

| HEO/SEO | 53.33% | 55.56% | 54.39% |

| G7/G6/SCS | 46.67% | 44.44% | 55.61% |

Table 2: Mean baseline characteristics of treatment and control groups, including confidence, network and knowledge scores as well as the percentage of each group at different civil service grades. None of the differences between treatment and control groups are statistically significant at conventional levels.

2.9 Results

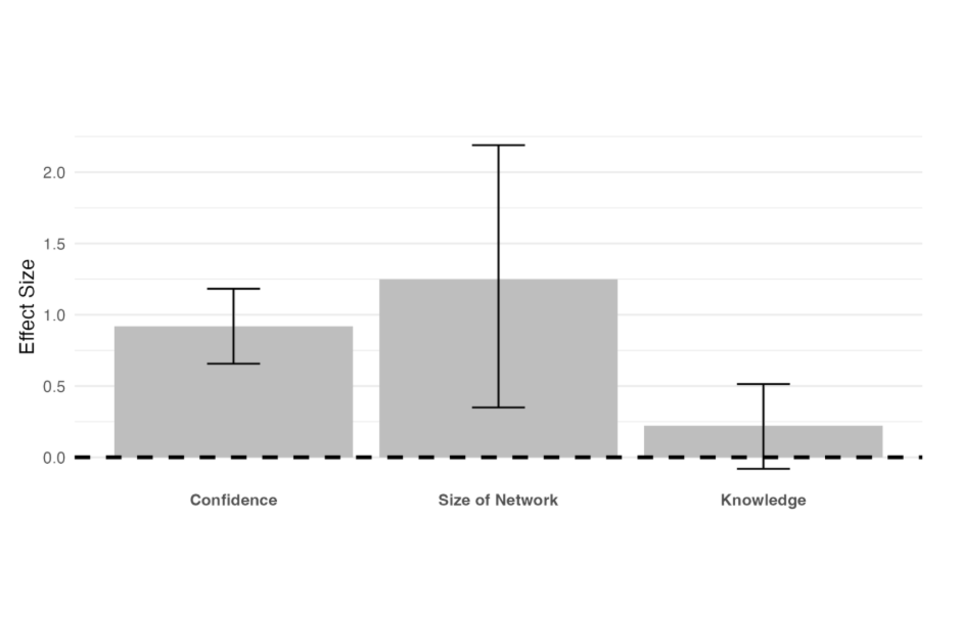

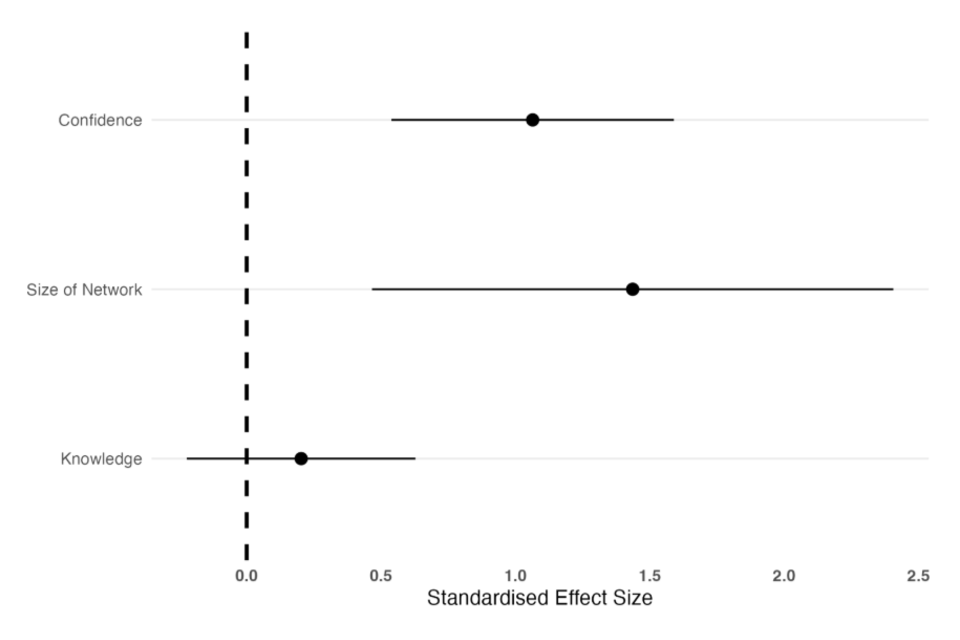

The results from the regression analyses (described in section 2.6) are presented in Table 3 and Figure 2. These analyses investigate the relationship between attending the Academy and the endline responses for each of three outcomes, while controlling for the relevant baseline responses and the department and the grade (measured at baseline) of Academy participants.[footnote 13]

Table 3 includes two presentations of the estimated effects. The “Estimated effect” column describes the size of the estimated effect in units of the original outcome variables. In addition, the “Standardised effect” column describes the size of the estimated effects in standard deviation units of the outcomes as measured from the control group at endline. The standardised effect sizes make it easier to facilitate comparisons of treatment effects across our outcome variables, which are all measured on different scales.

| Outcome | Estimated effect | 95% Confidence interval | Standardised effect | n | P-value |

|---|---|---|---|---|---|

| Confidence in Delivering Evaluation Training | 0.84*** | [0.6, 1.08] | 0.92 | 114 | 0.00 |

| Size of Evaluation Network | 7.97*** | [2.23, 13.99] | 1.25 | 114 | 0.00 |

| Evaluation Knowledge | 0.03 | [-0.01, 0.07] | 0.22 | 114 | 0.07 |

- p < 0.05, ** p < 0.01, *** p < 0.001

Table 3: OLS regression estimates for outcome variables (confidence, network size and knowledge) at endline. Covariates include the baseline measures of the relevant outcome and participants’ department and grade at baseline. Standardised effect sizes represent control-group standard deviation differences between treatment and control.

Comparison of Standardised Treatment Effects Across Outcomes

Point estimates with 95% Confidence Intervals

Figure 2: Standardised treatment effects across the three outcome variables (confidence, network size and knowledge) at endline.

Primary Outcomes

The results demonstrate that participating in stage 1 of the Evaluation Academy had significant impacts on both of the primary outcome variables.

For the confidence outcome, the estimated effect is 0.84 which indicates that those assigned to participating in the Academy reported confidence in delivering evaluation training at endline that, on average, was 0.84 points higher than those assigned to the control group. Given that the confidence variable is measured on a 5 point scale, this represents a substantive difference between the means of the two groups. This difference is statistically significant at the 99% level. The standardised effect size for the confidence variable was 0.92 of the standard deviation of the control group at the endline which is typically considered to be a large effect size.

For the network outcome, the estimated effect is 7.97. This indicates that those assigned to participate in the Academy reported an additional 8 people in their cross-government evaluation network relative to those assigned to the control group (a standardized effect size of 1.25 times the standard deviation of the control group at endline). This difference is also statistically significant at the 99% level.

Together, these effect sizes are large in magnitude and, despite the relatively small sample size in the trial, sufficiently precisely estimated for us to be able to comfortably reject the null hypothesis of no effect. This provides strong evidence that the Evaluation Academy training caused an increase in the self-reported confidence of those assigned to participate, as well as an increase in the self-reported size of their evaluation networks.

Secondary Outcome

Table 3 and Figure 2 reveal that, at endline, those assigned to participating in the Academy scored 3 percentage points higher on the evaluation knowledge battery than those assigned to the control group. However, while the confidence intervals on this effect are sufficiently narrow that we can rule out meaningful negative effects of the Academy of evaluation knowledge, we are not able to rule out the possibility of null effects as the difference we estimate is not statistically significant at conventional levels. This finding is consistent with the discussion above which indicated that knowledge gains from the first stage of the Academy are likely to be relatively hard to achieve, given that the sample of participants is expected to have a high level of evaluation knowledge before beginning the training.

2.10 Addressing Threats to Inference

Non-compliance: Some treatment group participants failed to attend the Academy despite being allocated a place.[footnote 14] Although the level of non-compliance was relatively modest (11.6% of those allocated to the treatment group failed to participate in the Academy), we mitigate the concern that non-compliance could bias our effect estimates in annex section 6.7 by estimating Complier Average Treatment Effects (CATEs) using a two-stage least squares approach as described in Gerber and Green (2012, 151). The treatment effects estimated from this approach are substantively and statistically very similar to those presented in the section above.

Attrition: As documented in section 2.7, there was limited attrition between baseline and endline in either the treatment or the control group. Nevertheless, to ensure that the estimates presented above are not biased by the slight differential attrition between treatment and control, in annex section 6.7 we calculate “worse-case” or “extreme-value” bounds on the treatment effects for all three outcome variables (Lee 2009). Extreme-value bounds on the treatment effects assess the potential consequences of differential attrition by examining how the estimated treatment effect would vary when making different assumptions about the missing outcomes that arise due to non-response. The results of this analysis indicate that the substantive conclusions drawn from the main analysis are very unlikely to be affected by differential attrition.

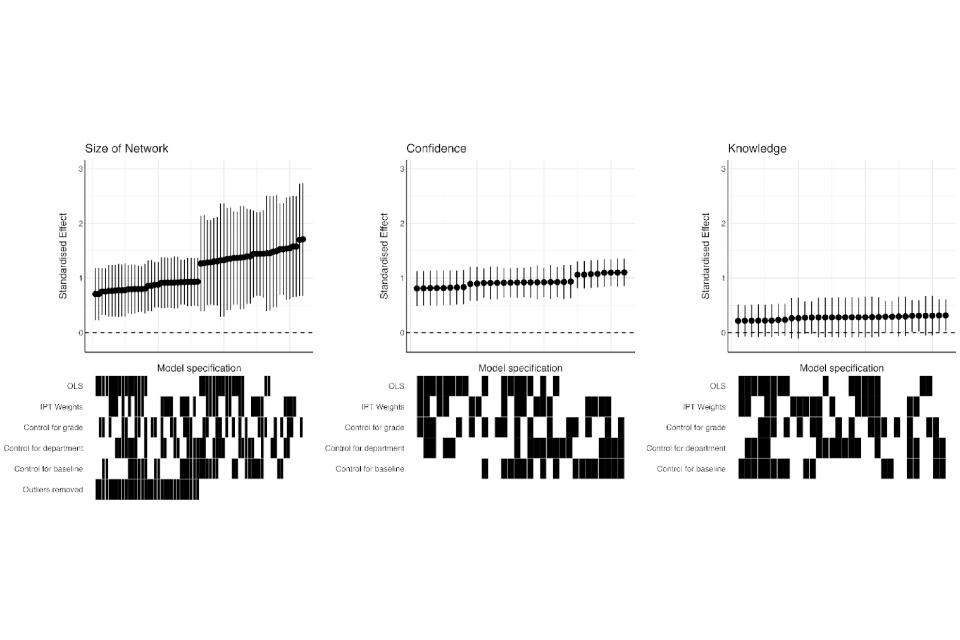

Robustness: All quantitative analyses require making analytical decisions which can be consequential for the resulting estimates. The analysis presented above follows the statistical analysis plan that we preregistered before commencing endline data collection (Beckett et al. 2025). Nevertheless, it is possible that the estimates we present would have changed had we made different analysis decisions. In order to assess this possibility, annex section 6.8 presents the results of a multiverse analysis (Steegen et al. 2016). A multiverse analysis is a systematic approach to conducting robustness checks for an evaluation in which the analyst identifies all reasonable analytical choices that could have been included in a statistical analysis plan and systematically produces and reports results for each possible combination of these choices. The multiverse analysis we conduct reveals that the conclusions reported above are insensitive to the specific analytical decisions we employ.

2.11 Limitations

There are limitations to the findings reported above. First, the outcome measures are self-reported survey responses which may overstate effects if participants felt pressure to demonstrate improvements. Future evaluations should consider more objective approaches to measuring the outcomes of focus here. Second, the endline survey environment was different for treatment and control participants in that the treatment group completed the endline survey in person in exam conditions whereas the control completed it independently online. Future evaluations should consider whether a more consistent approach could be taken without impacting on the response rates of the endline survey. Third, the trial focused on only a subset of the short-term outcomes articulated in the Academy’s Theory of Change, and did not assess whether improvements in confidence and networks translate into higher-quality training delivery within departments. Nor does the current design allow assessment of the Academy’s longer-term impact on evaluation capability across government. Future evaluation should therefore prioritise assessing stage 2 of the Academy, tracking how participants apply their training within departments, and investigating whether short-term gains in confidence and networks translate into sustained improvements in evaluation quality and capability across government.

2.12 Summary

The results of the impact evaluation indicate that stage 1 of the Evaluation Academy was successful in achieving its primary short-term objectives. Participants assigned to the July 2025 cohort reported substantially higher levels of confidence in delivering evaluation training (average increase of 1-point on a 5-point scale) and a marked increase in the size of their cross-government evaluation networks (an average increase of 8 people). These effects were large in magnitude, precisely estimated, and robust to alternative analytical strategies, providing strong evidence that the Academy training produced meaningful improvements in these outcomes.

The evaluation found limited impacts on participants’ technical knowledge of evaluation methods and processes. This is consistent with the expectations outlined in the sections above. Participants selected for stage 1 of the Academy were expected to have existing evaluation expertise, and stage 1 was designed to improve the ability to teach and disseminate evaluation content, rather than to build basic evaluation knowledge. The absence of large knowledge effects could serve as confirmation that the programme was appropriately targeted at civil servants with pre-existing evaluation knowledge. However, further research should investigate other potential reasons for the absence of large knowledge effects given that the baseline results did show the potential for significant improvements.

3. Implementation and Process Evaluation

3.1 Overview of the approach

In addition to the impact evaluation, we also conducted an implementation and process evaluation (IPE) to understand participant and teacher experiences of the Academy. The IPE aims to inform future delivery of the Academy through understanding any barriers and facilitators to participation and successful implementation. The delivery of the Academy has been iterated over the two previous years of delivery, taking on board research with participants and trainers about the effectiveness of its design. However, the aim of this IPE is to provide further useful insights to shape the ongoing delivery of the Evaluation Academy which, in turn, will improve participant ability to engage effectively in the training.

The research questions for the IPE are:

- Was the Academy delivered in the way that was envisaged?

- What were the key enablers and barriers to effective participation in the Academy?

- What were participants’ experiences of attending the Academy?

- What are participants’ recommendations for improvement of the training in the future?

We collected and triangulated a variety of data sources (see next section) to draw conclusions about participant experiences and answer these research questions.

3.2 Methodology

This IPE relied on data from five separate sources which are outlined in Table 4 below.

| Data type | Source | Information included | Sample Size |

|---|---|---|---|

| Monitoring data | Registration form | - Department - Grade - Job role |

59 |

| Qualitative feedback from participants on the Academy | An anonymous feedback survey completed post-Academy in participants’ own time. | A battery of 9 questions outlined in annex 6.4. These are a mixture of multiple choice and free-text responses. | 25 (42% response rate) |

| Qualitative feedback from teachers on the Academy | Feedback form for teachers completed at the end/during sessions | - What overall feedback do you have about the delivery of the Evaluation Academy? - Was there any content in your module that should have been included? - Were there any mistakes or needs that you spotted that should be changed before next year? |

25 |

| Qualitative feedback from teachers on the Academy | 60 minute focus group session with ETF teachers | - What went well/less well in the delivery of the Academy? - What were the barriers/facilitators to participant engagement in the Academy? - Is there anything that you would change about the delivery of the Academy for next year? |

15 |

| Observations | Observations from Evaluation Academy organisers | - Notes taken by organisers across the 5 days | 2 |

Table 4: Data types, data source, data points, and sample size for the data collected and used for the IPE.

In order to contextualise the qualitative analysis in this process evaluation, monitoring data was first analysed to produce descriptive statistics of the types of participants taking part in the Academy. Qualitative data from participant and teacher feedback was then triangulated with observations from Evaluation Academy organisers to explore themes relating to each of the four research questions. This method was chosen with careful consideration, given that participants were engaging in an intensive training programme and taking part in a trial which required surveying, so researchers were reluctant to add additional burden by requesting them to engage in lengthy interviews or focus groups.

All qualitative data outlined in Table 4 was analysed by internal ETF researchers, and quality assured externally by Cabinet Office analysts. Qualitative data was analysed to explore teachers’ and participants’ experiences, views, and perceptions. During this process, care was taken to ensure that information shared during surveys, interviews and focus groups does not contain identifiable data.

3.3 Results

3.3.1 Research Question 1

Was the Academy delivered in the way that was envisaged?

The July 2025 Evaluation Academy was developed with a number of intentional design choices to support the delivery of its intended outcomes.

Size of Evaluation Network

The Academy was intentionally designed by the ETF to be delivered in person, with time allocated for networking, and intentionally mixed groupings in order to support the intended outcome of strengthened networks for attendees.

An external facilitator has been commissioned by the ETF over the last three years to facilitate the Academy train-the-trainer course and these facilitators are well placed to provide insights into whether the course was delivered as intended. The facilitators observed that the July 2025 Academy had the highest standard of participant delivery that they had seen to date. Both facilitators and ETF organisers observed that the choice to split the Academy across two weeks and to include more breaks throughout the day compared to previous years allowed attendees more space and time to prepare for their masterclasses, as well as absorb and reflect on what they had learnt. In order to embed these additional breaks into the agenda, teaching times were reduced compared to previous years. This was an intentional design choice by the ETF which involved trade-off between networking and teaching.

The ETF decided that more time for networking was crucial to the Academy delivering its intended primary outcomes and so should be given more emphasis. However, as a result of this, facilitators and ETF organisers observed that some sessions overran by up to 10 minutes (particularly the technical teach-in sessions). When asked how the Academy could be improved, participants and teachers felt that some sessions had too much content for the allocated time and should be extended. As a result of these sessions overrunning, some participants did not get as much networking time as originally intended. Future delivery design should consider how to avoid these overrunning sessions to ensure that the networking time is prioritised given the link to the primary outcome of the Academy.

Another design feature of the Academy was the intentionally mixed participant groupings from a diverse range of departments. In July 2025, 57 participants attended the Evaluation Academy training. Participants attended from 22 government departments, devolved governments, and government organisations. Table 5 shows the breakdown of attendees per organisation. This range of organisations was as intended in the design to support participants to grow their networks with other evaluators.

| Department/Organisation | Number of attendees |

|---|---|

| Cabinet Office | 5 |

| Crown Prosecution Service | 1 |

| Department for Business and Trade | 4 |

| Department for Environment, Farming, and Rural Affairs | 2 |

| Department for Education | 2 |

| Department for Health and Social Care | 2 |

| Department for Work and Pensions | 4 |

| Foreign, Commonwealth, and Development Office | 4 |

| Food Standard Agency | 2 |

| Government Office for Science | 1 |

| HM Revenue and Customs | 3 |

| HM Treasury | 2 |

| Home Office | 4 |

| Intellectual Property Office | 2 |

| Ministry for Housing, Communities, and Local Government | 2 |

| Ministry of Defence | 3 |

| Office of Gas and Electricity Markets | 4 |

| Office for National Statistics | 1 |

| Scottish Government | 3 |

| UK Health Security Agency | 3 |

| Valuation Office Agency | 1 |

| Welsh Government | 2 |

| Total attendees: | 57 |

Table 5: Table to show the number of Academy attendees from each of the 22 participating Government Organisations.

Confidence in Delivering Evaluation Training

The Academy was also designed to very quickly build participant confidence to deliver training themselves by employing an in person, hands-on approach to teaching over five days. This involves participants presenting from day one of the course and being pushed out of their natural comfort zones where required.

Six participants dropped-out of the Academy ahead of the training’s commencement due to being unable to get time away from their day-to-day work. Due to the intense nature of the course, it is not possible to partially attend the course and this requirement could have encouraged last-minute dropouts. Non-attendance could have been due to the timing of the Academy, which coincided with a period after the Spending Review and before Summer Recess began. As a result of the drop-outs, 57 out of a potential 64 Evaluation Academy places were taken up in July 2025.[footnote 15] For the 57 participants who attended, all 10 modules of the Academy were delivered across five days as intended (please see annex 6.5 for the full July 2025 Academy agenda).

As discussed in section 1.1, stage 1 of the Academy is designed to teach analysts who have pre-existing evaluation knowledge to teach others about evaluation. Another intentional design choice for the Academy was that participants were required to have around two years of experience in evaluation roles so that they could keep up with the pace of the course and focus learning on how to deliver, rather than learn, the technical content. As a consequence, attendees to the Academy are likely to be of a higher civil service grade given the need for more experience. However, participants may not have experience in all aspects of evaluation knowledge, leaving room for improvement in evaluation knowledge as a secondary outcome of the Academy.

Participants were from several civil service grades, ranging from Higher Executive Officer to Grade 6. Almost all attendees (98%) were below Grade 6, with the majority (86%) being either Senior Executive Officers or Grade 7s. See Figure 3 below for a full grade breakdown. This means that the majority of participants were in mid or senior level management roles as expected given the requirement to have existing evaluation knowledge at attend stage 1 of the Academy.

Attendees by grade (n=56)

| Grade | Percentage of attendees |

|---|---|

| HEO | 13% |

| SEO | 41% |

| Grade 7 | 45% |

| Grade 6 | 2% |

Figure 3: Bar chart showing the proportion of Evaluation Academy attendees by grade, ranging from HEO to Grade 6.

Evaluation Knowledge

Despite the expectation that participants have existing experience in evaluation, the ETF made the design choice to include ‘teach-in sessions’ for each module to ensure that those with knowledge gaps in specific topics could be brought up to speed. The teach-in sessions involved evaluation experts running through the module content in their own teaching style, and answering technical questions while also providing tips on delivering the training. Participants also received supplementary materials with additional technical information, case studies, and frequently asked questions to support their learning.

In conclusion, the Evaluation Academy was developed with a number of intentional design choices to support the delivery of key outcomes in the theory of change:

- Size of Evaluation Network: Additional breaks, mixed groupings and an in person format to support enhanced networking opportunities;

- Confidence in Delivering Evaluation Training: In person, hands on activities, delivered to analysts with existing evaluation experience from the first day supported rapid confidence building for presenting;

- Evaluation Knowledge: Teach-in sessions and supplementary materials supported upskilling attendees in evaluation methods.

3.3.2 Research Question 2

What were the key enablers and barriers to effective participation in the Academy?

There were several key enablers and barriers to effective participation in the Academy which were highlighted by participants and teachers in their feedback. These can be divided into overarching factors as well as those that relate to the three outcomes of interest for this evaluation. The below analysis explores each in detail. A summary of the barriers and facilitators for each outcome is summarised in Table 6 below.

| Theme | Enablers | Barriers |

|---|---|---|

| Overarching factors | - Well organised | - Temperature |

| Size of network | - In person - ETAP support |

|

| Confidence in delivering evaluation training | - Academy facilitator - Observing a range of teaching styles - Senior speakers |

- Pace/intensity - Assumed prior knowledge - Wordy slidepacks |

| Evaluation knowledge | - ETAP support |

Table 6: Summary table of the enablers and barriers to participation for participants of the Evaluation Academy.

Overarching factors

Participants and teachers both reported that the Academy was well organised which helped the course run smoothly from both participant and teacher perspectives. However, a barrier to participation for participants was the temperature on the first two days of the course because it was during a heatwave and the building did not have air conditioning in the majority of its rooms. This was coupled with a more limited access to water, as participants needed to be escorted to fill water bottles due to security restrictions. This made concentration difficult for some attendees on those first two days.

Prior to the Academy being delivered, there were concerns about participant engagement in the Academy content given that they would have access to their work laptops. In the first two train-the-trainer Academies, all materials had been printed and no laptops were allowed for attendees. However, this year the ETF chose not to print materials due to sustainability and financial considerations. This posed a risk that attendees would be distracted by messages from colleagues in their day jobs. Facilitators did observe some participants with work content open on their laptops. However, they did not observe this as a barrier to participation nor did attendees or teachers note this as an issue in their feedback.

Size of Evaluation Network

As discussed in the previous section, there were a number of intentional course design choices which were made to support participants to network. Participants and teachers both expressed that having training delivered in person across five days was beneficial for networking but also to keep learners engaged in the content more easily. Furthermore, ETF teachers who had also supported the Academy, noticed that the format, which did not place sessions across lunch breaks, allowed for more networking opportunities and collaboration. Many participants also noted that the Academy’s mixed groupings helped them to form new networks across departments and engage with people that they would not have encountered otherwise. Academy groups were between eight to ten people, with a mix of individuals from different departments. The participants also set up a Whatsapp group and two social meet-ups throughout the week for the whole cohort of their own volition which showed the strength of connection made. This in person, collaborative aspect of the course design was a key enabler for the primary outcome of increasing participant networks. This compliments the findings from the IE which found that participants’ networks increased by eight people on average.

The Academy also enlisted the support of evaluation experts from the Evaluation and Trials Advice Panel (ETAP) to teach the technical content alongside ETF members. Participants reported that they found ETAP involvement in the Academy important for building a network of subject matter experts.

Confidence in Delivering Evaluation Training

In their feedback about the Academy experience, many participants noted that the course was busy and intense but that this did not feel overwhelming once it began. One participant stated, “It felt a bit like being thrown in at the deep end - but in a good way!”. This reflects the intentional design choices as mentioned in the previous section to design a course which quickly builds confidence by rapidly pushing people to present materials to a group. One participant stated, “teaching right from the start was good as it meant by the time we got to the masterclass I didn’t feel at all nervous about presenting”. For a small proportion of participants, however, the pace and intensity was more of a barrier to confidence building because it moved too quickly, stretching them too far out of their comfort zone.

One major enabler reported by participants for effective participation which led to confidence building was the involvement of the Academy facilitator. Many participants named the expert facilitator directly as a key contributor to their positive learning experience, both through his specific sessions and general supportive nature. The facilitator built a supportive culture for the course right from the beginning, setting norms and expectations for learner participation. The facilitator also led many group sessions focused on giving participants practical tips for how to effectively engage audiences and teach content. They not only built confidence by reassuring participants that the course was designed to be challenging, but also by providing them with the practical skills to deliver training themselves. The facilitator was a key enabler for participation for participants linked to the primary outcome of building confidence.

Another enabler for confidence building reported by participants was the ability to observe a range of teaching styles from the ETF, ETAP, and each other. Participants felt that seeing this diversity in approaches and being able to discern which worked best for them made them more able and more confident to stand up and deliver the masterclass themselves. Participants also noted that engagement from senior speakers including Catherine Little[footnote 16], Nick Donlevy[footnote 17] and David Halpern[footnote 18] helped to keep them engaged and focused on the key mission of the Academy which is to ultimately support better evaluation across government. These speeches, which were spaced throughout the week, were designed to motivate participants by reminding them periodically about the importance of evaluation in decision making. Having this ultimate aim clear throughout the course supported participants to be confident in delivering this training as it was clearly supported by visible senior stakeholders.

One barrier to participation related specifically to confidence in delivering evaluation training was that some participants felt that there was too much assumed prior knowledge. The Academy is designed for individuals who have been working in evaluation for at least two years, and is not marketed as an evaluation training. The premise of the course is to teach participants how to teach methods to others. However, some participants felt that they did not have enough baseline evaluation knowledge to confidently present technical evaluation information. On the other hand, in the optional post-Academy feedback survey, the majority of respondents (76%) reported that they felt the content was about right for their level of experience and only 12% reported that it was too advanced (see Figure 4). As discussed in annex section 6.6.1, participants’ evaluation knowledge prior to the intervention (as measured in our baseline survey) was shown to be reasonably good in relation to the level of questions asked, with some variation across the sample. This variation is consistent with the pattern shown in the qualitative research that the majority of participants found the level of required prior knowledge about right but a small proportion found it too advanced and a barrier to their effective participation. It is important to note that the same proportion of respondents rated the training as too basic as rated it as too advanced. There is a careful balance to strike in ensuring that the course content is of sufficient quality and detail whilst not overburdening the majority of participants.

Thinking about the three sessions as a whole (Teach-in, Delivery Practice and Coaching, and Masterclasses), how would you rate the training in relation to your level of experience?

| Response | Percentage of respondents |

|---|---|

| Too basic | 12% |

| About right | 76% |

| Too advanced | 12% |

Figure 4: Bar chart showing responses to the optional Academy feedback survey. This question asked “Thinking about the three sessions as a whole (Teach-In, Delivery Practice and Coaching, and Masterclasses), how would you rate the training in relation to your level of experience?”. The total number of responses for this question was 25.

One other barrier to participation related to confidence in delivering evaluation training that participants cited in the post-Academy survey was the design of the module slidepacks. Participants noted that the slides were often too text-heavy and some animations were broken which meant that they found them challenging to present or understand. Our ETAP and ETF teachers also gave similar feedback on the slides, adding that some of the content could be refreshed to allow for easier teaching and more up to date examples.

Evaluation Knowledge

Whilst the pace of the course acted as an enabler to quickly build participant confidence in delivering evaluation training, for some it acted as a barrier to knowledge building as it did not leave much time for additional learning. A small number of students felt that there was too much assumed prior knowledge, which both impacted confidence and knowledge building. The impact evaluation revealed no negative impact on knowledge caused by the Academy training, however, the intentional design choice for the Academy to get participants quickly presenting across a range of modules, may have limited participant ability to make significant positive shifts in their evaluation knowledge.

An important enabler for participation related to evaluation knowledge building highlighted by participants in their feedback was ETAP teachers. Participants commented on the benefit of being able to ask in depth, technical questions to these volunteers to expand their technical knowledge beyond the baseline content. ETAP members were also able to bring a wealth of experience in teaching evaluation methodologies to the sessions, and alongside the ETF’s experience in applying this to a government context, participants found the combination to be helpful. Both participants and teachers remarked that some more consistency in teachers across the week might have made the content delivery easier and built even stronger relationships. ETAP involvement in the course design was a key enabler for the secondary outcomes of increasing evaluation knowledge, however, this could be enhanced further in the future by having more consistent teaching throughout the week.

3.3.3 Research Question 3

What were participants’ experiences of attending the Academy?

Whilst it is important to understand whether the Academy was delivered as expected and any barriers/enablers for participation, it is also crucial to understand the participant experience of attending. During the five days of the Academy, ETF organisers observed participants being vocal about how much they were enjoying attending an in-person event and meeting new people. On day 1, there was a great sense of anticipation and some nervousness about the task at hand. However, confidence grew throughout the week as did connections between attendees and by the final day there was a sense of accomplishment across the group. One participant remarked that it was the best training they have ever attended. This growth in confidence is consistent with the significant increase in confidence found in the impact evaluation.

In a post-Academy optional feedback survey, we asked participants about their experiences on the course. Firstly, we asked about how useful they found the supplementary sessions run by the facilitator. These sessions were focused on teaching participants how to effectively facilitate the Academy modules in their home departments and ensure that what they teach stays with their learners. 60% of the respondents to this question rated the facilitator’s sessions as extremely useful for preparing them to facilitate the Academy modules (see Figure 5 below). During the course, participants also gave verbal feedback that these sessions with the facilitator were also valuable for preparing them for the masterclass sessions in the second week. Attendees did report that they would have liked some more advice on how to deliver training effectively in an online or hybrid environment. They noted that this is most likely how they will need to deliver their training sessions and so they called for more advice on how to do this effectively.

Thinking about the workshops that the facilitator led, how useful were they to help prepare you to facilitate the Academy Modules?

| Score (5=extremely useful, 1=not useful) | Count (n=25) |

|---|---|

| 1 | 0 |

| 2 | 0 |

| 3 | 3 |

| 4 | 7 |

| 5 | 15 |

Figure 5: A bar chart showing responses to a question in the post-Academy optional feedback survey which asked “Thinking about the workshops that the facilitator led, how useful were they to help prepare you to facilitate the Academy modules?”. The total number of responses for this question was 25.

In the same survey, participants were also asked to rate how useful the three different types of module sessions (Teach-in, Delivery Practice and Coaching, and Masterclass) were for preparing them to deliver the Academy. Sessions were rated on a scale of 1 to 5 where 1 was equal to not at all useful and 5 was equal to extremely useful. Figure 6 shows the responses to this question for each type of session.

The masterclass session had the highest proportion of respondents rate it as extremely useful (56%). This is likely due to the fact that this session is designed as a dress rehearsal for the delivery of the Academy in participants’ home departments. The teach-in and delivery practice and coaching had much lower proportions of respondents ranking them as extremely useful with 8% and 20% respectively.

The teach-in sessions had a mixed response from respondents about their usefulness. They had the highest proportion (52%) of respondents rating it 3 or below. However, it also had 48% of respondents rating it 4 or 5. Therefore, we can determine that there was a mix of opinions on these sessions. This might be due to the range of prior evaluation knowledge held by attendees. Those with lower evaluation knowledge may have found teach-in sessions more useful to upskill them on the content while those with a higher existing evaluation knowledge may have found these sessions less useful as they were already familiar with the content being taught in these sessions.

The delivery practice and coaching session also split opinions across the respondents with 36% of respondents rating it 3 or lower and 64% rating it above 3. In the anonymous feedback survey, respondents reported that these sessions were helpful for gaining more confidence in presenting to others and getting feedback on different approaches. However, participants also reported that the usefulness of these sessions are limited because no time is given between the teach-in session to prepare for the delivery practice. Participants also reported a range of experiences in the coaching from teachers in that some provided hugely helpful feedback and pointers, whereas others were more reluctant to share constructive feedback. These anecdotal accounts might explain the range of responses to this question.

Thinking about the three different types of sessions, how useful were they in preparing you for delivering the Evaluation Academy?

| Score (1=not at all, 5=extremely useful) | Teach-in Session - Count (n=25) | Delivery Practice and Coaching Session - Count (n=25) | Masterclass session - Count (n=25) |

|---|---|---|---|

| 1 | 1 | 1 | 1 |

| 2 | 4 | 2 | 0 |

| 3 | 8 | 6 | 2 |

| 4 | 10 | 11 | 8 |

| 5 | 2 | 5 | 14 |

Figure 6: Bar chart showing responses in an optional post-Academy feedback survey to the question “Thinking about the Teach-in, Delivery Practice and Coaching, and Masterclass sessions. How useful were they to help prepare you to facilitate the Academy Modules? (5=extremely useful, 1=not useful)”. The total number of responses for this question was 25.

One further question asked participants to what extent they could apply what they have learnt to their work. Respondents were asked to rate this on a scale of 1 to 5 where 1 was equal to not at all and 5 was equal to a lot. 84% of respondents rated a 4 or higher for this question (see Figure 7). This suggests that the majority of respondents found what they learnt during the Academy to be applicable in their daily work. This is likely due to the fact that many respondents work in evaluation roles across government and because the general presentation skills taught on the course are also useful for non-evaluation roles.

Thinking about the overall experience, to what extent do you think you can apply what you’ve learned to your work?

| Score (5=a lot, 1=not at all) | Count (n=25) |

|---|---|

| 1 | 0 |

| 2 | 2 |

| 3 | 2 |

| 4 | 10 |

| 5 | 11 |

Figure 7: Bar chart showing responses to a question in the post-Academy feedback survey which asked “Thinking about your overall experience, to what extent do you think you can apply what you’ve learned to your work? (1=not at all, 5=a lot)”. The total number of responses for this question was 25.

3.3.4 Research Question 4

What are participants’ recommendations for improvement of the training in the future?

Participants made several recommendations for how to improve the training for future cohorts. In the optional feedback survey, respondents were asked about one thing they would change about the Academy. Three of the recommendations were related to the primary outcome of confidence in delivering evaluation training: