Quality and Methodology Information for the Civil Service People Survey 2023

Updated 29 August 2024

Civil Service People Survey 2023: Technical Summary

Coverage

In 2023, 103 Civil Service organisations took part in the survey. The section “Participating Organisations” provides more details on coverage.

A total of 549,574 people were invited to take part in the 2023 survey and 356,715 participated, a response rate of 65%.
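The response rate quoted above follows directly from the two counts. A minimal sketch of the arithmetic, using only the figures stated in the text (the variable names are illustrative):

```python
# Figures quoted in the text for the 2023 survey.
invited = 549_574
participated = 356_715

# Response rate as a percentage of those invited.
response_rate = participated / invited * 100

# Rounded to the nearest whole percent, as reported.
print(f"Response rate: {round(response_rate)}%")
```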

The census approach used by the survey (where all staff are invited to participate) allows us to produce reports for managers and teams so that action can be taken at all of the most appropriate levels across the Civil Service.

Note, these figures do not reconcile with Official Statistics about the size of the Civil Service due to different decisions about who is invited to participate in the People Survey and who is counted in Official Statistics.

Coordination and delivery of the survey

The survey is coordinated by the Civil Service People Survey Team in the Cabinet Office. The team commissions a central contract on behalf of the Civil Service and acts as the central liaison between the independent survey supplier and participating organisations. The 2023 survey was the fourth delivered by Qualtrics.

Questionnaire

Most of the questionnaire used in the Civil Service People Survey is standardised across all participating organisations. The section “Questionnaire and Question Development” provides more details on the questionnaire.

Data collection methodology

The questionnaire is self-completed, with 99% of respondents completing it online and less than 1% on paper. Completion of every question in the survey is voluntary. Fieldwork for the 2023 survey opened on 19 September 2023 and closed on 23 October 2023.

Analysis

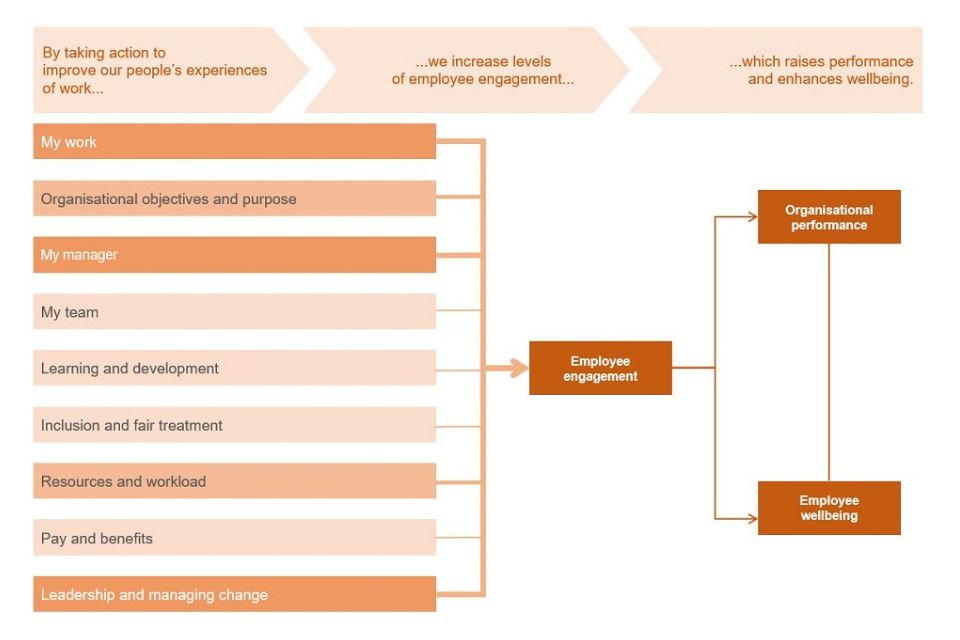

The framework underpinning the analysis of the Civil Service People Survey is based on understanding the levels of employee engagement within the Civil Service and the experiences of work which influence engagement. The “Employee Engagement” section provides more details on the engagement index, while the “Wellbeing indices” section provides more details on the wellbeing indices.

Publication

Results from the 2023 People Survey will be published on GOV.UK during 2024:

Civil Service People Survey hub

Participating Organisations

The Civil Service People Survey can be considered both as a single survey and as a large number of separate surveys.

The People Survey can be considered a single survey because:

- It is commissioned by the Cabinet Office, on behalf of the UK Civil Service, as a single contract with a single supplier.
- The majority of questions respondents are asked are the same, irrespective of the Civil Service organisation they work for.
- The data is collected, collated and analysed as a single activity.
- The survey takes place at the same time across all organisations.

However, the People Survey can also be considered a collection of separate surveys because:

- The core questionnaire includes a ‘variable term’ meaning that certain questions include the name of the organisation. Rather than “Senior managers in my organisation are sufficiently visible”, respondents in the Cabinet Office, for example, are asked “Senior managers in the Cabinet Office are sufficiently visible” while respondents in the Crown Commercial Service are asked “Senior managers in the Crown Commercial Service are sufficiently visible”.
- Organisations are able to select up to three sets of additional questions that focus on topics of particular interest/relevance to that organisation.
- Organisations define their own reporting hierarchy and structure.
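The ‘variable term’ described in the first point above amounts to simple text substitution. The sketch below is illustrative only: the template placeholder and the function name `render_question` are hypothetical and do not describe how the survey supplier implements it.

```python
# Hypothetical template for one core question; {organisation} is the variable term.
QUESTION_TEMPLATE = "Senior managers in {organisation} are sufficiently visible"


def render_question(template, organisation_name=None):
    """Return the question wording for one organisation.

    Falls back to the generic wording when no organisation name is supplied.
    """
    return template.format(organisation=organisation_name or "my organisation")


# Wording seen by respondents in two different organisations.
cabinet_office_wording = render_question(QUESTION_TEMPLATE, "the Cabinet Office")
ccs_wording = render_question(QUESTION_TEMPLATE, "the Crown Commercial Service")
generic_wording = render_question(QUESTION_TEMPLATE)
```

Because only the organisation name varies, responses to the same underlying question can still be compared across organisations.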

For the purposes of the People Survey an “organisation” is typically a government department or executive agency, and it is usually the case that an executive agency participates separately from its parent department.

However, in some cases it may be more practical or effective for a department and its agencies to participate together as a single organisation. Alternatively, it may be that particular sub-entities of a department participate as a standalone organisation, even though they may not be a fully organisationally separate entity from their parent department.

Below are listed all 103 “participating organisations” that took part in the 2023 survey, grouped by departmental/organisational family. Where a family has a single entry, that family completed a single survey. Where a family has several bodies listed beneath it, each of those bodies completed a separate survey.

Organisations participating in the 2023 Civil Service People Survey:

Attorney General’s Departments

Attorney General’s Office

Crown Prosecution Service

Government Legal Department

HM Crown Prosecution Service Inspectorate

Serious Fraud Office

Cabinet Office

Cabinet Office

Crown Commercial Service

Government Property Agency

Charity Commission

Defence

Ministry of Defence

Defence Equipment & Support

Defence Science and Technology Laboratory

Submarine Delivery Agency

UK Hydrographic Office

Culture, Media & Sport

Department for Culture, Media & Sport

The National Archives

Department for Business and Trade

Acas

Department for Business and Trade

Companies House

Competition and Markets Authority

The Insolvency Service

Department for Education

Department for Education

Institute for Apprenticeships and Technical Education

Department for Energy Security and Net Zero

Department for Science, Innovation and Technology

Department for Science, Innovation and Technology

Building Digital UK

Intellectual Property Office

Met Office

UK Space Agency

Environment, Food and Rural Affairs

Department for Environment, Food and Rural Affairs

Animal and Plant Health Agency

Centre for Environment, Fisheries and Aquaculture Science

Rural Payments Agency

Veterinary Medicines Directorate

Estyn

Food Standards Agency

Foreign, Commonwealth and Development Office

Foreign, Commonwealth and Development Office

FCDO Services

Wilton Park

Government Actuary’s Department

Health and Social Care

Department of Health and Social Care

Medicines and Healthcare products Regulatory Agency

UK Health Security Agency

HM Inspectorate of Constabulary and Fire & Rescue Services

HM Revenue & Customs

HM Revenue & Customs

Valuation Office Agency

HM Treasury and Chancellor’s departments

HM Treasury

Government Internal Audit Agency

National Infrastructure Commission

UK Debt Management Office

Home Office

Justice

Ministry of Justice

Criminal Injuries Compensation Authority

HM Courts and Tribunals Service

HMPPS: Headquarters

HMPPS: Her Majesty’s Prison Service and Youth Custody Service

HMPPS: Probation Service

Legal Aid Agency

MoJ Arms Length and Other Bodies

Office of the Public Guardian

Levelling Up, Housing and Communities

Department for Levelling Up, Housing and Communities

Planning Inspectorate

HM Land Registry

National Crime Agency

National Savings and Investments

Office of Rail and Road

Ofgem

Office of Qualification and Examinations Regulation

Ofsted

Scottish Government

Scottish Government

Accountant in Bankruptcy

Crown Office and Procurator Fiscal Service

Disclosure Scotland

Education Scotland

Food Standards Scotland

Forestry and Land Scotland

National Records of Scotland

Office of the Scottish Charity Regulator

Registers of Scotland

Revenue Scotland

Scottish Courts and Tribunal Service

Scottish Forestry

Scottish Housing Regulator

Scottish Prison Service

Scottish Public Pensions Agency

Social Security Scotland

Student Awards Agency for Scotland

Transport Scotland

Territorial Offices

Scotland Office, Office of the Advocate General, Wales Office and Northern Ireland Office

Transport

Active Travel England

Department for Transport

Driver and Vehicle Licensing Agency

Driver and Vehicle Standards Agency

Maritime and Coastguard Agency

Vehicle Certification Agency

UK Export Finance

UK Statistics Authority

UK Statistics Authority

Office for National Statistics

Water Services Regulation Authority (Ofwat)

Welsh Government: Llywodraeth Cymru

Welsh Revenue Authority

Work and Pensions

Department for Work and Pensions

Health and Safety Executive

Questionnaire and question development

Questionnaire structure

The Civil Service People Survey comprises three sections:

- Core attitudinal questions
- Local optional attitudinal questions
- Demographic questions

The core attitudinal questions

The core attitudinal questions cover perceptions and experiences of working for a Civil Service organisation; future intentions to stay or leave; awareness of the Civil Service Code, Civil Service Vision and the Civil Service Leadership Statement; experiences of discrimination, bullying and harassment; and ratings of individual subjective wellbeing. The core attitudinal questions also include an opportunity to provide free-text comments.

The core attitudinal questions include the five questions that are used to calculate the survey’s headline measure, the “Employee Engagement Index”. A large number of the core attitudinal questions have been grouped, using factor analysis (a statistical technique to explore the relationship between questions), into nine themes that are associated with influencing levels of employee engagement; taking action on these themes will lead to increases in employee engagement. You can read more about employee engagement and the Civil Service People Survey in the section called “Employee Engagement”.

The majority of the core attitudinal questions are asked on a 5-point scale of strongly agree to strongly disagree.

Local optional attitudinal questions

The core attitudinal questions are a set of common questions that provide an overview of working for an organisation, and are generally applicable to any working environment.

However, there may be topics that particular organisations want to explore in more detail, therefore each participating organisation can select up to three short blocks of additional attitudinal questions that have been standardised.

Demographic questions

The core demographic questions collect information from respondents about their job and personal characteristics such as their working location and age. These questions are used to filter and compare results within organisations by different demographic characteristics so that results can be better understood and action targeted appropriately. The vast majority of demographic questions are standardised across the survey to enable analysis not only at organisation-level but also across the Civil Service.

Questionnaire development and changes over time

Prior to 2009, government departments and agencies conducted their own employee attitudes surveys, using different question sets, taking place at different times of the year, and at different frequencies. In addition to the economies of scale afforded by coordinating employee survey activity, a single survey allows for a coherent methodology to be applied that facilitates effective analysis and comparison.

The questionnaire is reviewed annually, following consultations with internal and external stakeholders. Internally this includes HR Directors from across participating organisations, as well as policy leads, organisations’ survey managers, cross-government networks, and actual respondents to the survey. Externally, the central team engages with international peers via the Organisation for Economic Co-operation and Development (OECD) survey group, academics, other public sector organisations, and trade union representatives. Below is a summary of changes made to the survey.

2007 – 2008: Pathfinder studies and harmonisation

Pathfinder studies were conducted with Civil Service organisations over 2007 and 2008, to inform the development of a core questionnaire for a pilot of the ‘single survey’ approach. The questionnaire used in the pilot was a pragmatic harmonisation of previous questionnaires used in staff surveys by Civil Service organisations, while ensuring it covered key areas identified by previous studies of employee engagement.

Employee engagement is a workplace approach designed to ensure that employees are committed to their organisation’s goals and values, motivated to contribute to organisational success, and are able at the same time to enhance their own sense of well-being. There is no single definition of employee engagement or standard set of questions; for the Civil Service it was decided to use five questions measuring pride, advocacy, attachment, inspiration, and motivation.

The development of the Civil Service People Survey questionnaire was done in consultation with survey managers and analysts across all participating organisations. This development process consisted of a substantial review of the questionnaire (including cognitive testing) to ensure it used plain English and that the questions were easily understood by respondents. The ‘single survey’ approach meant that organisations could retain trend data, by using questions they had previously measured, while ensuring that the questionnaire was fit for purpose in measuring employee engagement in the Civil Service and the experiences of work that can affect it.

2009: The first People Survey and factor analysis

Following a successful pilot of the ‘single survey’ approach in early 2009, the first full Civil Service People Survey was conducted in autumn 2009. The results of the pilot and the first full survey were used in factor analysis to identify and group the core attitudinal questions into 10 themes (the employee engagement index and nine ‘drivers of engagement’).

Factor analysis identifies the statistical relationships between different questions, and illustrates how these questions are manifestations of different experiences of work. For example, the question “I have the skills I need to do my job effectively” might, at first glance, seem to be a question about learning and development but factor analysis of the CSPS dataset found that this was more closely related to other questions about ‘resources and workload’. The themes have shown relatively strong consistency in structure across organisations and across time.

2011: Taking action

In 2011, the first change to the core questionnaire was undertaken to add a further question to measure whether staff thought effective action had taken place since the last survey.

2012: Organisational culture and subjective wellbeing

Five questions on organisational culture were added to the core questionnaire in 2012. They were included to help measure the desired cultural outcomes of the Civil Service Reform Plan.

Four new questions on subjective wellbeing, as used by the Office for National Statistics as part of their Measuring National Wellbeing Programme, were also added to the core questionnaire in 2012:

- Overall, how satisfied are you with your life nowadays?
- Overall, to what extent do you think the things you do in your life are worthwhile?
- Overall, how happy did you feel yesterday?
- Overall, how anxious did you feel yesterday?

These were piloted with five organisations in the 2011 survey prior to their inclusion. The wellbeing questions are measured on an 11-point scale of 0 to 10, where 0 means not at all and 10 means completely.

2015: Civil Service Leadership statement and organisational culture

In 2015, eight questions related to the Civil Service Leadership Statement were added to measure perceptions of the behavioural expectations and values to be demonstrated by all Civil Service leaders. This section was reduced to two questions in 2016 as analysis of the 2015 results showed us that six questions were highly correlated with the ‘leadership and managing change’ theme questions, meaning questions could be removed without losing insight.

Depending on how respondents answered the Leadership statement questions they were given a follow-up question asking them to list up to three things that senior managers and their manager do or could do to demonstrate the behaviours set out in the Civil Service Leadership Statement.

2016: Organisational culture

One of the questions added in 2012 on organisational culture (“My performance is evaluated based on whether I get things done, rather than on solely following process”) was removed in 2016 as stakeholder feedback suggested that it offered little insight and removing it would reduce questionnaire length while having minimal impact on the time series.

2017: Questionnaire review and theme changes

In 2017 the Leadership Statement follow-up questions were amended to “Please tell us what [senior managers] in [your organisation] do to demonstrate the behaviours set out in the Leadership Statement” and “Please tell us what managers in [your organisation] do to demonstrate the behaviours set out in the Leadership Statement” and were asked of all respondents. Each was followed by one text box. The follow-up questions were not asked in paper surveys.

Six questions were removed as they were found to be duplicative or difficult to take action on (B06, B30, B40, B56, B60 and B61 in 2016). This change caused a break in the time series for three of the nine headline theme scores (Organisational objectives and purpose; Resources and workload; Leadership and managing change). Our independent survey supplier recreated trends for these theme scores for 2009 - 2016, so that organisations would still be able to see their 2017 results compared to equivalent 2016 theme scores, and theme scores in previous years.

Five new questions were then introduced in 2017 to improve insight into key business priorities. This included two questions on the Civil Service Vision, and three relating to organisational culture.

A response option was added to question J01, asking about gender, to allow individuals to say “I identify in another way”.

2018: Discrimination, gender identity, function and free-text comments

Following stakeholder consultation in 2018, two additional response options - ‘Marital status’ and ‘Pregnancy, maternity and paternity’ - were added to question E02, “On which of the following grounds have you personally experienced discrimination at work in the past 12 months?”

The wording of two response options to question J01, “What is your gender identity”, was changed from ‘Male’ and ‘Female’ to ‘Man’ and ‘Woman’ respectively, to differentiate identity from sex at birth (which is asked in question J01A).

The wording of question H8B was changed from “Does the team you work for deliver one of the following Functions?” to “Which Function(s) are you a member of?” This was because our analysis found that respondents could be working in teams that delivered multiple Functions, some of which were not directly related to the work they did themselves.

A new preamble was added before question G01 “What would you like [your organisation] to change to make it a great place to work?” in light of the new General Data Protection Regulation (GDPR) to explain how we would use the free text comments provided by respondents.

2019: Diversity, discrimination, bullying/harassment, wellbeing and locations

To get better insights on experiences of discrimination, bullying and harassment in the Civil Service, and drawing on findings from Dame Sue Owen’s review to tackle bullying, harassment and misconduct in the Civil Service, a number of changes were made to this set of questions. For question E02 (the grounds of discrimination) new categories have been added and the language of some existing categories has been refined. New questions on the nature of bullying/harassment experienced and what the current state of the situation is have been added as follow-up questions for those who said they were bullied or harassed. The response options for E04 (the source of bullying/harassment) and E05 (whether they reported their experience) were also amended to improve insight gained from these questions.

Two additional demographic questions were added to improve our understanding of the health and wellbeing of civil servants. One new question asks about respondents’ self-assessed mental health (“In general, how would you rate your overall mental health now?”). The other new question asks respondents about workplace adjustments (“Do you have the Workplace Adjustments you need to do your job?”).

The existing response options for the questions asking which region of England, Scotland or Wales respondents work in have been expanded to include a broader range of areas (for example, three other major cities in Scotland, namely Inverness, Dundee and Aberdeen, alongside Glasgow and Edinburgh). For those working in Northern Ireland a question asking if they work in Belfast or elsewhere has been added. As workforce and organisational change programmes relating to location and place continue to develop, the location question will be kept under review in the coming years.

Seven new questions were added to help baseline the socio-economic diversity of the Civil Service workforce by 2020. This was a public commitment made in the 2017 Diversity and Inclusion Strategy. The measures were developed over a two year period in consultation with academics and other organisations. These questions did not appear in paper surveys.

Finally, to manage questionnaire length, the sections on the Civil Service Vision and Civil Service Leadership Statement were reduced to one question each.

2020: Bullying/harassment and wellbeing

The option ‘unhelpful comments about my mental health or being off sick’ was added to question E03A regarding the nature of bullying and/or harassment.

Questions W05 (“In general, how would you rate your overall physical health?”), W07 (“How often do you feel lonely?”) and W08 (“The people in my team genuinely care about my wellbeing”) were new to the survey in 2020 and have been added to the wellbeing results, along with J04B (“In general, how would you rate your overall mental health now?”), which is published here for the first time.

Questions on sex, gender, ethnicity, disability, caring responsibilities, religion and sexual orientation were amended to bring them in line with the latest GSS Harmonised Standards and the 2021 Census.

Questions on function and occupation were revised to align with the most recent published career frameworks.

A more comprehensive list of geographical locations was included, drawing on the ONS list of towns in England, and locations provided by the Scottish and Welsh Governments.

The response options for E01 and E03, which ask whether the respondent has experienced bullying, harassment or discrimination (BHD), reverted to ‘Yes’, ‘No’ and ‘Prefer not to say’, rather than ‘Yes in my organisation’, ‘Yes in another organisation’ and ‘Yes in a non-Civil Service organisation’.

The response option ‘I moved to another team or role to avoid the behaviour’ for those who said they had experienced bullying or harassment was removed due to data sensitivities, but response options to indicate whether appropriate action was taken or the individual felt victimised remained.

The wellbeing section of the questionnaire was expanded, and respondents with long-term health conditions and carers saw new follow-up questions.

A question on hybrid working and a new section on Civil Service Reform and Modernisation were added.

2021: Miscellaneous changes

The Civil Service Reform questions were modified to reflect the changes in the reform agenda and include new aspects related to flexible working.

The Bullying, Harassment and Discrimination questions were amended to allow staff to tell us about their experience and collect more evidence of where BHD occurs.

The accelerated development programme’s response options were updated to reflect new offers.

Function and occupation response options were revised in consultation with each Head of Function / Profession. This will enable more cross-departmental comparisons.

The list of geographical locations was updated to align with the Annual Civil Service Employment Survey (ACSES)/Civil Service Statistics publication and ONS guidelines.

The Diversity and Inclusion questions were reviewed with policy leads to ensure consistency and harmonisation across the questions.

2022: Miscellaneous changes

A new core question on reasons for leaving the organisation was added to obtain a more comprehensive understanding of why respondents indicated their intention to leave the organisation.

A new question, harmonised with the Office for National Statistics, on whether colleagues have long-Covid related symptoms has been included.

An addition to the Civil Service Reform and Modernisation section included a question on whether efficiency is pursued as a priority in the organisation staff work for.

The list of geographical locations was updated to align with the Annual Civil Service Employment Survey (ACSES)/Civil Service Statistics publication and ONS guidelines (International Territorial Levels 3), and function and occupation response options were revised in consultation with each Head of Function / Profession.

A question on whether poor performance is dealt with effectively in the team was removed based on feedback from participating organisations and lack of use from a central policy perspective. That necessitated recalculating the My Manager theme score back to 2009 without question B17 for comparison. Comparisons to earlier results reports for ‘My manager’ will not match.

Two questions on the Leadership Statement were removed after its withdrawal in November 2021.

2023: Pay and Benefits and Wellbeing

Two new questions related to Pay and Benefits were added following consultation with stakeholders. B37A relates to awareness of employee benefits and B37B relates to money worries over the last 12 months. They were not included in the computation of the overall ‘Pay and Benefits’ theme scores for this year to allow easy comparison to previous years.

Two new questions on employee health and wellbeing have also been included. They covered whether the organisation provided good support for employee health, wellbeing and resilience (W09), and how often personal wellbeing and/or work related stress is discussed with the line manager (W10).

A new question (B59I) relating to whether Civil Service Reform improved the way people work in their local area has also been added.

The section on personal and job characteristics was also reviewed, and in 2023 this led to the inclusion of three new questions on: (i) whether people do any work related to National Security (H8C); (ii) whether they are experiencing any symptoms related to menopause (J04I); and (iii) what citizenship they possess (J09).

Employee engagement

Our analytical framework focuses on how employee engagement levels can be improved.

The results of the People Survey have shown consistently that ‘leadership and managing change’ is the strongest driver of employee engagement in the Civil Service, followed by the ‘my work’ and ‘my manager’ themes. The ‘organisational objectives and purpose’ and ‘resources and workload’ themes are also strongly associated with changes in levels of employee engagement.

Measuring employee engagement in the Civil Service

Employee engagement is a workplace approach designed to ensure that employees are committed to their organisation’s goals and values, motivated to contribute to organisational success, and are able at the same time to enhance their own sense of well-being.

There is no single definition of employee engagement or standard set of questions. In the Civil Service People Survey we use five questions measuring pride, advocacy, attachment, inspiration and motivation as described in the table below.

| Aspect | Question | Rationale |
|---|---|---|
| Pride | B47. I am proud when I tell others I am part of [my organisation] | An engaged employee feels proud to be associated with their organisation, by feeling part of it rather than just “working for” it. |
| Advocacy | B48. I would recommend [my organisation] as a great place to work | An engaged employee will be an advocate of their organisation and the way it works. |
| Attachment | B49. I feel a strong personal attachment to [my organisation] | An engaged employee has a strong, and emotional, sense of belonging to their organisation. |
| Inspiration | B50. [My organisation] inspires me to do the best in my job | An engaged employee will contribute their best, and it is important that their organisation plays a role in inspiring this. |
| Motivation | B51. [My organisation] motivates me to help it achieve its objectives | An engaged employee is committed to ensuring their organisation is successful in what it sets out to do. |

Calculating the engagement index

Like all of the other core attitudinal questions in the CSPS, each of the engagement questions is asked using a five-point agreement scale.

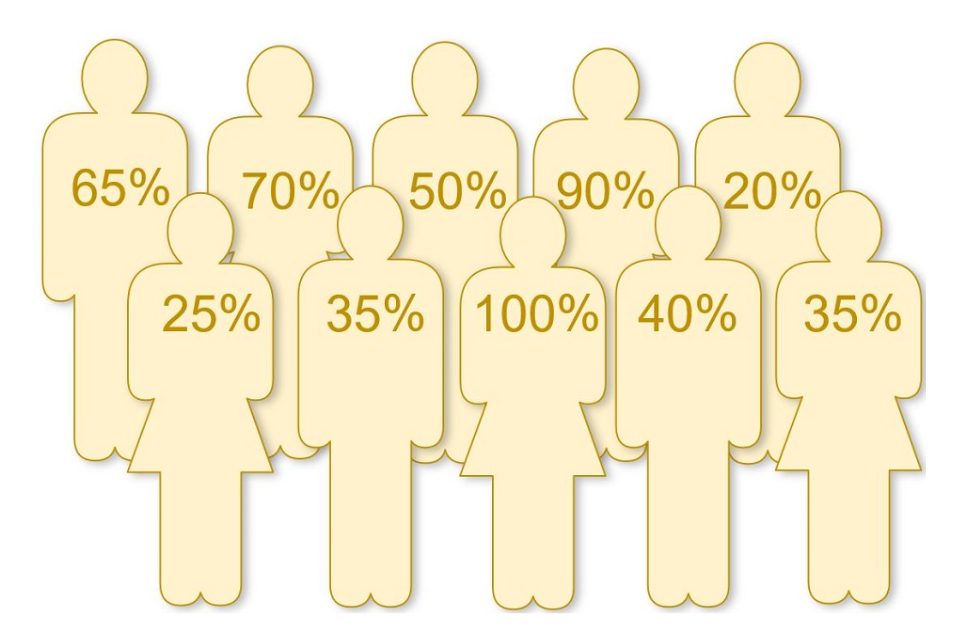

For each respondent an engagement score is calculated as the average score across the five questions where strongly disagree is equivalent to 0, disagree is equivalent to 25, neither agree nor disagree is equivalent to 50, agree is equivalent to 75 and strongly agree is equivalent to 100. Like all questions in the survey this cannot be linked back to named individuals.

The engagement index is then calculated as the average engagement score in the organisation, or selected sub-group. This approach means that a score of 100 is equivalent to all respondents in an organisation or group saying strongly agree to all five engagement questions, while a score of 0 is equivalent to all respondents in an organisation or group saying strongly disagree to all five engagement questions.

| Response | Strongly agree | Agree | Neither agree nor disagree | Disagree | Strongly disagree | Score |
|---|---|---|---|---|---|---|
| Weight | 100% | 75% | 50% | 25% | 0% | |
| I am proud when I tell others I am part of [my organisation] | ✓ | | | | | 100% |
| I would recommend [my organisation] as a great place to work | | ✓ | | | | 75% |
| I feel a strong personal attachment to [my organisation] | | ✓ | | | | 75% |
| [My organisation] inspires me to do the best in my job | | | ✓ | | | 50% |
| [My organisation] motivates me to help it achieve its objectives | | | | ✓ | | 25% |
| Total | | | | | | 325% |
| Respondent’s individual engagement score (total / 5) | | | | | | 65% |
| Sum of engagement scores (65+25+70+35+50+100+90+40+20+35) | | | | | | 530% |
| Engagement index for the group (530 / 10) | | | | | | 53% |
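The calculation in the worked example can be sketched in a few lines of Python. This is an illustrative sketch rather than the survey supplier's implementation: the function names are hypothetical, and it assumes every respondent has answered all five engagement questions (the text does not say how missing answers are handled).

```python
from statistics import mean

# Score for each point on the five-point agreement scale, as given in the text.
SCALE_SCORES = {
    "strongly disagree": 0,
    "disagree": 25,
    "neither agree nor disagree": 50,
    "agree": 75,
    "strongly agree": 100,
}


def engagement_score(responses):
    """Average score across one respondent's five engagement answers."""
    return mean(SCALE_SCORES[response] for response in responses)


def engagement_index(scores):
    """Average of individual engagement scores for an organisation or sub-group."""
    return mean(scores)


# The respondent from the worked example (answers to B47 to B51, in order).
respondent = [
    "strongly agree",
    "agree",
    "agree",
    "neither agree nor disagree",
    "disagree",
]
individual_score = engagement_score(respondent)

# The ten individual scores listed in the worked example.
group_scores = [65, 25, 70, 35, 50, 100, 90, 40, 20, 35]
group_index = engagement_index(group_scores)
```

Here `individual_score` reproduces the respondent's score of 65 and `group_index` reproduces the group index of 53.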

Comparing the index scores to percent positive scores

Because the engagement index is calculated using the whole response scale, two groups with the same percent positive scores may have different engagement index scores. This can happen, for example, when comparing one year’s results to another or, as illustrated in the example below, when comparing two organisations (or units).

In the example below, two organisations (A and B) each have 50% of respondents saying strongly agree or agree. However, the index score is 49% in A and 63% in B.

The index score gives a stronger weight to strongly agree responses than agree responses, and also gives stronger weight to neutral responses than to disagree or strongly disagree responses.

Figure 1 shows the distribution of the responses in each organisation. Table 1 shows how the calculation described above translates these response profiles into index scores. Finally, Figure 2 contrasts the percent positive scores of the two organisations with their index scores.

Figure 1: Distribution of responses in each organisation

| Organisation A | Percentage |
|---|---|
| Strongly agree | 10% |
| Agree | 40% |
| Neither agree nor disagree | 10% |
| Disagree | 16% |
| Strongly disagree | 24% |

| Organisation B | Percentage |
|---|---|
| Strongly agree | 22% |
| Agree | 28% |
| Neither agree nor disagree | 34% |
| Disagree | 12% |
| Strongly disagree | 6% |

Table 1: Calculating the index score

| Response | Weight | Organisation A % | Organisation A Score | Organisation B % | Organisation B Score |
|---|---|---|---|---|---|
| Strongly agree | 100% | 10% | 10% | 22% | 22% |
| Agree | 75% | 40% | 30% | 28% | 21% |
| Neither agree nor disagree | 50% | 10% | 5% | 34% | 17% |
| Disagree | 25% | 16% | 4% | 12% | 3% |
| Strongly disagree | 0% | 24% | 0% | 6% | 0% |
| Total | | 100% | 49% | 100% | 63% |

Figure 2: Comparison of percent positive and index approaches

| Category | Organisation A | Organisation B |
|---|---|---|
| Percent positive score | 50% | 50% |
| Index score | 49% | 63% |
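The arithmetic behind Table 1 and Figure 2 can be reproduced with a short script. The following is a minimal sketch in Python rather than the code used to produce the published results; the function names `index_score` and `percent_positive` are illustrative, and the response profiles are those given above for organisations A and B.

```python
# Weights applied to each point on the five-point agreement scale (from Table 1).
WEIGHTS = {
    "Strongly agree": 1.00,
    "Agree": 0.75,
    "Neither agree nor disagree": 0.50,
    "Disagree": 0.25,
    "Strongly disagree": 0.00,
}

# Response profiles (percentage of respondents) as given in the example.
ORG_A = {
    "Strongly agree": 10,
    "Agree": 40,
    "Neither agree nor disagree": 10,
    "Disagree": 16,
    "Strongly disagree": 24,
}
ORG_B = {
    "Strongly agree": 22,
    "Agree": 28,
    "Neither agree nor disagree": 34,
    "Disagree": 12,
    "Strongly disagree": 6,
}


def index_score(profile):
    """Weighted sum of the response percentages (illustrative helper)."""
    return sum(WEIGHTS[response] * pct for response, pct in profile.items())


def percent_positive(profile):
    """Share of respondents answering 'Strongly agree' or 'Agree'."""
    return profile["Strongly agree"] + profile["Agree"]


for name, profile in (("A", ORG_A), ("B", ORG_B)):
    print(name, percent_positive(profile), index_score(profile))
# A 50 49.0
# B 50 63.0
```

Both organisations have the same percent positive score, but organisation B scores higher on the index because more of its remaining respondents chose the neutral option rather than disagreeing.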

Wellbeing indices

The Proxy Stress Index and the PERMA Index

Using existing People Survey questions to provide additional wellbeing measures

High employee engagement is often conceptualised in terms of the benefits it can bring to organisations. Through the inclusion of four subjective wellbeing questions in the People Survey since 2012, as used by ONS, we are trying to understand the benefits that high engagement can bring to our employees as individuals.

Results products include two indices based on existing questions in the People Survey, which have been shown to be important elements of wellbeing.

The Proxy Stress Index

This index aligns to the Health and Safety Executive stress management tool. It uses the 8 questions from the People Survey shown below. It is calculated in the same way as the Employee Engagement Index. We then ‘invert’ the final index so that it is a measure of conditions that can add to stress rather than alleviate stress, i.e. a higher index score represents a more stressful environment.

- Demands: B33. I have an acceptable workload

- Control: B05. I have a choice in deciding how I do my work

- Support 1: B08. My manager motivates me to be more effective in my job

- Support 2: B26. I am treated with respect by the people I work with

- Role: B30. I have clear work objectives

- Relationships 1: B18. The people in my team can be relied upon to help when things get difficult in my job

- Relationships 2: E03. Have you been bullied or harassed at work, in the past 12 months?

- Change: B45. I have the opportunity to contribute my views before decisions are made that affect me

The PERMA Index

This index measures the extent to which employees are ‘flourishing’ in the workplace; it is based around the 5 dimensions: Positive emotion, Engagement, Relationships, Meaning and Accomplishment. The index is computed using the 5 questions from the People Survey shown below and combining them in the same way as the Employee Engagement Index.

A high score for an organisation represents a greater proportion of employees agreeing with the statements below and rating two well-being questions as high.

- Positive Emotion: W01. Overall, how satisfied are you with your life nowadays?

- Engagement: B01. I am interested in my work

- Relationships: B18. The people in my team can be relied upon to help when things get difficult in my job

- Meaning: W02. Overall, to what extent do you feel that things you do in your life are worthwhile?

- Accomplishment: B03. My work gives me a sense of personal accomplishment

Calculating the Proxy Stress Index

Step One: Ensure an individual has responded to all eight questions the index is based on.

Step Two: Recalculate the scores as percentages:

- For “B” questions: 100% if Strongly disagree, 75% if Disagree, 50% if Neither agree nor disagree, 25% if Agree, 0% if Strongly agree

- For bullying and harassment: 100% if Yes, 50% if Prefer not to say, 0% if No

Step Three: Add together the scores for all 8 questions answered by the respondent, and divide them by 8. This gives you the respondent’s mean score.

Step Four: For a team or organisation level Proxy Stress Index score, the Proxy Stress scores of all the individuals in the group should be added up, and that score divided by the number of individuals in the group.

A lower Proxy Stress Index score for a team indicates a greater capacity to prevent and manage stress in that team.

Rounding should take place at the final stage, if needed.

| Survey response: | Strongly agree | Agree | Neither agree nor disagree | Disagree | Strongly disagree | Score |
|---|---|---|---|---|---|---|
| Weight: | 0% | 25% | 50% | 75% | 100% | |
| Demands: B33. I have an acceptable workload | | | | | ✓ | 100% |
| Control: B05. I have a choice in deciding how I do my work | | | ✓ | | | 50% |
| Support 1: B08. My manager motivates me to be more effective in my job | | | ✓ | | | 50% |
| Support 2: B26. I am treated with respect by the people I work with | | | ✓ | | | 50% |
| Role: B30. I have clear work objectives | | ✓ | | | | 25% |
| Relationships 1: B18. The people in my team can be relied upon to help when things get difficult in my job | | | | ✓ | | 75% |
| Change: B45. I have the opportunity to contribute my views before decisions are made that affect me | | | ✓ | | | 50% |
| Survey response: | No | - | Prefer not to say | - | Yes | Score |
| Weight: | 0% | - | 50% | - | 100% | |
| Relationships 2: E03. Have you been bullied or harassed at work, in the past 12 months? | ✓ | | | | | 0% |
| Total score (sum of 8 question scores): | | | | | | 400% |

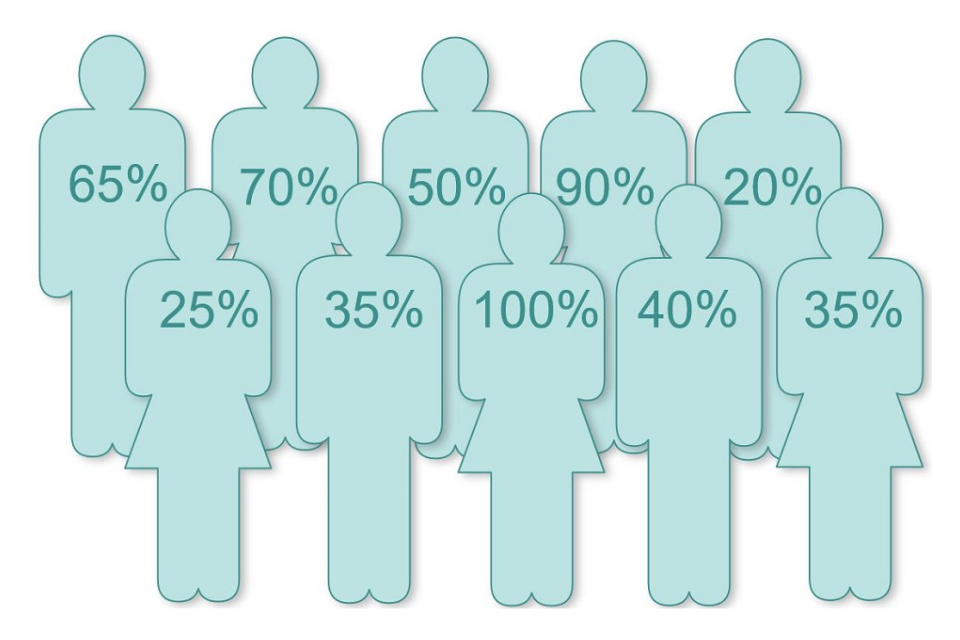

| Respondent’s individual Proxy Stress score (total score / 8): | 50% |

| Sum of individual proxy stress scores (65+25+70+35+50+100+90+40+20+35): | 530% |

| Proxy Stress index for the group (530 / 10): | 53% |
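The four steps above can be sketched in Python as follows. This is an illustrative sketch, not the production calculation: the function names, the dictionary layout and the use of `None` for a missing answer are assumptions, and the respondent's answers are inferred from the scores in the worked example.

```python
# Inverted weights for the seven "B" questions: disagreement scores higher.
B_WEIGHTS = {
    "Strongly agree": 0,
    "Agree": 25,
    "Neither agree nor disagree": 50,
    "Disagree": 75,
    "Strongly disagree": 100,
}
# Weights for the bullying and harassment question (E03).
E03_WEIGHTS = {"No": 0, "Prefer not to say": 50, "Yes": 100}

B_QUESTIONS = ("B33", "B05", "B08", "B26", "B30", "B18", "B45")


def proxy_stress_score(answers):
    """Return one respondent's score, or None if any of the 8 answers is missing."""
    required = B_QUESTIONS + ("E03",)
    if any(answers.get(q) is None for q in required):
        return None  # Step One: all eight questions must be answered
    total = sum(B_WEIGHTS[answers[q]] for q in B_QUESTIONS)  # Step Two
    total += E03_WEIGHTS[answers["E03"]]
    return total / 8  # Step Three: mean of the eight question scores


def group_index(scores):
    """Step Four: mean of the individual scores, ignoring excluded respondents."""
    valid = [s for s in scores if s is not None]
    return sum(valid) / len(valid) if valid else None


# Answers inferred from the scores shown in the worked example.
respondent = {
    "B33": "Strongly disagree",
    "B05": "Neither agree nor disagree",
    "B08": "Neither agree nor disagree",
    "B26": "Neither agree nor disagree",
    "B30": "Agree",
    "B18": "Disagree",
    "B45": "Neither agree nor disagree",
    "E03": "No",
}

print(proxy_stress_score(respondent))  # 50.0
print(group_index([65, 25, 70, 35, 50, 100, 90, 40, 20, 35]))  # 53.0
```

No rounding is applied inside the functions, in line with the instruction to round only at the final stage.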

Calculating the PERMA Index

Step One: Ensure an individual has responded to all five questions the index is based on.

Step Two: Recalculate the scores as percentages:

- For “B” questions: 0% if Strongly disagree, 25% if Disagree, 50% if Neither agree nor disagree, 75% if Agree, 100% if Strongly agree

- For “W” questions of 0 to 10: assign a score of 0% if 0, 25% if 1 to 4, 50% if 5 or 6, 75% if 7 to 9, and 100% if 10.

Step Three: Take a mean of the percentage scores for each question, by totalling them and dividing by five.

Step Four: For a group PERMA score, the PERMA scores of all the individuals in the group are averaged.

Higher PERMA Index scores represent higher levels of flourishing and engagement at an individual or team level.

Rounding should take place at the final stage, if needed.

| Survey response: | Strongly agree | Agree | Neither agree nor disagree | Disagree | Strongly disagree | Score |
|---|---|---|---|---|---|---|
| Weight: | 100% | 75% | 50% | 25% | 0% | |
| Engagement: B01. I am interested in my work | | ✓ | | | | 75% |
| Relationships: B18. The people in my team can be relied upon to help when things get difficult in my job | ✓ | | | | | 100% |
| Accomplishment: B03. My work gives me a sense of personal accomplishment | | | ✓ | | | 50% |
| Survey response: | 10 | 7/8/9 | 5/6 | 1/2/3/4 | 0 | Score |
| Weight: | 100% | 75% | 50% | 25% | 0% | |
| Positive Emotion: W01. Overall, how satisfied are you with your life nowadays? | | ✓ | | | | 75% |
| Meaning: W02. Overall, to what extent do you feel that things you do in your life are worthwhile? | | | | ✓ | | 25% |
| Total score (sum of 5 question scores): | | | | | | 325% |

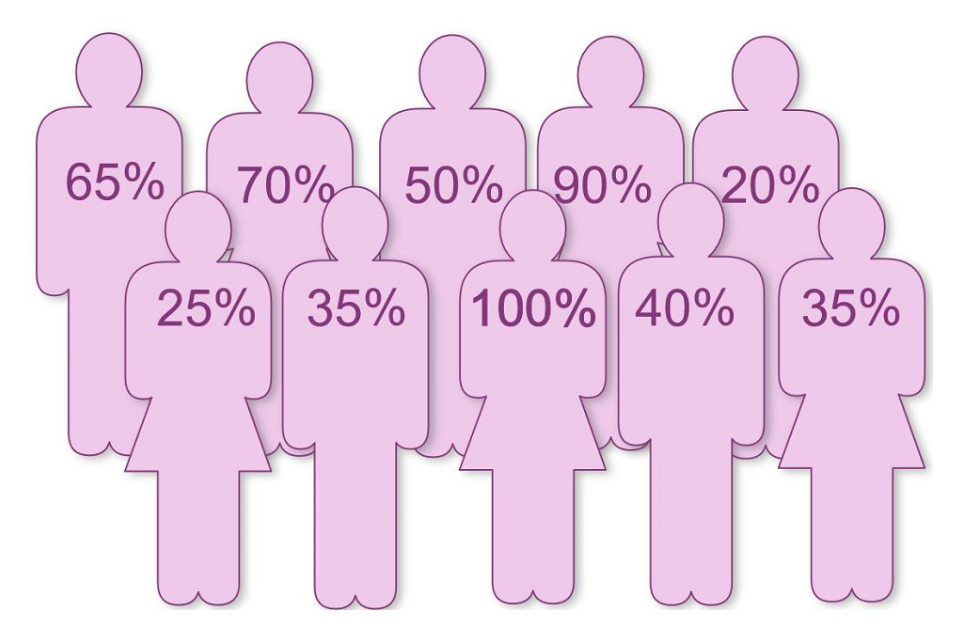

| Respondent’s individual PERMA score (total score / 5): | 65% |

| Sum of individual PERMA scores (65+25+70+35+50+100+90+40+20+35): | 530% |

| PERMA index for the group (530 / 10): | 53% |
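The PERMA steps differ from the other indices mainly in how the 0 to 10 “W” questions are banded. The sketch below, in Python, shows one way to express that banding and the individual score. The function names are illustrative, and the specific ratings of 8 and 3 are assumptions: the worked example only shows that W01 fell in the 7 to 9 band and W02 in the 1 to 4 band.

```python
# Weights for the three "B" questions used in the PERMA index.
B_WEIGHTS = {
    "Strongly agree": 100,
    "Agree": 75,
    "Neither agree nor disagree": 50,
    "Disagree": 25,
    "Strongly disagree": 0,
}

B_QUESTIONS = ("B01", "B18", "B03")
W_QUESTIONS = ("W01", "W02")


def w_question_score(rating):
    """Map a 0 to 10 rating to a percentage score using the bands in Step Two."""
    if not 0 <= rating <= 10:
        raise ValueError("rating must be between 0 and 10")
    if rating == 0:
        return 0
    if rating <= 4:
        return 25
    if rating <= 6:
        return 50
    if rating <= 9:
        return 75
    return 100


def perma_score(answers):
    """Return one respondent's PERMA score, or None if any answer is missing."""
    if any(answers.get(q) is None for q in B_QUESTIONS + W_QUESTIONS):
        return None  # Step One: all five questions must be answered
    total = sum(B_WEIGHTS[answers[q]] for q in B_QUESTIONS)
    total += sum(w_question_score(answers[q]) for q in W_QUESTIONS)
    return total / 5  # Step Three: mean of the five question scores


# B answers inferred from the worked example; W ratings are assumed values
# chosen to fall within the bands ticked in the example.
respondent = {
    "B01": "Agree",
    "B18": "Strongly agree",
    "B03": "Neither agree nor disagree",
    "W01": 8,
    "W02": 3,
}

print(perma_score(respondent))  # 65.0
```

A group score is then the mean of the individual scores, as in Step Four; for the ten scores listed in the worked example this is 530 / 10 = 53.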

CSPS and the Code of Practice for Official Statistics

The Civil Service People Survey is not designated and treated as Official Statistics.

The Civil Service People Survey is primarily a management tool aiming to support UK government departments and agencies to embed and improve employee engagement within their organisations. The survey is also an effective mechanism for internal and external accountability that provides invaluable insight to help measure progress against the aims and outcomes of major cross-Civil Service policy initiatives.

Given the wide interest in the annual results, the People Survey looks to match the approaches set out in the Code of Practice for Statistics around its three pillars:

- Trustworthiness - confidence in the people and organisations that produce statistics and data;

- Quality - data and methods that produce assured statistics; and

- Value - statistics that support society’s needs for information.

Specifically:

- The People Survey is managed by a multidisciplinary team which includes social researchers and statisticians, who ensure that the information produced is based on sound data and analytical principles and reflects employees’ experience as accurately as possible;

- There are stringent quality assurance processes to ensure the figures produced are accurate;

- Findings are disseminated internally via reports, dashboards and analysis through the various Civil Service governance boards, which provide check and challenge on the figures produced;

- A Data Protection Impact Assessment (DPIA) is conducted which outlines how personal data are treated. Additional documents in this space include privacy notices for different users of the People Survey, data sharing agreements, and a memorandum of understanding for participating organisations;

- The People Survey team publishes a series of supporting documents such as this Quality and Methodology Information page, and a summary of the key findings.

At this stage, the dissemination of results to participating organisations continues to be a priority. External publication of these results, therefore, currently takes place with a lag. We have, nonetheless, committed to reducing the time between the internal release of results and their external publication.

We are constantly reviewing our systems and processes and remain fully committed to producing outputs that are respected for their high quality and which inform decision making.

We welcome feedback on all aspects of the survey. Please send your comments to: csps@cabinetoffice.gov.uk

Publication including rounding of results

Publications

To help leaders, managers and staff understand and interpret the People Survey results for their organisation, a number of benchmarks and comparisons are published on GOV.UK:

- median benchmark scores (2009 to 2023)

- mean civil servants’ scores (2009 to 2023)

- organisation scores (2023)

Civil Service Benchmark (median)

The Civil Service benchmark scores are the high-level overall results from the Civil Service People Survey.

For each measure, it comprises the median of all participating organisations’ scores for a given year.

In 2023 there were 103 participating organisations, an odd number, so the benchmark score represents the figure for which 51 organisations will score at or above, and 51 organisations will score at or below. Crucially, these values represent the median organisation, not the median respondent.

Civil Service mean scores / All Civil Servants

The Civil Service mean scores are the simple aggregate scores of all respondents to the Civil Service People Survey. This might also be referred to as the score for “all civil servants”.

These scores are not used as the high-level figure for the Civil Service overall as they are strongly influenced by the largest civil service organisations. The Civil Service benchmark (median) score is a more accurate measure of organisational performance.

However, the mean scores may be more appropriate when looking at the largest organisations, and/or when looking at cross-Civil Service demographic analysis (e.g. how do women’s scores vary from all civil servants).
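The difference between the benchmark (median organisation) and the mean across all respondents can be illustrated with a small example. The sketch below is in Python and uses three entirely hypothetical organisations; it assumes each organisation's score is an average over its own respondents, so that weighting by respondent numbers gives the score for all respondents.

```python
from statistics import median

# Hypothetical organisations: (name, organisation score in %, number of respondents).
organisations = [
    ("Small agency", 70.0, 200),
    ("Medium agency", 65.0, 500),
    ("Large department", 50.0, 50_000),
]

# Benchmark: the median of the organisation scores, each organisation counted once.
benchmark = median(score for _, score, _ in organisations)

# All-respondents mean: organisation scores weighted by their respondent numbers.
total_respondents = sum(n for _, _, n in organisations)
all_respondents_mean = (
    sum(score * n for _, score, n in organisations) / total_respondents
)

print(benchmark)                       # 65.0
print(round(all_respondents_mean, 1))  # 50.2
```

In this hypothetical case the mean sits almost exactly on the largest organisation's score, while the median reflects the middle organisation regardless of size.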

Notes for published results

Calculation

The result for each of the headline themes is calculated as the median percentage of ‘strongly agree’ and ‘agree’ responses, across all organisations, to all questions in that theme.

The change in the median benchmark score is calculated as the later year’s unrounded benchmark score minus the preceding year’s unrounded benchmark score.
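Working from unrounded scores matters because rounding each year first can shift the reported change slightly. The following Python sketch uses two hypothetical benchmark values (not taken from the published results) to show the difference between the two approaches.

```python
# Hypothetical unrounded benchmark scores for two consecutive years.
earlier_year = 64.3764
later_year = 65.1236

# Approach described above: subtract the unrounded scores, round only for display.
change_from_unrounded = round(later_year - earlier_year, 3)

# For contrast: round each year first, then subtract.
change_from_rounded = round(round(later_year, 3) - round(earlier_year, 3), 3)

print(change_from_unrounded)  # 0.747
print(change_from_rounded)    # 0.748
```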

Survey flow

Question E02 was only asked to those who had responded ‘yes’ to question E01. The score for question E02 is the number of responses to that category as a percentage of those who selected at least one of the multiple choice options. As respondents were able to select more than one category the scores may sum to more than 100% and the proportions for individual categories cannot be combined.

Questions E03A, E04, E05 and E06A-D were only asked to those who had responded ‘yes’ to question E03. The scores for questions E03A and E04 are the number of responses to that category as a percentage of those who selected at least one of the multiple choice options. As respondents were able to select more than one category the scores may sum to more than 100% and the proportions for individual categories cannot be combined.
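The scoring of these multiple choice questions can be sketched as follows. This Python example uses hypothetical respondents and placeholder category names; it only illustrates why the denominator is those who selected at least one option and why the category scores can sum to more than 100%.

```python
# Hypothetical multi-select answers: one set of chosen categories per respondent
# who was routed to the question. Category names are placeholders.
responses = [
    {"Category 1", "Category 2"},
    {"Category 1"},
    {"Category 2", "Category 3"},
    set(),  # routed to the question but selected nothing: excluded from the base
    {"Category 1"},
]

# Base: respondents who selected at least one option.
answered = [r for r in responses if r]

categories = sorted(set().union(*answered))
scores = {
    c: 100 * sum(c in r for r in answered) / len(answered) for c in categories
}

print(scores)
# {'Category 1': 75.0, 'Category 2': 50.0, 'Category 3': 25.0}
print(sum(scores.values()))  # 150.0
```

Because one respondent can appear in several categories, adding the scores for two categories would double-count anyone who selected both.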

Localisation

‘[my organisation]’ is used in the core questionnaire to indicate where participating organisations use their own name, for example ‘the Cabinet Office’ in place of ‘[my organisation]’.

All results in CSPS reporting products are rounded to three decimal places

Figures in the CSPS reports published on GOV.UK are displayed as percentages rounded to three decimal places. To keep the figures as accurate as possible, the reports and tools apply rounding only at the last stage of calculation. As a result, calculations performed on the figures displayed in a report may not exactly match the figures shown; however, any difference would not be larger than ±1 percentage point.

Table A demonstrates the rounding of question results. Where the third decimal place would be 0, the figure is shown without the trailing zero (for example, 18.230 is displayed as 18.23).

Table A: Demonstration of rounding when presenting question results

| Category | Strongly agree | Agree | Neither agree nor disagree | Disagree | Strongly disagree | Total | Positive responses |
|---|---|---|---|---|---|---|---|
| Number of responses | 103 | 166 | 177 | 95 | 24 | 565 | 269 |
| Percentage of responses | 18.230 | 29.381 | 31.327 | 16.814 | 4.248 | 100.000 | 47.611 |
| Figure displayed in reporting | 18.23 | 29.381 | 31.327 | 16.814 | 4.248 | 100 | 47.611 |
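The figures in Table A can be reproduced from the response counts. The Python sketch below is illustrative only: the helper `display` is a hypothetical name, and dropping all trailing zeros is an assumption based on the last row of the table (where 18.230 appears as 18.23 and 100.000 as 100).

```python
# Response counts from Table A.
counts = {
    "Strongly agree": 103,
    "Agree": 166,
    "Neither agree nor disagree": 177,
    "Disagree": 95,
    "Strongly disagree": 24,
}
total = sum(counts.values())


def display(value):
    """Format to three decimal places, then drop trailing zeros (assumed rule)."""
    return f"{value:.3f}".rstrip("0").rstrip(".")


# Percentages are calculated from the raw counts and rounded only for display.
displayed = {response: display(100 * n / total) for response, n in counts.items()}
positive = display(100 * (counts["Strongly agree"] + counts["Agree"]) / total)

print(total)      # 565
print(displayed)
print(positive)   # 47.611
```

The positive responses figure is calculated from the counts (269 out of 565) rather than by adding the two displayed percentages, which keeps rounding to the final stage.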

© Crown copyright 2024

You may re-use this information (not including logos) free of charge in any format or medium, under the terms of the Open Government Licence.

To view this licence, visit www.nationalarchives.gov.uk/doc/open-government-licence/

This document can also be viewed on our website: https://www.gov.uk/government/collections/civil-service-people-survey-hub