Biometric self-enrolment feasibility trials

Published 4 July 2022

Executive summary

The Home Office’s ambition is that all visitors and migrants will provide their biometric facial images and fingerprints under a single global immigration system ahead of travel to the UK, utilising remote self-enrolment for those who are not required to apply for a visa as part of an ecosystem of enrolment options. To assess the maturity of industry capabilities, the Home Office ran Biometric Self-Enrolment Feasibility Trials from 29th November to 22nd December 2021.

As part of these trials, a number of industry suppliers provided smartphone applications (mobile) and self-service kiosks (kiosk), which captured a person’s face and fingerprint biometrics. To effectively evaluate the maturity of these solutions, participants across a range of demographics were recruited, along with a specialist biometric testing laboratory.

The trials showed promise from both capabilities. However, it is evident there is a difference in the maturity level of fingerprint capture for mobiles and kiosks. Testing of these solutions showed that while there are several aspects that must be improved for both forms of technology, kiosks have been developed to a more advanced stage than mobiles. Face capture and face Presentation Attack Detection[footnote 1] (PAD) on a mobile have nevertheless improved over the last few years, since the Home Office launched its identity verification app as part of the EU Settlement Scheme. Fingerprint capture and fingerprint PAD on a mobile app need further development, with particular focus on improving usability.

The trials showed that kiosks can reliably capture face and fingerprint biometrics while operating effective face PAD; the capability is therefore mature and ready for more advanced testing. However, kiosk fingerprint PAD requires more research.

The success of these trials and the insights provided have brought the Home Office closer to achieving its ambition of remote self-enrolment. Considering these results, it is hoped that by continuing to work with industry and by piloting new solutions, current self-enrolment technology will improve to be in a production-ready state within the next one to three years.

The Home Office is now planning the next phase of biometric self-enrolment technology testing. It will be splitting up the technology testing to address the different levels of maturity:

- Self-service kiosk pilot

- Second round of feasibility trials focussed on mobile apps

Industry partners are encouraged to sign up to the JSaRC mailing list to receive bulletins highlighting future opportunities to collaborate with the Home Office.

1.0 Introduction

1.1 Trials scope and purpose

Under the current UK immigration system, some migrants and visitors are required to provide face and fingerprint biometrics ahead of travel to enable identity and security checks before they are granted an immigration permission. The Home Office’s long-term aim is that all visitors and migrants to the UK will provide their biometric facial images and fingerprints ahead of travel under a single global immigration system, utilising remote self-enrolment for those who are not required to apply for a visa as part of an ecosystem of enrolment options.

As part of achieving this ambition, the Home Office ran Biometric Self-Enrolment Feasibility Trials from 29th November to 22nd December 2021.

To assess the current maturity of the technology and determine the priority areas for further testing and development, the trials sought to recruit voluntary participants to provide their face and fingerprint biometrics. The trial participants were chosen to reflect a wide range of demographics across the public, as defined by the key Office for National Statistics (ONS) groups.

The trials were split into two distinct strands:

- Trial participants self-enrolled their biometrics using a mobile app and kiosk. This was held in Manchester from 29th November to 3rd December 2021.

- PAD effectiveness was tested by a specialist biometric testing laboratory, who attempted to subvert the participating suppliers’ PAD software using a variety of techniques. This was hosted in Manchester from 29th November to 3rd December 2021 for testing of kiosks and was continued in a lab environment for testing mobile apps from 6th December to 22nd December 2021.

The objectives of the feasibility trials were to evaluate the following:

- Can biometrics be consistently self-enrolled to a high quality, without supervision, on both mobile apps and kiosks, by a range of users representing the general public?

- Do self-enrolled biometrics negatively impact subsequent identity assurance processes, due to issues with capture quality or integrity?

- Will capture quality and reliability allow the Home Office to use these capabilities at scale, for a range of use cases?

- Are PAD solutions effective in determining non-genuine biometrics at Home Office scale?

- How effective are biometric binding[footnote 2] techniques?

- How user-friendly are self-enrolment techniques?

2.0 Methodology

2.1 Participants

The Home Office recruited 545 participants for the trials from across the ONS categories for ethnicity, age and sex. Despite focussed efforts to recruit equally across demographic groups, it was not possible to achieve this target.

The recruitment approach allowed the Home Office to analyse whether any bias existed in the biometric systems.

| Sex | Participants |
|---|---|
| Female | 306 (56%) |
| Male | 239 (44%) |

| Age | Share of participants |
|---|---|
| 18-24 | 21% |
| 25-40 | 28% |
| 41-60 | 33% |
| 61+ | 18% |

| Ethnicity | Share of participants |
|---|---|
| White | 66% |
| Asian | 16% |
| Mixed/multiple | 8% |
| Black | 8% |
| Other | 2% |

Arrival

Covid protocols

COVID-19 prevention measures were in place, in line with the government guidance at the time the trials were held, including extra precautions for participants, staff and suppliers coming to the venue.

Registration

Participants signed in at the registration desk, collecting the welcome pack and QR code.

Briefing

This session explained the aims of the trials and the testing participants would be required to undertake. Participants then signed and returned the consent form, allowing the Home Office to process their data for the purpose of the trials.

Testing

Reference capture

Participants were requested to enrol their fingerprint and face biometrics using current methods. The reference biometrics captured were needed to establish a benchmark, which the mobile and kiosk captures were compared against.

Mobile testing

Participants were requested to enrol their fingerprints and/or facial image using various mobile apps downloaded to different phone models.

Kiosk testing

Participants were requested to enrol their face and fingerprint biometrics using different self-service kiosks.

After testing

Submit checklist

At the end of the day participants were required to complete an exit questionnaire which gathered insights into the usability of the technology. Once completed, the participant was signed out.

2.2 Suppliers

Map of suppliers

In December 2020, the Home Office released an Expression of Interest (EoI) through the Joint Security and Resilience Centre’s (JSaRC) extensive industry network. This EoI invited suppliers from around the world to register their interest in taking part in feasibility trials and submit details of their remote self-enrolment capabilities. Successful suppliers were given a set of requirements that their capabilities must meet, giving them six months for further development.

The successful suppliers were a mix of large biometrics specialists and small and medium enterprises who participated in the trials by providing mobile apps, kiosks or both for testing.

The devices used to operate the mobile data capture solutions during the trials included a variety of different Android and iOS smartphone models. This enabled the performance of each solution to be evaluated on both older and newer phones to test its functionality, as well as allowing for a comparison between the quality of the data captures recorded by the different models.

The trials included fourteen kiosks designed by five suppliers. Each kiosk captured a participant’s QR code, fingerprints, facial image and passport scan.

The trials also included close to 100 smartphone devices, made up of eight iOS and Android models, with thirteen different supplier applications installed on them. Each app captured a participant’s QR code, passport scans and fingerprints and/or facial biometrics.

2.3 Biometric reference capture

To provide a baseline sample with which to compare the biometrics captured by the mobile apps and kiosks, fingerprint and facial biometrics were captured using existing capabilities.

2.4 PAD

PAD testing was performed on the self-enrolment capabilities provided by the suppliers.

There were two PAD related activities running in parallel at the trials event:

- PAD testing.

- Fingerprint and face biometric binding performance.

PAD testing assessed the ability of the supplier solutions to detect presentation attacks[footnote 3] during enrolment. The effectiveness of the suppliers’ PAD software was assessed during the post-trial analysis.

2.5 Environmental controls

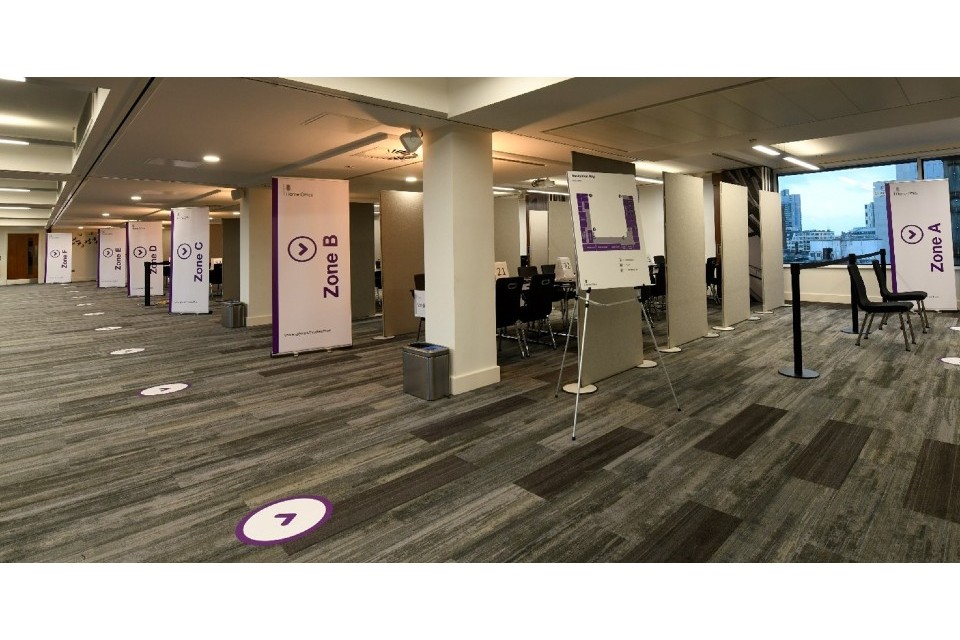

Trials event

To ensure the testing environment remained consistent throughout the data capture period, various controls were implemented. The following measures were put in place to create a standardised environment: background panels, blinds and lighting.

PAD

Kiosk PAD testing took place during the main trials week, using the kiosks in situ, out of main test hours. Mobile PAD testing took place after the main trials had completed, in a specialist biometric testing laboratory.

2.6 Data handling

Security control framework

During the trials, each supplier solution collected special category (biometric) personal data from the attending participants and event staff. To ensure that this data was secured and protected, each supplier was required to comply with the Future Borders and Immigration System (FBIS) Control Framework, which outlined general requirements. To prove that they had implemented these requirements, each supplier submitted supporting evidence to the Home Office. In addition, the Home Office ensured the trial participants were able to exercise their rights under the UK General Data Protection Regulation (GDPR).

Data collected

As personally identifiable information of participants was collected and processed during the trials, data protection measures were in place. Data from participants, including special category data, was collected with their explicit consent as defined in Article 6 and Article 9 of the UK GDPR. This included: name, contact details, face and fingerprint biometrics, passport details (only photo retained), age, sex, ethnicity, accessibility (e.g. missing fingers, damaged skin, which could make using the technology challenging), and any disabilities, health conditions or impairments (which could make using the technology challenging).

Data storage

Participants’ data is stored using a secure Home Office Biometrics (HOB) Accuracy Testing platform. Participants’ face and fingerprint biometrics were separated from the other personal data listed in the previous section. It will only be possible to link this biometric data to the other personal data through a unique participant ID. The personal data of participants will only be linked to their contact details if there is a need to do so, for example if they wish to withdraw their consent, which they are free to do at any time.

The need to retain the data collected during these trials will be subject to annual review. The data will be retained only for as long as necessary, up to a maximum of 6 years, and will be deleted at whichever point occurs sooner.

See published Privacy Information Notice.

2.7 Data and analyses

PAD approach

There are two recognised ways to evaluate a supplier system’s ability to detect presentation attacks:

- Success of a system in identifying presentation attacks.

- Errors when a system incorrectly identifies a genuine transaction as a presentation attack.
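The two measures listed above can be expressed as simple rates. The following is a minimal sketch only: the class name, function names and counts are hypothetical and are not taken from the trials.

```python
from dataclasses import dataclass


@dataclass
class PadTally:
    """Hypothetical counts for one supplier solution (illustrative only)."""
    attacks_presented: int   # presentation attacks attempted
    attacks_detected: int    # attacks the system identified as attacks
    genuine_presented: int   # genuine enrolment transactions
    genuine_flagged: int     # genuine transactions wrongly flagged as attacks


def attack_detection_rate(tally: PadTally) -> float:
    """Share of presentation attacks that the system identified."""
    return tally.attacks_detected / tally.attacks_presented


def false_detection_rate(tally: PadTally) -> float:
    """Share of genuine transactions incorrectly flagged as attacks."""
    return tally.genuine_flagged / tally.genuine_presented


# Made-up figures, used only to show the calculation.
example = PadTally(attacks_presented=200, attacks_detected=190,
                   genuine_presented=500, genuine_flagged=25)
print(f"attack detection rate: {attack_detection_rate(example):.1%}")
print(f"false detection rate:  {false_detection_rate(example):.1%}")
```

Both rates matter together: a system can detect every attack and still be unsuitable at scale if it flags too many genuine users.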

There were four broad ranges of possible outcomes for each supplier’s system:

- All presentation attacks fail.

- All presentation attacks succeed.

- A few presentation attacks succeed.

- A significant number of attacks succeed, but not all.

Biometric accuracy approach

Biometric accuracy tests were performed to assess how well the self-enrolled face and fingerprints would enable verification and identification searches to be performed. Verification and identification searches are used in many stages of the Migration and Borders processes.

Test results were compared to the current performance benchmarks of operational Home Office systems.

Verification tests

- The self-enrolled faces were compared to the face on the passport.

- The self-enrolled fingerprints were compared to the reference fingerprints captured during the trial.

- A threshold was set to report the false reject rate and false accept rate for both face and fingerprint verification.
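To illustrate how a threshold yields a false reject rate and a false accept rate, here is a minimal sketch. It assumes that a higher score means a closer match and that a comparison is accepted when its score is at or above the threshold. The scores and the threshold value are invented for illustration and do not reflect Home Office systems.

```python
def error_rates(genuine_scores, impostor_scores, threshold):
    """Return (false_reject_rate, false_accept_rate) at a given threshold.

    genuine_scores: scores from comparing a person with their own reference.
    impostor_scores: scores from comparing a person with someone else.
    A comparison is accepted when its score is >= threshold.
    """
    false_rejects = sum(1 for s in genuine_scores if s < threshold)
    false_accepts = sum(1 for s in impostor_scores if s >= threshold)
    return (false_rejects / len(genuine_scores),
            false_accepts / len(impostor_scores))


# Made-up similarity scores between 0 and 1.
genuine = [0.91, 0.85, 0.40, 0.77, 0.95]
impostor = [0.10, 0.22, 0.65, 0.05, 0.30, 0.18, 0.12, 0.08, 0.27, 0.15]

frr, far = error_rates(genuine, impostor, threshold=0.6)
print(f"false reject rate: {frr:.0%}, false accept rate: {far:.0%}")
```

Raising the threshold generally lowers the false accept rate at the cost of a higher false reject rate, which is why both figures are reported at a fixed threshold.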

Identification tests

- Self-enrolled faces were searched against a gallery of 2.7 million images into which the participant passport images were added.

- Self-enrolled fingerprints were searched against a gallery of 2 million fingerprints into which the participants’ reference fingerprints were added.
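An identification search of this kind can be scored by checking whether the participant's own record comes back as the best candidate. The sketch below assumes a rank-one scoring rule, which the report does not specify. The function name, identifiers and candidate lists are hypothetical.

```python
def rank_one_miss_rate(searches):
    """Fraction of searches whose top-ranked candidate is not the true identity.

    searches: list of (true_id, candidates) pairs, where candidates holds the
    gallery identities returned by the matcher, best match first.
    An empty candidate list counts as a miss.
    """
    misses = sum(
        1 for true_id, candidates in searches
        if not candidates or candidates[0] != true_id
    )
    return misses / len(searches)


# Made-up results: "P" records are participants, "G" records are gallery filler.
example_searches = [
    ("P001", ["P001", "G9312"]),
    ("P002", ["G0041", "P002"]),   # true record ranked second: a miss
    ("P003", ["P003"]),
    ("P004", ["P004", "G7770"]),
]
miss_rate = rank_one_miss_rate(example_searches)
print(f"rank-one miss rate: {miss_rate:.0%}")
```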

Biometric quality approach

The biometric quality was assessed using both qualitative and quantitative methods. The quantitative assessment for face used an ICAO compliance checking algorithm to assess how well the captured faces met the ICAO criteria, and whether these factors impacted the matching performance.

The qualitative assessment for face looked at the types of capture quality issues that either prevented records being loaded in the face matcher or produced low match scores. The assessment looked at the level of detail that could be seen in images and whether image compression obscured features.

The quantitative assessment for fingerprints used the open standard National Institute of Standards and Technology (NIST) NFIQ2 and an algorithm used on the HOB Matcher platform which provides an indication of matchability. Both algorithms are proven to be effective on fingerprints captured using contact fingerprint scanners, but there are currently no quality assessment algorithms available that are proven for use on contactless captured fingerprints. The assessment considered whether the mobile device model/specification would impact the quality scores. The scaling accuracy of captured fingerprints was assessed by doing a dip sample of the images.

The qualitative assessment for fingerprint images looked at the level of detail that could be seen. The assessment considered whether a manual comparison of the fingerprints by a trained examiner would be possible.

3.0 Data analysis & results

Objective 1: Can biometrics be self-enrolled to a high quality?

This assessment evaluated the quality of the captured biometrics using a combination of proprietary and open-source quality assessment algorithms. The analysis also compared the quality of self-enrolled face and fingerprint biometrics to that achieved in real visa enrolments at a Visa Application Centre (VAC), which provides a benchmark.

There were a few challenges related to face capture quality, including motion blur and barrel distortion. The best-in-class kiosks were able to capture faces to a similar quality as the reference captures. The best-in-class mobile face images were of a similar quality to the best kiosks. Lens distortion was present, with ears less visible on mobile captures than on the kiosks. Several recommendations arise, including implementing detection of glasses, hats and shadows, detecting motion blur, and prompting users on gaze and pose.

NFIQ2 is the open standard fingerprint quality assessment algorithm developed by NIST and designed to be agnostic of contact capture device and matching engine. This standard assessment of fingerprint quality can only reliably be used to indicate the quality of the fingerprints captured on the kiosks (all of which had contact fingerprint scanners). The NFIQ2 scores for the best-in-class kiosk captures were similar to current VACs. In addition to NFIQ2, the HOB Matcher has a proprietary fingerprint quality assessment algorithm; however, it is not proven for use with contactless captured fingerprints. Therefore, objectives five and six will be the best indicators for contactless fingerprint quality.

In addition to the algorithmic assessments, a qualified fingerprint expert assessed the quality of the fingerprint images produced by each supplier. Issues identified in both assessments included blurred images, post-capture image processing, scaling, and 3D-to-2D conversion. For both kiosks and mobiles there were clear differences in quality and scale accuracy between suppliers. It was also noted that the FBI Electronic Biometric Transmission Specification[footnote 4] Appendix F is not applicable to contactless fingerprint capture solutions.

Objective 2: Effectiveness of contactless fingerprint quality algorithms

As noted above, there are currently no fingerprint quality assessment algorithms that are proven to be a reliable indicator of matching performance for contactless captured fingerprints. This assessment intended to investigate the degree of correlation between the quality scores (from NFIQ2, the HOB Matcher Platform algorithm and the supplier algorithms) and the match verification scores.

The HOB Matcher Platform algorithm quality assessment looked for a correlation within each supplier solution. The results showed that there was no evident correlation between the HOB Matcher Platform algorithm quality score and match score. This is believed to be due to the aggregation of individual finger scores impacting the correlation. A deeper level of analysis is recommended looking at the correlation of the quality of individual fingers against the verification score for individual fingers.
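The recommended per-finger analysis can be sketched as follows. This is an illustration only. The data is contrived, and the aggregation rule used here (mean quality per transaction compared with the lowest finger match score) is an assumption, since the report does not state how individual finger scores were aggregated.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


# Each transaction is a list of (quality_score, match_score) pairs, one per finger.
# Values are made up so that match score tracks quality for every finger.
transactions = [
    [(10, 20), (80, 160)],
    [(40, 80), (50, 100)],
    [(30, 60), (70, 140)],
]

# Per-finger view: correlate quality and match score across all fingers.
finger_quality = [q for t in transactions for q, _ in t]
finger_match = [m for t in transactions for _, m in t]
per_finger_r = pearson(finger_quality, finger_match)

# Aggregated view (assumed rule): mean quality versus lowest finger match score.
mean_quality = [sum(q for q, _ in t) / len(t) for t in transactions]
lowest_match = [min(m for _, m in t) for t in transactions]
aggregated_r = pearson(mean_quality, lowest_match)

print(f"per-finger r = {per_finger_r:.2f}, aggregated r = {aggregated_r:.2f}")
```

In this contrived data the per-finger relationship is strong while the aggregated one is weak, which is the kind of masking effect the recommendation is intended to investigate.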

Objective 3: Effectiveness of PAD to determine genuine and non-genuine biometrics

This objective had two main aspects, evaluating:

- The effectiveness of suppliers’ solutions at detecting a presentation attack.

- How often the solutions incorrectly flagged a genuine participant as being a presentation attack.

This analysis provided insight into the current state of the art of PAD technology for face and fingerprints, the limitations in the technology and identified areas where improvements could or should be made.

There was a broad range of results for mobiles and kiosks. For face images captured by kiosks, the most common errors were genuine participants being incorrectly flagged as presentation attacks. This was often caused by accessories (non-eyewear) and interference from subjects’ hair. In comparison, the most common errors for face images captured by mobiles were caused by accessories (non-eyewear), subject positioning, and image quality. Overall, mobiles had a much wider range of results than kiosks. The best-in-class mobile face PAD was 100% effective at detecting presentation attacks; however, its false detection rate required further refinement to meet the level of performance required to operate at the target Home Office scale.

Objective 4: Effectiveness of binding techniques

The suppliers involved in the trials were asked to demonstrate capture methods that would provide confidence in fingerprint and face binding, that is, assurance that the captured biometrics were from the same participant.

Only one supplier in the trials demonstrated a mobile app binding solution that met the Home Office requirements. Although it would not be a robust mechanism to prevent non-compliant applicants submitting fingerprints from a different person, it could still help compliant groups avoid accidentally mixing up their fingerprints (which is an increased risk for self-enrolment).

It is recommended that further work is done looking into technology options and process controls.

Objective 5: Matching of data across reference capture and self-enrolment

Biometric performance was evaluated using the HOB BAT environment and the HOB fingerprint and face algorithms. These algorithms were used to identify matches for face verification and face identification, as well as fingerprint verification and identification. In each of these tests, the images captured by the mobile and kiosk solutions were used to search for the individual’s matching passport facial image or reference fingerprint image, which had been added into a database of images.

In relation to face verification, the results showed that both types of supplier solutions performed well, as the false accept rate for face verification transactions was low.

For face identification, kiosks performed well with the best-in-class solution being unable to match only 1% of images with passports. Where the image failed to match with the image in the database, it was often due to significant differences in appearance from the passport image. In comparison, mobile solutions performed slightly worse. For the best-in-class solution around 2% of images could not be successfully matched with the passport image. Where the image failed to match with the older passport image in the database, it was often due to a combination of quality issues with the image as well as significant differences in appearance from the passport image.

The results from fingerprint verification of both kiosk and mobile solutions showed that, with ten-finger verification, the best kiosk and mobile solutions are within the Home Office’s benchmark (allowing for the limited transaction volumes and ground truth errors). For the other solutions to achieve this level of performance they would need to improve their ability to consistently capture all ten fingers.

Regarding fingerprint identification, one kiosk solution achieved results above the Home Office threshold and two other kiosks were close. The results from the mobile and other kiosk solutions were below the Home Office’s threshold. Further development of this technology is needed to achieve the level of identification and capture consistency required for a production solution.

Objective 6: Efficiency of capture rates

The capture efficiency metrics were calculated using a combination of the data collected by the supplier solutions during the capture session and a dip sample of the participant feedback manually captured on check sheets.

The kiosk solutions performed well in the biometric capture process and had the best successful completion rates, with all five supplier solutions being greater than 90% and the best performer at 97%. The results showed the kiosks offer a relatively even performance across the demographic groups. The kiosks also reliably read the passport chip.

For all the kiosk suppliers, the average capture time was well under the five-minute target, typically averaging around two minutes. The self-reported participant technology proficiency rating did impact the time, but not significantly. Overall capture time was not impacted across demographic groups apart from age. With face capture, the kiosks did show a variation by ethnicity, with the best in class exhibiting a 5% variation and the worst in class a 25% variation. There was also some variation across the demographic groups for finger success rate, with success decreasing with age and lower technology proficiency.
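The report does not define how variation across demographic groups was calculated. One plausible reading, offered here purely as an assumption, is the gap between the highest and lowest group success rates. The sketch below uses hypothetical group labels and made-up counts.

```python
def success_rate_spread(results_by_group):
    """Gap between the highest and lowest group success rates.

    results_by_group: mapping of group label -> (successes, attempts).
    Returns the spread as a fraction (0.05 means five percentage points).
    """
    rates = [successes / attempts
             for successes, attempts in results_by_group.values()]
    return max(rates) - min(rates)


# Made-up counts for three hypothetical groups.
example_results = {
    "group_a": (95, 100),
    "group_b": (93, 100),
    "group_c": (90, 100),
}
spread = success_rate_spread(example_results)
print(f"spread across groups: {spread:.1%}")
```

A measure like this is sensitive to small groups, so any real analysis would also need to account for the number of participants in each group.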

The mobile apps varied substantially in their capabilities and this had an impact on the transaction times, completion rates and capture rates. The mobile apps exhibited a higher failure to complete rate than the kiosks with certain suppliers performing more poorly than others. However, it was evident that problems with the near-field communication passport chip reading with specific apps/device models made up most of the failures. Usability also varied across suppliers.

For the mobile apps, 95% of the captures were under five minutes. Capture time was impacted across age and ethnicity. With face capture, the mobile apps did show variation across demographic groups. There was less variation with finger success rate across demographic groups, with only a decrease in success with an increase in age. There was however a large variation overall among suppliers with some performing relatively poorly across demographic groups.

For both mobiles and kiosks there was large variation in referral rate indicating that there needs to be tuning according to Home Office standards.

4.0 Conclusion

At this stage, for biometric self-enrolment on kiosks and mobile apps there are areas of improvement to consider. These trials have highlighted considerations that may need to be implemented to standardise biometric self-enrolment and continue innovation in the space.

Kiosks

Kiosks have generally functioned at an acceptable success and accuracy rate. They benefit from being able to incorporate specialist equipment and capabilities that improve enrolment. Although there is more work to be done to improve usability so that kiosks can operate in a standalone environment where there is no supervision from the operator, overall, the user experience was generally positive.

Mobile

There was a large amount of variance across suppliers in all areas of testing. Mobile face capture through a mobile app has been operated by the Home Office for some time, with millions of applications having been made in this way as part of the EU Settlement Scheme. The trials proved that the capability is still advancing, but it could still be enhanced through further tuning.

Mobile fingerprint capture needs improvement to achieve the performance required for the identification searches in the Home Office use-case. However, mobile has generally performed well at fingerprint verification. Fingerprint PAD also needs further testing before a solution can be considered in line with the Home Office’s strategic vision for this capability. The most prominent finding for mobile fingerprint capture was that usability must improve. All of the mobile apps required the user to capture images of their fingerprints using the rear facing camera and this regularly poses problems for the user, particularly when they are self-enrolling their thumbs. Improvements to the instructions, user interface and enrolment process must be sought as part of future testing.

Next stage

The Home Office is now planning the next phase of biometric self-enrolment technology testing. It will be splitting up the technology testing to address the different levels of maturity:

- Self-service kiosk pilot

- Second round of feasibility trials focussed on mobile apps

More details will be released shortly. Industry partners are encouraged to sign up to the JSaRC mailing list to receive bulletins highlighting future opportunities to collaborate with the Home Office.

1. Presentation Attack Detection (PAD) software is responsible for detecting genuine and non-genuine attempts to subvert the enrolment system.

2. The Home Office defines biometric binding as the process for confirming that biometric information belongs to the same person to a high degree of certainty.

3. Presentation attacks are instances where a user attempts to subvert the enrolment system. This can be done in a number of ways, but in these trials the Home Office presented non-genuine fingerprints and facial biometrics.

4. The FBI Electronic Biometric Transmission Specification Appendix F is the standard that contact capture fingerprint scanners should be certified to where the use-case is evidential.