Analysis of consultation responses: Regulating Digital Functional Skills Qualifications

Updated 25 November 2021

Summary

There was strong support for the majority of our proposals.

Responses to 3 of our proposals, however, were more mixed, with around half of the respondents to the question agreeing or strongly agreeing and the other half disagreeing or strongly disagreeing. These were:

- that Digital FSQs should be made up of a single overall component

- to prohibit the adaptation of contexts for Digital FSQs at level 1

- to require the Digital FSQs to be awarded at Entry level 3 and Level 1 only

In addition, the majority of respondents disagreed with 2 of our proposals. The first was our proposal to prohibit paper-based, on-demand assessment in Digital FSQs at both qualification levels. Respondents felt that prohibiting paper-based, on-demand assessment could stop some learners who needed paper-based assessment materials for accessibility reasons from accessing the assessments. Others highlighted concerns that some centres lacked the resources or technology needed to deliver the test online and on-screen.

The second proposal respondents disagreed with was our proposal not to introduce rules around assessment times for Digital FSQs. Respondents felt that setting assessment times for Digital FSQs would increase comparability between awarding organisations.

We also included several questions in the consultation which were open-ended and asked for respondents to provide comments on an issue.

Background

The new Digital Functional Skills qualifications

The Department for Education (referred to as the Department in this document) is introducing new qualifications called Digital Functional Skills qualifications (FSQs) that seek to provide students with the core digital skills needed to fully participate in society. The Department is introducing them as part of its plans to improve adult basic digital skills, and the new qualifications will sit alongside Essential Digital Skills Qualifications as part of the government’s adult digital offer.

As set out by the Department, Digital FSQs will be introduced from August 2023 and will replace the existing Functional Skills qualifications in Information and Communication Technology (FSQs in ICT). Unlike FSQs in ICT, which are available at Entry levels 1, 2 and 3, Level 1 and Level 2, Digital FSQs will be based on Entry level and Level 1 subject content.

The Department published the final subject content on 29 October 2021 following a consultation in May 2019. Awarding organisations will use this subject content to create the new qualifications.

Ofqual will regulate Digital FSQs. This analysis document considers the responses we received to our consultation in May 2019 on our policy approach to regulating the new qualifications.

Approach to analysis

The consultation included 47 questions and was published on our website. Respondents could respond using an online form, by email or by posting their responses to us.

Respondents to this consultation were self-selecting, so while we tried to ensure that as many respondents as possible had the opportunity to reply, the sample of those who chose to do so cannot be considered as representative of any group.

We present the responses to the consultation questions in the order in which they were asked. For each of the questions, we presented our proposals and then asked respondents to indicate agreement and provide comment. Respondents did not have to answer all the questions.

In some instances, respondents answered a question with comments that did not relate to that question. Where this is the case, we have reported those responses against the question to which the response relates, rather than the question against which it was provided.

Who responded?

Our consultation on regulating Digital Functional Skills qualifications (Digital FSQs) was open between 16 May 2019 and 26 July 2019. Respondents could complete the questions online or download and submit a response.

In addition, we held 2 consultation events with awarding organisations and stakeholders at our office in Coventry, which attracted 19 attendees. The majority of these responded in writing to the consultation, and as such we have not reported on the consultation events here. For those unable to attend consultation events, we produced a podcast.

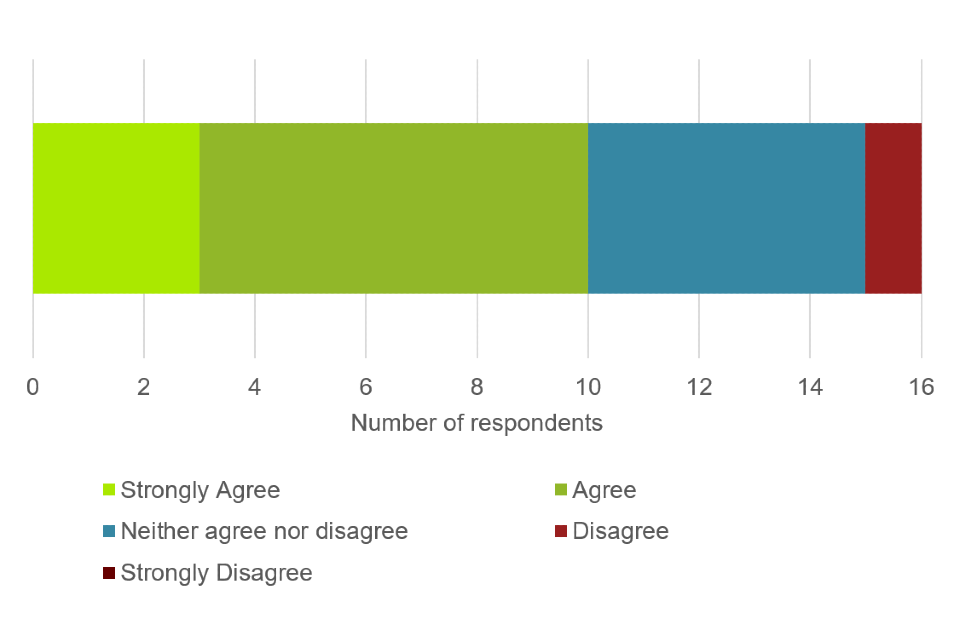

We received 16 written responses to our consultation, 12 of which were official responses from organisations:

- 7 responses from awarding organisations

- 3 responses from other representative or interest groups

- 1 response from a local authority

- 1 response from a school or college

We also received 4 personal responses:

- 2 responses from teachers

- 1 response from an educational manager

- 1 response from a project manager

All respondents were based in England.

Detailed analysis

In this section, we report the views of respondents to the consultation in broad terms. We list the organisations who responded to the consultation in Annex A.

Question 1

Do you have any comments on our proposed approach to regulating Digital FSQs?

Fourteen respondents provided a comment on our proposed approach to regulating Digital FSQs.

Seven respondents expressed broad agreement with our proposed approach to regulate as far as possible against the General Conditions of Recognition (GCR), but to introduce a limited number of subject-specific conditions. They considered this a sensible and appropriate approach. Some respondents noted the proposal would bring our regulatory approach in line with that in place for the reformed Functional Skills qualifications in English and maths (FSQs in English and maths).

Several respondents welcomed the proposal to disapply some of the GCR, agreeing that this would reduce the potential burden on awarding organisations.

Several respondents also agreed that we should require a higher level of awarding organisation control for Level 1 qualifications than for Entry level 3 qualifications, as Level 1 qualifications are more likely to be used to support progression.

However, 3 of the respondents queried the difference in the proposed approach to regulation for Digital FSQs compared with Essential Digital Skills Qualifications (EDSQs). Respondents did not feel that there was sufficient difference between the 2 types of qualifications to warrant the proposed difference in the approach to regulation. In addition, 1 respondent suggested that taking different approaches risked creating a false distinction between the 2 qualifications, based on their regulatory approaches rather than on differences in their fundamental aims.

One respondent also commented that our regulatory approach appeared to be overly burdensome and prescriptive, stating that it could reduce flexibility in developing Digital FSQs, which they considered fundamental for these qualifications. They also questioned why Digital FSQs should be regulated in a similar way to the reformed FSQs in English and maths, when they would serve different purposes. For example, Digital FSQs would not be part of apprenticeships or study programmes.

Some respondents made other comments. Those relevant to specific questions, in particular on qualification levels, the lack of a Level 2 Digital FSQ and the overall assessment model, are recorded against the relevant questions. Others concerned the scope of the subject content, which is the responsibility of the Department rather than Ofqual.

Question 2

Do you have any comments on the qualification purpose statement for Digital FSQs?

Ten respondents provided a comment on our proposed purpose statement for Digital FSQs.

Seven of these respondents highlighted the similarities between the purpose statements proposed for Digital FSQs and EDSQs. These respondents raised concerns that the lack of a distinct and clear purpose between the 2 qualifications may cause confusion. The respondents highlighted the need to articulate the difference between these 2 qualifications. Respondents also reflected on the language of the purpose statement, with 1 highlighting that while the inclusion of the term ‘demanding’ for Digital FSQs suggested that the qualifications should be more demanding than EDSQs, both qualifications would be at the same levels and based on the same standards.

In addition, 1 awarding organisation responding to the consultation commented that the purpose as drafted by the Department, and adopted by Ofqual, was insufficiently defined for awarding organisations to develop business cases to offer both Digital FSQs and EDSQs.

A few respondents also reflected on Digital FSQs not forming part of the apprenticeship standards. One respondent welcomed this decision, stating that their inclusion could be a barrier to some learners achieving apprenticeship standards. Another, however, highlighted that because the qualification would not be mandatory, registration numbers would be affected.

Other comments on our proposal included 1 respondent asking that consideration be given to wider contexts and users. They felt that the purpose statement focussed too much on adult learners at the expense of other groups that may take the qualification. In addition, another response largely agreed with the purpose statement outlined in the consultation.

Question 3

To what extent do you agree or disagree that we should adopt the Department’s subject content into our regulatory framework?

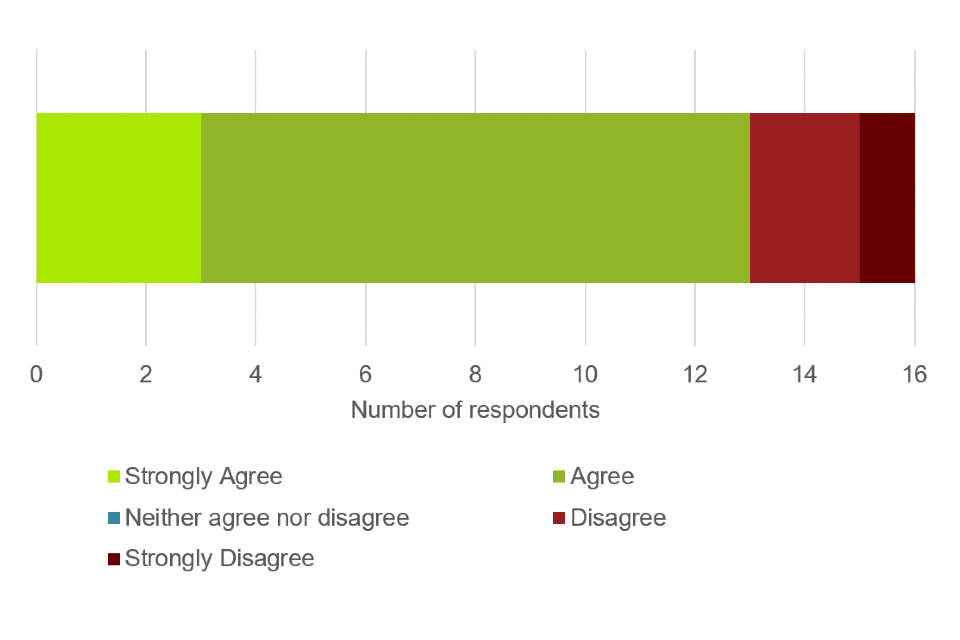

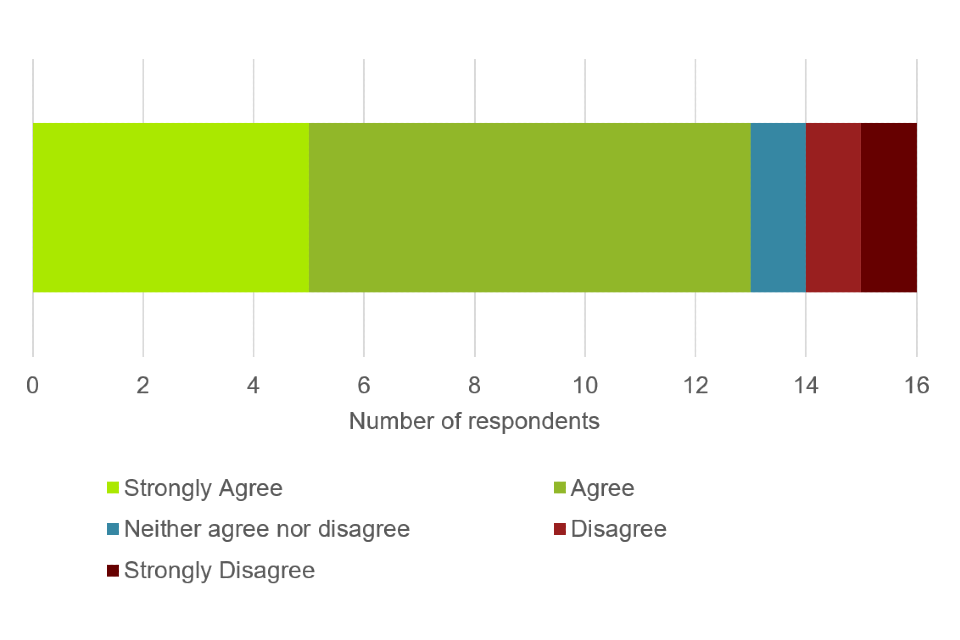

Thirteen out of the 16 respondents either agreed or strongly agreed with the proposal to adopt the Department’s subject content into our regulatory framework.

Three of the respondents agreed with this proposal as it would provide a standard approach to content in Digital FSQs. They felt that this would enable comparability between qualifications offered by different awarding organisations. One elaborated further to say that it would benefit learners and centres to know in advance the content that will be assessed.

Three respondents agreed with the adoption of the Department’s subject content but said that this would need to be reviewed and updated in line with technological developments.

Four respondents agreed with the proposal but noted that the content required further development to make it suitable as the basis for a qualification. Most of the respondents in this group had provided specific feedback in their response to the recent consultation by the Department on areas of the content that needed development. One of the 4 respondents provided further comments, raising concerns with specific technical interpretations used in the Department’s content and questioning the research that forms the basis of the content.

One respondent agreed with the choice of content proposed by the Department as it was broadly in line with the topics and content for similar courses currently taught. The focus on smart devices and online safety was seen as positive.

Three respondents disagreed or strongly disagreed with the proposal. Two of these respondents raised questions about the subject content proposed by the Department, in particular whether it was possible to assess all the skills formally in the way proposed by the Department, and about the lack of a Digital FSQ at Level 2. The remaining respondent disagreed with the proposal because Essential Digital Skills Qualifications would not be adopting the Department’s content. They felt that this difference in approach could lead to the updating of Digital FSQs lagging some time behind the updating of EDSQs, as 1 would be linked to the national standards and the other would not.

Question 4

To what extent do you agree or disagree that we should set rules and guidance around how awarding organisations should interpret and treat the subject content statements for the purpose of assessment?

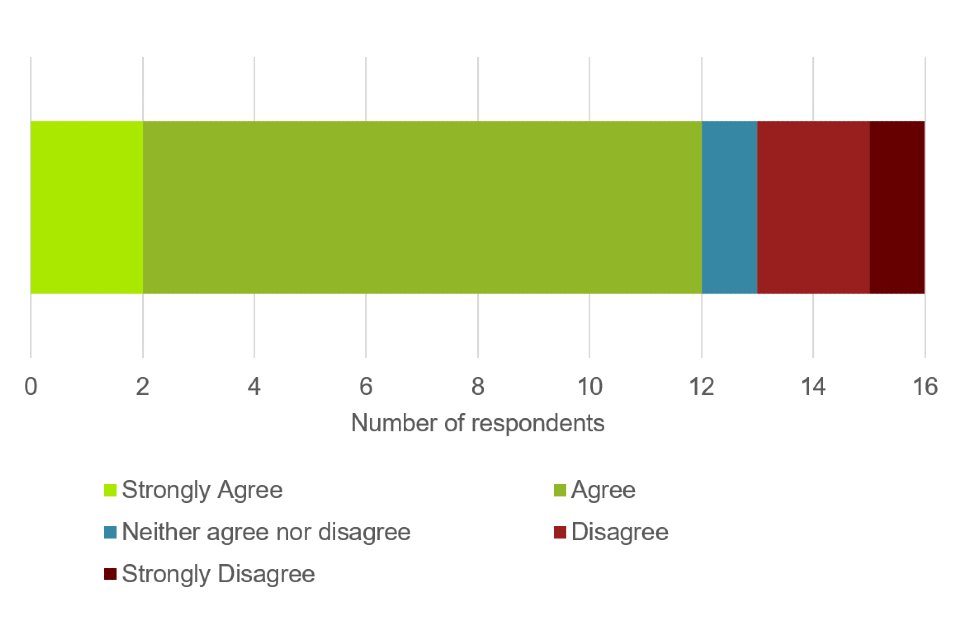

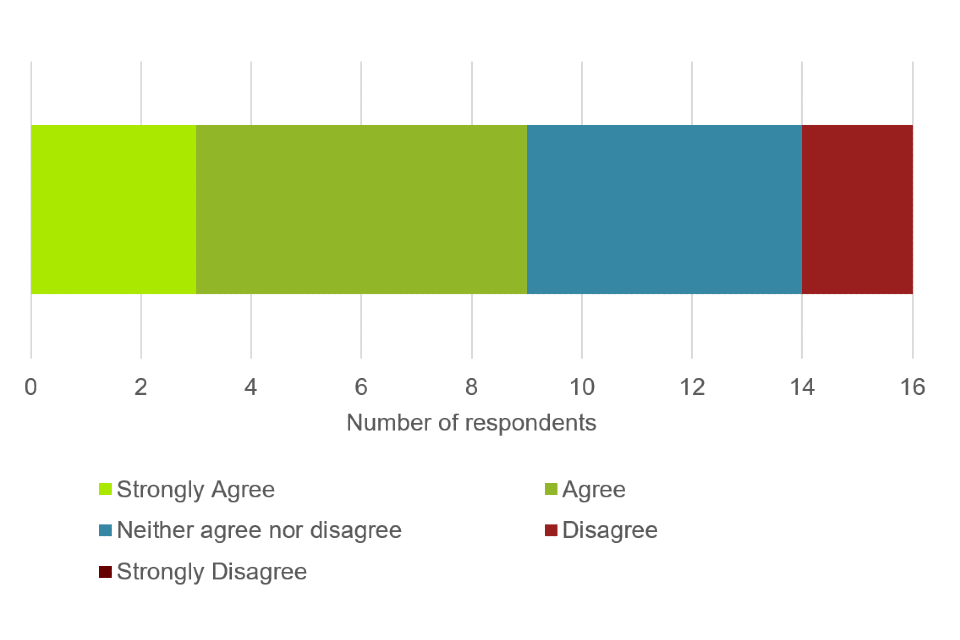

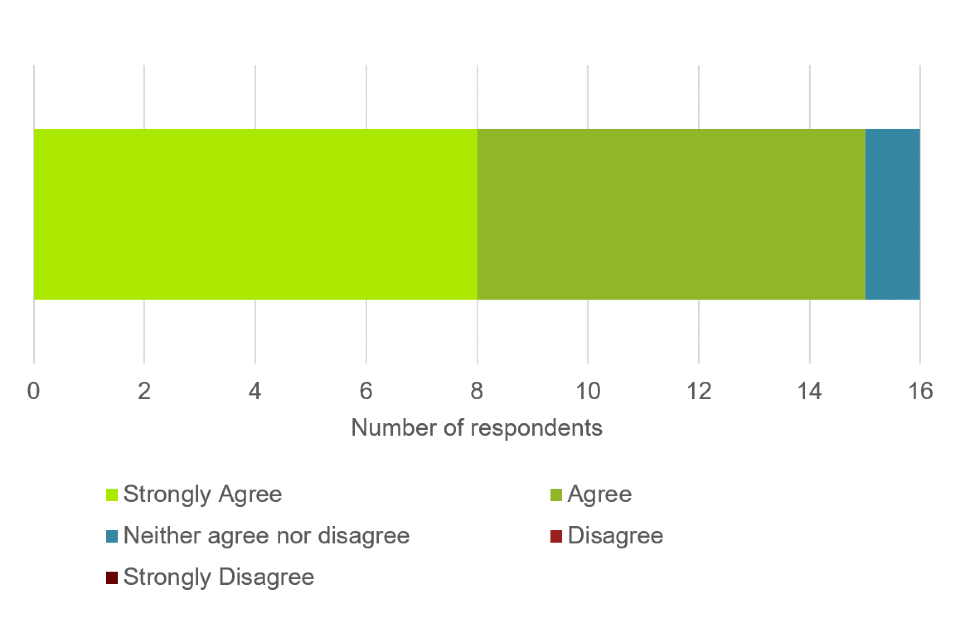

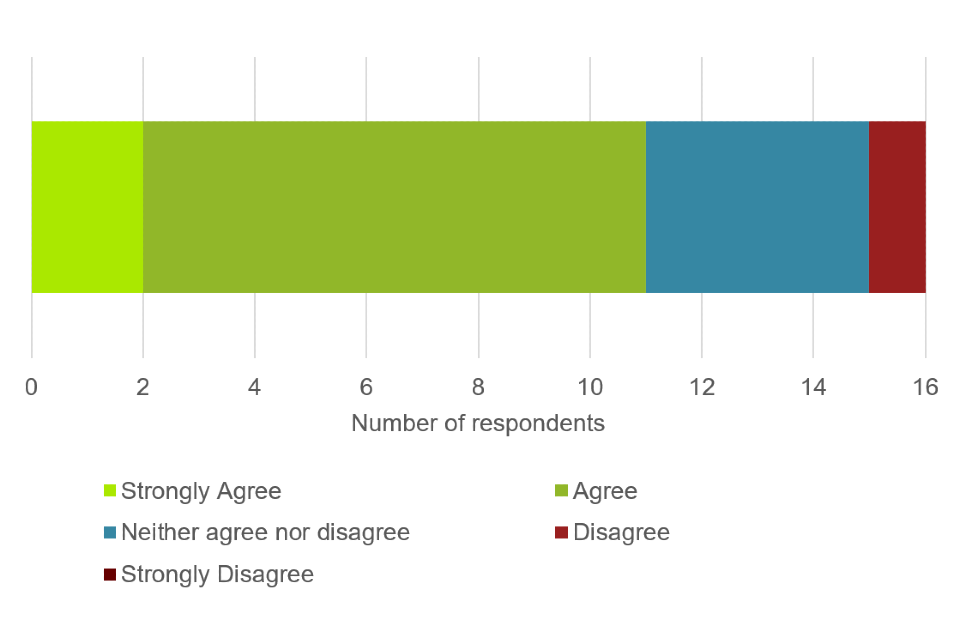

Of the 16 respondents to this question, 13 either agreed or strongly agreed with the proposal.

Eight of the responses supported the proposal and further highlighted the comparability between awarding organisations as well as the consistency of interpretation that would result from this approach. They all agreed that specifying how knowledge and skills should be interpreted and then assessed would ensure comparability between Digital FSQs offered by different organisations. One respondent reflected that our proposal would avoid a ‘race to the bottom’ where decisions about assessment were made for reasons of cost or manageability rather than validity of assessment.

Two respondents agreed with the proposal but asked for further guidance on the assessment of the content. Guidance was specifically requested on which skill statements and areas of content should be covered through formal assessment.

One respondent neither agreed nor disagreed with the proposal, asking for more detail as to which assessment approach would be acceptable. They felt that setting parameters within which the content statements should be treated would improve comparability across awarding organisations. The respondent also stressed the need for an innovative assessment approach and expected that any additional rules and guidance set by Ofqual would not stifle innovation. They suggested that awarding organisations should decide what approach to take in the design of the qualification and provide a rationale for this approach in their assessment strategy.

One respondent strongly disagreed with the proposed approach, stating that the content was too narrow. They felt that the awarding organisations were best placed to make decisions and improvements to content to ensure it was sufficiently broad.

Question 5

To what extent do you agree or disagree that we should require certain content statements to be covered within the course of study, rather than in the formal assessment?

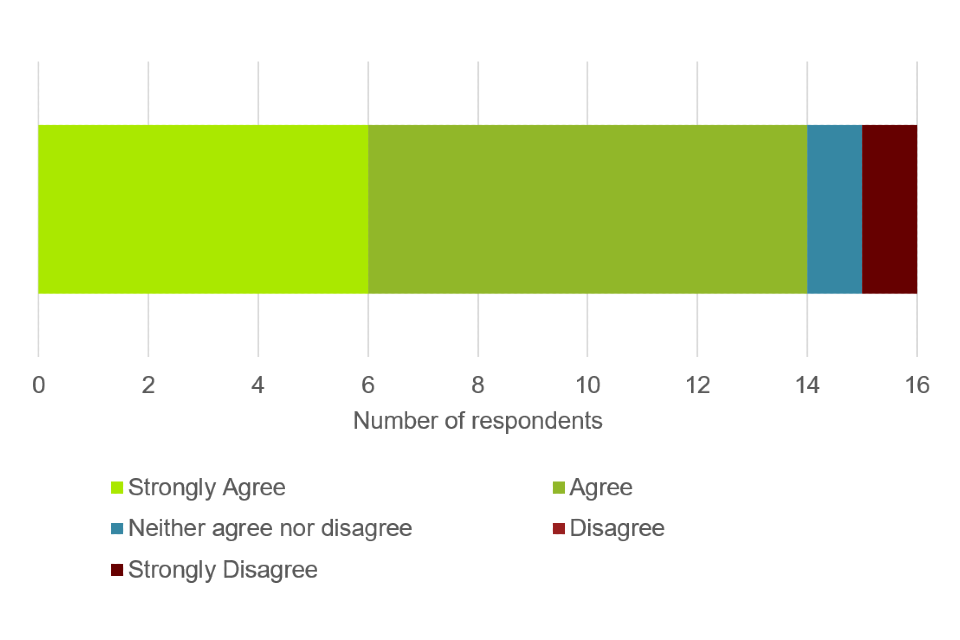

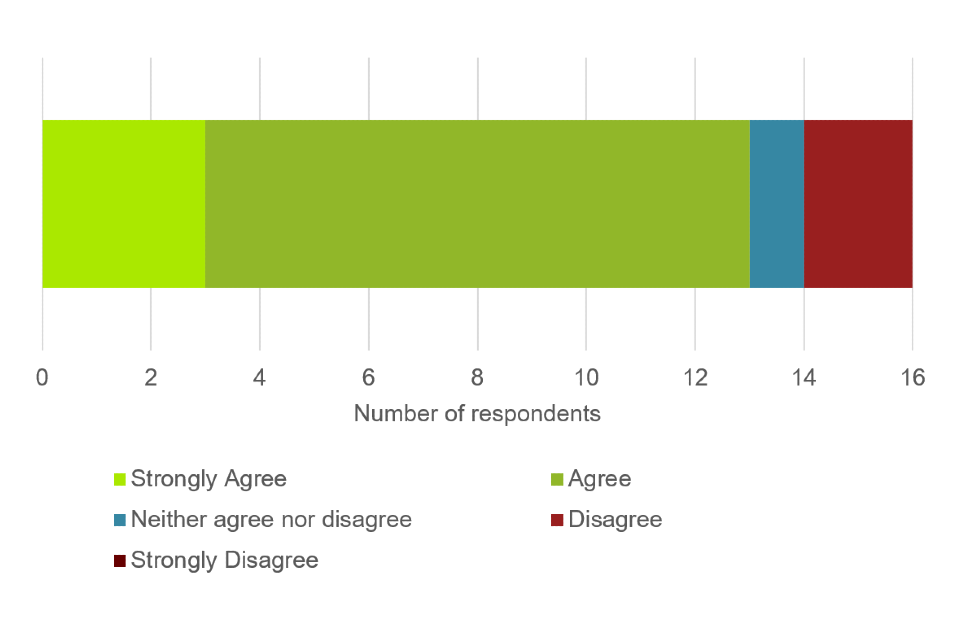

Thirteen respondents either agreed or strongly agreed with the proposal to require certain content statements to be covered within the course of study, rather than in formal assessment.

Seven respondents agreed with this proposal as they felt that certain elements of the content would be difficult to assess formally. This group suggested that some of the content statements would be better suited to being covered during the course of study and would be problematic to assess in a more formal way.

Two respondents welcomed the approach of not assessing all content formally, as it could reduce the costs awarding organisations incur. They felt that less awarding organisation responsibility in marking and moderating assessments would lead to reduced costs and help keep the assessments manageable for centres. They raised concerns that some of the content, if assessed formally, could lead to costs and additional burdens on the awarding organisations. An example provided was having to develop an online training environment or a website for learners to use. In addition, 1 respondent highlighted that it would be difficult to assess the content statement Entry level 4.2 ‘Securely buy an item or service online’ during the course of study because learners may not have the funds or financial arrangements to do this, such as a credit card.

Other responses received to this question included 1 respondent stating that assessing skills through coursework would be a more suitable form of assessment for some of the content outlined by the Department. They requested that if this approach is used then there should be clear guidance provided to awarding organisations and centres.

Another raised concerns about potential disruption to formal assessment if there were issues with IT equipment or internet connection. They agreed with the proposal as they felt some of the content should not be assessed to minimise the disruption that technical difficulties may cause.

One respondent neither agreed nor disagreed with the proposed approach outlined in this question. The respondent agreed that the demonstration of some of the skills statements would be difficult to assess and better lend themselves to coverage during the course of study. However, they suggested that further guidance needed to be developed on the level and nature of quality assurance that awarding organisations would apply to those components that are not formally assessed. They also felt that it was premature to determine whether certain content statements should be covered within the course of study, as awarding organisations might be able to develop approaches that allow some of the statements to be assessed formally.

Two respondents disagreed with the approach. One felt that it was unclear what monitoring activity awarding organisations would have to undertake during the course of study if formal assessment did not form part of the qualification. They stated that it was not clear how evidence would be gathered and then contribute to the overall achievement of the qualification. Finally, they raised the issue that if a large number of the skill statements are excluded from formal assessment then Digital FSQs and EDSQs would have a very similar approach, which could lead to confusion.

The other strongly disagreed with the proposal and suggested that if aspects of the content could not be formally assessed then they should be included as guidance rather than content requirements. They also requested clarification on the process by which evidence would be provided to the awarding organisation by learners or centres and the purpose of this evidence if it were not linked to formal assessment. They voiced concerns that some of the content requirements may not produce any meaningful evidence.

Question 6

To what extent do you agree or disagree that we should set rules around the sampling of subject content?

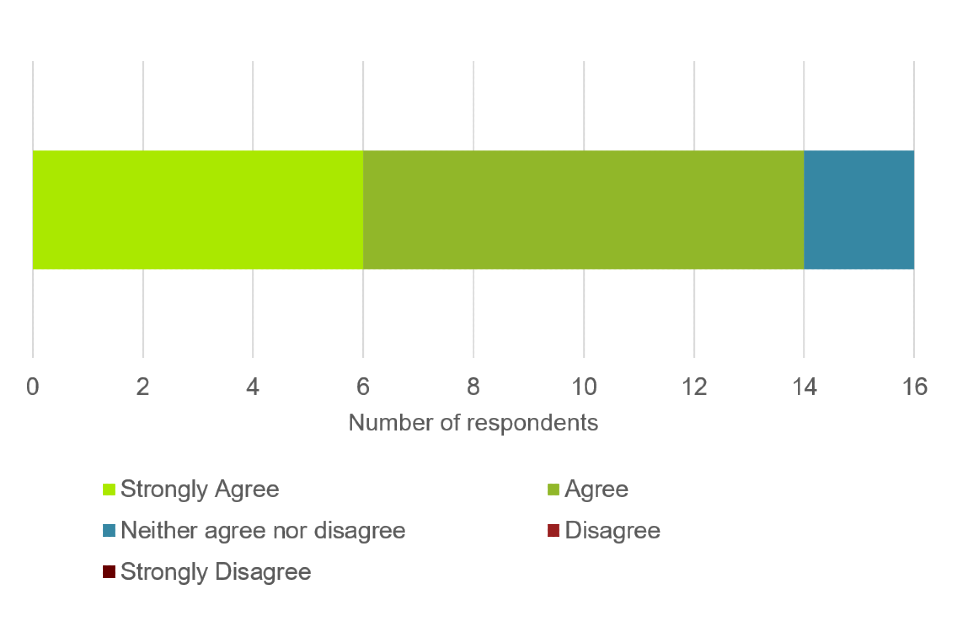

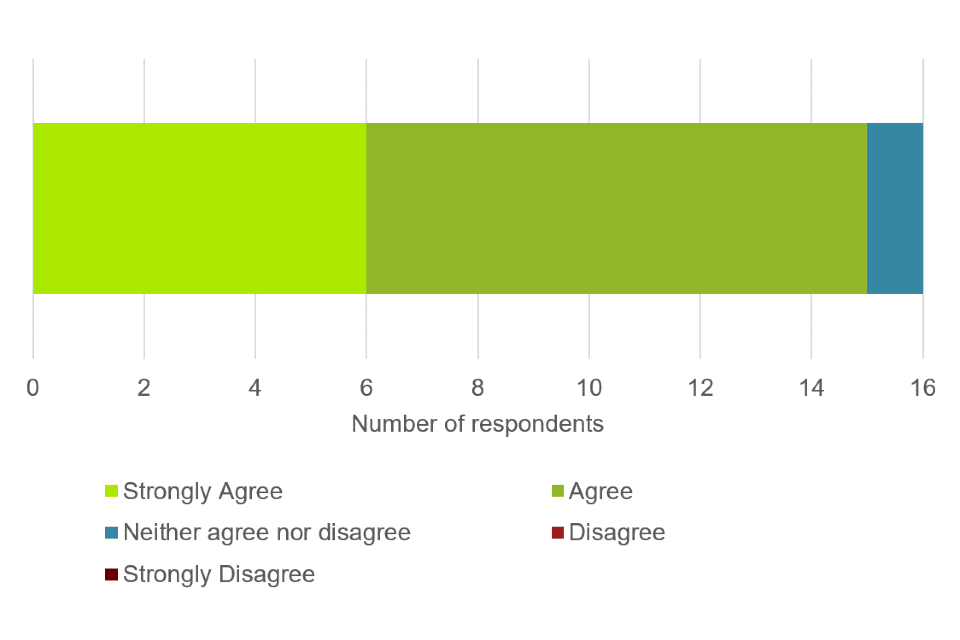

All 16 respondents to this question either agreed or strongly agreed that Ofqual should set rules around the sampling of subject content.

Six respondents welcomed this approach, as it would ensure consistency across awarding organisations offering Digital FSQs. This group also requested further guidance on the content to be sampled, with Ofqual clarifying its expectations to all awarding organisations so there would be a consistent approach used across qualifications.

Three respondents agreed with the proposal and suggested a longer-term approach to sampling content. They thought it important that there was not an expectation to test all content in each assessment, but that the full range of content should be tested over time. Concerns were raised that assessment would become predictable if all of the content was covered in each assessment. In addition, if assessments used a ‘problem-solving approach’ and so required an outcome but not a method for achieving the outcome, it would mean that it was harder for an awarding organisation to guarantee the content coverage when setting assessments where there was more than 1 way to solve the problem.

One respondent commented that sampling all subject content statements in each set of assessments could result in assessments being overlong and unmanageable for learners. They asked that the content best suited to formal assessment be identified and agreed so that the potential scale of assessments could be considered.

Question 7

To what extent do you agree or disagree that we should not set rules around weighting of skills areas but should instead require awarding organisations to ensure a reasonable balance across the different skills areas?

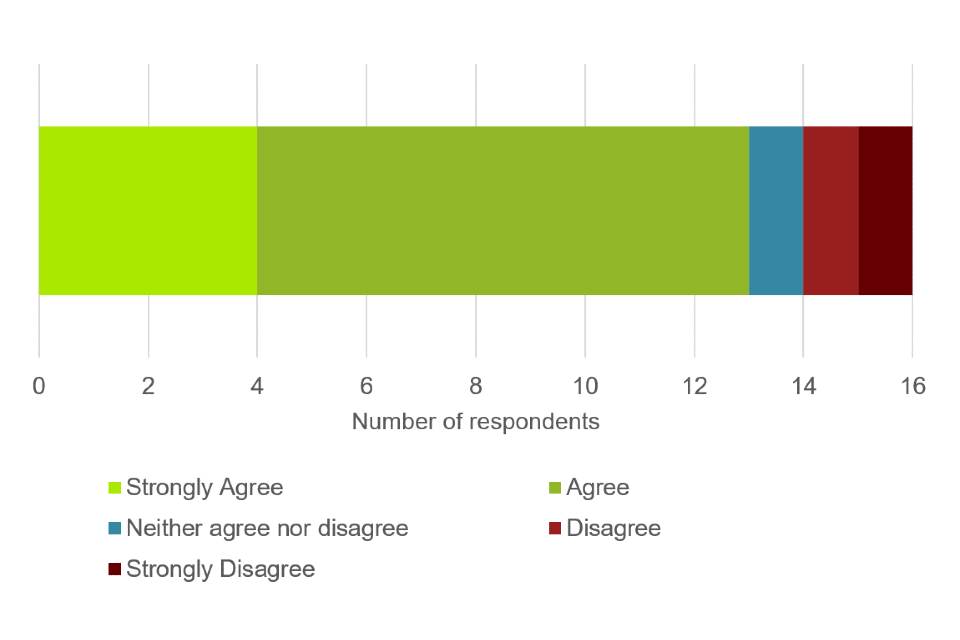

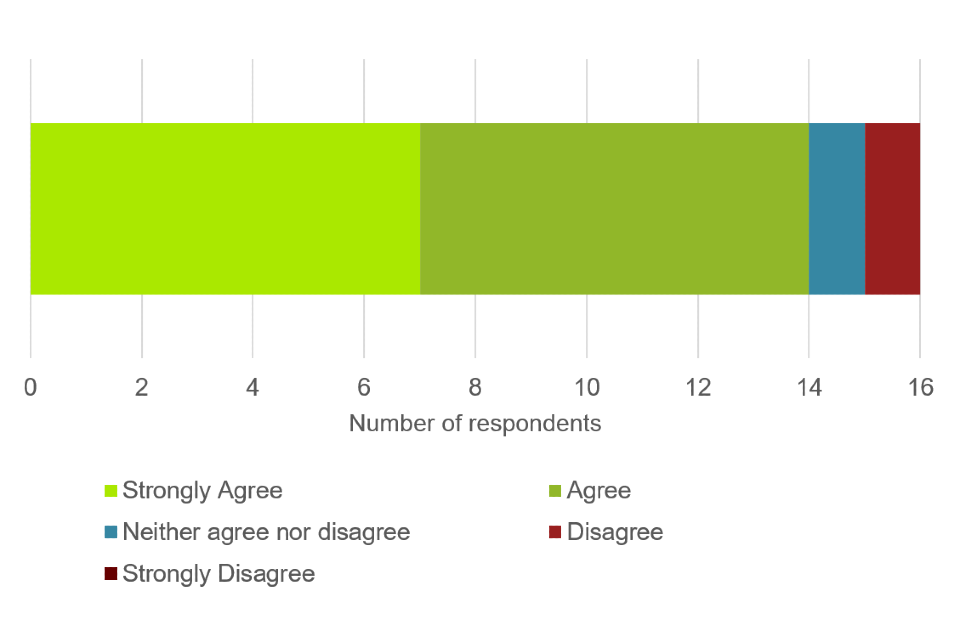

Twelve respondents agreed or strongly agreed that Ofqual should not set rules around weighting of skills areas but should instead require awarding organisations to ensure a reasonable balance across the different skills areas.

Two respondents noted that this approach worked well for FSQs in English and maths and supported a similar approach with Digital FSQs.

Two respondents agreed with the proposal as they felt that weighting of skills could constrain assessment development and lead to assessments that are not authentic.

One respondent agreed with the proposal and commented that rather than weightings there should be greater emphasis on skills in context and problem solving to emphasise the functional nature of the qualifications.

Three respondents either disagreed or strongly disagreed with the proposal. Each of these respondents raised concerns that this approach would reduce comparability between qualifications because of awarding organisations taking different approaches to weighting. They also suggested that if awarding organisations took different approaches to weighting skills, this could lead to confusion for tutors delivering the qualifications.

Question 8

Do you have any comments on the principles set out above, or as to the form the assessments should take?

Twelve respondents provided comments on the principles set out in the consultation or on the form the assessments should take.

Two respondents commented on the use of digital technology in the assessments. They supported the use of up-to-date technology but highlighted the potential burdens on awarding organisations that could result, such as affordability and maintaining the security of the assessments. Concerns were also raised about the access that centres and learners would have to some of the relevant high-end equipment, especially in deprived areas.

Several respondents supported the high-level principles outlined in the consultation but made specific comments on drafting of the principles. This included requesting:

- further clarity that the principles covered both formal and teacher-led assessments

- that a definition for both online and on-screen tests be communicated to awarding organisations, stating that clarification of both of these terms would help in the interpretation of the assessment methods

- clarity on the tools needed to be offered for assessment purposes

- clarity on what was meant by ‘making full use of recent advances in digital technology to enhance the quality and relevance of assessments’

One respondent commented that the number of assessments should be kept to the minimum necessary to aid tutors in delivering courses. They also commented that these assessments should be delivered on-screen or online to reflect the digital content of the qualifications.

Four respondents provided comments on the use of online or on-screen assessment. They all supported the use of online or on-screen assessment, especially for the testing of knowledge. However, they raised concerns about the impacts a move away from paper-based assessment could have. All 4 questioned whether this could raise issues of centre manageability, with some centres lacking the resource or infrastructure to be able to run the assessments as intended. An option to run paper-based assessments where required was supported by all 4 respondents.

One respondent raised concerns that the emerging assessment model could be overly burdensome and instead suggested that Digital FSQs should be assessed through a project-based task followed by an exam.

Two respondents stressed the importance of linking assessment to real life application either through the use of scenarios or linking to a vocational area of interest to the learner.

One respondent requested that the assessment and course of study should not require internet access, as this could be problematic for centres that prohibit internet use.

Question 9

Are there any regulatory impacts arising from the proposed principles?

Twelve respondents provided comments on the regulatory impacts arising from the proposed principles.

Two respondents outlined the potential impacts on awarding organisations that could result from the proposed principles. The respondents stated that to teach and assess some of the skills (such as transacting) a bespoke platform or additional software might need to be developed by awarding organisations. There would be costs involved in developing or sourcing these systems.

Four respondents suggested potential impacts on centres that could arise from the proposal. They raised concerns about the financial impact of providing access to the IT equipment needed to deliver the qualifications. There were further comments that this could be an issue for many centres and that there would be more equipment or resources required to deliver Digital FSQs than was the case for FSQs in ICT.

Other responses received to this question included:

- one respondent commented that a requirement for evidence to be provided by a learner through the course of study could lead to additional burden on centres and awarding organisations

- one respondent did not provide a comment on the impacts of the proposed principles as they felt they needed clarity on certain key points, especially principle 6, before they could make an informed comment

- one respondent acknowledged that the introduction of 6 new principles adds to the volume of regulation, but felt that these principles were proportionate

One respondent did not feel there were any additional impacts other than those we had identified and 2 respondents did not think there would be any regulatory impacts resulting from the proposed principles.

Question 10

Are there any equalities impacts arising from the proposed principles?

Fifteen respondents commented on potential equalities impacts that may arise from the proposed principles.

Four respondents commented that certain groups might not be able to complete or fully engage with the qualifications if internet access was required. Groups identified were those in offender institutions where internet access is controlled and people from certain cultural backgrounds where full internet access may conflict with their beliefs.

One respondent highlighted the need for assessments to take into account that learners may have English as a second language or have very low-level language skills.

Six respondents raised their concerns that there may be impacts on learners with disabilities if the proposed principles are implemented. This group highlighted the need for testing of any new assessment to ensure that a wide range of learners with disabilities could access and complete them.

One respondent commented on the potential impact on learners with disabilities and the role of exemptions. They highlighted how the current approach did not grant exemptions below the level of a component, but the proposal would mean that Digital FSQs would be a single component qualification. They suggested a flexible approach so that certain groups would not be denied an exemption and therefore unable to access the qualification.

One respondent acknowledged the potential for learners with disabilities to be impacted, but felt that new modes of assessment could provide opportunities to increase the accessibility of the qualifications for this group and increase participation.

One respondent said they could not identify any equalities impacts that may result from the principles.

One respondent provided comments that did not cover any equalities impacts, but related to content and the link to employability. These comments are recorded against the relevant questions.

Question 11

To what extent do you agree or disagree that we should set rules around the number of components within Digital FSQs?

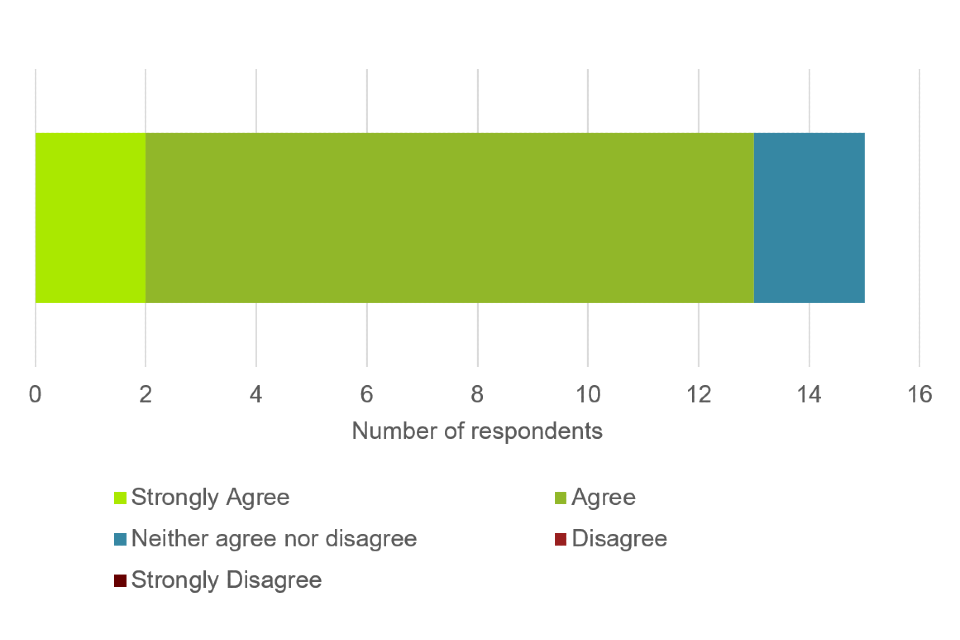

Thirteen respondents either agreed or strongly agreed that we should set rules around the number of components within Digital FSQs.

Four respondents agreed with the proposal and provided further comments on how this would lead to consistency in approach. They believed this would contribute to greater comparability between Digital FSQs due to awarding organisations adopting a uniform approach to components and would increase employer confidence in the qualifications.

Two respondents agreed with the proposal but requested that any rules in this area should not be too restrictive. They felt that there should be flexibility in the development of Digital FSQs and a greater range of design options should be available.

One respondent agreed that we should set rules on the number of components and supported a single component approach to Digital FSQs. They felt that a single overall component would support design, qualification purpose and learner progression. In their comments, they outlined the pros and cons of a multi component approach but felt that there were more drawbacks than benefits for having more than 1 component.

One respondent disagreed with this proposed approach, stating that each additional component could be a barrier to achievement for some learners.

One respondent disagreed with the proposal and favoured an approach where awarding organisations made the decision on how many components would make up the Digital FSQs they provided. They also felt it was difficult to comment on the proposal without decisions on how the skills statements should be assessed.

Question 12

To what extent do you agree or disagree that Digital FSQs should be made up of a single overall component?

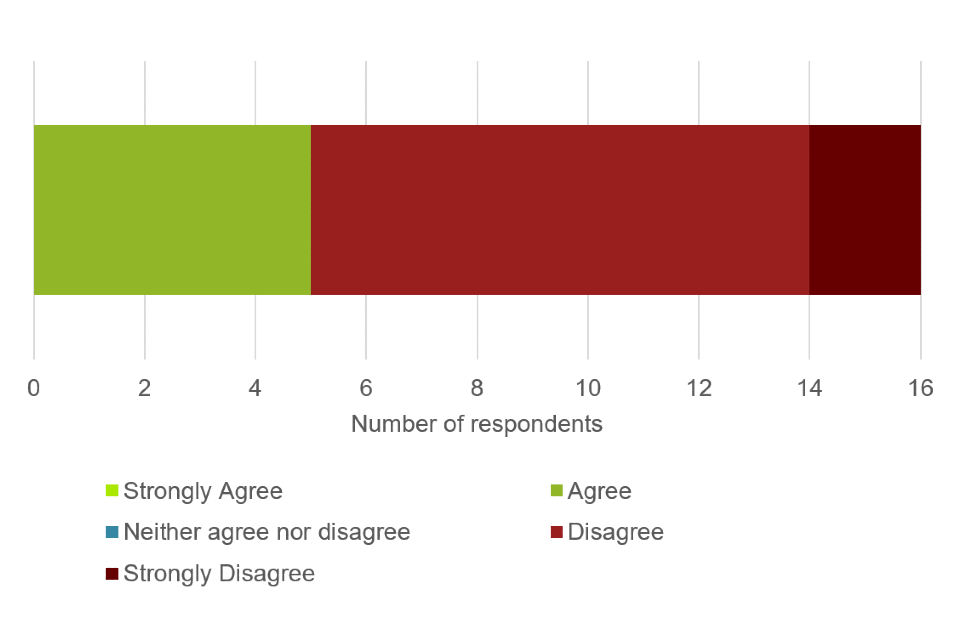

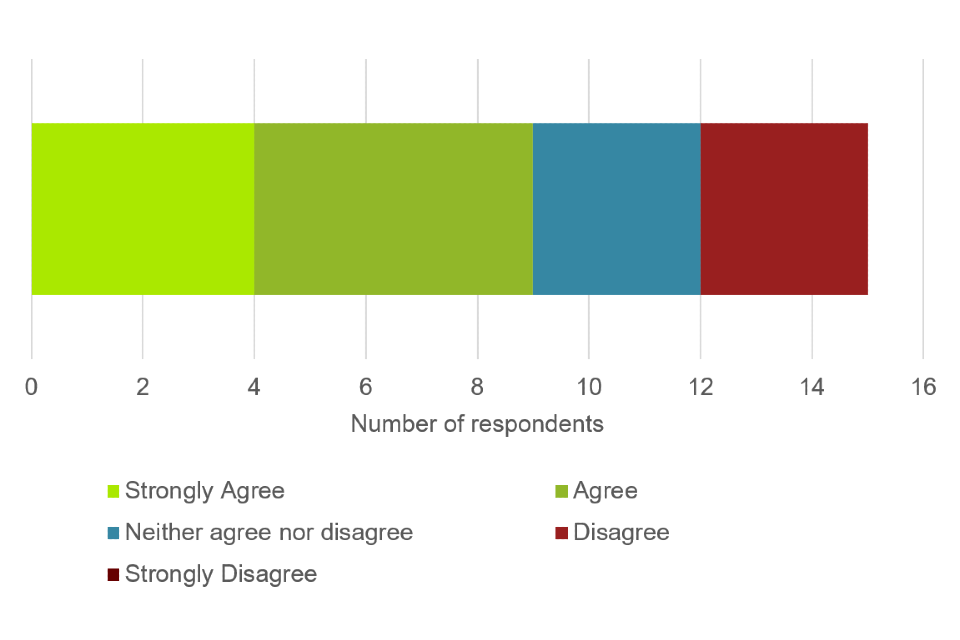

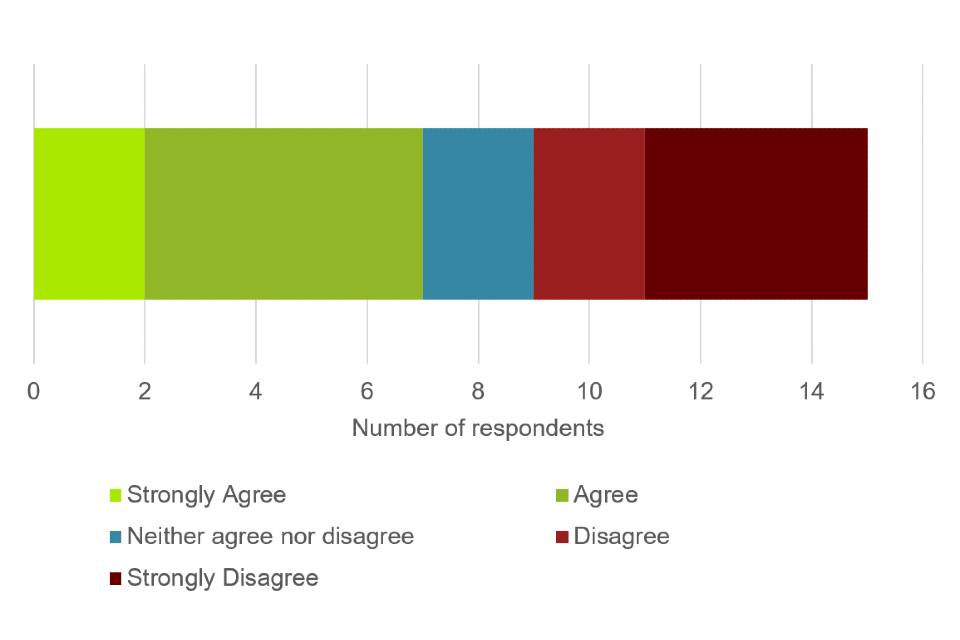

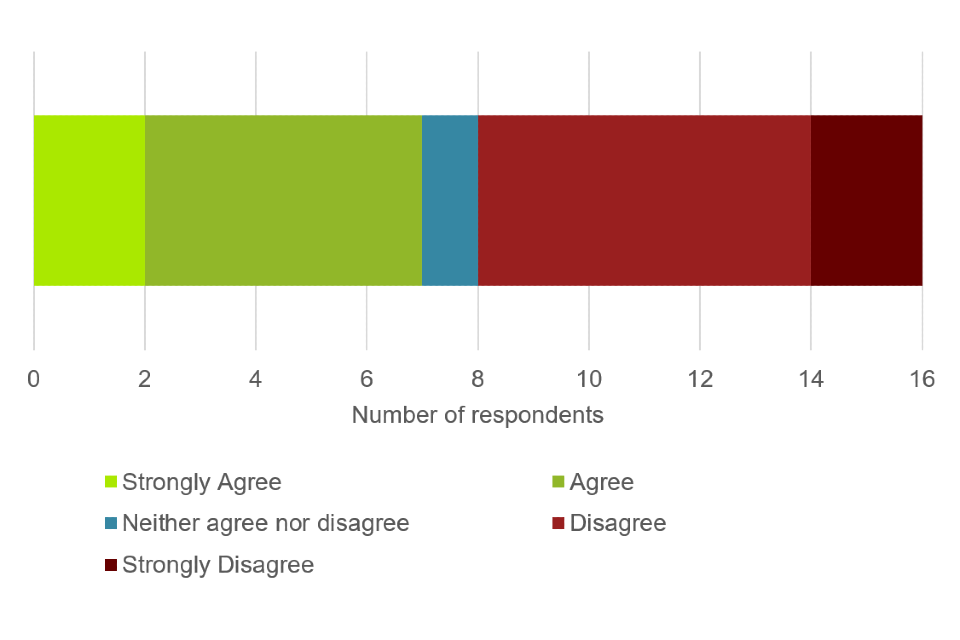

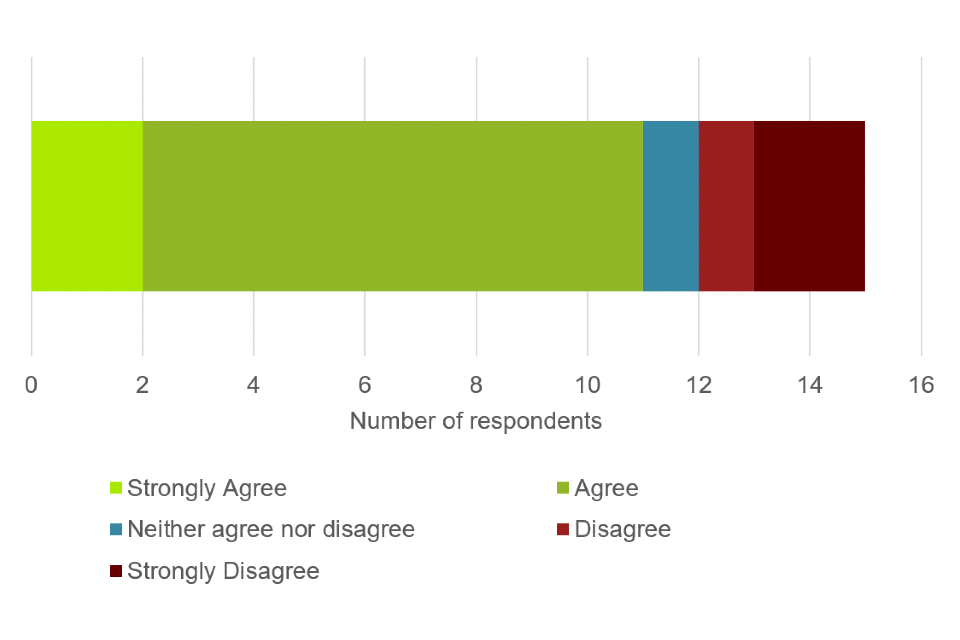

Seven of the respondents to this question either agreed or strongly agreed that Digital FSQs should be made up of a single overall component. However, the same number of respondents either disagreed or strongly disagreed.

Eleven respondents provided additional comments to outline the reasons for their responses.

Two respondents neither agreed nor disagreed that Digital FSQs should be made up of a single overall component. Both respondents asked for clarification on the subject content that would be assessed and the number of assessments that would need to be taken by learners. They felt that they could not make an informed decision unless these points were clearer.

Four respondents agreed with the rationale presented in the consultation document for a single component approach to Digital FSQs. They highlighted the benefits this approach would bring, such as comparability between qualifications, simplicity of design and efficiency in delivery.

In addition, 1 respondent supported there being 1 component in Digital FSQs as they felt the inter-related nature of the skills areas would benefit from 1 component. They felt that this would aid teaching and learning, as well as improving manageability for centres.

Four respondents who disagreed with the proposal provided comments. These included:

- one respondent supported an approach where the decision on the number of components is at the discretion of awarding organisations but that it was difficult to comment until a decision on which skills statements would be assessed through the formal assessment had been made

- one respondent felt that there should be an upper limit to the number of components, they proposed an approach of 2 components, 1 assessed internally and 1 assessed externally

- one respondent felt that employers would prefer students to have completed more than 1 component in order to reflect the diverse requirements of a workplace

Question 13

To what extent do you agree or disagree that we should set rules around the number of assessments within Digital FSQs at both qualification levels?

Twelve respondents either agreed or strongly agreed that we should set rules around the number of assessments within Digital FSQs at both qualification levels.

Eight respondents supported this proposal and provided comments to say they felt that this would improve comparability between qualifications. They felt that a consistent approach by awarding organisations was needed to ensure comparability.

One respondent strongly disagreed and stated that they felt the decisions on the number of assessments in Digital FSQs should be made by awarding organisations.

Three respondents neither agreed nor disagreed with the proposal. Two of the 3 respondents provided a comment to say that the proposals relating to assessments were not sufficiently clear for them to make a decision at this time.

Question 14

Do you have any comments on the number of assessments that should be permitted or required?

Nine respondents provided a comment on the number of assessments that should be permitted or required.

Two respondents commented that any decisions taken should ensure that the number of assessments is manageable for centres and learners.

One respondent felt that any decisions on the number of assessments in Digital FSQs should be made by awarding organisations. The rationale for the decisions taken would be outlined in assessment strategy documents.

Other comments on our proposal included:

- one respondent proposed an approach of 2 assessments, 1 internally assessed and 1 externally assessed

- one respondent commented that the number of assessments should be kept as low as practically possible, but that a single component should not be the only option available

- one respondent welcomed Ofqual setting rules on the number of assessments, but felt that knowledge should be assessed without the need for internet access

- one respondent commented that there should be a mix of assessment types used throughout the qualification

- one respondent suggested an approach where there would be an assessment for each unit that makes up the qualification

- one respondent did not believe that knowledge and skills could be assessed together and instead proposed an approach where knowledge would be assessed via on-screen assessment, with a practical task involved in the assessment of skills

Question 15

What do you consider are the benefits and risks of permitting Entry level learners to split their assessments into different sessions? Are there any equalities issues that we should be aware of?

Twelve respondents provided comments on the proposal to permit Entry level learners to split their assessments into different sessions and the potential equalities impacts that would need to be considered.

Four respondents commented that, for some learners at Entry level, taking an assessment in a single session could be problematic. They suggested that the flexibility to deliver the assessments over a number of sessions would limit the impact on some groups with protected characteristics.

Two respondents identified both benefits and risks that might result from splitting assessments over different sessions. They felt that splitting assessments would increase learner engagement and improve concentration. However, they suggested that splitting the assessments could lose the ‘real life’ functionality of the assessment and might demotivate learners who found the assessment harder than expected. They also saw a risk that assessments might be split at inappropriate stages, and suggested that rules should be in place to set where assessments are split.

It was also reported that Digital FSQs would have more content areas to cover than the current Entry level ICT qualifications. Because Digital FSQs would assess a larger volume of content, the assessments would be longer, so splitting them across sessions would make them more manageable for learners.

One respondent commented that they supported splitting assessments into different sessions, but only as long as the total time did not become excessive. They were unsure whether this approach should be applied to all learners or only as a reasonable adjustment for those with protected characteristics.

It was also felt that there was an accessibility issue for Entry level 1 and 2 learners that splitting assessments would not alleviate. Another respondent believed that splitting the assessment into different sessions would lower the demand of the qualification.

One respondent reported that they supported an approach where all learners taking Digital FSQs would have the option to split their assessments into different sessions and another acknowledged the security implications that may arise from splitting assessments over sessions. In addition, 1 respondent asked for clarity on whether this would be offered to all learners or just those with disabilities.

Question 16

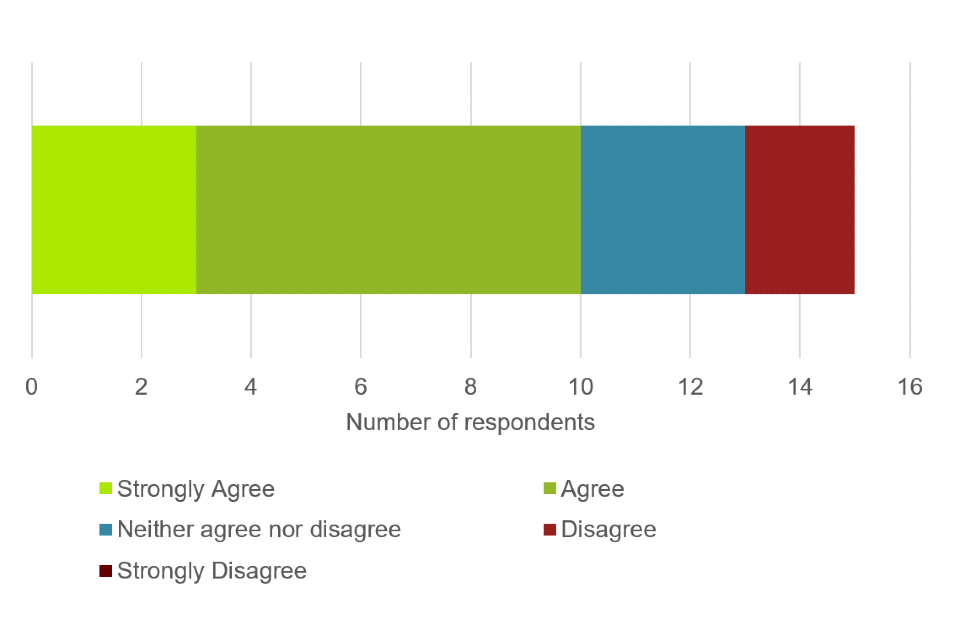

To what extent do you agree or disagree that we should not introduce rules around assessment times for Digital FSQs?

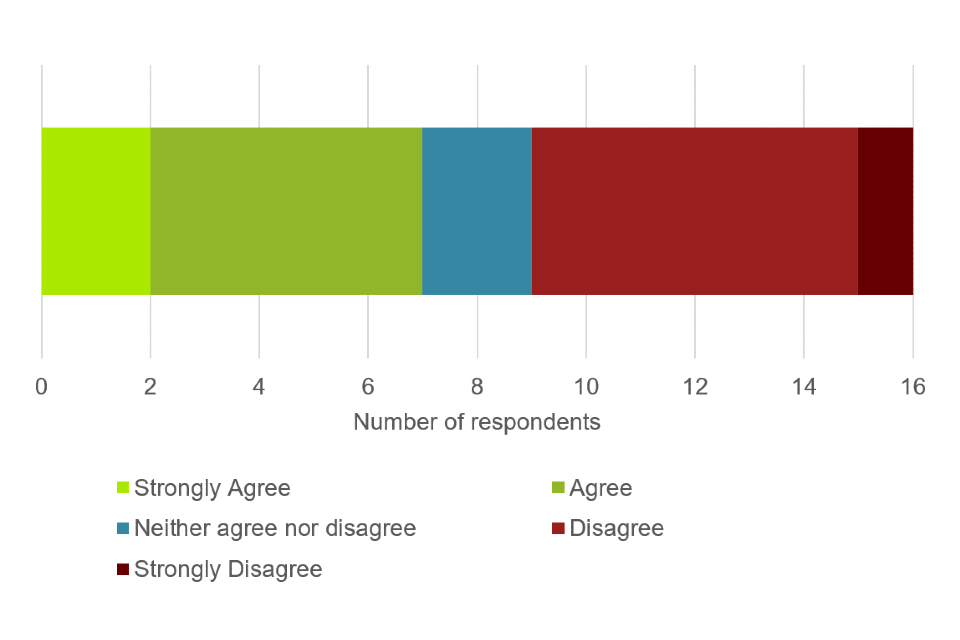

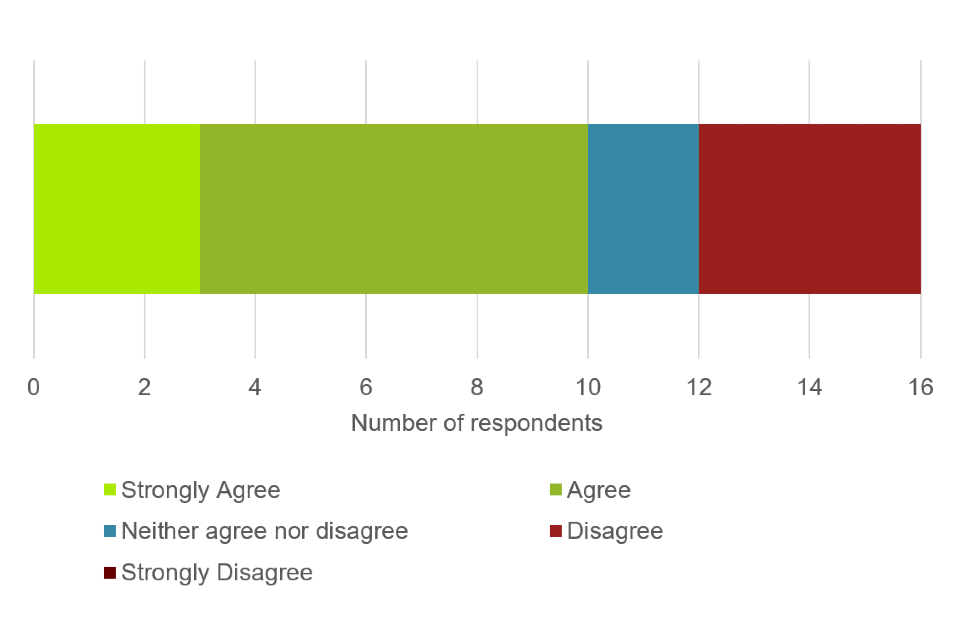

Eleven respondents disagreed or strongly disagreed that we should not introduce rules around assessment times for Digital FSQs.

Four respondents agreed with the proposal. Those who made further comments cited flexibility for awarding organisations to set timings and marks per timings as the reason they agreed. However, 1 respondent suggested that setting minimum and maximum assessment times, as is the case for FSQs in English and maths, would also provide this flexibility for awarding organisations.

Three of the respondents who disagreed with the proposal provided a comment to say they felt an overall time for the assessments should be agreed and set.

Two of the respondents who disagreed said in their comments that a fixed assessment time would ensure comparability between awarding organisations. Both objected to assessment times differing between awarding organisations, as they felt that the focus should be on the most appropriate assessment conditions, not on how the qualification could be marketed to centres and learners.

Two respondents thought that we were proposing no time limits at all for the assessments. Both disagreed with this approach, with 1 citing the resource implications that untimed assessments may bring. In their comments, they outlined the difficulties in staffing and facilitating untimed assessments. The other reflected that time limits would better reflect the workplace where tasks are completed under time pressure.

Question 17

To what extent do you agree or disagree that we should prohibit paper-based, on demand assessment in Digital FSQs at both qualification levels?

Nine respondents disagreed or strongly disagreed that we should prohibit paper-based, on demand assessment in Digital FSQs at both qualification levels.

Overall, 13 respondents provided additional comments to outline the reasons for their response.

Two respondents disagreed with the proposal and provided comments that raised the potential issues with accessibility that would arise from prohibiting paper-based assessment. They felt that some learners would not be able to interact with an on-screen assessment and if the option of a hard copy is removed, they might not be able to complete the assessment and qualification.

Four respondents outlined their concerns that technological limitations for some centres and learners would make anything other than paper-based assessment problematic. A lack of the resources and equipment needed to deliver the test, and no or limited internet connection, were highlighted as significant barriers for some learners.

Three respondents agreed with the proposal and in their comments felt that this would improve the security of the assessment.

Other responses received to this question included:

- one respondent disagreed with the proposal as they believed that a knowledge-based assessment could take either a paper-based or digital form and still be valid

- one respondent disagreed with the proposal and highlighted that not allowing paper-based assessments might limit centres’ ability to contextualise Entry level 3 assessments

- one respondent neither agreed nor disagreed with the proposal and felt that there should be flexibility in the delivery of the assessments, but that if Ofqual identified risks with paper-based assessments then rules should be set to prohibit them

- one respondent disagreed with the proposal to disallow paper-based assessment, questioning whether Ofqual would mandate the use of on-screen assessment and not allow the possibility of printing the questions out for candidates to complete

- one respondent disagreed and highlighted the difference in approach to Digital FSQs and FSQs in English and maths. They acknowledged that the digital content lends itself to on-screen assessment, but that the difference in approach from other FSQs would likely lead to confusion with centres and learners

Question 18

To what extent do you agree or disagree that we should not place any other restrictions around availability of assessments in Digital FSQs?

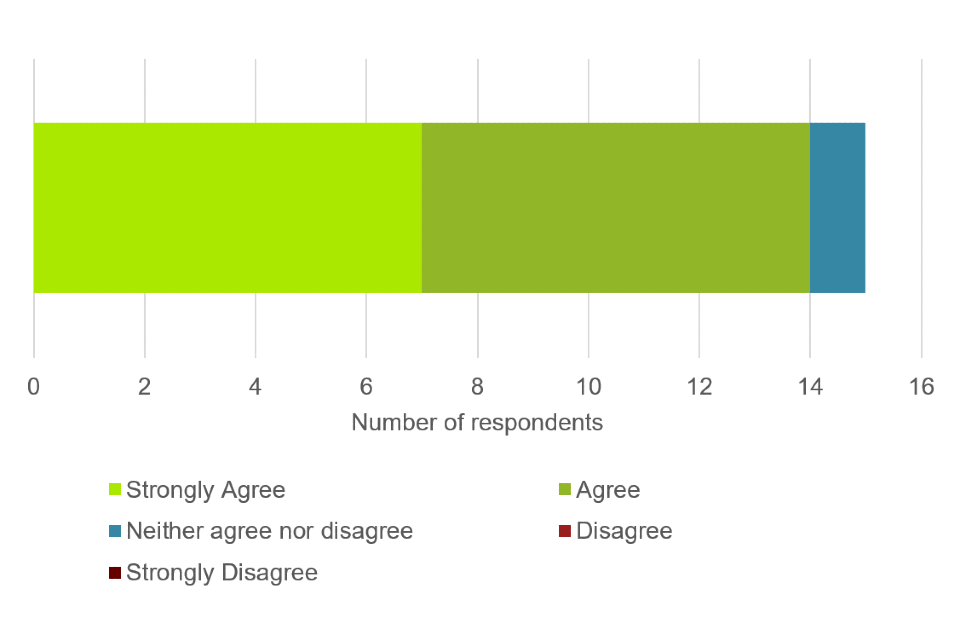

All but 2 respondents agreed or strongly agreed that we should not restrict assessment availability in any other way because of the importance of flexibility for the adult target audience for these qualifications.

Two respondents also commented that awarding organisations should have the ability to determine how often to provide assessments, taking account of the needs of different centres and their target learner groups, and should explain their approach in their assessment strategy.

One respondent who agreed with our proposal not to otherwise restrict assessment availability commented that there were still challenges for security and comparability.

Another respondent who agreed with the proposal commented that there might be a need to agree restrictions around availability of assessment in the first year of awards until a standard has been agreed across all awarding organisations.

The 2 respondents who neither agreed nor disagreed with our proposal did not provide a comment.

Question 19

Are there any regulatory impacts arising from our proposal to prohibit paper-based, on-demand assessment in Digital FSQs, at both qualification levels?

Ten respondents commented on this question, 2 of whom did not believe there were any regulatory impacts arising from the proposal.

Seven respondents outlined regulatory impacts that they felt would arise from our proposal to prohibit paper-based, on-demand assessment in Digital FSQs, at both qualification levels.

Two respondents outlined the potential impact of increased costs for awarding organisations or centres if paper-based assessments were not permitted, and assessments were made available on-screen and online.

Other comments on our proposal included:

- if paper-based assessment was prohibited then there might be impacts on resourcing and equipment within centres

- if assessment was solely delivered online, then tutors would not have access to hard copy papers to use with subsequent cohorts and to improve their teaching methods

- paper-based qualifications can put undue burden on centres when providing access arrangements for learners

- the difference in approach to paper-based assessment in Digital FSQs compared to other functional skills qualifications might cause confusion in centres and with learners

Question 20

Are there any equalities impacts arising from our proposal to prohibit paper-based, on-demand assessment in Digital FSQs, at both qualification levels?

Eleven respondents provided comments on equality impacts arising from our proposal to prohibit paper-based, on-demand assessment in Digital FSQs, at both qualification levels.

Four respondents felt that there could be impacts on learners that need a paper-based assessment for accessibility reasons.

Four respondents commented that they thought there could be impacts on the ability for reasonable adjustments to be provided by centres if paper-based assessments were to be prohibited.

One respondent felt that any potential impacts could be mitigated through the use of access arrangements. Two respondents raised concerns that centres and learners that cannot access the internet (for reasons covered by the Equality Act 2010) may be negatively impacted.

Question 21

To what extent do you agree or disagree that we should set a bespoke Condition which requires the hours of Guided Learning for Digital FSQs to align with the figure set by the Department?

The majority of respondents (10 in total) agreed with our proposal to set a bespoke Total Qualification Time Condition. Two disagreed and 4 neither agreed nor disagreed.

Those who agreed or strongly agreed commented that:

- where the hours of Guided Learning have been determined by the Department and all awarding organisations must align with this figure, it is helpful to have this recognised in a bespoke Condition

- because the hours of Guided Learning have not been determined by awarding organisations in the way that is normally required by the General Conditions of Recognition, a bespoke Condition means that awarding organisations cannot be held to account for a figure they have no control over

- this has become standard practice with other qualifications such as the reformed FSQs in English and maths

One respondent who agreed also asked for clarification on what would happen if the hours of Guided Learning set by the Department proved not to be appropriate.

One respondent who neither agreed nor disagreed commented that determining hours of Guided Learning supported teachers with their implementation planning.

One respondent who disagreed commented that there was likely to be a wide variety of learners taking these qualifications and so flexibility in hours of Guided Learning was necessary.

Question 22

To what extent do you agree or disagree that we should require a compensatory approach to assessment within Digital FSQs at both qualification levels?

Nine respondents agreed or strongly agreed that we should require a compensatory approach to assessment. Five respondents neither agreed nor disagreed and 2 respondents disagreed.

Comments from those who agreed or strongly agreed included:

- the proposed approach is in line with that of the reformed FSQs in English and maths

- a compensatory approach to assessment is appropriate in light of the purpose of the qualifications and the inter-related nature of the subject content

One respondent who agreed with the proposal asked for clarification on how a compensatory approach would be applied if there were separate assessment components for knowledge and skills. They raised concerns that employers may expect learners to have passed both components and that if this was not the case then the credibility of the qualification could be impacted. One respondent who neither agreed nor disagreed made a similar point, proposing that compensation should not apply across components.

One of the other respondents who neither agreed nor disagreed said that they did not understand the question. The other 3 did not provide a comment.

One of the 2 respondents who disagreed commented that learners who did not pass all units would be penalised with this approach but did not explain why they thought this to be the case.

The other respondent who disagreed did so because they questioned how a pass or fail qualification could be compensatory. They also asked whether a compensatory approach meant that learners could achieve high marks in 1 skills area and no marks in another skills area, but could still pass.

Question 23

To what extent do you agree or disagree that we require Digital FSQ assessments at both qualification levels to use mark-based approaches to assessment?

Ten respondents agreed that we should require awarding organisations to use mark-based approaches and to separate the allocation of marks from decisions about grading. Two neither agreed nor disagreed and 4 disagreed.

Comments from those who agreed or strongly agreed included:

- the application of mark-based approaches supports the use of a compensatory approach to assessment

- the approach is consistent with that used in Essential Digital Skills and the reformed FSQs in English and maths

One respondent who neither agreed nor disagreed with our proposal also commented that mark-based approaches should give the opportunity to standardise assessment decisions and to ensure that variations in assessment difficulty are considered in determining pass marks.

Those who disagreed or strongly disagreed commented that:

- a mark-based approach was not appropriate for all subject content statements, in particular those with a focus on practical skills where learners either can or can’t perform that skill

- a decision about the use of marks can’t be made without knowing which statements fall within the formal assessment

- a combination of marks and judgements against criteria is used in FSQs in English

- awarding organisations should be allowed to decide whether to use marks or judgements against criteria and be required to justify their approach in their assessment strategy

Some respondents also asked for clarification on whether this proposal applied to the assessment of skills statements as part of the course of study, and how the assessment of these statements would contribute to the overall mark.

Question 24

To what extent do you agree or disagree that we should require awarding organisations to set assessments for Digital FSQs at both qualification levels?

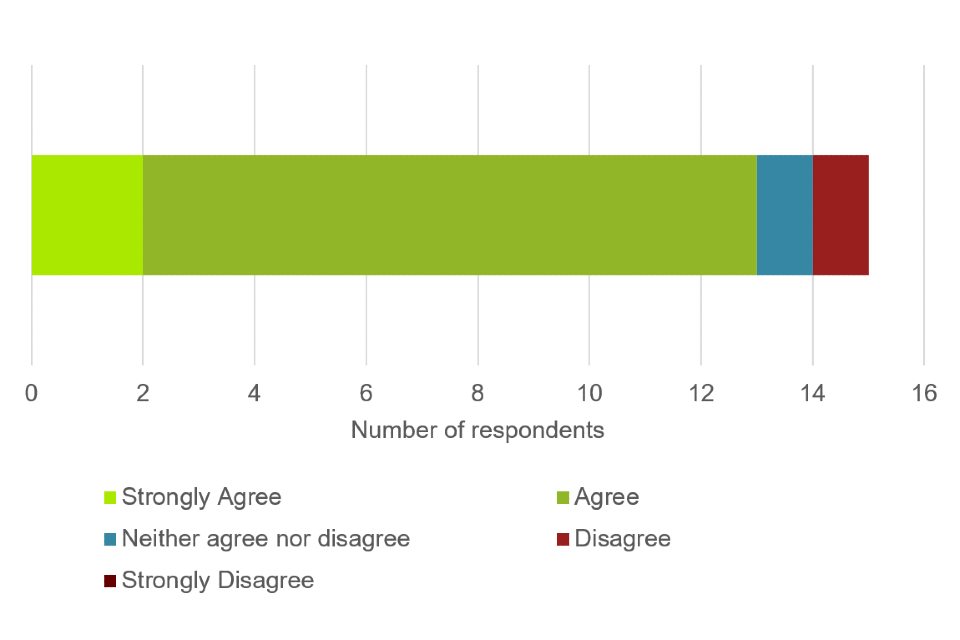

All but 1 respondent agreed or strongly agreed with our proposal.

Reasons given included:

- requiring assessments to be set by awarding organisations would help to maintain confidence in the new qualifications

- it would ensure appropriate coverage of the subject content and would support comparability across centres and learners and over time

- centres don’t have the assessment expertise, time or resources to create their own assessments, unlike awarding organisations

- the proposal is consistent with the approach taken with the reformed FSQs in English and maths

One respondent also asked for clarification on the definition of ‘set by the awarding organisation’, while another stated that they would welcome a level of flexibility and innovation, permitting centres to contextualise the set assessments based on the demographics of their learners.

One respondent neither agreed nor disagreed but did not provide a comment.

Question 25

To what extent do you agree or disagree that we should require Level 1 Digital FSQ assessments to be marked by the awarding organisation?

Nine respondents agreed or strongly agreed with our proposal. Comments in support of our proposal included:

- this proposal was consistent with the approach taken for the reformed FSQs in English and maths and so would help centre understanding

- marking by awarding organisations would provide the highest level of control

- if the assessments are delivered on-screen or on-line, the need for centre marking won’t arise

One respondent who agreed with our proposal commented that the case for a higher level of control for a relatively low stakes qualification was not particularly compelling, but they did not feel there would be a need for teacher marking. Another respondent questioned what would be required for the skills statements that fall outside of the formal assessments.

Two respondents neither agreed nor disagreed. One did so because they were unclear whether our definition of marking by awarding organisations also covered centre marking which was then quality assured by an awarding organisation. The other did so because they thought we were proposing that awarding organisations should mark internal assessments (by which we think they meant centre-devised assessments).

Comments from the 3 respondents who disagreed with our proposal included:

- if a full quality assurance process is in place at centre level, there should be no reason why assessments could not be centre marked as is permitted at Entry level, which would also speed the process for certification

- requiring awarding organisations to mark assessments would stifle innovation and the ability of awarding organisations to respond to the needs of different learner groups

- the practical and skills based nature of the subject content would be more validly assessed as part of on-going teaching, learning and assessment

- requiring an externally set and marked test would increase the robustness of the assessment but at the expense of realism

- requiring awarding organisations to mark all tasks would limit what could be assessed as the skills statements don’t lend themselves to external assessment

- awarding organisations should be able to decide the marking approach and explain it in their assessment strategy

Question 26

To what extent do you agree or disagree that we should allow, but not require, Entry level Digital FSQ assessments to be centre marked?

All but 3 respondents agreed or strongly agreed with our proposal to permit but not require centre marking in Entry level qualifications. One neither agreed nor disagreed and 2 disagreed or strongly disagreed.

Reasons given for agreeing with our proposal included:

- Entry level qualifications should be as flexible as possible to enable centres to assess their learners as and when they are ready to take the assessments

- allowing centres to mark learner assessment would support the development of innovative assessment approaches

- centre marking would improve the holistic teaching and learning of the qualification content, enable learners to understand the interdependencies of the standards, and ensure the validity of the assessment

- Entry level Digital FSQs are not high risk qualifications and so centre marking would be appropriate

- not permitting centre marking would lead to additional costs for awarding organisations and centres

The respondent who neither agreed nor disagreed with our proposal commented that if the assessments were likely to be on-screen and on-demand, then the advantages of a centre-marked approach would be limited. A respondent who agreed with our proposal made a similar point.

The 2 respondents who disagreed or strongly disagreed with our proposal did so either because they felt that decisions about marking should be made by the awarding organisation and explained in their assessment strategy, which would support innovation, or because they thought that our proposal was requiring awarding organisations to mark assessments, which would incur additional costs and be restrictive.

One respondent also commented on the level of risk attached to Digital FSQs, stating that funding models linked to an entitlement can be susceptible to fraudulent behaviours from a small number of providers, and that the high stakes nature of some qualifications is not the only reason why an awarding organisation might want to exercise tight controls.

Question 27

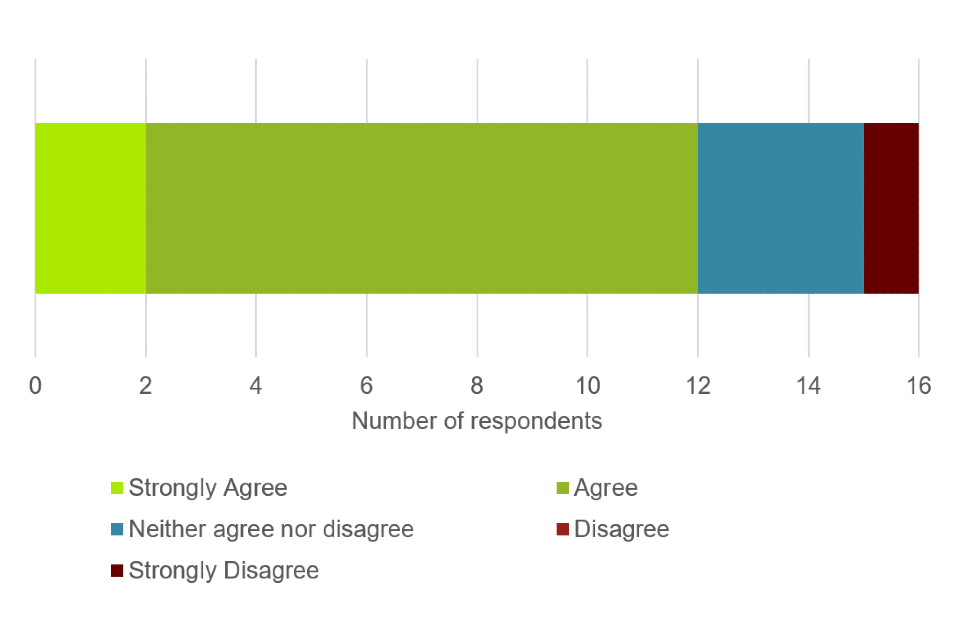

To what extent do you agree or disagree that we should allow, but not require, adaptation of contexts within assessments for Entry level Digital FSQs?

All but 2 respondents agreed or strongly agreed with our proposal to permit adaptation of contexts in assessments at Entry level. Several commented that this could help to make assessments relevant to the different learner groups who might take the qualification.

One respondent neither agreed nor disagreed with our proposal but made the same point that adaptation of contexts in assessments could make assessments more relevant to learners.

The respondent who disagreed stated that adaptation of contexts would not be possible if assessments had to be delivered on-screen or on-line as suggested in our high-level principles. Some respondents who agreed with our proposal raised the same point.

Question 28

To what extent do you agree or disagree that we should prohibit adaptation of contexts within assessments for Level 1 Digital FSQs?

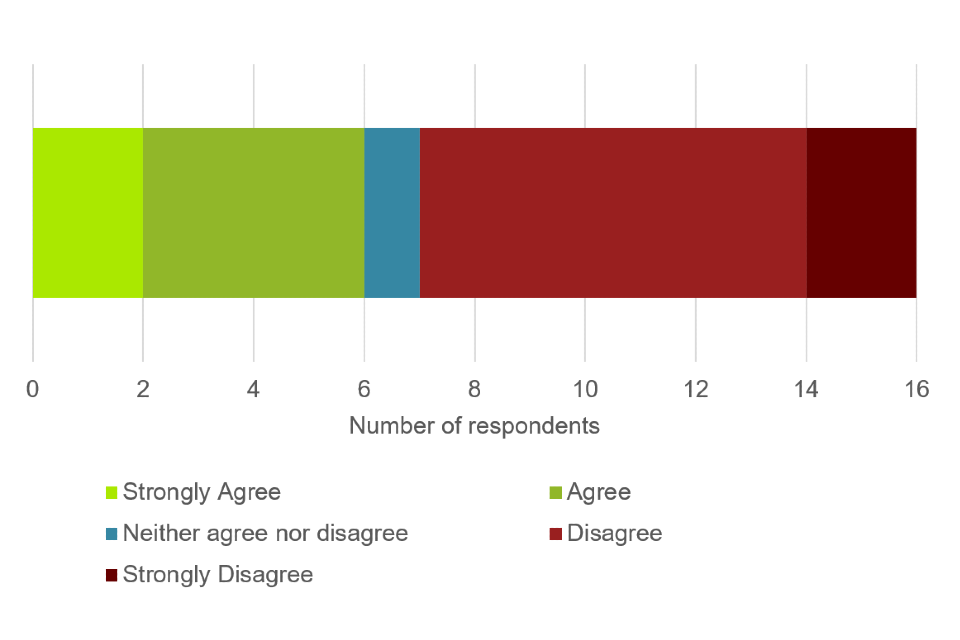

Views were more divided about our proposed approach at Level 1, where 7 respondents agreed or strongly agreed and 6 respondents disagreed or strongly disagreed with the proposal not to permit adaptation of contexts at Level 1. Two respondents neither agreed nor disagreed and 1 gave no response.

Comments from those who agreed with our proposal included:

- Level 1 learners should be able to respond to different contexts

- centres have not taken advantage of the opportunity to adapt the contexts for assessments with previous qualifications

- our approach was consistent with that taken for EDSQs

Those who disagreed said that adaptation of contexts would help to make assessments relevant to Level 1 learners and that there was no reason to differentiate between Entry level and Level 1 learners. In addition, 1 respondent reported that allowing centres to adapt the context of assessments to focus on local employer requirements and needs would help improve employer confidence in the qualifications.

Those who neither agreed nor disagreed did so either because they did not feel that it was sufficiently clear what forms of assessment would be permitted, or because they felt that whether or not adaptation was permitted was dependent on whether the assessment was internally or externally marked.

Question 29

What are the costs, savings or other benefits associated with our proposals for setting, marking and adaptation of assessments? Please provide estimated figures where possible and any additional information we should consider when evaluating the impact of our proposals.

Ten respondents commented on this question, which asked for any costs, savings or other benefits associated with our proposals.

Three comments related to the costs that would result from developing an on-screen or online assessment. This group of respondents outlined the increase in costs and resourcing needed to develop any new systems and software to deliver the assessments electronically.

One respondent felt that the digital infrastructure needed to deliver on-screen or online assessments would increase costs for centres.

Another commented to say that delivering the assessments online or on-screen would lead to reduced costs in the long term. However, they felt that in the short term the lack of suitable IT equipment might be an issue.

One respondent raised concerns about the evidence of requirements covered through study that centres would need to submit. They felt that this would add unnecessary costs and resource burdens for centres and awarding organisations.

It was also felt that a potential benefit of the proposed approach would be faster turnaround for accreditation if centres have direct claim status and mark their own assessments at all levels. In addition, 1 respondent provided approximate costings for the Digital FSQs, based on similar qualifications currently offered.

Question 30

To what extent do you agree or disagree that we should require a single grading approach across Digital FSQs?

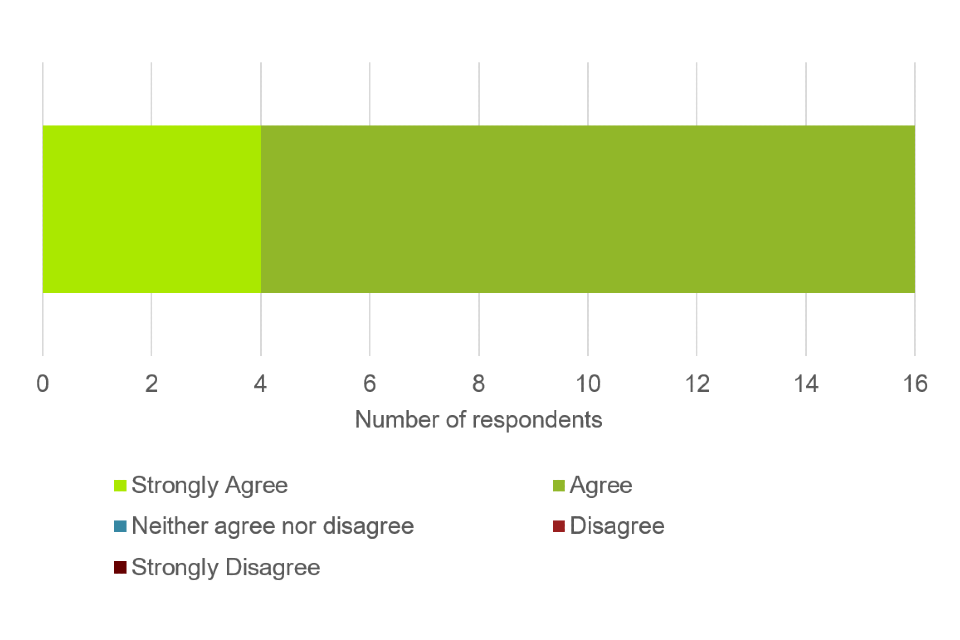

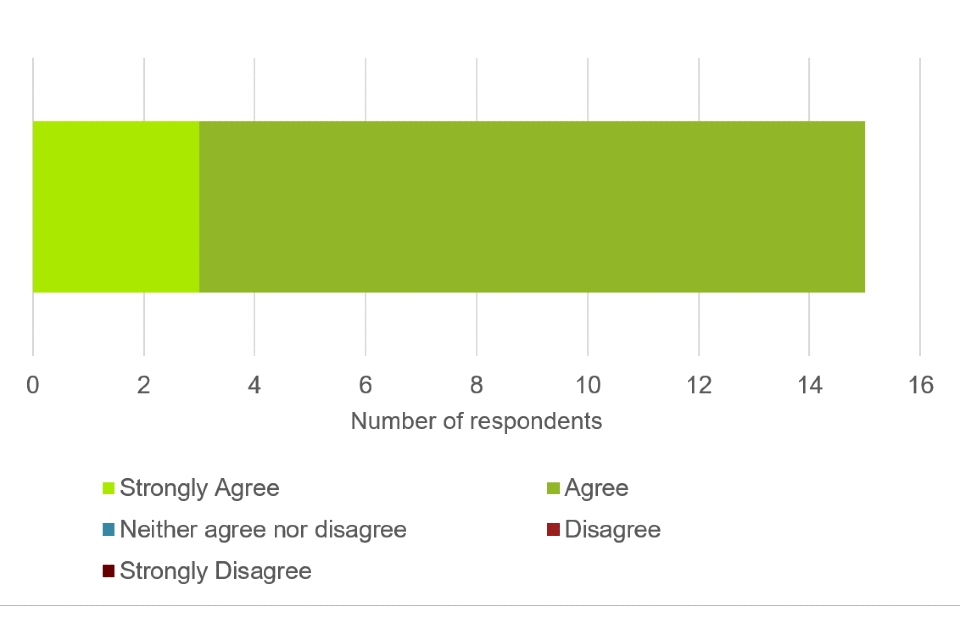

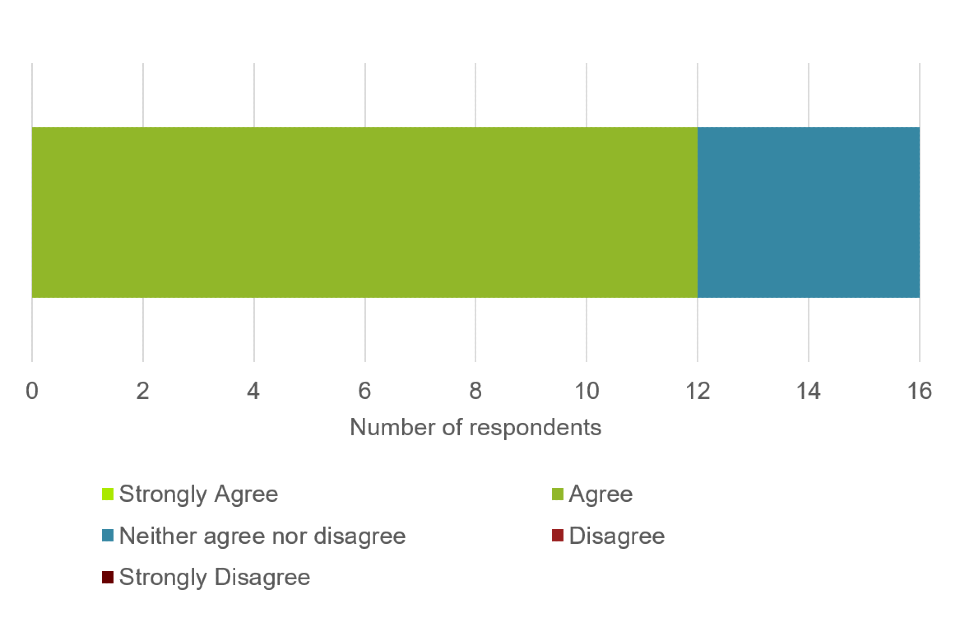

All but 1 respondent agreed or strongly agreed with our proposal to adopt a single grading approach across Digital FSQs.

Respondents agreed that a single grading approach supported user understanding, transparency and comparability between awarding organisations. It was also consistent with the approach used with the reformed FSQs in English and maths.

Question 31

To what extent do you agree or disagree that, if a single grading approach is required, a pass or fail grading model should be used for Digital FSQs?

All but 1 respondent agreed or strongly agreed with our proposal to grade Digital FSQs on a pass or fail basis. Respondents agreed with a pass or fail grading approach because it was consistent with the aim of the qualification, which was to demonstrate a baseline of digital skills, and the introduction of a grading scale (such as pass, merit and distinction) could confuse users of the qualification. It was also consistent with the approach taken with the reformed FSQs in English and maths and EDSQs.

One respondent commented that as there would be no limit on retakes and assessment is on demand, no ‘fail’ grade would be awarded at the level of the qualification. The registration is kept open until the candidate passes or until the registration expires. Fail can only be recorded at the level of the assessment, and only where this is assessed by the awarding organisation.

Question 32

To what extent do you agree or disagree that we should require the Digital FSQs to be awarded at Entry level 3 and Level 1 only?

Respondents were divided in their views on this proposal. Just over half disagreed or strongly disagreed with our proposal, just under half agreed or strongly agreed. One respondent neither agreed nor disagreed.

Those that agreed with our proposal to award the Entry level qualification at Entry level 3 did so because of the inter-related nature of the knowledge and skills across the 3 Entry levels and the difficulty in distinguishing between them to generate reliable and valid assessments. It was also noted that as the proposal had been adopted for EDSQs, it made sense to implement the same rule for Digital FSQs.

Most of those who disagreed or strongly disagreed with our proposal did so because they felt that Digital FSQs should be available at Level 2. Even some of those who agreed with our proposal commented that Digital FSQs should be available at Level 2, particularly for a qualification supporting employability.

One respondent also disagreed with our proposal to award the Entry-level qualification at Entry level 3 only, stating that qualifications at each level were necessary to support learner progression.

Some respondents also disagreed with our proposal because of concerns with the subject content. One commented that the amount of material to be covered in the Entry-level standard was such that many learners in the target group for this qualification will never reach Entry level 3 in some of the skills areas. Another commented that the Department’s subject content was, at this stage, insufficiently developed to be able to arrive at a decision about the assignment of levels.

Question 33

To what extent do you agree or disagree with our proposals around the setting and maintenance of standards in Digital FSQs?

All respondents agreed or strongly agreed with our proposal not to set a single technical approach to standard setting as it permits awarding organisations to take different approaches to assessment. This approach would be consistent with that taken with reformed FSQs in English and maths and Essential Digital Skills Qualifications.

Other comments (some of which are relevant to questions 34 and 35) included:

- at Entry level, if assessment is internally marked, no evidence will be returned to the awarding organisation and predetermined pass thresholds will be required

- further information is needed on whether an Oversight Board and Technical Group will be established for the Digital FSQs

- the development of common descriptors to aid standards maintenance would be helpful

- discussions with other awarding organisations and Ofqual to consider the types of evidence that would be most appropriate in standard setting would be helpful, including an indication of Ofqual’s expectations when the usual ‘appropriate range of qualitative and quantitative evidence’ is unavailable

- there is a need to make clear that the standard being set is an entirely new standard and there is no expectation that awarding organisations should be carrying through a legacy standard from the Functional Skills in ICT (especially as the legacy Level 2 standard is now closer to the new Level 1)

- Ofqual and awarding organisations should explore the impact of any potential dip in performance due to the Sawtooth Effect ahead of first awards and the approach for mitigating this should be documented (or indeed, the rationale for taking no action provided, given the competency-based model of the assessments)

- further details are also needed around the plans for standard setting in the first year, as this may impact on what data is consequently available for performance of learners who have previously achieved the qualification

- given that the Digital FSQs are based on subject content drawn from the Essential Digital Skills standards, a case could be made for looking at how performance in Digital FSQs and EDSQs compares

Question 34

To what extent do you agree or disagree that we should regulate differently for the first year of awards for Digital FSQs to ensure initial standards are set appropriately?

A majority of respondents agreed or strongly agreed with our proposal to regulate differently in the first year of awards to ensure that a common standard, applied by all awarding organisations, can be carried forward over time.

It was also noted that the first year of awarding could present particular challenges and so regulation in this year should be different to ensure learners are not unintentionally disadvantaged in any way.

Other comments about how the approach should be implemented included:

- further thought is required about the timing of the ‘standardisation activity’, particularly in the context of on-demand assessment and the use of pre-tested items, to ensure that it would not be burdensome for awarding organisations

- any comparability activities undertaken must not undermine the flexible nature of the assessments or delay the issuing of results to learners

- the timing of any activities needs to take account of the different patterns of entry in awarding organisations

- proper and advance notification of comparability activities should be given to awarding organisations

- rather than just promoting communication between awarding organisations, Ofqual should require this, otherwise awarding organisations will struggle to meet the principles of scrutiny (see Q.35)

- the development of any ‘pass descriptor’ might need to take into account equivalences between Digital FSQs and other IT user qualifications

- a pass descriptor would be valuable to awarding organisations to underpin approaches to test design, and would form an integral part of the threshold determination process. However, the extent to which it would ensure a greater level of comparability across awarding organisations depends on the extent to which the descriptor is open to interpretation

- a pass descriptor could assist employers in understanding what a Digital FSQ Pass means if it articulates functionality

- any remedial work deemed necessary following these activities should not affect learners' results that have already been published

- there needs to be recognition that the outcomes in the first year may differ considerably from both legacy outcomes and those achieved in future years as providers and learners adapt to the different demands of the revised qualifications

- further thought is required on the use of qualitative and quantitative evidence

One respondent neither agreed nor disagreed and 1 disagreed.

The respondent who disagreed was an awarding organisation who stated that Digital FSQs should be regulated, in the first year of awards, in the same way as EDSQs. By this, we think the respondent was suggesting that because there is no requirement to regulate EDSQs differently in their first year of awards, there should be no requirement to do so for Digital FSQs.

Question 35

To what extent do you agree or disagree with our proposals around post-results scrutiny of outcomes?

A majority of respondents agreed or strongly agreed with our proposal.

Comments in support of the proposal included:

- there should be an enhanced level of scrutiny to ensure comparable outcomes between awarding organisations and this should only affect future paper-setting and awarding decisions

- the principles of scrutiny proposed are consistent with those for reformed FSQs in English and maths

- there was a need to increase scrutiny in the first year of a new qualification

One of the respondents who agreed with our proposal also wished for greater clarity on what constitutes ‘some common basis’ and whether the scrutiny might include a review of borderline work alongside the review of outcomes data.

Four respondents neither agreed nor disagreed, and 1 disagreed.

One of those who neither agreed nor disagreed did so because they thought that, although post-results scrutiny might be helpful, there was a lack of clarity about what was being proposed.

The respondent who disagreed was an awarding organisation that did not agree that post-results scrutiny of outcomes should be proposed for Digital FSQs, as it had been for reformed FSQs in English and maths, because these qualifications are not intended to be used in the same way.

Question 36

To what extent do you agree or disagree with our proposal to require awarding organisations to put in place and comply with an assessment strategy?

A majority of respondents, including all awarding organisations, agreed or strongly agreed with our proposal to require awarding organisations to produce assessment strategies.

Two respondents neither agreed nor disagreed. No respondents disagreed or strongly disagreed.

Comments in support of our proposal included:

- this had become standard practice for a range of regulated qualifications and generally works well

- awarding organisations should be required to give a rationale for their approach to allow providers to make informed choices

- an assessment strategy would help with developing and formalising the processes or decisions taken about qualifications

- it would help to ensure that each awarding organisation has a clear and appropriate plan in place to assess the qualifications

- the proposal is in line with the approach taken for reformed FSQs in English and maths

Respondents also commented on the guidance and information Ofqual should share with awarding organisations to assist with their compliance with the requirement. This included:

- Ofqual should distil any learning from the technical evaluation process for the reformed FSQs in English and maths, and disseminate it across all awarding organisations who wish to offer the new Digital FSQs to ensure that those awarding organisations that have not been through the process already are not disadvantaged

- further information about timelines and the process for review of the assessment strategies is required

- more specific guidance on Ofqual’s expectations for each section of the assessment strategy, including greater clarity on what is acceptable, should be provided

Question 37

To what extent do you agree or disagree with our proposals around the technical evaluation process?

A majority of respondents agreed with our proposal and some commented on the need for, and potential benefits of, technical evaluation.

No respondents disagreed or strongly disagreed with our proposal. Four neither agreed nor disagreed, 1 of whom was an awarding organisation.

The awarding organisation that neither agreed nor disagreed with our proposal expressed concern that the process of technical evaluation may be time-consuming and lead to delays in providing information to centres before first teaching.

They, together with some respondents who agreed with our proposal, suggested ways of enhancing the process, such as using an approach which builds in more frequent and/or informal feedback to awarding organisations during the evaluation process, providing clear and detailed feedback, and allowing awarding organisations to publish draft assessment materials before they have gone through the process of technical evaluation.

Some respondents also asked for clarification on the timing of the technical evaluation process and whether this would be before the qualifications were made available to centres.

Question 38

What are the costs, savings or other benefits associated with our proposals which we have not identified? Please provide estimated figures where possible and any additional information we should consider when evaluating the impacts of our proposals.

Six respondents provided comments on this question identifying costs, savings or benefits that may result from our proposals.

Two respondents envisaged a number of costs resulting from the proposal, specifically relating to the potential for online or on-screen assessment. They felt that there was potential for costs in developing an online assessment platform to host the assessment. They also felt that the support and new resources needed for centres to deliver the qualifications would lead to additional costs.

One respondent commented that there could be costs for centres in relation to the hardware and equipment needed to deliver and assess Digital FSQs.

One respondent provided an estimate of the cost of setting, marking and evaluating Digital FSQs based on other Functional Skills qualifications. They proposed that running Essential Digital Skills Qualifications and Digital FSQs in parallel could result in efficiencies. They also commented on further specific costs that awarding organisations could incur, including:

- the potential copyright costs that could be associated with using third party images and platforms

- developing a simulated environment would incur significant costs

- the resourcing involved in the moderation and monitoring of skill statements assessed through the course of study

One respondent felt that savings could occur by using online or on-screen assessment.

One respondent commented that the software development costs to support online or on-screen assessment would be significant and would mean that Digital FSQs would cost more to develop than other Functional Skills qualifications.

Question 39

To what extent do you agree or disagree that once Digital FSQs are available, we should allow awarding organisations to make current FSQs in ICT at Entry level 1 to 3 and Level 1 available for a maximum of 12 months, which would include all resits?

A majority of respondents agreed or strongly agreed with our proposal.

Some of these respondents stated that running current FSQs in ICT and Digital FSQs alongside each other for a period of 12 months was reasonable, would protect existing Functional Skills learners and was consistent with the approach taken with reformed FSQs in English and maths.

One awarding organisation neither agreed nor disagreed but in their comment stated that the 2 qualifications should not run in parallel to maintain efficiency and minimise operational problems.

Three respondents disagreed, 2 strongly, either because they felt the window was too short for centres to adjust their delivery, or because they did not want Digital FSQs to be introduced as currently designed.

Some respondents asked for clarification on whether FSQs in ICT at Entry level 1, Entry level 2 and Level 2 would be awarded outside this 12-month transition period.

Question 40

To what extent do you agree or disagree with our proposal to disapply General Conditions E1.3 to E1.5, E7 and E9?

A majority of respondents agreed or strongly agreed with our proposal, with 1 respondent disagreeing and the rest neither agreeing nor disagreeing. Those that agreed accepted that it would reduce the regulatory burden on awarding organisations.

The respondent who disagreed did so because they believed that there was a need for Digital FSQs at Entry level 2 and at Level 2. Another respondent who agreed with our proposal to disapply these GCRs made the same point.

Of the respondents that neither agreed nor disagreed with our proposal, 1 commented that they did not understand the question.

Regulatory impact

Question 41

Are there any regulatory impacts that we have not identified arising from our proposals? If yes, what are the impacts and are there any additional steps we could take to minimise the regulatory impact of our proposals?

Fifteen of the 16 respondents to this question either made no comment or had nothing further to add.

One respondent suggested that further steps to reduce the regulatory impact could include providing materials to aid tutor delivery.

Question 42

Are there any costs, savings or other benefits associated with our proposals which we have not identified? Please provide estimated figures where possible.

There were 6 responses provided to this question.

Two respondents considered that using online or on-screen assessment, rather than paper-based assessment, could lead to reduced costs.

In contrast, several respondents felt that there would be additional costs, including as a result of:

- the development of an online assessment platform for use in the external assessments

- new resources and support materials for centres

- increased moderation of controlled assessments and portfolio-based assessments, if these were introduced, which would increase the costs for awarding organisations, especially if on-screen assessment were used

- the administration of the tests by awarding organisations

One respondent felt that there would be additional costs for centres in terms of hardware and equipment to deliver and assess the qualification.

Another respondent could not make a statement on the costs, savings or other benefits associated with our proposals as they felt they did not have all of the information required to make an informed decision.

Question 43

Is there any additional information we should consider when evaluating the costs and benefits of our proposals?

Three respondents provided comments on this question. Each of them reiterated points they had made in Question 42 or referred to information already provided in the course of this consultation.

Question 44

Do you have any comments on the impact of our proposals on innovation by awarding organisations?

There were 6 comments provided to this question.

One respondent felt that internal assessment allows an innovative approach, whereas external assessment can be restrictive. They also asked for clarification on how often regulatory guidance would be updated; they felt that the guidance and content should be reviewed every 3 years.