Public attitudes to data and AI: Tracker survey (Wave 2)

Published 2 November 2022

1. Foreword

The Centre for Data Ethics and Innovation (CDEI) leads the UK government’s work to enable trustworthy innovation in data-driven technologies and AI. As such, it is critical to understand public priorities and concerns for these technologies.

Greater data access has the potential to change our lives for the better. The more accurate information we have, the easier it is to solve complex problems and make informed decisions. From civil society-led global dashboards to monitor COVID-19, to DeepMind’s breakthrough AlphaFold solution to predict protein structures, we live in a time where the opportunities of data and AI use are becoming clearer by the day.

Despite the potential benefits, there are public concerns about increasing the use of data. Citizens want to know that information about them is being used responsibly and stored safely; and to understand how data is informing decisions being made about them. Low public trust acts as a barrier to data-driven innovation. For example, without justified trust, citizens may be wary of opting-in to data sharing schemes that could deliver wide public benefits, leading to unrepresentative datasets and risks of unfair outcomes.

Building on Wave 1, this second iteration of the Public Attitudes to Data and AI (PADAI) Tracker Survey, provides insight into issues including where citizens see the greatest value in data use, where they see the greatest risks, trust in institutions to use data, and preferences for data sharing.

Public attitudes towards data use are, of course, context dependent, and vary across the population based on existing views and experiences. To draw out these nuances, the CDEI used a large sample of over 4,000 respondents, and also conducted telephone interviews with an additional 200 respondents with very low levels of digital familiarity.

This tracker survey, alongside other qualitative and quantitative research at CDEI, provides an evidence base which sits at the heart of our work on responsible innovation. For example, the survey’s research findings on demographic data will inform work to support organisations to access demographic data to monitor their products and services for algorithmic bias.

We want this report to be used by the wider data ecosystem, including government, industry and civil society - and we are grateful to those of you who have contributed to the design of the survey. Beyond this summary report, we have provided the survey’s raw data to enable further analysis and support organisations to innovate in data and AI. We would welcome further thoughts about how to make this research as useful as possible, and are always keen to hear how you are using these findings at public-attitudes@cdei.gov.uk.

We hope you find this work useful, and look forward to working with you to help achieve trustworthy innovation in data and AI.

Edwina Dunn, Chair, CDEI Advisory Board

2. Executive summary

Below is a summary of the key findings of this Wave 2 report (June - July 2022), and how these findings differ from Wave 1 (November - December 2021):

1. Health and the economy are perceived as the greatest opportunities for data use.

‘Health’ and ‘the economy’ represent the greatest perceived opportunities for data to be used for public benefit. These mirror the areas where the public perceive the biggest issues facing the UK: ‘the economy’ and ‘tax’ have both increased in importance as key issues faced by the UK since the last wave, while health remains a priority issue. In general, UK adults remain broadly optimistic about data use, with reasonably high agreement that data is useful for creating products and services that benefit individuals, and that collecting and analysing data is good for society. Nevertheless, it is important to recognise that differences persist across population groups, with younger people, and those within higher socio-economic grades, seeing various aspects of data use in a more positive light.

2. Data security and privacy are the top concerns, reflecting the most commonly recalled news stories.

UK adults report a slight increase in perceived risks related to data security since December 2021, with ‘data not being held securely / being hacked or stolen’ and ‘data being sold onto other organisations for profit’ representing the two greatest perceived risks. These concerns are reflected in the news stories that people recall about data, resulting in a predominantly negative overall recall. At the same time, the UK adult population expresses low confidence that concerns related to data are being addressed.

3. Trust in data actors is strongly related to overall trust in those organisations.

Trust in actors to use data safely, effectively, transparently, and with accountability remains strongly related to overall trust in those organisations to act in one’s best interests. This is therefore affected by other events which impact on the level of trust in those organisations (such as public discussions about the current energy crisis, and mismanagement of sewage affecting public trust in utility companies). While trust in the NHS and academic researchers to use data remains high, a drop in the public’s trust in the government and utility companies and their data practices has been reported since the previous wave. Trust in big technology companies to act in people’s best interest has also fallen since 2021, particularly amongst those who are most positive and knowledgeable about technology. Trust in social media companies remains low.

4. UK adults do not want to be identifiable in shared data - but will share personal data in the interests of protecting fairness.

When it comes to data governance, people want their data to be managed by experts, and for the privacy of their data to be prioritised. ‘Identifiability’ stands out as the most important consideration for an individual to be willing to share data, and UK adults express a clear preference that they are not personally identifiable in data that is shared. People also express a preference for experts to be involved in the decision-making process for data sharing, over a public or self-assessed review.

While identifiability is the most important criterion driving willingness to share data, the wider UK adult population is willing to share demographic data for the purpose of evaluating systems for fairness towards all groups. The proportion of people who would be comfortable sharing information about their ethnicity, gender, or the region they live in, to enable testing of systems for fairness is 69%, 68% and 61% respectively. In comparison, adults with very low digital familiarity are less comfortable sharing information about their ethnicity (47%), gender or region they live in (both 55%).

5. The UK adult population prefers experts to be involved in the review process for how their data is managed.

Out of all types of governance tested, people value the involvement of experts in the review process over the involvement of the public, self-assessed review, or no review, suggesting that the public prefers to delegate decisions around data management to professionals skilled in this field. This preference for experts to be involved in decision-making processes related to shared data is stronger among adults with low levels of trust in institutions tested compared to those with high levels of trust.

6. People are positive about the added conveniences of AI, but expect strong governance in higher risk scenarios.

The UK adult population has a positive expectation that AI will improve the efficiency and effectiveness of regular tasks. However, public views are split on the fairness of the impact of AI on society, and on the effect AI might have on job opportunities. People have higher demands for governance in AI use cases that are deemed higher risk or more complex, such as in healthcare and policing. Healthcare is the area in which UK adults expect there to be the biggest changes as a result of AI. However, overall AI still ranks fairly low against other societal concerns, signalling this is not yet a front of mind topic or concern for people.

7. Those with very low digital familiarity express concerns about the control and security of their data, but are positive about its potential for society.

Members of the public with very low digital familiarity have low confidence in their knowledge and control over their personal data, with the majority saying that they know little or nothing about how their data is used and collected (76%). Few feel that their data is stored safely and securely (28%). As a result, they are less likely than the wider UK adult population to feel they personally benefit from technology. However, they are reasonably open to sharing data to benefit society, and the majority consider collecting and analysing data to be good for society (57%).

3. Overview

The Centre for Data Ethics and Innovation (CDEI)’s Public Attitudes to Data and AI (PADAI) Tracker Survey monitors public attitudes towards data and AI over time. This report summarises the second wave (Wave 2) of research and makes comparisons to the first wave (Wave 1). The research was conducted by Savanta ComRes on behalf of the CDEI.

The research uses a mixed-mode data collection approach comprising online interviews (Computer Assisted Web Interviews - CAWI) and a smaller telephone survey (Computer Assisted Telephone Interviews - CATI) to ensure that those with low or no digital skills are represented in the data.

The Wave 1 online survey (CAWI) ran amongst the general adult population (18+) from 29th November 2021 to 20th December 2021 with a total of 4,250 interviews collected in that time frame. A further 200 Telephone interviews (CATI) with the ‘very low digital familiarity’ sample were conducted between 15th December 2021 and 14th January 2022.

For Wave 2, there were a total of 4,320 online surveys (CAWI) across a demographically representative sample of UK adults (18+). This survey ran from 27th June 2022 to 18th July 2022. A further 200 UK adults were interviewed via telephone (CATI) between 1st and 20th July 2022. Any respondents who participated in Wave 1 of the online survey were excluded from participation in Wave 2. This was done to ensure that the surveyed sample does not include any individuals who have built up knowledge in this area by filling in the Wave 1 survey.

With thanks to Better Statistics and the Office for Statistical Regulation for their ongoing support and advice with the methodological approach for this survey.

Please see the ‘Methodology’ section at the end of this report for further detail.

4. The value of data for society

Health and the economy have risen above COVID-19 as the areas with greatest perceived opportunities for data to bring benefits to the public. People remain optimistic about data use, with reasonably high agreement that data is useful for creating products and services that benefit individuals.

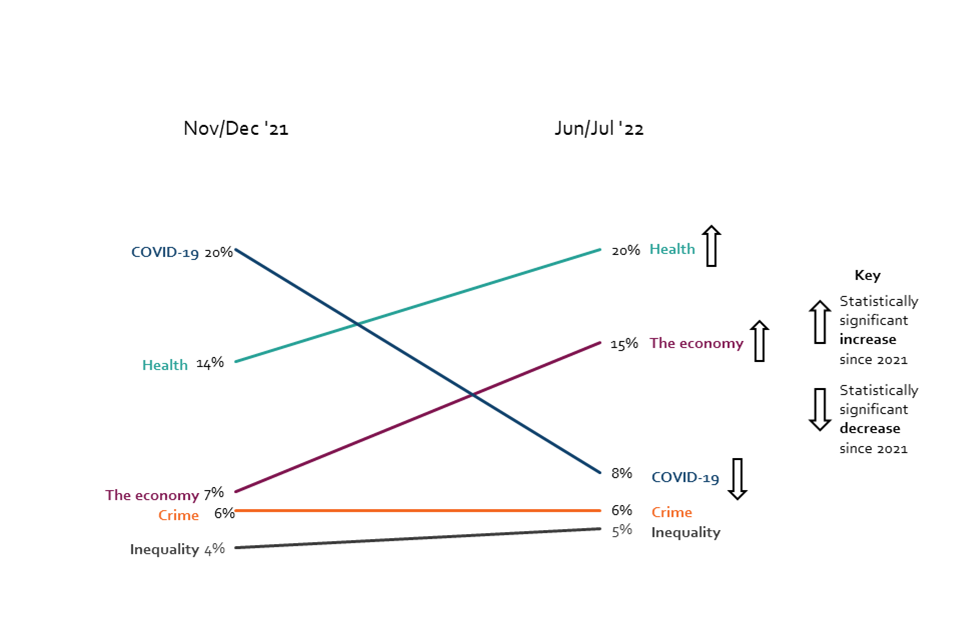

Opinions on the greatest opportunities for data use to benefit the public have shifted since 2021, reflecting a shift in public priorities. 20% of people surveyed consider ‘health’ to be the issue where data presents the greatest opportunity to benefit the public, compared to 14% in 2021. The proportion of respondents selecting ‘COVID-19’ as an opportunity area for data use has declined from 20% in 2021 to 8% in 2022. ‘The economy’ and ‘tax’ have both risen as perceived opportunities for data use, with ‘the economy’ rising from 7% in 2021 to 15% in 2022, and ‘tax’ rising from 3% to 4% in the same period.

Chart 1: The greatest opportunities for data use to benefit the public, November/December 2021 and June/July 2022 (Showing mentions > 5% for Jun/Jul ‘22)

Line chart showing opportunities for data use. Respondents see a bigger opportunity for data use in both health and the economy, compared to the last wave.

Q16. In which of these issues do you think the use of data presents the greatest opportunity for making improvements that benefit the public in this country? BASE: All online respondents: Nov/Dec ’21 (Wave 1) n=4250, Jun/Jul ’22 (Wave 2) n=4320

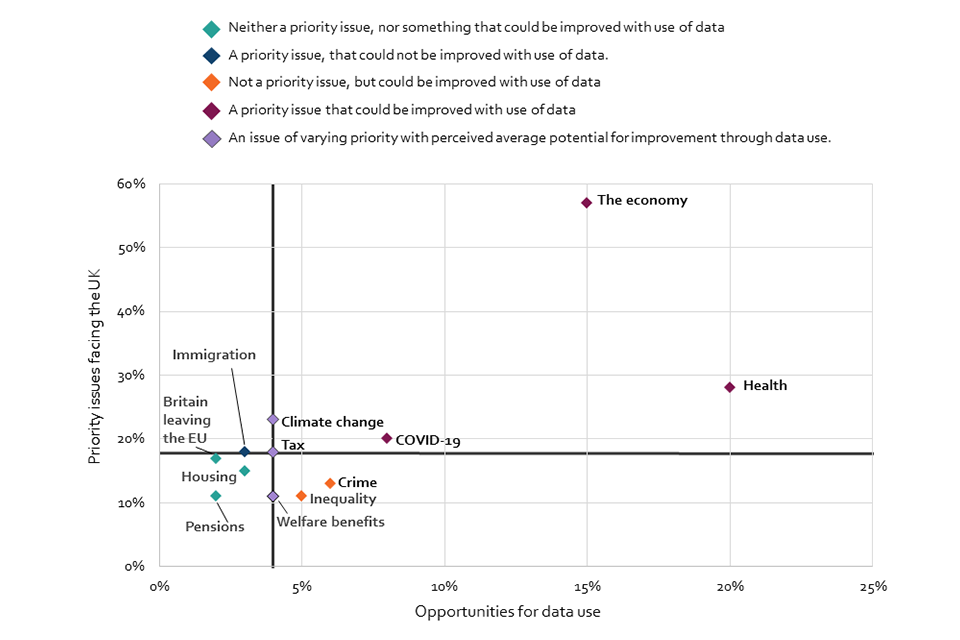

Where the public sees the potential for data to be used for good is mirrored in what they identify as the biggest issues facing the UK. The economy and health stand out as the top issues, and as ones that present opportunities for data use. While COVID-19 and climate change are both considered to be important issues facing the country (20% and 23% respectively), more UK adults (8%) perceive that data presents an opportunity for improving how we tackle COVID-19, than perceive that data presents an opportunity for making improvements regarding climate change (4%).

In Wave 2, the economy and tax have quickly increased in importance as the most important issues facing the UK. 57% of adults say the economy is the most important issue, compared to 33% in 2021. 18% of people select tax as their top issue, compared to 7% in 2021. The prevalence of COVID-19 as a key issue facing the country has significantly declined. Over half (56%) of the public selected COVID-19 as one of the top three most important issues in 2021, falling to just 20% in 2022.

Chart 2: The most important issues facing the country and opportunities for data use, June/July 2022 (Showing any issues for the UK selected by at least 10% of respondents; axes split along median value)

Scatter graph showing the relationship between the most important issues facing the country and the issues presenting the greatest opportunity for data use to benefit the public. The economy and health stand out as the top issues across both metrics.

Q15b. Which of the following do you think are the most important issues facing the country for you personally at this time? Q16. In which of these issues do you think the use of data presents the greatest opportunity for making improvements that benefit the public in this country? BASE: All online respondents: Jun/Jul ’22 (Wave 2) n=4320

There were also several respondents (112) that selected ‘other’ issues that they feel the country is facing, choosing to fill in an elective open-text box. A high proportion of these (67% of all ‘other’ responses) highlighted the ‘cost of living’ as a key concern. Although overall this comprises a small percentage of overall responses, the number of spontaneous mentions indicates it is at the forefront of the public’s mind.

The optimism around data use has remained stable since 2021. When asked how much people agree that ‘data is useful for creating products and services that benefit me’, 53% of respondents agreed (consistent with 51% in 2021), while only 15% disagreed in 2022. In Wave 2, perceptions of the value of data to create products that benefit individuals is more than double amongst those with high digital familiarity, where 62% agree, compared to those with low digital familiarity, where only 27% agree.

The collection and analysis of data is seen in a reasonably positive light, and is in line with the previous wave in 2021. 39% of respondents agreed that ‘collecting and analysing data is good for society’, consistent with 40% in 2021. 22% of the UK adult population disagree with this statement, compared to 20% in 2021.

Regression analysis[footnote 1] indicates greater agreement for both of the abovementioned statements amongst younger respondents and respondents from a higher socio-economic grade (ABC1)[footnote 2] in comparison to older respondents and those from a lower socio-economic grade (C2DE) (Annex, Models 1 & 2). This suggests greater optimism amongst these groups when it comes to the use of data in society.

5. Risks related to data and its portrayal in the media

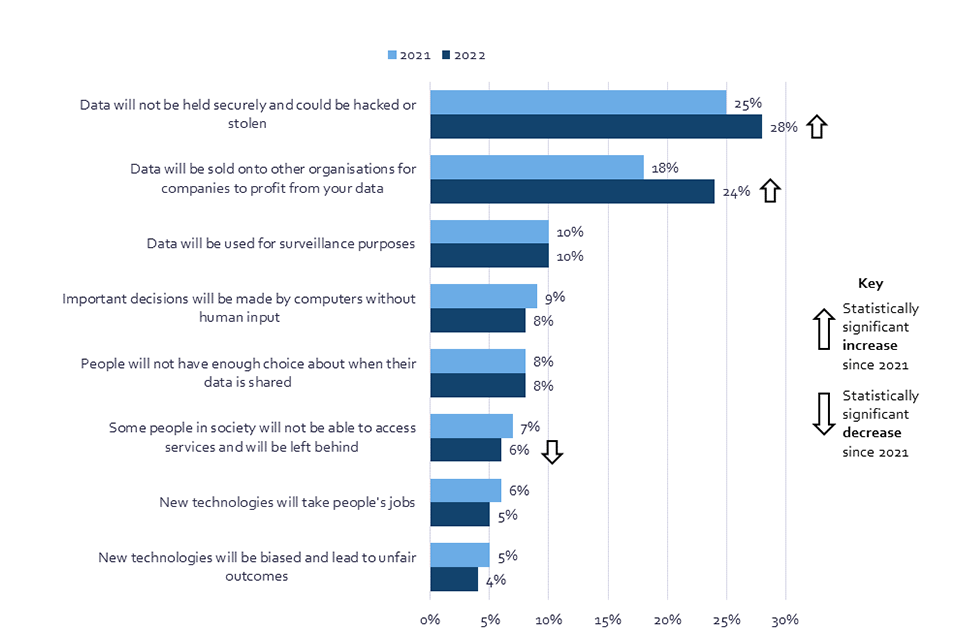

Chart 3: The greatest risk posed by data use in society, November/December 2021 and June/July 2022 (Showing % selected each option)

Bar chart shows the change in the proportion of respondents that selected an option as representing the greatest risk for data use in society, in 2021 and 2022.

Q17. Which of the following do you think represents the greatest risk for data use in society? BASE: All online respondents: Nov/Dec ’21 (Wave 1) n=4250, Jun/Jul ’22 (Wave 2) n=4320

The top perceived risks of data use are related to data being hacked or stolen, or being sold on to other companies. These fears reflect the most commonly recalled media stories related to data.

The main perceived risks associated with data use are related to data security and selling data for a profit, both of which have increased in concern since the previous wave of research in 2021. When the UK public was asked to select the greatest risk for data use in society from a list, 28% expressed concern that data will not be held securely and could be hacked or stolen, compared with 25% in 2021, while 24% selected ‘data will be sold onto other organisations for companies to profit’ as the greatest risk, compared with 18% in 2021[footnote 3]. Both of these concerns are especially high amongst those who are aged over 55 (for ‘data will not be held securely’: 32% of those aged 55+, compared to 23% of those aged 18-34; for ‘data will be sold onto other organisations or companies to profit’: 28% of those aged 55+, compared to 21% of those aged 18-34).

Concerns about data security differ across the population. Those who feel they have less knowledge about data and technology are more likely to have concerns surrounding data security. 31% of those who report having little or no knowledge about how the technology sector is regulated select data security as their main concern, compared to 20% of those who know a great or fair amount about regulation. Similarly, those who report little or no knowledge about how data is used and collected in day-to-day life are also more likely to select data security as a concern, with 31% choosing this option, compared to 25% of those who know a great or fair amount about data use.

Conversely, confident technology users are more likely to report concerns about data being sold onto other organisations for profit. 25% of those who report being confident completing activities online say that they are concerned about their data being sold on to other companies, compared to 16% of those who are not confident completing activities online.

A considerable share of UK adults express confidence that their data security and privacy concerns are being addressed. 41% feel that ‘when organisations misuse data, they are held accountable’. Those with a higher digital familiarity are more likely to agree that those who misuse data are held accountable (45% agree), while those with medium or low familiarity express lower confidence (35% for those with low familiarity). Regression analysis indicates that those of Asian ethnicity[footnote 4] are 1.86 times more likely and those from Black ethnicity[footnote 5] 1.43 times more likely than UK adults from a White ethnic background[footnote 6] to express confidence in accountability in instances of data misuse (Annex, Model 3).

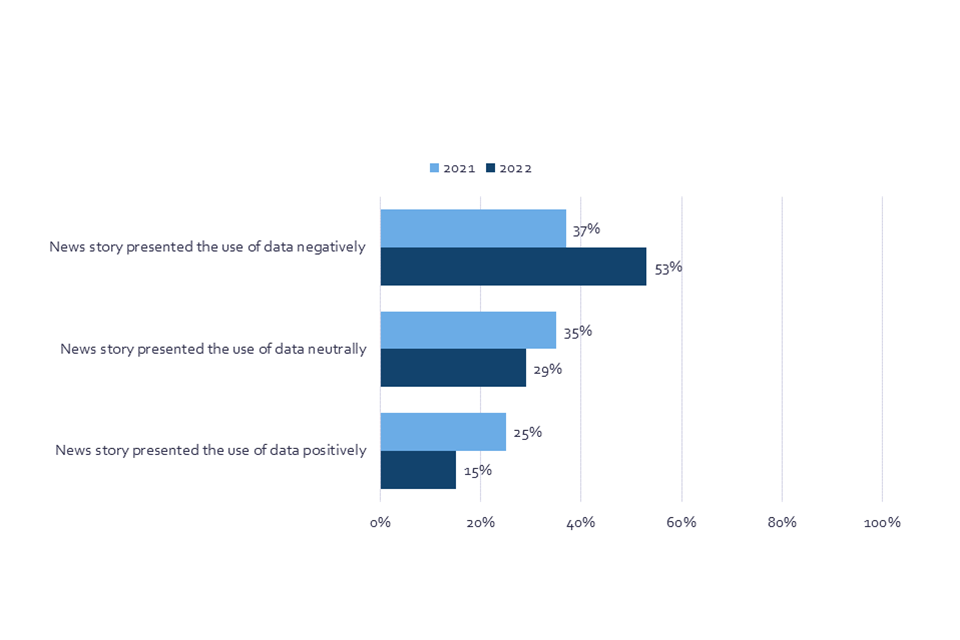

There is evidence that suggests that the news media may contribute to negative associations related to data use. When respondents were asked to describe stories they remember seeing or hearing about data, they were predominantly negative, and this has increased since the previous wave in 2021: in 2021, 37% said that the news story about data they could recall was mainly negative, but this has jumped to 53% in 2022. There has been an accompanying decrease in recall of stories that are presented as neutral, from 35% in 2021 to 29% in 2022, or positive, from 25% in 2021 to 15% in 2022. Those with a greater digital familiarity are more likely to recall news stories that are presented in a negative light; 57% of those with high familiarity say that the news story they recalled was mostly negative, compared to 48% of those with a medium digital familiarity, and 38% of those with a low digital familiarity.

Chart 4: The recalled presentation of data in news stories, November/December 2021 and June/July 2022 (Showing % selected each option)

Bar chart that shows the change in the proportion of respondents that said a news story they recalled about data presented the use of data either negatively, neutrally, or positively, in 2021 and 2022.

Q11. Overall, do you think this news story presented the way data was being used positively or negatively? Base: All online respondents who say they have read, seen or heard a news story about data in the last 6 months: Nov/Dec ‘21 (Wave 1) n=1499, Jun/Jul ’22 (Wave 2) n=1678

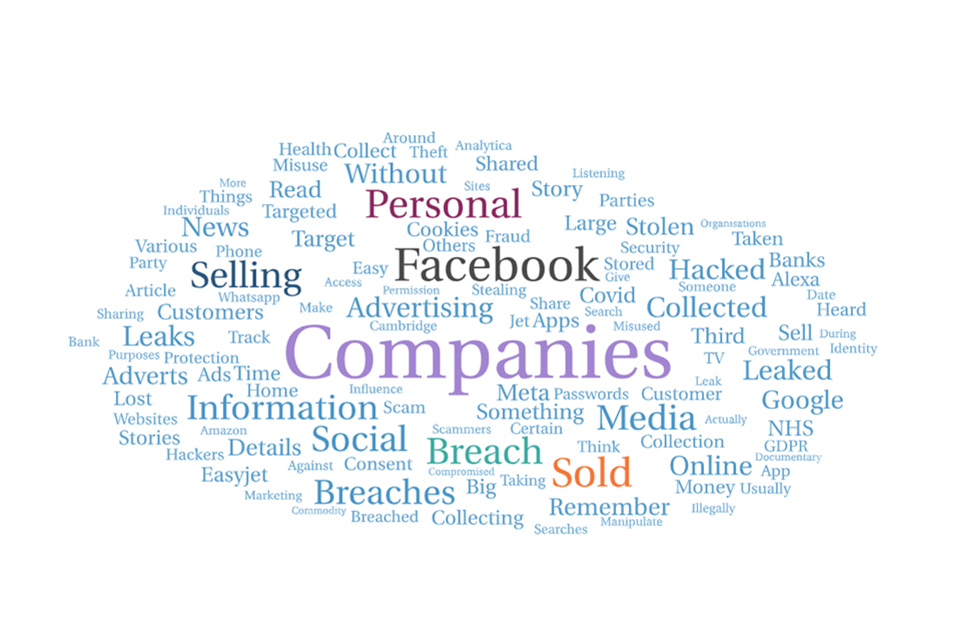

When respondents were asked to describe stories they remember seeing or hearing about data, open-text responses are dominated by references to data breaches, and social media companies’ misuse of data and targeted advertising. Stories about data breaches saw a slight increase in June/July 2022 compared to November/December 2021, with 12% of respondents in 2021 to 14% (346) in this wave.

Media monitoring identifies that this recall reflects the numerous stories about data breaches during this time, including those of health and financial data as well as cyber attacks and data breaches in relation to the war in Ukraine which were prominent in the first months of 2022. Respondents’ recall of news stories about the use of data by social media platforms including Facebook/Meta, TikTok, and Instagram are common.

Of the number of media stories that UK adults could recall about data, just 4% were related to COVID-19 in 2022, compared to 13% in 2021. Of the recalled stories in Wave 2, 3% referenced health data and the NHS. Recall of media stories also highlight changing laws and regulations around GDPR and cookies.

Image 1: Word cloud of public recall of data-related news stories

Word cloud of public recall of data-related news stories

Q10. In a couple of sentences, please could you briefly tell us what the story you saw about data was about? Base: All CAWI respondents who say they have seen a news story about data in the last 6 months: Jun/Jul ’22 (Wave 2) n=1725. Size of words corresponds to the number of times they occur in responses. All words mentioned nine times or less are removed.

Spontaneous recall of data-related news stories vary by levels of digital familiarity. Those with a high digital familiarity recall a wide range of news stories, with positive stories focused on benefits of data to society, such as the use of data in the response to COVID-19, uses of census data, and other data that helps us gain a better understanding of business, society, and our world. Negative stories recalled by those with high digital familiarity are predominantly stories related to data and security, and often demonstrate a good level of technical knowledge about the stories, for example mentioning use of cookies, companies passively collecting data from phones, social media bots, and blockchain technology.

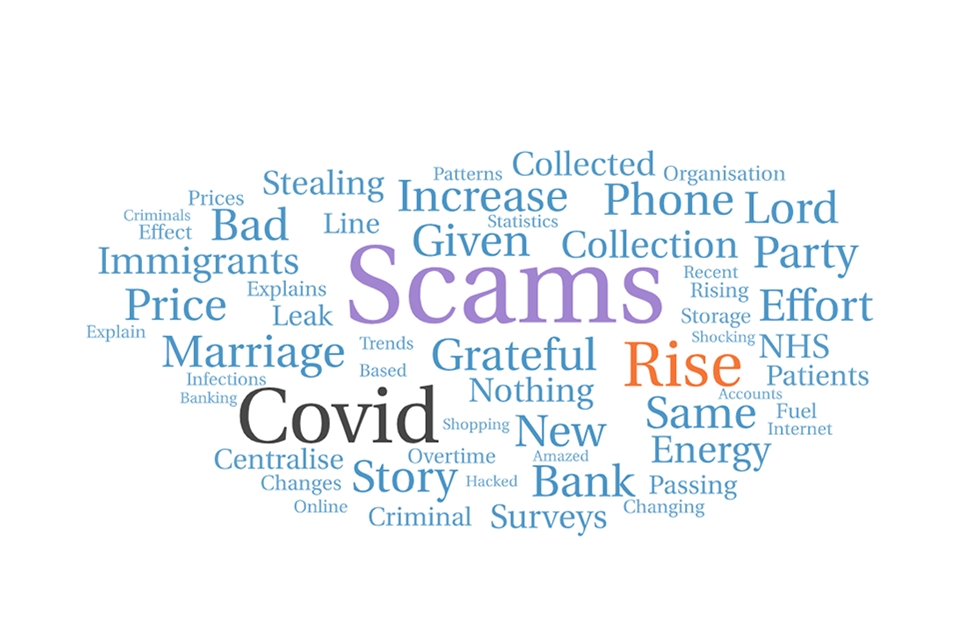

Image 2: Word cloud of public recall of data-related news stories – by UK adults who recall that the story was mainly positive, and have high digital familiarity.

Word cloud of public recall of data-related news stories – by UK adults who recall that the story was mainly positive, and have high digital familiarity

Q10. In a couple of sentences, please could you briefly tell us what the story you saw about data was about? Base: All online respondents who say they have seen a news story about data in the last 6 months, and who say that story was mainly positive, and who have high digital familiarity: Jun/Jul ’22 (Wave 2) n=156. Size of words corresponds to the number of times they occur in responses. All words mentioned two times or less are removed.

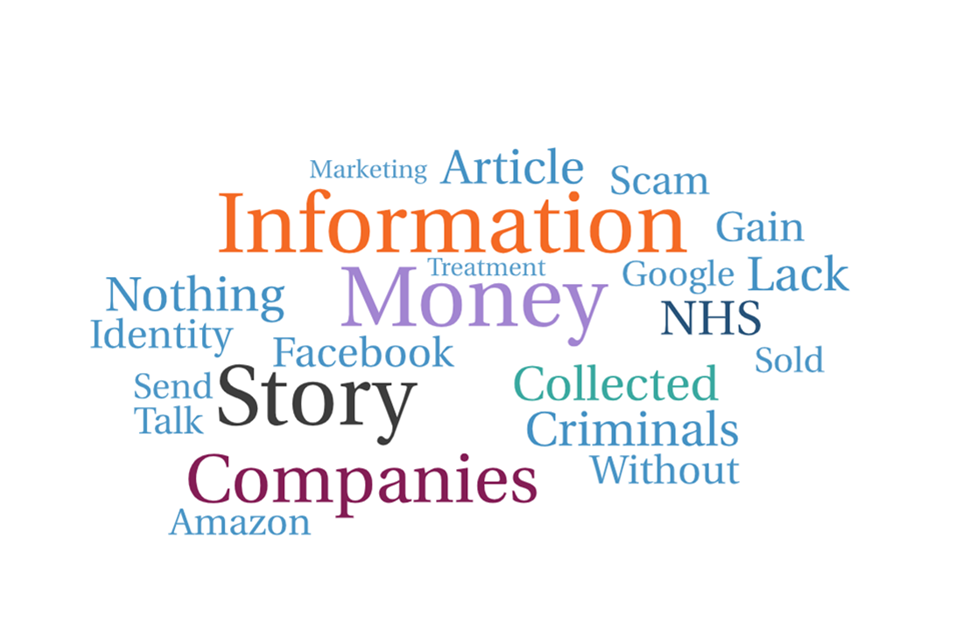

Image 3: Word cloud of public recall of data-related news stories – by UK adults who recall that the story was mainly negative, and have high digital familiarity.

Word cloud of public recall of data-related news stories – by UK adults who recall that the story was mainly negative, and have high digital familiarity.

Q10. In a couple of sentences, please could you briefly tell us what the story you saw about data was about? Base: All online respondents who say they have seen a news story about data in the last 6 months, and who say that story was mainly negative, and who have high digital familiarity: Jun/Jul ’22 (Wave 2) n=596. Size of words corresponds to the number of times they occur in responses. All words mentioned four times or less are removed.

Those with low digital familiarity also recall media stories that fall into similar themes as those with higher digital familiarity, but express a smaller range in topics, with concerns about data breaches and scams dominating both positive and negative stories that are recalled. Far less technical detail is mentioned, compared to those with high digital familiarity, meaning the stories recalled are more limited to a description of risk and impact of these risks.

Image 4: Word cloud of public recall of data-related news stories – by UK adults who recall that the story was mainly positive, and have low digital familiarity (*low base size).

Word cloud of public recall of data-related news stories – by UK adults who recall that the story was mainly positive, and have low digital familiarity (*low base size)

Q10. In a couple of sentences, please could you briefly tell us what the story you saw about data was about? Base: All online respondents who say they have seen a news story about data in the last 6 months, and who say that story was mainly positive, and who have low digital familiarity: Jun/Jul ’22 (Wave 2) n=28*. Size of words corresponds to the number of times they occur in responses.

Image 5: Word cloud of public recall of data-related news stories – by UK adults who recall that the story was mainly negative, and have low digital familiarity (*low base size).

Word cloud of public recall of data-related news stories – by UK adults who recall that the story was mainly negative, and have low digital familiarity (*low base size)

Q10. In a couple of sentences, please could you briefly tell us what the story you saw about data was about? Base: All online respondents who say they have seen a news story about data in the last 6 months, and who say that story was mainly negative, and who have low digital familiarity: Jun/Jul ’22 (Wave 2) n=40*. Size of words corresponds to the number of times they occur in responses. All words mentioned once are removed.

While UK adult recall of media stories related to data is fairly low, it is predominantly negative. Only 40% of UK adults recall a story about data in the last 6 months. Those who have greater knowledge about technology are more likely to report a higher recall of media stories about data. 52% of those who report that they have a great or fair amount of knowledge about how their data is used and collected in day-to-day life recall seeing a news story related to data, compared to 31% of those who say they know just a little or nothing at all.

It is also worth noting that recall of news stories being predominantly negative could in part be explained by a higher number of negative news stories in the media, people being more likely to remember the negative stories, and the fact that when data plays a role in facilitating a positive outcome it can be the outcome that is the focus, whereas the data takes ‘centre stage’ when something goes wrong.

6. Trust in data actors

Trust in organisations to use data safely, effectively, transparently and with accountability remains strongly related to overall trust in those organisations to act in one’s best interests.

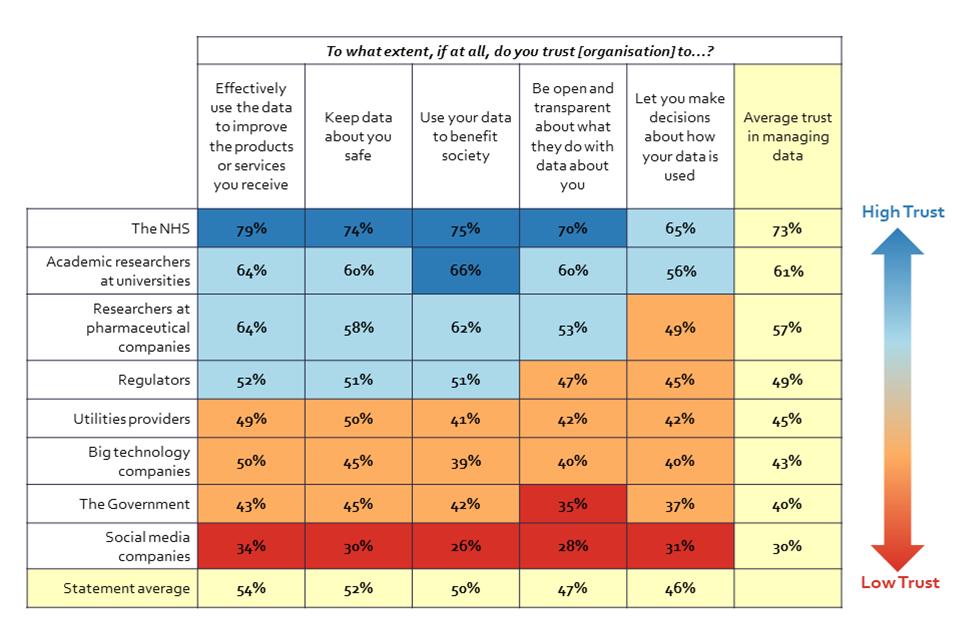

Trust in data actors to act in one’s best interest follows a very similar pattern to trust in these actors to take actions with data safely, effectively, transparently, and with accountability. The majority of UK adults, 73%, select the NHS as the most trusted actor to take actions with data safely, effectively, transparently, and with accountability. This is followed by researchers in two different areas: academic researchers (61%) and researchers at pharmaceutical companies (57%). Next, 49% of UK adults trust regulators to use data and a slightly lower share of people trust utility providers (45%) and big technology companies (43%) to do so. Trust levels in data practices of the government and social media companies are rather low compared to other actors tested (40% and 30% respectively).

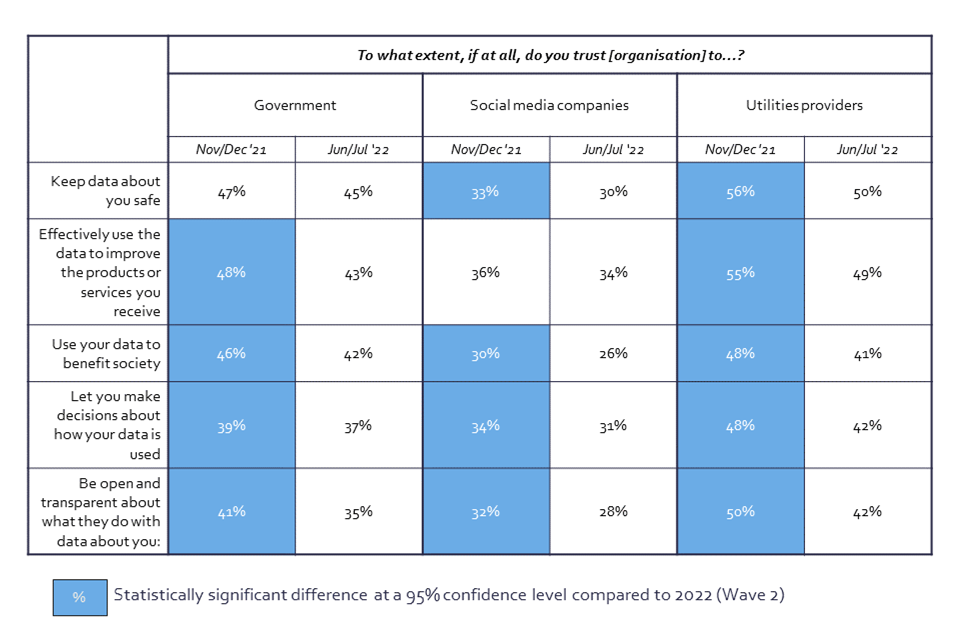

Table 1: Trust in organisations, and in their actions with data (Showing % Sum: Trust)

Table showing trust in organisations to take data actions and to act in one’s best interest.

Q14. To what extent, if at all, do you trust the [organisation] to…? BASE: Half of all online respondents per organisation n=2092, Jun/Jul ’22 (Wave 2) n=2676

Some significant changes in overall trust levels have been recorded since the previous survey wave. Firstly, overall trust in the government and utility companies and their data practices has fallen since 2021 (40% to 33%, and 61% to 46% respectively).

Table 2: Trust in selected organisations, and in their actions with data (Showing % Sum: Trust)

Data table that shows the proportion of respondents that trust the government, Utility Providers, and Social Media Companies to perform six data actions, in November/December 2021 and June/July 2022.

Q14. To what extent, if at all, do you trust the [organisation] to…? BASE: Half of all online respondents per organisation - Nov/Dec ‘21 (Wave 1) n=2092, Jun/Jul ’22 (Wave 2) n=2676

Public trust in government to use data has fallen since 2021 in most dimensions tested in the survey, including ‘use data to improve products or services you use’ (48% to 43%), ‘use your data to benefit society’ (46% to 42%), and ‘let you make decisions about how your data is used’ (39% to 37%). The largest drop has been reported to ‘be open and transparent about data use’ (41% to 35%). The greatest fall in trust in the government across the tested actions is seen among those in a higher socio-economic grade (ABC1: from 47% in 2021 to 41% in 2022), while trust has remained consistent amongst those from socio-economic grades C2DE (41% in 2021 and 39% in 2022). Those from the higher socio-economic grades (ABC1) show a drop from Wave 1 in reported trust in the government to ‘keep data about you safe’ (50% to 46%), ‘use data to improve products or services you use’ (51% to 44%), and ‘use your data to benefit society’ (50% to 43%). The drop in trust amongst those from higher socio-economic grades (ABC1) brings their levels of trust more in line with those from the lower socio-economic grades (C2DE).

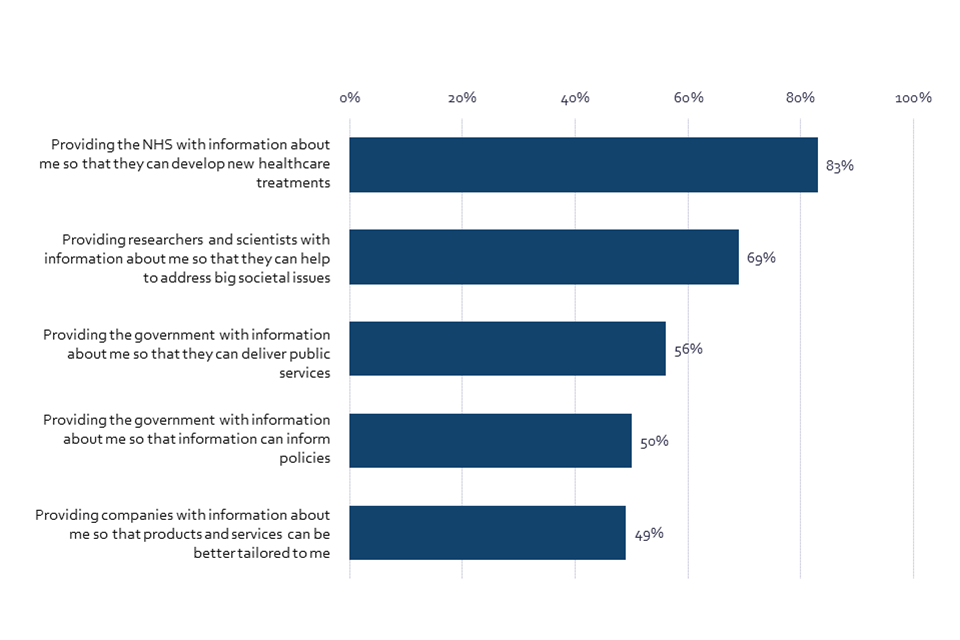

Comfort levels with the government’s use of data have also decreased, in line with the reported fall in trust. The proportion of the public who are comfortable providing the government with information about them so that they can deliver public services has dropped from 62% in 2021 to 56% in 2022. In addition, there has been a decrease in the share of UK adults who feel comfortable providing the government with information about them so that this information can inform policies from 56% in 2021 to 50% in 2022.

Chart 5: Level of comfort with providing personal information to selected organisations (Showing % SUM: Comfortable)

Bar chart showing comfort in providing personal information in various scenarios. Respondents are far more comfortable sharing their information with the NHS than the government.

Q13. Personal data is information that can be used to identify you, for example, your name, address, telephone number, email, or information about you, like your ethnicity, income, or online activity. Please indicate how comfortable or uncomfortable you are with providing personal information about yourself in the following instances? BASE: All online respondents Jun/Jul ’22 (Wave 2) n=4320

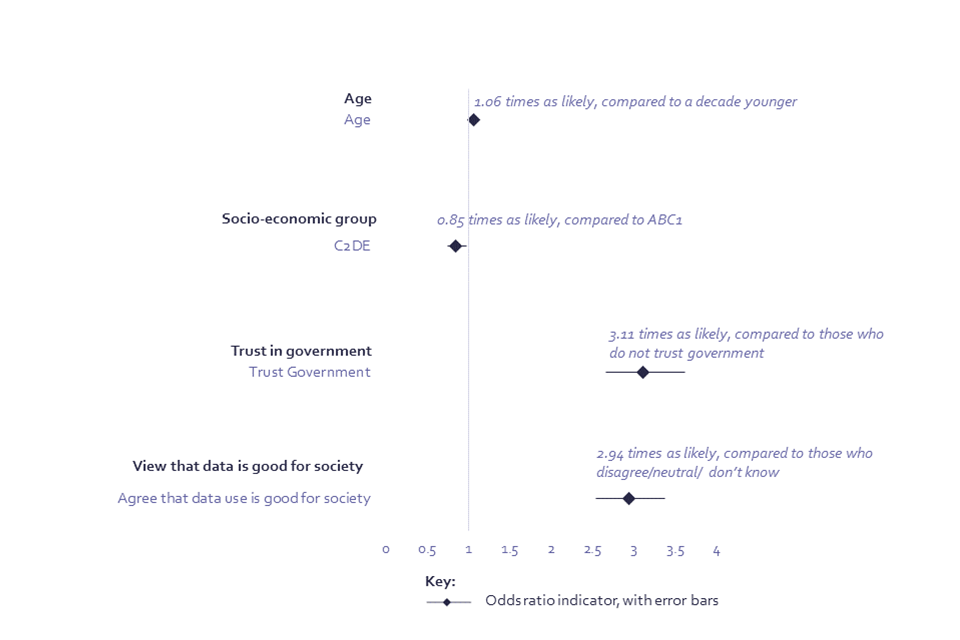

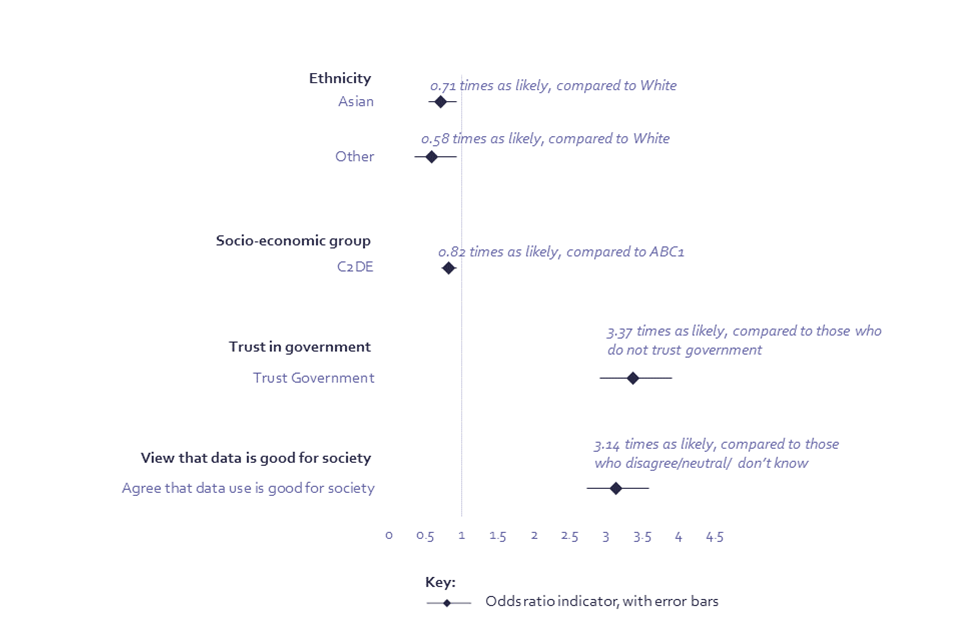

Regression analysis indicates that being comfortable with the use of data by government is strongly related to overall trust in government. Those who report that they trust the government to act in their best interests, controlling for other demographic factors, are 3.11 times more likely to say they are comfortable providing data to government to enable the delivery of public services (Chart 6; Annex, Model 5) and 3.37 times more likely to be comfortable providing their information for policy development (Chart 7; Annex, Model 4). Although unsurprising, this demonstrates the strong tie between trust of the government and wider attitudes towards government data use. A wider belief that data is good for society is also strongly associated with being comfortable sharing data for these purposes – those with this view are 2.94 times more likely to be comfortable sharing data for delivering public services and 3.14 times more likely to be comfortable sharing data to inform policies (Chart 6; Annex, Model 5).

Chart 6: Logistic regression model showing predictors of comfort providing data to government to enable the delivery of public services

Logistic regression model showing predictors of comfort providing data to government to enable the delivery of public services

Age: The odds ratio[footnote 7] for age is applied by decade, and so, for example, someone is 1.06 times more likely to be comfortable providing data to the government to enable the delivery of public services, compared to someone a decade younger.

Socio-economic group is defined in a footnote in the first chapter of this report.

Q13. Personal data is information that can be used to identify you, for example, your name, address, telephone number, email, or information about you, like your ethnicity, income, or online activity. Please indicate how comfortable or uncomfortable you are with providing personal information about yourself in the following instances? Providing the government with information about me so that they can deliver public services. BASE: All online respondents Jun/Jul ’22 (Wave 2) n=4320

Chart 7: Logistic regression model showing predictors of comfort providing data to government for policy development

Logistic regression model showing predictors of comfort providing data to government for policy development

Q13. Personal data is information that can be used to identify you, for example, your name, address, telephone number, email, or information about you, like your ethnicity, income, or online activity. Please indicate how comfortable or uncomfortable you are with providing personal information about yourself in the following instances? Providing the government with information about me so that information can inform policies. BASE: All online respondents Jun/Jul ’22 (Wave 2) n=4320

With regards to other actors, the general public’s overall trust in utility providers to act in one’s best interests, and to carry out specific data actions, has fallen since 2021. 46% of UK adults report an overall trust in utility companies, compared to 61% in 2021, and trust has fallen across most demographic groups surveyed.

The UK public also reports lower trust levels in big technology companies (e.g. Amazon, Microsoft) to act in people’s best interest – this share has fallen from 60% in 2021 to 57% in 2022. While this fall is small, it is statistically significant, and what makes it notable is that this fall in trust is larger among people who think technology makes the lives of people like them better - from 66% in 2021 to 60% in 2022 - in other words, the people who think technology makes their lives better are less likely to report trust in these big tech companies. In addition, a similar drop in trust is noted amongst those who claim to have a great or fair amount of knowledge about how the UK technology sector is regulated: 74% in 2021, compared to 68% in 2022. In essence, this decline in overall trust in big technology companies is the largest amongst the most positive and knowledgeable about technology.

Unlike other data actors, trust in the NHS and academic researchers remains high. In 2022, the UK public is most likely to trust the NHS (89%) and academic researchers at universities (78%) to act in their best interests. These levels of trust have remained high since 2021 and have even slightly grown in the case of academic researchers from 76% in 2021 to 78% in 2022. The percentage of UK adults comfortable sharing their data with the NHS to develop new healthcare treatments has increased since Wave 1: 81% in 2021, compared to 83% in 2022. The share of UK adults comfortable sharing data about themselves with researchers and academics so that they can help to address big societal issues has remained consistently high and represents 69% of respondents in both survey waves.

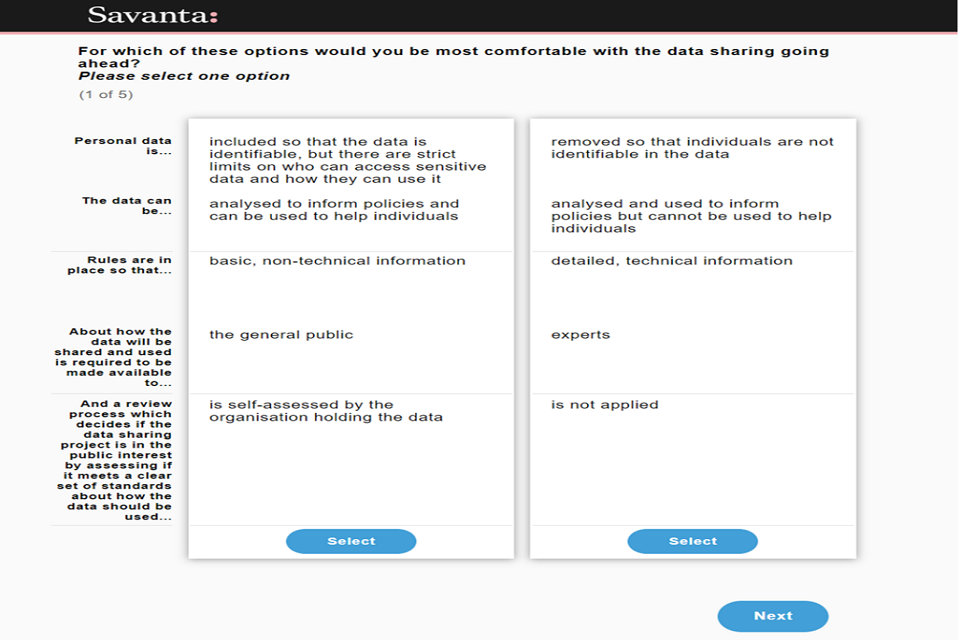

7. Public preferences for data governance

Identifiability, or the ability to identify individuals within shared data, stands out as the most important governance consideration impacting willingness to share data, over transparency about data use and the review process of data sharing. Respondents show a preference for non-identifiable information being shared, even if this means individuals can’t be helped as a result of data sharing.

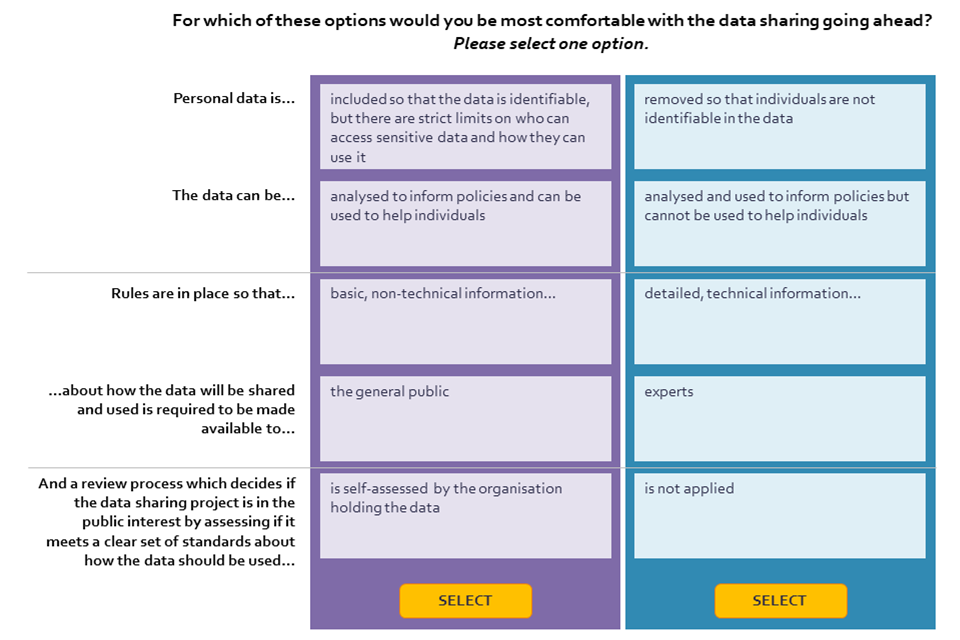

In order to test people’s preferences for different data sharing governance mechanisms, we used a ‘conjoint experiment’. This is a survey-based research approach for measuring the value that individuals place on different features and considerations for decision making. It works by asking respondents to directly compare different pairs of scenarios that vary by features to determine how they value each feature.

We use a conjoint experiment to test preferences for three attributes of data sharing governance:

- the level of identifiability of the data[footnote 8]

- the type and availability of transparency information[footnote 9]

- the review / accountability process applied to data sharing[footnote 10].

The difference in responses allows us to understand which attributes and features are driving preferences. The experiment tests pairs of data sharing scenarios, asking respondents to select one scenario in which they would be most willing to share their data. Respondents were shown 5 pairs of scenarios each, leading to five selected scenarios in total.

Image 6: Mocked-up example of a scenario presented to the respondent for the conjoint experiment

Mocked-up example of a scenario presented to the respondent for the conjoint experiment

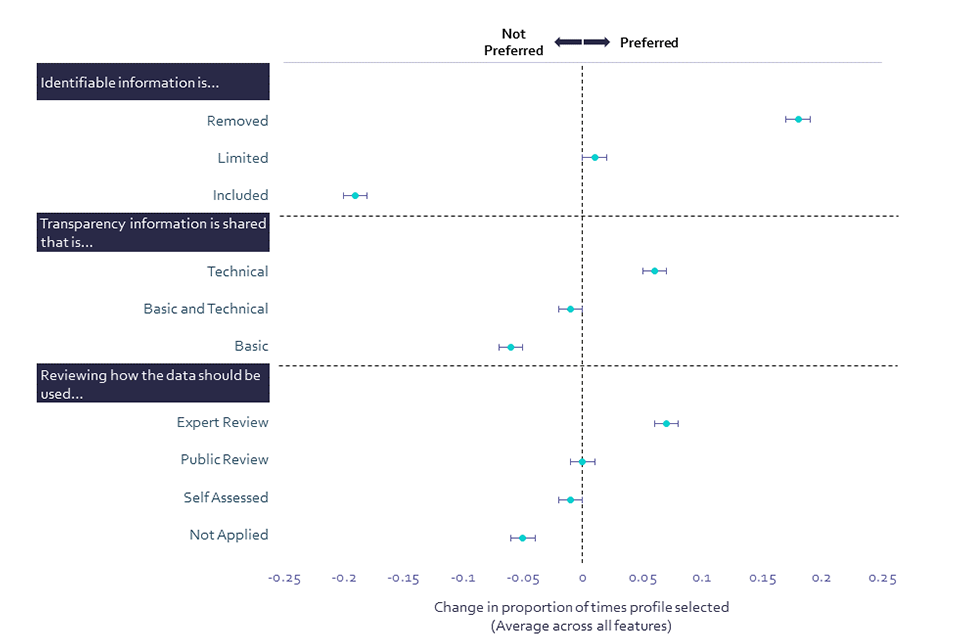

Identifiability (i.e. whether personal data is included in the shared data, which may mean that an individual could be identified from it) stands out as having the biggest impact on willingness to share data, more so than transparency and the inclusion of a review process. Public preference for shared data to exclude identifiable information is expressed, even if this means that the data cannot be analysed in such a way that will help other individuals. This is especially important for older (aged 45+) people and those with a White ethnic background.

In addition, UK adults express a preference for experts to be involved in the review process, over a review by the public, self-assessed review, or no review. This preference runs counter to a prevalent narrative that what people want is to be able to self-manage their privacy, suggesting instead that the public would prefer to delegate consent to others. When it comes to transparency of information about data sharing, people express a preference for technical information to be made available to experts, over technical and basic information made available to experts and the public, and basic, non-technical information made available to the public only. Older people (aged 65+) were more likely to select technical information being made available than their younger counterparts. This finding indicates a public preference for more informed decision-makers to be involved in the review process for how their data is managed.

Chart 8: Public preferences in scenarios affecting willingness to share personal data (Showing % change in proportion of times the option is selected)

Public preferences in scenarios affecting willingness to share personal data

CONJOINTQ. For which of these options would you be most likely to be willing to share your data? Base: All online respondents Jun/Jul ’22 (Wave 2) n=4,257

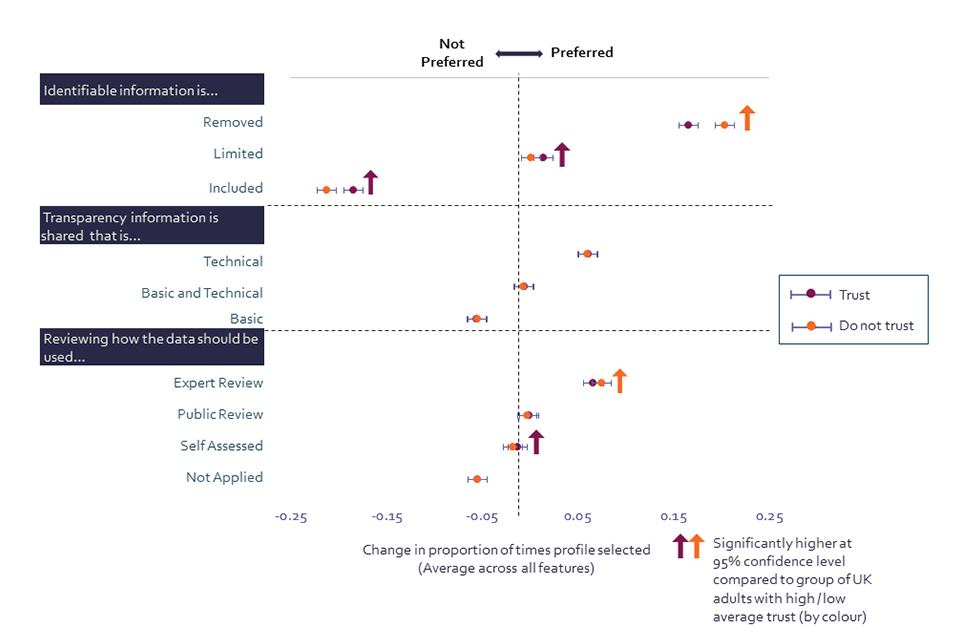

We also examined differences in preferences between those who, on average, trust selected institutions to perform the data actions tested, and those who do not trust these institutions (see Table 1 for details on the institutions and data actions). While there is consistency in people’s preferences of selected elements across all three dimensions (identifiability, transparency and review) and across both those who trust institutions and those who do not, the conjoint data offer some strong results around identifiability and review.

Chart 9: Public preferences in scenarios affecting willingness to share personal data by trust in organisations across all actions with data tested (Showing % change in proportion of times the option is selected)

Public preferences in scenarios affecting willingness to share personal data by trust in organisations across all actions with data tested (Showing % change in proportion of times the option is selected)

CONJOINTQ. For which of these options would you be most likely to be willing to share your data? Base: All online respondents who on average trust all tested organisations across all actions with data (n=2,956); All online respondents who on average do not trust all tested organisations across all actions with data (n=1,353)

Results suggest that UK adults with low trust in institutions (Chart 9) have a strong preference for sharing data with personal data ‘removed so that individuals are not identifiable in the data’. In comparison, adults with high levels of trust (Chart 9) are more likely than those with low trust to express a preference for limited personal data sharing, and for personal data being included in the data shared. This finding indicates that the public’s trust in organisations is directly reflected in their identifiability preferences.

When it comes to review processes, respondents with low levels of trust in institutions (Chart 9) are more likely than those with high trust (Chart 9) to express a preference for experts to be involved in the decision-making process related to shared data. This finding suggests there is a public preference among distrustful adults for informed decision-makers to be involved in review processes. In addition, UK adults with high trust are more likely than those who have low trust to prefer that the organisation holding the data be the one to review how the data should be used.

8. Public preferences for data sharing

Despite general preferences for data to be non-identifiable when shared, there is high public support for access to demographic data to enable systems to be checked for fairness. Governance mechanisms that ensure greater privacy or control over data access would make people feel more comfortable sharing data to check if systems are fair. While links between respondents’ own demographics and their willingness to share certain types of data might be expected, we found no evidence to suggest that respondents’ personal characteristics influenced their willingness to share different types of demographic data.

When algorithms and data are used by both public and private sector organisations to make decisions, this can sometimes lead to unintended unfair outcomes for different groups. To avoid this, we need to test systems for bias, which involves evaluating whether personal information in the input data is affecting the output of the system. Personal information about the affected individuals (e.g. their gender, ethnicity, disability, and sexuality) is therefore needed in order to check if these systems are fair to different groups. Despite strong preferences for non-identifiability in shared data as reported in the previous chapter, most UK adults want systems to be checked for fairness and are comfortable sharing their demographic data to enable this to happen.

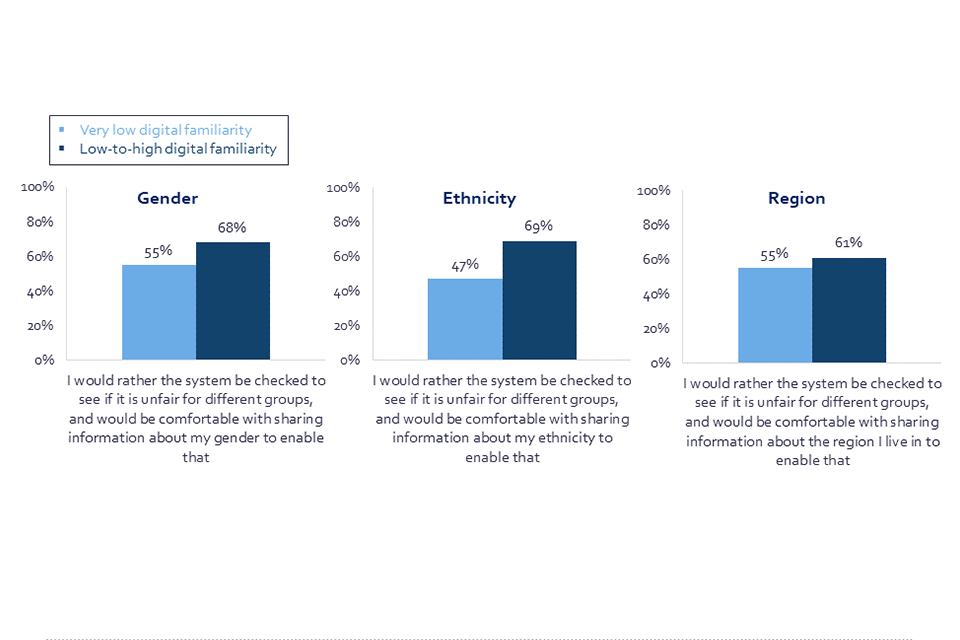

At least three in five UK adults would rather the system be checked to identify whether it is unfair for different groups, and would be comfortable sharing information about their ethnicity (69%), gender (68%), or region they live in (61%) to enable that. On the other hand, a smaller but significant proportion would not feel comfortable providing this data about their ethnicity (21%), gender (21%), or region they live in (25%), even if it means the system cannot be checked to see if it is fair to different groups.

There is a marked difference in the comfort levels of those with very low digital familiarity compared to the wider UK adult population when it comes to sharing personal information in order to see if a system is fair to different groups. Only 55% of those with very low digital familiarity would be happy to share data about their gender (compared to 68% of UK adults), and 55% would share data about their region (compared to 61% of UK adults). Most starkly, only 47% of those with very low digital familiarity would be comfortable sharing their ethnicity (compared to 69% of UK adults). This indicates that those with very low digital familiarity are more protective of ethnicity data, or at least wary about the way institutions might use it.

Chart 10: Comfort sharing demographic data to see if a system is fair to different demographic groups (Showing % comfortable sharing information)

Three column charts showing the proportion of respondents with very low digital familiarity, and the proportion of respondents with low-to-high digital familiarity, comfortable with sharing data about a demographic characteristic.

Q34. Would you rather not provide demographic data, even if it means a system could not be checked to see if it’s unfair to people from different groups, or the system be checked to see if it is unfair for different groups, and would be comfortable with sharing information about demographic data to enable that? BASE[footnote 11]: All online respondents Jun/Jul ’22 (Wave 2), Gender n=1440, Ethnicity n=1438, Region n=1442. All respondents with very low digital familiarity Jun/Jul ‘22 (Wave 2), Gender, n=71, Ethnicity n=69, Region n=60

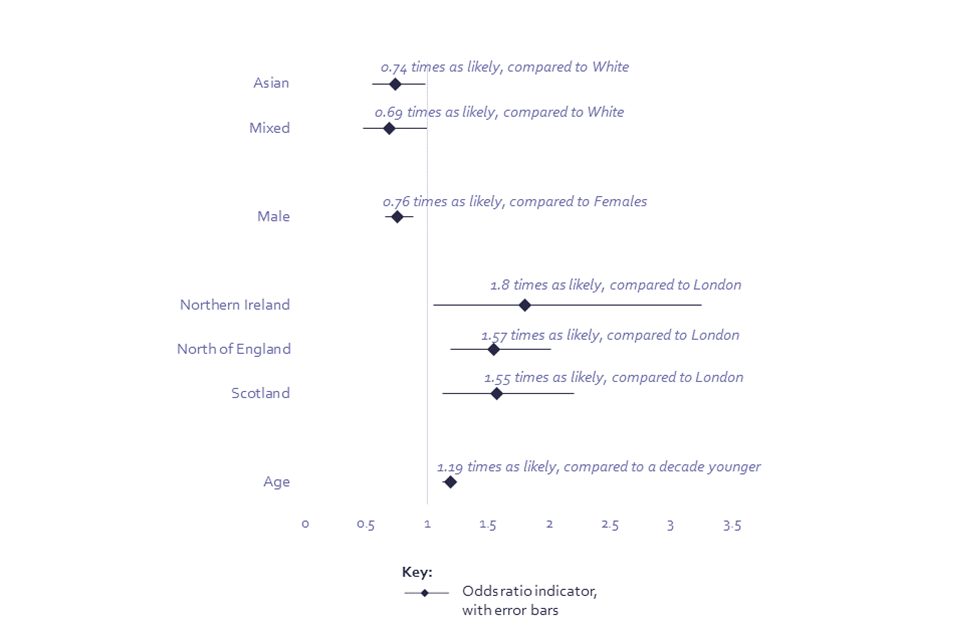

Regression analysis indicates that, when controlling for other demographic factors, younger respondents and male respondents were less willing to share their demographic data. People of Asian and Mixed ethnicity were also less willing to share demographic data to check if systems are fair. Those living in the North of England, Scotland, and Northern Ireland were significantly more likely to be willing to share this demographic data, compared to those in London (Chart 11; Annex, Model 6).

Chart 11: Logistic regression model showing predictors of willingness to share demographic data to check to see if a system is fair to different demographic groups

Logistic regression model showing predictors of willingness to share demographic data to check to see if a system is fair to different demographic groups

Age: The odds ratio for age is applied by decade, and so, for example, someone is 1.19 times more likely to be willing to share demographic data to check to see if a system is fair to different demographic groups, compared to someone a decade younger.

Q34. Would you rather not provide demographic data, even if it means a system could not be checked to see if it’s unfair to people from different groups, or the system be checked to see if it is unfair for different groups, and would you be comfortable with sharing information about demographic data to enable that? BASE: All online respondents Jun/Jul ’22 (Wave 2) n=4320[footnote 12]

We might also expect there to be links between respondents’ own demographics and how willing they are to share certain types of data, for example their ethnicity. We tested this hypothesis using interactive effects[footnote 13] on the type of data mentioned in the question and the individual’s demographic characteristics. We found no evidence to suggest that respondents viewed these types of data differently based on their demographics. Rather, there are fairly consistent attitudes across these different data types, potentially highlighting the intersectionality of viewing protected characteristics as sensitive (Annex, Model 7).

The survey also tested whether the presence of additional governance mechanisms which offered greater privacy or control over data impact on an individual’s willingness to share data to check if systems are fair.

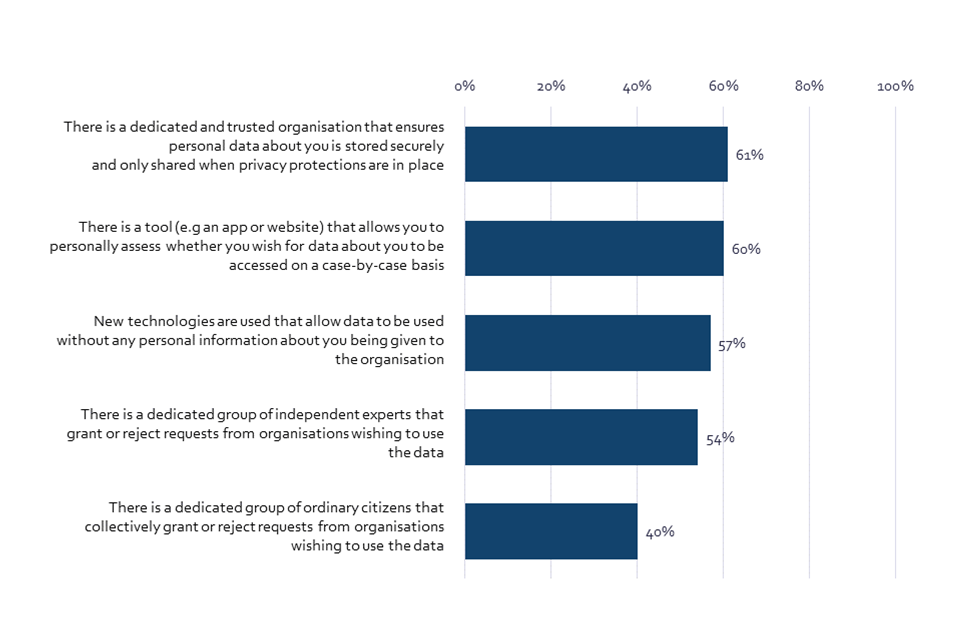

Generally, there were high levels of comfort with sharing personal data to check if algorithms are fair, provided governance mechanisms were in place. The option of a dedicated and trusted organisation that ensures personal data about people is stored securely and only shared when privacy protections are in place made the most people comfortable (61%). A similar share of people (57%) said they felt more comfortable with the option of new technologies that allow data to be used without any personal information being given to the organisation.

UK adults were also reasonably likely to feel more comfortable sharing personal data when presented with systems that would give people more control over who accesses their data. These systems include a hypothetical tool that allows individuals to personally assess data sharing requests on a case-by-case basis (60%), and give greater means of review – either by a group of independent experts (54%) or a group of ordinary citizens (40%) (Chart 12).

Chart 12: The impact of certain scenarios on comfort with sharing personal data (Showing % made more comfortable by each scenario)

Bar chart showing proportion of respondents made more comfortable with providing personal information by various scenarios.

Q35. Thinking about the different types of personal data that are needed in order to check if the use of algorithms is fair for different groups (e.g. gender, ethnicity, disability, and sexuality), in each of the scenarios would the action make you feel more or less comfortable sharing this data for the purpose of checking if systems are fair. BASE: All online respondents Jun/Jul ’22 (Wave 2) n=4320

Older UK adults (aged 55+) and those with higher socio-economic grade (ABC1) were most likely to report that these mechanisms (see mechanisms in Chart 11 above) would increase their comfort levels. Regression analysis (Annex, Models 8-12) indicates that these groups would feel more comfortable sharing their data, compared to younger respondents and those with lower socio-economic grade (C2DE), if there was:

-

a group of independent experts that grant or reject requests from organisations wishing to use the data,

-

a tool that allows individuals to personally assess whether they wish data about them to be accessed on a case-by-case basis,

-

a dedicated and trusted organisation that ensures personal data is stored securely and only shared when privacy protections are in place,

-

and new technologies that allow data to be used without any personal information being given to the organisation.

Certain groups are also more likely to feel more comfortable sharing their data if a dedicated group of ordinary citizens was in place to review data-sharing requests. Those from a higher socio-economic grade (ABC1), men, and those from Asian and Black ethnic backgrounds are more likely to express comfort in these circumstances, compared to their C2DE, female, and White counterparts (Annex, Model 9). This suggests that some groups are more likely to believe in a democratised voice than expert opinion when it comes to sharing data.

Comfort levels with all of the proposed governance mechanisms are higher amongst those with higher digital familiarity, indicating that those who are less engaged with technology may be harder to reassure.

It is also valuable to identify which governance mechanisms would help those UK adults who are cautious when it comes to sharing their personal data; they represent 23% of the sample. Out of those who would not provide their personal data to check if systems are fair to different groups, 51% would be most comfortable sharing this data when a tool that personally assesses their preference of data usage would be used. This is closely followed by the use of new technologies that allow data to be used without any personal information being given to the organisation (48%) and a dedicated and trusted organisation that ensures personal data about people is stored securely and only shared when privacy protections are in place (46%). This finding suggests that this group of adults has lower levels of trust in involvement of people (experts or citizens) in review processes. Nevertheless, at least half of this group does not feel comfortable with or has no opinion on each of the mechanisms offered, signalling that more would be needed to persuade them to share their personal data.

9. The future of AI and AI governance

People are positive that AI will improve the efficiency of regular tasks, access to healthcare, and saving money on services and goods. However, UK adults are wary of potential negative impacts of AI, for example in producing unfair outcomes or reducing job opportunities. The demand for AI governance is greatest in complex scenarios, with healthcare, policing, the military, and banking and finance seen as the top priorities. The use of AI in healthcare is of high importance as it is also expected to make large changes as a result of digitalisation.

Most (89%) UK adults have heard about Artificial Intelligence (AI) with 46% of respondents saying they are able to give at least a partial explanation of what AI is, compared with 50% in 2021, and only 11% having never heard the term, consistent with 10% in 2021. However, fewer people are aware of AI and feel they could explain what it is in detail; this proportion has slightly declined from 13% in 2021 to 10% in 2022.

The majority of people express moderate or neutral views on their expectations of the impact of AI on society. When asked for their rating on a scale of 0 to 10 where 0 is a very negative impact and 10 is a very positive impact, 58% scored ‘moderate’ (score 4-7). Only 21% gave a negative score (score 0-3), and slightly fewer (16%) gave a positive score (score 8-10).

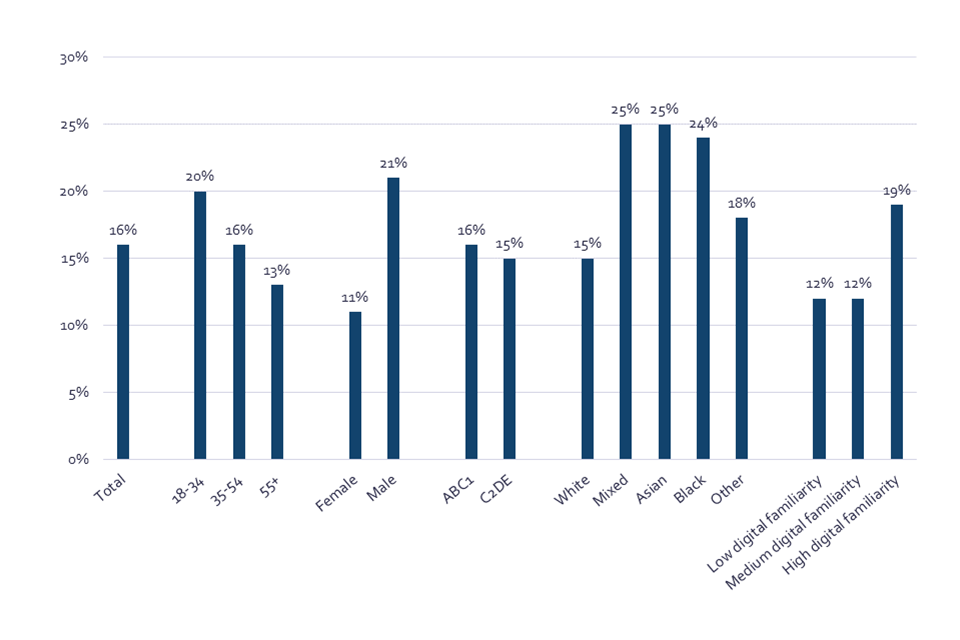

Older people are more likely to have a negative expectation of the impact of AI on society (24%), compared to 16% of those aged 18-34. Similarly, those from lower socio-economic grades and those with a White ethnic background are also more likely to have this negative expectation: 24% of those from C2DE, compared to 19% of those from ABC1 socio-economic grade, and 21% of those from a White ethnic background, compared to 14% of those from an Asian ethnic background or 17% of those from a Black ethnic background.

Those with a lower digital familiarity are more likely to express scepticism towards AI; 28% of those with low digital familiarity gave a negative rating, compared to 18% of those with a high digital familiarity. Importantly, 48% of those who feel that technology has made life for people like them worse express negative expectations for the impact of AI (score 0-3), compared to only 14% of those who feel that technology has made life better.

Chart 13: Expectations of the positive impact of AI on society (Showing % to score 8-10, where 0=very negative and 10=very positive)

Bar chart showing proportion of different demographic groups that give a positive score (8-10) on their expectations of AI’s impact on society.

Q23b. On a scale from 0-10 where 0=very negative impact and 10=very positive impact, based on your current knowledge and understanding, what impact do you think Artificial Intelligence (AI) will have overall on society? BASE: All online respondents Jun/Jul ’22 (Wave 2) n=4320

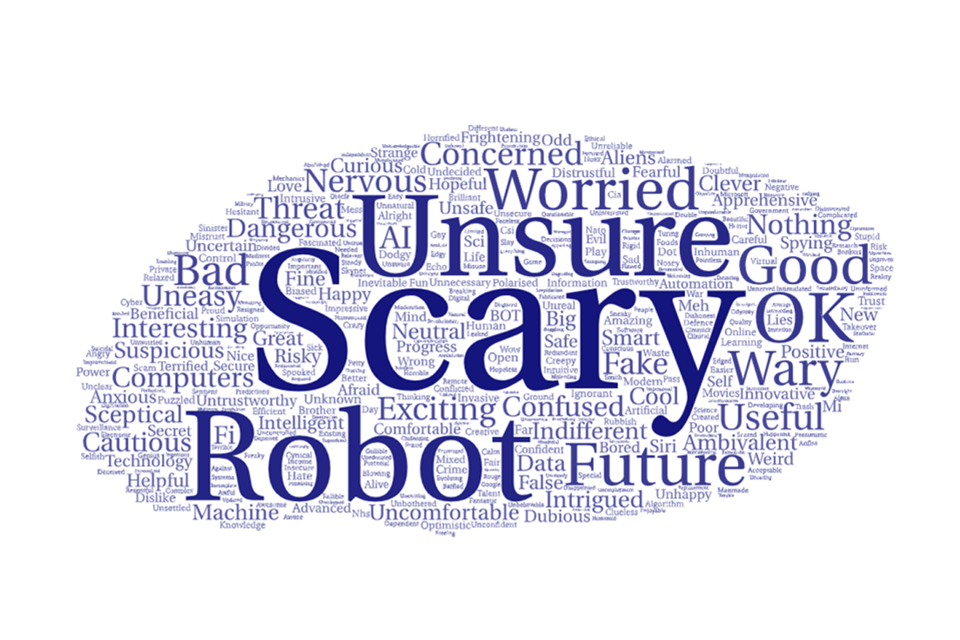

While most UK adults express moderate views about the future of AI, some latent anxieties are present. When asked to describe their feelings about the future of AI, the dominant responses are those of caution, confusion, worry, and concern. That said, many also express ambivalence or a lack of knowledge, at least partly explaining the high proportion of neutral scores given about the expected impact of AI. A sense of excitement and potential value also comes through, although they are currently dominated by more fearful sentiments.

Image 7: Word cloud of public sentiment towards AI – by UK adults (CAWI)

Word cloud of public sentiment towards AI – by UK adults

Q22. Please type in one word that best represents how you feel about ‘Artificial Intelligence’. Base: All online respondents to say they have heard of AI Jun/Jul ’22 (Wave 2) n=3838

Those with high digital familiarity express a broad range of emotions about AI, from excitement to fear. Some also express strong expectations for both positive and negative potential impacts of AI on society, and for the governance of AI to protect people from potential harms.

Conversely, those with very low digital familiarity largely report a lack of knowledge and general confusion about AI. 28% of this group feel they could give at least a partial explanation of what AI is[footnote 14], compared with 56% of the wider adult population. Similarly, open-text responses amongst those who have heard of AI reflect low confidence in their knowledge and understanding of AI, and are generally far less engaged.

While most of the public express a moderate or neutral expectation for AI (58% score of 4-7), the 16% who express positive views (score 8-10) mention excitement and fascination with a futuristic vision for what AI might offer society - although they do express some cautiousness too. Amongst the 21% who express negative views about AI (score 0-3), open-text responses are much more nervous and in some cases even fearful about the prospects of AI for our society.

Image 8: Word clouds of public sentiment towards AI – by sentiment towards AI

Word clouds of public sentiment towards AI – by sentiment towards AI

Q22. Please type in one word that best represents how you feel about ‘Artificial Intelligence’. Base: All online respondents who say they have heard of AI Jun/Jul ’22 (Wave 2), who say the impact of AI on society is positive (selected 8-10 on a 0-10 scale) n=638, or the impact of AI on society is negative (selected 0-3 on a 0-10 scale) n=786. Size of words corresponds to the number of times they occur in responses. All words mentioned three times and less are removed.

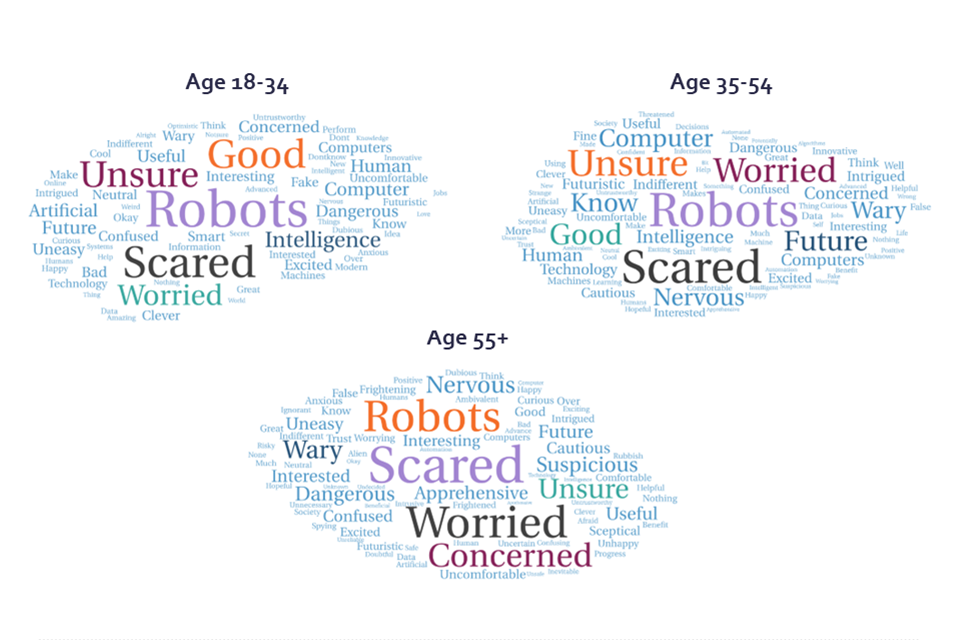

Open-text responses sorted by age group reflect the different expectations for AI, with slightly higher optimism and excitement expressed by younger respondents, while older respondents are more hesitant.

Image 9: Word clouds of public sentiment towards AI – by age

Word clouds of public sentiment towards AI – by age

Q22. Please type in one word that best represents how you feel about ‘Artificial Intelligence’. Base: All online respondents who say they have heard of AI Jun/Jul ’22 (Wave 2), and who are aged 18-34 n=936, or 35-54 n=1306, or 55+ n=1575. Size of words corresponds to the number of times they occur in responses. All words mentioned three times and less are removed.

While some concerns about the future of AI are expressed, these fears are dramatically lower, compared to other societal issues. When asked about the most important issues facing the country only 2% of UK adults focus on how data and Artificial Intelligence (AI) is used in society, compared to other concerns such as the economy (57%), health (28%), and climate change (23%).

Regression analysis indicates that younger people, those from an Asian ethnic background (compared to those from a White ethnic background), and males, are more likely to have a positive view about the impact of AI on job opportunities. Interestingly, views were not found to be statistically significant between those of different social grades despite some jobs being more at risk from automation than others (Annex, Model 7).

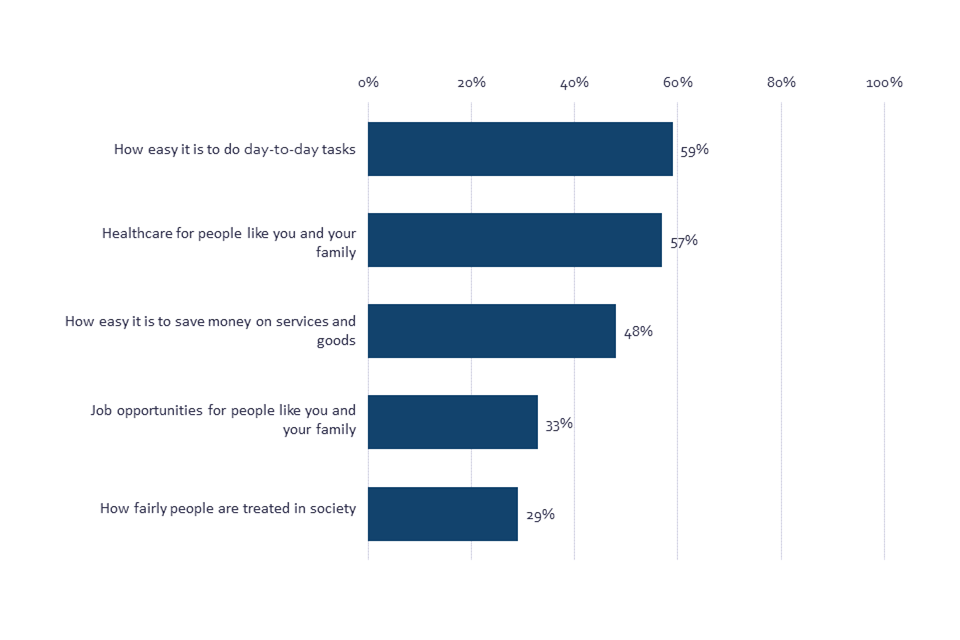

Next, we looked at the impact people expect AI to have across four outcomes concerning fairness, the ease and cost of day-to-day tasks and services, job opportunities, and healthcare. Most UK adults expect that AI will have a positive impact on peoples’ everyday lives, improving the easiness of day-to-day tasks (59%), the quality of healthcare (57%), and their ability to save money on services and goods (48%). However, when it comes to both job opportunities and fairness, there are less positive expectations. A similar proportion of people believe that AI will have a positive impact on job opportunities for people like them as they believe it will have a negative impact: 33% positive, compared to 34% negative. The same pattern is seen in expectations for AI’s impact on how fairly people are treated in society: 29% positive, compared to 28% negative.

Chart 14: Belief that AI will have a positive impact on selected types of situations (Showing % SUM: Positive)

Bar chart showing perceived positive impact of artificial intelligence on situations. Respondents see AI as mainly providing day-to-day and healthcare benefits.

Q25b. To what extent do you think the use of Artificial Intelligence will have a positive or negative impact for the following types of situations? BASE: All online respondents Jun/Jul ’22 (Wave 2) n=4320

Regression analysis was used to identify the demographic and attitudinal dimensions that predict people’s expectations about AI’s impact. Demographic factors were considered in addition to respondents’ reported ability to be able to explain AI (comparing those who could offer at least a partial explanation of AI to those that could not) and overall belief that collecting and analysing data is good for society (comparing those that agreed with this statement to those that did not). This awareness of AI and attitude about data use were found to be strongly linked to people’s expectations about AI outcomes (Annex, Models 14-18).

Unsurprisingly, those who were optimistic about data being good for society were also more likely to believe that AI would lead to positive outcomes across all tested situations (see tested situations in Chart 14 above) (Annex, Models 4-8). Similarly, those that reported they would be able to give at least a partial explanation of AI were more likely to believe it would lead to positive outcomes (Annex, Models 15, 16 & 18). Generally, this implies that those with greater understanding about AI and recognition of the wider benefits of data are more likely to believe AI will lead to positive outcomes, whether that is through the ease of day-to-day tasks, saving money on goods and services, or access to healthcare.

Two exceptions to this are how fairly people are treated in society (Annex, Model 14) and the availability of job opportunities (Annex, Model 17). Those who can offer at least a partial explanation of AI, controlling for other characteristics, are less likely to believe these outcomes will be positive. Therefore, while these groups might be aware of the convenience and innovation benefits of AI they are also wary of the wider societal impacts AI could have.

In terms of demographic differences, the impact of AI on healthcare is a rare case where no demographic differences other than age are found to be statistically significant, and older age ranges are found to be slightly more likely to believe the impacts of AI will be positive (Annex, Model 8).

Across the other outcomes, younger respondents are generally more likely to believe in the positive benefits of AI for how fairly people are treated in society and the availability of job opportunities (Annex, Models 14 & 17). Those of Asian ethnicity, compared to those of White ethnicity were found to be more likely to believe the impacts of AI will be positive across all outcomes, with the exception of healthcare (Annex, Models 14 - 17). Those from lower social grade (C2DE), compared to those of higher social class (ABC1) were less likely to believe in positive AI outcomes of how easy it is to do day-to-day tasks, and save money on goods and services (Annex, Models 15 & 16), potentially reflecting a belief that AI services will not be felt evenly across society. Males were more likely than females to believe AI would lead to positive outcomes on fairness and job opportunities.

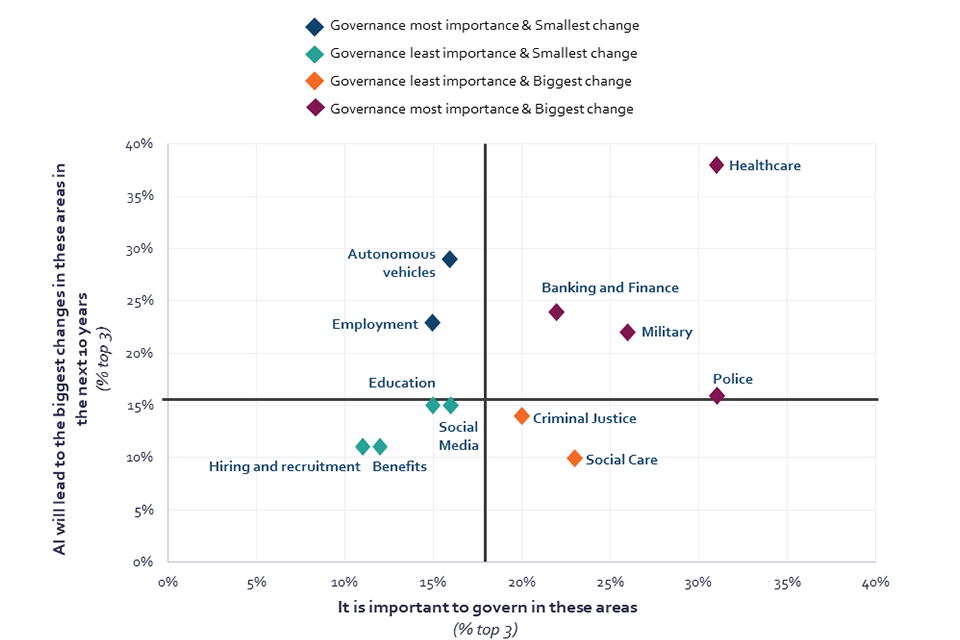

Looking at the desired level of governance for AI alongside those areas most expected to change due to the impact of AI enables us to identify the top priorities for AI governance. UK adults most want governance of AI within policing (31%), healthcare (31%), military (26%), social care (23%), banking and finance (22%), and the courts (20%). The highest expectations of change due to AI are within healthcare (38%), autonomous vehicles (29%), banking and finance (24%), employment (23%), the military (22%), and policing (16%). Healthcare, policing, the military, and banking and finance are therefore the four areas that emerge as top governance priorities for the UK public.

Chart 15: Importance of AI governance and expected change due to AI (Top 3) (Axes split along median value)

Scatter plot showing importance of artificial intelligence governance and expected change due to AI in various areas. Healthcare clearly emerges as an area that is expected to change and require governance.

Q32. Which of the following areas do you think is important that governments carefully manage to make sure the use of AI does not lead to negative outcomes for users? Q33. In which of the following areas, if any, do you think the use of AI will lead to the biggest changes in society over the next 10 years? BASE: All online respondents Jun/Jul ’22 (Wave 2) n=4320

10. Attitudes of those with very low digital familiarity

Those with very low digital familiarity (the CATI sample) are less likely than the wider UK adult population to feel they personally benefit from technology. However, they are reasonably open to sharing data to benefit society, and the majority consider collecting and analysing data to be good for society.

We define people as having very low digital familiarity if they either agreed with three out of the five statements below, or said that they did not perform the activities in question.

-

I don’t tend to use email

-

I don’t feel comfortable doing tasks such as online banking

-

I feel more comfortable shopping in person than online

-

I find using online devices such as smartphones difficult

-

I usually get help from family and friends when it comes to using the internet

Further information regarding the design of the CATI sample can be found in the Methodology section.

Interviews with those with very low digital familiarity (using a Computer Assisted Telephone Interview – CATI) enable us to ensure that the attitudes and experiences across the full spectrum of digital engagement are captured by this public perceptions tracker. This group has low confidence in the level of knowledge and control they have over their personal data. 34% claim they know nothing at all about how data is used and collected in their everyday lives, compared to 10% of those in the wider UK adult online sample. 43% say that they know just a little about how their data is used and collected about them, and 17% know a great or fair amount. Those with very low digital familiarity also lack confidence in the level of control they have over their data; only 19% agree that they have control over who uses their data and how, compared to 29% of the UK adult sample.

Those with very low digital familiarity are less likely to feel that they benefit personally from technology, compared to the wider UK adult population. 44% of those with very low digital familiarity agree that data is useful for creating products and services that benefit them personally, compared with 53% of the wider UK adult sample. However, respondents with very low digital familiarity are more optimistic than the wider UK adult sample that all groups in society equally benefit from data usage; 33% agree all groups benefit equally, compared to 26% of the wider adult population.

In addition, this group expresses comfort with sharing their personal data for public good. 57% of those with very low digital familiarity believe collecting and analysing data is good for society, whereas amongst the wider UK adult sample, 39% believe the same.

When it comes to attitudes towards different organisations, trust in the government as a data actor is lower amongst those with very low digital familiarity, compared with the wider UK adult population. 27% of those with very low digital familiarity trust the government to keep their data safe, compared to 45% of the general adult sample, and 26% trust the government to use their data effectively, as opposed to 43% of the adult sample who say so. In addition, only 17% trust the government to be open and transparent about how they use this data, compared to 35% of the UK adult sample.

When asked about trust in academic researchers, regulators, and utility providers to keep their data safe, to be transparent and to effectively improve services, levels of trust are lower for those with very low digital familiarity, compared with the majority UK adult population.

When asked about their trust in data practices of other organisations, those with low digital familiarity have lower levels of trust, especially amongst social media and big technology companies. 7% of those with very low digital familiarity trust social media companies to keep data about them safe, compared to 30% UK adults who say so, and 6% trust them to be open and transparent about what they do with the data, compared to 28% of the general UK adult sample who trust them to do the same. Only 14% of those with very low digital familiarity trust big technology companies to keep their data safe, as opposed to 45% of UK adults who do the same. Only 17% trust there will be transparency in the data they use, compared to 40% of the wider UK adult population.

11. Methodology

The Centre for Data Ethics and Innovation (CDEI)’s Public Attitudes to Data and AI Tracker Survey monitors public attitudes towards data and AI over time. This report summarises the second wave (Wave 2) of research and makes comparisons with the first wave (Wave 1).

The research uses a mixed-mode data collection approach comprising online interviews (Computer Assisted Web Interviews - CAWI) and a smaller telephone survey (Computer Assisted Telephone Interviews - CATI) to ensure that those low or no digital skills are represented in the data.

The Wave 1 online survey (CAWI) ran amongst UK the general adult population (18+) adults from 29th November 2021 to 20th December 2021 with a total of 4250 interviews collected in that time frame. A further 200 telephone interviews (CATI) with the ‘very low digital familiarity’ sample were conducted between 15th December 2021 and 14th January 2022.

For Wave 2, Savanta completed a total of 4320 online Interviews (CAWI) across a demographically representative sample of UK adults (18+). This survey ran from 27th June 2022 to 18th July 2022. A further 200 UK adults were interviewed via telephone (CATI) between 1st and 20th July 2022.

With thanks to Better Statistics and the Office for Statistical Regulation for their ongoing support and advice with the methodological approach for this survey. We welcome any further feedback or questions on our approach at public-attitudes@cdei.gov.uk.

11.1 Sampling and Weighting

Representative Online (CAWI) Sample

Quotas have been applied to the online sample to ensure that it is representative of the UK adult population, based on age, gender, socio-economic grade, ethnicity, and region. In addition, interlocked quotas on age and ethnicity, and age and social grade were used during fieldwork to monitor the spread of age across these two categories and ensure a balanced final sample. The online sample was provided by Cint. All the contact data provided is EU General Data Protection Regulation (GDPR) compliant.

The online sample was weighted based on official statistics concerning age, gender, ethnicity, region, and socio-economic grade[footnote 15] in the UK to correct any imbalances between the survey sample and the population to ensure it is nationally representative. Random Iterative Method (RIM) weighting was used for this study, such that the final weighted sample matches the actual population profile.

The most up to date ONS UK population estimates have been used for both the fieldwork quotas and weighting scheme to ensure a nationally representative sample, using 2020 mid-year estimates for age, gender, and region, and the 2011 Census data for socio-economic groups (SEG) and ethnicity.

The online sample weighting used in Wave 2 is the same as Wave 1. Comparisons can therefore be drawn across both waves.

Very low digital familiarity (CATI) Sample

200 respondents with very low digital familiarity were contacted and interviewed via telephone survey (CATI). The named sample list of respondents’ contact details was provided by Datascope. All the contact data provided is GDPR compliant.

This telephone sample captures the views of those who have low to no digital skills and are, therefore, likely to be excluded from online surveys (CAWI). They are likely to be affected by digital issues in different ways to other groups. As the answers respondents give to questions may be impacted by how the question was delivered (e.g. by whether they saw it on a screen, or had it read to them over the phone), any comparisons drawn between the CATI and CAWI samples should be treated with caution.

In order to select those with very low digital familiarity, we asked the below screening question in the Wave 2 telephone survey questionnaire. Respondents needed to agree to the statements or say they do not do the activities for three out of the five statements asked in order to qualify for the telephone interview.

Statements

-

I don’t tend to use email

-

I don’t feel comfortable doing tasks such as online banking

-

I feel more comfortable shopping in person than online

-

I find using online devices such as smartphones difficult

-

I usually get help from family and friends when it comes to using the internet

The sample of those with very low digital familiarity was set fieldwork quotas and weighted to be representative of the digitally excluded population captured in FCA Financial Lives 2020 survey. The FCA Financial Lives 2020 survey was chosen as the basis to weight data rather than other similar datasets, including ONS Data and Lloyds Digital Skills 2021 report, as the FCA Financial Lives survey includes ethnicity breakdowns in data tables. We are aware that this group is slightly skewed towards ethnic minority (excluding White minority) adults, hence, the inclusion of ethnicity breakdown was of great importance.

The quotas used were set on broader bands within key categories of gender, age, ethnicity, employment, UK nations, and regions of England. Random Iterative Method (RIM) weighting was used for this study, such that the final weighted sample matches the actual population profile. Those respondents who prefer not to answer questions on age and ethnicity are weighted to 1.0, and those who do not identify as male or female are also weighted to 1.0.

This weighting of the very low digital familiarity (CATI) sample was used in Wave 2 for the first time, Wave 1 data have not been weighted. Data from both waves should, therefore, not be compared.

Demographic Profile of the Online (CAWI) sample & very low digital familiarity (CATI) sample

The demographic profile of the online and very low digital familiarity (CATI) samples, before and after the weights have been applied, are provided in the table below.

| Online (CAWI) sample | Online (CAWI) sample | Online (CAWI) sample | Online (CAWI) sample | Very low digital familiarity (CATI) sample | Very low digital familiarity (CATI) sample | Very low digital familiarity (CATI) sample | Very low digital familiarity (CATI) sample | |

| Unweighted - Sample Size | Unweighted - Total Sample (%) | Weighted - Sample Size | Weighted - Total Sample (%) | Unweighted - Sample Size | Unweighted - Total Sample (%) | Weighted - Sample Size | Weighted - Total Sample (%) | |

| Gender | ||||||||

|---|---|---|---|---|---|---|---|---|

| Female | 2236 | 52% | 2194 | 51% | 121 | 61% | 117 | 59% |

| Male | 2058 | 48% | 2100 | 49% | 77 | 39% | 81 | 40% |

| Identify in another way | 16 | 0% | 16 | 0% | - | - | - | - |

| Prefer not to say | 10 | 0% | 10 | 0% | 2 | 1% | 2 | 1% |

| Age | ||||||||

| NET: 18-34 | 1139 | 26% | 1193 | 28% | 4 | 2% | 12 | 6% |

| NET: 35-54 | 1459 | 34% | 1422 | 33% | 12 | 6% | 17 | 9% |

| NET: 55+ | 1722 | 40% | 1704 | 39% | 181 | 91% | 168 | 84% |

| Social Grade | ||||||||

| ABC1 | 2494 | 58% | 2392 | 55% | - | - | - | - |

| C2DE | 1826 | 42% | 1928 | 45% | - | - | - | - |

| Region | ||||||||

| Northern Ireland | 107 | 2% | 119 | 3% | 22 | 11% | 12 | 6% |

| Scotland | 389 | 9% | 362 | 8% | 29 | 15% | 22 | 11% |

| North-West | 454 | 11% | 472 | 11% | 24 | 12% | 15 | 7% |

| North-East | 179 | 4% | 174 | 4% | 7 | 4% | 4 | 2% |

| Yorkshire & Humberside | 358 | 8% | 356 | 8% | 26 | 13% | 25 | 12% |

| Wales | 220 | 5% | 207 | 5% | 19 | 10% | 8 | 4% |

| West Midlands | 377 | 9% | 379 | 9% | 17 | 9% | 19 | 9% |

| East Midlands | 326 | 8% | 318 | 7% | 10 | 5% | 12 | 6% |

| South-West | 372 | 9% | 371 | 9% | 20 | 10% | 38 | 19% |

| South-East | 601 | 14% | 590 | 14% | 13 | 7% | 24 | 12% |

| Eastern | 398 | 9% | 402 | 9% | 9 | 5% | 12 | 6% |

| London | 539 | 12 | 569 | 13% | 4 | 2% | 11 | 5% |

| NET England | 3604 | 83% | 3632 | 84% | 130 | 65% | 159 | 79% |

| Ethnicity | ||||||||

| NET: White | 3651 | 85% | 3834 | 89% | 180 | 90% | 166 | 83% |

| NET: Mixed | 150 | 3% | 58 | 1% | 1 | 1% | 1 | 1% |

| NET: Asian | 276 | 6% | 274 | 6% | 13 | 7% | 24 | 12% |

| NET: Black | 157 | 4% | 116 | 3% | 3 | 2% | 5 | 2% |

| NET: Other | 86 | 2% | 39 | 3% | 2 | 1% | 3 | 1% |

| NET: Ethnic minority (excl. White minority) | 669 | 15% | 487 | 11% | 19 | 10% | 33 | 16% |

Wave on wave comparability