Monitoring and evaluation strategy

Published 27 March 2013

Foreword

Transport has never been more central to the government’s economic agenda, and my department is at the forefront of delivering the vital infrastructure and services which will support our country’s ambitions now and in the future.

We need to maintain a strong evidence base to help us step up to the challenge, and guide our activities. Finding out whether or not our investments and policy decisions have been effective, delivered value for money, and achieved anticipated outcomes is an essential part of maintaining and developing that evidence base.

As a professional and learning organisation, the Department for Transport is committed to understanding what we do well and where we should aspire to do better. This is a fundamental part of delivering an effective service for our customers and for all taxpayers.

I am therefore pleased to introduce this strategy which is part of a wider set of activities to enhance our approach to monitoring and evaluation. This strategy sets our direction moving forward, and outlines where we aspire to be in the future. It is intended to provide greater assurance and accountability that in times of constrained resources the department is making the right decisions and is maximising the impacts of its spending.

To achieve our ambition we will expect to work closely with delivery partners and wider stakeholders, so we can continue to develop and refine our work programme in the future.

Philip Rutnam, Permanent Secretary, Department for Transport

Executive summary

The Department for Transport is launching a new monitoring and evaluation strategy which will guide the operation and development of its monitoring and evaluation activity.

Monitoring and evaluation are key activities for any learning organisation which aims progressively to improve its performance. They allow for systematic learning from past and current activities - “what works/what doesn’t work” and “why” - so that good practice can be replicated in the future and mistakes and poor outcomes avoided.

This strategy sets out a framework for enhancing the generation of good quality monitoring and evaluation evidence, which will be integrated into departmental decision making and delivered within a robust and proportionate governance framework. This is to provide greater accountability and a stronger evidence base for future decision making and communication activities.

The following factors are critical:

- adopting a needs-driven and proportionate approach to establishing monitoring and evaluation priorities

- being outward facing and delivering results through successful collaboration with other organisations

- embedding a culture within the department which incentivises the delivery of good quality monitoring and evaluation

The strategy will take time to be implemented in full and for all of the benefits to be realised. It will be refined over time and progress reviewed at regular intervals, starting in March 2014.

A key milestone will be the launch of a monitoring and evaluation programme during summer 2013. To help us prepare this we will be actively seeking views from our stakeholders.

1. Introduction

Why monitoring and evaluation is important for the Department for Transport

1.1 The Department for Transport (DfT) has responsibility for supporting the transport network that helps businesses to deliver services and gets people and goods travelling around the country. We plan and invest in transport infrastructure, network utilisation and efficiency to keep people on the move, and the decisions we take affect the lives of everybody in England. We have responsibility for some of the largest and most transformative investments needed to help us compete internationally, including High Speed Rail.

1.2 In times of constrained resources, it becomes even more important to ensure that we invest our money wisely and get the maximum value from it, by building on evidence of “what works”[footnote 1]. Good quality monitoring and evaluation evidence is important for helping make and communicate decisions about where best to target public spending, demonstrating the value for money and benefits which are generated by investment in transport, and learning about how we can most effectively design and deliver policies, programmes, communications and regulations.

1.3 Adopting effective approaches for monitoring and evaluation can reduce the risks of:

- poor decision making and inefficient delivery, by ensuring that valuable lessons are learnt about what works and why / why not

- inability to demonstrate accountability, by providing greater transparency to taxpayers about how their money was spent

- unnecessary burdens being placed on businesses from regulatory activities

1.4 Monitoring and evaluation is not new to the department, or to the transport sector more widely, and over the years this type of evidence has been used to:

- demonstrate the impacts of large scale infrastructure projects (e.g. the Jubilee Line extension)

- test out pilot / demonstration projects (e.g. sustainable travel)

- improve the delivery of policies, programmes and communication activities (e.g. road safety vehicle / road design, education, communication and enforcement measures)

- refine appraisal assessments and forecasts (e.g. the Highways Agency’s post opening project evaluation)

1.5 However, we wish to strengthen our framework for monitoring and evaluation to ensure that the coverage of activity is aligned to the department’s priorities and that the plans for monitoring and evaluation are effectively delivered. We recognise the value of an increased focus on the evaluation of transport initiatives, as a number of stakeholders have urged.

How monitoring and evaluation fit into the policy cycle

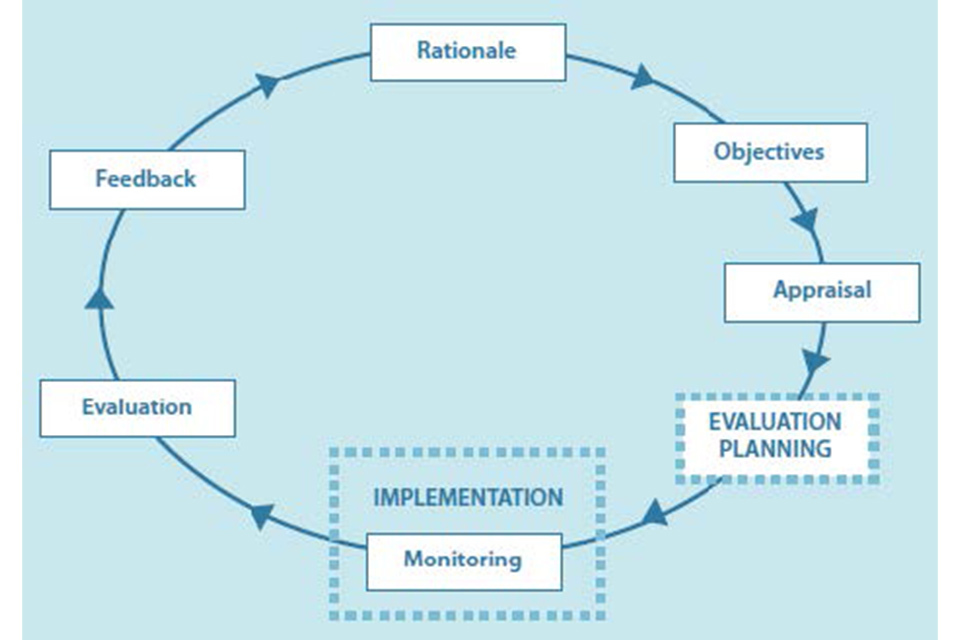

1.6 Monitoring and evaluation are integral parts of the broad policy making and delivery cycle. Figure 1.1 below depicts the ROAMEF cycle presented in the HM Treasury Green Book[footnote 2]. ROAMEF stands for rationale, objectives, appraisal, monitoring, evaluation and feedback.

Figure 1.1 The ROAMEF cycle[footnote 3]

Picture of a circular flow chart showing the ROAMEF cycle, which starts from and returns to rationale, passing through objectives, appraisal, monitoring, evaluation and feedback.

1.7 The evidence generated from monitoring and evaluation activity can be used to build on the analyses undertaken at the appraisal stage by testing and refining the assumptions made. It can also feed back evidence throughout the whole policy and delivery cycle to help track progress and can offer real time identification of any barriers which could risk inhibiting successful delivery.

1.8 Investing in the collection of good quality monitoring and evaluation evidence is important for feeding back evidence of the real world efficacy, efficiency and cost effectiveness of the intervention. The HM Treasury Magenta Book provides the following definitions:

- appraisal occurs after the rationale and objectives of the policy have been formulated. The purpose is to identify the best way of delivering a list of options which meet the stated objectives and to assess these for the costs and benefits that they are likely to bring to UK society as a whole

- monitoring seeks to check progress against planned targets and can be defined as the formal reporting and evidencing that spend and outputs are successfully delivered and milestones met (also providing a valuable source of evidence for evaluations)

- evaluation is the assessment of the initiative’s effectiveness and efficiency during and after implementation. It seeks to measure the causal effect of the scheme on planned outcomes and impacts and to assess whether the anticipated benefits have been realised, how this was achieved, or if not, why not

2. The Monitoring and Evaluation Strategy

The ambition

2.1 The overarching goal is to enhance the generation of good quality monitoring and evaluation evidence, which will be integrated into departmental decision making, and to deliver this within a robust governance framework. This is to provide greater accountability and a strong evidence base for future decision making. The following factors will be critical to the success of the strategy:

- adopting a needs-driven and proportionate approach to establishing monitoring and evaluation priorities which is applied systematically across the department’s portfolio

- being outward facing and recognising that delivering the required outcomes will often entail successful collaboration with other organisations. An initial step will be to implement a clear and transparent process for articulating the department’s priorities for monitoring and evaluation

- embedding a culture within the department which incentivises the delivery of good quality monitoring and evaluation

Objectives

2.2 To achieve the ambition this strategy sets out three objectives, to:

- establish a proportionate monitoring and evaluation programme

- ensure a robust governance framework for monitoring and evaluation activity

- embed a culture of monitoring and evaluation

Scope

2.3 Monitoring and evaluation is focused on the measurement and assessment of outcomes and impacts following the implementation of an initiative[footnote 4]. This is distinct from appraisal, which focuses on assessing the options before decisions are made as to which, if any, should be implemented. But this does not mean that monitoring and evaluation activity can, or should, only take place post implementation. On the contrary, monitoring and evaluation approaches need to be established as part of the overall planning of what is to be taken forward. That increases the likelihood of generating timely and helpful information to assist in driving forward the department’s business successfully. It also minimises the chances of “benefits drift”, where the understanding of what constitutes a successful outcome subconsciously changes over time.

2.4 More generally, the department considers monitoring and evaluation to be key mechanisms for generating evidence to assess benefits realisation, in line with best practice for programme and project management, and to deliver post implementation reviews of regulations. In delivering the strategy, we will take opportunities for dovetailing these requirements and standardising approaches where appropriate to prevent duplication and inefficiencies.

2.5 This initial strategy is focused on the central Department for Transport rather than the whole DfT family (which includes its agencies[footnote 5] and arm’s length bodies). The agencies have their own practices and governance structures for monitoring and evaluation. However, over time, a phased roll out across the whole DfT family may be appropriate.

2.6 We will look to refine the strategy over time and review progress, in the light of experience, at regular intervals starting in March 2014.

3. Establishing a proportionate monitoring and evaluation programme

3.1 As with all other aspects of our work and within a context of constrained public finances, we have to balance the need for good quality monitoring and evaluation against other priorities to ensure that value for money is delivered for the taxpayer. It would not be cost effective to apply a blanket requirement for monitoring and evaluation across all activities undertaken within the department. The returns on the investment will diminish if the quality of the evidence generated becomes compromised, the evidence fails to add any value to the existing evidence base in a timely manner, or the cost of the monitoring / evaluation activity is disproportionate to the size of the initiative or the returns which can be generated by the investment.

3.2 To establish a systematic but proportionate approach to identifying priorities, the department will have regard to the following principles:

- the scale of investment / potential impact

- strategic imperative

- delivering statutory obligations

- degree of risk

- contribution to the evidence base[footnote 6]

3.3 Priorities will be set out in a monitoring and evaluation programme published on the department’s website. The initial programme will be launched in summer 2013 and will be developed and updated annually.

3.4 Whilst the department is responsible for driving forward the strategy and the programme, it recognises the delivery of many monitoring and evaluation activities will be in collaboration with, or led by, other organisations such as local authorities, other delivery partners and expert practitioners. So opening up the department’s proposed priorities for monitoring and evaluation in a transparent, outward facing way, will help to underpin a constructive dialogue.

4. Ensuring a robust governance framework for monitoring and evaluation

4.1 An important foundation is to establish an enabling environment[footnote 7] which incentivises the delivery of good quality monitoring and evaluation. In part, this is a question of creating the right culture, as discussed in the next section. But it also means embedding the requirement for monitoring and evaluation into the framework for corporate governance and approvals so it becomes part, and remains part, of the key decision making processes.

4.2 This will incentivise planning for monitoring and evaluation requirements at the early decision making stages of an initiative, in line with best practice, and it will also provide greater oversight at a senior level of the planned activity on monitoring and evaluation.

4.3 Additionally, we are strengthening the assurance functions for monitoring and evaluation, in three ways. Two of these will be undertaken by the department’s senior-level strategy committee. It will:

- be responsible for ensuring the coverage of the monitoring and evaluation programme meets the needs of the department

- monitor the implementation of the strategy and the delivery of the programme to track overall progress and ensure that the lessons from monitoring and evaluation evidence are fed back into decision making and shared across policy and programme areas. This level of oversight is important given that the monitoring and evaluation of transport initiatives can span long periods (especially for large-scale infrastructure projects) and their impacts can take a number of years to be fully realised and observed

4.4 The third aspect is enhancing the quality assurance of monitoring and evaluation plans and deliverables. This will be led by the central evaluation team and will be integrated with wider analytical assurance processes undertaken within the department.

5. Embedding a culture of monitoring and evaluation

5.1 At the heart of the strategy is the need to enhance the organisational culture to fully embrace learning about what works and why / why not and use this knowledge to advance understanding and decision making.

5.2 The strategy provides a clearer commitment to monitoring and evaluation which will help to encourage departmental policy makers and analysts to plan more effectively for delivering monitoring and evaluation activity and to target resources efficiently.

5.3 Building and embedding a culture for monitoring and evaluation needs to be a sustained activity and will also require collaboration with partner organisations. The 2012 Capability action plan identified that the department needed to increase its efforts in evaluation in order to improve its capabilities for basing choices on evidence. The department accepted this conclusion, and the senior management team are strongly committed both to this strategy and to ensuring that the evidence generated is effectively utilised and lessons are shared for the benefit of future decision making.

5.4 Priority is therefore also being given to capability building and raising awareness of the value and importance of monitoring and evaluation activity. Training and development is being focused to help give people the skills they need to design and deliver new monitoring and evaluation activities but also to draw insights from the existing evidence base more effectively.

5.5 A good support system will be essential to building capabilities. By reinforcing the connections between monitoring and evaluation, good programme and project management, and better regulation, the department will look to develop tools for guidance and support in a cost effective way. Technical support and advice will be provided from a central analytical team and this will be augmented by the development of a network of monitoring and evaluation champions located across the department.

5.6 We will also seek to draw on the insights from external experts and our partner organisations.

6. Next steps

6.1 This strategy has set out the department’s sustained ambition for monitoring and evaluation. It provides the overarching road map but we recognise that this will take time to be delivered in full and for all the benefits to be realised. To make this a success a concerted effort is needed amongst DfT senior managers, policy makers and analysts as well as effective collaboration with partner organisations.

6.2 The strategy is intended to be an outward facing document and to act as a platform for engaging with, and learning from, our stakeholders. Comments and suggestions are therefore welcome and should be directed to DfTMonitoringAndEvaluation@dft.gsi.gov.uk.

6.3 The department is currently assessing priorities for monitoring and evaluation and will aim to launch the monitoring and evaluation programme during summer 2013. We will actively seek views from stakeholders to help us with this work.

Footnotes

[footnote 1] Civil Service Reform Plan, June 2012, and What works: evidence centres for social policy, March 2013, Cabinet Office.

[footnote 2] The Green Book is HM Treasury guidance for central government, setting out a framework for the appraisal and evaluation of all policies, programmes and projects.

[footnote 3] Adapted from HM Treasury Green Book.

[footnote 4] Which includes policies, programmes, projects and regulations.

[footnote 5] Driver and Vehicle Licensing Agency, Driving Standards Agency, Highways Agency, Maritime and Coastguard Agency, Vehicle and Operator Services Agency, Vehicle Certification Agency.

[footnote 6] This includes an assessment of how the monitoring and evaluation activity will add value to the existing evidence base by: filling key evidence gaps; influencing future decision making; testing out innovative initiatives or pilots; the likely quality of the resulting evidence; and, generating generalisable evidence.

[footnote 7] Lahey, R. (2005) A framework for developing an effective monitoring and evaluation system in the public sector: key considerations from international experience.