Evaluating One Big Thing 2024

Published 10 October 2025

Foreword

‘One Big Thing starts with One Small Change’ was a celebration of innovation in the Civil Service. This evaluation report is a testament to how the initiative has sparked new ideas and insightful conversations, creating genuine building blocks for lasting change in how we work. The positive feedback from colleagues has been overwhelming; it is clear they have embraced this opportunity to learn, collaborate and experiment with new ways of doing things.

What has been particularly encouraging is seeing the principles of applied innovation come to life. It has been great to see so many experiments coming directly from our frontline services and from our commercial, financial, policy and strategy teams from every part of the UK. Every experiment, no matter the outcome, contributed to a clear signal of a broader cultural shift where we are empowered to take managed risks and deliver better outcomes for citizens.

The ambition now is to cement this mindset. Innovation isn’t a one-off event; it is the small improvements and mindset changes that add up to significant impact. It is seeing innovation as everyone’s job. My hope is that the knowledge and skills developed through One Big Thing 2024 will continue to inform our work every day, ensuring this culture of continuous improvement and inquisitiveness becomes the bedrock of how we serve the public.

Jo Shanmugalingam

Permanent Secretary, Department for Transport

Sponsor for One Big Thing 2024

Acknowledgements

We in the One Big Thing team in the Civil Service Strategy Unit would like to pay a special thank you to all One Big Thing participants, particularly:

- All the participants who contributed to evaluation projects.

- The One Big Thing Senior Steering Group, Champions Network, Expert Advisory Group and Project Manager Community.

- The innovation and continuous improvement communities and cross-government forums.

- The Civil Service Learning team, through which we collected evaluation data on the Civil Service Learning platform.

- Dino Ribic and Rikki Bains from Wazoku who built the brilliant One Big Thing platform.

- Colleagues from the Joint Data and Analysis Centre (JDAC) in the Cabinet Office who led and managed the collaborative evaluation presented in this report and built the Smart Survey.

- Analysis in this report has also been provided by colleagues in Wazoku who shared insights into the case studies submitted onto the One Big Thing platform for departmental review.

Executive summary

One Big Thing is an annual initiative for all civil servants to take action around a shared Civil Service reform priority. It focuses on upskilling and culture change priorities: in 2024 the focus was on innovation - ‘One Big Thing starts with One Small Change’.

From October 14th 2024 to February 14th 2025,

161,152 civil servants[footnote 1] completed the online ‘Innovation Masterclass’, a 60-minute e-learning that taught a step-by-step innovation process civil servants can apply in their work.

4,891 team conversations took place where participants reflected on their learning, shared ideas, and agreed on the practical change that they could make as a team.

2,411 case studies of experiments were submitted from 34 public sector organisations.

Overall, results from pre and post surveys and analysis of case studies suggest that OBT 2024 had a generally positive impact across departments.

Civil servants indicated improvements in their understanding of innovation, greater comfort in sharing ideas with their managers and teams, and increased recognition of the value of innovation to the Civil Service.

Why innovation?

One Big Thing was a natural catalyst for the Civil Service innovation mission and was designed to help tackle the most common challenges to innovation in government.

One Big Thing: One Small Change

- Skills - Equip our people with the knowledge and skills to formulate new ideas and develop them to deliver value

- Incentives - Motivate our people to take risks and explore new ideas

- Empowerment - Foster an environment where our people feel comfortable to speak up with no fear that they will be penalised for taking risks

- Access to time, money and space - Address the current processes and structures of government that inhibit innovation to exploit creativity in the system

Alongside calls from the Chancellor of the Duchy of Lancaster and the Cabinet Secretary for the Civil Service to inject innovation into service delivery, the decision to focus on innovation was also backed by data on public and civil servant perceptions:

75% of civil servants said more support from leadership to pursue new ideas would do most to boost innovation. (Civil Service Data Challenge)

Only 30% of the public thought that a department would be likely to adopt an innovative idea to improve a public service, compared to 40% in 2022. (OECD Trust Survey)

Only 43% of civil servants felt equipped with the knowledge and skills to formulate new ideas and develop them to deliver value. (Civil Service innovation benchmarking exercises)

What was One Big Thing 2024?

The objectives of One Big Thing 2024 were to:

- LEARNING

- Equip civil servants with the knowledge and skills to formulate new ideas and develop them to deliver value.

- All civil servants were invited to complete the Innovation Masterclass - available on Civil Service Learning.

- TEAM CONVERSATIONS

- Build towards a culture where our people feel comfortable and encouraged to experiment, test and implement ideas.

- All civil servants participated in a team conversation to share ideas for a ‘small change’.

- EXPERIMENTATION

- Enable civil servants to experiment with small changes to realise tangible benefits and demonstrate that innovation is accessible to all.

- Teams experimented with delivering the agreed small changes and logged outcomes on the One Big Thing platform.

Evaluation objectives

The evaluation assessed whether OBT 2024 met its aim to empower individuals and teams to spend time not only learning about the innovation process but also putting learning into practice by experimenting and making small, tangible improvements to how they operate.

The evaluation combined a process assessment, covering how well equipped individuals and teams felt in their ability and opportunity to be innovative, with an impact assessment based on tangible changes evidenced through case studies.

The approach involved analysing completion rates of the Innovation Masterclass through the Civil Service Learning data dashboards, Pre & Post Survey data on feedback and team discussions through Smart Survey, and Case studies from the Wazoku Platform. Techniques included analysis of quantitative data from dashboards and surveys and of qualitative case study documentation to fulfil the evaluation objectives.

It is well established in academic literature that culture change, particularly a lasting culture of innovation and experimentation, is not achieved overnight but requires a systematic approach over a prolonged period of time (Kotter, 1995)[footnote 2]. At a macro level, the only way to test whether achieving participation in the three components of OBT 2024 would lead to lasting changes in culture, where innovation is embedded as part of Civil Service ways of working, is to look for a shift in the following People Survey metrics between 2024 and 2025:

- ‘I believe I would be supported if I try a new idea, even if it may not work’ (% strongly agree or agree)

- ‘The people in my team are encouraged to come up with new and better ways of doing things’ (% strongly agree or agree)

Since the Civil Service People Survey is run towards the end of the calendar year, and results are not published until the following January, this report largely relies on sentiment data captured during the OBT24 period (October 2024 to February 2025) to inform its conclusions.

Approach

The analytical approach was limited to evaluating short and medium term outputs of OBT 2024 and focused on the research questions below. Long term measures of lasting cultural change are not within the scope of this report and will be followed up through the People Survey, which ran just before the launch of OBT 2024 and will provide a baseline for comparison in 2025.

Research Question 1: Do civil servants feel they have the skills to formulate new ideas and develop them to deliver value?

a. Representation of civil servants engaging in Innovation Masterclass.

b. The pre survey determined a baseline for civil servants’ skill level and knowledge to formulate new ideas ahead of the Masterclass. The post survey was used to follow up on whether civil servants felt more equipped having completed the Masterclass.

Research Question 2: Do civil servants feel comfortable and encouraged to experiment, test and implement ideas?

c. Responses to this pre survey question were used to establish a baseline of whether civil servants felt they had the space and opportunity to be innovative and a post survey followed up if the team conversations had aided this.

Research Question 3: Do civil servants feel enabled to deliver small changes to realise benefits through innovation as defined in OBT 2024?

d. This post survey question considered whether civil servants felt they are now enabled to deliver small changes.

e. Case studies of experiments conducted were used to understand how successful civil servants have been in delivering a small change to realise benefits.

Measurement tools

Tools include the Civil Service Learning data dashboards, SmartSurvey, and the One Big Thing Platform to track engagement levels, participation rates, and completions of the Innovation Masterclass. A list of all survey questions used can be found in Appendix A.

The evaluation focussed on:

- Measuring whether civil servants feel more equipped to formulate new ideas and develop them to deliver value.

- Measuring changes in civil servant views of emphasis/importance placed on innovation in the Civil Service e.g. whether civil servants see innovation as being relevant to their role and whether they feel they have the time and space to innovate, as a result of e-learning and team conversations.

- Measuring whether managers and team leaders feel they have the practical skills, tools and knowledge to talk about innovation with their team and set a culture where innovative behaviour is promoted and valued.

- Recording case studies of small changes civil servants have experimented with - what the impact was and what they learned.

- Gathering feedback on the design and approach of One Big Thing more broadly e.g. what they liked the most and what could be improved.

- Uptake rates of other, similar innovation-focussed training on Civil Service Learning.

Our evaluation gathered data at each stage of the 3 components to help us assess whether OBT 2024 had met its objectives by using the following data sources.

1. Equip civil servants with the knowledge and skills to formulate new ideas and develop them to deliver value.

- 161,152 Innovation Masterclass Completions

- 151,869 Pre Survey Responses on Civil Service Learning

- 60,404 Post Survey Responses on Civil Service Learning

2. Build towards a culture where our people feel comfortable and encouraged to experiment, test and implement ideas.

- 4,891 Team conversations

- 151,869 Pre Survey Responses on Civil Service Learning

- 60,404 Post Survey Responses on Civil Service Learning

3. Enable civil servants to experiment with small changes to realise tangible benefits and demonstrate that innovation is accessible to all.

- 2,411 Case studies submitted onto the OBT Platform built by Wazoku

Data Analysis & Findings

Over 150,000 civil servants responded to at least one of the “One Big Thing 2024” surveys. The results reveal a generally positive attitude among participants regarding the event’s overall value, relevance, and applicability to their roles. A substantial majority:

- expressed a clear understanding of innovation;

- recognised its importance in their work; and

- felt comfortable sharing ideas and being creative.

The analysis has been organised in three sections, each representing one of the One Big Thing 2024 objectives.

Objective 1: Equip civil servants with the knowledge and skills to formulate new ideas and develop them to deliver value

The mechanism for achieving this objective was the Innovation Masterclass, the online training on the Civil Service Learning (CSL) platform.

- 161,152[footnote 3] civil servants completed the online ‘Innovation Masterclass’, representing 39.2% of in-scope civil servants.

- Top performing departments in terms of percentage of Masterclass completion were the Ministry of Justice (78.1%)[footnote 4], Department for Transport (72.6%), and the Department for Work & Pensions (64.2%).

- 2,300[footnote 5] Senior Civil Servants completed the Masterclass.

- Over 60,000 civil servants in operational delivery professions completed the Masterclass

Improved understanding of what innovation means (Question 4. i. - Upskill)

93% (up 5 ppts) of respondents agreed in the post survey that they have a clear understanding of what innovation means, compared to the baseline of 88%[footnote 6] in the pre-survey, with those “Strongly Agreeing” rising from 18% to 35%.

Increased recognition of innovation’s relevance in work (Question 4. ii. - Upskill)

88% (up 3 ppts) of respondents agreed that innovation was relevant to their work, after completing the masterclass, an increase of 3 ppts compared to the baseline of 85% in the pre-survey[footnote 6], with those “Strongly Agreeing” rising from 21% to 32%.

Increased ability to apply the innovation process (Question 4. iii. - Upskill)

88% (up 36 ppts) of respondents agreed that they had a good understanding of how to apply the stages of the innovation process at work after completing the masterclass, compared to 52%[footnote 6] in the pre-survey.

One Big Thing was a valuable exercise (Question 8. i.)

76% of respondents agreed to some extent that the event was valuable. While the majority found it beneficial, 15% were neutral, and 9% disagreed to some extent, suggesting room for improvement in engaging all participants fully.

The One Big Thing content was relevant to my role (Question 8. ii.)

75% of respondents agreed to some extent that the content was relevant to their roles. However, with 16% neutral and 10% disagreeing, there is an opportunity to better tailor content for participants in specific work areas.

I feel confident to apply learning from this training in my role (Question 8. iii.)

77% of participants felt confident in applying what they learned. Although most feel equipped, 16% were neutral and 7% disagreed, indicating that additional support or follow-up could benefit those less confident.

Objective 1 Findings

- One Big Thing 2024 was generally an effective vehicle for equipping civil servants with the skills and tools to find innovative solutions to problems at work, with a specific focus on:

- Improved understanding of the innovation process.

- Demonstrating the importance of innovation in relation to roles of civil servants.

- Across the 5,250 responses, there was strong overall positive sentiment towards the One Big Thing training across various departments and widespread satisfaction with its delivery.

Figure 1: Sentiment Analysis - Question 9. “What, if anything, went well about the training?”

| Sentiment | % |

|---|---|

| Negative | 9% |

| Neutral | 18% |

| Positive | 73% |

Objective 2: Build towards a culture where our people feel comfortable and encouraged to experiment, test and implement ideas.

Comfort in sharing ideas (Question 4. iii.- Culture)

88% (up 2 ppts) of respondents agreed to feeling more comfortable sharing ideas with their managers and teams, an increase of 2 ppts compared to the baseline of 86%[footnote 7].

Opportunity to apply the learning (Question 7 - Team Conversations)

83% of respondents agreed to some extent that the team conversation allowed them and their team to apply the learning from the innovation masterclass to an identified problem.

Objective 2 Findings

- One Big Thing Team Conversations were generally a good use of time, with participants reporting they felt:

- Confident applying the principles of the masterclass to their selected problem statement

- Comfortable sharing new ideas with their teams, and crucially, with their manager, indicating a high degree of psychological safety

Figure 2 - Distribution of responses for Question 7. “The Team Conversation allowed me and my team to apply this learning to an identified problem”

| Response | % |

|---|---|

| Strongly Agree | 19% |

| Agree | 50% |

| Partially Agree | 14% |

| Neutral | 14% |

| Partially Disagree | 1% |

| Disagree | 1% |

| Strongly Disagree | 1% |
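As a cross-check, the 83% headline figure for Question 7 appears to be the sum of the three agree options in Figure 2. The short Python sketch below makes that arithmetic explicit. Treating “agreed to some extent” as Strongly Agree plus Agree plus Partially Agree is an assumption, although it matches the published figure.

```python
# Figure 2 distribution (percentage of respondents), as published in this report.
figure_2 = {
    "Strongly Agree": 19,
    "Agree": 50,
    "Partially Agree": 14,
    "Neutral": 14,
    "Partially Disagree": 1,
    "Disagree": 1,
    "Strongly Disagree": 1,
}

# Assumption: "agreed to some extent" covers the three agree options.
AGREE_OPTIONS = ("Strongly Agree", "Agree", "Partially Agree")

agreed_to_some_extent = sum(figure_2[option] for option in AGREE_OPTIONS)
print(f"Agreed to some extent: {agreed_to_some_extent}%")  # 83%
```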

Objective 3: Enable civil servants to deliver small changes to realise tangible benefits and demonstrate that innovation is accessible to all

The impact scores of case studies were consistently high across departments. The graph below presents the average impact score[footnote 8] for the top 20 departments and agencies that submitted more than five case studies. The number displayed on each bar indicates the total number of submissions from that department or agency.

Figure 3 - Average impact score per department with > 5 case studies (top 20 departments and agencies)

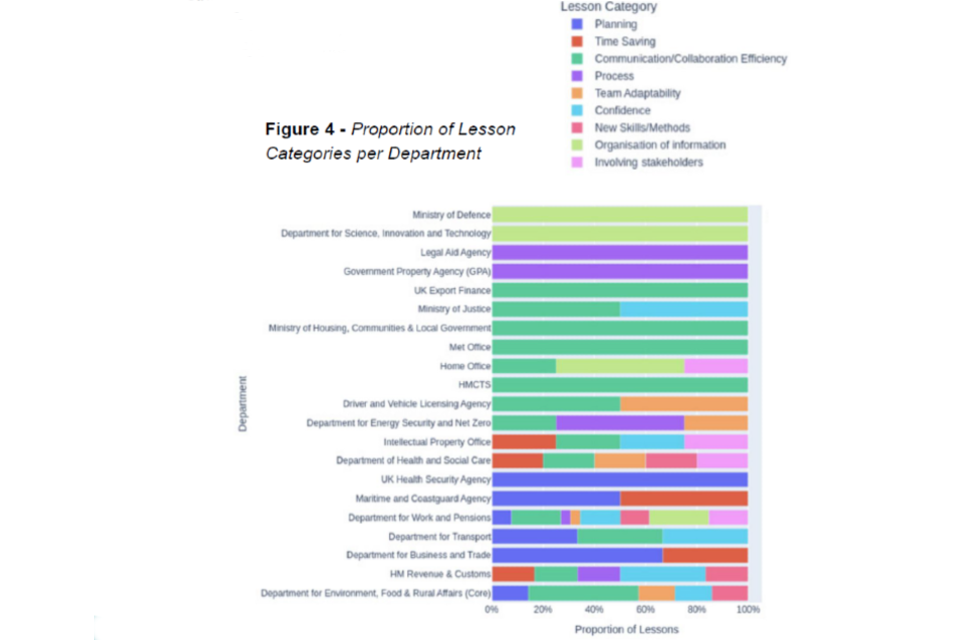

Learning from experimentation is a key part of innovation and civil servants were encouraged to reflect on lessons learned when submitting their case studies. Figure 4 provides an overview of the most common types of lessons and how they were distributed across departments.

Objective 3 Findings

- Almost two thirds of One Big Thing experiments were deemed to be highly replicable by the submitting team, highlighting the value of transparency in sharing experiments on the cross-government OBT platform.

- Experiments were deemed to be impactful, with 34% being linked to productivity gains. The average impact score of experiments from the top 20 departments with the most listed case studies ranged from ‘moderate’ to ‘high’.

- Communication, Confidence and the Organisation of Information were the three main lesson categories identified by teams when submitting case studies of their experiments.

Example One Big Thing experiments include:

HR innovation: The Civil Service Strategy Unit’s (CSSU) ‘Internal first by default’ experiment introduced a dynamic ‘Flexpool’ system, enabling workstream leads to tap into a pool of skilled and motivated individuals within the CSSU for short-term assignments.

850 million views: Met Office’s ‘Creative Headspace’ sessions sparked digital content that engaged audiences at scale.

AI-powered productivity: Government Communications Service halved note-taking time with a new approach to AI-generated meeting notes.

Topic Modelling Analysis to the question ‘Was there anything that could have been improved?’

One of the final post-survey questions involved a free-text box where learners were asked if there was anything that could have been improved with the OBT initiative.

Responses were grouped into underlying themes, which suggest that learners critically evaluated various dimensions of the OBT initiative, ranging from how content is engaged with, to how well it fits within organisational frameworks and real-world applications.

Figure 5 - Topic analysis of responses for Question 10. Was there anything that could have been improved?

| Response | % |

|---|---|

| Content Engagement and Learning Styles | 17% |

| General Feedback and Constructive Criticism | 22% |

| Implementation and Real-world Application | 22% |

| Organisational Culture and Innovation Barriers | 19% |

| Relevance and Target Audience | 20% |

Quality Assurance

Quality assurance of the surveys was carried out internally by the Joint Data and Analysis Centre (JDAC) and case study data findings were quality assured by Wazoku. The quality assurance was structured as follows:

- Data Collection:

- Various data sources were utilised, including structured data from surveys via SmartSurvey and case studies via Wazoku Platform.

- Processes were implemented to ensure accuracy in the extraction of Survey data and integration into spreadsheet datasets.

- Data Analysis:

- Statistical techniques were employed to validate Survey findings focusing on hypothesis testing to examine differences between pre and post outcomes.

- Sentiment analysis and topic modelling of free text Survey questions faced challenges like ambiguity and lack of context, which were acknowledged and factored into the interpretation of results.

- Thematic analysis was performed using a variety of automated Natural Language Processing methods on case studies recorded in Wazoku. Quality assurance was incorporated through an iterative approach that coupled the automated analysis with manual and semi-manual evaluation and intervention.

- Verification:

- Internal quality assurance processes were carried out by JDAC to verify the accuracy and consistency of survey and case study data.

- Regular reviews and cross-checks ensured the reliability of the data before it was integrated into the evaluation.

- Reporting:

- Efforts were made to provide clear and accurate reports that reflect the true nuances of the data.

Lessons Learned

We conducted lessons learned sessions with departmental One Big Thing Champions and project managers immediately after the end of OBT2024 and have presented the key themes arising in those sessions below:

1. Ways of working

Open and early engagement with departments is crucial for mobilising the necessary resources and allowing an opportunity to tailor the departmental offer.

- Engage earlier with ALBs to get them on board and have a specific point of contact.

- Notify departments at the earliest opportunity of the scope, intention, and desired outcome of OBT to stand up appropriate resources.

- Continue to run the fortnightly X-CS Working Group meetings and ensure they are chaired by departments.

2. Flexibility

Effective collaboration and flexibility are needed during testing, in particular for larger departments with high volumes of feedback to process.

- Continue to ensure all departments are sighted early on plans and are represented in forums for providing feedback on component development.

3. Comms and engagement

The sharing of best practice and lessons from comms campaigns between departments e.g. via Basecamp was highly valuable and underlined the importance of keeping terminology simple.

- Look into making the comms assets shared via Basecamp editable by departments.

- Explore having a dedicated departmental comms lead.

- Ensure project teams are always copied into direct central communications to Permanent Secretaries.

4. Three-component structure

Simplicity is paramount. The simpler the user journey, including structure, processes, sources of information/platforms and timings, the easier it is to drive engagement and participation.

- Factor in the time commitment required for multiple components when planning the duration of OBT.

- Ensure that e-learning is kept short, clearly structured and developed in partnership with experts and departments.

5. Reflect and evaluate success

Holding an open, transparent lessons learned exercise with delivery partners following the end of the initiative is a good way of recording feedback and celebrating success.

- Continue to invite all stakeholders to participate in a lessons learned exercise at the end of OBT to reflect on both the design and delivery of the offer.

Case studies

One Big Thing 2024 inspired a series of smart, scalable experiments that are transforming how the UK government works, from automating time-consuming tasks to rethinking how teams collaborate. 2,411 case studies of documented experiments across 34 public sector organisations were recorded. Our top ten scalable case studies can be found in ‘Celebrating a wave of innovation: Scalable experiments from the One Big Thing initiative’.

Faster justice: Covid Inquiry Unit boosted document processing from 152 to 392 exhibits per hour, helping surface evidence more quickly.

Smarter support for families: A new digital referral form in family law has improved case handling and reduced delays for those in need.

850 million views: Met Office’s ‘Creative Headspace’ sessions sparked digital content that engaged audiences at scale.

Time-saving tools: Power BI automation in the Department for Environment, Food and Rural Affairs saves 33 hours a month and boosts data insight.

AI-powered note taking: The Government Communications Service (GCS) developed a comprehensive guide for using AI to generate standardised meeting notes, resulting in a 50% cut in note-taking time.

Limitations and Challenges

Reliance on self-reporting

Most findings in the report are based on responses to pre and post-surveys, team conversations feedback, and submitted case studies. Self-assessment data can have limitations including:

- Variability amongst respondents in how they may interpret a concept like “innovation”.

- Social desirability bias, where respondents may accentuate what they deem as positives while down-playing what could be perceived as being negative in an effort to be viewed more favourably.

Variation in implementation

Departments and agencies may have differed in how they engaged with, delivered or supported OBT (e.g. time allocated for participating in OBT, degree of senior leadership involvement). This could introduce variability in respondent experience and, therefore, outcome data.

Long-term outcomes

A key limitation of this evaluation is the reliance on sentiment and self-reporting during the OBT period rather than longer-term impact data such as People Survey results, which are not released until the end of 2025.

Also, to preserve anonymity, there was no longitudinal tracking of individuals or teams to assess whether changes in attitudes or behaviour persist beyond the OBT period or have translated into measurable work improvements.

While reported outcomes show an improvement in the understanding of and attitudes towards “innovation”, this evaluation is unable to provide definitive evidence of sustained changes in behaviour or attitudes beyond the immediate term. Current academic literature suggests that with active leadership and consistent enforcement, it could take more than five years for cultural change to be embedded in an organisation the size of the Civil Service.

Conclusion

Incremental Changes Lead to Significant Impact

- As highlighted in our Innovation Masterclass, the majority of ‘lightbulb moments’ arise from consistent iteration of existing processes. The recurring themes in our case studies - such as collaboration and team efficiency - demonstrate that small adjustments in the processes we work with day in, day out, can accumulate and drive benefits for both civil servants and the citizens we serve.

Just the beginning, not the end of a focus on innovation

- The call for civil servants to experiment and innovate did not stop on 14 February 2025. Instead, One Big Thing has been the start of an innovation culture drive across the Civil Service, with departments running their own ‘OBT inspired’ initiatives to support civil servants with innovative ideas and encourage cultures where continuous improvement is rewarded and recognised.

The legacy of One Big Thing 2024

- Ultimately, the cumulative impact of the 2,411 case studies holds great potential for remarkable change. One Big Thing 2024 has successfully demonstrated that innovation is not merely a one-off event or a sweeping overhaul; it is often simply small improvements or shifts in mindset that collectively yield significant impact.

Appendix A: Pre Survey Questions

| Number | Question | Response Options |

|---|---|---|

| 1 | What Department or Agency do you work for? | Dropdown selection |

| Only applicable in some instances | Please select the part of the organisation you work in | Dropdown selection |

| 2 | What Grade are you? | Dropdown selection |

| 3 | Please select the area of work that is most relevant to your job | Dropdown selection |

| 4 | To what extent do you agree or disagree with the following statements? i) I have a clear understanding of what innovation means ii) I think innovation is relevant to my work iii) I feel comfortable in sharing new ideas with my manager and/or team and being creative in my day-to-day role iv) I know how to apply the stages of the innovation process | Strongly Agree, Agree, Partially Agree, Neutral, Partially Disagree, Disagree, Strongly Disagree |

| 5 | Are you a line manager, team leader or facilitator for the team conversation component of One Big Thing? If yes, please proceed to answer the remaining questions in this survey. If no, your survey responses will be submitted. | Yes, No |

| Only applicable if ‘yes’ is selected for question 5 | (As a line manager, team leader or facilitator) Please rate to what extent you agree or disagree with the following statements i) I can foster an inclusive, empowering environment for my team to share new ideas. ii) I feel equipped to run a team conversation on innovation. | Strongly Agree, Agree, Partially Agree, Neutral, Partially Disagree, Disagree, Strongly Disagree |

Appendix B: Post Survey Questions

| Number | Question | Response Options |

|---|---|---|

| 1 | What Department or Agency do you work for? | Dropdown selection |

| Only applicable in some instances | Please select the part of the organisation you work in | Dropdown selection |

| 2 | What Grade are you? | Dropdown selection |

| 3 | Please select the area of work that is most relevant to your job | Dropdown selection |

| 4 | To what extent do you agree or disagree with the following statements? i) I have a clear understanding of what innovation means ii) I think innovation is relevant to my work iii) I feel comfortable in sharing new ideas with my manager and/or team and being creative in my day-to-day role | Strongly Agree, Agree, Partially Agree, Neutral, Partially Disagree, Disagree, Strongly Disagree |

| 5 | Have you taken part in the ‘Innovation Masterclass’ training? | Yes, No |

| Only applicable if ‘yes’ is selected for question 5 | To what extent do you agree or disagree with the following statement on the Innovation Masterclass training? The online training - “Innovation Masterclass” has given me a good understanding of how to apply the stages of the innovation process | Strongly Agree, Agree, Partially Agree, Neutral, Partially Disagree, Disagree, Strongly Disagree |

| 6 | Are you a line manager, team leader or facilitator for the Team Conversation component of One Big Thing? | Yes, No |

| Only applicable if ‘yes’ is selected for question 6 | (As a line manager, team leader or facilitator) Please rate to what extent you agree or disagree with the following statements i) I can foster an inclusive, empowering environment for my team to share new ideas. ii) I feel equipped to run a team conversation on innovation. | Strongly Agree, Agree, Partially Agree, Neutral, Partially Disagree, Disagree, Strongly Disagree |

| 7 | Have you taken part in the One Big Thing “Team Conversation”? | Yes, No |

| Only applicable if ‘yes’ is selected for question 7 | Please rate to what extent you agree or disagree with the following statement about the Team Conversation. The Team Conversation allowed me and my team to apply this learning to an identified problem. | Strongly Agree, Agree, Partially Agree, Neutral, Partially Disagree, Disagree, Strongly Disagree |

| 8 | Please rate to what extent you agree or disagree with the following statements about One Big Thing i) One Big Thing was a valuable exercise. ii) The content was relevant to my role. iii) I feel confident to apply learning from this training in my role. | Strongly Agree, Agree, Partially Agree, Neutral, Partially Disagree, Disagree, Strongly Disagree |

| 9 | (Optional. Max 250 Characters) What, if anything, went well about the training? | Free-text |

| 10 | (Optional. Max 250 Characters) Was there anything that could have been improved? | Free-text |

Appendix C: Methodology & Workings

Hypothesis testing for two population proportions

This analysis helps determine whether the difference between two proportions (e.g., pre-survey and post-survey responses) is statistically significant, or likely due to random variation.

- Type of Test: Z-test for two proportions, used to test whether there is a significant difference between the two proportions being compared.

- Significance Level: 0.05, meaning there is a 5% risk of concluding that a difference exists when there is none.
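For reference, a standard pooled form of the two-proportion z statistic is sketched here. The report does not state the exact formula used, so this form is an assumption. The symbols are introduced for illustration only: $\hat{p}_1$ and $n_1$ denote the pre-survey proportion and sample size, and $\hat{p}_2$ and $n_2$ the post-survey equivalents.

$$
z = \frac{\hat{p}_1 - \hat{p}_2}{\sqrt{\hat{p}\,(1-\hat{p})\left(\frac{1}{n_1} + \frac{1}{n_2}\right)}},
\qquad
\hat{p} = \frac{n_1\hat{p}_1 + n_2\hat{p}_2}{n_1 + n_2}
$$

Under this convention an increase from pre-survey to post-survey gives a negative $z$, which would be consistent with the sign of the Z-scores reported in this appendix.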

| Aspect/Question | Pre-survey Proportion (%) | Post-survey Proportion (%) | Z-Score | P-Value | Statistical Significance |

|---|---|---|---|---|---|

| Understanding of Innovation | 88% | 93% | -29 | p < 0.0001 | Yes |

| Relevance to Work | 85% | 88% | -16 | p < 0.0001 | Yes |

| Application of Innovation Process | 52% | 88% | -125 | p < 0.0001 | Yes |

| Comfort in Sharing Ideas | 86% | 88% | -11 | p < 0.0001 | Yes |

The statistical significance tests revealed that all observed increases in respondent agreement across the four survey aspects were statistically significant, indicating that the improvements are unlikely to be due to random chance.
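The test summarised above can be sketched in Python as follows. This is a minimal illustration rather than the analysts' actual workings: it assumes the pooled form of the z statistic, uses the rounded percentages from the table, and takes the total pre and post survey response counts (151,869 and 60,404) as the sample sizes. Because the exact per-question counts are not given in this report, the output is not expected to reproduce the tabulated Z-scores exactly. The function name `two_proportion_z_test` is hypothetical.

```python
from math import erfc, sqrt


def two_proportion_z_test(p1: float, n1: int, p2: float, n2: int) -> tuple[float, float]:
    """Pooled two-proportion z-test. Returns (z, two-sided p-value)."""
    # Pooled proportion under the null hypothesis that both groups share one rate.
    pooled = (p1 * n1 + p2 * n2) / (n1 + n2)
    standard_error = sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / standard_error
    # Two-sided p-value from the standard normal distribution.
    p_value = erfc(abs(z) / sqrt(2))
    return z, p_value


# Assumed inputs: rounded proportions from the table and total survey
# response counts; the true per-question sample sizes may differ.
PRE_RESPONSES = 151_869
POST_RESPONSES = 60_404
ALPHA = 0.05

z, p_value = two_proportion_z_test(0.88, PRE_RESPONSES, 0.93, POST_RESPONSES)
print(f"z = {z:.1f}, significant at {ALPHA}: {p_value < ALPHA}")
```

A negative z here simply reflects the pre-minus-post ordering of the two proportions; the p-value is what is compared against the 0.05 significance level.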

1. 39.2% of in-scope civil servants. This compares to 82,000 bookings across the CSL catalogue over the first quarter of 2023, or to CSL’s most popular course (the induction) with 17,000 enrolments.

2. Kotter, J. P. (1995). Leading Change: Why Transformation Efforts Fail. Harvard Business Review, 73(2), 59-67.

3. 10,449 completions were recorded via departments’ own learning platforms or as ‘accessible’ completions in a group setting or via hard copies of the learning.

4. Includes 2,217 group completions whereby staff undertook the learning as a team due to limited access to laptops and/or other appropriate tech to log in to CSL individually.

5. Includes SCS completions of the Innovation Masterclass on Future Learn.

6. The statistical significance tests concluded all observed increases were statistically significant, indicating that the improvements are unlikely to be due to random chance.

7. The statistical significance tests concluded that observed increases were statistically significant, indicating that the improvements are unlikely to be due to random chance.

8. Impact scale: 1 = No impact, 2 = Minimal impact, 3 = Moderate impact, 4 = High impact.