Electronic Monitoring in the Criminal Justice System

Published 31 August 2023

Applies to England and Wales

1. Purpose

Electronic Monitoring (EM) is a valuable tool with flexible and wide-ranging potential both to strengthen offender management in the community and to drive the broader criminal justice priorities of reducing reoffending and protecting the public. EM can be imposed on individuals in various circumstances, including defendants on court bail, offenders serving a community sentence, prison-leavers on Home Detention Curfew, and prison-leavers on licence.

The EM Strategy was the first in a series of documents published as we progress our EM Expansion Programme and develop our EM Target Operating Model. This document provides an update on the steps we have taken to:

- improve data collection and analysis in tagging services;

- monitor the delivery of benefits; and

- build the evidence base for the impact of tagging on reoffending and offenders’ diversion from prison.

In publishing this document, we are fulfilling one of the recommendations made by the Public Accounts Committee in its September 2022 report.

1.1 Summary of progress

Data improvement:

Since October 2022, we have made progress in several areas to improve EM data, including the way we collect, access, use and share it to gain greater insight. We explain the progress made to date and how we will continue to build on it to meet our strategic objectives.

Benefits management:

It is widely acknowledged that a robust and transparent approach is required to monitor the delivery of benefits as part of the EM Expansion Programme. We set out current progress and explain how this approach will be developed further.

Building the evidence base:

Evidence of the impact of EM on reoffending and other outcomes is limited. Relatively few studies reliably measure the impact of EM, and some suggest that its impact is heavily context-dependent. We explain the evaluation approach that the EM Expansion Programme has developed to improve the evidence base for the impact of tagging on reoffending and offender behaviour.

2. Data improvement plan

2.1 Introduction

In this section, we will set out:

- The current state of EM data, and why improvement is vital

- The 11 areas of work that make up our data improvement plan, and what outcomes or benefits we expect in each area

- A summary of our progress to date

- What we expect to achieve in the next two years

2.2 The current state of EM data – challenges and opportunities

Electronic Monitoring (EM) is a valuable tool with flexible and wide-ranging potential to both strengthen offender management in the community and to drive broader criminal justice priorities of reducing reoffending and protecting the public.

We collect EM data on the operation of EM services, device-wearer compliance, and tag performance. Across the wider criminal justice system, data relating to EM is collected in Probation, Court and third-party systems. Our ability to collect, access and use quality data effectively to support decision-making is vital to the delivery of court-ordered community orders, court bail, post-release licence conditions, and the supervision of foreign national offenders.

If we want to change how effectively we use EM to help reduce reoffending and protect the public, we need to develop a deeper understanding of the device-wearer journey through the justice system (and across other services). Constraints on linking data between supplier and MoJ systems limit insight into device-wearer characteristics, offence history and outcomes. Better-quality data increases productivity, reduces duplication of effort and helps identify opportunities to innovate.

The recent investment in building the evidence base for the effectiveness of EM makes access to, and control of, the right data even more important. We need to understand which type of EM works for whom, when and why. Improved EM data quality, management and analysis offer a significant opportunity to drive our operational priorities.

The EM caseload is expected to continue rising over the next few years, leading to larger and more diverse datasets for collection and analysis. New supplier contracts will be in place from 2024, and with these the Ministry of Justice can take more control over which data is collected and how it is accessed.

2.3 Overview

The EM Data Improvement Plan builds on and aligns with the MoJ Data Strategy, set out in "Becoming a Truly Data Led Justice System" on the Justice Digital blog (blog.gov.uk).

The EM Data Improvement Plan has been developed with extensive stakeholder engagement and is primarily based on the findings of the EM Data Discovery review carried out by the Data Improvement team (September 2021), the Justice Digital EM Data Discovery (May 2022) and the EM Data Governance Review (June 2022). The plan also supports implementation of some of the recommendations made in the June 2022 NAO report and the January 2022 HMIP thematic inspection.

The EM Data Improvement Plan will help to realise the strategic ambition for EM to be a data-driven service. We have invested in additional resources across the data, analytical and digital functions within MoJ to support delivery of the plan.

2.4 Areas of focus

We have identified 11 areas of focus that will guide the work of our data improvement plan. The table below summarises what outcome or benefit we expect to achieve through the work in each area:

| Area of Focus | Expected outcome/benefit |
|---|---|
| Design and Implement EM Data Governance Processes | Clear escalation routes to fix data issues. Improved definition and understanding of EM data, what it can be used for and how it can be shared. |
| Establish a Data Quality Framework for EM Data | Data outputs are of high quality and can be used to provide meaningful analysis and insights. Increased automation of reporting products and less time spent on data cleansing. |
| Create and maintain EM Data Documentation | EM data is documented and catalogued, making it easier to understand, locate and connect. |
| Enable the required access to EM Data | Data is accessible to those who need it to inform all levels of decision making, analysis and reporting. |
| Build automation tools and Application Programming Interfaces (APIs) for EM data collection and transfer | A single source of truth for EM data, from which all data products are derived. |
| Develop and manage an EM Analytical Plan | An EM analytical framework in place to drive insight across key priority areas. Reduced duplication of analysis requests. |
| Develop Management Information, statistics, performance metrics and forecasts for EM | Improved reporting on the operational delivery and impact of EM that supports continuous improvement. Well-informed policy, strategic and operational decisions. |
| Implement EM Evaluation Plan | Evaluation evidence that drives improvements in outcomes and supports investment decisions, demonstrating accountability for public spending. |
| Perform data exploration and linking of EM datasets | An analytical model to drive insight into EM cohorts. Monitoring technology and algorithms can be developed from the data to better support rehabilitation. |
| Develop and deliver a plan to share EM data and insight | Data and insight are shared and communicated effectively with key stakeholders, analysts and front-line practitioners on the effectiveness of EM. |
| Promote an EM data culture and increase data literacy | Improved offender management, as staff using EM data and insights are well trained and confident to access, interpret, question and use the data and information we hold. |
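To illustrate what a basic check under a data quality framework might look like, the Python sketch below measures how complete a set of priority fields is across a batch of records and flags fields that fall below a threshold. It is a hypothetical example only: the field names (`device_wearer_id`, `order_type`, `curfew_start`) and the 95% pass threshold are assumptions, not values taken from the EMFS Data Dictionary or any MoJ standard.

```python
from dataclasses import dataclass

# Hypothetical priority fields, for illustration only (not from the EMFS Data Dictionary).
PRIORITY_FIELDS = ("device_wearer_id", "order_type", "curfew_start")


@dataclass
class FieldQuality:
    field: str
    completeness: float  # share of records with a non-empty value for this field
    passes: bool         # True if completeness meets the threshold


def completeness_report(records, fields=PRIORITY_FIELDS, threshold=0.95):
    """Measure how complete each priority field is across a list of dict records."""
    total = len(records)
    report = []
    for name in fields:
        # Treat missing keys, None and empty strings as incomplete.
        filled = sum(1 for record in records if record.get(name) not in (None, ""))
        share = filled / total if total else 0.0
        report.append(FieldQuality(name, share, share >= threshold))
    return report


if __name__ == "__main__":
    sample = [
        {"device_wearer_id": "A1", "order_type": "bail", "curfew_start": "19:00"},
        {"device_wearer_id": "A2", "order_type": "", "curfew_start": "19:00"},
    ]
    for row in completeness_report(sample):
        print(f"{row.field}: {row.completeness:.0%} complete, passes={row.passes}")
```

Completeness is only one dimension of data quality; a fuller framework would typically also check validity, timeliness and consistency across systems.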

2.5 Progress to date

We have:

- Developed robust data requirements in new supplier contracts to allow the Authority to have more control over EM data

- Established an EM Data Governance Board to manage and govern EM Data

- Developed the methodology to link EM Data to Probation and other data sets, enabling analysis of specific cohorts

- Created an EM Future Service (EMFS) Data Dictionary setting the scope, comprehensiveness and quality of data based on user needs

- Defined high-level EM data use cases to inform analytical plans and roadmaps

- Provided high-level insights into the use of EM and the profile of device-wearers for certain cohorts

- Established a regular schedule of management information for statistical publications

- Designed EMFS Performance Metrics to allow for effective management of supplier performance
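The linking methodology itself is not set out here. As a minimal, hypothetical sketch of the simplest form of record linkage, the Python below matches EM records to probation records on a shared identifier after light normalisation, and holds back any record with zero or several candidate matches for review. The identifier name `person_id` and the function names are assumptions; real linkage between supplier and MoJ systems may instead rely on probabilistic matching across several fields rather than a single exact key.

```python
from collections import defaultdict


def normalise(identifier: str) -> str:
    """Trim whitespace and upper-case an identifier so trivial formatting differences still match."""
    return identifier.strip().upper()


def link_records(em_records, probation_records, key="person_id"):
    """Exact-match linkage on a hypothetical shared identifier.

    Returns (linked, unresolved): linked holds (em_record, probation_record) pairs
    with exactly one candidate match; unresolved holds EM records with zero or
    multiple candidates, to be resolved manually or by other methods.
    """
    index = defaultdict(list)
    for record in probation_records:
        index[normalise(record[key])].append(record)

    linked, unresolved = [], []
    for em in em_records:
        candidates = index.get(normalise(em[key]), [])
        if len(candidates) == 1:
            linked.append((em, candidates[0]))
        else:
            unresolved.append(em)
    return linked, unresolved


def match_rate(linked, em_records) -> float:
    """Share of EM records that were linked to exactly one probation record."""
    return len(linked) / len(em_records) if em_records else 0.0
```

Holding back ambiguous matches rather than picking one at random trades a lower match rate for fewer false links, which matters when linked data is used to analyse outcomes for specific cohorts.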

2.6 Future activities

By the end of 2023 we expect to have:

- Developed an EM Business Glossary to document definitions for key terms required for priority analytical activities and operational needs.

- Appropriate resources in place with a focus on improving EM data access and analysis.

- EM Data & Digital Roadmap in place and digital services being developed to automate EM data processes.

- Enhanced stakeholder Management Information (MI) dashboards and forecast models to support EM needs.

- Delivered proofs of concept to identify patterns of behaviour such as curfew non-compliance.

- Refreshed our Analytical Plan, which prioritises analytical needs and outlines how we will measure the impact of EM.

- Identified high priority EM data fields/data sets to monitor for data quality.

In 2024 we expect to have:

- Access to live and historical EM data, with insight made available to users and stakeholders in a timely manner as needed.

- An analytical commissioning process which ensures resources are focussed on greatest value.

- Scoped options to build automated tools and Application Programming Interfaces (APIs) to collect and transfer EM Data to limit manual data entry.

- Delivered the analytical plan based on prioritised use cases.

- An established process to link data from EM datasets to MoJ systems to inform the evidence base.

- High quality data in supplier systems with clear feedback loops in place to monitor data quality issues.

- Effective data management and data governance embedded to allow for clear escalation of data issues.

- Built pipelines from EM supplier systems to MoJ systems to allow for improved ownership and access to EM data.

- Delivered outcomes for the Acquisitive Crime (AC) expansion pilot and interim, indicative evidence based on historic EM datasets.

In 2025 we expect to have:

- Delivered final outcomes of specified EM evaluation pilots to inform future policy decisions around use of EM.

- APIs and automated tools in place for EM data to enable high-quality data to be collected and used.

- Data science tools incorporated into EM digital services, so that insight is available to aid decision making.

- Developed a data culture within EM so staff value data as an asset and can use it effectively to support decision making.

- Data and insight shared and communicated effectively with key stakeholders, analysts and front-line practitioners.

- Linked data from EMS datasets to MoJ datasets to enable us to track and analyse a device-wearer journey through the justice system.

3. Benefits management plan – progress update

3.1 Introduction

In this section, we set out:

- The purpose of the benefits management plan

- Our approach to identifying, developing and monitoring benefits realisation

- The stakeholders we have engaged with

- What work we have completed to date

- What we still need to do

- What the final output will look like

3.2 Purpose of the benefits management plan

- To identify the benefits of Electronic Monitoring (EM).

- To ensure all benefits meet the Infrastructure and Projects Authority (IPA) definition

A benefit can be defined as the measurable improvement resulting from an outcome perceived as an advantage by one or more stakeholders, which contributes toward one or more organisational objectives

(Guide for Effective Benefits Management in Major Projects, Infrastructure and Projects Authority, 2017).

3.3 Approach

Our approach to benefits management follows best practice as set out by the IPA. The overarching approach is to:

- Agree strategic objectives, drivers and approach

- Deliver workshops with EM stakeholders to identify a long list of benefits

- Validate benefits, ensuring alignment with strategic objectives, and refine and prioritise them with EM stakeholders

- Explore the data landscape to assess the feasibility of measurement

- Agree the EM Benefit Realisation Plan, with a detailed overview of benefits and timescales for measurement and reporting

- Execute the EM Benefit Realisation Plan

3.4 Stakeholders

Through workshops, breakout groups and individual meetings, the EM programme has engaged with a wide range of stakeholders, from initial feasibility and exploratory activities through to more detailed work.

Our stakeholders include:

- HMPPS Electronic Monitoring programme: Senior Leadership Team, Commercial, Contract Management, Business Change, Data Improvement, Operational Strategy and Policy, Finance, Implementation

- Ministry of Justice (MoJ): Probation, Prisons, Courts, Policy, Data and Analysis, MoJ Digital

- Other Government Departments & Partners: Home Office, Police

3.5 Progress to date

Benefits management strategy

The benefits management strategy outlines how benefits will be managed within Electronic Monitoring, including reporting and responsibilities of stakeholders. It will be refreshed annually by the EM Benefits Realisation Manager in consultation with the EM Senior Leadership Team and stakeholders across the Criminal Justice System.

EM service benefits

Benefits have been identified for the new EM service and included in the full business case. A benefits map and benefits tracker have been developed.

3.6 Future activities

Identification and definition of EM benefits – by end September 2023

A detailed overview of the benefits we will use to assess the impact of the EM programme.

Benefits include:

- showing we are an insight-led service that contributes to reducing re-offending

- improving operational efficiency

- process enhancements to improve data quality

Benefits realisation approach – by end October 2023

The outline benefit realisation approach will be agreed, including:

- strategic drivers

- how benefits are being developed

- benefit ownership

- when identified benefits will start to be realised

- how benefits will be reviewed, including targets and tracking

Exploration of data landscape – by end November 2023

An assessment of the feasibility of benefit measurement based on the availability, access and quality of data will be completed. This will support the formulation of the benefit reporting schedule, defining what can be measured now and in the future.

3.7 Final output

Benefits realisation plan – by end November 2023

- Benefit mapping - Outlining the inputs, outputs, outcomes and interconnections between benefits

- Benefits tracker and schedule - Outlining how benefits will be tracked and reported on

- Benefits reporting and dashboard - Outlining how benefits will be shared with appropriate stakeholders and governance structures

- Benefit profile agreement - Detailed benefit profiles will be agreed for each benefit. Identified benefit owners will be responsible for reporting on, and maximising the value of, each benefit

- Benefit tracking – The process of tracking benefits will be agreed

4. Evaluation approach

4.1 Introduction

The EM Expansion Programme comprises a series of projects implementing new uses of EM, to help build understanding of the effectiveness of processes and the impact of EM.

Four expansion projects are currently the subject of an MoJ evaluation. These encompass a range of EM applications aimed at reducing reoffending associated with acquisitive crime, domestic abuse and alcohol use, and at using EM as a tool to reduce risk should an escalation be identified.

| Project | Purpose |
|---|---|
| Acquisitive Crime | Mandatory GPS tagging of individuals who have served a standard determinate sentence of at least 90 days where the principal offence was acquisitive in nature and specified in The Compulsory Electronic Monitoring Licence Condition Order (2021). The individual must also reside in a qualifying police force area. Electronically monitored tags and conditions are imposed for a maximum of 12 months or until the end of the licence period, whichever occurs first. |
| Alcohol Monitoring on Licence | Alcohol tags applied to prison leavers whose offending and risk are considered to be alcohol-related. There are two licence conditions: total abstinence from alcohol, or a requirement to limit alcohol use. Application reflects circumstances and known risks. Alcohol monitoring tags and licence conditions can be applied for up to 12 months during the licence period and must be reviewed every 3 months to ensure that they remain necessary and proportionate. |
| Domestic Abuse Perpetrators on Licence | EM added at the point of release from prison where domestic abuse is a known risk factor. EM conditions include curfew, exclusion zones, attendance monitoring at appointments, and trail monitoring. Application reflects risk assessment, necessity and proportionality. Electronic monitoring tags and conditions can be imposed for up to 12 months and must be reviewed every 3 months to ensure that they remain necessary and proportionate. |
| Licence Variation | EM used as a variation to a prison leaver’s licence, where this change is considered necessary and proportionate. Electronic monitoring tags and conditions can be imposed for up to 12 months and must be reviewed every 3 months to ensure that they remain necessary and proportionate. |
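The duration rules in the table can be illustrated as a simple date calculation. The Python below is a minimal sketch, not an operational rule set: the function names are hypothetical, and it assumes that "12 months" and "3 months" mean calendar months, with the day clamped to the end of shorter months. Following the table, monitoring ends after 12 months or at the end of the licence period, whichever occurs first, and reviews fall every 3 months before that end date (the table specifies 3-monthly reviews for the Alcohol Monitoring, Domestic Abuse and Licence Variation projects).

```python
import calendar
from datetime import date


def add_months(d: date, months: int) -> date:
    """Add calendar months, clamping the day to the last day of the target month."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def tag_end_date(tag_start: date, licence_end: date, max_months: int = 12) -> date:
    """Monitoring ends after max_months or at licence end, whichever occurs first."""
    return min(add_months(tag_start, max_months), licence_end)


def review_dates(tag_start: date, tag_end: date, every_months: int = 3) -> list[date]:
    """Review points every `every_months` months, strictly before monitoring ends."""
    reviews = []
    n = 1
    # Count each review from the start date (not the previous review) to avoid day drift.
    while (due := add_months(tag_start, n * every_months)) < tag_end:
        reviews.append(due)
        n += 1
    return reviews


if __name__ == "__main__":
    start = date(2023, 1, 31)
    end = tag_end_date(start, licence_end=date(2024, 6, 30))
    print(end, review_dates(start, end))
```

Computing each review date from the tag start, rather than chaining from the previous review, keeps a 31st-of-the-month start from drifting to the 28th or 30th after passing through a short month.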

We will conduct a series of in-depth evaluations of the Expansion Programme projects to understand:

- How EM is working from an operational perspective, and to identify potential improvements

- The extent to which the use of EM supports probation practitioners in managing offenders in the community

- The extent to which the use of EM affects offenders’ compliance with their licence conditions, recalls and reoffending

- The impact of EM on those affected by monitoring, which may include tag wearers, victims, and external agencies

- The most effective use of EM (i.e. how it contributes to managing risk in the community, reducing reoffending or as an appropriate alternative to custody), in what circumstances and with whom

- The costs and benefits of the use of EM as a licence variation for the criminal justice sector

4.2 Wider EM projects

In addition to our planned evaluations of the EM Expansion Programme projects, we plan to use historic caseload data to analyse the impact of EM on outcomes for the pre-existing service cohorts using quasi-experimental techniques. This will include reconviction analysis of the historic community EM cohort.

Separately, a pilot is currently underway of new powers for youth community sentences (introduced in the Police, Crime, Sentencing and Courts Act 2022), including the use of GPS location monitoring, which will explore whether these powers offer a viable alternative to custody.

4.3 EM Expansion Programme evaluation vision

Evaluation works by collecting systematic evidence of what has been achieved and the impact of our activities. We will use evaluation to assess the value of EM, what works and what doesn’t work, how and why.

Our vision is to deliver proportionate, high-quality evaluation that will enable the MoJ to take evidence-based decisions on the ongoing investment in EM services.

To achieve our vision, we aim to:

- Build evaluation around the needs of our stakeholders

- Develop a robust evidence base

- Quality assure all EM evaluation designs

- Share evaluation findings

Aim 1: Build Evaluation Around the Needs of Our Stakeholders

The EM Programme stakeholders include people working in the criminal justice sector, those responsible for decision making, academics, and people affected by EM including tag wearers and victims of crime.

To ensure our evaluations meet our stakeholders’ needs, each evaluation will be underpinned by a Theory of Change model. This will be developed in close collaboration with our stakeholders and will enable us to describe the outcomes we are trying to achieve and how we intend to achieve them.

The Theory of Change model

Evaluation should be fit-for-purpose and genuinely useful to decision makers. Our evaluation approach will be guided by four core principles to ensure this. These centre on evaluation being useful, credible, proportionate and robust.

- Inputs:

What resources are needed for the programme? (e.g. financial, staffing, material) - Activities:

What activities does the programme require? (e.g. service delivery, training, partnership work) - Outputs:

What will the prgramme measure? - Short term outcomes

- Longer term outcomes

- Impact:

What is the overarching ambition for your project? (e.g. protect the public, reduce reoffending)

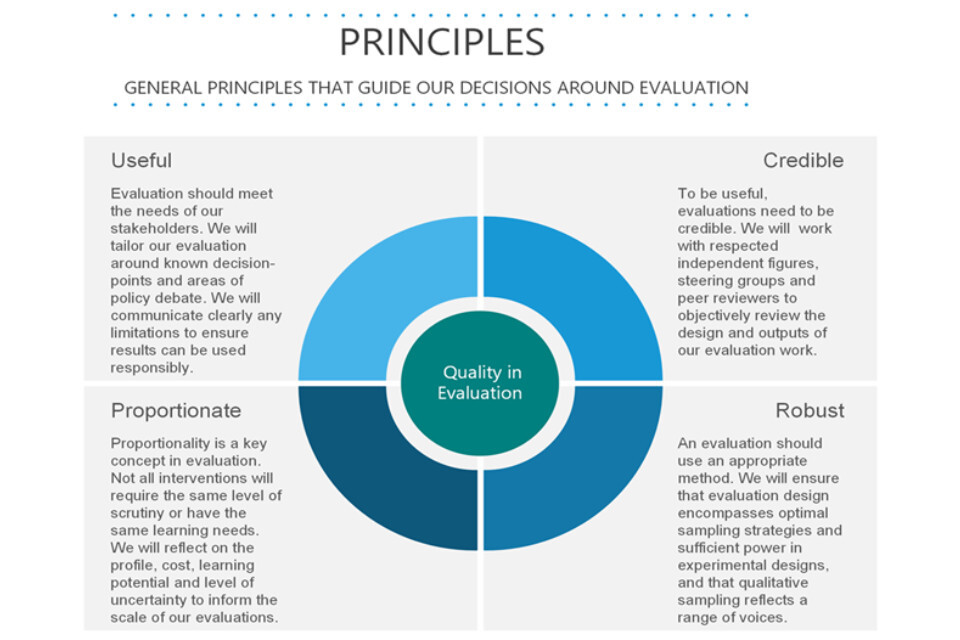

Principles

General principles that guide our decisions around evaluation

-

Useful

Evaluation should meet the needs of stakeholders. We will tailor our evaluation around known decision-points and areas of policy debate. We will communicate clearly any limitations to ensure results can be used responsibly -

Credible:

To be useful, evaluations need to be credible. We will work with respected independent figures, steering groups and peer reviewers to objectively review the design and outputs of our evaluation work -

Proportionate:

Proportionality is a key concept in evaluation. Not all interventions will require the same level of scrutiny or have the same learning needs. We will reflect on the profile, cost, learning potential and level of uncertainty to inform the scale of our evaluations -

Robust:

An evaluation should use an appropriate method. We will ensure that evaluation design encompasses optimal sampling strategies and sufficient power in experimental designs, and that qualitative sampling reflects a range of voices

Evaluation Principles adapted from the Magenta Book, which provides HM Treasury guidance on what to consider when designing an evaluation and must be applied in a manner that meets operational requirements

Aim 2: Develop a Robust Evidence Base

EM has the potential to support the MoJ’s priorities of public protection and reducing reoffending, and to improve the value for money with which the system delivers those priorities. Our approach to developing a robust evidence base for EM centres on learning and accountability. In the immediate term, evaluation can provide evidence to improve the programme being examined. In the longer term, it can provide evidence to inform future policy development and to assess value for money. To develop a robust evidence base, we will follow best practice in evaluation design, as set out in the Magenta Book, and in delivery, as set out in the Green Book. We will adopt the most robust evaluation method that is feasible, drawing on the Nesta Standards of Evidence framework and the Maryland Scientific Methods Scale. Our choice of method will take into account what is achievable in the context of each project and any competing government priorities.

A range of evaluation methods will be adopted to assess the effectiveness and cost effectiveness of EM. The exact research questions for each evaluation and the methods applied will reflect the needs of our stakeholders and the context in which each project is implemented, comprising a combination of Process, Impact and Value-for-Money evaluations.

- Process evaluation: Assess what can be learned from how a programme is delivered to identify what worked well and what could be improved. Process evaluation is typically conducted after a smaller-scale pilot to inform wider roll-out. Methods include collecting and analysing stakeholder perceptions and administrative data

- Impact evaluation: Assess what difference a programme has made and why. Impact evaluations answer questions such as, did the programme achieve its stated objectives? Who did the intervention affect? How did the effects vary across individuals or groups? Impact evaluation is investigated through theory-based, experimental, and/or quasi-experimental approaches

- Value-for-Money evaluation: Assess whether a programme is a good use of resources. Value-for-money evaluation compares the benefits of a programme with its costs or compares the relative costs of a programme and its outcomes to alternative courses of action. This allows us to assess whether the benefits are outweighed by the costs
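The cost-benefit comparison described in the value-for-money bullet can be made concrete with standard appraisal quantities. The formulation below is an illustrative sketch rather than the method these evaluations will necessarily use: $B_t$ and $C_t$ denote monetised benefits and costs in year $t$ of an appraisal period running from $t = 0$ to $T$, and $r$ is the discount rate (HM Treasury's Green Book sets a standard social time preference rate of 3.5%).

```latex
\begin{aligned}
\mathrm{PV}_B &= \sum_{t=0}^{T} \frac{B_t}{(1+r)^t}, &
\mathrm{PV}_C &= \sum_{t=0}^{T} \frac{C_t}{(1+r)^t}, \\
\mathrm{NPV} &= \mathrm{PV}_B - \mathrm{PV}_C, &
\mathrm{BCR} &= \frac{\mathrm{PV}_B}{\mathrm{PV}_C}.
\end{aligned}
```

A benefit-cost ratio above 1, or equivalently a positive net present value, indicates that discounted benefits exceed discounted costs.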

Aim 3: Quality Assure All Evaluation Designs

We recognise that collaboration can support high quality evaluation design and provide greater accountability. To ensure the quality of our evaluations within the EM Programme, we will work with internal and external collaborators to develop our evaluations. We will integrate advice from three overarching sources to review and quality assure all of our evaluation designs: Internal Partnership Working, formal Analytical Quality Assurance (AQA) and External Review.

Internal Partnership Working: When scoping any evaluation activity, the analytical team will work with wider departmental experts on evaluation design. The MoJ’s Evidence and Partnerships Hub will be used to provide advice on evaluation scoping activities and academic partnership work. We will also partner with the Evaluation and Prototyping Hub, who offer expert consultancy and ongoing support throughout evaluation design and delivery.

Analytical Quality Assurance (AQA): All of our evaluations will be subject to a set of AQA principles that define our ways of working. These principles apply regardless of the scale of the evaluation, but the approach will differ depending on the scale, nature and risks associated with the project. For each evaluation, an AQA protocol will be submitted prior to commencing evaluation activity. This will document key risks, ethical issues and methods. The AQA protocol will be reviewed and agreed by Programme Heads and Policy.

External Review: We will engage with the Evaluation Task Force (ETF) throughout the evaluation process. The ETF is a joint HM Treasury and Cabinet Office unit that aims to put evaluation at the heart of government decision making. More information is available on the Evaluation Task Force website. We will proactively seek scrutiny from the ETF to provide external review and recommendations within each programme. We will also work with ETF partners, including the Evaluation and Trial Advice Panel.

Aim 4: Share Our Evaluation Findings

By sharing what we learn through evaluation activity, the government, public and all other stakeholders can learn from and build on our evaluations. Publishing the evidence on which policies are based also delivers greater transparency across government, enabling the public to hold government and public bodies to account.

We make the following commitments to promote transparency by sharing the products of evaluation activity:

- Internal information sharing: We will share evaluation reports in a timely manner, ensuring we can rapidly share early learning with colleagues and inform operational decisions for ongoing implementation.

- External publication: Consistent with the Government Social Research profession’s pledge to publish robust and impartial work set out in the Government Social Research 2021-2025 strategy, we commit to inviting external peer-review of all evaluation reports and subsequent publication via the public Government web pages.

4.4 Timeline

The timelines for evaluation activity and reporting depend on sufficient sample sizes in each expansion pilot, data quality and pilot roll-out dates. For this reason, we cannot give fixed dates for when evaluation findings will be available, as these will depend on the progress of operational implementation. The table below shows the estimated time after implementation for draft findings, which will be kept under review. Any publication resulting from evaluation activity will go through a series of steps to ensure the findings are communicated in a transparent, unbiased way. We aim to provide draft evaluation conclusions for internal review within the following timeline:

| Evaluation type | Stage | Estimated timing of draft findings for internal review |
|---|---|---|
| Process | Interim | 6 months after project go-live |
| Process | Final | 12-18 months after project go-live |
| Impact | Interim | 18 months after data collection, based on intermediate outputs |
| Impact | Final | 2.5 years after data collection, reflecting constraints around delivery of reoffending statistics |
| Value-for-Money | Final | Up to 1 year after impact evaluation findings, from which it is typically derived |
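To turn the relative timings in the table into indicative calendar windows for a given project, the offsets can be applied to a go-live date and a data collection reference date. The Python below is a hypothetical sketch: the function names are illustrative, it assumes "after data collection" is measured from the end of data collection, and it treats 2.5 years as 30 calendar months. Any dates it produces are estimates only, since actual timings depend on sample sizes, data quality and roll-out.

```python
import calendar
from datetime import date


def add_months(d: date, months: int) -> date:
    """Add calendar months, clamping the day to the last day of the target month."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    return date(year, month, min(d.day, calendar.monthrange(year, month)[1]))


def draft_findings_windows(go_live: date, data_collection_end: date) -> dict:
    """Earliest and latest estimated dates for draft findings, using the table's offsets."""
    process_interim = add_months(go_live, 6)
    impact_interim = add_months(data_collection_end, 18)
    impact_final = add_months(data_collection_end, 30)  # 2.5 years
    return {
        "process_interim": (process_interim, process_interim),
        "process_final": (add_months(go_live, 12), add_months(go_live, 18)),
        "impact_interim": (impact_interim, impact_interim),
        "impact_final": (impact_final, impact_final),
        # "Up to 1 year after impact evaluation findings"
        "value_for_money_final": (impact_final, add_months(impact_final, 12)),
    }


if __name__ == "__main__":
    windows = draft_findings_windows(date(2023, 1, 1), date(2023, 12, 31))
    for stage, (earliest, latest) in windows.items():
        print(f"{stage}: {earliest} to {latest}")
```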