Defence Artificial Intelligence Strategy

Published 15 June 2022

Foreword by the Secretary of State for Defence

A year ago, we published the Integrated Review, committing to strengthen security and defence and to modernise our armed forces. We warned then that these steps were a necessary response to the deteriorating global security environment, including state-based threats. Since then, Russia’s illegal invasion of Ukraine has brought all the horrors of industrial-age warfare back to the heart of Europe. The tragic events unfolding in Ukraine are a stark reminder of the importance of ensuring that our armed forces can meet the sort of conventional military challenge we hoped our continent had left behind in the 20th Century.

However, we also noted that we live in an era of persistent global competition, characterised by hybrid and sub-threshold threats. Russia’s appalling invasion of Ukraine removes any blurring of the line between war and peace, but was preceded by many years of malign activity. As we stand in solidarity with the global community in challenging the actions of the Putin regime, we recognise that Defence must be relevant and effective across the spectrum of competition and conflict.

To meet these various challenges, Defence must prioritise research, development and experimentation, maintaining strategic advantage by exploiting innovative concepts and cutting-edge technological advances – and Artificial Intelligence is one of the technologies essential to Defence modernisation. Imagine a soldier on the front line, trained in highly-developed synthetic environments, guided by portable command and control devices analysing and recommending different courses of action, fed by databases capturing and processing the latest information from hundreds of small drones recording thousands of hours of footage. Imagine autonomous resupply systems and combat vehicles, delivering supplies and effects more efficiently without putting our people in danger. Imagine the latest Directed Energy Weapons using lightning-fast target detection algorithms to protect our ships, and the digital backbone which supports all this using AI to identify and defend against cyber threats.

AI has enormous potential to enhance capability, but it is all too often spoken about as a potential threat. AI-enabled systems do indeed pose a threat to our security, in the hands of our adversaries, and it is imperative that we do not cede them a vital advantage. We also recognise that the use of AI in many contexts, and especially by the military, raises profound issues. We take these very seriously – but think for a moment about the number of AI-enabled devices you have at home and ask yourself whether we shouldn’t make use of the same technology to defend ourselves and our values. We must be ambitious in our pursuit of strategic and operational advantage through AI, while upholding the standards, values and norms of the society we serve, and demonstrating trustworthiness.

This strategy sets out how we will adopt and exploit AI at pace and scale, transforming Defence into an ‘AI ready’ organisation and delivering cutting-edge capability; how we will build stronger partnerships with the UK’s AI industry; and how we will collaborate internationally to shape global AI developments to promote security, stability and democratic values. It forms a key element of the National AI Strategy and reinforces Defence’s place at the heart of Government’s drive for strategic advantage through science & technology.

Rt Hon Ben Wallace MP

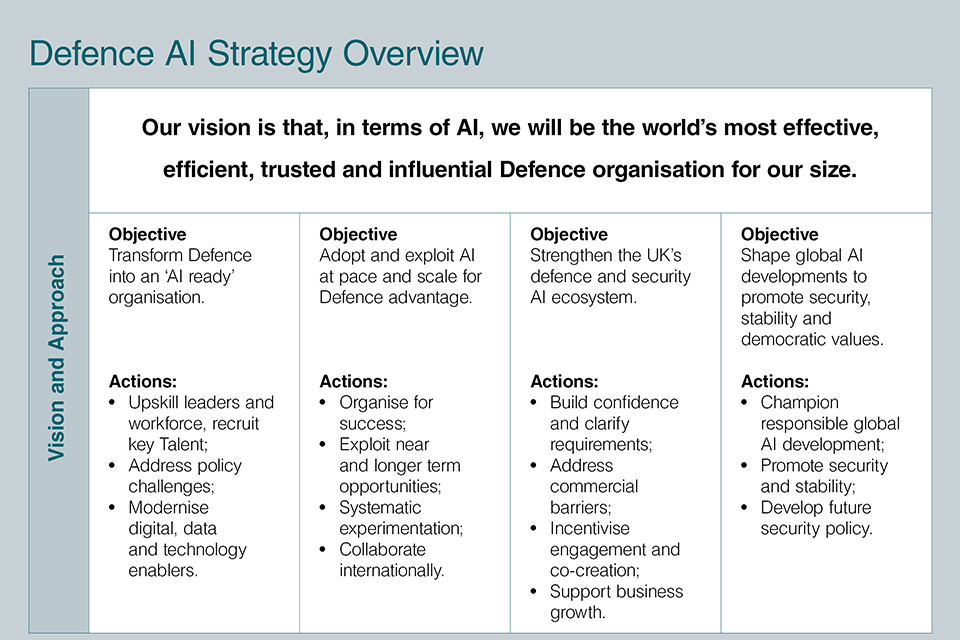

Defence AI Strategy Overview

Our vision is that, in terms of AI, we will be the world’s most effective, efficient, trusted and influential Defence organisation for our size.

Objective 1: Transform Defence into an ‘AI ready’ organisation.

Actions to achieve objective 1: Upskill leaders and workforce; Recruit key talent; Address policy challenges; Modernise digital, data and technology enablers.

Objective 2: Adopt and exploit AI at pace and scale for Defence advantage.

Actions to achieve objective 2: Organise for success; Exploit near and longer-term opportunities; Systematic experimentation; Collaborate internationally.

Objective 3: Strengthen the UK’s defence and security AI ecosystem.

Actions to achieve objective 3: Build confidence and clarify requirements; Address commercial barriers; Incentivise engagement and co-creation; Support business growth.

Objective 4: Shape global AI developments to promote security, stability and democratic values.

Actions to achieve objective 4: Champion responsible global AI development; Promote security and stability; Develop future security policy.

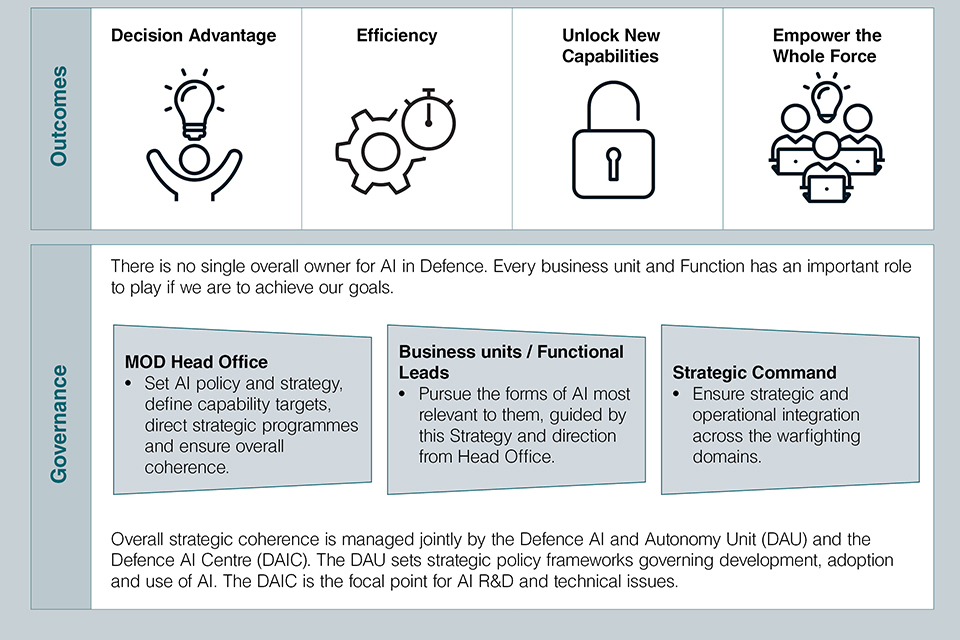

The four outcomes of AI adoption for Defence: Decision Advantage, Efficiency, Unlock New Capabilities, Empower the Whole Force.

The Governance of AI in Defence: There is no single overall owner for AI in Defence. Every business unit and Function has an important role to play if we are to achieve our goals.

- MOD Head Office: Set AI policy and strategy, define capability targets, direct strategic programmes and ensure overall coherence.

- Business units / Functional Leads: Pursue the forms of AI most relevant to them, guided by this Strategy and direction from Head Office.

- Strategic Command: Ensure strategic and operational integration across the warfighting domains.

Overall strategic coherence is managed jointly by the Defence AI and Autonomy Unit (DAU) and the Defence AI Centre (DAIC). The DAU sets strategic policy frameworks governing development, adoption and use of AI. The DAIC is the focal point for AI R&D and technical issues.

What is Artificial Intelligence?

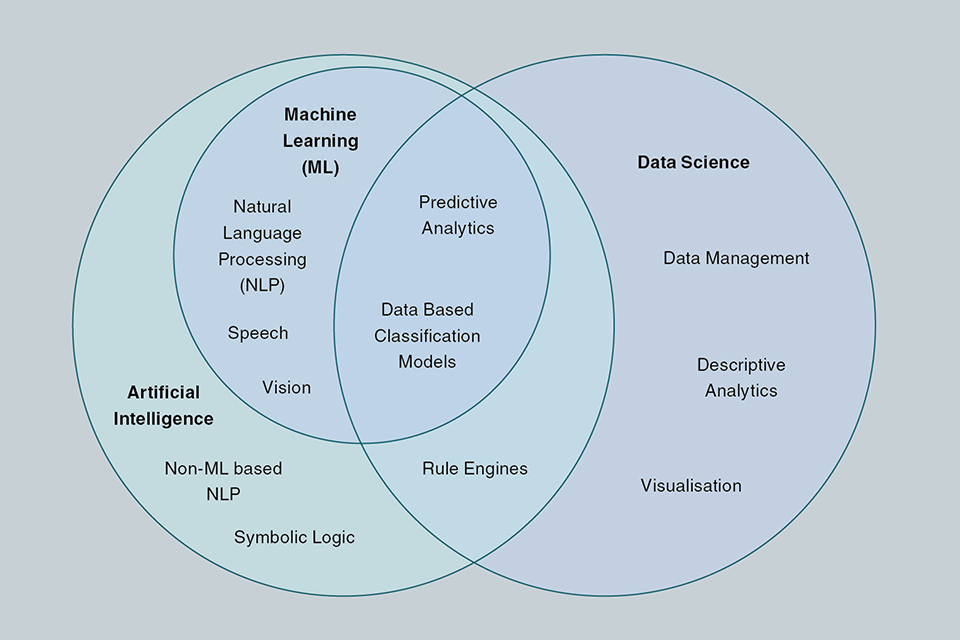

Defence understands Artificial Intelligence (AI) as a family of general-purpose technologies, any of which may enable machines to perform tasks normally requiring human or biological intelligence, especially when the machines learn from data how to do those tasks.

A Venn diagram showing three circles. The first, Data Science, includes predictive analytics, data-based classification models, data management, descriptive analysis, visualisation and rule engines; it overlaps with the second, Artificial Intelligence, which includes rule engines, non-ML-based natural language processing and symbolic logic. The third circle, Machine Learning, sits entirely inside the second and overlaps the first; it includes natural language processing, speech, vision, predictive analytics and data-based classification models.

Overlapping Technologies:

- AI: Machines that perform tasks normally requiring human intelligence, especially when the machines learn from data how to do those tasks. - UK National AI Strategy

- Machine Learning: Computer algorithms that can ‘learn’ by finding patterns in sample data and then apply this to new data to produce useful outputs, often using neural networks. - Alan Turing Institute

- Data science: Research that involves the processing of large amounts of data in order to provide insights into real-world problems. - Alan Turing Institute
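To make the Machine Learning definition above concrete (finding patterns in sample data, then applying them to new data), here is a deliberately minimal sketch of a nearest-centroid classifier in plain Python. It is an illustration only, assuming a toy two-feature problem. The function names `fit_centroids` and `predict` and the labelled readings are hypothetical and do not describe any Defence system.

```python
from statistics import mean


def fit_centroids(samples):
    """'Learn' from labelled sample data: compute the mean feature vector for each label."""
    by_label = {}
    for features, label in samples:
        by_label.setdefault(label, []).append(features)
    # zip(*rows) turns a list of feature tuples into per-feature columns.
    return {
        label: tuple(mean(column) for column in zip(*rows))
        for label, rows in by_label.items()
    }


def predict(centroids, features):
    """Apply the learned pattern to new data: return the label of the nearest centroid."""
    def squared_distance(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    return min(centroids, key=lambda label: squared_distance(centroids[label], features))


# Hypothetical toy data: (length in metres, speed in knots) labelled by vessel type.
training = [
    ((10.0, 30.0), "fast craft"),
    ((12.0, 35.0), "fast craft"),
    ((120.0, 15.0), "cargo"),
    ((150.0, 12.0), "cargo"),
]
model = fit_centroids(training)
print(predict(model, (11.0, 28.0)))  # fast craft
```

The "learning" step here is simply averaging the examples for each label. Real machine learning systems, often built on neural networks, learn far richer patterns, but they follow the same fit-then-apply structure.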

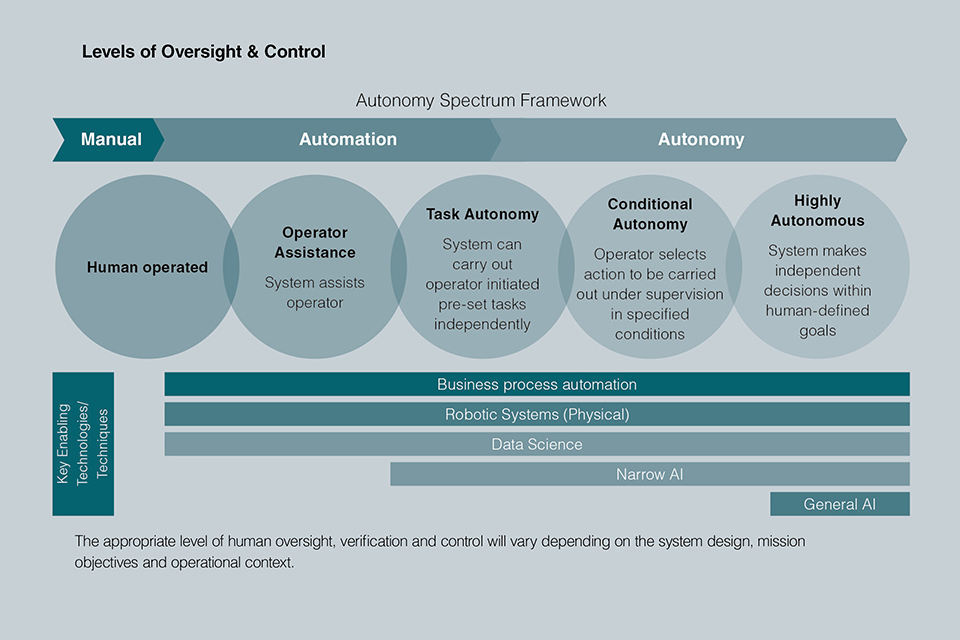

Levels of Oversight & Control

A diagram showing the Autonomy Spectrum Framework represented against a gradient from left to right: from manual, to automation, to autonomy. Starting from the left and moving right:

- Human Operated

- Operator Assistance – System assists the operator.

- Task Autonomy – System can carry out operator-initiated pre-set tasks independently.

- Conditional Autonomy – Operator selects action to be carried out under supervision in specified conditions.

- Highly Autonomous – System makes independent decisions within human-defined goals.

Beneath these categories sit the key enabling technologies and techniques. Business process automation, robotic systems (physical) and data science span the full width of the diagram. Narrow AI spans from ‘Task Autonomy’ onwards, and general AI sits under ‘Highly Autonomous’ only.

The appropriate level of human oversight, verification and control will vary depending on the system design, mission objectives and operational context.

Executive Summary

Our vision is that, in terms of AI, we will be the world’s most effective, efficient, trusted and influential Defence organisation for our size:

- Effective – through the delivery of battle-winning capability and supporting functions, and in terms of our ability to collaborate and partner with the UK’s allies and AI ecosystem;

- Efficient – through innovative use of technology to deliver capability, conduct operations, and realise productivity benefits across our organisation;

- Trusted – by the public, our partners and our people, for the safety and reliability of our AI systems, and our clear commitment to lawful and ethical AI use in line with our core values;

- Influential – in terms of shaping the global development of AI technologies and managing AI-related issues to positive ends, working collaboratively and leading by example.

The Integrated Review (2021) highlights national excellence in AI as central to securing the UK’s status as a ‘Science and Technology Superpower’ by 2030. The National AI Strategy (2021) notes its huge potential to rewrite the rules of whole industries, drive substantial economic growth and transform all areas of life. The Integrated Operating Concept (2020) describes how pervasive information and rapid technological change are transforming the character of warfare. Across the spectrum of military operations, conflict is becoming increasingly complex and dynamic. New technologies generate massive volumes of data, unlock new threats and vulnerabilities and expand the scale of potential attacks through advanced next-generation capabilities (such as swarming drones, high-speed weapons and advanced cyber-attacks).

These technologies – and the operational tempo they enable – are likely to compress decision times dramatically, tax the limits of human understanding and often require responses at machine speed. As the Defence Command Paper (2021) notes “future conflicts may be won or lost on the speed and efficacy of the AI solutions employed”. Simultaneously, information operations are increasingly important to counter false narratives that distract attention, provide cover for malign activities and undermine public support. In short, a radical upheaval in defence is underway and AI-related strategic competition is intensifying. Our response must be rapid, ambitious, and comprehensive.

This strategy sets out how we will approach this significant strategic challenge. It should be read by all senior leaders in Defence and cascaded widely throughout the organisation because AI affects everybody. It has particular relevance to all those involved in Defence Force Development and Defence Transformation, whose structures and processes will have a key role to play in implementation and delivery – but every part of Defence must identify those elements relevant to them and act accordingly.

We must transform into an ‘AI ready’ organisation. We will proactively drive changes to our culture, skills and policies, training leaders, upskilling the workforce, and strengthening the Defence AI & Autonomy Unit. We will create a Defence AI Skills Framework and new AI career development and progression pathways. We will recognise that data is a critical strategic asset, curating and exploiting it accordingly. We will implement the Digital Strategy for Defence (2021) and the Data Strategy for Defence (2021), and deliver a new Digital Backbone and Defence AI Centre. Some elements of this transformation (especially Digital and Data enablers) will be delivered or supported on a pan-Defence basis, guided by a new AI Technical Strategy, but this is a challenge for every part of our organisation with the nature of that challenge depending on the specific organisation, its business, and the opportunities provided by AI.

We must adopt and exploit AI at pace and scale for Defence advantage, establishing AI as one of our top priorities and a key source of strategic advantage within our Capability Strategies and Force Development processes. Our opportunity and our challenge lie in the sheer breadth of AI applications across our organisation. In the near term, we will enhance our effectiveness, efficiency and productivity through the systematic roll-out and adoption of ‘AI Now’: mature data science, machine learning and advanced computational statistics techniques. In parallel, we will invest in ‘AI Next’: cutting-edge AI research and development integrated within our broader R&D pipelines. Integrated multi-disciplinary delivery teams will be at the heart of our approach to AI adoption and the development of effective Human-Machine Teaming, combining human cognition, inventiveness and responsibility with machine-speed analytical capabilities. We will establish a centre to assess and mitigate AI system vulnerabilities and threats. We will work closely with allies and partners, developing innovative capability solutions to address common challenges, while sharing the burdens of maintaining niche yet essential AI development and test capabilities.

We must stimulate and support the UK’s defence and security AI ecosystem, building on commitments set out in the Defence & Security Industrial Strategy (2021) and the National AI Strategy. Our ability to exploit these technologies is rooted in the vitality of our industrial and academic AI base. We will champion and support our national AI ecosystem as a strategic asset in its own right, forging a more dynamic and integrated partnership. We will foster closer links with the sector, establishing a new Defence & National Security AI Network, promoting talent exchange and co-creation, communicating a scaled demand signal to encourage civil sector investment in defence-relevant AI R&D, and simplifying access to Defence data and assets. We will continue to improve our acquisition system to drive greater pace and agility in delivery. We will also take steps to promote opportunities for small & medium-sized enterprises, modernise regulatory approaches and maximise the exploitation and commercialisation of Defence-held AI-related intellectual property. We will do all of this within Defence whilst more broadly supporting the transition to an AI-enabled economy, capturing the benefits of innovation in the UK.

We must shape global AI developments to promote security, stability and democratic values. As AI becomes increasingly pervasive, it will significantly alter global security dynamics. It will be a key focus for geostrategic competition, not only as a means for technological and commercial advantage but also as a battleground for competing ideologies. We will shape the development of AI in line with UK goals and values, promoting ethical approaches and influencing global norms and standards, in line with democratic values. We will promote security and stability, ensuring UK technological advances are appropriately protected, countering harmful technology proliferation and exploring mechanisms to build confidence and minimise risks associated with military AI use. As we develop our security policies to reflect AI-related challenges we will maintain a broad perspective on implications and threats, considering extreme and even existential risks which may arise, and engaging proactively with allies and partners. We will champion strategic risk reduction and seek to create dialogue to reduce the risk of strategic error, misinterpretation and miscalculation. We will ensure that – regardless of any use of AI in our strategic systems – human political control of our nuclear weapons is maintained at all times.

1 Introduction

We face a strategic imperative: adapt and excel in our exploitation of technologies like Artificial Intelligence (AI), or increasingly fall behind our allies and competitors. The challenges are far-reaching and fundamental, and we must be ambitious.

1.1 The global technology context

The Integrated Review identifies rapid technological change as a critical strategic challenge. New technologies are being developed and adopted faster than ever before, driving profound changes across our societies, politics and economies, and accelerating the emergence of threats. While breakthrough discoveries are driven by Government and universities, industry and commercial opportunities are key engines of innovation. Global competition for rare talent is fierce. Technology is an arena of intensifying and increasingly critical competition between states, both in prosperity and security terms and as an expression of different visions for society.

Adversaries are investing heavily to challenge our technological edge and threaten our interests. Increasingly, we are seeing hostile acts against our societies, economies and democracies that are difficult to detect, complex to challenge, and more difficult still to deter. Our future security, resilience, international standing and prosperity will be defined by our ability to comprehend, harness and adapt to rapid technological change.

1.1.1 The significance of Artificial Intelligence

AI is perhaps the most transformative, ubiquitous and disruptive new technology with huge potential to rewrite the rules of entire industries, drive substantial economic growth and transform all areas of society. It will be part of most future products and services, powering the 4th Industrial Revolution and affecting every aspect of our lives. This Strategy defines AI as a family of general-purpose technologies, any of which may enable machines to perform tasks that would traditionally require human or biological intelligence, especially when the machines learn from data how to do those tasks; for example, recognising patterns, learning from experiences, making predictions and enabling actions to be taken.

Defence applications for AI stretch from the corporate or business space – the ‘back office’ – to the frontline: helping enhance the speed and efficiency of business processes and support functions; increasing the quality of decision-making and tempo of operations; improving the security and resilience of inter-connected networks; enhancing the mass, persistence, reach and effectiveness of our military forces; and protecting our people from harm by automating ‘dull, dirty and dangerous’ tasks.

The potential to supplement or replace human intelligence raises fundamental questions about our relationship with technology, the personal and societal implications of big data, and the scope for human agency, rights and accountability in an age of data-driven, machine-speed decision-making. Nevertheless, the widespread use and adoption of these technologies is irresistible – those who adopt and adapt successfully will prosper; the unsuccessful will fall behind. Realising the benefits of AI – and countering the resulting threats – may be one of the most critical strategic challenges of our time.

The UK will lead by example, working with partners around the world to make sure international agreements embed our ethical values, and making clear that progress in AI must be achieved responsibly and safely, according to democratic norms and the rule of law.

Exercise SPRING STORM – AI on the Ground

In May 2021, AI was used on a British Army operation for the first time. Soldiers from the 20th Armoured Infantry Brigade trialled a Machine Learning engine designed to process masses of complex data, including information on the surrounding environment and terrain. It offered a significant reduction in planning time compared with the human team while still producing results of equal or higher quality.

The trial demonstrated the potential for AI to quickly process vast quantities of data, providing commanders with better information during critical operations and transferring the cognitive burden of processing data from a human to a machine. The Machine Learning engine had been developed in partnership with industry and used automation and smart analytics along with supervised learning algorithms. This AI capability can be hosted in the cloud or operate in independent mode. By saving significant time and effort, it provides soldiers with instant planning support.

This is one of the first steps towards achieving machine-speed command and control. The trial took place during an annual large-scale exercise with soldiers from NATO’s Enhanced Forward Presence Battlegroup, led by the British Army.

1.1.2 AI threats

The use of AI by adversaries will heighten threats above and below the threshold of armed conflict. AI has potential to enhance both high-end military capabilities and simpler low-cost ‘commercial’ products available to a wide range of state and non-state actors. Adversaries are seeking to employ AI across the spectrum of military capabilities, including offensive and defensive cyber, remote and autonomous systems, situational awareness, mission planning and targeting, operational analysis and wargaming and for military decision support at tactical, operational and strategic levels.

Adversary appetite for risk suggests they are likely to use AI in ways that we would consider unacceptable on legal, ethical or safety grounds. Equally, adversaries will use a range of information, cyber and physical means to attack our AI systems and undermine confidence in their performance, safety and reliability (e.g. by ‘poisoning’ our data, corrupting hardware components in our supply chain, or interfering with communications and commands).

Understanding the Threat

Adversaries and systemic competitors are investing heavily in AI technologies to challenge our defence and security edge. We have already seen claims that current conflicts have been used as test beds for AI-enabled autonomous systems, and we know that adversaries will use technology in ways that we would consider unethical and unsafe.

Potential threats include enhanced Cyber and information warfare, AI-enabled surveillance and population control, accelerated military operations and the use of autonomous physical systems. Non-state actors are seeking to weaponise advanced commercial products to spread terror and hold our forces at risk. As these case studies illustrate, this is not a hypothetical future but the here and now.

As Hostile State and Non-State Actors build their AI capabilities, they will increasingly attempt to acquire key technologies and Intellectual Property from UK academia and Industry. This is a major threat. The UK must prepare to defend our most valuable technologies and capabilities, protect our universities from foreign interference, and safeguard industry, intervening where necessary.

State-Based Threats

Adversaries are developing military AI and AI-enabled robotic systems in pursuit of information superiority and capability advantage.

Open-source analysis suggests Russia is prioritising AI R&D in areas such as Command and Control, Electronic Warfare, Cyber, and uncrewed systems in all domains.

One example is the S-70 Okhotnik: a 25-ton, jet-powered uncrewed combat air system with stealth features to help it survive in contested airspace. Russia claims it has a level of artificial intelligence and that its missions may include ISR, Electronic Warfare and air-to-surface / air-to-air strike. Flight testing commenced in 2019 and is ongoing, with claims that it will enter service as soon as 2025.

Advanced ‘Proxy’ Threats

Non-state actors are repurposing commercial technologies to enhance their capabilities.

For example, in 2017 Iranian-backed Houthi forces in Yemen used an Uncrewed Surface Vehicle (USV; probably a converted fishing skiff) loaded with explosives to severely damage the Saudi Arabian naval frigate AL-MADINAH. They have since used USVs to attack maritime targets at ranges of up to 1,000 km and have also launched attacks using Uncrewed Air Vehicles (likely with support from Iran).

While current systems may be limited to GPS waypoint navigation to reach a predetermined target, irresponsible proliferation of AI technologies could enable proxies to field far more dangerous capabilities in future.

AI-Enhanced Cyber Threats

Artificial Intelligence has the potential to significantly increase the impact of malicious cyber attacks, potentially probing for and exploiting cyber vulnerabilities at a speed and scale that human-monitored systems cannot defend against.

AI could also be used to intensify information operations, disinformation campaigns and fake news, for example through broad-spectrum media campaigns or by targeting individuals using bogus social media accounts and deepfake videos.

Other AI Threats

AI is increasingly being used by adversaries across the full spectrum of military capabilities, including for situational awareness, optimised logistics, operational analysis and wargaming and for decision support at tactical, operational and strategic levels.

Hostile states are almost certainly conducting research into the use of AI for mission planning and targeting, building on capabilities that are already used to curtail civil liberties, e.g. CCTV surveillance and online monitoring.

1.1.3 AI Competition

Global competition for advantage through AI is intense. Allies, adversaries and systemic competitors are investing heavily to develop research capabilities, maximise access to talent and accelerate solutions to market. The UK has significant strengths and is recognised as an AI powerhouse. Our thriving AI sector is worth over £15.6bn and ranked amongst the most innovative and productive in the world. Our vibrant digital sector – worth £149bn in 2018 – creates innovative businesses, produces ‘unicorns’ and employs highly skilled people across the UK, with the 3rd highest number of AI companies in the world. While the US is the current world leader in AI technologies (e.g. in research and patents; in government and private sector investment; and in the range, reach and size of their AI industrial base), China has substantially accelerated the development of its own AI ecosystem to become world class in many aspects, including the development of AI talent. Dozens of countries have published ambitious AI strategies to secure economic, societal and security benefits. This offers great scope for collaboration, though opportunities must be balanced against national security risks.

1.2 Our response

We are well-placed to take advantage of AI opportunities by capitalising on our national R&D strengths, aligning with the broader aims set out in the National AI Strategy to promote long-term investment and planning, support the transition to an AI-enabled economy and optimise national and international governance. Over 200 AI-related R&D programmes are underway in Defence, ranging from machine learning applications to ‘generation after next’ research; and covering areas from autonomous ships and swarming drones, to talent management and predictive maintenance.

This is a promising start, but a step change is urgently required if we are to embrace AI at the pace and scale required to match the evolving threat. This Strategy sets out a comprehensive response to the opportunities and disruptive impacts of AI, along with the short to medium term actions that we will take to address these challenges and catalyse a once-in-a-generation technological shift across every part of our business, in our operational activities and in our partnerships.

Our objectives are to:

- transform into an ‘AI ready’ organisation, investing in critical enablers and shaping a culture fit for the Information Age;

- adopt and exploit AI at pace and scale for Defence advantage, pursuing both ambitious, complex projects and simpler, iterative approaches;

- strengthen the UK’s defence and security AI ecosystem, recognising the fundamental need to work with partners across government, industry and academia;

- shape global AI developments to promote security, stability and democratic values, projecting influence internationally and working with allies.

1.3 Ambitious, safe and responsible use of AI

AI has extraordinary potential as a general enabling technology. We intend to realise its benefits right across our organisation, from ‘back office’ to battlespace. We particularly seek to deliver and operate world-class AI-enabled systems and platforms, including AI-enabled weapon systems.

However, we recognise that the use of AI in many contexts – and especially by the military – raises profound issues. There are concerns about fairness, bias, reliability, and the nature of human responsibility and accountability. (For example, there are well-documented instances of recruiting software demonstrating racial or gender bias.) Unintended or unexpected AI-enabled outcomes could clearly have particularly significant consequences in an operational context. We take all these issues very seriously.

1.3.1 Our commitment as a responsible AI user

The National AI Strategy states that the UK public sector will lead the way by setting an example for the safe and ethical deployment of AI through how it governs its own use of the technology. As we pursue strategic and operational advantage through AI, we are determined to uphold the standards, values and norms of the society we serve, and to demonstrate trustworthiness.

We have therefore published details of our approach, which will be ‘Ambitious, Safe, Responsible’. UK military capabilities and operations will always comply with our national and international obligations. We will maintain rigorous and robust safety processes and standards by ensuring our existing approaches to safety and regulation across Defence are applied to AI. Our approach applies however and wherever AI is used in Defence, throughout system lifecycles, and is guided by robust ethical principles, developed in collaboration with the Centre for Data Ethics and Innovation (CDEI). As well as providing a blueprint for responsible AI, this approach is also the best way of ensuring fast-paced, effective and innovative AI adoption – giving our people, and our partners across Industry and Academia (including bodies such as the AI Council, Turing Institute, and the Trustworthy Autonomous Systems Hub) the confidence to develop concepts, techniques and capability, meeting our operational needs, and reflecting deeply-held values.

1.3.2 Our commitment to our people

Our pursuit of AI-enabled capabilities will not change our view that our people are our finest asset. AI has tremendous power to enhance and support their work (e.g. enabling analysts to make sense of ever greater quantities of data), but we understand that some challenges require human creativity and contextual thinking, and that the real-world impact of military action demands applied human judgement and accountability. We will not simplistically assume that AI inherently reduces workforce requirements, even if it does change the activities we need people to undertake. Where staff are affected by the adoption of AI, we will support them and help them find new roles and skills.

We recognise we should use AI to minimise the risks faced by our people. As part of our approach to responsible AI, we also recognise the importance of not exposing our people to legal jeopardy in the operation of novel technologies.

Machines are good at doing things right (e.g. quickly processing large data sets). People are good at doing the right things (e.g. evaluating complex, incomplete, rapidly changing information guided by values such as fairness). Human-Machine Teaming will therefore be our default approach to AI adoption, both for ethical and legal reasons and to realise the ‘multiplier effect’ that comes from combining human cognition and inventiveness with machine-speed analytical capabilities.

2 Transform into an ‘AI Ready’ organisation

Defence must rapidly transition from an industrial age Joint Force into an agile, Information Age Integrated Force to stay ahead of adversaries amid an increasingly complex and dynamic threat environment. Rapid and systematic adoption of AI – where it is the right solution – will be an essential element in realising this ambition.

This Chapter sets out the foundational ‘enablers’ that are urgently needed to properly prepare Defence for widespread AI adoption. All organisations and Functions will need to address their ‘AI readiness’ – with key elements and support provided across Defence by Functional Owners (particularly Digital) – based on the following approach:

2.1 Culture, skills and policies

Our internal systems and processes have often failed to keep pace with those used to drive digital transformation in the private sector. We must change into a software-intensive enterprise, organised and motivated to value and harness data, prepared to tolerate increased risk, learn by doing and rapidly reorient to pursue successes and efficiencies. We must be able to develop, test and deploy new algorithms faster than our adversaries. We must be agile and integrated, able to identify and interpret threats at machine speed with the cross-domain culture necessary to defend, exploit, respond and recover in real time.

This is a whole-of-Defence challenge, within the context of a national AI skills gap. We must raise understanding of AI at all levels: ensuring leaders can navigate the hype, seize opportunities and act as smart ‘customers’ and champions of AI services; improving AI literacy across our professional communities (particularly among policy, legal and commercial staff); growing expertise in deep AI coding and engineering skills across the Whole Force, including with our partners in industry; and generating an informed user-base with the knowledge and confidence to use new capabilities effectively. We will increase the diversity of people working with and developing AI, to best reflect and protect society.

The National AI Strategy sets out the Government’s interventions, focusing on three areas to attract and train the best people: those who build AI, those who use AI, and those we want to be inspired by AI. We will work with the Office for AI (OAI), and colleagues across Government, tapping into these national programmes where appropriate, including programmes to allow greater flexibility and mobility of global AI talent, to ensure Defence remains at the forefront of AI development and deployment.

2.1.1 Strategic planning for Defence AI skills

Our ability to deliver critically depends on our ability to develop, attract and retain skilled people across Defence. However, competition in the global marketplace for AI talent is intense and Defence risks a severe skills deficit if we do not act quickly and decisively. We must understand our AI skills requirements across the board, modernise our recruitment and retention offer and act strategically to ensure we have the right people in the right places to deliver. We will:

- develop a whole-force Defence AI Skills Framework, aligned to the Pan Defence Skills Framework, identifying skills requirements across Defence as every organisation will need a mix of AI skills suitable to its activities;

- identify and unlock policy barriers so that we can recruit the right talent and skills, developing options for an AI pay premium to incentivise recruitment, upskilling and retention, and exploring flexible ways of recruiting elite talent, including lateral entry;

- set benchmarks for recruitment and retention against key categories, actively monitoring AI workforce ‘pinch points’ and providing assurance on the deliverability of local plans and sustainability of critical capabilities.

2.1.2 Applying the brightest minds to the AI challenge

Effective multi-disciplinary AI development and delivery teams require a range of specialists, including data scientists, AI developers, machine learning engineers and AI analysts. An AI career in Defence must be recognised as an attractive, aspirational choice for this highly skilled talent. In parallel, we must make the most of our existing people, identifying and developing individuals that have the aptitude and attitude for AI careers. We will:

- establish a Head of AI Profession (sitting within the Defence AI Centre (DAIC), described in section 2.2.3) responsible for the AI Skills Framework, developing our recruitment, development and retention offer, and setting standards for delivery team skills (particularly for new or novel deployments of AI) – working with the Defence Digital, Data and Technology (DDaT) and Cyber Professions, FLCs, CDP and the Government Digital Service;

- create AI career development and progression pathways – owned by the Head of AI Profession – with options for deep specialists as well as skilled generalists. This will build on existing ‘best in class’ initiatives in Defence (e.g. JHub coding training) and wider government (e.g. the Data Science Accelerator);

- develop new mechanisms to identify and incubate AI talent within Defence, potentially including aptitude testing, flexible entry paths to the new AI profession and incentive schemes to encourage uptake of advanced technical courses;

- explore options for Unified Career Management of military AI professionals, similar to the model that has been established for Cyber professionals. AI awareness and understanding should be incorporated within military training syllabuses and we will examine options for a dedicated AI Academy as part of the Defence Academy;

- strengthen existing academic partnerships and explore options to tap into government-supported interventions at top-talent, PhD and Masters levels. This includes Turing Fellowships, Centres for Doctoral Training, Postgraduate Industrial-Funded Masters and AI Conversion Courses. We will examine placement schemes for AI Masters students to gain practical experience in Defence, and work with national skills programmes to increase the volume of UK talent in critical areas;

- use more specialist reservists, tailoring recruitment exercises to attract data-literate individuals to ensure we make effective use of the UK’s wider AI talent pool – an essential component of the Whole Force.

2.1.3 AI leadership at all levels

Lack of understanding cannot be a barrier to AI exploitation. Leaders at all levels must be equipped to appreciate the benefits AI can provide, the risks they need to look out for, and any ethical and societal implications that might arise (with technical knowledge varying by roles). We will:

- mandate that all senior leaders across Defence have foundational and strategic understanding of AI and the implications for their organisation;

- provide targeted products to engage and support ‘middle level’ leaders, ensuring they have the training and understanding necessary to identify and exploit AI-enabled opportunities and manage implementation risks;

- support leaders at all levels through coherent AI leadership training programmes, including appropriate horizon scanning products and communities of interest to share understanding of issues, opportunities and best practice;

- task all Defence organisations and Functions to review policies and processes to ensure leaders are empowered to seize AI opportunities at pace;

- establish an Engagement and Interchange Function, delivered by the Defence AI Centre, to encourage seamless interchange between Defence, academia and the tech sector. Working with academia and industry (including the AI Council, the Alan Turing Institute and the Defence Growth Partnership SME forum), we will use secondments and placements to bring in talented AI leaders from the private sector with a remit to conduct high-risk innovation and drive cultural change; create opportunities for external experts to support policy-making; and develop schemes for Defence leaders to gain tech sector experience. We will ensure our approach works for SMEs as well as for our more familiar defence partners.

2.1.4 Upskilling our workforce

We will generate an informed community of AI users across our workforce, enshrining an understanding of AI and Data as a fundamental component of professional development at all levels of the organisation, supported by appropriate training and awareness. In addition to general digital literacy, our people must have enough understanding to work confidently, effectively and responsibly with AI tools and systems. We need AI experts from a range of diverse backgrounds across all professions, from policy and legal to commercial. This is particularly important in the context of military operators, so we will:

- explore options to establish and manage a distinctive ‘AI-enabled military operator’ skill-set or trade, adapting existing skill-sets where AI becomes a significant factor;

- institute clear and auditable processes for the licensing and routine re-certification of military AI operators, akin to licences to operate vehicles or machinery, where appropriate;

- integrate AI and Human-Machine Teaming throughout our training and exercise programmes, using real, virtual and simulated techniques, and establish clear systems to capture and learn AI-related lessons from our operational deployments.

Military Data Science skills

Defence Intelligence’s Military Data Science programme has trained personnel in coding and basic data science techniques. Service personnel complete postgraduate Data Science training, receive mentoring from experts and have access to data and development environments. This programme provides a deployable capability that can straddle knowledge of operations, intelligence, and data science. The programme has used open-source AI algorithms to provide information advantage across a wide range of operational intelligence requirements, including ensuring that data-centric intelligence is delivered at pace whilst on operations. This has led to the delivery of data science projects where a deep understanding of intelligence and operations, blended with an appreciation of data science techniques, has allowed significant contributions to intelligence analysts’ work.

2.1.5 Diversity and Inclusion

We are committed to increasing the diversity and inclusivity of our workforce. It is right that we represent the communities we serve, and diversity & inclusion promotes creativity, brings novel perspectives to bear and helps challenge established or outdated approaches. Our skills interventions will strengthen the diversity and inclusiveness of our workforce.

These qualities are particularly important in the development and exploitation of new technologies, helping us imagine and develop novel capabilities and tactics, avoid strategic surprises, and anticipate unintended consequences. Diverse & inclusive design, development and operational teams are essential to understand how AI systems affect and are usable by different personnel, and to mitigate biases and other effects which may otherwise pass unnoticed while disproportionately impacting certain groups.

We also recognise that – used properly – AI may be a powerful tool to help promote diversity. Diversity & inclusion principles will therefore underpin all elements of this strategy; further, we will continuously assess diversity & inclusion in the development and deployment of AI and act where needed to improve it.

2.1.6 Policy, process and legislation

The MOD Science & Technology Strategy (2020) highlights the importance of ‘anticipatory policy making’ – resolving policy issues before the point of technology maturation – for the successful adoption of new capabilities. The Defence AI & Autonomy Unit (DAU) was created in 2018 to help the department adopt these technologies at pace. We must now accelerate efforts to ensure our policies, processes and approaches to legislation enable, rather than constrain us. As examples: HR policies will need to be updated to ensure that staff can use AI effectively and fairly to speed up recruitment; our ability to glean crucial insights from ever-greater quantities of data depends on the intellectual property and commercial frameworks which underpin procurement.

Effective adoption of AI also hinges on questions about existing intelligence-related legislation and information-sharing permissions; and the planning and conduct of military operations involving AI-enabled capability will be shaped by policies governing factors such as the delegation of command & control. We will therefore:

- strengthen the DAU as our focal point for AI-related policy and strategic coherence. Functional Owners and policy leads across Defence will work with the DAU to ensure policies and processes align with this Strategy, and to identify and address priority issues;

- establish a multi-disciplinary ‘Operational AI’ task force to focus particular effort on AI policy issues affecting national or coalition military operations and key intelligence activities;

- work with the Office for AI and colleagues across Government to identify and address key cross-cutting policy or legal considerations to support timely Defence adoption of AI.

Expeditionary Robotics Centre of Expertise (ERCoE)

The Expeditionary Robotics Centre of Expertise (ERCoE) brings together robotics and autonomous systems experts from across MOD, academia and industry with a collaborative and agile-by-design approach. Examples of autonomy projects include:

Nano Uncrewed Air Systems are small, autonomous drones designed for use in the field. They usually weigh less than 200 grams and feature high-quality full-motion video cameras and thermal imaging capabilities. An autopilot system enables the operator to fly the UAV in two modes: under direct control, or autonomously along a predefined path. These autonomous vehicles can provide ‘eyes on’ around obstacles such as corners and walls, and increased awareness in harsh and challenging conditions, including over terrain.

Robotic Platoon Vehicles (RPV) and ATLAS (Autonomous Ground Vehicle Projects) use Machine Learning neural networks to analyse aerial and 3D imagery, classifying the traversability of terrain (e.g. dense woodland – poor, roads – good), to enable the systems to automatically generate and travel along routes. Machine Learning is also used to analyse camera data and identify objects in the environment that the vehicles might need to avoid or stop for. These objects could be humans, vehicles or plants. In the case of ATLAS, camera data is also used to identify features in the environment which, with other sensor data, allow the vehicle to navigate without the need for GPS.
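The text above does not describe how RPV or ATLAS turn terrain classifications into routes. Purely as an illustration, the sketch below assumes a classifier has already labelled each cell of a grid map with a terrain class, assigns hypothetical per-class traversal costs (the `TERRAIN_COST` values are invented), and applies Dijkstra's shortest-path search, a standard technique that is not necessarily the one these projects use, to find the cheapest route.

```python
import heapq

# Hypothetical traversal costs per terrain class (illustrative values only).
# None marks terrain treated as impassable.
TERRAIN_COST = {"road": 1.0, "grass": 3.0, "woodland": 10.0, "water": None}


def plan_route(grid, start, goal):
    """Dijkstra search over a grid of terrain labels.

    Returns the lowest-cost list of (row, col) cells from start to goal,
    or None if no traversable route exists. The cost of a move is the
    cost of the cell being entered.
    """
    rows, cols = len(grid), len(grid[0])
    best = {start: 0.0}   # cheapest known cost to reach each cell
    previous = {}         # back-pointers for route reconstruction
    frontier = [(0.0, start)]
    while frontier:
        cost, cell = heapq.heappop(frontier)
        if cell == goal:
            # Walk the back-pointers to rebuild the route.
            path = [cell]
            while cell in previous:
                cell = previous[cell]
                path.append(cell)
            return path[::-1]
        if cost > best.get(cell, float("inf")):
            continue  # stale queue entry; a cheaper route was already found
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if not (0 <= nr < rows and 0 <= nc < cols):
                continue  # off the map
            step = TERRAIN_COST[grid[nr][nc]]
            if step is None:
                continue  # impassable terrain
            new_cost = cost + step
            if new_cost < best.get((nr, nc), float("inf")):
                best[(nr, nc)] = new_cost
                previous[(nr, nc)] = cell
                heapq.heappush(frontier, (new_cost, (nr, nc)))
    return None


# Example: the planner detours along roads rather than cutting through woodland.
grid = [
    ["road", "woodland", "road"],
    ["road", "water", "road"],
    ["road", "road", "road"],
]
print(plan_route(grid, (0, 0), (0, 2)))
```

With these assumed costs the six-step road detour (total cost 6) beats the two-step woodland shortcut (total cost 11), which is the kind of trade-off a traversability map is meant to expose.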

2.2 Digital, data and technology enablers

2.2.1 Trusted, coherent and reliable data

Data is a critical strategic asset, second only to our people in terms of importance. In recognition of this the Defence data transformation is underway with a central Data Office established within Defence Digital, as well as a Defence Data Framework to transform Defence’s culture, behaviour and data capabilities.

There remains much to do, however, as our vast data resources are too often stove-piped, badly curated, undervalued and even discarded. We have published the Digital Strategy for Defence and the Data Strategy for Defence which set out how we will transform into a data-driven organisation: breaking down digital silos; establishing common data architectures, standards, labelling conventions and exploitation platforms (including an operational AI Analytics Environment); and driving appropriate access to integrated, curated and validated data across the Defence enterprise. Building on these measures, the priority of this Strategy is that data is exploited. We will:

- maintain data strategies within each part of our organisation, identifying what data will be a key source of advantage or efficiency, and how it will be obtained or captured. ‘Data registers’ will be available across MOD to promote experimentation and interoperability;

- embrace new ways to collect AI-relevant data, including by requiring software products and services to be instrumented to generate use and performance data (taking care not to impinge on people’s autonomy, privacy and rights);

- adopt new processes and ways of working (including suitable templates, formats and standards) to generate the structured data which can be effectively exploited downstream;

- embed protocols to ensure the veracity and integrity of our data sets, including data obtained from external (and possibly open) sources, and to mitigate risks of bias;

- review data-sharing arrangements with allies, industry and academia, including by exploring innovative ways to streamline data-sharing bureaucracy and introducing measures that ensure data shared with or generated by partners remains accessible to Defence;

- develop security protocols that encourage positive risk-taking while effectively protecting our data from adversarial attack and manipulation. This includes declassification and permissions mechanisms to encourage data sharing with fast-moving AI developers.

Advantage in AI will depend on our ability to provide battle-winning algorithms and trusted machine-ready data to those that need it, when they need it, regardless of geography, platform or organisational boundaries. The right digital, data and technological enablers will be essential.

2.2.2 Computing power, networks and hardware

Fast, scalable and secure compute power and seamless network infrastructures are critical to fully exploit AI across Defence – enabling data to flow seamlessly across sensors, effectors and decision makers, so it can then be exploited for strategic military advantage in the battlespace and to drive efficiencies in the business space. The Digital Strategy for Defence sets out how this will be delivered through the ‘Digital Backbone’, a cutting-edge ecosystem combining people, process, data and technology. Underpinned by enhanced cyber security, technologies will be updated, our workforce digitally transformed, and robust processes implemented to ensure coherence and common standards.

Cloud hosting at multiple classifications is essential to provide the scalable compute needed for our AI models. Cloud-based hosting is already available for Official Sensitive data. We will deliver cloud hosting at Secret by the end of 2022 and at Above Secret by the end of 2024. Since operational AI use will often rely on sensitive data, we must deliver effective methods of transferring data between classifications. Application Programming Interfaces (APIs) and data diodes must also become commonplace to enable us to develop and deploy the latest algorithms at pace and scale.

Advanced sensing, next generation hardware (e.g. ‘beyond CMOS’ technologies) and novel ‘edge computing’ approaches will be critical to exploiting AI in Defence, including by creating new opportunities to operate and deliver enhanced capability in challenging, signal denied or degraded environments. We will therefore invest in R&D and partnerships across Government and with industry to develop and adopt next-generation hardware.

2.2.3 The Defence AI Centre (DAIC)

Alongside the Digital Backbone, we are establishing a Digital Foundry, bringing together and building on existing assets to deliver innovative, software-intensive capabilities. This federated ecosystem will be led by Defence Digital in partnership with other Defence exploitation and innovation functions, the S&T community, National Security partners, industry and academia. It is being designed to be the critical next step in the delivery of AI, data analytics, robotics, automation and other cutting-edge capabilities. The Defence AI Centre (DAIC) will be a key part of the Foundry, bringing together expertise from Defence Digital, Dstl and Defence Equipment & Support’s Future Capability Group. The DAIC achieved initial operating capability in April 2022, and is now growing to develop the critical mass of expertise to enable us to harness the game-changing power of AI and accelerate the coherent understanding and development of AI capabilities across the Department. The DAIC will have three main functions:

- act as a visionary hub, championing AI development and use across Defence;

- enable and coordinate the rapid development, delivery and scaling of AI projects that generate breakthroughs in strategic advantage;

- provide access to underpinning pan-Defence digital/data services and sources of expertise as common services to wider Defence.

With the wider Foundry, the DAIC will lead the provision of modern digital and Development, Security and Operations (DevSecOps) environments, common services and tools, and the authoritative data sources that engineers and data scientists require to prototype, test, assure and then deploy their software and algorithms quickly and at scale. AI technologies will be developed across a range of ecosystems (from bespoke in-house solutions to proprietary commercial products) to meet the wide range of potential applications. The DAIC will help guide and cohere technology development, helping establish the appropriate development environment considering the ultimate application of the developed system. It will develop and maintain a Defence AI Technical Strategy and AI Practitioner’s Handbooks which will guide efforts across Defence, including through appropriate standards and risk assurance frameworks for different defence operating contexts - from military grade AI applications to back office systems - together with underpinning Testing, Evaluation, Verification and Validation (TEV&V) approaches and technology protection measures. The DAIC will be established with policy freedoms to emulate successful ways of working from technology organisations, and will focus initially on optimising existing AI-related work across our S&T, FLC AI exploitation units and Digital businesses.

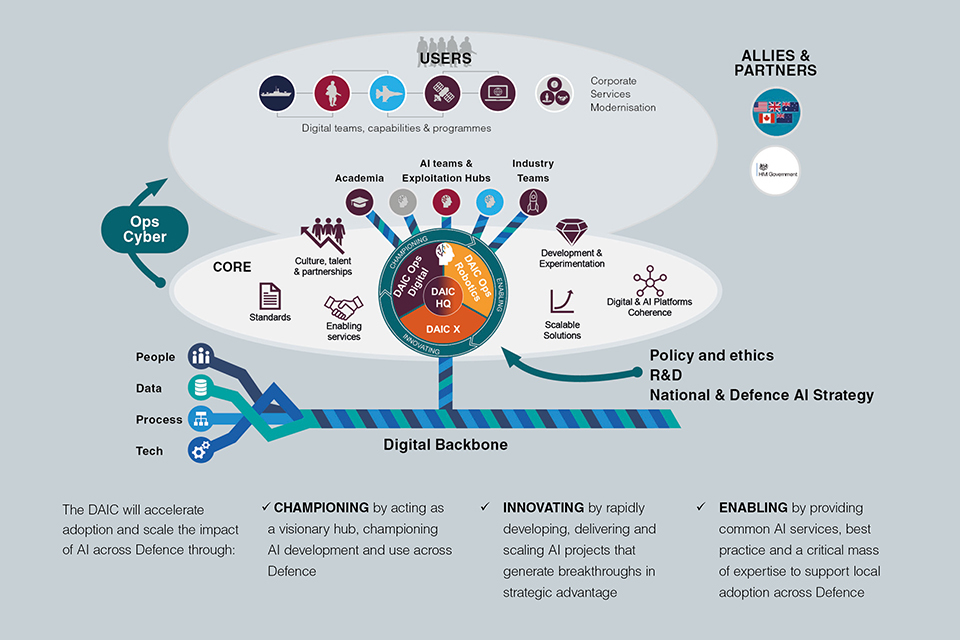

Defence AI Centre Architecture

The Defence Artificial Intelligence Centre (DAIC), a part of the Digital Foundry, is an enabling and delivery unit that will unleash the power of Defence’s data and allow development and exploitation of AI at scale and pace.

A diagram showing the architecture of the Defence AI Centre. The Digital Backbone runs along the bottom, comprising People, Data, Process, and Tech. The Defence AI Centre builds on the Digital Backbone. In the centre of the DAIC sits the DAIC Headquarters, surrounded by DAIC Ops Digital & Robotics, and DAIC-X, bordered by the goals of the DAIC: Championing AI in Defence; Enabling its use; Innovating through AI. Surrounding the DAIC are its core responsibilities: Standards; Enabling services; Culture, talent, and partnerships; Development and experimentation; Scalable solutions; and Digital and AI platforms coherence. Going outwards, the DAIC will engage with academia, industry, and AI teams and exploitation hubs in the user community. Also included in these engagement channels are our Allies & Partners, wider HMG, and corporate services modernisation.

The DAIC will accelerate adoption and scale the impact of AI across Defence through:

- CHAMPIONING by acting as a visionary hub, championing AI development and use across Defence

- INNOVATING by rapidly developing, delivering and scaling AI projects that generate breakthroughs in strategic advantage

- ENABLING by providing common AI services, best practice and a critical mass of expertise to support local adoption across Defence

2.2.4 Technical assurance, certification and governance

AI systems present fundamentally different testing and assurance challenges to traditional physical and software capabilities, not least as it can be technically challenging to explain the basis for a system’s decisions. This is a significant risk to delivering our strategic objectives. We must strike the right risk balance, ensuring new AI-enabled capabilities are safe, robust, effective and cyber-secure, while also delivering at the pace of relevance – in hours, in the case of some algorithms.

We will ensure that Defence-specific governance aligns closely with national frameworks for AI technologies – encouraging innovation and investment while protecting the public and safeguarding fundamental values. In parallel we will work with global partners to shape norms and promote the responsible development of AI internationally.

We will pioneer and champion innovative approaches to testing, evaluation, verification and validation (TEV&V), establishing new live and virtual test capabilities, and collaborating with a broad range of partners. Our S&T programme will work with partners including through the National Digital Twin Programme and the Alan Turing Institute’s Data Centric Engineering Programme to develop novel and less risky design, development and testing approaches using digital models and simulations.

Head Office, the DAIC, Defence Equipment & Support and the Defence Safety Authority will establish a comprehensive framework for the testing, assurance, certification and regulation of AI-enabled systems – both the human and the technical component of Human Machine Teams. Our approach to AI risk management will be based on the ALARP (As Low As Reasonably Practicable) principle that is commonplace in Defence for safety-critical and safety-involved systems. This regime will recognise the importance of appropriate testing through the lifetime of systems, reflecting the possibility that AI systems continue to learn and adapt their behaviour after deployment.

We will collaborate with other government departments, regulators, bodies such as the Regulatory Horizons Council, Standard Developing Organisations, industry, academia and international partners to drive flexible, evolving yet rigorous technical standards, policies and regulations for the design, development, operation and disposal of AI systems – contributing to the Government’s aim to build the most trusted and pro-innovation system for AI governance in the world. The OAI will set out a national position on governing and regulating AI in a White Paper due in 2022.

Trust is a fundamental, cross-cutting enabler of any large-scale use of AI. It cannot be assumed – it must be earned. It emerges when our people have the right training, understanding and experience; we have well-regarded ethics, assurance and compliance mechanisms; and we continuously verify, validate and assure AI capabilities within robust data, technical and governance frameworks.

3 Adopt and Exploit AI at Pace and Scale for Defence Advantage

We aspire to exploit AI comprehensively, accelerating ‘best in class’ AI-enabled capabilities into service; and making all parts of Defence significantly more efficient and effective. To do this, leaders and teams across Defence must excel at the employment and iteration of AI. The aggregation of gains (big and small) will be a major source of efficiency and cumulative advantage. We must also drive the delivery of ambitious, complex projects which will deliver transformative operational capability and advantage.

The enablers described in Chapter 2 provide the foundation to tackle many of the challenges to rapid and systematic adoption of AI. We will also capitalise on the range of research activities and capability demonstrators already underway, our vibrant national AI R&D base and our strong relationships with allies and partners.

3.1 Organising for success

AI is not the preserve of a single part of our organisation. Every business unit and Function has an important role to play if we are to achieve our goals. In line with our delegated model:

- Head Office will set overall AI policy and strategy, define capability targets / headmarks, direct key strategic AI programmes (where appropriate) and ensure the overall programmatic coherence of our AI-enabled capability;

- Business units and Functional Leaders across Defence will proactively pursue the forms of AI most relevant to them, guided by this Strategy and direction from Head Office. The majority of delivery will be owned by individual TLBs or capability Equipment Programmes;

- Strategic Command will ensure strategic and operational integration of AI-enabled capability across the five warfighting domains. MOD’s Chief Information Officer (CIO) ensures overall digital coherence and delivery of common infrastructure, environments, tools and networks for use across the Department.

Overall strategic coherence of the Department’s AI efforts is managed jointly by the Defence AI and Autonomy Unit (DAU) and the Defence AI Centre (DAIC). The DAU is responsible (on behalf of the MOD’s 2nd Permanent Secretary) for defining and overseeing strategic policy frameworks governing the development, adoption and use of AI (e.g. ethical approaches, risk thresholds, cross-cutting issues). The DAIC is the focal point for AI R&D and technical issues: cohering and organising cross-Defence activities; providing centralised services and tools; championing skills and partnerships; and acting as a delivery agent for strategic, joint or cross-cutting AI challenges. An overview of the Defence AI landscape is provided at Annex A.

While approaches to capability development, delivery and deployment may need to be reimagined to ensure AI can be exploited at pace, existing Defence ‘authorities’ (e.g. safety regulation, security accreditation) are broadly unchanged. However, responsible authorities must take account of AI-specific issues and challenges.

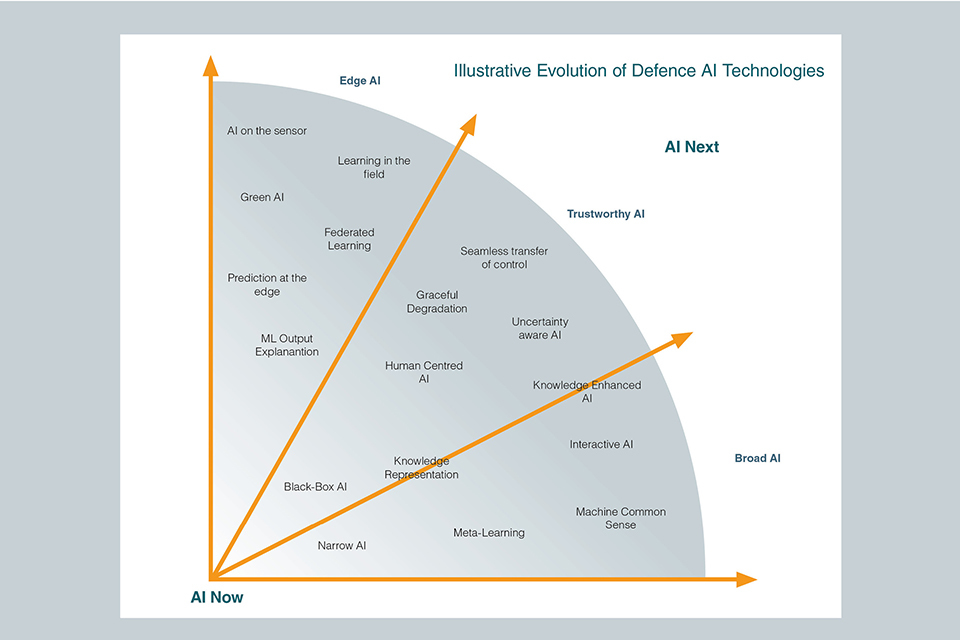

Navigating Technological Complexity

AI encompasses a range of general-purpose enabling technologies. This diagram illustrates the likely evolution of Defence-relevant AI technologies over time; however, in many cases there is significant uncertainty over their utility, transformative effect and timelines to maturity. Technological convergence adds to this complexity, as the combination of AI with other S&T developments (e.g. in quantum, biotech or advanced materials) may unlock game-changing applications or novel military capabilities.

The Defence AI Centre (DAIC) has a key role helping R&D teams across the department to navigate this complexity, building an ecosystem to accelerate solutions across the AI “lifecycle” from generation-after-next research and ‘AI Next’ capability demonstrators (led by Dstl), through to ‘AI Now’ solutions that can be delivered rapidly into the hands of the User.

The DAIC will promote an agile and innovative environment, learning from best practice across academia and industry, recognising that to succeed it is right that we will invest in some technologies and systems that may ultimately fail or transpire to be ‘dead-ends’ (e.g. for practical, ethical or security reasons); our approach will be to prototype, test utility and fail fast if need be. Incremental development ensures that we iteratively learn what works, the right tools and platforms to use and the best-practice standards and approaches to adopt. The DAIC will communicate pan-Defence direction and guidance through:

An AI Practitioner’s Handbook: seeking to capture and disseminate Good Practice from across Defence and industry, providing tailored practical guidance to support:

- Leaders in assessing the full opportunities and implications of using AI solutions;

- AI development teams in understanding the available sources of support and expertise;

- Procurement sponsors in ensuring discussions with suppliers cover the full breadth of requirements and opportunities.

An AI Concept Playbook: seeking to spark ideas and constructive engagement with colleagues and partners across academia and industry by setting out the key prospective applications where we see AI having the potential to transform the way we operate or significantly enhance the delivery of military effects.

A Defence AI Technical Strategy: Bringing together the technical standards, tools, platforms and assurance requirements that must be applied for Defence AI projects; particularly championing innovation, multi-disciplinary collaboration, open architectures and common systems requirements.

A diagram showing an illustrative evolution of Defence AI technologies, beginning at AI Now and radiating out towards AI Next technologies. Segmented into three sections these technologies are: Segment 1, Edge AI including machine learning output explanation, prediction at the edge, federated learning, green AI, learning in the field, and AI on the sensor. Segment 2, Trustworthy AI including Black-box AI, knowledge representation, human centred AI, graceful degradation, uncertainty aware AI, and seamless transfer of control. Segment 3, Broad AI, includes narrow AI, meta-learning, machine common sense, interactive AI, and knowledge enhanced AI.

3.2 Our approach to delivery

Our activities can broadly be divided into three categories: (1) military capability; (2) operational and decision support capabilities; and (3) enterprise services and the ‘back office’. Technologically, the key distinction is between ‘AI Now’ (mature AI technologies that are available quickly, and which require little or no adaptation for a Defence context) and ‘AI Next’ (transformative ‘next-generation’ and ‘generation after next’ AI-enabled capabilities).

Many of the most immediate efficiency and productivity gains for Defence will be delivered through the systematic roll-out and adoption of AI Now. Opportunities in this area include using mature data science, machine learning and advanced computational statistics techniques to drive smarter financial or personnel management systems and to streamline logistics and maintenance, and embracing data fusion and advanced analytics tools to enhance intelligence, surveillance and reconnaissance (ISR) activities.

To accelerate AI Now adoption:

- business units and Functions will identify the portfolio of new projects most appropriate to their needs, reflecting their unique circumstances, challenges and risks;

- we will publish our strategic headmarks and develop an AI Concept Playbook – a living document which we will update frequently, sparking innovation by setting out the key applications where we see most benefit from AI adoption;

- we will prioritise adoption of proven techniques from the private sector, benchmarking our business and support services against comparable organisations, partnering to learn from and emulate ‘best practice’, and exploiting Commercial Off-The-Shelf (COTS) technology, working closely with partners across our own national AI ecosystem (see chapter 4);

- we will delegate responsibility to local teams to identify and acquire or develop the products and solutions needed to achieve desired strategic headmarks – overall programmatic coherence will be managed through the DAIC.

In parallel, we will invest in AI Next: cutting-edge AI R&D designed to tackle current and enduring Defence Capability Challenges where emerging technologies have potential to provide a decisive war-fighting edge. Given the heightened technical and policy risks involved in the delivery of transformational operational capabilities, these activities are likely to be led centrally and, at least in the first instance, driven by the Defence Science and Technology (S&T) programme. Other elements of our R&D delivery system will deliver the capability and capacity to develop, test, integrate and deploy the resulting systems at pace. To accelerate AI Next adoption, we will:

- maintain horizon-scanning programmes, linked closely to academia and leading players in the sector, to ensure Defence keeps abreast of cutting-edge breakthroughs in this fast-moving area;

- prioritise AI as a source of strategic advantage within our Capability Strategies and Force Development processes, ensuring close collaboration between FLCs, Functional Owners and the R&D community;

- commit to ambitious and complex projects, setting demanding capability headmarks to galvanise R&D investment among our partners, accelerate development, and force the pace at which novel AI-driven capabilities and approaches are adopted across the Force;

- explore mandating that equipment programmes be ‘AI ready’, recognising that it may be necessary (e.g. for future capital platforms, their sensors and effectors) to process data at the edge (pattern recognition, command and control, intelligence analysis).

The DAIC will act as our technical coherence authority: determining our requirement for in-house or on-shore assured capability; providing an expert ‘translator’ service to help leaders understand AI opportunities and implications; and ensuring that lessons and successful approaches are shared widely across Defence (including through the AI Technical Strategy and the AI Practitioner’s Handbook).

Defence R&D is focused around priority Capability Challenges

Pervasive, full spectrum, multi-domain Intelligence, Surveillance and Reconnaissance (ISR)

- Accelerating technologies with potential to enhance ISR in all domains & environments.

Multi-domain Command & Control, Communications and Computers (C4)

- Enabling secure, resilient, integrated and coordinated operations and effects across domains.

Secure and sustain advantage in the sub-threshold

- Competing below the threshold of armed conflict, primarily in the information environment.

Asymmetric hard power

- Exploiting and countering novel weapons systems (hypersonics, directed energy, swarms etc).

Freedom of Access and Manoeuvre

- Countering systems that limit our ability to manoeuvre in both traditional and new domains.

3.3 Promoting pace, innovation and experimentation

As we pursue both AI Now and AI Next, we will take a systems approach, recognising (a) that integrating AI into physical or digital systems is perhaps as great a challenge as developing the AI itself, if not greater; and (b) that we cannot focus on the technology in isolation, ignoring the other critical elements needed to realise benefits and deliver a genuine new capability. We will:

- learn by doing, tackling comparatively ‘simple’ AI projects – in terms of technical, ethical or operational risk – to deliver rapid evolutionary gains and de-risk more complex activities;

- embed a culture of systematic experimentation to iterate, field minimum viable products, test possibilities, de-risk technologies and training plans, develop new operational concepts and doctrines, and increase our appetite and ability to field AI innovations at pace;

- deliver AI software through cross-functional teams, incorporating end users, technologists (in house, industry and, potentially, academia), capability sponsors and acquirers throughout design and development;

- ensure AI S&T and acquisition programmes have appropriate procurement, commercial and Intellectual Property strategies for subsequent acquisition and operational activities;

- embrace cutting-edge modelling & simulation capabilities (e.g. synthetic environments, digital twins) and DevSecOps and Prototype Warfare approaches to accelerate the pace at which we develop, test and field new algorithms and AI-enabled capabilities;

- adopt new approaches to agile and rapid capability development and delivery, applying best practice and lessons from pathfinder projects and Innovation Hubs across Defence, and ensuring that through-life support requirements are considered from the outset;

- take steps, in accordance with our Ambitious, Safe, Responsible approach, to ensure safety, reliability, responsibility and ethics are central to innovation.

3.3.1 Securing our AI operational advantage

In increasingly complex and contested security environments, we will need to respond to threats effectively at machine (rather than human) speed, without compromising our values. Adversaries will seek to compromise our AI systems, impair their performance and undermine user and public confidence, using a wide range of digital and physical means. They will also use AI to attack and undermine non-AI systems. Our ability to use AI – while outperforming or otherwise denying the benefits of adversary AI – will be critical.

We must assess and mitigate AI system vulnerabilities and threats to both our capability and the data that drives it. We must harden AI systems against cyber-attack and other manipulations, addressing data, digital and IT vulnerabilities while developing and continuously evolving security methodologies for AI capability defence. We must identify and guard against broader AI-enabled threats to capability and operational effectiveness, for example deception techniques. We must understand the potential cross-domain effects and implications of ‘algorithmic warfare’; develop appropriate counter-AI techniques; and develop appropriate doctrine, policy positions and integrated processes to govern their deployment. We must share experience and best practice routinely and at pace, develop effective mitigations and reversionary modes, and integrate these considerations as part of routine command and control.

We will establish a ‘joint warfare centre’ to tackle these various AI-related operational challenges, initially as a virtual federated ecosystem based on the DAIC and Joint Force Cyber Group with contributions from S&T, intelligence, Joint Force Development and our other Warfare Centres (Navy, Land, Air and Space), allied with the National Cyber Security Centre and the National Cyber Force.

3.4 Working across Government

AI will transform the UK’s economic landscape, requiring a whole-of-society, all-of-government effort that will span the next decade. The Government has set out its ambition and plans for AI in the National AI Strategy. Defence has a critical role to play in preparing and pioneering the use of AI to support both national security and economic prosperity. Likewise, we have much to gain from working with other government departments to ensure the UK builds on its strengths in AI.

Defence engagement with partners across government includes:

- actively supporting the Prime Minister’s new National Science and Technology Council (NSTC), ensuring a coherent national approach to AI (and other technologies) through the emergent S&T Advantage agenda;

- closely collaborating with related cross-government initiatives to drive cohesion and exploit synergies across the UK’s strong AI ecosystem, including with the new Advanced Research and Invention Agency and the UKRI National AI Research and Innovation Programme;

- linking with the Office for Artificial Intelligence and AI Council, seeking to maximise potential prosperity gains from Defence investment in AI and identify opportunities where Defence investments can help to remove blockers to both civilian and military utilisation.

In the national security space, we will:

- identify opportunities to burden share and collaborate with the Intelligence Agencies and relevant Departments to develop and exploit new AI technologies, including by identifying common national security AI capability priorities and establishing a new Defence and National Security AI Network to jointly engage with the national AI ecosystem;

- deepen existing collaborations on AI, such as with the National Security Technology and Innovation Exchange (NSTIx), a data science & AI co-creation space that brings together National Security stakeholders, industry and academic partners to iteratively build better national security capabilities;

- support cross-departmental initiatives to strengthen UK technology protection practices and shape wider global regulatory and counter-proliferation regimes.

3.5 International capability collaboration

The fastest route to mastering these technologies is to work closely with allies and partners. Our approach to AI will therefore be ‘International by Design’: sharing information and best practice; promoting talent exchange; developing innovative capability solutions to address common challenges; and sharing the burdens of maintaining niche yet essential AI development and test capabilities. We will prioritise laying the foundations for interoperability: ensuring that our AI systems can work together as required (e.g. exchanging sensor feeds and data) and that processes are complementary and robust (e.g. for TEV&V), and building trust in the AI-enabled capabilities fielded in coalition operations.

3.5.1 Key AI partnerships

The United States is the world’s leading AI nation and our most important international partner. The US Defense Budget Request for FY2021 (which allocates $800m to AI and $1.7bn to autonomy) demonstrates significant AI ambition. The US has also led the world in championing responsible use of AI in line with our shared values. It is our top priority partner for AI R&D and capability collaboration, building on our close and long-standing relationship on other technologies and capabilities. The development of our own Defence AI Centre will create fresh opportunities for close collaboration with the Chief Digital and Artificial Intelligence Office (CDAO), and we will explore opportunities for enhanced ‘service to service’ collaboration on shared capability challenges where AI promises game-changing advantage.

We will similarly expand our collaborative efforts through our longstanding bilateral and Five Eyes partnerships with Australia, Canada and New Zealand. Built upon intelligence, data sharing, digital technologies and shared values, these are the ideal frameworks in which to develop AI interoperability. We will also deepen cooperation on AI through the trilateral AUKUS security partnership.

NATO is our most important strategic alliance and will be a pivotal forum for UK and Allied consultation on AI and other emerging technologies. The NATO AI Strategy (2021) sets out how the Alliance will collectively approach key AI issues, champion the adoption of common standards and principles and promote interoperability. This includes talent development and fellowship programmes and the establishment of a network of ‘AI Test Centres’: collaborative facilities where NATO institutions and nations can work together to co-develop and co-test relevant AI applications alongside private sector and academic partners.