Literature review on security and privacy policies in apps and app stores

Updated 9 December 2022

This literature review was carried out by:

Prof. Steven Furnell

School of Computer Science

University of Nottingham

17 February 2021

Executive summary

This report investigates issues of cyber security and privacy in relation to apps and app stores. The objective of the review is to provide recommendations for improving the security of applications (apps) delivered via app stores, and to identify issues that may be of relevance to DCMS’s future work on cyber security in these contexts. Specific attention was given to app stores and apps intended for mobile devices such as smartphones and tablets.

The current mobile app marketplace is focused around two main app ecosystems – Android and iOS – and there are a range of app store sources from which users can install apps. These include official app stores from the platform providers, as well as a range of further stores offered by device manufacturers and other third parties. While the underlying objective of all stores is the same, in terms of offering the distribution channel for the hosted apps, they can vary considerably in terms of their associated security and privacy provisions. This includes both the guidance and controls provided to safeguard app users, as well as the policies and procedures in place to guide and review developer activities.

Evidence suggests that many users have concerns regarding the ability to trust apps and their associated use of data. As such, they find themselves very much reliant upon the processes put in place by app stores to check the credibility of the apps they host. In reality, however, practices vary significantly across providers – ranging from stores having clear review processes and attempting to ensure that developers communicate the ways in which their apps collect and use user data, through to situations in which apps are made available in spite of having known characteristics that could put users’ devices and data at risk.

When it comes to supporting users, this review reveals that the app stores have varying approaches with correspondingly variable levels of information and clarity. This is observed in terms of both the presence and content of related policies, as well as in relation to supporting users’ understanding when downloading specific apps. The latter is particularly notable in terms of the presence and clarity of messaging about app permissions and handling of personal data, with some stores providing fairly extensive details and others providing nothing that most users would find meaningful.

There are also notable variations in how different app stores guide and support app developers, including the level of expectation that appears to be placed upon providing safe and reliable apps, that incorporate appropriate protections and behaviours in relation to users’ personal data. While some stores include formal review and screening processes, and scan apps to prevent malware, others offer a more permissive environment that enables threats and risky app behaviours to pass through without identification.

Linked to their stance on maintaining security and resolving issues, the larger providers support vulnerability reporting and offer bug bounty schemes. The latter incentivise the responsible disclosure of vulnerabilities rather than allowing them to persist and risking their exploitation in malicious activities. The large rewards available through these schemes are in notable contrast with other app store environments, where such provisions are not offered.

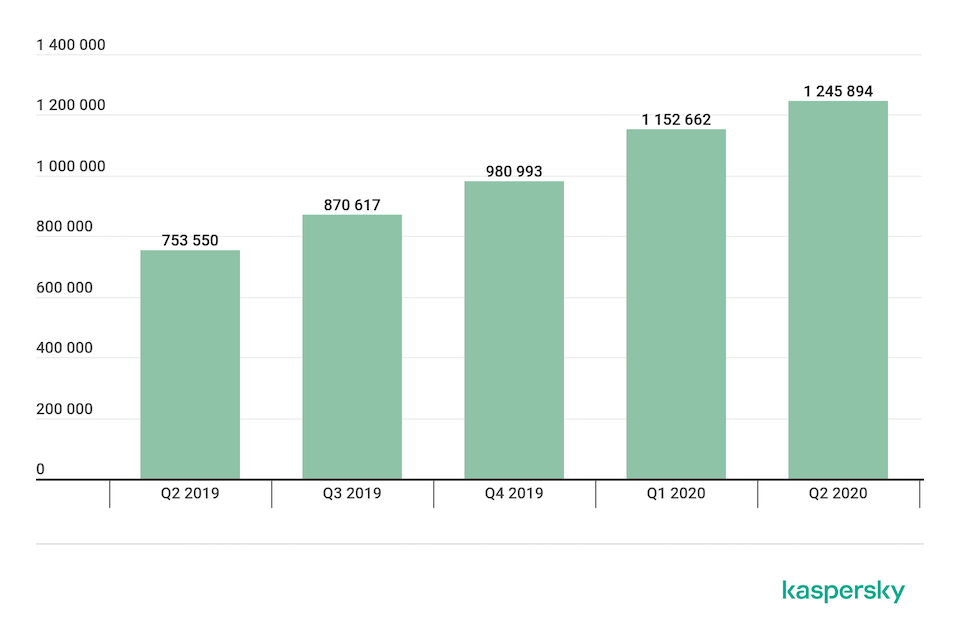

The discussion also gives specific attention to malicious and risky behaviours that can be exhibited by apps, with overall evidence suggesting that the problem is on the increase and that Android users are the most exposed to the risks. This underlying evidence includes clear examples of apps that are overtly malicious (representing traditional malware categories such as viruses, worms, Trojans and spyware), as well as apps whose behaviour (while not directly hostile) could be regarded as risky through factors such as requesting excessive permissions or leading to data leakage.

A series of recommendations are made in relation to operating app stores, guiding developers and supporting users:

- Ensuring a more credible and consistent level of information to app store users and app developers regarding security and privacy provisions and expectations, supported by mechanisms to enable more informed decisions and control over the apps that are installed.

- Increasing the opportunity and expectation for app developers to learn and adopt appropriate security- and privacy-aware practices.

- Increasing the efforts to raise user awareness of app security and privacy risks, and supporting this with better communication of the issues within apps and app stores.

This in turn could lead to initiatives across the app store ecosystem more widely, including a community-adopted code of practice in order to support responsible app development and resulting confidence among users.

The summary is supported by extensive reference to sources, encompassing both relevant research and findings, and recent developments in the app sector. It should be noted that the review was completed in early 2021 and therefore further research could have been published that may further inform this topic.

1. Introduction

This literature review was conducted as part of the Department for Digital, Culture, Media & Sport’s (DCMS) ongoing work to improve the cyber security of digital technologies used by UK consumers. Applications and app stores were identified as a priority area for this work because vulnerabilities, such as malicious apps being allowed on platforms, continue to be found, putting consumers’ data and security at risk.

The objectives of the literature review are:

- To set out recommendations for improving the security of applications (apps) delivered via app stores. This should also include apps that led to security issues but had made it through the review process and were available on an app store.

- To outline common themes and any case studies that may be of particular interest to DCMS’s future work on the cyber security of apps and app stores.

1.1 Scope and focus

The review considers issues from the perspective of three distinct stakeholder groups:

- The app user (i.e. the consumer from the app stores)

- The developer (i.e. the individual or development house responsible for authoring the app content)

- The app store provider (i.e. the owner/controller of the channel by which apps are made available)

There are related security considerations in the context of each group. In summary, and in an ideal situation, the user has an interest in using trusted apps, and the developer and store provider have an interest in supporting this. However, the strength and extent of this interest amongst each of the parties serves to inform the opportunity for circumvention and misuse. Related to this, there is of course a further perspective to be considered in terms of ‘attackers’, who seek to use apps as a means of conducting malicious activity (e.g. data or financial theft). The most worrisome context is when the attacker is also the developer[footnote 1], and is thereby seeking to use the app store as the means by which to reach users/devices/data as the intended target.

It should also be noted that any review of security-related aspects in apps and app stores quickly becomes somewhat entangled with some related issues around privacy. While the two topics are not synonymous, there is a definite overlap in the context of app-focused security because there is commonly a link to privacy aspects. For example, malicious or risky app behaviour typically has a privacy impact in terms of affecting (e.g. stealing, exposing and/or disrupting) the user’s personal data. Similarly, the good practice that ought to be followed by developers and app stores in terms of maintaining security often has a privacy-related rationale to underpin it (i.e. the app needs to be appropriately secure in order to safeguard the user’s data). Alongside this, the user perception of the overall suitability and safety of a given app is typically based upon a personal interpretation that takes a somewhat homogenised view of the two issues. In view of these overlaps and intersections, the review also encompasses a variety of topics and sources that include the privacy perspective as a related theme.

1.2 Structure

The report is structured thematically, with attention given to aspects relating to each of the stakeholder groups, as well as specific consideration of malicious and risky app behaviours.

Section 2 opens the main discussion with scene-setting on the notion of the app store as a channel for software distribution and introducing the range of different stores that are present in the overall market.

Section 3 is focused upon the user perspective, beginning with issues around user trust and concerns in relation to app usage, and then looking at how users are supported (in terms of provision of privacy and security related information) in different app store contexts. Brief attention is also given to how users can essentially support themselves via community insights through provision and use of app ratings.

The discussion in section 4 is then directed toward the developer perspective. This specifically highlights the need for related guidance that addresses the developer audience, followed by consideration of the extent to which such guidance and support is provided to them via the various app store platforms.

Even with effective developer guidance, there is potential for the discovery of vulnerabilities (in both apps and host platforms) that could lead to resultant exploitation by attackers and malware. With this in mind, section 5 briefly examines the mechanisms that providers have in place to enable vulnerability reporting and the degree to which this may be incentivised.

Following on from vulnerabilities that may potentially be exploited, the penultimate section presents an overview of actual threats that can be faced in terms of app-based malware and other risky behaviour. This considers the nature of the threats (including illustrative examples), as well as the facilities provided to support detection at both the app store and endpoint levels.

The report concludes with a series of recommendations in relation to app providers, developers and users, as well as a number of cross-cutting ideas that would address multiple stakeholder groups.

1.3 Methodology

The review was primarily focused upon apps and app stores in the context of smartphone and tablet devices (with the interpretation of ‘app stores’ including both official app stores from Apple, Google, and others such as Huawei, as well as third-party stores). It was intended to encompass app-related threats and the measures used by app stores in order to minimise risk. It should be noted that the study did not consider what other governments are already doing in this space in terms of existing or emerging policies and recommendations.

The review was conducted online, and the primary search terms used to constrain the search were ‘app’ and ‘app store’, as well as platform-specific terms such as ‘Android’ and ‘iOS’/‘iPadOS’. These were combined with secondary terms to seek examples of issues and concerns:

- Security issues and concerns, e.g.: ‘threat’, ‘risk’, ‘breach’, ‘hack’, ‘malicious’, ‘malware’, ‘Trojan’, ‘ransomware’, ‘spyware’, ‘worm’, ‘virus’, ‘vulnerability’

- Security measures and safeguards, e.g.: ‘security’, ‘protection’, ‘privacy’, ‘data privacy’, ‘verification’

Further tertiary terms were then used to locate literature relating to existing guidance and approaches:

- ‘framework’, ‘guidelines’, ‘recommendations’, ‘app development’, ‘regulation’

- ‘review’, ‘security checks’, ‘permissions’ to identify literature regarding the vetting process of applications

As such, examples of resulting search strings included combinations such as ‘“app store” Security’, ‘iOS security breach’, ‘Android malware’ and ‘app security guidelines’. It was not intended (or necessary) to use all combinations of terms exhaustively, as many permutations tended to lead to the same sources in practice.
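As a rough illustration of how such search strings might be assembled, the sketch below pairs a small subset of the primary and secondary terms listed above. The function name `build_search_strings` and the choice of terms are assumptions made for illustration only; the review does not describe any tooling for this step.

```python
from itertools import product

# A subset of the primary and secondary terms quoted in the methodology.
PRIMARY_TERMS = ["app store", "Android", "iOS"]
SECONDARY_TERMS = ["security", "malware", "vulnerability"]


def build_search_strings(primary, secondary):
    """Pair every primary term with every secondary term.

    Multi-word terms are wrapped in double quotes so that a search
    engine treats them as a single phrase.
    """
    def quote(term):
        return f'"{term}"' if " " in term else term

    return [f"{quote(p)} {quote(s)}" for p, s in product(primary, secondary)]


search_strings = build_search_strings(PRIMARY_TERMS, SECONDARY_TERMS)
```

With three terms in each list this yields nine candidate strings. As the text notes, running every permutation is unnecessary in practice, since many of them lead to the same sources.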

The searches were applied in a number of contexts:

- General web search – in addition to reports and studies, this route will also deliver results relating to media coverage of reported incidents (which can potentially be used as case examples);

- Academic search tools and publisher repositories (e.g. Google Scholar, IEEE Xplore, ScienceDirect, and Scopus)

Depending upon the extent to which they were already represented within the general web search findings, additional searches were also targeted toward materials offered by security vendors and analysts, and the App Store providers themselves.

In order to ensure relevance and currency, the review had a cut-off at 2016. Older sources were not included unless they were considered particularly relevant, or influential in guiding later developments.

It should be noted that the scope of the study (and length constraints of the report) did not allow for an exhaustive assessment of all app store environments, and there was a lack of existing data that specifically examines recommendations or makes assessments on app stores’ policies. There were similar practical limits on the extent to which the selected stores could be assessed (e.g. due to the extent of information openly available on public sites). However, the resulting sample is nonetheless considered sufficient to highlight a range of approaches and some key practical differences between them.

As mentioned, the focus was intentionally directed toward app stores relating to smartphones and tablets. It is fully acknowledged that app stores also exist in other contexts, but most of the literature retrieved at the time of the review was clearly framed from the perspective of mobile platforms. As such, it was considered appropriate to place the focus here, with these stores being judged to be illustrative of the primary examples of practice.

In terms of quality assurance, the approach was confirmed at several stages, with interim checkpoints and review of materials by Ipsos MORI, DCMS and NCSC representatives at key points during the process.

2. Background

The use of app stores is increasingly becoming the standard route for installing and maintaining software across multiple device types (including personal computers, mobile devices, and other connected technologies such as smart TVs and watches). In addition to enabling a simplified means of locating, purchasing and downloading software, a suitably managed app store has the potential to be regarded as a trusted channel (as opposed to other routes by which users may install apps onto their devices, such as sideloading[footnote 2]).

This report specifically considers apps and app stores for smartphone and tablet devices, which essentially places the focus upon Android and iOS/iPadOS platforms[footnote 3]. While there was previously a dedicated app store for Windows Mobile devices, support for Windows 10 Mobile ended in January 2020, and as of October 2020 it accounted for just 0.03% of the worldwide market share for mobile operating systems[footnote 4]. Additionally, while other examples of app stores exist for other device types (e.g. the Windows Store and Mac App Store for Windows and macOS devices), they are out of scope for this particular study as this would introduce too many avenues to explore within a single investigation. However, further studies may be conducted later as part of DCMS’s focus on building a wider evidence base.

2.1 Operating systems platforms

Before looking at the specifics of the apps and app stores, it is relevant to consider the extent to which devices in the different ecosystems are using the latest versions of the operating systems. The latest versions are expected to be the most advanced and refined in terms of security provisions, and the extent to which devices are using these versions forms a backdrop against which malicious apps might find vulnerable targets.

Given that the combined worldwide market share of Android and iOS now accounts for almost 99% of smartphone devices, it is most relevant to consider the position in these two contexts[footnote 5]. Historically there has been a notable difference in the stance each takes to user privacy and security – in summary the difference has been characterised as Apple aiming for security ‘whatever it takes’ versus Google aiming for things to be ‘secure enough’[footnote 6]. In saying this, it should also be noted that they are positioned differently in the first place, as while both have full control over their own operating systems, Apple also has full control over where the operating system is used (as the sole manufacturer of devices that use it). By contrast, Google (while also producing its own range of devices) licenses Android to a host of other manufacturers, who can then deploy and maintain it in endpoint devices in different ways (the fact that Android is open source means that OEMs can operate independently of Google if they choose to).

It is recognised that Apple’s control of the entire platform (i.e. the device and operating system) means that the majority of users receive and install software updates and security patches. Figures from Apple (based on data from June 2020) report that 81% of active iOS devices have the latest version of the operating system installed, rising to 92% if considering devices introduced in the last four years[footnote 7]. Only 6% of devices were reported as using a version of the OS that was more than one generation older than the current one.

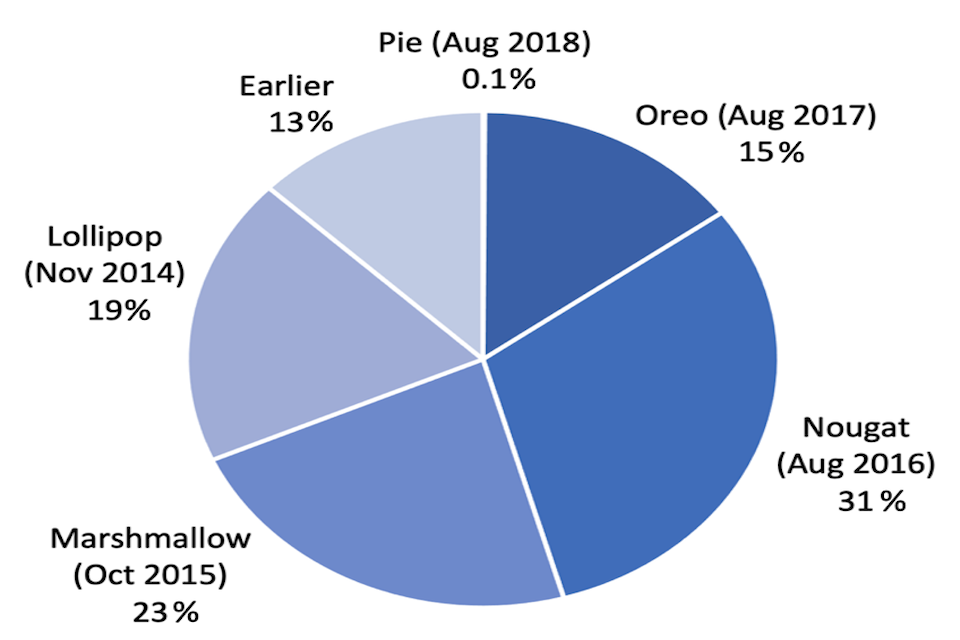

The situation with Android is more complicated, primarily because the device and OS can come from different sources, and manufacturers vary in their pace of adopting new versions, and whether they roll out updates for older devices. Google no longer publishes details of the percentage of devices running different Android versions, but the situation has historically been significantly more fragmented than with iOS. For example, in September 2018 the picture was as shown in Figure 1[footnote 8]. This was a month after the release of Android 9 (Pie), and so its install base accounted for only 0.1% of devices. However, even the prior version (Oreo), which had been around for over a year, was only on 15% of devices. The remaining devices were all running earlier versions, with 13% running OS versions that were over four years old.

Figure 1 : Fragmentation of the Android versions in use in Sept. 2018

The significance of this is that iOS apps are generally running on a much stronger foundation, whereas Android users can find themselves operating across a wider diversity of versions. From a positive perspective, Google’s approach has changed in more recent years, moving the focus of security features from Android to sit within Google Play Services. The consequence is that updates and enhancements can be issued regardless of the OS version on the user’s device[footnote 3]. Nonetheless, the more diverse environment still has potential implications from the perspective of the persistence of known vulnerabilities, which may in turn be exploited by malware (a theme explored in section 6).

2.2 Types of app store

In both of the target operating systems, there are top-level distinctions to be drawn between official and third-party app stores. The key difference is that the official app stores are controlled by the operating system providers (i.e. Apple in the case of iOS and Google for Android), whereas the third-party stores (such as Aptoide, Cydia and GetJar) are not[footnote 9]. This can be seen as advantageous by app developers and users. The former may find themselves able to launch apps with less restriction than through the official stores (which can include the release of apps that official stores would not accept on the grounds of content or functionality), as well as having advantages in terms of visibility and economics (e.g. their apps may receive more prominence or exposure, or they may find the app store’s cost model is more to their liking[footnote 10]). They may also find that third-party stores offer a faster route to market, because they lack the rigorous review processes applied by the official stores. Similarly, users may consider that third-party stores broaden their choice of apps or make them available on better terms. Notably, for users in some countries, this can include whether the official store is available to them at all (e.g. at the time of writing Google’s Play Store is not available in China[footnote 11], which means that the market is available exclusively to third-party stores[footnote 12]).

There are also cases where, even though the official store is available, individual apps are not equally available in all countries – in some cases because the rollout is being phased, in others because the app is not planned to be released there at all. If a given app is particularly popular, this may lead users to seek it out via other routes. A good example here is the popular game Fortnite, which was removed from both the Apple App Store and Google Play Store as a result of its developer, Epic Games, introducing a payment mechanism that bypassed the normal revenue sharing with the host app store. As a result, would-be players were forced to find other stores that were still hosting the game[footnote 13]. In other cases, users may look to third-party stores to find free versions of what would normally be paid-for apps. In both of these scenarios, the resulting apps will have been placed in the third-party store in an unofficial (and unauthorised) manner, and carry the risk that they may also have been tampered with in order to introduce unexpected and unwanted functionality (which links to the topic of malware, discussed later in this report).

In summary, third-party stores can be considered to offer both the developer and the user a marketplace that is more accessible and offers more freedom. At the same time, however, the same openness and lack of restriction can introduce issues from the perspective of security and privacy.

To avoid potential ambiguity, there is also a distinction to be made at the level of the apps themselves. Third-party apps are any that come from developers other than the manufacturer, and so third-party apps may be offered via the official app stores. As such, if we wanted to generate a broad hierarchy of what we might expect to trust based on the scrutiny that the manufacturer would be expected to have provided, then it would be as follows:

1. First-party apps, pre-installed on the device (also known as native apps)

2. First-party apps via official app store

3. Third-party apps via official app store

4. Third-party apps via third-party app store

This is in no way intended to suggest that all third-party apps from third-party stores are inherently risky, but this is the one category in which the parent operating system provider will have had no input or oversight into what is being offered.
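The hierarchy above can be read as a lookup on two attributes: who authored the app, and the channel through which it reached the device. The following is a minimal sketch of that reading. The names `Author`, `Channel` and `trust_rank` are hypothetical and introduced purely for illustration, and the ranks only restate the ordering of the list; they are not a measure of actual risk.

```python
from enum import Enum


class Author(Enum):
    FIRST_PARTY = "first-party"
    THIRD_PARTY = "third-party"


class Channel(Enum):
    PREINSTALLED = "pre-installed on the device"
    OFFICIAL_STORE = "official app store"
    THIRD_PARTY_STORE = "third-party app store"


# Rank 1 = most expected manufacturer scrutiny, rank 4 = least.
TRUST_RANK = {
    (Author.FIRST_PARTY, Channel.PREINSTALLED): 1,
    (Author.FIRST_PARTY, Channel.OFFICIAL_STORE): 2,
    (Author.THIRD_PARTY, Channel.OFFICIAL_STORE): 3,
    (Author.THIRD_PARTY, Channel.THIRD_PARTY_STORE): 4,
}


def trust_rank(author, channel):
    """Return the position in the hierarchy, or None if the
    combination does not appear in the list above."""
    return TRUST_RANK.get((author, channel))
```

For example, `trust_rank(Author.THIRD_PARTY, Channel.THIRD_PARTY_STORE)` returns 4, the one category in which the operating system provider has had no oversight.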

There are also potential differences in the provenance of third-party stores, with some coming from other ‘big name’ sources (e.g. device manufacturers offer app stores to support their range of devices, which users of that brand would be likely to place on the same trust footing as official stores), while others are entirely independent players.

2.3 Android app stores

App Stores for Android devices are available from a variety of providers, including:

● Google Play Store, the app store from Google, the provider of the Android OS.

● Device vendor / manufacturer app stores that are preinstalled on their own devices. Examples here are Samsung’s Galaxy Store and Huawei’s AppGallery[footnote 14].

● Third-party app stores, where there are numerous stores from a range of providers (examples that are considered later in the report are Aptoide, F-Droid and GetJar).

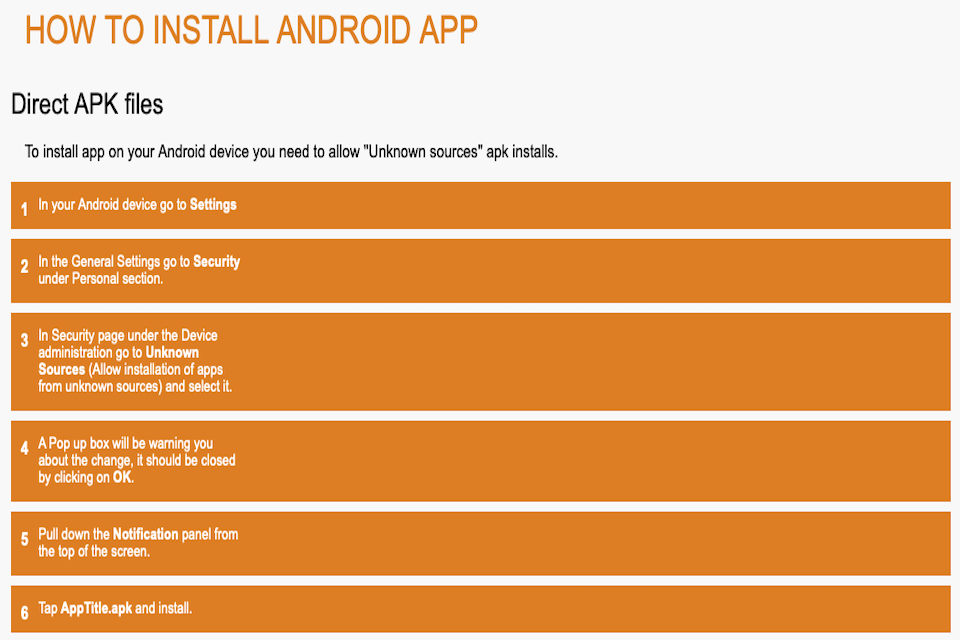

While the apps to support the official and/or manufacturer stores come preinstalled, to get access to the third-party stores the user must first install the related store’s app onto their device. This in itself can present them with considerations from the security perspective. Looking at the example in Figure 2 (from the Lithuanian-originated GetJar app store[footnote 15]), the first thing the user is guided to do (in order to install the app that gives access to the app store) is to go into their security settings and permit the device to allow the installation of apps from unknown sources. As such, it does not send a positive security message from the outset. Nonetheless, while it remains the official store, Google does not seek to prevent access to such rival routes, and third-party stores are an accepted and expected part of the overall Android app ecosystem.

Figure 2 : Example of instructions for installing an app to allow access to a third-party store

2.4 iOS app stores

The situation for Apple devices is notably different, with Apple’s own App Store being the only authorised source (and pre-installed on the related devices). By controlling the channel and maintaining a so-called ‘walled garden’ approach, Apple is then able to exercise control over both who develops for their platform and the apps that are released on it. Developers are required to be registered in order to publish their apps through the App Store, and this status can be revoked if it is being misused in order to produce apps that do not meet the required standards in terms of content and behaviour. Adherence to these standards is monitored and enforced through a formal review process for the apps themselves, which they must pass before being admitted to the App Store and made available to users.

However, while the official App Store is consequently the only app installation route for the vast majority of iOS users, there are means by which apps can be installed by other routes. Specifically, apps can only be installed from other sources if:

● the device is jailbroken[footnote 16] in order to bypass Apple’s controls, which violates the end-user licence agreement and compromises the security of the platform, leaving the user more exposed to vulnerabilities.

● the user installs a configuration profile[footnote 17] which then enables apps to be downloaded from an associated App Store. There are a multitude of iOS app installers that offer a means of getting content onto devices (examples of which include AppValley, Asterix, CokerNutX, iOSEmus, iPA4iOS and TutuApp), all of which initially require the installation of a configuration profile to open the door to their content.

The latter is essentially subverting a legitimate route that Apple offers to enable apps to be distributed outside of the App Store, and thereby outside of the associated review process. Specifically, the Apple Developer Enterprise Program enables in-house apps to be developed and deployed by large organisations for internal use[footnote 18]. There are various eligibility criteria (including that the resulting apps are only available for internal use by employees), and achieving membership is contingent upon an application and verification process, plus payment of an annual membership fee of 299 USD (or local equivalent). Apps can then be distributed via the organisation’s internal systems or via a Mobile Device Management (MDM) solution. So, as a consequence, the developer/organisation to whom the licence is granted is ‘reviewed’ (via Apple’s verification interview and continuous evaluation process), but their app software is not. However, this process can still lead to revocation of the developer’s certificate if it is found to be misused (as was the case with Facebook in January 2019, when it was discovered to have publicly released a research app developed under its Enterprise licence[footnote 19]).

Unfortunately, in spite of the safeguards that appear to be in place via verification and evaluation of developers, the enterprise app route has proven to be a channel for malicious activity. For example, findings from Check Point have shown that devices can end up with apps from multiple sources with varying levels of reputation. Specifically, they outline an investigation of ~5,000 devices in a Fortune 100 company, which revealed a total of 318 unique enterprise apps having been installed, from 116 unique enterprise developer certificates. Of these, only 11 certificates were linked to ‘whitelisted’ developers with positive track records, while 33 had no prior reputation and 2 certificates were compromised. The study concluded that “Non-jailbroken devices are exposed to attacks by a malicious actor using bogus enterprise apps”, with the end-user’s judgement over whether to install the apps then being the main element standing between them and exposure[footnote 20].
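To put the Check Point figures in proportion, the short calculation below expresses each quoted certificate category as a share of the 116 certificates found. It uses only the numbers quoted above. The summary does not say how the remaining certificates were classified, so they are simply counted as a remainder, and the variable names are illustrative.

```python
# Figures quoted from the Check Point investigation summarised above.
UNIQUE_APPS = 318
TOTAL_CERTIFICATES = 116

# Certificate categories for which a count is given in the text.
certificates = {
    "whitelisted": 11,
    "no prior reputation": 33,
    "compromised": 2,
}


def share(count, total=TOTAL_CERTIFICATES):
    """Percentage of all certificates, rounded to one decimal place."""
    return round(100 * count / total, 1)


shares = {label: share(count) for label, count in certificates.items()}

# Certificates whose classification is not described in the summary.
unclassified = TOTAL_CERTIFICATES - sum(certificates.values())

# Average number of unique enterprise apps per certificate.
apps_per_certificate = round(UNIQUE_APPS / TOTAL_CERTIFICATES, 1)
```

On these numbers, fewer than one in ten certificates belonged to developers with a positive track record.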

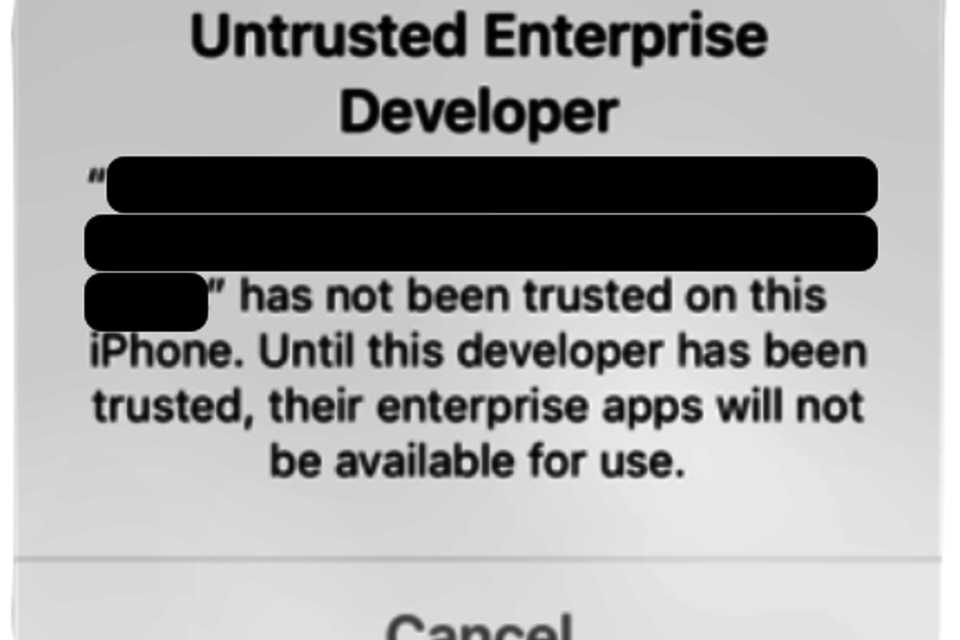

Getting the app installer onto the device creates a similar situation to that outlined with Android, as the user is guided to instruct their device to accept content from an untrusted source. Taking the installation guidance for TutuApp as an example, users are required to download and install a configuration profile, which enables the TutuApp installer app to be installed on their device[footnote 21]. However, actually running it leads to a dialogue such as Figure 3, requiring the user to follow further steps to trust the app in their device/profile settings.

Figure 3: iOS dialogue asking the user to trust an unknown developer

2.5 Examples explored in this study

Given the myriad app stores that can be identified in practice (totalling over 300 at the time of writing[footnote 22]), it is not feasible for the report to consider each and every case. As such, the discussion is focused on a comparison between the official stores for each of the target operating systems (i.e., the Apple App Store and the Google Play Store), alongside suitably illustrative third-party examples (e.g., Aptoide and GetJar as larger third-party Android stores, each with hundreds of thousands of available apps - and F-Droid as an example of a smaller store, with a few thousand apps on offer). In addition, the Huawei AppGallery is included as an illustration of a manufacturer-specific app store, and is considered to be a particularly relevant example given its potential role as a substitute for the Google Play Store in the Chinese market.

3. The user perspective

3.1 User attitudes towards apps

The ability to trust apps, or concern about not being able to do so, are factors of relevance for many users. However, there often tend to be contradictions in terms of users’ claimed concerns and their actual behaviour. For example, when directly asked to consider issues around privacy, users commonly cite concerns about granting access permissions to apps as being an influential factor (with findings suggesting that this aspect has twice as much predictive value in explaining privacy concerns as other factors, including prior experiences and perceived control over their information[footnote 23]). In practice, however, it has been long recognised that users may pay little attention to the permissions that apps request, and may have difficulty understanding what is being requested if they try to do so.

Further survey findings from 1,300 adult smartphone users (across six countries) conducted for Thales in mid-2016 revealed that security and privacy concerns relating to apps and malware collectively accounted for a fifth of the respondents’ greatest fears about the smartphone and apps, as listed in Table 1 (noting that a further 37.3% collectively expressed concerns around fraud, phishing and hacking of online bank accounts)[footnote 24]. As an aside, the single biggest fear (accounting for almost a third of overall responses) was loss of data if the smartphone was lost or stolen, which suggests a need to consider the oft-overlooked safeguard of backup.

| I worry about… | % concerned |
|---|---|
| App obtains personal information from permissions I gave it | 6.5% |
| Virus infecting my phone and all my apps | 5.8% |
| Getting malware when connecting to free Wi-Fi | 4.3% |
| Apps collecting personal data from another app | 1.9% |
| Ransomware attacks locking access to my phone | 1.2% |
| Total app and malware-related concerns | 19.7% |

The National Cyber Security Alliance also indicate variations in how users claim to safeguard themselves when downloading apps[footnote 25]. From a survey of 1,000 users (equally split between young adults aged 18-34, and older respondents aged 50-75), the overall findings suggested that 42% do not check what information an app collects before downloading it, and the same percentage are prepared to download from untrusted sources. There were, however, some notable differences between users based on age. The younger group was found to be more likely to check on what information an app collects (with almost three quarters claiming to do so, compared to less than half of the older group), whereas the older respondents were more inclined to restrict their downloads to trusted sources (with two thirds indicating this, compared to slightly less than half of young adults).

Looking from the privacy perspective, users have historically expressed concerns about the monitoring capabilities of mobile apps. For example, according to findings from Kaspersky in 2017, 61% of users were uncomfortable with sharing location information with apps, and 56% were concerned that someone could see their device activities[footnote 26]. It will be interesting to reflect in the coming years whether attitudes to location sharing have changed as a result of the broader acceptance of such features through COVID-19 contact tracing apps.

3.2 App store information for users

When considering efforts to cultivate a trusted environment or ecosystem, it is relevant to examine what the app stores say to users about privacy and security in relation to the app store itself.

3.2.1 Apple iOS app store

The information that Apple presents to users under the ‘Principles and Practices’ merits direct inclusion here, as it illustrates how the issues of trust, security and privacy are placed front and centre in the marketing of the App Store[footnote 27]:

“We created the App Store with two goals in mind: that it be a safe and trusted place for customers to discover and download apps, and a great business opportunity for all developers. We take responsibility for ensuring that apps are held to a high standard of privacy, security and content because nothing is more important than maintaining the trust of our users.…It’s our store. And we take responsibility for everything on it”

3.2.2 Google Play store

The Play Store website does not make any cognate claims in relation to the security or safety of the store environment or the apps within it. The closest related information is a link to Google’s overall privacy policy. This is presented in fairly readable terms, and ought to give users a reasonable sense of what Google collects about them and their use of its services. While little of the content is specifically limited to apps, there are some related points, such as the following[footnote 28]:

“We collect information about the apps, browsers, and devices you use to access Google services, which helps us provide features like automatic product updates and dimming your screen if your battery runs low. The information we collect includes unique identifiers, browser type and settings, device type and settings, operating system, mobile network information including carrier name and phone number, and application version number. We also collect information about the interaction of your apps, browsers, and devices with our services, including IP address, crash reports, system activity, and the date, time, and referrer URL of your request.”

As such, while this is providing a factual statement around the collection and use of information, it is not providing a direct assurance of privacy and security in the manner that Apple’s text attempts to do.

3.2.3 Huawei AppGallery

As with the Play Store, the AppGallery website does not have any general customer-facing information to describe the stance on security and protection, and again the only related reference is the privacy policy link at the foot of the page. However, while the Play Store had a fairly readable text, it is questionable whether the majority of AppGallery users would be able to meaningfully interpret much of what is being said. For example, in describing ‘What Data Do We Collect About you’ it is stated that the AppGallery service collects and processes a variety of information, relating to the device, network, browser and service usage[footnote 29]. The description of what is collected uses a variety of acronyms and technical terms that may be unfamiliar to many users (e.g. the average user is unlikely to know the meaning of SN or UDID, or understand what is meant by their browser’s ‘user agent’). Moreover, they are also likely to remain uninformed in terms of understanding how the data is used. Although there is a specific section on data use, it is couched in a number of broad principles framed around fulfilling ‘contractual obligations’ and supporting ‘legitimate interests’.

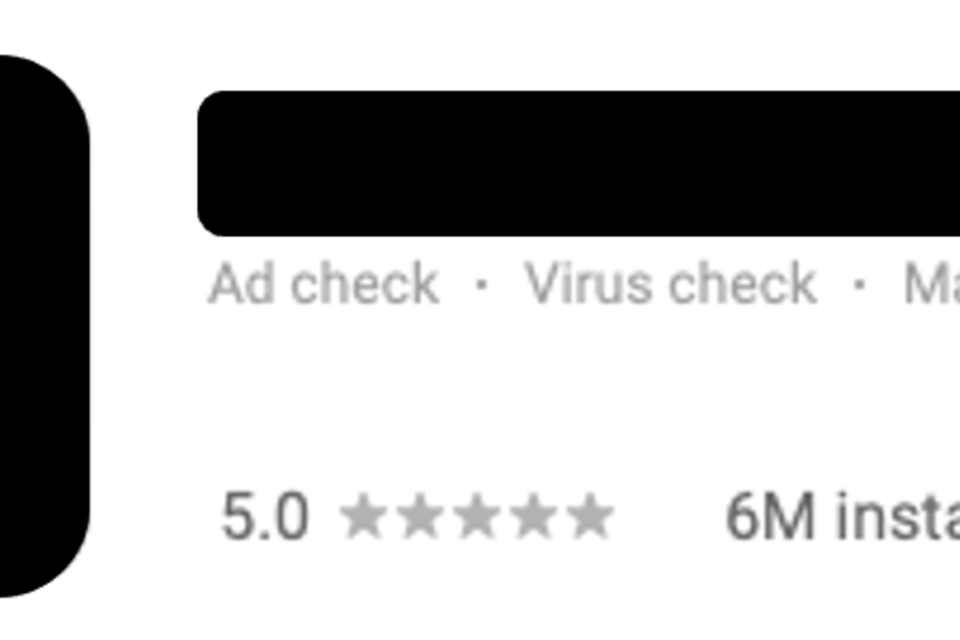

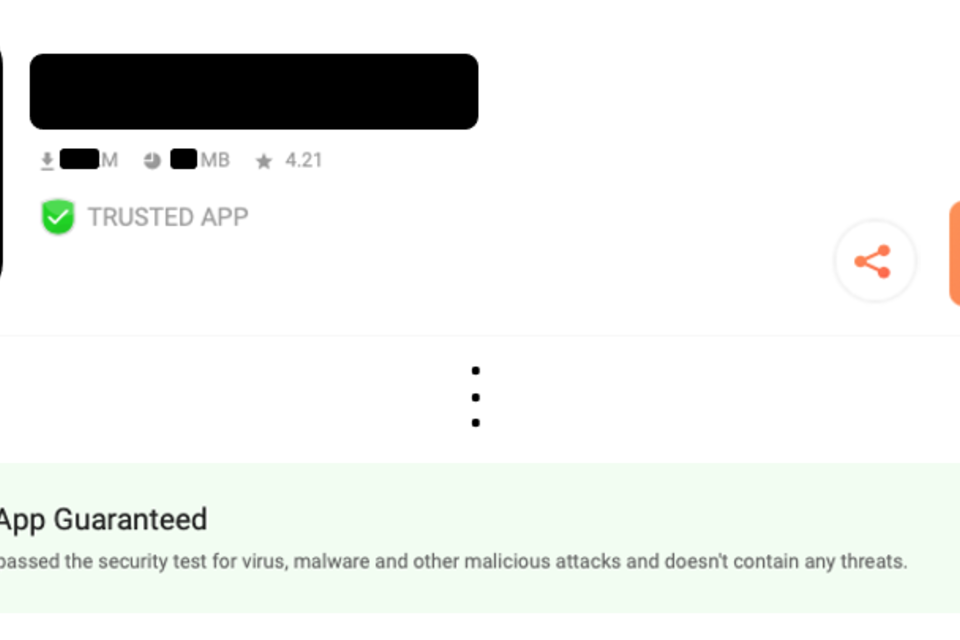

Nonetheless, as Figure 4 illustrates, some relevant app-specific information is presented to users within the AppGallery, with each app able to carry an indicator of the checks that have been passed. Interestingly, however, the meaning of these checks (and the basis for awarding the indicator that an app has passed) is not clearly explained, and online searches do not reveal any extensive explanation of them[footnote 30].

Figure 4: Huawei AppGallery app check indicators

3.2.4 Third-party stores

The third-party stores generally tend to present less in terms of any user-facing information about security. Several simply limit information to what the website itself is doing, with no reference or assurance regarding apps that may be downloaded from the associated store. However, some do go a little further, and two related examples are presented below.

The first case, with security getting a fairly prominent mention, is the Android app store Aptoide. Looking at the website, there is certainly a bold claim to be found under the ‘Why Aptoide?’ link, stating that security is the top priority and claiming that recent studies prove it to be the “safest Android app store”[footnote 31]. However, in the absence of any related links or further information it is not clear which studies this is referring to or indeed how recent they are. Elsewhere on the site, the About page makes a similarly bold claim that the store has “one of the best malware detection systems in the market”[footnote 32], but this is again being stated without linkage to further supporting details or explanation.

Further investigation reveals that the store uses a malware scanning platform that it calls Aptoide Sentinel[footnote 33], and apps that pass through this are afforded the use of a Trusted App badge and an accompanying information banner to confirm that they have been scanned (see the anonymised app example in Figure 5, noting that in practice a description and screenshots of the app are presented in the dotted area). This arguably raises the question of whether apps should be present in the store if they have not passed the scanning.

Figure 5 : Trusted app indicators in the Aptoide store

A second example is F-Droid, a repository of free and open source software for Android. In this case, security is not being in any way headlined, but it is at least possible to find some reference to it. However, the following text from the Terms presented on the website makes it immediately apparent that the store is providing somewhat less assurance than the Play Store:

“Although every effort is made to ensure that everything in the repository is safe to install, you use it AT YOUR OWN RISK. Wherever possible, applications in the repository are built from source, and that source code is checked for potential security or privacy issues. This checking is far from exhaustive though, and there are no guarantees”[footnote 34].

Meanwhile, looking at other sources, such as Cydia and GetJar, there is no information that directly speaks to the security perspective of the stores or the apps to be found within them.

3.3 App security and privacy information for users

Research suggests that there are often contradictions between what users claim to want and their actual decisions and behaviour. While many express concern about protecting their privacy, the reality is that their decision over whether to install an app is more typically led by factors of app functionality, design and cost[footnote 35]. These aspects consequently tend to outweigh privacy concerns. However, a potential limitation here is that users may not be receiving sufficient information to enable them to place privacy on an equal footing in their decision-making. Within a typical app store, they can see a general description of the app, images of how it looks, and the price. However, the privacy angle may be less clearly represented, and so another relevant consideration is the extent to which information is (or could be) conveyed to users to enable them to take informed decisions about whether they wish to use a given app (e.g. privacy ratings, information on app behaviour), and how they can take steps to protect themselves. In addition to whether such information is provided, it is also relevant to consider where it is placed and the effort that may be involved for users to find and interpret it, and varying approaches can be seen amongst the different stores.

Apple has made a particular virtue of the security and privacy of its product offer, explicitly marketing the operating system and app store environment as offering the user a level of protection that they may not receive elsewhere. The prominence of their stance has included the ‘Privacy. That’s iPhone’ advertising campaign[footnote 36], and a 13-storey billboard poster at the CES 2019 technology exhibition in Las Vegas (at which the company otherwise had no official presence), proclaiming that “What happens on your iPhone, stays on your iPhone”[footnote 37]. Having made such statements so publicly, it is unsurprising to discover that Apple follows up on this with the privacy support offered to users.

In December 2020 Apple introduced new requirements for developers to be explicit about the data collection and usage practices of their apps (these had originally been announced earlier in the year as part of the feature updates accompanying iOS 14[footnote 38]). As a result, they are required to declare information about app data collection and usage via App Store Connect[footnote 39], the service by which they submit and manage apps to the App Store (for both iOS and Mac).

Specifically, the developer is now required to identify and declare all data that they or any third-party partners collect via the app[footnote 40]. They are also required to maintain this information to keep the declaration up to date if any of the details change over time. The need for disclosure becomes optional if any app meets all of the following criteria (i.e. if only a subset apply, then disclosure is still required):

● “The data is not used for tracking purposes, meaning the data is not linked with Third-Party Data for advertising or advertising measurement purposes, or shared with a data broker.

● The data is not used for Third-Party Advertising, your Advertising or Marketing purposes, or for Other Purposes, as those terms are defined in the Tracking section.

● Collection of the data occurs only in infrequent cases that are not part of your app’s primary functionality, and which are optional for the user.

● The data is provided by the user in your app’s interface, it is clear to the user what data is collected, the user’s name or account name is prominently displayed in the submission form alongside the other data elements being submitted, and the user affirmatively chooses to provide the data for collection each time.”

The approach is analogous to the nutritional information labels that are commonly found on food and drink products, and intended to enable users to make more informed decisions about whether they wish to install and trust an app, based upon how it will handle their data. They can expect to see details on all data that the app collects from them and/or accesses from their device, as well as how it is used by the app provider and any third parties. Apple’s website lists the various data types that developers are required to declare to users, as well as the types of data usage that must be disclosed[footnote 41].
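
The "all criteria must apply" rule for optional disclosure quoted above can be sketched as a simple conjunction. This is a minimal illustration only: the `DataTypeUsage` class, its field names and the example values are hypothetical and are not part of App Store Connect or any Apple API.

```python
from dataclasses import dataclass


@dataclass
class DataTypeUsage:
    """Hypothetical summary of how an app uses one type of collected data."""
    used_for_tracking: bool
    used_for_advertising_or_marketing: bool
    collected_only_infrequently_and_optionally: bool
    user_provided_with_clear_affirmative_choice: bool


def disclosure_is_optional(usage: DataTypeUsage) -> bool:
    """Return True only if every one of the four quoted criteria is met."""
    return all([
        not usage.used_for_tracking,
        not usage.used_for_advertising_or_marketing,
        usage.collected_only_infrequently_and_optionally,
        usage.user_provided_with_clear_affirmative_choice,
    ])


# Illustrative cases (assumed values, not real apps).
feedback_form = DataTypeUsage(False, False, True, True)
analytics_id = DataTypeUsage(False, False, False, True)

print(disclosure_is_optional(feedback_form))  # True: all four criteria hold
print(disclosure_is_optional(analytics_id))   # False: only a subset holds
```

In the second case three of the four criteria are satisfied, but disclosure would still be required because the rule is a conjunction rather than a majority test.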

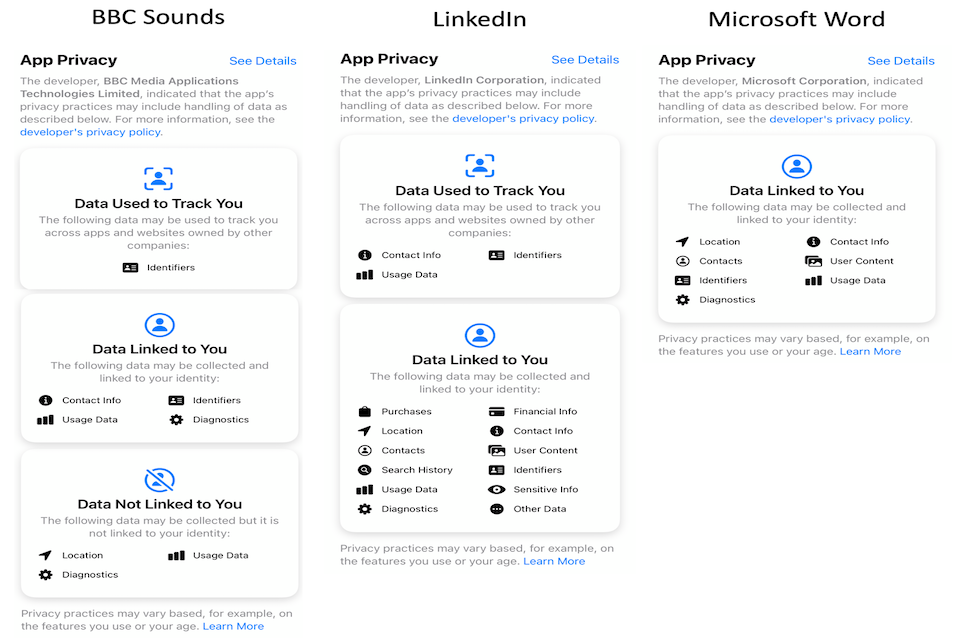

The impact of these new requirements was reflected in the App Store from mid-December 2020, and Figure 6 presents illustrative examples for three different types of app – BBC Sounds (a media streaming service), LinkedIn (a social network) and Microsoft Word (a productivity tool). It is notable that the labels are a top-level indicator of what is collected, and if the user clicks on the labels, they can then explore a further level of detail - revealing the specific sub-types of data used by the app, set against a series of broad usage categories (e.g. including Third-Party Advertising, Product Personalisation, Analytics, and a general option of ‘Other Purposes’)[footnote 42]. While this makes things considerably more transparent than was previously the case (and clearer than the current treatment in other app stores), it may still arguably leave unanswered questions about what the labelling actually means in practice (e.g. this app collects and uses my usage data, but what does it use it for?).

Figure 6 : Examples of privacy labelling in the Apple App Store

It is notable that Apple’s introduction of the privacy labels, and the obligation to use them, was not to the liking of all developers. For example, the developers of the Facebook-owned WhatsApp messaging app complained that the broad terms used to describe the privacy practices may actually serve to “spook users” about what data apps collect and what they do with it. To repeat a reported quote from a WhatsApp spokesperson: “While WhatsApp cannot see people’s messages or precise location, we’re stuck using the same broad labels with apps that do”[footnote 43].

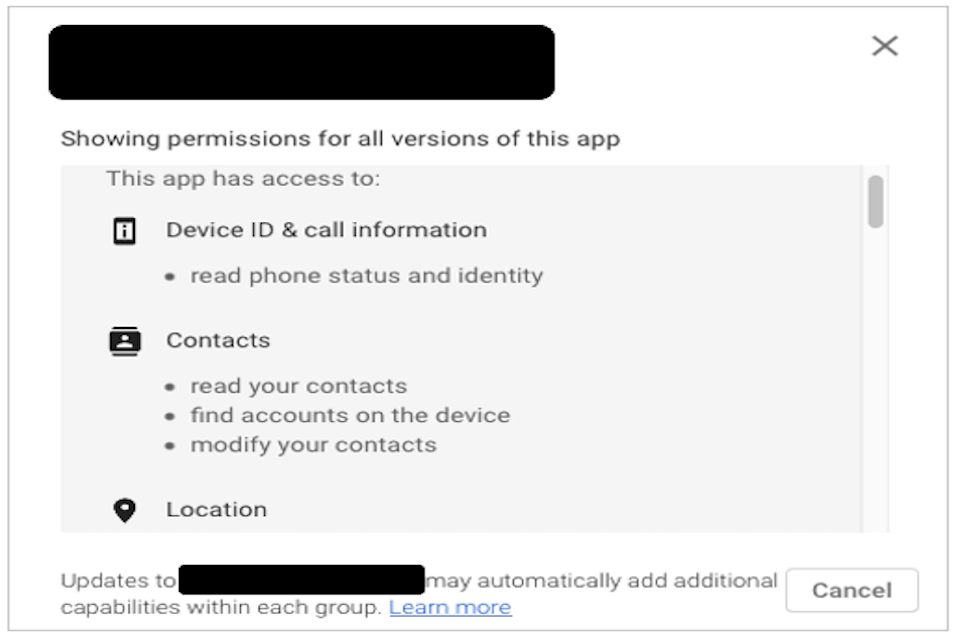

In terms of what other app stores are doing in this space, the Google Play Store allows users to obtain privacy-related information by getting a list of the permissions that an app requires (the link for which is provided amongst a set of ‘additional information’ – including app version, size, content ratings – presented at the bottom of the download page, below user reviews and ratings). Once the link is followed, the permission details are then presented to the user as shown in Figure 7. However, there is a notable difference between this permission-based approach and the privacy-focused representation used by Apple, insofar as while it tells the user what the permissions are for, it arguably does not tell them how they are being used.

Figure 7 : App permission details as presented in the Google Play Store

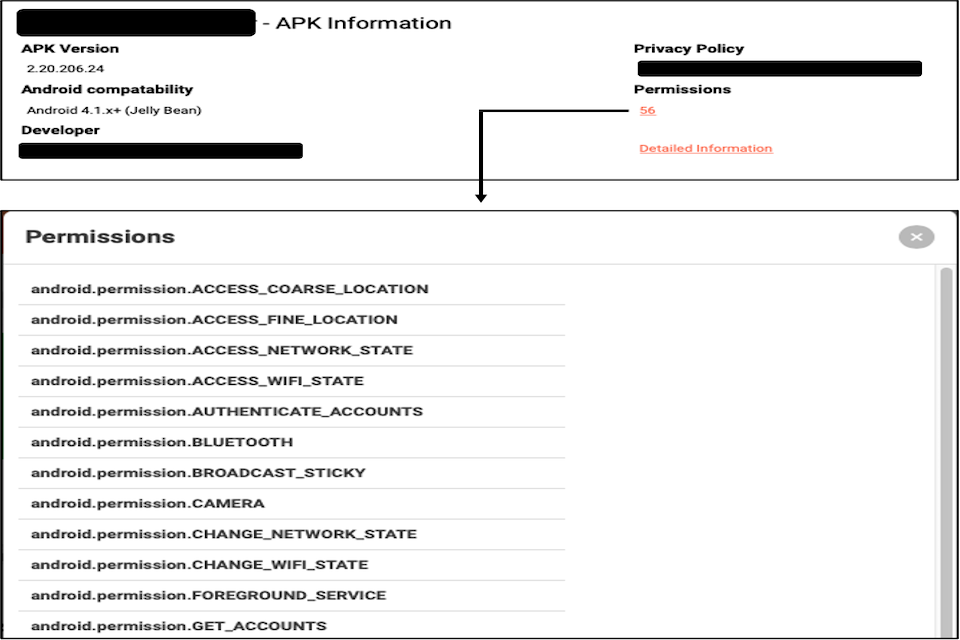

This can be contrasted with the level and style of information presented in the Aptoide store. In this case, potential users are presented with an indication of the number of permissions the app requires and can also obtain an associated list of exactly what they are. However, as illustrated in Figure 8 (which depicts results for the same app as the Play Store example), while this serves to provide the information, the nature of the permissions is not conveyed in a manner that the vast majority of users would find meaningful (noting that the Figure shows only a subset of the 56 permissions that are actually listed for the app in question).

Figure 8 : App permission details as presented in the Aptoide store
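
To illustrate the gap between a raw permission listing and a user-meaningful one, the following minimal sketch translates Android permission identifiers into plain-language descriptions. The identifiers are standard Android permission names, but the descriptions, the `PLAIN_LANGUAGE` table and the `describe_permissions` helper are illustrative assumptions and do not reflect how any of the stores discussed here actually present permissions.

```python
# Hypothetical lookup table: the wording of each description is an assumption.
PLAIN_LANGUAGE = {
    "android.permission.ACCESS_FINE_LOCATION": "See your precise location",
    "android.permission.READ_CONTACTS": "Read your contacts",
    "android.permission.CAMERA": "Take pictures and record video",
    "android.permission.RECORD_AUDIO": "Record audio with the microphone",
}


def describe_permissions(raw_permissions):
    """Map raw permission identifiers to short descriptions for end users."""
    described = []
    for name in raw_permissions:
        label = PLAIN_LANGUAGE.get(name)
        if label is None:
            # Fall back to the short identifier so that nothing is hidden.
            label = f"Other permission ({name.rsplit('.', 1)[-1]})"
        described.append(label)
    return described


for line in describe_permissions([
    "android.permission.CAMERA",
    "android.permission.READ_CONTACTS",
    "android.permission.VIBRATE",
]):
    print(line)
```

Even a small mapping of this kind shows that the same underlying information can be conveyed in a form that users are more likely to interpret correctly.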

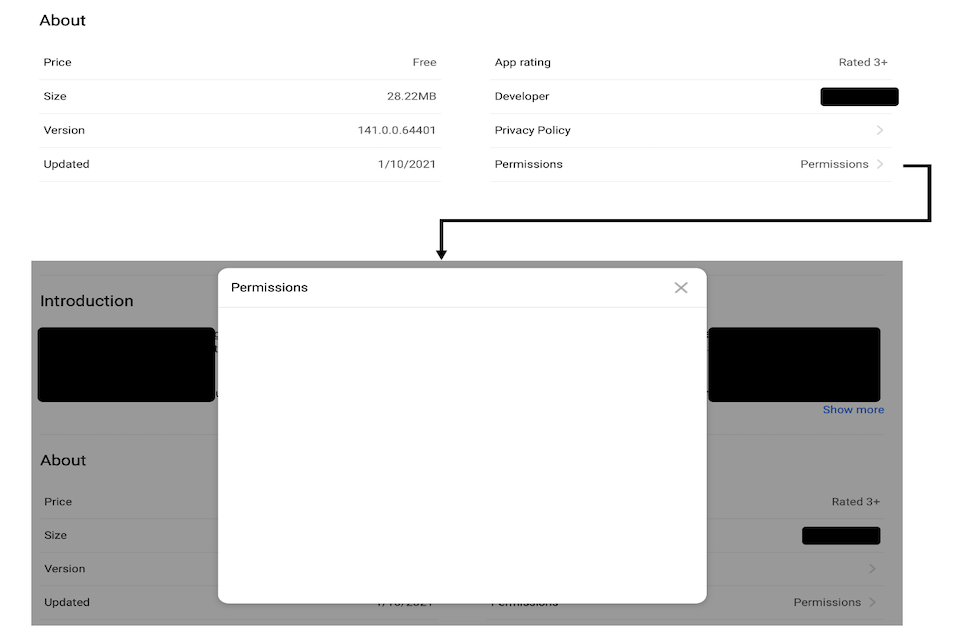

The Huawei AppGallery initially appears to take a similar approach to the other Android examples, in terms of offering users a link from which to view the permissions. However, attempts to test this across multiple devices (including a Huawei phone), using different browsers (Safari, Chrome, Edge and Firefox), and checking multiple apps met with no success in revealing how the permissions are actually conveyed. As shown in Figure 9 (from an attempt to follow the Permissions link from within a desktop browser), all that is shown is an empty window.

Figure 9 : Attempting to view app permissions in the Huawei AppGallery

A further privacy feature announced for iOS 14, but not released at the time of writing, is App Tracking Transparency (ATT)[footnote 44], which makes any tracking across apps and websites opt-in only. Again, the aim is to enable users to be more informed and in control of their privacy, by requiring developers to inform users when an app wishes to track them and to give them the choice of whether to permit it.

3.4 Using community insights

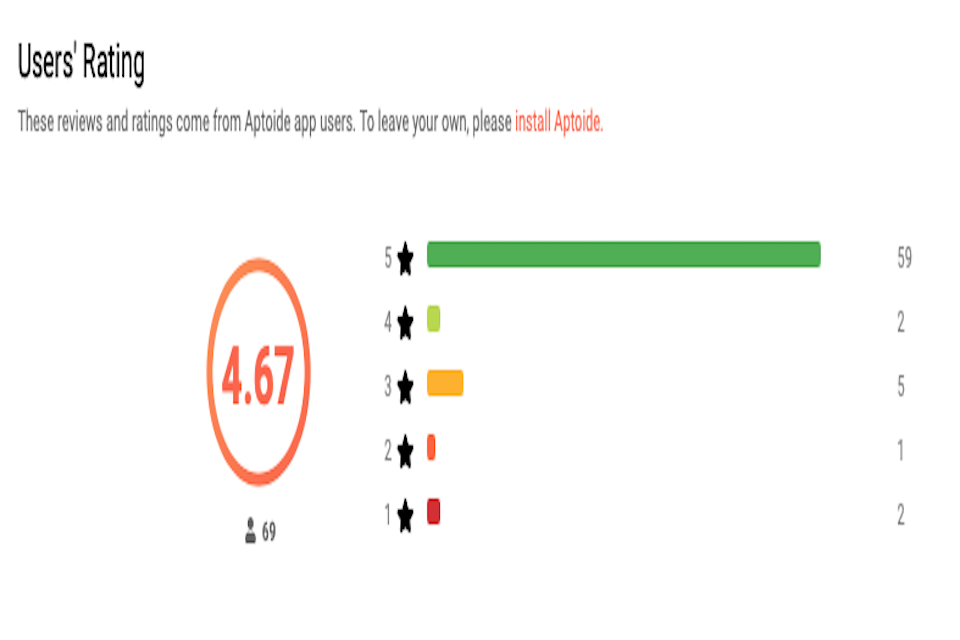

In addition to what app developers declare about their wares, some app stores also offer a further route for potential users to learn about security and privacy issues – namely via the reviews that have already been posted by other users. However, the stores vary in whether this facility is offered, and so while the official stores unsurprisingly do so, the third-party stores are more variable (e.g. from the three independent Android stores examined, Aptoide does but F-Droid and GetJar do not).

Figure 10 presents an example from Aptoide, in this case for an app that does not have the Trusted App / Good App Guaranteed status. With this in mind it is notable that, in practical terms, what users would probably like (but do not get) is a means to link to any negative review comments, in order to see why the app received the negative ratings. Moreover, the site does not expose the full set of review comments, and so those that are visible are not necessarily going to tell a clear story. Huawei’s AppGallery presents information in a similar manner. By contrast, Google Play and the iOS App Store allow review comments to be filtered according to the review rating.

Figure 10 : App rating example from Aptoide store

In terms of understanding the positive difference that ratings and (more particularly) review comments can make, it is interesting to note the findings of a related study conducted by Nguyen et al.[footnote 45]. They applied natural language processing to over 4.5 million reviews for the top 2,583 apps in Google Play. From this they identified 5,527 security and privacy relevant reviews (SPRs) and determined that these were a significant predictor for later privacy-related updates of the target apps (with the findings mapping SPRs to related later updates in 60.77% of cases). This suggests that appropriately captured user opinions can be influential in motivating behaviour change amongst app developers.
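
As a rough illustration of the idea of isolating security and privacy relevant reviews from a larger set, the sketch below uses a crude keyword match. This is a deliberately simplified stand-in and is not the natural language processing approach used in the study; the keyword list, function name and sample reviews are all assumptions made for illustration.

```python
import re

# Hypothetical keyword list; a real classifier would be far more nuanced.
KEYWORDS = ("privacy", "permission", "tracking", "malware", "spyware", "personal data")
PATTERN = re.compile("|".join(re.escape(k) for k in KEYWORDS), re.IGNORECASE)


def is_security_privacy_relevant(review: str) -> bool:
    """Flag a review if it mentions any of the illustrative keywords."""
    return PATTERN.search(review) is not None


# Invented sample reviews, for illustration only.
reviews = [
    "Great game, very addictive!",
    "Why does a torch app need permission to read my contacts?",
    "Crashes on startup since the last update.",
    "Worried about the tracking in this app.",
]

flagged = [r for r in reviews if is_security_privacy_relevant(r)]
print(len(flagged))  # 2
```

A keyword filter of this kind would produce both false positives and false negatives at scale, which is one reason why more sophisticated language processing is needed to analyse millions of reviews.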

4. Supporting developers

4.1 The need for developer guidelines

While many may now expect credible developers to already know that security and privacy are important, it is still reasonable to assume that some may be familiar with the notion but potentially unclear on exactly what they ought to be doing or how to achieve it. As such, it is relevant to consider the extent to which the issues are promoted to them by the app stores and their associated developer guidance[footnote 46]. For example, how do app stores guide developers and advise them regarding checks on submitted apps, and are developers expected to be conversant with privacy and data protection principles, or does the developer guidance support them to understand what they are expected to be protecting?

It is suggested that in some cases developers (particularly start-ups looking to establish a position and compete in the market) may be releasing apps in haste, without giving due attention to security and protection of user data[footnote 47]. As such, it is relevant to examine what the different stores do to promote and manage these aspects.

4.2 Apple App Store

The App Store is well-known for having an app review process, with Apple recognised as setting a high bar in terms of gatekeeping from both content and security perspectives. Various points within the review guidelines highlight and emphasize issues of relevance to security, privacy and trust[footnote 48]. It is expected that app functionality is clear and accurately described, and specific points of relevance come from Section 2 (Performance) of the detailed developer guidelines, and in particular relate to Section 2.3 (Accurate Metadata) and Section 2.5 (Software Requirements):

“2.3.1 Don’t include any hidden, dormant, or undocumented features in your app; your app’s functionality should be clear to end users and App Review. All new features, functionality, and product changes must be described with specificity in the Notes for Review section of App Store Connect (generic descriptions will be rejected) and accessible for review. Similarly, you should not market your app on the App Store or offline as including content or services that it does not actually offer (e.g. iOS-based virus and malware scanners). Egregious or repeated behavior is grounds for removal from the Developer Program. We work hard to make the App Store a trustworthy ecosystem and expect our app developers to follow suit; if you’re dishonest, we don’t want to do business with you.

2.5.3 Apps that transmit viruses, files, computer code, or programs that may harm or disrupt the normal operation of the operating system and/or hardware features, including Push Notifications and Game Center, will be rejected. Egregious violations and repeat behavior will result in removal from the Developer Program.”

Discussion in later sections makes points about appropriate use of subscriptions, in-app purchases, and notifications in order to avoid scamming and spamming of users, and the Legal section echoes the points around data collection, storage and use that are also presented in the developer guidance.

A good summary of Apple’s stance is offered in section 5.6 (Developer Code of Conduct), which states:

“Customer trust is the cornerstone of the App Store’s success. Apps should never prey on users or attempt to rip-off customers, trick them into making unwanted purchases, force them to share unnecessary data, raise prices in a tricky manner, charge for features or content that are not delivered, or engage in any other manipulative practices within or outside of the app.”

The review process itself then involves examination of both technical and user-facing aspects of the candidate app (including its code functionality, use of APIs, content and behaviour), in order to ensure that (amongst other things) they are reliable and respect privacy. Apple’s guidance suggests that, on average, 50% of apps are reviewed in 24 hours and over 90% are reviewed in 48 hours. Apple’s website claims that 100,000 apps are reviewed per week, of which 40% are rejected, with the primary reason being minor bugs, followed by privacy concerns[footnote 49].

In terms of what the review process actually involves, security is headlined as one of the key principles of overall app safety (alongside points such as avoidance of objectionable content, child safety etc)[footnote 50]:

“1.6 Data Security

Apps should implement appropriate security measures to ensure proper handling of user information collected pursuant to the Apple Developer Program License Agreement and these Guidelines (see Guideline 5.1 for more information) and prevent its unauthorized use, disclosure, or access by third parties.”

The detailed guidance within the code of conduct has more specific focus upon ‘data collection and storage’ and ‘data use and sharing’, as well as special consideration for data relating to health and fitness, or to children. Apple’s developer documentation also provides further guidance for developers in terms of privacy principles[footnote 51]:

● Request Access Only When Your App Needs the Data

● Be Transparent About How Data Will Be Used

● Give the User Control Over Data and Protect Data You Collect

● Use the Minimum Amount of Data Required

As examples of coverage on specific issues, it also provides further links to documentation on requesting access to protected resources[footnote 52] (including guidance on how to advise the user about the purpose of the requested access) and encrypting the app’s files[footnote 53].

4.3 Google Play store

Android developer guidelines include specific policies in relation to Privacy, Deception and Device Abuse, and Google’s position on the issue is usefully and unambiguously summarised in a brief video that accompanies the more detailed policy[footnote 54].

In terms of helping developers to understand what they should be concerned about protecting, personal and sensitive user data is defined as including (but not limited to) “personally identifiable information, financial and payment information, authentication information, phonebook, contacts, device location, SMS and call related data, microphone, camera, and other sensitive device or usage data”[footnote 55].

The full Developer Program Policy[footnote 56] makes extensive statements about a variety of relevant issues under the broad heading of ‘Privacy, deception and device abuse’, including permissions, deceptive behaviour, malware and mobile unwanted software. The policy also highlights a range of enforcement actions that can be applied should developers be found to be in breach of these or other aspects, including rejection or removal of their app, and suspension or termination of their developer account.

In terms of the Play Store’s app review process, the guidance covers a number of steps that developers are required to complete in order to prepare their new or updated app for review[footnote 57], but there is no specific information about what happens within the review process. Nonetheless, from the perspective of this study, it is relevant to note that the submission process requires developers to add a privacy policy to indicate how their app treats sensitive user and device data. Developers are also required to complete a Permissions Declaration form[footnote 58] if their app is requesting the use of high-risk or sensitive permissions (e.g. relating to permissions to access call and message logs, device location, or files stored on the device). The guidance does not give a clear indication of how long the review process takes, but it is noted that “certain developer accounts” will experience longer periods and may take a week or more, as illustrated in Figure 11[footnote 59].

Note: For certain developer accounts, we’ll take more time to thoroughly review your app to help better protect users. This may result in review times of up to seven days or longer in exceptional circumstances. You’ll receive a notification on your app’s Dashboard about how long this should take.

Figure 11 : Google Play Store app review information
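
As an illustration of how a developer might check ahead of submission whether an app requests permissions of the kind covered by the Permissions Declaration requirement, the following minimal sketch scans a manifest for entries in a watch list. The `SENSITIVE` set is an assumed example list (not Google's official list), and the manifest and function name are hypothetical.

```python
import xml.etree.ElementTree as ET

ANDROID_NS = "http://schemas.android.com/apk/res/android"

# Assumed watch list for illustration; consult the actual policy for the real scope.
SENSITIVE = {
    "android.permission.READ_CALL_LOG",
    "android.permission.READ_SMS",
    "android.permission.ACCESS_FINE_LOCATION",
    "android.permission.MANAGE_EXTERNAL_STORAGE",
}


def permissions_needing_declaration(manifest_xml):
    """Return the requested permissions that appear in the watch list, sorted."""
    root = ET.fromstring(manifest_xml)
    requested = [
        element.get(f"{{{ANDROID_NS}}}name")
        for element in root.iter("uses-permission")
    ]
    return sorted(p for p in requested if p in SENSITIVE)


# Hypothetical manifest for a made-up app.
EXAMPLE_MANIFEST = """<manifest xmlns:android="http://schemas.android.com/apk/res/android"
    package="com.example.app">
    <uses-permission android:name="android.permission.INTERNET" />
    <uses-permission android:name="android.permission.READ_SMS" />
    <uses-permission android:name="android.permission.READ_CALL_LOG" />
</manifest>"""

print(permissions_needing_declaration(EXAMPLE_MANIFEST))
# ['android.permission.READ_CALL_LOG', 'android.permission.READ_SMS']
```

A check of this kind only identifies what is requested; it says nothing about whether the use is justified, which is the question the declaration itself is intended to address.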

4.4 Huawei AppGallery

With the Google Play Store being unavailable in the Chinese market, the AppGallery can effectively be considered to assume the mantle of an official store in this context. What seems less apparent in the Huawei case is clear emphasis of top-level security and privacy principles for app developers to comply with. Guidance is presented in relation to the Ability Gallery (the platform for integration and distribution of abilities - services that help users complete tasks)[footnote 60].

AppGallery has what is described as ‘Four-layer Threat Detection’, defined as follows[footnote 61]:

“A professional security detection system featuring malicious behavior detection, privacy check, and security vulnerability scanning; manual real-name security check, as well as real person, real device, and real environment authentication to ensure app security.”

The Safety Detect kit offers a series of APIs that developers can integrate into their apps to enhance security[footnote 62]:

● SysIntegrity - Monitors the integrity of the app’s running environment (e.g. whether the device has been rooted[footnote 63]).

● URLCheck - Determines the threat type of a specific URL.

● AppsCheck - Obtains a list of malicious apps.

● UserDetect - Checks whether your app is interacting with a fake user (unavailable in Chinese mainland).

● WifiDetect - Checks whether the Wi-Fi to be connected is secure (only available in Chinese mainland).

Another element of relevance to the app security context is that developers are given access to the DataSecurity Engine, which stores keys, user credentials, and sensitive personal information in a secure space based on a Trusted Execution Environment (TEE)[footnote 64].

As with the official stores, the AppGallery has an app review process, and similarly in common with the others no specific details are available that describe the actual steps and stages. Security is clearly flagged as one of the motivations for the process, and one of the related sets of Frequently Asked Questions indicates that “To provide users with more secure apps, HUAWEI AppGallery has introduced an enhanced professional security monitoring platform and implemented strict review standards”[footnote 65]. The same source states that from the security and privacy perspective, an app may be rejected because:

- it requires unnecessary phone permissions that threaten user privacy

- it uses a third-party SDK plug-in that has viruses or security privacy risks.

Meanwhile, an alternative set of app review FAQs also presents five reasons why an app could be rejected in relation to security issues[footnote 66]:

“1) Contains virus

2) Contains unauthorized payments

3) Requires unnecessary phone permissions that threaten user privacy

4) Automatically opens background services

5) Reduces phone performance”

However, these are arguably not very descriptive answers and so rely upon the developer to understand or interpret the point being made. The same observation can be made in relation to the other directly security-related question in the same FAQ, which asks how a developer can deal with an app that has been rejected due to virus issues. The response given is simply that “Developers can upload the APK to VirusTotal, a non-profit service that integrates dozens of anti-virus software”. This seems a somewhat odd response, insofar as it guides the developer in terms of registering the malware to enable later detection, rather than advising them on the steps that should be taken to avoid their app being categorised in this manner.

Huawei also provides guidance on common reasons for the rejection of apps[footnote 67]. While this list includes deficiencies in relation to privacy policy, there are no references to security-related issues such as the presence of vulnerabilities or malware. It is assumed that this omission is simply because these are not common reasons, rather than because they are not qualifying reasons.

Based on the information presented across the two FAQ documents, the app review process reportedly takes three to five working days, but can take longer in (undefined) ‘special cases’.

4.5 Third-party stores

Given the sheer volume of different options available, a review and assessment of the guidance and practices of all third-party stores is outside the scope of this study, but some examples in both Android and iOS contexts are provided in order to compare them to the official stores. Even from this small sample, there are some clear differences in positioning that can be identified in the extent to which security and privacy aspects are headlined to potential developers.

4.5.1 Aptoide

The approach in this case is that developers can create their own store, which is then accessible via the Aptoide app. The guidance for developers on uploading an app to their store makes no mention of security or privacy, and there is no indication of an associated review process[footnote 68]. Indeed, a wider search of the Aptoide ‘knowledge base’ revealed no results for ‘privacy’ and the only result for ‘security’ was in relation to the need to change security settings in order to permit untrusted developers when installing the Aptoide app in order to create the developer’s own store.

As previously mentioned, Aptoide does include anti-malware scanning of apps submitted to the store, with those that pass being awarded the Trusted App status/badge, but this aspect is not flagged in the developer materials.

As an aside, reports in spring 2020 indicated that the Aptoide app store itself had become the victim of a security breach, resulting in the release of data from 20 million subscribers that had registered with the store between July 2016 and January 2018[footnote 69]. While bulk data breaches of this nature are unfortunately quite common, and have affected various large organisations in recent years, the incident potentially raises further questions about the security and privacy aspect of third-party app stores when compared to the official stores that would be (assumed to be) ensuring maximum protection over subscriber details.

4.5.2 F-Droid

The F-Droid inclusion policy is notably more permissive than the official app stores, and includes the following statement:

“Some software, while being Free and Open Source, may engage in practices which are undesirable to some or all users. Where possible, we still include these applications in the repository, but they are flagged with the appropriate Anti-Features.”[footnote 70]

These Anti-Features are defined as flags warning that an app contains “possibly undesirable behaviour from the user’s perspective, often serving the interest of the developer or a third party”[footnote 71]. Such flags include indicators for apps that contain a known security vulnerability or which have been signed using an unsafe algorithm. At the time of writing, the repository contained 357 apps flagged with anti-features, of which 31 related to tracking and two were for known vulnerabilities.

Vulnerabilities are identified by the exploit scanning capability of F-Droid Server (which is the route for adding apps to the repository), which in turn uses F-Secure Lab’s Drozer tool (a security auditing and attack framework that enables use and sharing of public Android exploits[footnote 72]).

In terms of actual guidance for developers in relation to security and privacy aspects, there is essentially no mention in relation to the apps that they are uploading. There is no direct mention of security or privacy in the developer FAQ, but there is the following rather ‘grey’ statement in relation to the question ‘Can I track users from my users?’:

“You can, but if you include any kind of tracking or analytics in your application (even sending crash reports) this must be something that the user explicitly opts in to - i.e. you ask them on first run, before sending anything anywhere, or there’s a preference that defaults to OFF. In all other cases, we may still include the app, but it will be flagged with our ‘Tracking’ AntiFeature, which means users will only ever see the app if they choose to view such apps”[footnote 73]

In short, developers are told that they can track users without asking them to opt in, provided they are willing for the app to be flagged as Tracking.
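The opt-in condition quoted above can be illustrated with a minimal sketch. It is written in Python for brevity rather than as Android code, and the names `AnalyticsSettings`, `CrashReporter` and `send_crash_report` are hypothetical; nothing here is taken from F-Droid's own tooling. It simply shows a preference that defaults to off, with nothing sent until the user has explicitly enabled it.

```python
from dataclasses import dataclass, field


@dataclass
class AnalyticsSettings:
    """Hypothetical user preference; tracking is OFF unless the user opts in."""
    tracking_enabled: bool = False


@dataclass
class CrashReporter:
    """Hypothetical reporter that respects the user's preference."""
    settings: AnalyticsSettings
    sent: list = field(default_factory=list)  # stands in for a network call

    def send_crash_report(self, report: str) -> bool:
        """Send the report only if the user has explicitly opted in."""
        if not self.settings.tracking_enabled:
            return False  # nothing leaves the device
        self.sent.append(report)
        return True


reporter = CrashReporter(AnalyticsSettings())
print(reporter.send_crash_report("crash 1"))  # False: the default is OFF
reporter.settings.tracking_enabled = True     # the user opts in
print(reporter.send_crash_report("crash 2"))  # True
```

An app that behaved in the opposite way, with the preference enabled by default, would fall into the 'all other cases' of the quoted statement and so would expect to receive the Tracking flag.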

It is worth noting that the whole concept of anti-features covers aspects that would prevent an app from being accepted into the official app stores in the first place.

4.5.3 GetJar

GetJar presents itself as “The worlds biggest Open App Store for Android”, although the following information (from the About page) is rather dated (referring to information up to a decade old, and making reference to several platforms that are now largely obsolete in the market):

“As of early 2015, the company provides more than 849,036 mobile apps across major mobile platforms including Java ME, BlackBerry, Symbian, Windows Mobile and Android and has been delivering over 3 million app downloads per day. GetJar allows software developers to upload their applications for free through a developer portal. In June 2010, about 300,000 software developers added apps to GetJar resulting in over one billion downloads. In July 2011, GetJar had over two billion downloads.”

The majority of information for Developers is only available if an account is created within the GetJar Developer Zone. However, the Terms of use include several points that are clearly linked to security and protection, including the prohibition of harmful activities that would affect the GetJar site, and any submission of malware or other programs that could harm or disrupt other users[footnote 74]. As such, while it is not presented as prominently as in the official stores, GetJar appears to have a clear position on dissuading developers from uploading apps that would be troublesome from a security and privacy perspective. There is also some direct reference to the existence of an app review process, with the brief developer guidance stating that “The upload of the app is instant, but it can take usually up-to 72hours until app is placed into the catalog or even longer if app looks suspicious. GetJar can prolong moderation time or reject app submission in any case with no prior notice when the app might not be appropriate for GetJar users”[footnote 75]. However, there is no further clarification on how ‘looking suspicious’ or ‘being inappropriate’ may actually be defined.

4.5.4 Cydia

Cydia is a third-party option for iOS devices rather than Android, and the parent website describes it as “the first un-official iPhone appstore that contains jailbreak apps, mods, and other exclusive content, not available on Apple Store”[footnote 76]. The main features are the ability to use so-called ‘tweaks’ (which essentially equate to modifications to iOS), themes (to additionally customise the look of the device), the ability to customise the lock screen, access to the iOS file system, and the ability to unlock the carrier[footnote 77].

It is a jailbreak app store, meaning that it requires the user’s iPhone to be jailbroken before Cydia can then be installed. The website indicates that this is necessary in order to enable various abilities that would otherwise not be possible. However, as the website also observes, jailbreaking the device can result in some existing apps refusing to run, as they can detect that the device has been jailbroken (or, to quote the Cydia site, “Some app developers have decided that, for security, they won’t allow their apps to run on any jailbroken device”[footnote 78]). The site consequently provides links to a series of further ‘tweaks’ that can be downloaded in order to bypass the apps’ jailbreak detection. Thus, the user seems to be quickly led into a potential array of fixes in order to retain the functionality they had previously enjoyed. The site readily highlights that the sort of apps that attempt to detect and avoid running on jailbroken devices include mobile banking and PayPal. As such, rather than looking for workarounds, this ideally ought to raise questions in the user’s mind as to why the developers would seek to prevent their apps from running, and whether bypassing that protection might expose the user to risk.

Perhaps unsurprisingly, there is little in evidence to guide developers and no mention of an app review process.

4.6 Limitations of this analysis

It should be noted that further in-depth examination of the support provided to developers was precluded by the timeframe of the review and the fact that it was limited to materials that were directly available online. As such, a more detailed study that further engages in the process from the perspective of a registered developer could usefully offer a more comprehensive picture of the extent to which different app stores are supporting developers in relation to security themes.

5. Vulnerability reporting and bug bounty

Given that the security of apps and app store environments can often be affected by the discovery of vulnerabilities in the code or the platform, it is relevant to consider how these are handled by the app store providers.

In addition to offering a channel by which vulnerabilities can be reported, it is also common to offer a means to incentivise its use. The official stores consequently offer rewards programmes (also known as Bug Bounty schemes) that are intended to encourage the disclosure of vulnerabilities rather than their exploitation (with the reward being set at a level high enough to motivate good behaviour and offset the financial gains that may be generated by using the discovery illicitly). A standard (and reasonable) condition of the bounty programmes in all cases is that the discovered issue is not disclosed more widely before the provider has an opportunity to take appropriate action.

5.1 Apple

Apple’s Security Bounty programme spans all of their OS platforms, and includes several categories specifically related to apps[footnote 79]:

User-Installed App: Unauthorized Access to Sensitive Data

- $25,000. App access to a small amount of sensitive data normally protected by a TCC prompt.

- $50,000. Partial app access to sensitive data normally protected by a TCC prompt.

- $100,000. Broad app access to sensitive data normally protected by a TCC prompt or the platform sandbox.

User-Installed App: Kernel Code Execution

- $100,000. Kernel code execution reachable from an app.

- $150,000. Kernel code execution reachable from an app, including PPL bypass or kernel PAC bypass.

User-Installed App: CPU Side-Channel Attack

- $250,000. CPU side-channel attack allowing any sensitive data to be leaked from other processes or higher privilege levels.

The figures quoted here are maximum payouts, and the exact amount is determined on the basis of the significance of the vulnerability identified and the quality of the report provided to document it (with Apple providing summary guidance on what should be included in the reporting). In addition to rewarding the researcher who shares the issue, Apple also undertakes to match the bounty payment with a donation to a qualifying charity.

5.2 Google

Google offers a number of reward schemes that can be considered relevant in the context of app and app store security:

● Android Security Rewards Program[footnote 80] – for vulnerabilities in the latest versions of the Android operating system;

● Chrome Vulnerability Reward Program[footnote 81] – for vulnerabilities in the Chrome browser;

● Google Vulnerability Reward Program (VRP)[footnote 82] – for apps developed directly by Google;

● Google Play Security Reward Program (GPSRP)[footnote 83] – for third-party apps in the Google Play Store.

All have separate rules, reward levels and eligibility criteria, and so for the purposes of illustration in this discussion, further focus is given to the scheme that is linked to the app store itself – i.e. the Google Play Security Reward Program. To quote from Google’s own description, GPSRP “recognizes the contributions of security researchers who invest their time and effort in helping make apps on Google Play more secure”.

GPSRP is specifically focused upon ‘popular’ Android apps (classed as those with 100 million installs or more), and at the time of writing, qualifying vulnerabilities must work on patched versions of Android 6.0 or higher.

The process guides disclosers toward contacting the app developers directly in the first instance (if they have a clear means of enabling reporting). If the developer does not appear to offer a reporting route, or does not respond within 30 days, then the vulnerability can be submitted to GPSRP directly.
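The scope and reporting route described above can be summarised as a small decision function. This is a sketch of the rules as stated in this section, not Google's implementation: the function name, parameters and return strings are hypothetical, Android versions are simplified to a numeric tuple, and the treatment of exactly 30 days as 'no response' is an assumption.

```python
from datetime import date
from typing import Optional

MIN_INSTALLS = 100_000_000    # 'popular' app threshold stated above
MIN_ANDROID = (6, 0)          # vulnerability must work on patched 6.0 or higher
DEVELOPER_RESPONSE_DAYS = 30  # waiting period before going to GPSRP directly


def reporting_route(installs: int,
                    android_version: tuple,
                    developer_has_channel: bool,
                    reported_to_developer: Optional[date],
                    developer_responded: bool,
                    today: date) -> str:
    """Return the next step for a discloser under the rules described above."""
    if installs < MIN_INSTALLS or android_version < MIN_ANDROID:
        return "out of scope"
    if not developer_has_channel:
        return "submit to GPSRP"
    if reported_to_developer is None:
        return "report to developer"
    if developer_responded:
        # What follows a developer response is not detailed in this section.
        return "continue with developer"
    waited = (today - reported_to_developer).days
    if waited >= DEVELOPER_RESPONSE_DAYS:  # assumption: day 30 counts as expired
        return "submit to GPSRP"
    return "wait for developer"


print(reporting_route(250_000_000, (10, 0), True,
                      date(2021, 1, 1), False, date(2021, 2, 5)))
```

The example call describes an in-scope app whose developer was contacted 35 days earlier without reply, so the sketch returns the direct GPSRP route.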

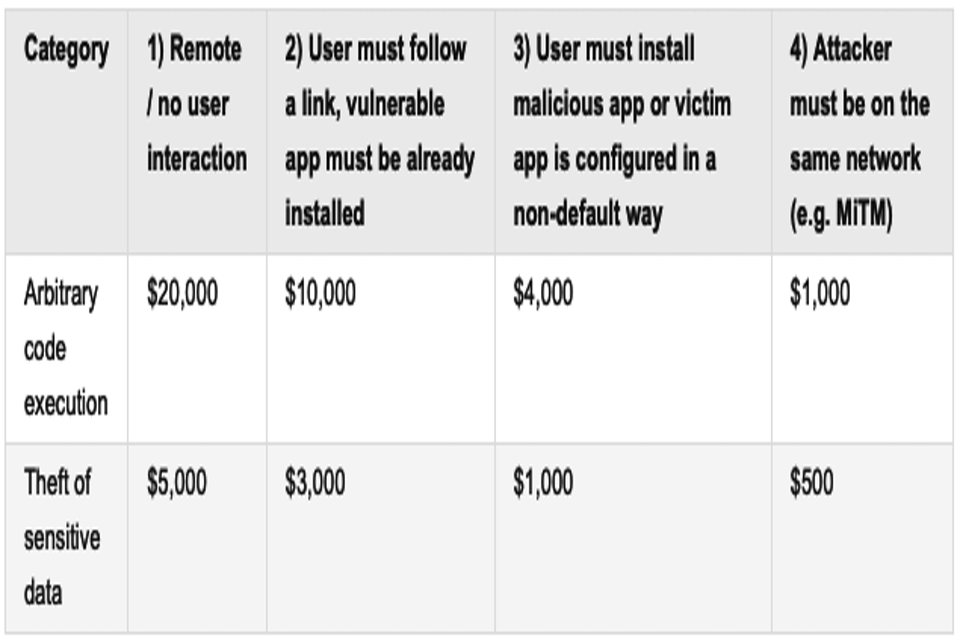

In terms of the associated reward, the monetary value is determined by the level of exploitability of the vulnerability concerned. This is summarised in Table 2, which depicts the details as presented on Google’s website.

Table 2 : Reward categories from the Google Play Security Reward Program

As shown in the table, the qualifying categories of vulnerability are those relating to arbitrary code execution (which would lead to potential for malware attacks) and theft of sensitive data (which would include vulnerabilities granting access to a user’s account or login credentials, as well as user-generated content, such as their messages, web history and photos). While these categories will clearly account for a range of security and privacy threats, there are other vulnerabilities that are not within scope of the reward scheme:

● “Certain common low-risk vulnerabilities deemed trivially exploitable

● Attacks requiring physical access to devices, including physical proximity for Bluetooth.

● Attacks requiring access to accessibility services.

● Destruction of sensitive data.

● Tricking a user into installing a malicious app without READ_EXTERNAL_STORAGE or WRITE_EXTERNAL_STORAGE permissions that abuses a victim app to gain those permissions.

● Intent or URL Redirection leading to phishing.

● Server-side issues.

● Apps that store media in external storage that is accessible to other apps on the device.”