Security monitoring: technology overview

Published 22 June 2015

1. Introduction

There are many and varied proprietary products and services that can help to provide security monitoring capabilities. This section summarises the techniques and technology that can be applied to meet security monitoring requirements. It neither mandates nor prescribes particular technologies, but provides guidance on what can be deployed.

No single product or technology, nor any combination of products or technologies, can provide 100% protection. System designers and security experts should consider the merits and limitations of each option, together with the whole-life costs of implementing and operating it. Organisations that need a holistic approach should consider a variety of tools from multiple vendors in order to achieve ‘defence in depth’.

Any technology deployed should be part of the ICT project delivery. As with other security mechanisms, there needs to be a source of confidence in the effectiveness of the technology solutions adopted. Product or service assurance can provide that confidence.

2. Sources of event data within typical ICT systems

Many components of modern ICT systems can produce logging information. Several mature and widely recognised logging formats exist, including Syslog and the Common Log Format (CLF), so an ICT device can often send its logs to a log aggregation point with minimal configuration. The main components and their logging capabilities are summarised in the table below.

| Component | Description | Typical logging capability |
|---|---|---|
| Server | Used within an ICT estate to provide computing resources to a user or process. Typical servers within an ICT system are database servers, web servers and application servers. | * Essential for tracking privileges and monitoring file-system-based access control. * Provide a source of information about access to network resources hosted by the server. * Should be supplemented by application-level logging. * Log collection and analysis tools tend to be primitive. * Database and application servers may use either standard server facilities or their own separate reporting mechanisms. |
| Clients | The devices that users use to access the ICT resources available to them. Also known as end user devices (EUDs), they can include mobile devices, tablets and desktop PCs. | * Are more likely to be the subject of manipulation by an attacker or the end user. * Data collected from these devices must be used with caution, as it could have been manipulated. * An absence of event data does not imply that everything is fine. * May provide a profile of user activity. * May generate logs while offline (potentially for access to local resources). * May be of value for forensic analysis or local audit. * May provide logs and alerts relating to input/output attachments while connected to the network. Note: experience gained in providing CESG Platform Guidance has shown that the ability of modern EUDs to generate useful log data is reducing swiftly, and that the ability of enterprises to carry out forensic analysis or audit is increasingly limited by the protections on the devices. For BYOD, it is even less likely that the enterprise will gain any monitoring value from EUD data. |
| Authentication services | Used to identify and authorise users and to establish the resources available to them. Provided by services such as domain controllers, directory servers and authentication servers (for example, Kerberos, RADIUS, TACACS). | * Provide a source of records of network authentication attempts and failures. * May also provide information on sessions, privilege assignments, directory information, remote access and token use. |
| Network components | Used to establish and control communications across an ICT system. Includes routers, switches, network management systems (NMS), DNS, DHCP and wireless access points. | * Can provide tracking of network attachments, IP address mapping, wireless access and network health. * Typically have very limited local log retention, and often rely on proprietary add-on or SNMP-based management infrastructure. * NMS output covers many events and requires filtering to select those that are security relevant. |
| Security services | Used to control, prevent and detect system attacks, using devices such as network firewalls, application firewalls, proxy servers, content scanners, anti-malware and intrusion detection and prevention systems (IDS/IPS). | * Usually proprietary products with vendor-specified logging characteristics. * May support SNMP traps or other means of sending alerts. * Essential for tracking alerts raised within separated network segments, and for tracking boundary operations. * May support integration with network intrusion detection systems (NIDS). |
| Storage management | An ICT system may use storage services as part of the estate. Such devices can include RAID controllers, SAN controllers, backup servers and cache servers. | * Can provide information on storage health and information protection status. * Can track movement of information between storage compartments and across network boundaries. |

Note that some components can also raise alerts, either as proprietary alert messages or as SNMP traps sent to a network management system. These alerts can contribute to the overall security monitoring picture in the following ways:

- indicate likely security policy violations

- indicate suspicious activity or anomalous behaviour

- provide information on the health of the security monitoring mechanisms

- contribute to the picture of normal system behaviour

2.1 Limitations of ICT components

The use of ICT system components for data collection and alerting has some challenges and limitations, which include:

- the data can be in a proprietary format and the information difficult to extract

- the data can contain too much information and require reduction

- the data may not provide evidence to meet security monitoring objectives

- the ability to control, tune and configure the data may be limited

- correlating data from different sources can be time-consuming and difficult

3. Security information and event management (SIEM) systems

SIEM systems, which combine the functions of Security Event Management (SEM) and Security Information Management (SIM) systems, can be provided by one or more vendors. The aim is to deliver all significant information security events to selected security management consoles. SIEMs can be implemented as an integrated software suite or as a collection of separate tools working together and using a common display. They can be supported by:

- proprietary agents that provide direct reporting from monitored systems and devices to central consoles

- agentless technologies that require no additional software on the monitored systems and devices, and instead rely upon pre-existing open reporting mechanisms such as Syslog or SNMP

SIEMs typically include integrated log management, collection, analysis and reporting functions to provide a comprehensive log monitoring solution. All SIEMs provide a predefined list of supported hardware, software, open standards and protocols. Some SIEMs come with additional tools and modules to provide an integrated approach to information security management, typically including incident management, investigation support and computer forensic capabilities.

SIEMs can also include other modules providing behaviour analysis, intrusion detection and intrusion prevention capabilities, or provide integration with third-party products such as firewalls and network management systems. There are no open standards defining what constitutes SIEM functionality. Further information on SIEM is available from NIST Special Publication (SP) 800-94, section 8.2.2.

4. Behaviour analysis systems

Behavioural analysis systems use a combination of algorithmic, heuristic, ‘pattern matching’ or ‘artificial intelligence’ approaches to determine (or ‘learn’) normal behaviours, and then raise alerts on significant departures from those behaviours. These alerts can provide clues to potential system misuse or attack. This contrasts with knowledge-based intrusion detection products, which use attack signature bases to detect suspicious activity more directly. Some behavioural analysis systems are hybrids that include multiple forms of analysis.

The advantage of behaviour analysis systems is that they can potentially detect previously unknown attacks that are not yet included in signature bases. Behavioural analysis systems are especially useful for identifying ‘malformed’ or blatantly suspicious activity. However, they need human oversight, as they are particularly prone to false positives and false negatives. Both local and specialist expertise should be involved in the decision-making process to filter out false indications and identify genuinely suspicious activity worthy of investigation.
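As a minimal illustration of ‘learning’ normal behaviour and alerting on departures, the sketch below builds a statistical baseline from historical event counts and flags counts far from the mean. The names `build_baseline` and `is_anomalous`, the sample counts and the three-standard-deviation threshold are all illustrative assumptions; commercial products use far richer models, and even this toy rule will produce the false positives and false negatives that need human review.

```python
from statistics import mean, stdev

def build_baseline(history):
    """Return (mean, sample standard deviation) of historical event counts."""
    return mean(history), stdev(history)

def is_anomalous(count, baseline, threshold=3.0):
    """Flag a count more than `threshold` standard deviations from the mean."""
    mu, sigma = baseline
    if sigma == 0:
        return count != mu          # no variation seen: any change is a departure
    return abs(count - mu) / sigma > threshold

# Hypothetical hourly counts of failed logons observed during normal operation.
normal_hours = [4, 6, 5, 7, 5, 6, 4, 5]
baseline = build_baseline(normal_hours)
print(is_anomalous(6, baseline))    # False: within normal variation
print(is_anomalous(40, baseline))   # True: significant departure
```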

4.1 Behaviour analysis technologies

There are several technologies and tools that fall into the behaviour analysis category. The most generally useful are network behaviour analysis (NBA) systems, which sample large volumes of network traffic at strategic points. NBA systems can work alongside conventional intrusion detection and intrusion prevention systems (IDS/IPS). There are also systems specific to particular applications, such as fraud detection systems, which monitor transaction patterns and raise alerts on any anomalies detected. These are typically supported by host agent software reporting back to a central analysis and management console.

Many application firewalls use a simple form of behaviour analysis. They focus on validating requests and responses in particular protocols (eg SOAP or SQL). They can catalogue and categorise received transactions and then query any new types of transaction that appear, so that these can be either blocked or allowed.
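The catalogue-and-query behaviour can be sketched as follows, under simplifying assumptions: a transaction ‘type’ is approximated by replacing SQL literals with placeholders using regular expressions (real application firewalls parse the protocol properly), and `operator_decision` is a hypothetical stand-in for the human or policy decision made on each new type. All names are hypothetical.

```python
import re

def transaction_type(sql):
    """Reduce a SQL statement to its 'shape' by replacing literals with '?'."""
    shape = re.sub(r"'(?:[^']|'')*'", "?", sql)       # string literals
    shape = re.sub(r"\b\d+(?:\.\d+)?\b", "?", shape)   # numeric literals
    return " ".join(shape.split()).upper()             # normalise whitespace and case

class TransactionCatalogue:
    """Remembers which transaction types have been allowed or blocked."""

    def __init__(self):
        self.allowed = set()
        self.blocked = set()

    def check(self, sql, decide):
        """Return True if the statement may pass; `decide` is consulted once per new type."""
        shape = transaction_type(sql)
        if shape in self.allowed:
            return True
        if shape in self.blocked:
            return False
        if decide(shape):              # new type of transaction: query it
            self.allowed.add(shape)
            return True
        self.blocked.add(shape)
        return False

def operator_decision(shape):
    # Hypothetical policy standing in for an operator's decision.
    return " OR " not in shape

catalogue = TransactionCatalogue()
print(catalogue.check("SELECT name FROM users WHERE id = 42", operator_decision))        # True (new, approved)
print(catalogue.check("select name from users where id = 7", operator_decision))         # True (known type)
print(catalogue.check("SELECT name FROM users WHERE id = 1 OR 1=1", operator_decision))  # False (new, refused)
```

Once a type has been decided, later transactions of the same shape are handled without further queries, which is what keeps the operator workload manageable after the initial cataloguing.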

Most behaviour analysis systems require a learning period during which normal behaviours are profiled. This phase requires intensive oversight, as each newly captured pattern will initially raise an alert. Some systems may be deployed in a test or pilot phase during which alerts are suppressed to allow this learning to take place. However, if the behaviour seen during the learning phase is artificial, the resulting profiles will not be fully effective: once the system is exposed to real behaviour after the learning phase, that behaviour will be alerted as anomalous. There are also tools that allow post-analysis of event logs or event log archives, which can aid incident investigations or compliance review activities.
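A learning period with suppressed alerts, and the problem of an artificial training profile, can be illustrated with a deliberately simple profiler that learns which users access which hosts. The class `LearningProfiler` and the (user, host) profile are hypothetical; the `suppressed` list stands in for the alerts an analyst would review during the learning phase.

```python
class LearningProfiler:
    """Learns (user, host) access pairs, then alerts on pairs never seen before."""

    def __init__(self):
        self.known = set()
        self.learning = True
        self.suppressed = []   # alerts withheld during the learning phase, for review

    def observe(self, user, host):
        pair = (user, host)
        if pair in self.known:
            return None
        if self.learning:
            self.known.add(pair)          # profile it as normal behaviour
            self.suppressed.append(pair)  # would have been alerted
            return None
        return f"ALERT: {user} accessed {host} for the first time"

    def end_learning(self):
        self.learning = False

# Pilot traffic that only exercises test accounts (an artificial profile).
profiler = LearningProfiler()
for user, host in [("testuser1", "fileserver"), ("testuser2", "mailserver")]:
    profiler.observe(user, host)
profiler.end_learning()

# Genuine behaviour seen in live operation is now reported as anomalous.
print(profiler.observe("alice", "fileserver"))
# ALERT: alice accessed fileserver for the first time
```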

Further information on Network Behaviour Analysis (NBA) systems is available from NIST Special Publication (SP) 800-94, section 6.

5. Intrusion detection and prevention systems (IDS/IPS)

Intrusion Detection and Prevention Systems (IDS/IPS) are the most widely used technology for providing automated attack monitoring and defence.

- An Intrusion Detection System is a passive technology that monitors a copy of the traffic flow, for example via a mirror port on a switch. Because it only receives a copy of the traffic, it can raise a flag (alert) when an attack (threat) is detected, but it cannot block attacks. An IDS on its own can generate a number of false positives, so it should be tuned to specific requirements. If the device fails, no alarms will be generated.

- An Intrusion Prevention System is an inline threat protection technology through which the traffic actually flows. Upon detection of an ‘event’, it will stop (block) the traffic from passing, although detection errors or device malfunction can result in service disruption.

IDS/IPS is also a group of technologies, including Host, Meta, Network and Wireless Intrusion Detection Systems and their IPS equivalents. Some vendors provide integrated approaches that can include all of these technology groups. Others specialise in particular groups.

Note that IDS and IPS are just one of a number of security control layers, which must be aligned in order to maintain an effective barrier to threats. There are many vendors in the market, each offering varying degrees of protection; some products are proprietary, others generic. The choice of technology will ultimately depend on the requirements of the system. Although individual firewalls provide a measure of intrusion detection, they only monitor at a few discrete points and provide no detection of activity behind the firewalls. However, some IDS/IPS use firewalls as a source of attack information, and some IPS respond to attacks by automatically modifying firewall rules on the attack path. IDS/IPS also deploy other kinds of probes, sensors and agents to allow more comprehensive monitoring of the network infrastructure.

IPS implies the ability to automate a preventative response to detected intrusions. This can range from filtering traffic at specific points, to altering boundary firewall rules to drop traffic associated with an attack, to playing an active part in attack streams by modifying potentially harmful behaviour so that it becomes harmless. Increasingly, the distinction between IDS and IPS is disappearing, with ‘pure IDS’ products being superseded by new versions that have both capabilities. This applies to all classes of IDS product and, consequently, their IPS equivalents. The distinction between IDS and IPS is now more one of configuration, so organisations can choose how to configure the prevention aspects of these devices.
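The point that the IDS/IPS distinction is largely one of configuration can be shown with a toy signature matcher whose detection logic is shared, while a `mode` setting decides only whether matching traffic is blocked. The byte-substring signatures, the `Packet` type and the `mode` values are hypothetical simplifications; real products inspect reassembled traffic streams with far more sophisticated rules.

```python
from dataclasses import dataclass

@dataclass
class Packet:
    source: str
    payload: bytes

# Hypothetical signatures; real products ship large, maintained signature bases.
SIGNATURES = [b"/etc/passwd", b"' OR 1=1"]

def inspect(packet, mode="detect"):
    """Return (allowed, alert) for one packet.

    mode="detect": IDS behaviour; the sensor sees a copy of the traffic, so it can
                   only raise an alert and the traffic is never held back.
    mode="prevent": IPS behaviour; the sensor is inline and drops matching traffic.
    """
    if mode not in ("detect", "prevent"):
        raise ValueError(f"unknown mode: {mode}")
    matched = any(signature in packet.payload for signature in SIGNATURES)
    alert = f"signature match from {packet.source}" if matched else None
    allowed = not (matched and mode == "prevent")
    return allowed, alert

packet = Packet("10.0.0.9", b"GET /../../etc/passwd HTTP/1.1")
print(inspect(packet, mode="detect"))   # (True, 'signature match from 10.0.0.9')
print(inspect(packet, mode="prevent"))  # (False, 'signature match from 10.0.0.9')
```

In prevent mode any false positive becomes a blocked legitimate request, which is why automated prevention calls for the extra care in tuning and testing described below.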

Whichever product is chosen, it is important that the system is ‘tuned’ for the particular organisation. The use of automated prevention facilities in particular warrants careful planning, configuration, testing and operation. Projects considering implementation of IPS technology should engage with CESG network defence experts at the earliest possible phase of the project lifecycle.

Further information on IDS/IPS is available from the SANS Institute Intrusion Detection FAQ and NIST Special Publication (SP) 800-94.

6. Techniques for monitoring ICT systems

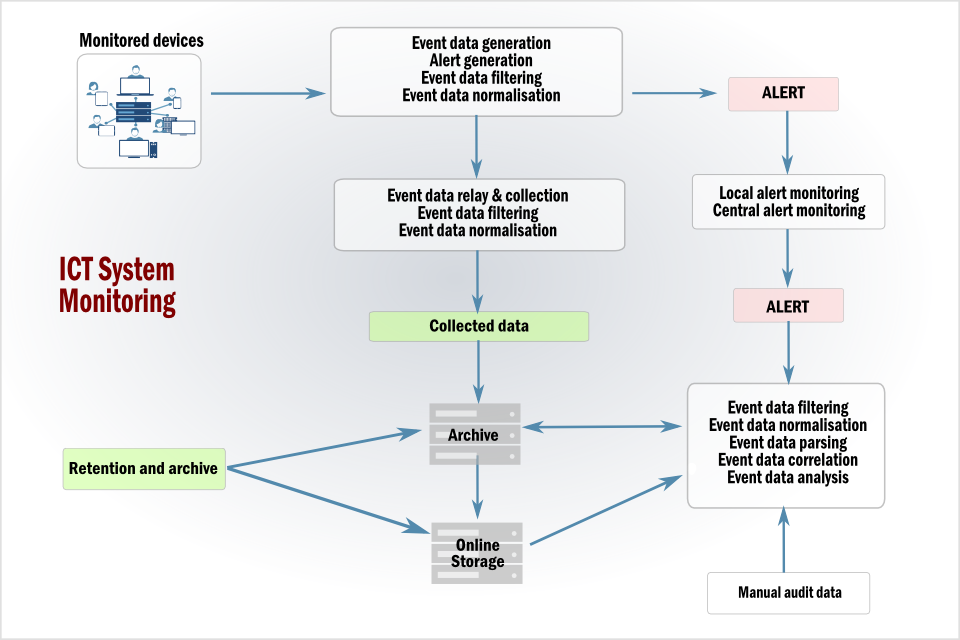

The following diagram shows a security monitoring scenario with a moderate degree of complexity. In this example there are many data sources (perhaps extending over several sites) to be collected, relayed, and held in a central repository.

Techniques for monitoring ICT systems

The techniques and activities shown in the diagram, whether applied automatically or covered by manual operational procedures, are described below.

6.1 Event data generation

Devices that are capable of automatically recording security-relevant data need to be configured to provide information that relates to the security monitoring requirements of the business. This information may need to be augmented by manually kept records. Operational procedures should cover any requirements for manual recording, and the means by which such records can be incorporated within the overall security monitoring processes. Devices that are capable of raising alerts may be able to contribute to real-time or near real-time monitoring.

6.2 Alert generation

Automatic alert generation directly from devices can introduce issues such as false positives or false negatives. Augment automatic alerting by training system users and managers to recognise and report unusual or suspicious events. Consulting experienced staff and building up a historical knowledge base of activities and expected patterns of behaviour will inform decision making, and operational procedures should cover alert recognition and confirmation.
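A typical device-level alert rule is a threshold over a sliding time window. The sketch below, with hypothetical names and an assumed limit of three failed logons in 60 seconds, shows why such rules need human backing: a user who mistypes a password three times triggers it (a false positive), while an attacker who guesses slowly stays under it (a false negative).

```python
from collections import defaultdict, deque

class FailedLogonAlerter:
    """Alert when a user reaches `limit` failed logons within `window` seconds.

    Assumes timestamps for each user arrive in non-decreasing order.
    """

    def __init__(self, limit=3, window=60):
        self.limit = limit
        self.window = window
        self.failures = defaultdict(deque)  # user -> timestamps of recent failures

    def record_failure(self, user, timestamp):
        times = self.failures[user]
        times.append(timestamp)
        # Drop failures that have fallen outside the sliding window.
        while timestamp - times[0] > self.window:
            times.popleft()
        return len(times) >= self.limit

alerter = FailedLogonAlerter()
print([alerter.record_failure("alice", t) for t in (0, 10, 20)])   # [False, False, True]
print([alerter.record_failure("bob", t) for t in (0, 100, 200)])   # [False, False, False]
```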

6.3 Event data filtering

Event data filtering should occur at several points within the service:

- in the configuration settings of the monitored devices (this can be tricky, as the same data may be needed for both system monitoring and security monitoring)

- during the collection processes, so that only relevant event data is collected

- during the collation and analysis processes when queries are performed on the event data
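Collection-time filtering is often expressed as a simple rule over the Syslog facility and severity fields. The rule below (keep authentication-related facilities, plus anything at warning severity or worse) is an illustrative assumption rather than a recommendation; the real criteria should come from the security monitoring requirements. Writing the rule as code also gives a precise record of how filtering is achieved. The event dictionaries and function name are hypothetical.

```python
# Syslog facility and severity codes as defined in RFC 5424.
AUTH, AUTHPRIV = 4, 10
WARNING = 4            # severities run from 0 (emergency) to 7 (debug)

def is_security_relevant(event):
    """Collection-time filter: keep authentication facilities and anything at warning or worse."""
    return event["facility"] in (AUTH, AUTHPRIV) or event["severity"] <= WARNING

# Hypothetical events, already split into facility, severity and message.
events = [
    {"facility": 4, "severity": 6, "msg": "session opened for user alice"},
    {"facility": 3, "severity": 7, "msg": "cache refreshed"},
    {"facility": 3, "severity": 3, "msg": "disk write failed"},
]
print([e["msg"] for e in events if is_security_relevant(e)])
# ['session opened for user alice', 'disk write failed']
```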

In the absence of specialist tools that provide automated filtering or query facilities, consider how filtering might be performed manually. Even on a system with only a few monitored devices, manual filtering is likely to make any audit activity extremely laborious, if not impossible. Whether filtering is performed automatically or manually, there should be adequate documentation describing how it is achieved.

6.4 Event data relay and collection

The transport of event data around the system can be broken down into a number of discrete components:

- standard devices that collect data

- relay devices that collect data from all devices within a single site

- central devices that collect data from all relay devices, and that store it within an online accounting database

Note that simpler implementations may not include all these components.

The Syslog protocol, which is supported by a wide variety of devices, enables this collection of data from distributed sources. It was originally documented in RFC 3164 (The BSD Syslog Protocol) and superseded by RFC 5424 (The Syslog Protocol), but the various Syslog implementations contain several proprietary inconsistencies, and the protocol has significant vulnerabilities. These include:

- Syslog messages are traditionally transmitted individually using UDP, which does not provide reliable delivery

- messages can be corrupted or lost in transit or they may arrive out of sequence

- messages are limited to a maximum size and can be truncated

- messages can easily be tampered with by a ‘man in the middle’ attack to provide substitute or false entries

- messages can be spoofed or malformed to exhaust the storage space of collectors, to hide illegitimate activity or cause a failure of the logging system

- messages are transmitted in clear text and can therefore be intercepted, and could provide an attacker with a wealth of useful information

There are Syslog implementations that also conform to RFC 3195 (Reliable Delivery for syslog), which addresses several of these issues. Where projects implement Syslog, the implementation should comply with both RFC 3164/5424 and RFC 3195. There are also proprietary solutions for collecting event data that are based on alternative approaches. Event data collection can also be done by a manual process rather than using automated tools, for example by copying source log files to computer media.
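As an illustration of addressing the UDP, clear-text and tampering weaknesses listed above, the sketch below sends a message to a collector over TLS using the octet-counting framing of RFC 5425 (TLS Transport Mapping for Syslog). Note that this swaps in the RFC 5425 mechanism rather than the RFC 3195 one named above. The collector host name, CA file and function names are hypothetical; port 6514 is the port registered for syslog over TLS.

```python
import socket
import ssl

def frame_message(message: str) -> bytes:
    """Octet-counting frame: 'MSG-LEN SP SYSLOG-MSG', where MSG-LEN counts bytes."""
    data = message.encode("utf-8")
    return str(len(data)).encode("ascii") + b" " + data

def send_over_tls(host: str, message: str, cafile: str, port: int = 6514) -> None:
    """Deliver one framed message over TCP with TLS, verifying the collector's certificate."""
    context = ssl.create_default_context(cafile=cafile)
    with socket.create_connection((host, port), timeout=10) as raw:
        with context.wrap_socket(raw, server_hostname=host) as tls:
            tls.sendall(frame_message(message))

# Usage (hypothetical collector and CA bundle):
# send_over_tls("collector.example", "<34>1 - host1 su - - - 'su root' failed", "ca.pem")
print(frame_message("<34>1 - - - - - - hi"))  # b'20 <34>1 - - - - - - hi'
```

TCP gives ordered, acknowledged delivery and TLS protects confidentiality and integrity in transit; neither protects event data once it is stored, nor data that was falsified at a compromised source.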

All event data and its collection approach should be viewed as an asset in its own right, and must be risk managed with protective measures appropriate to the value of the event data to the business.
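One common protective measure for stored event data is to make it tamper-evident. The sketch below chains an HMAC-SHA256 tag through each record, so that an edited, deleted or reordered record is detected on verification. It does not detect records removed from the end of the chain, and the key (here a hypothetical demo value) would need to be held separately from the logs. Function names are hypothetical.

```python
import hashlib
import hmac

def _tag(key, previous_tag, record):
    return hmac.new(key, previous_tag + record.encode("utf-8"), hashlib.sha256).hexdigest()

def chain_records(records, key):
    """Return (record, tag) pairs; each tag also covers the previous record's tag."""
    chained, previous = [], b""
    for record in records:
        tag = _tag(key, previous, record)
        chained.append((record, tag))
        previous = tag.encode("ascii")
    return chained

def verify_chain(chained, key):
    """Recompute every tag; an edited, deleted or reordered record breaks the chain."""
    previous = b""
    for record, tag in chained:
        if not hmac.compare_digest(_tag(key, previous, record), tag):
            return False
        previous = tag.encode("ascii")
    return True

key = b"demo-key-held-outside-the-log-store"   # hypothetical key
log = chain_records(["alice logon", "alice read payroll.xls", "alice logoff"], key)
print(verify_chain(log, key))                                                    # True
print(verify_chain([log[0], ("bob read payroll.xls", log[1][1]), log[2]], key))  # False
```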

6.5 Event data normalisation

Normalisation is the process of converting event data into a single coherent format, so that the central repository is consistent and analysis is easier. Normalisation can occur at a number of places: during collection from the source device, on an intermediate relay device or in the central collection service. Standalone tools can also provide normalisation, but there is no overall standard format for event data collected from ICT devices.
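A minimal normalisation sketch, assuming just two input formats: traditional BSD Syslog lines (RFC 3164) and Common Log Format web server lines. Both are mapped to a hypothetical common record with `timestamp`, `source`, `event_code` and `message` fields. The schema, and the use of the HTTP status as the CLF event code, are assumptions for illustration, reflecting the absence of an overall standard. RFC 3164 timestamps carry no year or time zone, so the caller must supply the year.

```python
import re
from datetime import datetime

SYSLOG_3164 = re.compile(
    r"<(?P<pri>\d{1,3})>(?P<ts>\w{3} [ \d]\d \d\d:\d\d:\d\d) (?P<host>\S+) (?P<msg>.*)"
)
CLF = re.compile(
    r'(?P<host>\S+) \S+ (?P<user>\S+) \[(?P<ts>[^\]]+)\] "(?P<request>[^"]*)" '
    r"(?P<status>\d{3}) (?P<size>\d+|-)"
)

def normalise(line, year):
    """Map one raw log line to a common record, or return None if unrecognised."""
    m = SYSLOG_3164.match(line)
    if m:
        # RFC 3164 omits the year and time zone, so the year is supplied by the caller.
        ts = datetime.strptime(f"{year} {m['ts']}", "%Y %b %d %H:%M:%S")
        return {"timestamp": ts.isoformat(), "source": m["host"],
                "event_code": int(m["pri"]), "message": m["msg"]}
    m = CLF.match(line)
    if m:
        ts = datetime.strptime(m["ts"], "%d/%b/%Y:%H:%M:%S %z")
        return {"timestamp": ts.isoformat(), "source": m["host"],
                "event_code": int(m["status"]), "message": f"{m['user']} {m['request']}"}
    return None

print(normalise("<34>Oct 11 22:14:15 mymachine su: 'su root' failed for lonvick on /dev/pts/8", 2015))
print(normalise('127.0.0.1 - frank [10/Oct/2000:13:55:36 -0700] "GET /apache_pb.gif HTTP/1.0" 200 2326', 2015))
```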

6.6 Event data parsing

Normalised data can only provide limited information, as the normalised format is typically the ‘lowest common denominator’ of the possible data formats. For instance, normalised Syslog includes the following:

- timestamp (date and time)

- event code (for Syslog this is a single priority number that encodes the ‘facility’ raising the message and a ‘severity’ indicator)

- source (hostname or IP address raising the message)

- process identifier (process name)

- message text

The message text is potentially the most informative part of the collected data, as it will usually include any useful message parameters (for example, the name of a file to which access was refused, or the name of the user requesting the access). Data parsing extracts these message parameters to provide additional security monitoring information. A limited amount of manual parsing is possible on human-readable message texts; however, most require some form of expert interpretation. There are proprietary tools that can automatically interpret a broad range of system message texts, including those generated by specific applications.
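Parsing pulls parameters out of free-text messages. The sketch below extracts the user, source address and port from an authentication failure message. The message layout is an assumption modelled on common OpenSSH log lines, and the pattern and function names are hypothetical; each additional message format needs its own pattern, which is why automated interpretation tools are valuable.

```python
import re

# Assumed layout, modelled on OpenSSH "Failed password" messages.
FAILED_PASSWORD = re.compile(
    r"Failed password for (?P<invalid>invalid user )?(?P<user>\S+) "
    r"from (?P<ip>\S+) port (?P<port>\d+)"
)

def parse_auth_failure(text):
    """Return the parameters of a failed-password message, or None if it does not match."""
    m = FAILED_PASSWORD.search(text)
    if m is None:
        return None
    return {
        "user": m["user"],
        "source_ip": m["ip"],
        "port": int(m["port"]),
        "account_exists": m["invalid"] is None,   # 'invalid user' means no such account
    }

print(parse_auth_failure("Failed password for invalid user admin from 203.0.113.7 port 52211 ssh2"))
```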

There are software collection agents that bypass the parsing issue by providing detailed security monitoring information in the specific format required. It is also possible to use logging methods in which the monitored devices provide information in more structured formats, such as SNMPv3 Management Information Base (MIB) objects or XML-format log records.

6.7 Event data correlation

Correlation is the process of assembling event data from different devices into sets of related sequences. Often a single user action of interest to a security monitoring objective will trigger a whole series of subsequent events, each of which generates event data. For example, a system manager creating a new user account on a Windows domain will generate a series of recordable events, including the system manager logging on and requesting the account creation function. This will trigger a number of automatic actions, including:

- creation of a user authentication record on the domain server

- population of a directory record for the user

- creation of an associated email account

Event data correlation also covers the association of event data with an alert message. Alert messages on their own can be quite uninformative, sometimes deliberately so, and need to be linked to their associated event data to establish their true meaning and implications.

The event data correlation process can be partially automated, but needs to be backed up by expert interpretation to confirm the associations. Correlation can help to distinguish events that are security incidents from those that are false positives. When security monitoring covers only a few devices, it is possible to carry out event correlation manually, by extracting events and aligning them on a timeline. However, regardless of whether event correlation is manual or automated, the key to the process is the reporting integrity of all the monitored devices. Time synchronisation is a key element, as related events should correspond closely in time, at least for short-duration transactions.
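A minimal sketch of automated correlation, assuming events have already been normalised with a user field: events from different devices are placed on a common timeline and grouped into per-user sequences whose consecutive events fall within a short gap. The per-device clock offsets show why time synchronisation matters. The `Event` type, the five-second gap and the sample data are hypothetical, and any grouping produced this way still needs expert confirmation.

```python
from dataclasses import dataclass

@dataclass
class Event:
    time: float   # seconds, as reported by the device's own clock
    device: str
    user: str
    action: str

def correlate(events, clock_offsets, gap=5.0):
    """Group events into per-user sequences in which consecutive events are at most
    `gap` seconds apart, after subtracting each device's known clock offset."""
    def corrected(e):
        return e.time - clock_offsets.get(e.device, 0.0)

    sequences, current, last_seen = [], {}, {}
    for e in sorted(events, key=corrected):
        t = corrected(e)
        if e.user in last_seen and t - last_seen[e.user] <= gap:
            current[e.user].append(e)
        else:
            current[e.user] = [e]
            sequences.append(current[e.user])
        last_seen[e.user] = t
    return sequences

events = [
    Event(100.0, "dc1", "admin", "logon"),
    Event(102.0, "dc1", "admin", "create account jsmith"),
    Event(163.5, "mail1", "admin", "create mailbox jsmith"),  # mail1 clock runs 60 s fast
]
print(len(correlate(events, {"mail1": 60.0})))  # 1: one related sequence
print(len(correlate(events, {})))               # 2: unsynchronised clocks split it
```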

6.8 Event data analysis

The process of analysing event data can be triggered in response to an alert or undertaken as part of an audit, spot check or other routine investigation. It involves running queries against the consolidated event data, and can include any of the following:

- performing checks to confirm alerts are genuinely indicative of security incidents worthy of further investigation and are not false alarms

- supporting security investigations by data mining and analysis in relation to specific end points, specific users or specific resources

- providing information relating to compliance checks performed against specific aspects of online security policy

- providing information on normal online behaviour against which deviations can be measured

- supporting the development of expert knowledge to enable the detection of abnormal or unexpected patterns of behaviour that may indicate security incidents

- providing information for the production of regular statistical results for inclusion in management reports

- providing information to support housekeeping activities

Event data analysis can be time-consuming and resource intensive, so it must be prioritised according to the original security monitoring objectives. However, it is an essential part of security monitoring, as the collected event data provides little or no benefit on its own.
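Two typical analysis queries, a per-user investigation and a statistical summary for management reporting, can be sketched over normalised events held as dictionaries. The field names and sample data are hypothetical; in practice such queries would usually run in the query facility of the SIEM or log repository.

```python
from collections import Counter

def events_for_user(events, user):
    """Investigation query: all events associated with one user, in time order."""
    return sorted((e for e in events if e["user"] == user), key=lambda e: e["time"])

def summarise(events):
    """Management-report statistic: event counts per (category, user)."""
    return Counter((e["category"], e["user"]) for e in events)

events = [
    {"time": 3, "user": "alice", "category": "logon_failure"},
    {"time": 1, "user": "alice", "category": "logon_failure"},
    {"time": 2, "user": "bob", "category": "logon"},
]
print([e["time"] for e in events_for_user(events, "alice")])  # [1, 3]
print(summarise(events).most_common(1))  # [(('logon_failure', 'alice'), 2)]
```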