Explaining Uncertainty in UK Intelligence Assessment

Published 24 March 2025

Intelligence assessments support decision-making in environments where information is often incomplete, conflicting or unreliable, and therefore open to different interpretations. Intelligence analysts seek to resolve uncertainty but cannot fully eliminate it. We therefore always communicate to our customers the doubt that remains in our key analytical judgements once our analysis is complete, highlighting its potential impact on the assessment and on any resulting decisions.

We use two frameworks to describe different but related aspects of uncertainty in intelligence assessments:

(i) Probability Yardstick - We use this standard set of terms in probabilistic judgements to describe our assessed likelihood that a statement is true or that an event will occur, is occurring or has occurred; and

(ii) Analytical Confidence Ratings (AnCRs) and statements - We use these ratings, which are based on a standard set of evaluation criteria, to make clear the strengths and limitations of the key judgements made. Analytical confidence can also indicate how susceptible a judgement is to change, particularly for judgements about the future.

Clearly communicating uncertainty allows customers to take it into account when making decisions based on our assessment.

PHIA Probability Yardstick

The intelligence assessment community use terms such as unlikely or probable to convey the uncertainty associated with intelligence judgements. These terms are used instead of numerical probabilities (e.g. 55%) so that judgements are not read as more precise than they are, because most intelligence judgements are not based on quantitative data. A Yardstick sets out the approximate numerical probability to which each term corresponds. This ensures that readers understand a judgement as the analyst intends. Rigorous use of a Yardstick also ensures that analysts themselves make clear judgements and avoid terms that imply a judgement without stating what it is (e.g. “if X were to occur then Y might happen”).

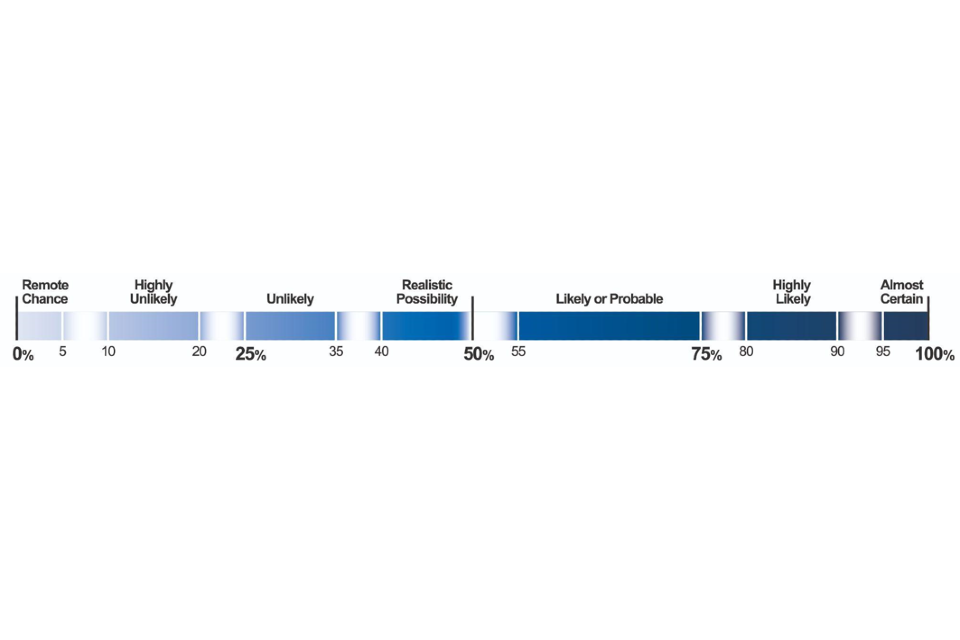

The Professional Head of Intelligence Assessment (PHIA) Probability Yardstick divides the probability scale into seven ranges and assigns a term to each. The terms and ranges were chosen with reference to academic research, and they align as closely as possible with how an average reader understands the terms in the context of what they are reading. Below is an example of how the Yardstick appears in intelligence assessments.

- >0% - ≈5%: Remote Chance

- ≈10% - ≈20%: Highly Unlikely

- ≈25% - ≈35%: Unlikely

- ≈40% - <50%: Realistic Possibility

- ≈55% - ≈75%: Likely or Probable

- ≈80% - ≈90%: Highly Likely

- ≈95% - <100%: Almost Certain
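In effect, the Yardstick is a lookup table from probability ranges to terms. The Python sketch below encodes the seven ranges listed above and returns the term for a given assessed probability. It is purely illustrative and is not an official PHIA tool: the names `Band`, `YARDSTICK` and `yardstick_term` are hypothetical, and treating each approximate (≈) boundary as an exact cut-off is a simplifying assumption.

```python
from typing import NamedTuple, Optional


class Band(NamedTuple):
    """One Yardstick range; bounds are probabilities between 0 and 1."""
    low: float
    high: float
    term: str
    low_inclusive: bool = True
    high_inclusive: bool = True


# Hypothetical encoding of the ranges listed above. Each "≈" value is treated
# as an exact boundary (an assumption; the Yardstick itself is approximate).
# ">0%", "<50%" and "<100%" become exclusive bounds.
YARDSTICK = (
    Band(0.00, 0.05, "Remote Chance", low_inclusive=False),
    Band(0.10, 0.20, "Highly Unlikely"),
    Band(0.25, 0.35, "Unlikely"),
    Band(0.40, 0.50, "Realistic Possibility", high_inclusive=False),
    Band(0.55, 0.75, "Likely or Probable"),
    Band(0.80, 0.90, "Highly Likely"),
    Band(0.95, 1.00, "Almost Certain", high_inclusive=False),
)


def yardstick_term(p: float) -> Optional[str]:
    """Return the Yardstick term for probability p, or None if no range covers it."""
    if not 0.0 <= p <= 1.0:
        raise ValueError(f"probability must be between 0 and 1, got {p}")
    for band in YARDSTICK:
        above_low = p >= band.low if band.low_inclusive else p > band.low
        below_high = p <= band.high if band.high_inclusive else p < band.high
        if above_low and below_high:
            return band.term
    # p lies in a gap between ranges (e.g. 0.07) or is exactly 0 or 1.
    return None


if __name__ == "__main__":
    for p in (0.03, 0.07, 0.3, 0.6, 0.97):
        print(f"{p:.0%}: {yardstick_term(p)}")
```

Values that fall between the listed ranges (for example 7%, or exactly 50%) return `None` rather than being rounded to the nearest term. The ranges as listed leave those gaps, and this sketch does not assume how an analyst would resolve them.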

Analytical Confidence Rating (AnCR) Framework

Whereas probability reflects the likelihood that a statement is true, analytical confidence reflects the soundness and stability of the foundations on which that assessment of likelihood rests. Readers of an assessment can use confidence ratings and statements to judge how much weight to place on it when making decisions. These ratings and statements flag remaining uncertainties, note factors beyond our control and identify where we can strengthen assessments to better inform future decision-making.

AnCRs are expressed in standardised terms. One of three ratings is assigned: High, Moderate or Low, with a lower rating indicating greater uncertainty. The choice of AnCR is informed by a PHIA evaluation tool, which supports the systematic evaluation of criteria in three categories: Information Base, Analytical Rigour and Complexity & Volatility. Information Base considers the information and sources on which an assessment is based. Analytical Rigour considers how we have examined the information in order to understand it. Complexity & Volatility considers how the topic or environment being analysed affects the assessment, such as how quickly a situation is evolving. Applying a standard process and terminology reduces subjectivity in the evaluation, enabling consistency in how the relative strengths and limitations of an assessment are identified, explained and communicated.

A supporting AnCR statement sets out the specific sources and effects of the uncertainty that remains after analysis and, where appropriate, how confidence could be increased. Giving readers specific information about the strength of judgements better equips them to make informed decisions.