CDEI AI Barometer

Updated 23 June 2020

AI Barometer

1. Chair’s Foreword

The terms of reference for the Centre for Data Ethics and Innovation (CDEI) call on us to scan for opportunities and risks arising from the use of artificial intelligence, and to identify gaps in our national response. It is a little over a year since these terms were published. Since then, the urgency of knowing that we can safely and effectively deploy new data driven technologies has been demonstrated in a tragic and global way by the COVID-19 pandemic.

The AI Barometer provides a system-wide view of how AI and data are being used across the UK in five key sectors. It highlights where there are opportunities for greater use and where there are barriers to responsible adoption. It draws on the expertise of over one hundred participants from industry, academia, civil society and government. The research that underpins the AI Barometer predates the pandemic. But the conclusions apply now with even greater force.

The AI Barometer is a community-informed view of what we should be focusing on as a country. Within each of the sectors, risks and opportunities have been ranked, debated and analysed for underlying factors. Views differed, of course, and areas where there is less agreement can be seen in the detailed analyses presented here. But the overall conclusions paint an emerging picture of what is foremost on the minds of experts across different disciplines. As we develop the Barometer we will increase the range of sectors looked at and the numbers of people engaged to broaden and deepen our understanding.

I would encourage you to explore the wealth of detail set out in each of the five sector chapters. But there are two overarching messages that are worth highlighting here.

The first is that there are a number of ‘harder to achieve’ opportunities of enormous potential social benefit but which are unlikely to be realised without concerted government support and a clear national policy. These ‘harder to achieve’ opportunities include a fairer justice system; more efficient de-carbonisation; and, of course, more effective public health research and disease tracking. These opportunities have a number of common characteristics: they require coordinated action across organisations or ecosystems; they involve the use of very large scale complex data about people; and they affect decisions that have an immediate and significant impact on people’s lives.

The second overarching conclusion is that there are a number of common barriers to achieving these ‘harder to achieve’ benefits. Some relate to the workforce – the skills and diversity of those working on these problems. Some involve our state of knowledge, for example, about what the public will accept as ethical. Others relate to the data governance and regulatory structures we currently have in place. Concern about the quality and availability of data and its related infrastructure was a consistent theme, as was concern about the lack of clarity in how regulation applied to the use of data in particular circumstances, and a lack of transparency about how data was actually being used.

These issues contribute to one fundamental barrier – low levels of public trust. As we have seen in the response to the COVID-19 pandemic, confidence that government, public bodies and private companies can be trusted to use data for our benefit is essential if we are to address the major risks that threaten our society, from pandemics, to global warming, to social fragmentation.

In its first year, the CDEI’s programme has focused on clarifying areas of regulatory uncertainty, with reports on online targeting and algorithmic bias (to be published shortly). As we plan our programme for the future we will be looking at how the CDEI and the country can address the full range of barriers set out in this report.

The most promising benefits will not be realised without a coordinated national response.

AI has huge potential to address key societal challenges such as climate change, provision of health and care for an aging population, and inequality. It presents concrete opportunities through the potential for:

- Operating an efficient green energy grid capable of managing decentralised power generation and storage

- Identifying and tracking public health risks at speed

- Using automated decision support systems in health, education and criminal justice in a way that reduces bias

- Understanding the impact of automated services on vulnerable people and supporting them better.

- Tackling misinformation while respecting freedom of speech

These most promising opportunities often share key characteristics: the use of complex data flows about individuals; a direct impact on individuals and their rights; and coordination across organisations and ecosystems – and this means realising them will involve overcoming significant common barriers.

Roger Taylor

Chair

2. Overview

2.1 What is the AI Barometer?

The AI Barometer is an analysis of the most pressing opportunities, risks, and governance challenges associated with AI and data use, initially across five key UK sectors. Over 100 experts took part in workshops and scoring exercises to produce a community-informed view of these factors. These outputs will inform the work of the CDEI and our advice to the UK government on its policy priorities. Full details are available in our methodology.

The current age of data-driven technology is unlike anything we have seen before. Large-scale technological change is occurring at an unprecedented pace, which the global response to COVID-19 has only accelerated, with far-reaching implications across all aspects of our lives. It comes accompanied by an overwhelming volume of commentary and claims, for which the evidence - and extent of sensationalism - can often be unclear. In the face of all this, it can be difficult to discern which issues most require our attention.

The ambition of the AI Barometer is to provide a much needed system-wide view of how AI and data is being used across the UK. Having a broad view of the landscape allows us to understand where common challenges are being experienced, and how different contexts drive how beneficial or harmful AI use might be. In a highly interconnected world, it also helps us know how technological, policy, and regulatory developments in one sector may influence others.

2.2 Key findings

-

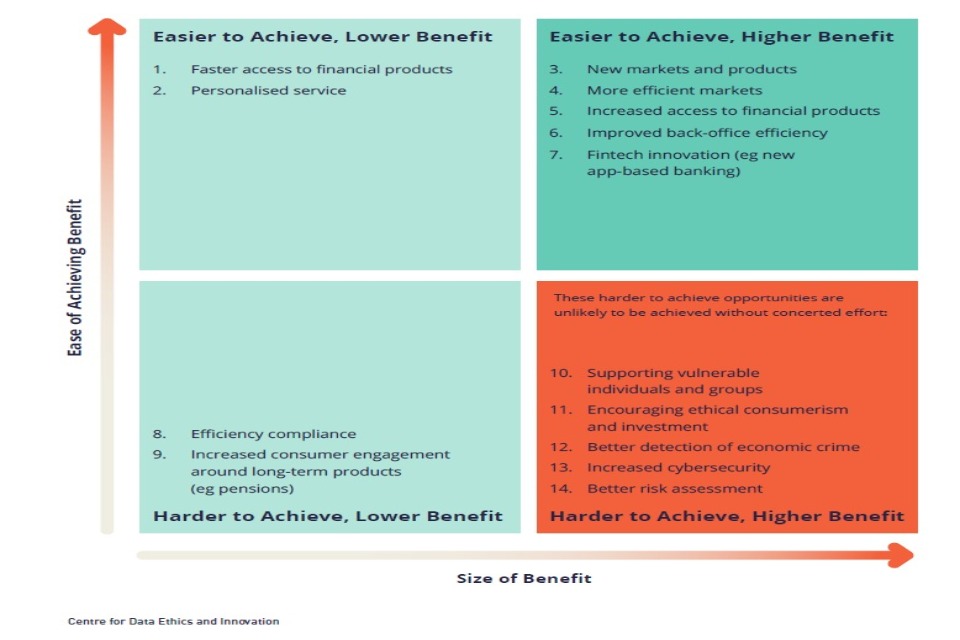

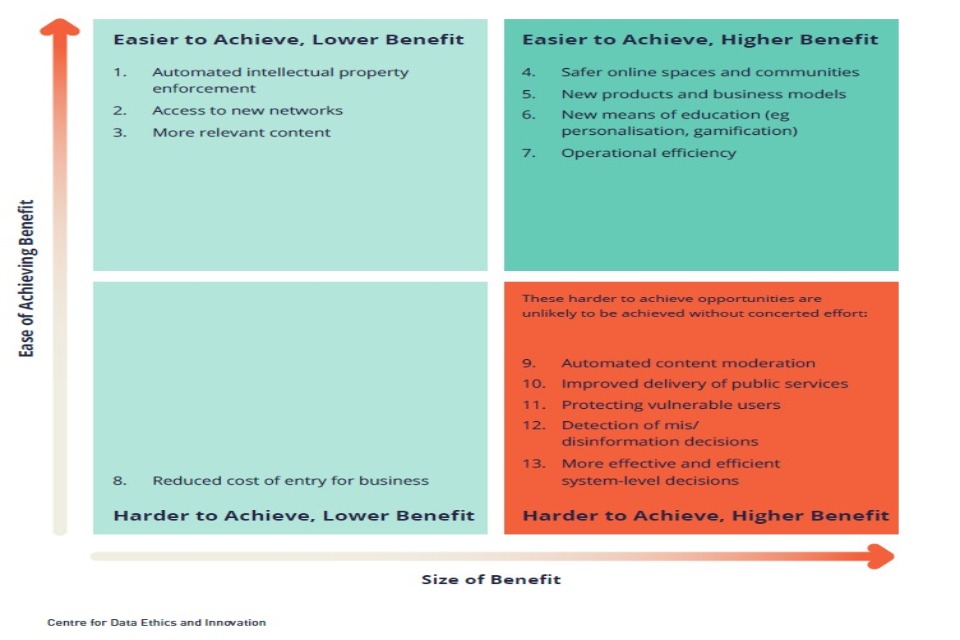

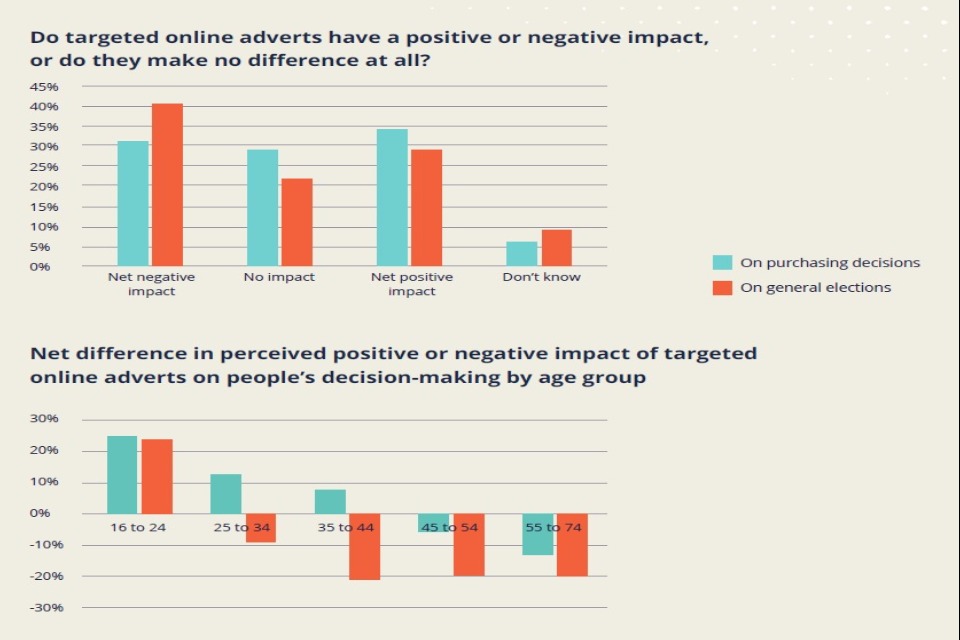

The exercise highlighted the numerous opportunities that AI and data can offer, which many of our panellists believed we have only begun to tap into, for example in improving content moderation on social media, supporting clinical diagnosis in healthcare, and detecting fraud in financial services. Even those sectors that are mature in their adoption of digital technology (e.g. the finance and insurance industry) have yet to maximise the benefits of AI and data use.

-

However, some opportunities are easier to realise than others. ‘Easier to achieve’ innovations tend to involve the use of AI and data to free up time for professional judgement, improve back-office efficiency and enhance customer service. ‘Harder to achieve’ innovations, in contrast, involve the use of AI and data in high stakes domains that often require difficult trade-offs (e.g. police forces seeking to use facial recognition must carefully balance the public’s desire for greater security with the need to protect people’s privacy).

-

Alongside looking at opportunities, our panellists were asked to rank a series of risk statements according to their impact and likelihood. Some of their judgements were to be expected, for example, technologically-driven misinformation scoring highly in healthcare. Yet the scoring exercise also brought to the surface risks that are less prominent in media and policy discussions, for instance the differences between how data is collected and used in healthcare and social care, and how that limits technological benefits in the latter setting.

-

While the top-rated risks varied from sector to sector, a number of concerns cropped up across most of the contexts we examined. This includes the risks of algorithmic bias, a lack of explainability in algorithmic decision-making, and the failure of those operating technology to seek meaningful consent from people to collect, use and share their data. This highlights the value of cross-sector research and interventions.

-

Several barriers stand in the way of addressing these risks and maximising the benefits of AI and data. These range from market disincentives (e.g. social media firms may fear a loss of profits if they take action to mitigate disinformation) to regulatory confusion (e.g. oversight of new technologies like facial recognition can fall between the gaps of regulators).

-

While many of these barriers are daunting, they are far from intractable. Incentives, rules and cultural change can all be marshalled to address them. This document highlights how regulators, researchers and industry are rising to the challenge with new interventions, which will pave the way for more ethical innovation.

-

Three types of barrier merit close attention: low data quality and availability; a lack of coordinated policy and practice; and a lack of transparency around AI and data use. Each contributes to a more fundamental brake on innovation – public distrust. In the absence of trust, consumers are unlikely to use new technologies or share the data needed to build them, while industry will be unwilling to engage in new innovation programmes for fear of meeting opposition and experiencing reputational damage.

-

Against this backdrop, the CDEI is launching a new programme of work that will address many of these institutional barriers as they arise in different settings, from policing, to the workplace, to social media platforms. In doing so, we will work with partners in both the public and private sectors to ensure that the sum of our efforts are greater than their individual parts.

3. Contents

In this first edition of the AI Barometer, you will find:

-

A summary of our findings, covering common patterns across opportunities, risks, and governance.

-

Chapters for each of the five sectors we analysed:

-

Criminal Justice

-

Financial Services

-

Health and Social Care

-

Digital and Social Media

-

Energy and Utilities

-

Details of our Methodology.

-

Acknowledgements recognising the contribution of our sector panellists.

4. Our Methodology

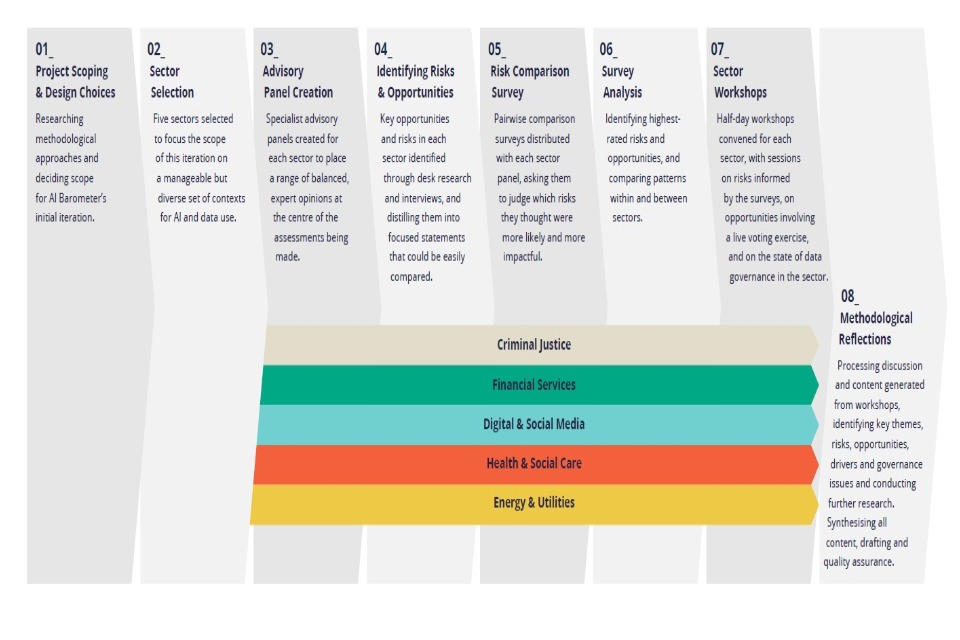

The AI Barometer was developed using the following approaches. Full details are available in our methodology.

-

AI and data-driven technology: We looked at the potential impact of technologies involving the use and collection of data, data analytics, machine learning, and other forms of artificial intelligence.

-

Sectoral approach: We focused on a mix of five key sectors, knowing that AI and data-driven technology can pose radically different opportunities and risks depending on the contexts in which they are deployed. A sectoral approach also frames our findings within boundaries that policymakers and regulators are familiar with.

-

A community-driven view: Understanding the ethical impacts of AI and data use is an interdisciplinary endeavour. We convened expert panels made up of different communities within each sector, which ensured our work was informed by a diverse set of expertise and perspectives. Panellists included representatives from industry, academia, civil society and government. This report reflects the input of these panels.

-

A focus on the opportunities and risks of AI and data use: We used policy and academic literature to list and categorise the opportunities and risks apparent in each sector over the next three years. This provided a starting point for how uses of technology are understood in current debates.

-

Comparative tools to ensure fair judgements: Each of our expert panellists was asked to complete a pairwise comparison survey, whereby they were presented with two risk statements at a time and asked to choose the one that appeared most likely or impactful. This method allowed for a large number of risks to be meaningfully assessed and ranked.

-

Deliberation, discussion and research: We used these survey results to provoke discussion in a series of expert panel workshops. Here we explored the drivers and consequences of the different opportunities and risks we had previously identified. We also discussed the governance regimes present in different sectors. We undertook further research to unpack the issues raised in the workshops and to verify the claims that were made.

5. Sector Selection

We chose a diverse set of sectors to examine for the first edition of the AI Barometer, to understand how opportunities and risks vary by context. Specifically, we selected sectors that varied in the extent of:

-

Personal data use by services and systems

-

Digital maturity, and current level of AI and data analytics use

-

Public and privately commissioned and delivered services

-

Governance systems and approaches

6. Expert Panel Composition

Each panel was composed of a balanced set of experts and stakeholders within each sector, typically including:

-

Government

-

Regulators and other arms-length bodies

-

Tech industry

-

Sector organisations (e.g. service providers, businesses using data-driven technology)

-

Membership bodies

-

Academia

-

Civil society organisations

See the Acknowledgements for a full list.

7. Summary of Findings - Opportunities

The exercise highlighted that AI and data present significant opportunities that have yet to be fully realised. This is true for all of the five sectors in scope, including healthcare and finance, which are typically seen as fast adopters of technology.

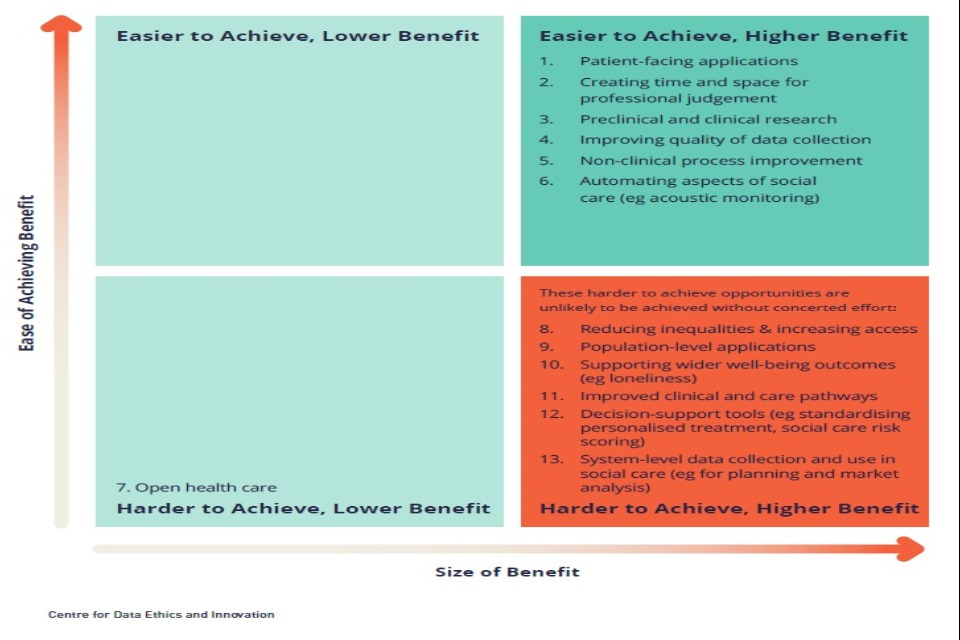

Some of the highest-potential benefits are among the hardest to achieve, typically involving the toughest ethical questions. Others were seen as relatively easy to deliver, as described in the table below. The boxes on the left-hand side highlight characteristics of use cases that appear as common across the sectors.

| Harder to Achieve | Criminal Justice | Financial Services | Health & Social Care | Digital & Social Media | Energy & Utilities |

| Systemic improvements that require market or system coordination | Predictive analytics to improve existing riskscoring | Fraud and money laundering detection | Public health research and tracking | Online content and marketplace moderation | Better data-driven planning to meet decarbonisation goals |

| More effective risk assessment and decision-making supported by algorithms, without exacerbated bias | Facial recognition technology to increase policing capability | Better risk assessment and management | Clinical diagnosis and decision support systems | Combating mis/disinformation | Proactive/predictive network and asset maintenance |

| Improved support of vulnerable people while preserving autonomy | More proportionate and unbiased court decisions | Regulatory compliance | Reducing health inequalities | Supporting vulnerable users | Coupling markets, enabling a whole-systems approach to innovation and energy usage |

| Combating misinformation without affecting people’s rights | Supporting vulnerable consumers |

| Easier to Achieve | Criminal Justice | Financial Services | Health & Social Care | Digital & Social Media | Energy & Utilities |

| Improved corporate and backoffice efficiency | Improved staff wellbeing | Increased access to financial products | Patient-facing apps and services | Improved organisational efficiency | Enhanced consumer choice and control |

| Freeing up time for professional and human judgement | Better allocation of police resources and automated back-office functions freeing up professional time | Innovation in fintech banking services and interfaces | Workforce management | Tracking, profiling and targeted advertising | Increased energy efficiency through automation of power management |

| New products and business models | Pre-clinical and clinical research | Better search and recommendations | |||

| Better consumer-facing applications, choice and control | |||||

| Lower-impact decision-making support |

8. Summary of Findings – Risks

Alongside highlighting opportunities of AI and data use, the exercise identified a broad set of risks resulting from the use of this technology - where risk means the chance of a harm occurring. The below table sets out notable risks found in each sector, as well as notable risks commonly found across all sectors. It is important to note that these risks are not certain to unfold, although many are already playing out in some form. Panellists were asked to score risks according to their perceived impact and likelihood.

| Top Common Risks | Criminal Justice | Financial Services | Health & Social Care | Digital & Social Media | Energy & Utilities |

| Algorithmic bias leading to discrimination featured highly across almost all sectors | Facial recognition technology presents numerous risks, including concerns around accuracy and bias, personal data retention, public/private data-sharing, and its impact on privacy | Bias in algorithmic decision-making systems such as algorithms used for credit-scoring or for pricing insurance | Health mis/ disinformation provided through apps, search, websites and social media | Manipulation and political micro-targeting based on powerful inferences from personal data, affecting people’s autonomy and trust in institutions | Loss of public benefits via underuse such as better local-level energy system planning, interventions to tackle fuel poverty, and meeting decarbonisation targets – largely due to how data can be shared across the supply chain |

| Lack of explainability of AI systems | Bias in algorithmic decision-making systems such as those judging reoffending risk or appropriate sentencing | Higher-impact cyberattacks, due to the scope for adversarial attacks on AI systems, and low explainability making it difficult to identify impact of attacks | Bias in algorithmic decision-making systems which manifests in complex ways in health contexts | Market power of platforms which hold large volumes of personal data, with implications for markets and consumers | Regulator resourcing in the context of an industry adapting to decarbonisation |

| Regulator resourcing impacting the ability of governance systems to address AI and data use | New demands on data by the criminal justice system, particularly of victims of serious crimes | Concentration of data in a few large actors within the sector, with impacts on market fairness, innovation and consumers | Worsened health inequalities due to poor data availability and unequal access to technological benefits | Addictive design leading to excessive use of digital platforms, with potential mental health impacts | Digital exclusion of some households and businesses from the benefits of increased AI and data use |

| Failure of consent mechanisms, leading to the mass collection and use of data without people’s consent | Underuse in social care due to low digital and data maturity, and lack of structural incentives for improvement | ||||

| Loss of public trust in institutions due to problematic AI and data use |

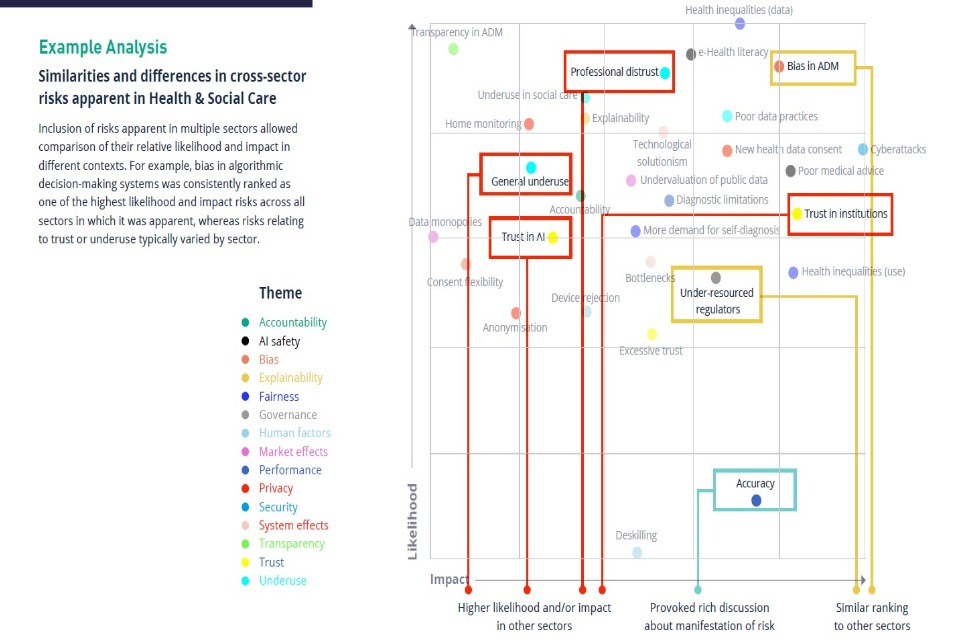

While the use of AI and data presents unique challenges for each sector, our analysis found that a large number of risks were common in every sector examined. This table reflects how risks that are common across sectors were perceived in relative terms by our expert panels, from higher to lower risk, as reflected in the risk quadrants within each sector chapter.

Some familiar risks, such as algorithmic bias, were unsurprisingly prominent. However, the rating of others was perhaps more surprising, such as the failure of consent mechanisms for personal data collection and use, which was deemed a high or medium risk in every context. The table also reveals that the severity of risks, while present in every sector, can vary. The undervaluation of public data, for instance, is perceived as a far greater hazard in healthcare than in any other sector. The extent and severity of the common risks experienced in a given sector typically varied by:

-

How advanced the use of AI and data is

-

The extent of personal data use

-

The direct impact that decisions and system functionality (e.g. the provision of energy) in that sector have on individuals and other actors

-

The nature of interactions between public and private actors

-

Pre-existing governance approaches (e.g. pre-market certification requirements for products such as medical devices).

9. Summary of Findings – Barriers to Ethical AI

The exercise helped reveal several barriers to the ethical use of AI and data in our five sectors. These barriers prevent risks from being adequately mitigated, while also hampering innovation and denying society the full benefits of the technology.

A lack of funding, for example, can block attempts in the public sector to launch new data sharing projects, especially where those projects take years to bear fruit. Yet not all barriers relate to resourcing. Some are borne from a lack of coordination and communication, such as where there is confusion about which regulators govern a new application of AI. In other cases, barriers are more fundamental, such as when there is a lack of evidence to substantiate claims that a perceived risk truly is a risk.

10. Data barriers

- Low data quality, availability and infrastructure - The use of poor quality or unrepresentative data in the training of algorithms can lead to faulty or biased systems (e.g. diagnostic algorithms that are ineffective in identifying diseases among minority groups). Equally, the concentration of market power over data, the unwillingness or inability to share data (e.g. due to non-interoperable systems), and the difficulty of transitioning data from legacy and non-digital systems to modern applications can all stymie innovation.

11. Knowledge barriers

-

Insufficient evidence - The impact of AI and data-driven technology is not always known. This is often the case for new applications and innovations (e.g. synthetic media), which have yet to be studied in depth. Insufficient evidence prevents decision-makers from knowing whether and how to intervene to promote innovation.

-

Lack of consensus - There is often disagreement among the public about how and where AI and data-driven technology should be deployed. Innovations can pose trade-offs (e.g. between security and privacy, and between safety and free speech), which take time to work through.

12. Workforce barriers

-

Digital and data skills - Data skills are in high demand across the economy, meaning that many organisations – particularly in the public sector – struggle to find the talent they need to address risks and maximise opportunities. A lack of skills and capacity is much a feature of the regulatory landscape as it is of the industry landscape, affecting both our ability to adopt technology and to govern it well.

-

Workforce diversity - A lack of diversity in the workforce can mean AI and data-driven technology is developed and deployed without consideration of the needs of every group in society. This is a problem found not just in tech firms but across the tech ecosystem, from data labelling organisations to governance bodies.

13. Market barriers

-

Funding gaps - Significant investment is often required to mitigate the most intransigent risks and hardest to achieve opportunities (e.g. cleaning up public sector datasets and making them available for research and development; or developing detection systems to remove deepfake content from tech platforms). This expense can be difficult to win support for when innovation projects take years to bear fruit.

-

Risks to profit - Private firms can lack incentives to address risks posed by AI and data-driven technology (e.g. social media platforms may fear that addressing AI-driven disinformation could affect their revenue).

14. Governance barriers

-

Regulatory and policy development and coordination - The approaches, guidance, and training used across the development and deployment of AI and data-driven systems is often highly localised (e.g. with different police forces setting their own policies for FRT use). Regulatory approaches can vary between sectors and between regulators operating within one sector. This can lead to confusion among both those deploying and overseeing technology.

-

Lack of transparency - Private firms and public sector organisations are not always transparent about how they use AI and data-driven technology or their governance mechanisms. This prevents scrutiny and accountability, which could otherwise spur ethical innovation.

15. Trust barriers

- Lack of trust - Users of AI and data-driven technology often lack confidence that it is safe to use or is being designed in their interests. This can deprive people of the benefits of technology (e.g. discouraging them from using AI-driven healthcare apps). A lack of trust can also temper industry’s appetite for engaging in innovation, for fear of pushback from their customers (e.g. energy firms may be unwilling to ask customers to share more household data, which could otherwise improve energy efficiency services).

One barrier to ethical AI and data use that deserves close attention is the need for good governance. Whereas some other barriers describe external factors that are difficult to control directly (e.g. public trust), governance can be shaped by policymakers and regulators through a combination of measures, from legislative changes to injections of funding. Our panellists highlighted their understanding and perceptions of how regulation of AI and data use is working, helping build a picture of the barriers to better governance described below - albeit one that very much varies by context and sector (for example, some regulators have dedicated considerable resources to examining the impact of AI and data use).

16. Barriers to effective governance

-

AI competes for attention and resource: AI and data-driven technology are not the only issues on the minds of regulators. In finance, for example, regulators are grappling with the growth of cryptocurrencies, and in energy, regulators are focused on achieving net-zero carbon emission targets, while also managing an influx of new suppliers into the market. Regulators in most sectors are having to respond to increased cybersecurity threats. Each of these trends are competing for regulators’ limited resources and bandwidth, which in many cases have not significantly increased in recent years.

-

Highly devolved or distributed systems: In many instances, governance bodies are operating in fragmented systems with high levels of devolved decision-making. In policing, for instance, individual police forces have considerable scope to experiment with new technologies like facial recognition and predictive policing algorithms, and to devise their own operating procedures. Devolved governance of this kind can make oversight of AI and data use challenging.

-

Lack of clarity about where oversight responsibility lies: Despite AI and data being commonly used within and across sectors, it is often unclear who has formal ‘ownership’ of regulating its effects. This problem is common in sectors where there are multiple regulators (e.g. in healthcare), although there are many examples of regulators coordinating their activity in relation to AI and data use through various bodies and working groups.

-

Data governance is still maturing: The introduction of GDPR and the Data Protection Act has strengthened the data governance landscape. However, panellists highlighted a lack of clarity as to how it should be interpreted in specific contexts (e.g. how to determine what is a lawful use of facial recognition technology, or what amounts to meaningful consent in the collection of data). This confusion can make organisations reluctant to share or make use of data (e.g. hospital trusts). The Information Commissioner’s new regulatory sandboxes and formal Opinions were cited favourably as useful mechanisms to address this challenge.

-

Industry fills governance vacuums: In the absence of clear, centrally-defined governance, some industry and frontline organisations are creating their own standards of AI and data use as they increase their use of technology. For example, panellists in the finance sector noted how some firms were deciding for themselves what amounts to fairness in algorithmic decision-making. While some self-direction is inevitable and welcome, too little steer from regulators could result in diverging and potentially undesirable practices.

-

Data skills shortages: Many regulators struggle to attract staff with data science and AI skill sets, given the extent to which they are in demand across the economy. Regulators are also competing for talent with private firms in their own sectors.

-

Limited data access: Some panellists thought regulators could benefit from having greater access to data about the systems and organisations they govern, although in many cases, regulators do already have powers to obtain information. The capability to access data at a systemic level could help some regulators understand whether regulation is being adhered to and individuals treated fairly (e.g. enabling energy regulators with easy access to system-level smart meter or supplier data).

17. Summary of Findings – What next?

17.1 Making the most of the AI Barometer

Over the coming months, the CDEI will promote the findings of the AI Barometer to policymakers and other decision-makers across industry, regulation and research. We hope the AI Barometer will inform their agendas, directing them to look at the most pressing issues of AI and data use as identified by our expert panels. We will encourage them to look not just at addressing the hazards posed by this technology, be it misinformation or cybersecurity threats, but also to champion new innovations that can improve our public services, bolster our economy and help people lead more fulfilling lives.

The AI Barometer will also play a role in shaping the future strategy of the CDEI. We will use its findings to help us understand where we can add the most value, looking in particular at those barriers to ethical innovation that if removed could yield the greatest gains. The CDEI has already made progress in identifying policy interventions through its two reviews on bias and online targeting. These have highlighted the importance of data access and transparency in ethical data-driven systems.

The AI Barometer itself will be expanded over the next twelve months, looking at new sectors and gathering more cross-sectoral insights.

18. Looking towards the future

As the CDEI embarks on its work programme for the coming year, we will be looking at how both we and others can address the barriers to maximising ethical AI and data use identified in this report.

| Barrier Type | Barrier to Ethical AI and Data Use | Examples of Potential Mitigating Action |

| Data Barriers | Low data quality, availability and infrastructure | Investing in core national data sets; building secure data infrastructure; trusted data sharing mechanisms; ethical data regulation |

|---|---|---|

| Knowledge Barriers | Insufficient evidence; Lack of Consensus | Researcher access to platform data; Citizen council models; ethics committees |

| Workforce Barriers | Digital and data skills; Workforce diversity | Training and education programmes e.g. AI masters; Training and recruitment policies to diversify workforce |

| Market Barriers | Funding gaps; Risks to profit | Investing in core national data sets; Requirements for public disclosure and independent audit |

| Governance Barriers | Regulatory and policy development and coordination; Lack of transparency | Development & coordination of policy; defining & aligning industry and regulatory standards; Requirements for public disclosure and independent audit |

| Trust Barriers | Lack of trust | Public education and information initiatives; Addressing the workforce and data governance barriers to ethical AI |

19. The impact of COVID-19

19.1 How is data-driven technology and AI being used in response to COVID-19?

AI and data-driven technology have played a central role in the response to the COVID-19 pandemic, both in terms of the healthcare response, and addressing its wider economic and societal impacts. Below we highlight some of the more prominent use cases being employed or considered across the world, which we have also begun tracking through our COVID-19 repository.

The CDEI is undertaking research into high-profile uses of technology in response to the pandemic, looking at what it would take to ensure they are developed and deployed to the highest ethical standards.

19.2 Supporting the immediate healthcare response to the disease

-

Speeding up medical research (e.g. using AI to understand the structure of the virus or identify promising treatment and vaccination candidates).

-

Improving diagnostic processes (e.g. using image recognition to identify viral pneumonia).

-

Using algorithms to estimate high-risk patients and triage cases.

-

Using predictive analytics and data-driven simulations to understand how the disease might spread.

-

Using data platforms to track health equipment and other assets.

-

Making population-level data publicly available to aid global COVID-19 research.

-

Prioritising the provision of official health advice through tech platforms (e.g. in search results, on social media, and through smart speakers).

19.3 Supporting the public health response and mitigating the effects of lockdown

-

Identifying vulnerable people using publicly-held data and offering them priority services (e.g. food delivery slots) that improves their ability to self-isolate.

-

Contact-tracing apps to track the spread of the disease and identify people who should isolate.

-

Identifying adherence to social distancing in public and workplaces using wearables and computer vision.

-

Automating content, advertising, and marketplace moderation in the absence of human reviewers.

-

Connecting volunteers and enabling community support on apps and social media platforms.

-

Use of video chat devices within care homes to enable contact with friends, family, and isolating residents.

-

Predicting food shortages to enable redistribution of supplies accordingly.

-

Sharing and aggregation of publicly-held data at the local level to enable better support of people during lockdown (e.g. children receiving free school meals).

19.4 Building future resilience and aiding the recovery

-

Using predictive analytics to predict future epidemics and understand how to build resilience.

-

Using data to understand the longer term impact of disease on other health factors e.g. cardiovascular risk.

-

Using digital health certificates and facial verification to support the return to normal economic activity.

-

Using novel data sources (e.g. energy data) to understand how economic activity is recovering.

19.5 Technology Trends

-

The COVID-19 crisis is causing the ‘leapfrogged’ adoption of specific technologies, applications, and business models, which has accelerated the opportunities and risks presented by particular AI and data use cases. Technologies are being used at scales and in contexts where they may have otherwise taken greater time to penetrate (e.g. the use of video chat devices in care homes). It will take some time for evidence to emerge of how beneficial or problematic particular applications have been in responding to the impacts of the pandemic (e.g. how automated content moderation compares to human moderators).

-

There has been considerable growth in the use of digital and data-driven platforms to address the effects of lockdown, including video conferencing, digital entertainment, social media, online marketplaces, and delivery networks. As well as changing the shape of some markets, these changes in behaviour mean those platforms will be benefiting from increased volumes of data that they can use to generate insights and build their services.

-

Data-sharing and use across the public sector is at a new high-watermark, and responses to the crisis has seen the rapid integration of technology platforms into public services, particularly at the national level. Many public bodies have changed how they deliver services, often by growing existing digital delivery platforms (e.g. tele-health), and these developments may permanently alter how services are delivered in future. In some instances, planning and resource may be needed to retain the benefits of the accelerated technology use, and sustainably integrate it into service delivery.

-

Specific risks identified in the AI Barometer that are likely to be heightened as a result of COVID-19 include misinformation (particularly around health issues), data concentration and platform monopolies as people and businesses increasingly rely on digital services, and the privacy impacts of increased digital surveillance that can arise with the use of technology to track the spread of the virus.

20. Criminal Justice

20.1 Overview

Scope

The scope of our sectoral analysis covered the use of AI and data-driven technology in policing, the courts, prisons, and the probation service, as well as legal services to the extent they relate to access to justice.

How is data-driven technology and AI used in criminal justice?

Applications of AI and data analytics in criminal justice are focused around a small number of use cases:

-

Facial recognition technology is used in policing to identify wanted suspects and persons of interest. It has been used for many years to retrospectively identify people in CCTV footage. However, it is now being used in live settings, for example to process footage from cameras placed in surveillance vehicles. Facial recognition technology could in future be used in combination with other equipment (such as bodycams and smartphones).

-

Risk-scoring algorithmic decision-making or supporting tools (ADMTs). ADMTs are being developed for different uses across the criminal justice sector that support decision-making around individuals, including in policing (e.g. to predict cases of domestic violence), prisons and probation (e.g. to predict the risk that someone will reoffend) and the courts (e.g. to inform sentencing decisions). Not every form of ADMT has yet been trialled in the UK.

-

Predictive crime analytics inform planning decisions by providing ‘heatmaps’ and other insights about criminal activity that help forces decide their resource deployment and responses. Several UK police forces have trialled predictive analytics, however many have ended these experiments or shifted from using external vendors to developing their own technology in-house.

-

Digital forensics, where data analytic tools can improve the capability and speed with which investigators can search through digital evidence from devices, email, and social media accounts, to determine relevance to a case, or what may need to be disclosed in legal proceedings.

Key Messages

-

Risks relating to facial recognition technology (FRT) were seen by many in our sector panel as among the most urgent to address. This reflects FRT’s transformative potential, and the fact it is being tested and deployed in a growing number of settings. Automating, scaling, and networking surveillance using AI and data-driven approaches creates qualitatively different effects than scaling up that activity using traditional human-led methods.

-

Digital maturity presents challenges. While there are ambitions to use state of the art AI and data-driven technology within the courts, they are still in the process of digitising their records and services. Digital systems across policing and prisons are often fragmented (e.g. in terms of standards and interoperability).

-

Parts of the justice system are devolved, making policy coordination difficult. For example, the existence of over forty police commissioners and chief constables mean approaches to managing data and AI can vary considerably across the country, raising the risk of confusion among the public and requiring police forces to spend time building up a significant knowledge base around a technology. Panellists said standardised technology trials would be desirable. The West Midlands Digital Ethics Committee was cited as an example of good practice. This initiative draws on the views of academic researchers and community members, with publicly available documentation and minutes, and mandates formal sign-off for new data projects, providing local accountability.

-

Effective governance depends on learning lessons from industry and other jurisdictions. UK policymakers may benefit from understanding how other national governments and our devolved administrations are managing the use of AI and data in their justice regimes. This includes Scotland, which recently introduced a Scottish Biometrics Bill to govern second generation forms of biometrics like facial images. Panellists also commented on the need to work with the private sector firms who develop these technologies, particularly to ensure they have mechanisms in place to provide accountability for major decisions. Commercial confidentiality may limit effective scrutiny of algorithmic systems, as was demonstrated in the US when investigators struggled to access the COMPAS software being deployed in US criminal courts. Commercial confidentiality may have also hindered the independent bias testing of facial recognition systems procured by UK police forces.

21. Criminal Justice: Opportunities

21.1 Key Messages

-

AI and data-driven technology promise to alter the way the justice system understands and executes human-driven concepts of fairness and accountability. However, the information and human judgement that goes into making these decisions is complex and multifaceted, and may not be fully captured by data-driven systems. Our panel viewed these opportunities, albeit sizeable, as among the hardest to achieve.

-

However, there are many opportunities for AI systems to enhance decision-making where digital technology is already being deployed. AI-based predictive analytics are a natural successor to existing actuarial risk-scoring, while facial recognition technology has the potential to greatly increase policing capability to identify known suspects, with modest workforce requirements.

-

These AI-led opportunities need to be considered alongside more conventional opportunities to improve the justice system. Our panel highlighted the risk that a novel AI-driven solution could attract greater policymaker attention and therefore funding than worthier interventions that lack the same allure, such as digitisation (e.g. of court record and decisions) and the consolidation of legacy systems. Indeed, digitisation could form the foundation for the use of AI in the sector. Better data quality and sharing were widely seen as necessary to achieving better justice outcomes.

21.2 State of the Art: Tech use in Criminal Justice

-

The Law Society has published a series of reports on the use of algorithms in criminal justice, effects of tech on the rule of law and access to justice, and a broader look at lawtech.

-

The Royal United Services Institute published a CDEI-commissioned report in March 2020 on data analytics and algorithm use in policing, including proposals for a new policy framework.

Case Study: Implementing Ethics in Policing

West Midlands Police (WMP) have established an Ethics Committee to advise the Chief Constable and Police and Crime Commissioner on the force’s data analytics projects, the first of its kind within UK policing. Its goal is to create a culture of ethics by design, and ensure that there is adequate ethical review at all stages of the research and development process.

While there are questions about the scalability and sustainability of a force-level model, the committee has influenced several decisions around police use of technology, in part due to the commitment from WMP not to proceed with technology projects without the committee’s approval.

Other police forces have established similar ethical advisory bodies, such as the London Policing Ethics Panel, which has advised London City Hall on issues such as facial recognition technology.

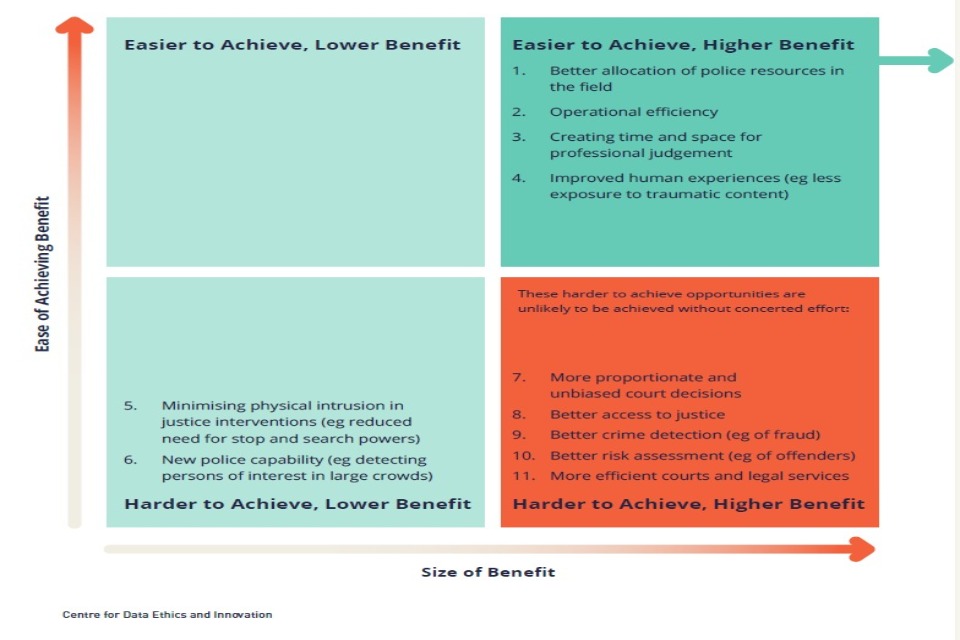

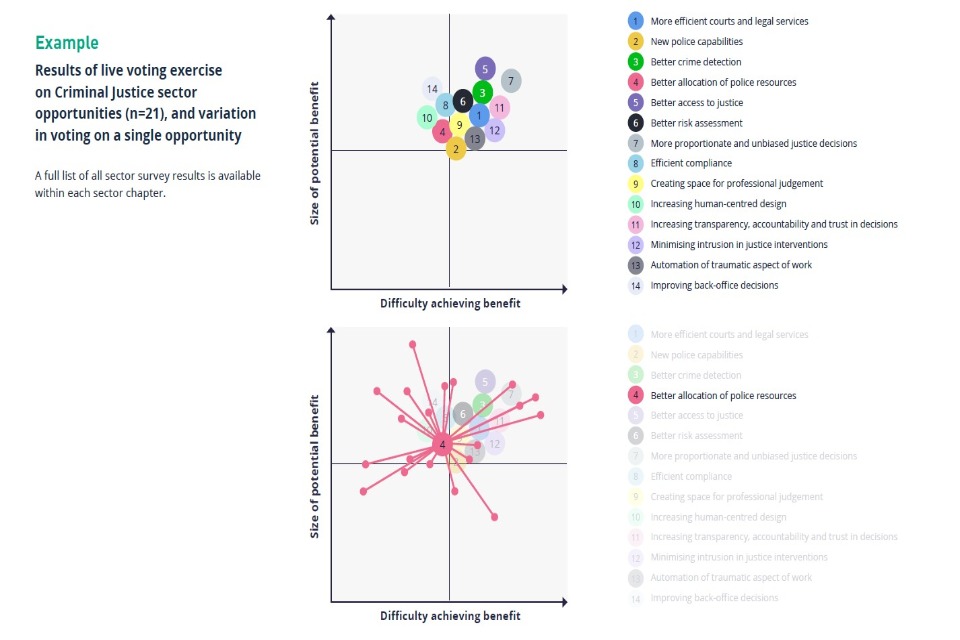

This quadrant is based on panel discussion of major AI opportunities within the Criminal Justice sector over the next three years. This diagram is not exhaustive and reflects a review of existing policy literature, workshop discussion, further socialising and additional research and analysis. See the methodology at the end of this document for further detail.

Spotlight: Improved Human

Experiences AI and data-driven technology present a number of opportunities to improve the human experience in the justice sector:

- Freeing up humans to do more valuable kinds of work. Time saved through AI tools can be reinvested in face-to-face activities or those requiring human judgement.

- Creating opportunities for accountability. The use of AI could make justice decisions more transparent by revealing previously unseen patterns of how people are treated and decisions made. Interrogable algorithms were, however, seen by our panel as hard to achieve and some way off.

- Improving occupational health through automation. For example, AI could automate traumatic aspects of a job, such as reviewing or labelling violent or pornographic imagery (see Case Study on the following page).

- Decreased physical intrusion. Use of facial recognition technology, improved electronic monitoring and automated data analysis could reduce the need for use of stop and search powers, day-today supervision in probation, and human review of sensitive data. However, the use of AI and data-driven technology may interfere with people’s privacy in new and different ways, and panellists noted intrusion need not be physical to be problematic.

Case Study: Reducing Exposure to Traumatic Content in Policing

AI tools are being deployed in policing to improve the way that content which may affect the wellbeing of officers (such as indecent images of children) is processed. As well as increasing the speed at which such content can be reviewed and improving police capabilities (e.g. by matching subjects in photos), the tools moderate and reduce the volume of content officers need to manually review, reducing the psychological pressure placed on them.

21.3 Criminal Justice: Opportunity Descriptions

-

Better allocation of police resources: Use of data-driven technologies to predict risk of crime, allowing more effective deployment of police resources.

-

Operational efficiency: Use of AI and data helps organisations to allocate back-office resources more efficiently and reduce operating costs.

-

Creating time and space for professional judgement: Automation can free people up to do more valuable and rewarding kinds of work.

-

Improved human experiences (e.g. automation of potentially traumatic aspects of work, such as reviewing or labelling violent or abusive imagery, leading to improved occupational health).

-

Minimising physical intrusion in justice interventions: For example, use of facial recognition technology or improved electronic monitoring could reduce the need for use of stop and search powers or day-to-day supervision in probation. Automated data analysis can also mean less sensitive personal data is reviewed directly by humans.

-

New police capability: use of technologies such as facial recognition to provide new policing capabilities, or existing capabilities at scales or in contexts not previously feasible.

-

More proportionate and unbiased court decisions: Use of AI and data could theoretically make justice decisions more transparent by providing more data on how people are treated, and with algorithms that can be interrogated and tested in a way that humans can’t be. In practice this was seen as hard to achieve and some way off.

-

Better access to justice: Process automation driving down the cost of providing legal services and use of chatbots to provide more affordable legal advice services.

-

Better crime detection: use of data-driven technologies to automatically identify criminal patterns of behaviour (e.g. fraud).

-

Better risk assessment: Use of data-driven technologies to better assess risk of reoffending, resulting in more proportionate interventions.

-

More efficient courts and legal services (e.g. digital court management, automated document analysis)

22. Criminal Justice: Risks

22.1 Overview

-

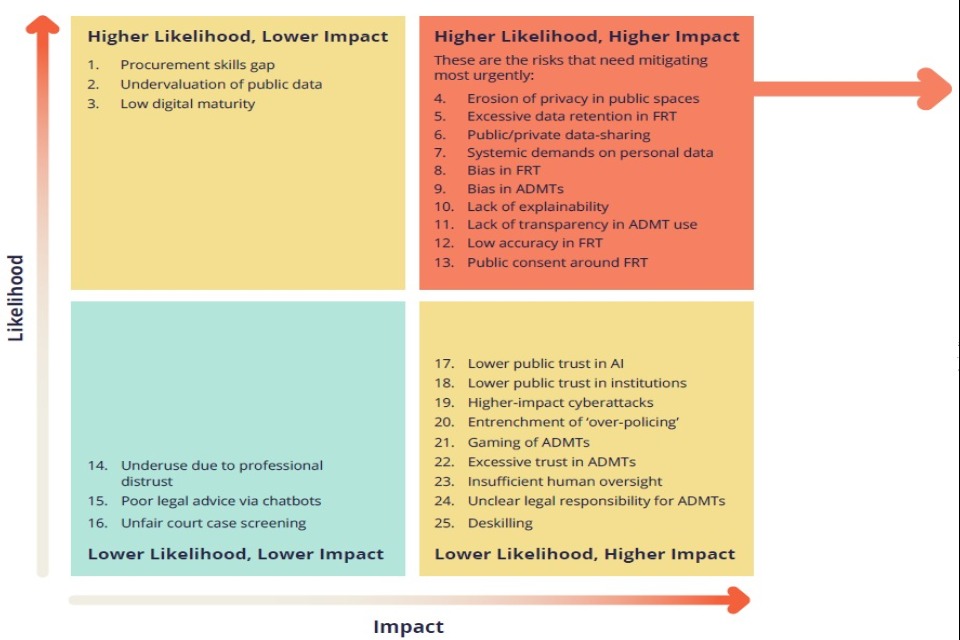

Given the significant impact that justice decisions already have on individuals, even modest deployments of AI and data-driven technology could lead to life-changing effects. Policing and court decisions often curtail people’s liberty, privacy, and other important human rights. Our panel saw the deprivation of rights (e.g. privacy) as a highly likely and highly impactful consequence of deploying AI. They also saw a potential decline in the quality and impartiality of decision-making as a major risk (e.g. with AI systems being biased and lacking in transparency).

-

Privacy risks were seen as both highly likely and highly impactful in this sector. Whereas some privacy risks were ranked highly by panels in other sectors, our criminal justice panel believed AI and data-driven technology posed a threat to privacy across a range of issues, such as the way criminal justice institutions share and retain data.

-

In keeping with our other sectors, many of the risks relating to the use of algorithmic decision-making systems were deemed to be high impact and high likelihood. This includes issues such as a lack of explainability and biased decision-making.

-

Higher impact, lower likelihood risks in need of contingency planning included black swan events such as cyberattacks, as well as future misuses of the technology that could erode the public’s trust in AI and justice institutions. Other risks in this bracket include the deskilling of the justice workforce due to task automation, and many relating to the use of algorithmic decision-making tools, including the absence of effective oversight, confusion over who is legally responsible for the technology, gaming of the system, and excessive trust.

-

Higher likelihood, lower impact risks needing active management mostly related to the digital and skills infrastructure needed to support ethical AI use, including the possibility of a procurement skills gap, a lack of digital maturity in the sector, and the undervaluation of public data.

22.2 Top Risks at a Glance

| Most likely | Most impactful | Most Combined Likelihood of Impact |

| 1. Excessive data retention by facial recognition technology systems | 1. Entrenchment of ‘over-policing’ | 1. Privacy in public / quasi public spaces eroded by FRT |

| 2. Lack of awareness and agreement on police use of FRT | 2. Insufficient human oversight of ADM | 2. Lack of explainability for technical or commercial reasons |

| 3. Lack of digital maturity | 3. Bias of algorithmic decision-making systems | 3. Bias of algorithmic decision-making systems |

| 4. Privacy in public / quasi public spaces eroded by FRT | 4. Higher impact cyberattacks | 4. Lack of awareness and agreement on police use of FRT |

| 5. Lack of explainability for technical or commercial reasons | 5. Loss of public confidence in public institutions | 5. Excessive data retention by facial recognition technology systems |

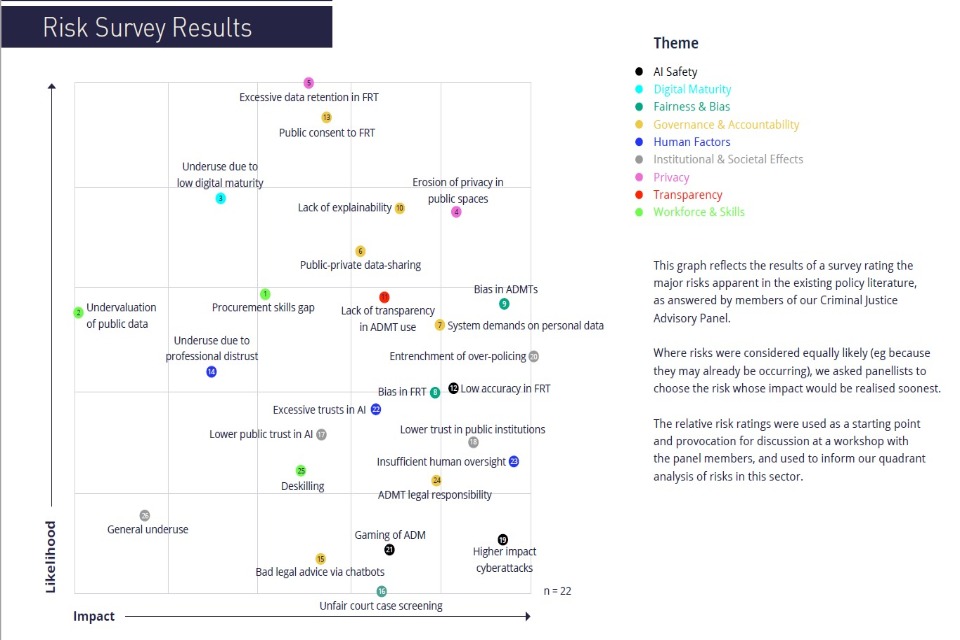

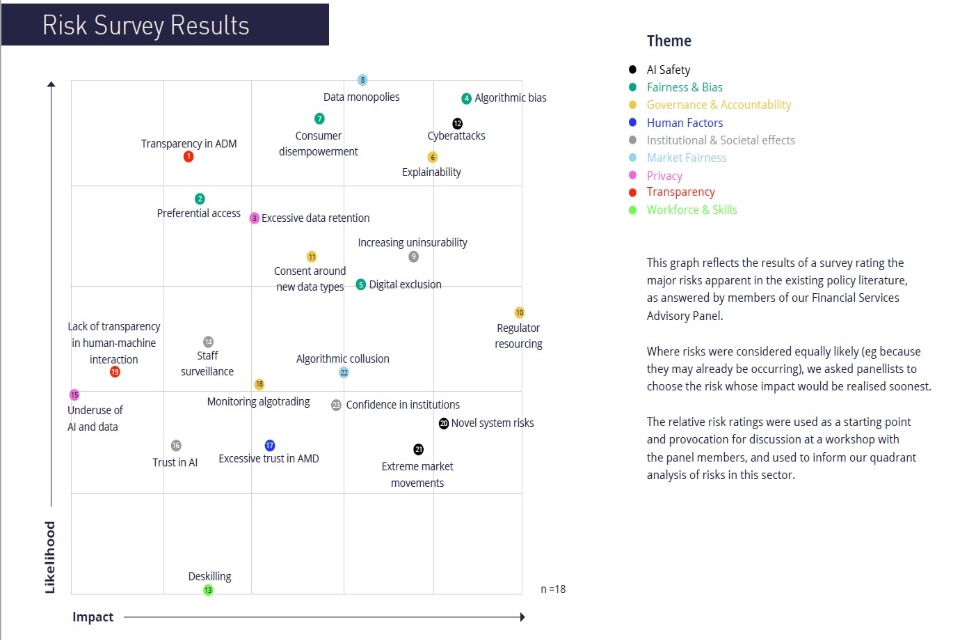

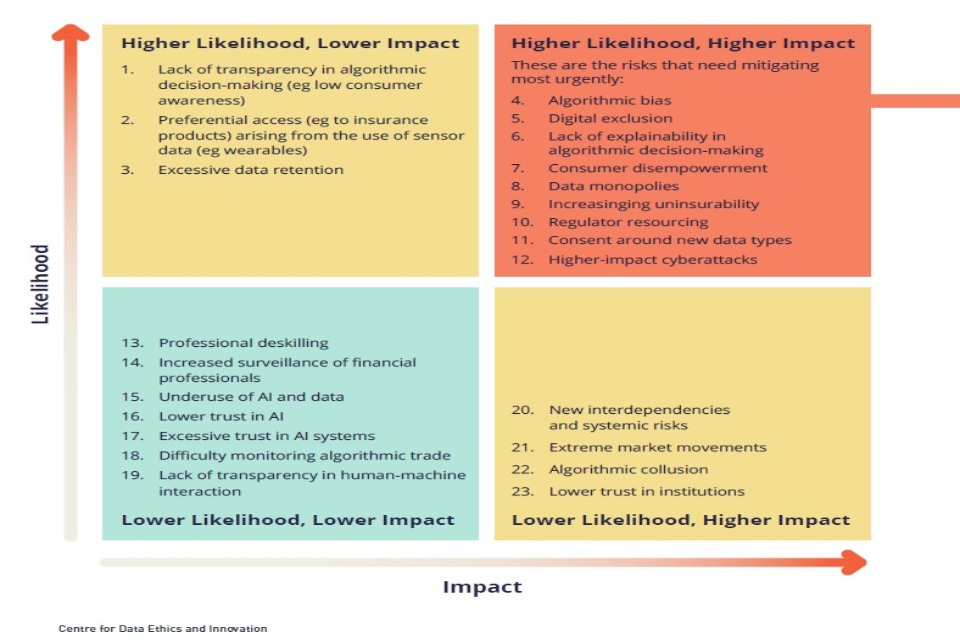

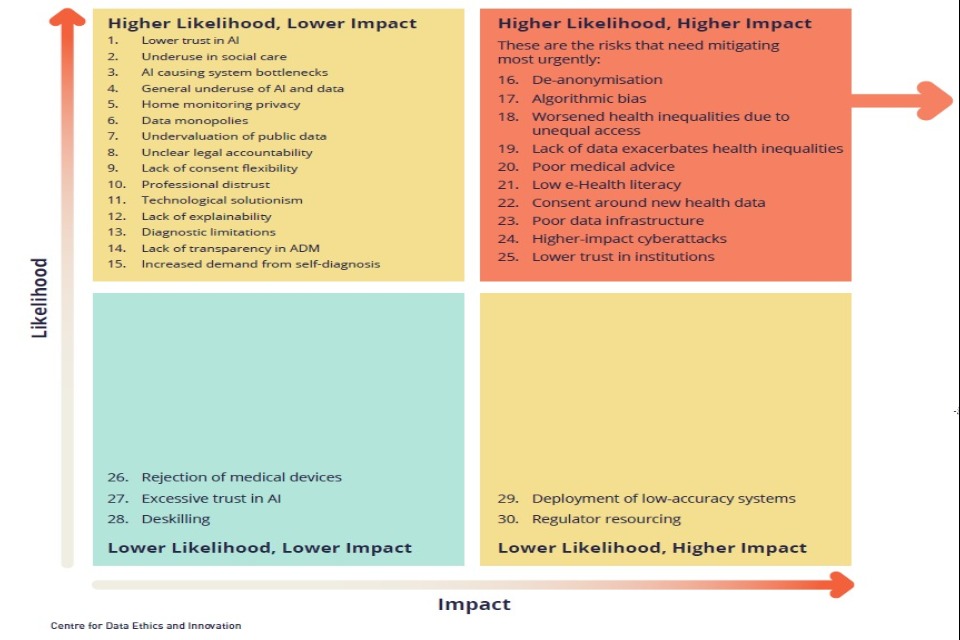

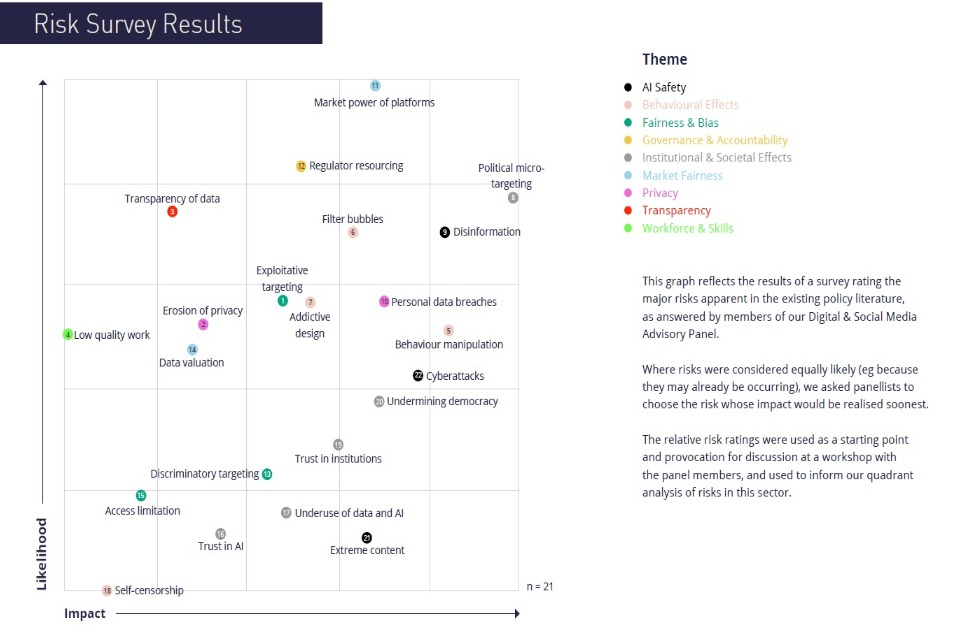

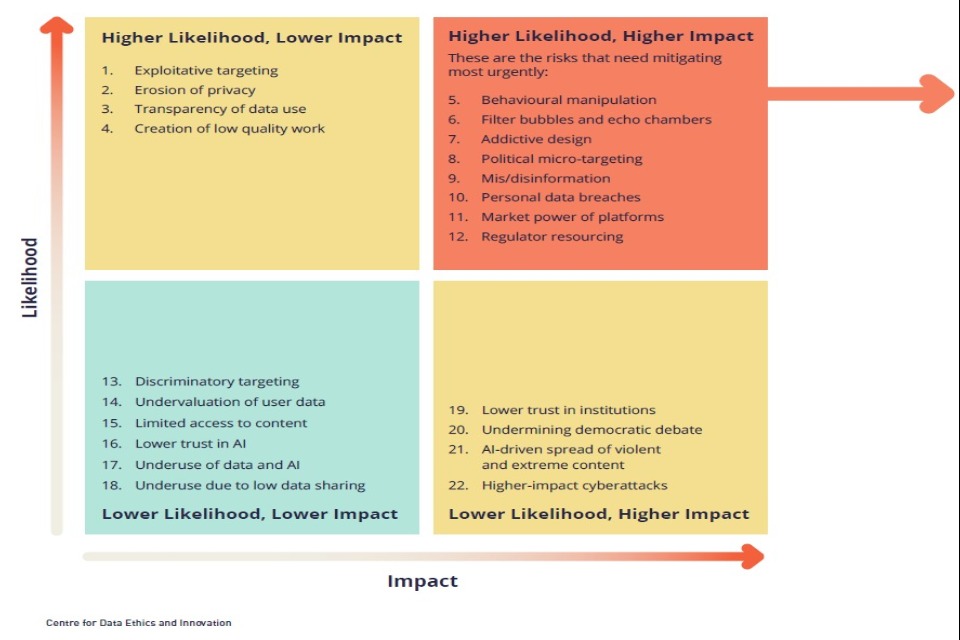

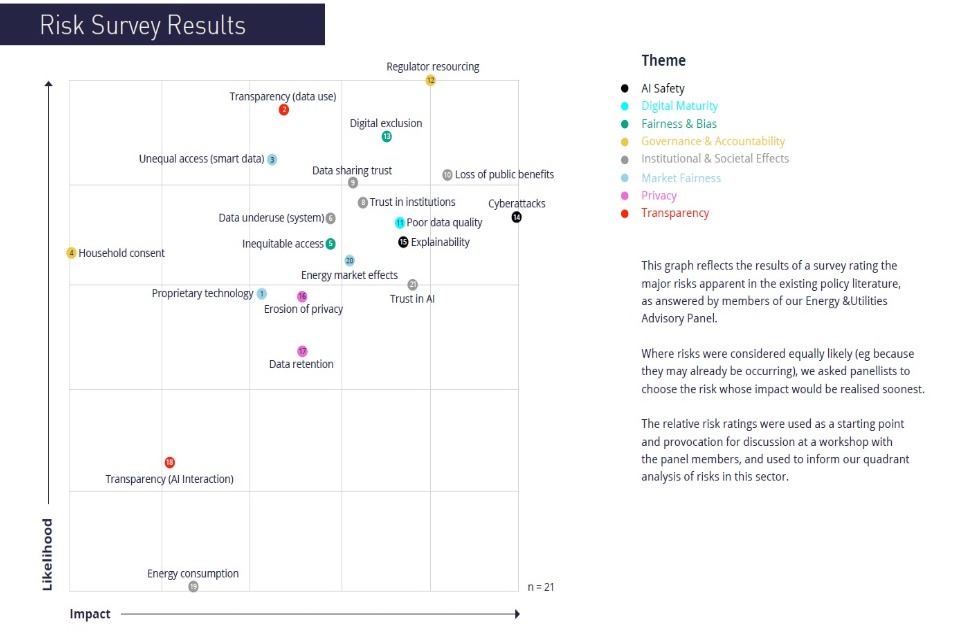

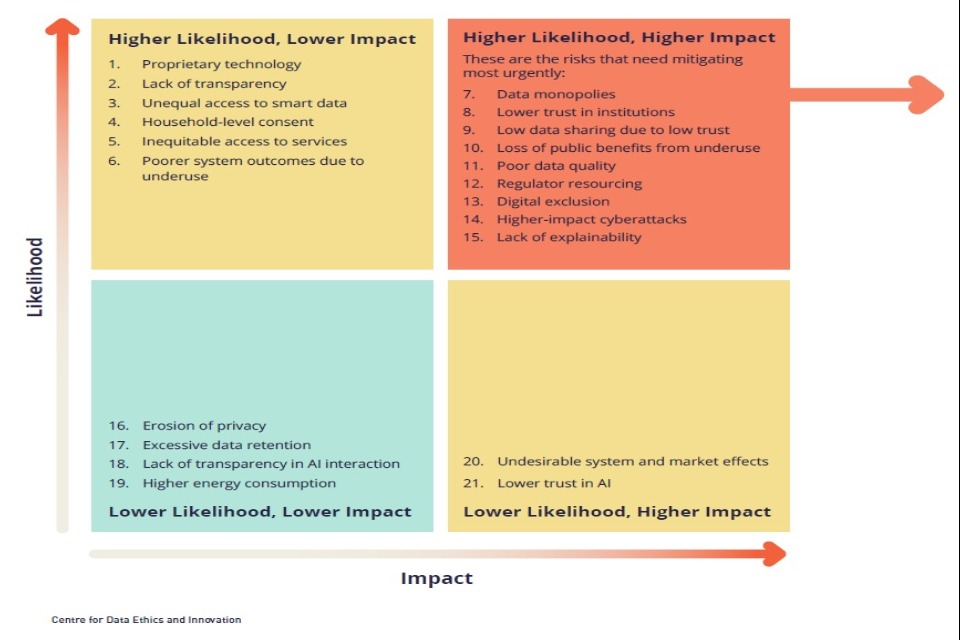

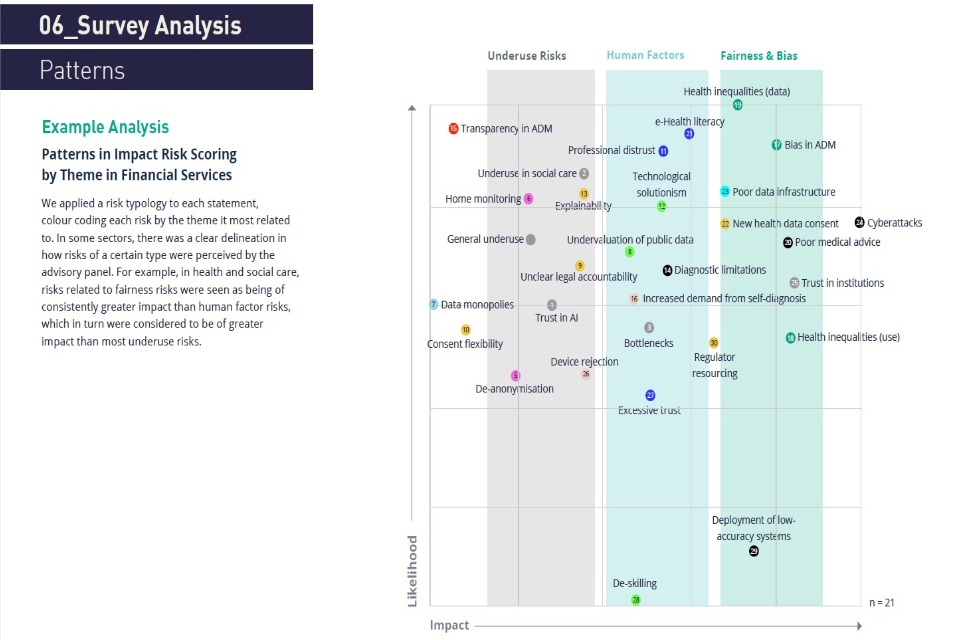

This graph reflects the results of a survey rating the major risks apparent in the existing policy literature, as answered by members of our Criminal Justice Advisory Panel. Where risks were considered equally likely (eg because they may already be occurring), we asked panellists to choose the risk whose impact would be realised soonest. The relative risk ratings were used as a starting point and provocation for discussion at a workshop with the panel members, and used to inform our quadrant analysis of risks in this sector.

Top themes in Criminal Justice Risks: Privacy; Fairness & Bias; Transparency

This quadrant is based on a panel survey rating the major risks in the Criminal Justice sector over the next three years. This diagram is not exhaustive and reflects a review of existing policy literature, workshop discussion, further socialising and additional research and analysis. See our methodology for further detail.

22.3 Criminal Justice: Risk Descriptions

-

Procurement skills gap: Public sector justice bodies are unable to effectively scrutinise the quality and functionality of algorithmic systems they procure, leading to worse justice outcomes.

-

Undervaluation of publicly-owned data: public bodies do not understand the full commercial value of sharing publicly-owned data (e.g. digitised court records) with private sector developers, leading to inefficient use of public assets or taxpayer money.

-

Low Digital Maturity: Limited digitisation of court and police records, or non-interoperable systems hinders the development and use of algorithmic decision making tools, denying institutions and citizens the potential benefits they could provide to justice decisions.

-

Facial recognition technology erodes privacy in public or quasi-public spaces: use of facial recognition for crime prevention erodes individuals’ privacy by making it increasingly difficult to be anonymous in public places.

-

Excessive data retention: facial recognition technology collects and retains data on people beyond immediate operational requirements, resulting in a significant increase in the number of people with biometric data held on police files and infringing on individuals’ privacy.

-

Unclear governance on public/private-data sharing: unclear application of law with regard to data sharing between public and private bodies (e.g. between police and private security firms) infringing on individuals’ privacy.

-

System demands on personal data: availability of analytic technology increases the volume of personal device data demanded from those affected by or involved in crime (e.g. sexual assault victims), infringing on their privacy and access to justice.

-

Bias in facial recognition technology used by the police: low accuracy for particular groups such as women and BAME demographics result in more mistaken police interventions for these groups.

-

Bias in algorithmic decision-making tools: use of biased algorithmic tools (e.g. due to biased training data) entrenches systematic discrimination against certain groups (e.g. reoffending risk scoring).

-

Lack of explainability for technical or commercial reasons: it is difficult for people to understand or challenge decisions made or informed by algorithms because of their ‘black box’ nature or commercial confidentiality regarding their functionality.

-

Lack of transparency in algorithmic decision-making: it is difficult for people to understand or challenge decisions made or informed by algorithms, because they are not aware of their use.

-

Accuracy flaws in facial recognition technology used by the police: FRT systems generate excessive numbers of false positives and negatives, causing mistaken or failed police interventions.

-

Lack of awareness and agreement on police use of facial recognition technology: biometric data of individuals is collected, processed and stored by police without their meaningful input and agreement.

-

Under-use due to professional distrust: benefits of algorithmic tools not realised because justice official (e.g. police or courts) distrust the accuracy or appropriateness of those tools and disregard their input.

-

Poor legal advice via chatbots: AI-driven legal advice services (e.g. chatbots) provide incorrect legal advice to users (for example, about case prospects or routes to legal redress based on the facts of their case).

-

Unfair screening of court cases: use of AI case outcome prediction by lawyers reduces chances of unusual or novel legal cases coming to court and limits access to justice.

-

Trust in AI: The controversial deployment of AI and data use in policing and criminal justice increases the public’s concern about how these technologies are used in other sectors, undermining their application across society.

-

Loss of public confidence in public institutions: Concerns about the accuracy and impartiality of AI and data use in policing and criminal justice undermines public trust in courts, police forces and other institutions

-

Cyberattacks: increased use of data and AI in the justice system increases risk and impact of cyberattacks which may cause changes in system functionality, loss of system availability, or data breaches.

-

Entrenchment of ‘over-policing’: bias in predictive policing algorithms means police resources are directed at communities that have been unfairly targeted in the past and entrenches systematic discrimination against certain groups.

-

Gaming of algorithmic systems in justice (e.g. lowering or raising of offender risk scoring through input data manipulation)

-

Excessive trust in algorithmic decision-supporting tools: police, courts or prisons using algorithmic recommendations (e.g. OGRS scoring system) in lieu of professional judgement resulting in poorer outcomes for victims and the accused.

-

Insufficient oversight by humans in algorithmic decision-making processes leads to poorer outcomes for subjects of those tools (e.g. police being over reliant on the recommendations of their facial recognition systems).

-

Lack of clear legal responsibility for justice decisions made or informed by the use of algorithmic tools, makes it difficult for people to challenge those decisions.

-

Professional deskilling: Over-reliance on algorithmic decision-making tools erodes the development and availability of professional skills and judgement (e.g. for police or judges).

-

Underuse of data and AI: Restrictions on the use of data and AI leads to society missing out on system-wide benefits, such as opportunities for better crime detection, improved access to justice and more efficient allocation of policing resources.

23. Major theme: Facial Recognition Technology

23.1 Overview

Facial recognition technology (FRT) permits the automatic comparison of faces captured in photos or video feeds, typically providing a similarity score between faces seen in a given environment against those in a ‘watch list’. It presents a broad set of use cases for policing, including live deployment via surveillance vehicles, and retrospective use in matching and tracking persons of interest using CCTV surveillance footage.

Despite this transformative potential for policing, the attendant risks of the technology are correspondingly broad, and were consistently identified by our advisory panel as being some of the most concerning across criminal justice. This may reflect the speed with which it is being introduced, fears that it is not suitably governed, and the possibility of it being networked with existing surveillance systems, which would amplify its impact.

23.2 The risks of using FRT include:

-

Its impact on privacy in public spaces, with law enforcement agencies being able to locate people’s whereabouts.

-

The retention of people’s personal data beyond immediate operational requirements, further interfering with their privacy (although the police forces that currently use FRT have policies to immediately discard people’s facial data if they are not matched against a watchlist).

-

The premature deployment of the technology, given FRT systems still struggle to maintain a high level of accuracy in situations like low light or when the subject is wearing accessories.

-

Lower accuracy when matching the faces of some demographic groups, such as Black and Asian people, and women. In a policing context, this magnifies the risk of erroneous police responses against groups already subject to historic discrimination.

The severity of these risks depends in part on who is included on watchlists. Some of our panel expressed concerns that the criteria for deciding who is added to watchlists remains opaque, potentially including people who have been taken into custody but not charged or convicted, and people sought for minor offences. This has led some to warn that FRT deployments could result in a democratic ‘chilling effect’, dissuading people from attending gatherings where the technology is being used.

24. Governance

-

In the summer of 2019, civil liberties group Liberty took South Wales Police (SWP) to court on the basis that their use of live FRT on members of the public had breached the Human Rights Act, the Data Protection Act, and the Equality Act. The High Court ruled that there is a ‘clear and sufficient’ legal framework to ensure the appropriate and non-arbitrary use of live facial recognition, and that SWP used live facial recognition in a way that abided by this legal framework.

-

However, this does not mean that every deployment of live FRT by a police force would necessarily be legal. Under the Data Protection Act, for example, police forces would still need to demonstrate that their specific use of the technology is strictly necessary. The ICO recommends a binding code of practice so that all police forces can better interpret the law. Such a code could, for example, specify best practice approaches to compiling watchlists. Lessons could be learned from the clear guidance available for covert surveillance. Panellists noted the importance of updating guidance to reflect developments in other biometrics, such as gait recognition or affect detection.

-

Guidance would be especially valuable to inform trials of the technology, for example by specifying robust evaluation methods, the criteria that need to be met before FRT is fully rolled out, and how to determine which practices sit within or outside the scope of a trial. Experiments in the use of FRT lack the same rigour as other trials of important innovations, such as medical trials, which are governed by well-established protocols. Panellists cited the Ministry of Justice’s OASys risk assessment tool and earlier police use of randomised control trials as examples of good evaluative practice.

-

There are no widely recognised certification or assurance standards for FRT, or for the underlying algorithms and datasets (for example, with respect to avoiding biased outcomes), in contrast with other tools and technologies used by the police. However, our panellists said some developers seem reluctant to submit their products to independent, third party tests and tools that do exist.

-

The above notwithstanding, panellists indicated that consideration of appropriate policies and practice were far more advanced in policing than private sector use of facial recognition technology.

Case Study: Evaluating data-driven technology in justice

The OASys system was introduced in 2001 to assess offenders’ risks and needs to provide individualised sentence plans. It consists of a number of risk scores for an offender, including calculating the chance of reoffending and the potential severity of any future offence, as well as a structured professional judgement based on these calculated scores, other contextual factors, and engagement with the offender. There have been a number of evaluations of OASys, that have shown its calculated elements offer more predictive value than professional judgements alone. While it does not incorporate machine learning approaches, it was identified by our sector panel as an example of statistical risk modelling successfully supporting a human-led assessment process, and of a well-implemented, iterative evaluation approach to using data-driven insights in justice decisions.

25. Drivers

Novel use of second-generation biometrics in scalable, automated systems. Using biometrics to automate identification in videos represents a fundamentally different form of surveillance than human-led identification. FRT systems are potentially massively scalable, and place considerable power in the hands of their operators. The extent to which FRT systems interfere with privacy depends on whether they are mobile or static, deployed in live or retrospective contexts, and retain or discard personal data. The existence or otherwise of governance mechanisms to guide choices also matters. Panellists emphasised that policymakers and regulators should consider future changes in the capability of FRT systems (such as the prospect of networked, perpetual surveillance systems), and not be limited to examining existing police trials.

Opaque deployment policies. Panellists noted that police forces do not always respond in full to Freedom of Information requests about their FRT policies, meaning the only available information is limited to that voluntarily published by police. Factors in FRT policies that assist in determining the impact of the technology include:

-

Justifications and sign off procedures for the generation of watchlists. Many of the risks presented by FRT scale with the size and geographical breadth of watchlists, along with the policies for the different types of individuals which could be included, such as known criminals and ‘persons of interest’.

-

Criteria for deployment, including how the choice of location is determined.

-

Minimum accuracy thresholds for matching faces both in the context of procurement and for actioning positive identifications during deployment.

-

Procedures for how to act on information generated by FRT systems, and the training that staff are required to undergo to operate the systems.

Historic problems in how police forces have governed data storage. Panellists highlighted that some police forces have not always upheld the highest data governance standards. One example is the unlawful retention of custody images, where it has been alleged that police forces were continuing to store facial images of suspects that had been released without charge, despite a High Court ruling in 2012 that deemed this practice unlawful. Another example is when the Metropolitan Police Service shared facial images with a commercial property developer in Kings Cross, London in 2019. Our panel suggested there may be room for improvement in how police forces govern the use of FRT and associated biometric data.

Proprietary technology and commercial confidentiality limit transparency and the ability to audit systems’ performance and appropriate use. Some suppliers of FRT deployed by police do not permit third-party testing for bias across criteria like ethnicity and gender. There appear to be few robust experiments and evaluations of FRT’s performance ‘in the wild’. Assessing performance in controlled environments may not give reliable indications of a system’s accuracy or capacity to perform with minimal bias.

Dive further into the detail with the CDEI’s latest Snapshot report on Facial Recognition Technology.

26. Major theme: Bias in algorithmic decision-making

26.1 Overview

Algorithmic decision-making or supporting tools (ADMTs) are increasingly used in justice contexts, for example to assess the risk of someone reoffending.

The use of such systems in other jurisdictions, for example recruitment, has already shown they can be biased and produce unfairly discriminatory outcomes. The particular risk of bias in justice ADMTs is the reinforcement and entrenchment of historical discrimination against particular groups, for example through over-policing.

-

In criminal justice, historical data is often weighted against particular groups: anyone with a criminal record; demographic groups that are over-represented in training data; non-white people (due to historic discrimination); and lower income groups (due to the way different forms of crime are detected and processed by the criminal justice system).

-

AI systems that are trained on or which process unrepresentative data can reinforce discriminatory practices. Additionally, even when variables relating directly to protected characteristics such as ethnicity are removed from training data, ADMTs can learn to use proxy variables (e.g. postcodes, income levels, or a combination of various factors) that reintroduce bias from underlying data.

-

While unmonitored use of ADMTs is presently rare in the UK justice system, there are examples of data insights being used in practice with limited roles for the ‘human in the loop’. For example, some offender scoring is used in prisons as a threshold for determining eligibility for rehabilitation opportunities. While staff judgement is used to determine whether an eligible offender will be actually offered the intervention and also to determine eligibility for those with marginal scores, those whose score is substantially below the threshold will not be able to participate. The scores are highly dependent on official criminal history data, which in turn is affected by upstream decisions around the detection and prosecution of reported crime. The use of thresholds is based on meta-analytic findings about the impact of interventions for offenders of different actuarial risk levels.

26.2 Governance

-

It is difficult to agree acceptable thresholds for bias in decision-making. The baseline is the degree of bias seen in human decision-making, which will likely need to be superceded in algorithmic contexts, as people have more tolerance of failure of human judgement.

-

Beyond general measures in the Data Protection Act 2018, there are no context-specific governance requirements regarding how humans should review algorithmic recommendations before they are acted upon. Some offender risk scoring is already used as an access gateway to rehabilitation courses without moderation by a human decision-maker. Panellists noted recent Ministry of Justice work examining different ways of presenting information to support decision-making in justice contexts.

-

New tools and guidance are in development that would help justice institutions to mitigate bias, however these efforts do not appear to be coordinated. Panellists cited several de-biasing projects including: the CDEI/RUSI investigation into the use of algorithms in policing; published work by Westminster Police; and other work by HM Criminal Justice Inspectorates Group. There are, however, no formally applicable best practice guidelines for deploying ADMTs in policing, or industry standards that set out what checks should be undertaken to ensure the ADMTs are fit for purpose, or the training necessary for responsible and effective use.

26.3 State of the Art: Addressing algorithmic bias

The CDEI will shortly be publishing a comprehensive analysis of algorithmic bias across four sectors, including policing, with recommendations on how to address it.

26.4 Drivers

The data underlying machine learning training and deployment can be biased because it reflects a biased reality, or because it is of poor quality or incomplete. Fundamentally, algorithms cannot decide by themselves which biases should be reproduced and which should be ignored. Specific issues discussed included:

-

Data provenance is important, and data will often reflect historical bias present in police records, sentencing, or stop and search practices.

-

Automated decision-making systems such as offender scoring tools are often heavily reliant on police records, and their output therefore depends greatly on how a person was processed and precisely what was recorded. Small differences in police records can result in different outcomes for substantively similar situations.

-

Police forces and criminal courts have more data on particular kinds of crime, such as burglary, knife crime, violent attacks, and domestic abuse, which form the basis of training datasets.

-

A paucity of research on different types of AI and data bias in a UK justice context. Much of the research originates in the US and is not necessarily applicable to the UK context. For example, the geographic segregation of people of different ethnicity is much lower in the UK, and policing systems are different.

-

Some ADMTs (e.g. those that create crime ‘heatmaps’) are trained only on arrest data, meaning that the system is finding and repeating patterns in detainment judgements rather than conviction judgements (although the latter could also be historically unfair).

The impact of bias may vary depending on whether the focus of a system is on predicting criminality or identifying potential victims. Some ADMTs aim to predict future criminality, for example predicting reoffending risk or crime hotspots. The impact of these applications is likely to be different to ADMTs that identify potential victims.

-

Opaque, unstandardised development and deployment processes. Panellists expressed concern that local justice institutions were not coordinating their efforts in tackling bias in AI and data-driven systems. Different biases may arise as police forces use different data sources in a variety of ways. Different accuracy requirements and weight is placed on ADMTs by different parts of the justice system. The panel also highlighted variability in:

-

The level of transparency around the use, evaluation, and functionality of ADMTs, which prevents scrutiny and challenge of potential bias. In some instances this includes a lack of standards or requirements around explainability and interpretability.

-

Data validation processes, for example, ensuring variables are appropriate and not reintroducing bias.

-

Awareness of available de-biasing tools or how to use them.

Human factors affect how recommendations are acted on. The output of ADMTs is only as useful as the ability of justice officials to make sense of the information, and how much they trust it.

-

Some practitioners will not trust an ADMT and ignore recommendations entirely when making judgements. Panellists cited Hampshire Police focus groups on using algorithms, which found police officers were more sceptical and challenging of ADMT outputs than expected, and wanted to understand the information that was processed by the algorithm.

-

Conversely, some justice officials may fear criticism if they do not use or refer to the results of an ADMT. Users of these systems may not be clear that they can reject or challenge an ADMT recommendation. There is a spectrum of emphasis that may be placed on scores provided by an ADMT.

26.5 State of the Art: Explaining Decisions Made with AI

The Information Commissioner’s Office and the Alan Turing Institute have recently published guidance on explaining decisions made with AI.

27. Major theme: New Demands on Data

27.1 Overview

Increasing volumes of data are being requested from people involved in the criminal justice system, including suspects - but also the victims of crime.

The increasing concentration of personal data on digital devices and accounts, and the availability of forensic and analytic technologies that can examine them, means that evidential principles of the justice system are increasingly requiring the processing and use of this data for pursuing ‘reasonable lines of inquiry’ in police investigations, particularly around sexual offences. This has raised questions about whether the privacy of victims is at risk, and whether the justice system can cope amidst an expectation to use and review this data.

-

While refusing consent to provide personal device data does not automatically trigger a case being dropped, in practice it will not proceed if police or prosecutors decide this blocks a reasonable line of inquiry. This means victims of crime may have to decide whether to compromise their privacy in order to access justice.

-

People may have considerable volumes of personal data seized, which may not be immediately relevant to the crime in question. Third party personal data (i.e. of people not involved in cases) can also be captured in these processes, with consequences for those individuals’ privacy.

-

A lack of capacity to process this data can lead to long delays in progressing cases, during which suspects may be released pending investigation, victims are unable to receive redress, and those accused are unable to vindicate themselves. Some panellists described this as a harm of ‘delaying justice’, with tangible effects on people involved with the justice system.

27.2 Governance

-

A central concern is how well long-standing systems of criminal investigation and legal disclosure can adapt to new circumstances. The reality is that most people now own personal devices and digital accounts that store substantial information about their personal lives and interactions with others - data that can be easily extracted.

-

The National Police Chiefs’ Council issued a national digital consent form in April 2019 to standardise police forces’ approaches to requesting data from victims.

-

The Crown Prosecution Service (CPS) issued guidance in April 2019 on its approach to requesting mobile phone data from victims of crime.

-