Android Application Development Guidance

Published 4 September 2014

This guidance describes Android-specific mitigations against loss of sensitive data through secure development. It refers to concepts described in Android’s online security articles and the CESG Android End Users Security Guidance. It is recommended that these documents are read and understood before reading this guidance.

1. Changes between Android 4.3 and 4.4

Android 4.4 has made several minor changes to the security of the Android platform. Those changes that affect secure Android development are listed below:

1.1 Fortify source

Android 4.4 introduces support for level 2 of the FORTIFY_SOURCE feature of the GNU Compiler Collection (GCC). This improves on the FORTIFY_SOURCE level 1 support introduced in Android 4.2. The feature allows the compiler to insert automatic bounds-checking code around functions that could corrupt memory, in order to prevent simple buffer overflows. The compiler-added code performs checks on the following functions:

| memcpy | mempcpy |
| memmove | memset |
| strcpy | stpcpy |
| strncpy | strcat |
| strncat | sprintf |
| vsprintf | snprintf |
| vsnprintf | gets |

While FORTIFY_SOURCE does not eliminate all possibilities of buffer overflows, it enhances the exploit mitigation behaviours available on the platform, and should be used in situations where native code is used in an Android application.

1.2 Changes to supported cryptographic algorithms

The integrated AndroidKeyStore provider now includes support for Elliptic Curve signing keys. Elliptic Curve Cryptography is a form of public key cryptography that, using shorter keys, can provide a level of security equivalent to or higher than other strong algorithms such as the widely deployed RSA and Diffie-Hellman.
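As an illustration of Elliptic Curve signing, the following is a minimal sketch using only standard Java cryptography calls. It is written to run on a plain JVM with the default provider; on a device the key pair would normally be created through the AndroidKeyStore provider, and that provider-specific setup is deliberately omitted. The class name `EcSigningSketch` and the choice of the `secp256r1` curve are assumptions made for this example.

```java
import java.security.GeneralSecurityException;
import java.security.KeyPair;
import java.security.KeyPairGenerator;
import java.security.PrivateKey;
import java.security.PublicKey;
import java.security.Signature;
import java.security.spec.ECGenParameterSpec;

/** Hypothetical helper showing ECDSA signing and verification. */
final class EcSigningSketch {

    /** Generates a key pair on the secp256r1 (P-256) curve; the curve is an assumption. */
    static KeyPair generateKeyPair() throws GeneralSecurityException {
        KeyPairGenerator generator = KeyPairGenerator.getInstance("EC");
        generator.initialize(new ECGenParameterSpec("secp256r1"));
        return generator.generateKeyPair();
    }

    /** Signs the message with ECDSA over a SHA-256 digest. */
    static byte[] sign(PrivateKey key, byte[] message) throws GeneralSecurityException {
        Signature signer = Signature.getInstance("SHA256withECDSA");
        signer.initSign(key);
        signer.update(message);
        return signer.sign();
    }

    /** Returns true only if the signature matches the message and public key. */
    static boolean verify(PublicKey key, byte[] message, byte[] signature)
            throws GeneralSecurityException {
        Signature verifier = Signature.getInstance("SHA256withECDSA");
        verifier.initVerify(key);
        verifier.update(message);
        return verifier.verify(signature);
    }
}
```

A 256-bit Elliptic Curve key of this kind is generally considered comparable in strength to a much longer RSA key, which is the "shorter keys" benefit referred to above.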

1.3 VPN behaviour changes

On multi-user devices, VPNs are now applied per user. This allows a user to route all network traffic through a VPN without affecting another user on the device. However, device administrators should be aware that in Android 4.4 setting a VPN for a particular user will not protect any other user of that device, so other users’ data may be transmitted over an insecure channel.

1.4 SSL CA certificate changes

Many corporate IT environments allow administrators to intercept and monitor HTTPS sessions for security and monitoring purposes. This is achieved by adding the certificate of an additional Certificate Authority (CA) to managed devices, allowing the CA to effectively Man in the Middle (MITM) HTTPS communications. As of Android 4.4, the user is notified when communicating over such a channel (i.e. any channel where the certificate is served by a third-party CA) that the integrity of their session may be compromised.

Additionally, Android 4.4 detects and prevents the use of fraudulent Google certificates used in secure SSL/TLS communications through Certificate Pinning.

2. Secure Android application development

2.1 Data store hardening

Android by default provides each application on the device access to a private directory to store its files. This protection is enforced using Linux user and group permissions.

Applications are then able to access other areas of the phone by requesting permission from the user at install time. The user can choose to permit that application access to areas such as the device’s calendar and phone book, as well as features such as making phone calls or reading the current location. Once permitted, the application may use these features as much as it likes without further interaction from the user.

Despite the basic protection offered by this Android sandboxing, it remains the responsibility of the application to store its data securely and not undermine the protection in place. Examples of behaviour that undermines this protection include:

- Writing data to publicly readable locations such as the external storage.

- Creating Intents that can be called by any other application on the same device.

- Creating files with world readable/writable permissions.
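To illustrate the last point, the sketch below creates a file that only its owner can read or write, and checks whether an existing file grants access to anyone else. It uses the standard `java.nio.file` POSIX permission calls so that it runs on a plain JVM on a POSIX file system; this is an assumption for the example, and on Android the more usual route is to write into the application’s private directory (for example with `Context.MODE_PRIVATE`). The class and method names are hypothetical.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.attribute.FileAttribute;
import java.nio.file.attribute.PosixFilePermission;
import java.nio.file.attribute.PosixFilePermissions;
import java.util.EnumSet;
import java.util.Set;

/** Hypothetical helper for owner-only files on a POSIX file system. */
final class PrivateFileSketch {

    /** Creates the file with rw------- permissions, then writes the data. */
    static Path writePrivate(Path directory, String name, byte[] data) throws IOException {
        Set<PosixFilePermission> ownerOnly = PosixFilePermissions.fromString("rw-------");
        FileAttribute<Set<PosixFilePermission>> attribute =
                PosixFilePermissions.asFileAttribute(ownerOnly);
        // Permissions are applied at creation, so the file is never briefly world readable.
        Path file = Files.createFile(directory.resolve(name), attribute);
        Files.write(file, data);
        return file;
    }

    /** Returns true if any group or world permission bit is set on the file. */
    static boolean isAccessibleToOthers(Path file) throws IOException {
        Set<PosixFilePermission> ownerBits = EnumSet.of(
                PosixFilePermission.OWNER_READ,
                PosixFilePermission.OWNER_WRITE,
                PosixFilePermission.OWNER_EXECUTE);
        return !ownerBits.containsAll(Files.getPosixFilePermissions(file));
    }
}
```

A check such as `isAccessibleToOthers` could be run over an application’s data directory during testing to catch files created with overly broad permissions.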

It should also be considered that a process running on the device with sufficient permissions (such as the root user) will always be able to read and write any data in any application’s sandbox. It is therefore strongly recommended that applications holding sensitive data build upon the sandbox with more secure functionality. Ultimately it is not possible to guarantee the security of data on a device. It should be assumed that if a user continues to use a device after it has been compromised, the malware will be able to access the data.

The following section outlines best security practices when designing Android applications to handle OFFICIAL data. A general guide to secure Android programming can be found on Android’s web site.

2.2 Network protection

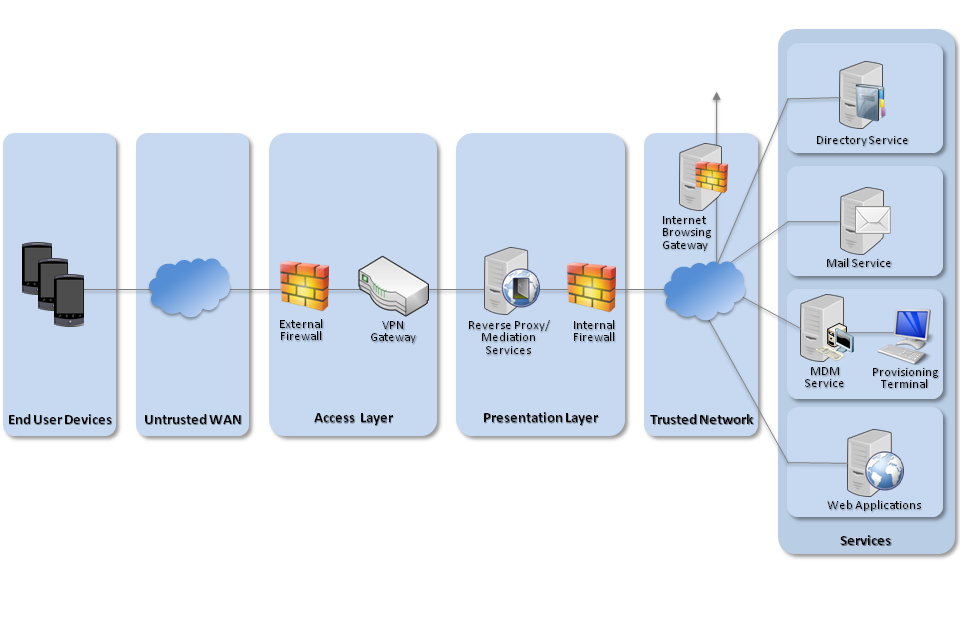

The diagram below, taken from the CESG Platform Security Guidance for Android, illustrates the recommended network configuration for Android devices which handle OFFICIAL information. In summary, a VPN is used to bring device traffic back to the enterprise, and access to internal services is brokered through a reverse proxy server, which protects the internal network from attack.

Recommended network architecture for Android deployments

The following list is a set of recommendations that Android applications should conform to in order to transmit sensitive data securely:

- The credentials used by specific applications to authenticate with the reverse proxy should never be stored unencrypted on the device. Instead they should be derived from material external to the device. This process should be repeated at the start of each application session.

- Any connection made by the application should be performed using Transport Layer Security (TLS) with certificate pinning to a known endpoint within the organisation’s network.

- Any connection made using TLS should not use the device’s list of trusted CA certificates.
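The pinning recommendations above could be approached along the following lines. This is a minimal sketch, not a complete implementation: it accepts a server only if the SHA-256 digest of the leaf certificate’s public key is in a set of known pins, and it ignores the device’s trusted CA list entirely. It does not check expiry, hostname or the rest of the chain, which a production implementation would normally also do. The class name `PinningTrustManager`, the Base64 pin format and the helper names are assumptions made for this example.

```java
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.security.cert.CertificateException;
import java.security.cert.X509Certificate;
import java.util.Base64;
import java.util.HashSet;
import java.util.Set;
import javax.net.ssl.X509TrustManager;

/** Hypothetical trust manager that trusts only explicitly pinned public keys. */
final class PinningTrustManager implements X509TrustManager {
    private final Set<String> pins;

    /** Pins are Base64-encoded SHA-256 digests of the encoded public key (assumed format). */
    PinningTrustManager(Set<String> base64Sha256Pins) {
        this.pins = new HashSet<>(base64Sha256Pins);
    }

    /** Computes the pin for an encoded public key (SubjectPublicKeyInfo bytes). */
    static String pinOf(byte[] encodedPublicKey) {
        try {
            byte[] digest = MessageDigest.getInstance("SHA-256").digest(encodedPublicKey);
            return Base64.getEncoder().encodeToString(digest);
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("SHA-256 unavailable", e);
        }
    }

    boolean isPinned(byte[] encodedPublicKey) {
        return pins.contains(pinOf(encodedPublicKey));
    }

    @Override
    public void checkServerTrusted(X509Certificate[] chain, String authType)
            throws CertificateException {
        if (chain == null || chain.length == 0) {
            throw new CertificateException("empty certificate chain");
        }
        // The leaf certificate is first in the chain; only its key is compared here.
        if (!isPinned(chain[0].getPublicKey().getEncoded())) {
            throw new CertificateException("server public key is not pinned");
        }
    }

    @Override
    public void checkClientTrusted(X509Certificate[] chain, String authType)
            throws CertificateException {
        throw new CertificateException("client authentication is not handled by this sketch");
    }

    @Override
    public X509Certificate[] getAcceptedIssuers() {
        return new X509Certificate[0]; // no CA from the device's list is accepted
    }
}
```

A trust manager like this would typically be installed by passing it to `SSLContext.init` and using the resulting socket factory for the application’s connections.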

2.3 Secure application development recommendations

The following list is a set of recommendations that an Android application should conform to in order to store, handle or process protectively-marked data. Many of these recommendations are general good-practice behaviours for applications on Android, and a number of documented code snippets and examples are available on Android’s developer portal.

Secure data storage

- Where possible, applications should not store OFFICIAL data on the device, and should instead retrieve all information from a secure remote location that requires user authentication.

- If it is required that OFFICIAL data is stored on the device then the data should be encrypted using a key that cannot be derived or easily guessed by an attacker. Some ways of achieving this are:

  - using online authentication that returns the key material

  - requesting the key material from the user at the start of each session

  - deleting data from the device following multiple authentication failures

- Applications should not use the Android backup service (which stores user data to remote cloud storage and doesn’t guarantee security).

- The device’s external storage (e.g. the SD card), should not be used by the application to store OFFICIAL data
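One of the options listed above, requesting key material from the user at the start of each session, could be sketched as follows. The example derives an AES key from a passphrase with PBKDF2 and encrypts with AES-GCM, so that no key is stored on the device. The class name, the iteration count, the salt and IV sizes and the output layout are all assumptions chosen for illustration, and the availability of `PBKDF2WithHmacSHA256` depends on the platform version.

```java
import java.security.GeneralSecurityException;
import java.security.SecureRandom;
import java.util.Arrays;
import javax.crypto.Cipher;
import javax.crypto.SecretKeyFactory;
import javax.crypto.spec.GCMParameterSpec;
import javax.crypto.spec.PBEKeySpec;
import javax.crypto.spec.SecretKeySpec;

/** Hypothetical helper: encrypts data under a key derived from a user passphrase. */
final class SessionKeyEncryption {
    private static final int SALT_BYTES = 16;
    private static final int IV_BYTES = 12;
    private static final int TAG_BITS = 128;
    private static final int ITERATIONS = 100_000; // assumed work factor
    private static final SecureRandom RANDOM = new SecureRandom();

    private static SecretKeySpec deriveKey(char[] passphrase, byte[] salt)
            throws GeneralSecurityException {
        PBEKeySpec spec = new PBEKeySpec(passphrase, salt, ITERATIONS, 256);
        try {
            byte[] keyBytes = SecretKeyFactory.getInstance("PBKDF2WithHmacSHA256")
                    .generateSecret(spec).getEncoded();
            SecretKeySpec key = new SecretKeySpec(keyBytes, "AES");
            Arrays.fill(keyBytes, (byte) 0); // wipe the temporary copy of the key
            return key;
        } finally {
            spec.clearPassword(); // wipe the spec's internal copy of the passphrase
        }
    }

    /** Returns salt || iv || ciphertext-with-tag (layout is an assumption). */
    static byte[] encrypt(char[] passphrase, byte[] plaintext) throws GeneralSecurityException {
        byte[] salt = new byte[SALT_BYTES];
        byte[] iv = new byte[IV_BYTES];
        RANDOM.nextBytes(salt);
        RANDOM.nextBytes(iv);
        Cipher cipher = Cipher.getInstance("AES/GCM/NoPadding");
        cipher.init(Cipher.ENCRYPT_MODE, deriveKey(passphrase, salt),
                new GCMParameterSpec(TAG_BITS, iv));
        byte[] sealed = cipher.doFinal(plaintext);
        byte[] out = new byte[SALT_BYTES + IV_BYTES + sealed.length];
        System.arraycopy(salt, 0, out, 0, SALT_BYTES);
        System.arraycopy(iv, 0, out, SALT_BYTES, IV_BYTES);
        System.arraycopy(sealed, 0, out, SALT_BYTES + IV_BYTES, sealed.length);
        return out;
    }

    /** Throws a GeneralSecurityException if the passphrase is wrong or the data was altered. */
    static byte[] decrypt(char[] passphrase, byte[] blob) throws GeneralSecurityException {
        if (blob.length < SALT_BYTES + IV_BYTES + TAG_BITS / 8) {
            throw new GeneralSecurityException("encrypted data is too short");
        }
        byte[] salt = Arrays.copyOfRange(blob, 0, SALT_BYTES);
        byte[] iv = Arrays.copyOfRange(blob, SALT_BYTES, SALT_BYTES + IV_BYTES);
        Cipher cipher = Cipher.getInstance("AES/GCM/NoPadding");
        cipher.init(Cipher.DECRYPT_MODE, deriveKey(passphrase, salt),
                new GCMParameterSpec(TAG_BITS, iv));
        return cipher.doFinal(blob, SALT_BYTES + IV_BYTES, blob.length - SALT_BYTES - IV_BYTES);
    }
}
```

The caller is expected to overwrite its own passphrase array once the session key is no longer needed. A failed decryption could also feed a counter that triggers deletion of the stored data after repeated authentication failures.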

Server side controls

- Applications which store credentials must have robust server-side control procedures in place to revoke those credentials should the device or data be compromised.

Other

- As the standard Android clipboard is shared between all applications on the device, it is recommended that it is not used when accessing OFFICIAL data:

  - To prevent a user from copying OFFICIAL data to other applications on the device, the global ClipboardManager should not be used in the application.

  - A private clipboard can be implemented if it is required by the application.

- The application should disable both manual and automatic screenshots within activities that display OFFICIAL data by setting the secure flag (FLAG_SECURE) on the window within the application.

- Applications that use a shared UID will share the same sandbox. This means that if one application were compromised, all data in any application with a shared UID would also be compromised. Developers should share functionality between applications using interprocess communications (IPC), restricted by permissions.

- Intents created for IPC between trusted applications should use signature permissions to restrict access by other applications on the device
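The private clipboard mentioned in the list above could take a form like the following. This is a minimal sketch under the assumption that copy and paste only needs to work inside one application process; the class `PrivateClipboard` is hypothetical and holds the text in memory without ever passing it to the system-wide clipboard.

```java
import java.util.Arrays;

/** Hypothetical in-process clipboard; contents never reach the shared system clipboard. */
final class PrivateClipboard {
    private char[] contents;

    /** Stores a private copy of the text, wiping whatever was held before. */
    synchronized void copy(char[] text) {
        clear();
        contents = Arrays.copyOf(text, text.length);
    }

    /** Returns a copy of the held text, or null if the clipboard is empty. */
    synchronized char[] paste() {
        return contents == null ? null : Arrays.copyOf(contents, contents.length);
    }

    /** Overwrites the held text before releasing it. */
    synchronized void clear() {
        if (contents != null) {
            Arrays.fill(contents, '\0');
            contents = null;
        }
    }
}
```

Calling `clear()` when the application is suspended or the session ends would limit how long copied OFFICIAL data stays in memory.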

Secure data transmission

- All off-device communications handling sensitive data must take place over a mutually-authenticated cryptographically protected connection:

  - TLS connections should use certificate pinning to a known endpoint within the organisation’s internal network.

  - Certificates used by the application should be stored on the device using the Android Keychain.

  - As it is not possible for applications to detect whether or not the correct VPN connection has been established, the application should use the mutual authentication to determine whether the VPN connection is established.

  - The device’s default list of trusted certificates should not be used. Instead the application should use explicitly defined certificates.

Note that at present there is no appropriate API on Android to check the status of the VPN. To securely check the status of the VPN, the internal service with which the application is communicating must be authenticated. The recommended way of performing this authentication is TLS with a pinned certificate. If mutual authentication is required to the internal service, mutual TLS with pinned certificates should be used.

Application security

- The application must not be compiled with the debug flag enabled.

- The application should only use officially supported APIs.

- The application can be compiled using obfuscation tools to make analysis harder.

Security requirements

The following list includes some other behaviours which can increase the overall security of an application.

- Any data that is deemed necessary to store on the device should be encrypted either with keys that are not stored on the device, or that are stored in the Android Keychain.

- Applications should sanitise in-memory buffers of OFFICIAL data after use.

- Applications should minimise the amount of data stored on the device; retrieve data from the server when needed over a secure connection, and erase it when it is no longer required.

- Applications that require authentication on application launch should also request this authentication credential when the user switches back to the application some time after it was suspended.
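The re-authentication behaviour in the final point above could be tracked with a small state holder such as the one below. This is a sketch only: the class `SessionGuard` and its method names are hypothetical, and timestamps are passed in as milliseconds from a clock chosen by the caller (a monotonic clock is assumed, so that changing the device time does not extend a session).

```java
/** Hypothetical tracker that decides when the user must authenticate again. */
final class SessionGuard {
    private final long maxIdleMillis;
    private long lastActiveMillis;
    private boolean authenticated;

    SessionGuard(long maxIdleMillis) {
        this.maxIdleMillis = maxIdleMillis;
    }

    /** Call after the user has successfully authenticated. */
    void onAuthenticated(long nowMillis) {
        authenticated = true;
        lastActiveMillis = nowMillis;
    }

    /** Call on user interaction; activity never revives an expired session. */
    void onUserActivity(long nowMillis) {
        if (!requiresAuthentication(nowMillis)) {
            lastActiveMillis = nowMillis;
        }
    }

    /** Call when the application is launched or brought back to the foreground. */
    boolean requiresAuthentication(long nowMillis) {
        if (authenticated && nowMillis - lastActiveMillis > maxIdleMillis) {
            authenticated = false; // idle for too long: the session has expired
        }
        return !authenticated;
    }
}
```

When `requiresAuthentication` returns true, the application would show its authentication screen before displaying any OFFICIAL data.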

3. Questions for application developers

The most thorough way to assess an application before deploying it would be to conduct a full source-code review of the product to ensure it meets the security recommendations and contains no malicious or unwanted functionality. As it is recognised that for the majority of third-party applications this will be infeasible or impossible, this section instead provides some example questions which an organisation may consider asking application developers. Their responses should help provide confidence that the application is well-written and is likely to properly protect information.

3.1 Secure data storage

- What is the flow of data through the application: source(s), storage, processing and transmission?

  - Answers should cover all forms of input including data entered by the user, network requests, and inter-process communication.

- How is the OFFICIAL data stored on the device?

  - Data should be stored in a location that cannot be accessed by other applications on the device.

  - Data should not be accessible to other applications on the device through inter-process communication provided by the application.

  - Data should be encrypted when stored on the device.

  - Encryption of sensitive data should be performed using a key that is not stored on the device. Either the key is derived from user input, or returned from a server following authentication.

- What device or user credentials are being stored? Are these stored in the Android Keychain? What key is used?

  - If certificates are stored on the device then they should be stored using the Android Keychain.

- Are cloud services used by the app? What data is stored there? How is it protected in transit?

  - Assessors should make sure that sensitive data is not transmitted to unassured cloud services. If non-sensitive data is transmitted, or data is transmitted to accredited data centres, then questions should be asked about data in transit protection.

3.2 Secure data transmission

- Is transmitted and received data protected by cryptographic means, using well-defined protocols? If not, why not?

  - Mutually authenticated TLS, MIKEY-SAKKE, or other mutually authenticated secure transport should be used to protect information as it travels between the device and other resources.

  - This should be answered specifically for each service the application communicates with to send and receive sensitive information.

3.3 IPC mechanisms

- Are any URL schemes or exported intents declared or handled? What actions can these invoke?

  - Can other applications cause the application to perform a malicious action on their behalf, or request access to sensitive data?

  - This includes dynamically generated broadcast receivers, which should treat any input as untrusted.

3.4 Binary protection

- Is the application compiled with code obfuscation?

  - Code obfuscation should be used to help hinder reverse engineering of applications.

- Is the application compiled to be debuggable?

  - The debuggable flag in the manifest file should be set to false.

3.5 Other

- Does the application implement root detection, and if so how?

  - Root detection can never be made completely reliable, but the more advanced the detection, the more effort is required to bypass it. Mechanisms for detecting rooted devices range from checking for the installation of known rooting applications such as Cydia Substrate and SuperSU through to technologies that attempt to detect whether a third party process has hooked into the application’s functions.

- Is the application allowed to run in an Android emulator when built for production?

  - Applications that can run in the emulator are easier to reverse engineer. The sandboxed directory of the application can be inspected and manipulated, allowing for greater understanding of the security state of the application. Additionally, the emulator may contain additional testing functionality or have more verbose logging.

- How is session timeout managed?

  - The application should include a timeout following inactivity by the user.

- How is copy and paste managed?

  - Whichever solution is used to manage copy and paste, how data could leak should be understood and accepted by the appropriate risk owner.

- Is potentially-sensitive data displayed within the screenshot when the application is backgrounded?

  - Sensitive data should be hidden when the application is backgrounded, to ensure that it is not leaked in screenshots taken of apps for task switching.

- What configuration options are available to end users, and what is the impact to the solution’s security if the user were to change those settings?

  - Assessors should be aware of configuration options which may cause the security of the solution to be weakened or disabled.
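The simplest end of the root detection range described above, checking for files left by known rooting tools, could look like the sketch below. The paths listed are commonly cited locations and are an assumption for illustration, not an authoritative or exhaustive list; as noted above, a check of this kind is easy to bypass. The class name `RootIndicators` is hypothetical.

```java
import java.io.File;
import java.util.Arrays;
import java.util.List;

/** Hypothetical basic root indicator check based on file presence. */
final class RootIndicators {

    /** Illustrative locations only; real deployments would maintain their own list. */
    static final List<String> DEFAULT_PATHS = Arrays.asList(
            "/system/bin/su",
            "/system/xbin/su",
            "/sbin/su",
            "/system/app/Superuser.apk");

    /** Returns true if any of the given paths exists on the file system. */
    static boolean anyExists(List<String> paths) {
        for (String path : paths) {
            if (new File(path).exists()) {
                return true;
            }
        }
        return false;
    }
}
```

An application might call `RootIndicators.anyExists(RootIndicators.DEFAULT_PATHS)` at start-up and treat a positive result as one signal among several, rather than as proof either way.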

3.6 Server side controls

- If the application connects to remote services to access OFFICIAL data, how can that access be revoked? How long does that revocation take?

  - Assessors should be aware of how long the window of opportunity is for theft.

4. Secure Android application deployment

4.1 Third-party app store applications

Third party applications refer to Android applications that are not developed by the organisation. Android supports a number of methods to install new applications. The following section divides these sources into two categories:

Untrusted third party applications

Untrusted applications are those that have been produced by developers that the organisation does not have a relationship with. This includes applications hosted on both Google Play and third party application stores.

The organisation should assume that in either of these instances, the third party application may have unwanted functionality, either due to weaknesses in their design or deliberately malicious code. Untrusted third party applications therefore should never be used to access OFFICIAL data, and should be assessed before inclusion in the organisation’s private enterprise application catalogue.

Network architecture components such as the reverse proxy can be used to help restrict third-party applications from accessing corporate infrastructure. These features should be regarded as techniques that help mitigate the potential threat posed by the installation of third-party applications; they cannot guarantee complete protection. The ideal method of mitigation is to not allow any third-party applications to be installed on the device, though in reality this decision must be taken on a per-application basis. Where possible, developers of the application should be consulted in order to better understand its limitations and restrictions, which will aid its evaluation. The Questions for application developers section should be treated as the minimum set of questions to ask the developers about the application.

Trusted third party applications

Organisations are encouraged to learn as much as possible about the security posture of an application, so that the risks of deploying it can be understood and managed wherever possible. Organisations should ideally establish a relationship with the developers of these products and work with them to understand how their product does or does not meet the security posture expected of it. Third party applications should be assessed by the organisation to decide whether the risk of having their code executing on devices is outweighed by the benefits that the application brings. If third party applications are to be permitted on to devices with OFFICIAL data, then the following steps should be taken:

- Ensure that the applications holding OFFICIAL data do not permit third party application access to the data, for instance making sure that the third party application is not included as one that the user can choose to open sensitive documents with.

- Ensure that sensitive data remains secure if the third party application were compromised. For instance, the data should not be stored in a world readable location on the device where the third party application could access it.

Where third party applications have been specifically designed to manage sensitive data, organisations may also wish to consider commissioning independent assurance on the application due to the scale of access to OFFICIAL material it will have. This is particularly true if the application implements its own protection technologies (such as a VPN or data-at-rest encryption), and does not use the native protections provided by Android. Many available enterprise applications feature server side components; when present, these should be considered as part of the wider risk assessment.

Private enterprise application catalogues can be created and managed using MDM solutions, allowing organisations to build a set of accepted third party and in-house applications that can either be installed on to every organisation device, or be made available for employees to browse and choose to install manually.

Security considerations

When deploying third-party applications, the primary concern for an organisation is determining whether these applications could affect the security of the enterprise network, or access data held in a sensitive data store.

Malware and application level vulnerabilities are of particular concern when developing secure applications for Android. Secure applications must therefore pay particular attention when protecting data both in storage on a device and in transit if third party applications are permitted on the same device.

Organisations should also consider the security features of the devices that will host their application. A number of manufacturers offer custom security features to protect corporate data from other applications. If the application will only be used on these devices, then permitting third party applications on the same device may be deemed acceptable.

Security requirements

The best practice when utilising third party applications is as follows:

- Server side components such as a reverse proxy should be used to restrict enterprise network access to trusted applications.

- The developers should be contacted in order to better understand the security posture of the application. Questions that can be asked of developers can be found in the Questions for application developers section.

- Data should be protected from third party applications by restricting their access to sensitive data and functionality.

4.2 In-house applications

In-house applications are those applications that are designed and commissioned by the organisation to fulfil a particular business requirement. The organisation can stipulate the functional and security requirements of the application and enforce these contractually if the development work is subcontracted. For the purposes of this document, these applications are expected to access, store and process OFFICIAL data.

The intention when securing these applications is to minimise the opportunity for data leakage from these applications and to harden them against physical and network-level attacks.

Security considerations

Regardless of whether the application is developed by an internal development team, or under contract by an external developer, organisations should ensure that supplied binaries match the version which they were expecting to receive. Applications should then be installed onto managed devices through an MDM server or in-house enterprise application catalogue front-end to gain the benefits of an application being enterprise-managed.
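One way to check that a supplied binary matches the expected version is to compare its cryptographic digest with a value obtained from the developer over a separate trusted channel. The sketch below assumes a SHA-256 digest published as a hexadecimal string; the class `BinaryDigestCheck` and this exchange process are assumptions for illustration, and such a check complements rather than replaces verification of the package signature.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

/** Hypothetical helper comparing a file's SHA-256 digest with an expected value. */
final class BinaryDigestCheck {

    /** Computes the SHA-256 digest of the whole file. */
    static byte[] sha256(Path file) throws IOException {
        try {
            return MessageDigest.getInstance("SHA-256").digest(Files.readAllBytes(file));
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("SHA-256 unavailable", e);
        }
    }

    /** Decodes a hexadecimal string such as "0a1b" into bytes. */
    static byte[] fromHex(String hex) {
        if (hex.length() % 2 != 0) {
            throw new IllegalArgumentException("hex string has odd length");
        }
        byte[] out = new byte[hex.length() / 2];
        for (int i = 0; i < out.length; i++) {
            out[i] = (byte) Integer.parseInt(hex.substring(2 * i, 2 * i + 2), 16);
        }
        return out;
    }

    /** Returns true if the file's digest equals the expected hexadecimal digest. */
    static boolean matches(Path file, String expectedHex) throws IOException {
        return MessageDigest.isEqual(sha256(file), fromHex(expectedHex));
    }
}
```

The expected digest should come from a source the organisation trusts independently of the channel used to deliver the binary; otherwise an attacker who can replace the binary could replace the digest as well.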

Security requirements

Both in-house and third party applications should be deployed directly to devices through an in-house enterprise application catalogue. This means they can be remotely managed, and kept separate from third party applications installed by the user.

4.3 Application wrappers

Security considerations

A variety of “application wrapping” technologies exist on the market today. Whilst these technologies ostensibly come in a variety of forms which provide different end-user benefits, on most platforms, they essentially work in one of three ways:

- They provide a remote view of an enterprise service, for example a Remote Desktop view of a set of internal applications that are running at a remote location, or an HTML-based web application. Multiple applications may appear to be contained within a single application container, or may live separately in multiple containers to simulate the appearance of multiple native applications. Usually only temporary cached data and/or a credential is persistent on the device itself.

- They are added to an application binary after compilation and dynamically modify the behaviour of the running application, for example to run the application within another sandbox and intercept and modify platform API calls, in an attempt to enforce data protection.

- The source code of the surrogate application is modified to incorporate a Software Development Kit (SDK) provided by the technology vendor. This SDK modifies the behaviour of standard API calls to instead call the SDK’s API calls. The developer of the surrogate application will normally need to be involved in the wrapping process.

Security requirements

Category 1 technologies are essentially normal platform applications which store and process minimal information, instead deferring processing and storage to a central location. The development requirements for these applications are identical to other native platform applications. Developers should follow the guidelines given above.

Category 2 and 3 wrapping technologies are frequently used to provide enterprise management to applications via the MDM server that the device is managed by. SDKs are integrated into these MDM solutions and can be used to configure settings in the application or to modify the behaviour of the application. For example, the application could be modified to always encrypt all data or not use certain API calls.

On Android, both category 2 and 3 wrapping technologies require the surrogate developer’s co-operation to wrap the application into a signed package for deployment onto an Android device. As such, normally only custom-developed in-house applications, and sometimes trusted third party applications (with co-operation) can use these technologies. As the robustness of these wrapping technologies cannot be asserted in the general case, these technologies should not be used with an untrusted application; they should only be used to modify the behaviour of trusted applications, or for ease of management of the wrapped applications.

Preferably, in-house applications should be developed specifically against the previously described security recommendations wherever possible. App-wrapping technologies should only be used as a less favourable alternative method of meeting the given security recommendations where natively meeting them is not possible.

Ultimately it is more challenging to gain confidence in an application whose behaviour has been modified by a Category 2 technology; it is difficult to assert that dynamic application wrapping can cover all the possible ways an application may attempt to store, access and modify data, and in the general case it is difficult to assert how any given wrapped application will behave. As such, CESG cannot give any assurances about Category 2 technologies or wrapped applications in general, and hence cannot recommend their use as a security barrier at this time.

However, Category 3 technologies are essentially an SDK or library which developers use as they would use any other library or SDK. In the same way that CESG do not assure any standalone cryptographic library, CESG will not provide assurance in SDKs which wrap applications. The developer using the SDK should be confident in the functionality of that SDK as they would be with any other library.