Good practice in the design and presentation of customer survey evidence in merger cases

Updated 23 May 2018

Introduction

Status of this document

During our merger casework, the Competition and Markets Authority (CMA) sometimes receives submissions of evidence derived from surveys of customers that have been commissioned by the merging organisations (‘Parties’) or their external advisors for the specific purpose of helping to understand aspects of a merger. We believe that the use of statistically robust customer survey research can be very important in reaching informed decisions, and we very much welcome this type of evidence.

This document sets out our general views on good practice in the design, conduct and reporting of such surveys. While the Parties and their advisors are the primary intended audiences for this document, it may also be of interest to market research agencies involved in designing and conducting surveys for merger cases.

Where appropriate, the CMA may commission its own survey research and, if so, the survey design, analysis and interpretation of results are informed by in-house statisticians who work closely with inquiry teams and the market research agencies commissioned to conduct the research on our behalf. The principles described in this document apply equally to these surveys.

This document focuses on surveys for merger cases, but many of the principles are applicable to other types of case which the CMA conducts, such as market studies and market investigations, Competition Act enforcement cases, super-complaints or consumer protection enforcement cases. However, the uses of survey evidence and the nature of the cases themselves can vary, and assessments of fitness for purpose need to take this into account.

Generally speaking, the aim of a statistical sample survey is to interview a small proportion of people from a large population of interest (for example, a few hundred customers from the many thousands who use a cinema chain) in such a way that robust inferences can be made from their responses about the population as a whole. Research to inform our investigations may be, alternatively or in addition, ‘qualitative’ in nature, for example, in the form of focus groups or in-depth interviews. Good practice for qualitative research methods is outside the scope of this guidance.

For brevity, ‘Good practice in the design and presentation of customer survey evidence in merger cases’ is referred to as ‘this document’. It replaces the document published in 2011 by the then Office of Fair Trading (OFT) and Competition Commission (CC)[footnote 1]. For the avoidance of doubt, this document does not constitute guidance under section 106(1) of the Enterprise Act 2002.

This document is about customer survey research for merger cases. We use the term ‘customer’ here in a loose and non-technical sense. Usually the CMA will be interested in surveying the person (or an entity, such as a business) who buys a product or service directly from (one of) the merging Parties. However, this is not always the case. For example, sometimes the CMA is interested in surveying the end-customers of products or services even if they do not purchase the product or service directly from the Parties.

This document provides principles and examples for illustration, not hard and fast rules or bright-line tests. We recognise that circumstances vary and that knowledge of the relevant scenario, along with judgment and reason, will be required in applying customer survey research methods to a particular case. Where time and/or resource constraints mean that the research possible under particular circumstances cannot comply fully with all of the principles set out here, we will still consider its relevance to the case.

Submissions that follow the principles set out in this document are more likely to be given evidential weight in the CMA’s merger investigations.

Customer survey research conducted by Parties in the normal course of their business, for example to inform strategy prior to a merger being considered, may also have evidential value for the purpose of a merger inquiry. The interpretation and use of such evidence, and the weight to be given to it, will depend on the nature and purpose of the survey and the way in which it relates to the merger case. For example, there are some circumstances in which the CMA would take more account of a survey if it was clear that the Party had acted on the results.

This document offers illustrations and examples drawn from recent experience in merger cases that are intended to assist in the design of good customer research. These illustrations are included by way of example and are not exhaustive, nor will they be applicable in all cases.

We would encourage Parties and their external advisors to use what they consider to be the most appropriate research techniques to generate robust evidence. The omission of a particular research technique from this document does not imply that it is invalid, or that the results would not be given evidential weight in appropriate circumstances.

This document is a technical resource to assist Parties in submitting customer survey research evidence that may be given weight in an inquiry. It is without prejudice to the provisions of the Enterprise Act 2002, as amended, and without prejudice to the advice and information in the Quick guide to UK merger assessment and the Merger Assessment Guidelines originally published jointly by the OFT/CC.

Uses of surveys in merger cases and procedural issues

Survey evidence has been submitted to the OFT/CC/CMA in numerous merger cases, by the Parties themselves and by third parties. Most surveys conducted primarily for the merger case itself have been submitted by the Parties as part of phase 1 of a merger case. However, a small number of such surveys have been conducted at phase 2.

In contrast, all the surveys that the CMA has commissioned from market research agencies have been run as part of phase 2 merger cases. A small number of surveys have been run by the CMA itself during phase 1 of a merger case, all of which (to date) have been conducted online using customer lists with email addresses supplied by the Parties.

The CMA considers a large number of factors when making a decision about whether to conduct a survey in a merger case. For any individual case these will include: the theories of harm to be tested, the range of evidence available (or planned) and anticipated evidential gaps, the nature and number of customers in the market, the practical options for contacting and surveying a sample of these customers, and the feasibility of obtaining survey results of sufficient quality to be fit for purpose within the time available. The CMA also has to be mindful of the cost of the research, to assess whether it would be a good use of public money.

The CMA is obliged, under the Merger Guidelines, to give Parties 24 hours to comment on a draft questionnaire for any survey that it intends to commission as part of a phase 2 merger inquiry. In practice, we always try to allow longer and also provide a description of the overall survey methodology (sample design, mode of interview, etc). We endeavour to provide Parties with a similar opportunity to comment if we conduct survey research as part of a phase 1 investigation.

When designing a survey, it is important to start with a clear view of the objectives of the research. From the Parties’ point of view, it is desirable to give the CMA as much time as possible to consider details of the research planned in order to address any concerns the CMA has about it. However, discussions about the survey should not be seen as the CMA giving its approval for the survey.

Looking at merger cases over time, there are some commonly occurring ways in which survey evidence has been relevant to the inquiry and has had an impact on decision-making. These translate into the following topic areas for merger survey questionnaires:

- Demography – to understand the demographic characteristics (for example, age, sex, education) of customers in the market. In some cases this can be useful in assessing the extent to which the Parties’ customers are differentiated, for example, whether there is evidence that their products or services appeal to different types of people. Note that responses to demographic questions can also be used to evaluate whether the survey respondents are representative of the population of interest (particularly when benchmark population distributions are available to compare them against), and for weighting purposes.

- Choice attributes – to understand how customers make choices in the market of interest, including the relative importance of such factors as price, quality, range, service, location and brand. Other aspects of the purchase decision that may be of interest include the extent to which it was planned or made on impulse, search activity, brand awareness and level of knowledge about the market and competitor options.

- Geography – to understand the geographical aspects of the merger, for example, how far customers travel (measured in distance and/or time) to obtain the product or service and the location of firms (particularly in cases with local area theories of harm). This is often done by asking customers how they have travelled to the Parties’ premises, which mode(s) of transport they have used, how long it has taken, and where they have travelled from (including whether this is from, for example, home or work).

- Cross-channel substitution – to understand the extent to which customers switch between or make use of different purchase channels (usually in-store and online). This can be particularly important where, for example, the merger under inquiry is between bricks-and-mortar retailers and it is necessary to assess the nature and strength of potential or actual online constraints on their outlets. In these cases, we are interested in the behaviour of customers of bricks-and-mortar stores, for example whether they would switch to online channels.

- Closeness of competition – to estimate the closeness of competition between the Parties themselves, and between the Parties and competitor third parties. This is often the most influential part of the survey[footnote 2], using hypothetical diversion questions to elicit ‘next best’ options (alternatives/substitutes) from respondents[footnote 3].

The above does not provide an exhaustive list; there are many other potential topic areas that might be relevant to particular markets and, accordingly, form part of the questionnaire for a particular case.

Timing should be a key consideration for Parties and their external advisors when considering whether to conduct a survey. Survey evidence that is submitted too late or is of insufficient quality to be taken into consideration is a waste of time and resources. The general principle on timing is that the earlier the CMA receives survey evidence, the more time we will have to consider it and provide our assessment of the survey’s quality and relevance to the case, along with our analysis and interpretation of results, to the Parties for comment.

For phase 1 mergers, the CMA ideally should receive survey evidence before the phase 1 clock starts. In practice, the CMA will not have sufficient time to fully consider new survey evidence received after the submission of a Party’s response to the issues letter. This would be too late for the CMA to decide whether a survey is of sufficient quality to be given evidential weight, particularly if we have not had sight of the survey materials up to that point.

Parties wishing to conduct a survey for a merger case are strongly encouraged to contact the CMA in the early stages of the survey process to discuss their proposed design, including a draft questionnaire (if available) and wider aspects of the survey methodology.

Any discussion with the CMA would be on a ‘without prejudice’ basis and would not preclude developing or changing views on the evidential weight of a piece of customer survey research on either side. The discussion should not be seen as an alternative to rigorous testing and piloting by the Parties of the planned survey approach and research instruments. As part of its assessment of the quality of the survey, the CMA may ask to observe or listen to certain aspects of the operation of the survey, including the interviewer briefing ahead of fieldwork and/or live interviews once fieldwork is underway. The CMA expects the Parties to accommodate these requests. If, for whatever reason, the Parties are unable to accommodate the CMA’s requests, the CMA may be unable to assure itself on the quality and reliability (and hence the evidential value) of the survey.

Where Parties do not discuss their survey design with the CMA in advance, and/or do not give the CMA an opportunity to monitor and assess the quality of fieldwork while it is underway, it is not necessarily the case that we will consider the survey findings to have no or only limited evidential weight. The weight to be given to such evidence will be assessed against the same principles and standards for conducting surveys described in this document. However, it has been our experience that survey designs not discussed with us in advance have tended to be of insufficient quality, and in the absence of first-hand experience of how fieldwork was conducted, it has been hard to conclude that the findings have genuine weight. In these circumstances, then, the onus will be on Parties to provide highly compelling information about the survey methodology and the steps taken to assure its quality.

We expect good surveys to be neutral and not biased towards one outcome or another. Given the nature of the phase 1 legal test, there is a particular risk to Parties that survey results beneficial to their case may be given little or no weight if they are perceived to have been led by a biased survey design.

We aim to be open and transparent in our work. We will consider requests from Parties in merger cases for the disclosure of underlying information and analyses derived from the CMA’s own customer survey research. However, these requests may be subject to important legal and practical constraints on our ability to disclose such information. These include provisions of the Enterprise Act 2002, as amended, the Data Protection Act 1998 (soon to be replaced by the General Data Protection Regulation), and the statutory timetable for each merger inquiry.

Working with market research agencies

The CMA commissions market research agencies to conduct most of its survey work. The choice of agency, and of the team within the agency, is a key decision affecting the survey quality achieved.

CMA research that provides evidence for merger cases is conducted to high quality standards, and in many cases we place requirements on agencies that go over and above standard practice. In particular, for the surveys we commission, we take a keen interest in how the survey is conducted after the survey design and questionnaire have been agreed. For example, if interviews are to be conducted face-to-face or by telephone, we would ask to see the interviewer briefing materials and make changes to them as appropriate. We like to participate in the interviewer briefing where possible, and to check the quality of fieldwork ourselves (in addition to the agency’s own monitoring), requesting changes where we think it appropriate. This might involve changing a question, the interviewer instructions and/or interviewing personnel, or asking for an interviewer re-briefing, additional supervisor checks or adjustments to interviewer schedules. Our involvement is aimed at driving up the quality of the survey fieldwork, as well as understanding how the survey has worked in the field, which we see as an important part of interpreting the results.

We encourage Parties and their external advisors to use the same hands-on approach to monitoring the merger-related customer surveys they commission. Survey data can only be as good as the fieldwork that generates it, and interviewer instructions and a survey questionnaire are often interpreted and implemented in unexpected ways by interviewers. Respondents’ reactions, interpretations and answers can also differ from expectation, even when the survey has been extensively piloted.

If the Parties are able to demonstrate to the CMA that a survey has been conducted well, and show an understanding of how the survey has worked in practice, this will foster confidence in the survey results, and assist the CMA in assessing the evidential weight that may be attached to the findings.

We would expect market research agencies working for Parties and their external advisors to observe the MRS Code of Conduct, and to have appropriate qualifications to demonstrate their commitment to quality, for example, an ISO accreditation or similar. It is not considered good practice to sub-contract fieldwork without stringent and transparent processes to ensure high fieldwork standards.

Design

Sound statistical research requires that the survey design adheres to certain principles, in particular that:

- the population of interest is clearly defined

- the sample source provides a representative coverage of the population

- the sample is selected using random methods and the sample is of sufficient size to provide robust estimates

- the interview method is appropriate for researching the audience and subject matter

- the survey is notified to potential respondents in a neutral way that does not bias results

- the questionnaire is well-designed, and is properly tested and piloted in advance

- the fieldwork team is appropriately briefed, and the quality of interviewing is monitored

- the design takes account of likely response rates, the need to minimise the potential for non-response bias and, where possible, incorporates metrics to measure and adjust for such bias [footnote 4]

Target population

Customer survey research involves defining a population of interest and then interviewing a sample from that population. This is done so that measures relating to the population may be estimated, and the sampling uncertainty in the estimates quantified.
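To make the idea of quantifying sampling uncertainty concrete, the sketch below estimates a population proportion from a sample and attaches an approximate 95% confidence interval. It is a minimal illustration that assumes a simple random sample, the normal approximation and no finite population correction; the function name and the figures (120 of 400 respondents) are hypothetical, and real survey estimates would also need to reflect any stratification and weighting.

```python
import math


def proportion_with_ci(successes: int, n: int, z: float = 1.96) -> tuple[float, float, float]:
    """Return (estimate, lower, upper) for a population proportion.

    Assumes a simple random sample and uses the normal approximation;
    z = 1.96 gives an approximate 95% interval.
    """
    if n <= 0 or not 0 <= successes <= n:
        raise ValueError("need n > 0 and 0 <= successes <= n")
    p = successes / n
    se = math.sqrt(p * (1 - p) / n)  # standard error of a sample proportion
    # Clip the interval to the valid range [0, 1].
    return p, max(0.0, p - z * se), min(1.0, p + z * se)


# Hypothetical example: 120 of 400 respondents give a particular answer.
p, lower, upper = proportion_with_ci(120, 400)
print(f"{p:.0%} (95% CI {lower:.1%} to {upper:.1%})")  # 30% (95% CI 25.5% to 34.5%)
```

On the same assumptions, 100 interviews and an estimate of 50% give a margin of error of roughly plus or minus 10 percentage points (1.96 × 0.05 ≈ 0.098).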

In merger cases we are often interested in sub-populations, for example customers from specific geographic areas, or customers from each of the Parties separately, as well as an overall population of interest. Where such sub-populations of interest exist, these should be clearly set out in advance to inform the sample design.

Sample source and survey mode

Having defined the population of interest, the next task is to identify the best way of finding people who are in this population, ie the best source of sample to provide a representative coverage of the population. A variety of sample sources may be considered, including:

- intercepting customers close to the time of purchase, for example as they leave a store (often referred to as an ‘exit survey’)

- customer lists provided by the Parties

- external lists from reputable sources

- customers free-found using a random sampling technique [footnote 5] (for example random digit dialling or face-to-face omnibus interviews)

The following sections consider each of the 4 types of sample source listed above in more detail. Some problematic alternatives will then be discussed.

Intercepting customers at stores

One possible survey objective may be to investigate whether competition takes place locally and, if so, the extent of such competition, for example where the firms that constrain each of the Parties’ outlets are located. In these cases, ideally, customers should be surveyed at all of the Parties’ outlets in areas of concern in order to determine the competitive constraints on them.

For this type of survey, a common approach is face-to-face interviewing with customers as they exit the store. This allows the interviewer to focus the interview on purchases just made, and to measure potential diversion accordingly.

Alternatively, customers may be recruited to the survey at the store (or bus stop, cinema, etc) by collecting postal, telephone and/or email contact details for a follow-up interview. This has the advantage of minimising the time demanded of customers ‘there and then’. However, significant over-recruitment will usually be required to ensure that the required number of follow-up interviews is achieved.
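The scale of over-recruitment can be planned with simple arithmetic: divide the target number of follow-up interviews by the expected yield per recruit. The sketch below assumes that yield is the product of independent stage rates; the function name and the rates used are hypothetical planning assumptions, which in practice would be informed by piloting or previous fieldwork.

```python
import math


def recruits_needed(target_interviews: int, *stage_rates: float) -> int:
    """Customers to recruit at the point of purchase so that the target number
    of follow-up interviews is expected, given the assumed success rate of each
    later stage (for example usable contact details, then a completed interview)."""
    if not stage_rates or any(not 0 < r <= 1 for r in stage_rates):
        raise ValueError("each stage rate must be in (0, 1]")
    overall = math.prod(stage_rates)  # expected interviews per recruit
    return math.ceil(target_interviews / overall)


# Hypothetical planning assumptions: 90% of recruits give usable contact
# details and half of those complete the follow-up interview.
print(recruits_needed(100, 0.9, 0.5))  # 223
```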

Whichever approach is used, time and resource constraints often make such surveys difficult and so it is important to consider very carefully which outlets to survey. Where there are many outlets for which the CMA considers there to be a competition risk, then sometimes it is possible to eliminate a sufficient number by taking account of existing information, to leave a practicable number of outlets for surveying purposes[footnote 6].

If, following the application of this initial cut, the number of outlets is still too high to survey them all, then a number of strategies might be adopted to decide which outlets to sample. The context of each case will be different, but there is often a need for this sample to form the basis of inferences about Parties’ outlets that have not been surveyed as well as providing direct survey estimates for those that have[footnote 7]. Approaches for the choice of outlets to survey may include:

- random sample – randomly selecting either outlets or overlap areas

- stratified random sample – categorising outlets or overlap areas by characteristics that may have an impact on competition (for example competitor fascia count, whether rural/urban/London) and randomly selecting outlets or areas within each of these categories [for example Ladbrokes/Coral]

- competition gradient – ordering outlets or overlap areas by a competition metric (for example distance between the Parties’ outlets) and selecting outlets or overlap areas in a defined way (for example at fixed intervals) from the ranking [for example Celesio/Sainsbury’s]

- discriminative sample – selecting a set of outlets to survey that maximise the variety and combinations of different characteristics (for example size of outlet, type of outlet, distance to the nearest merger Party outlet, type of area, number and type of third-party competitors, etc) that might be relevant to an assessment of the nature and strength of the competitive constraint that the merger Party has on that outlet [for example Poundland/99p Stores]
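Two of the selection approaches above, the stratified random sample and the competition gradient, can be sketched as follows. This is illustrative only: the outlet identifiers, distances and area types are invented, and using distance to the nearest other-Party outlet as the competition metric is just one possible choice. A fixed random seed is used so that the draw can be reproduced and audited, which supports applying the method objectively.

```python
import random
from collections import defaultdict


def stratified_sample(outlets, stratum_of, per_stratum, seed=2018):
    """Randomly select up to `per_stratum` outlets within each stratum."""
    rng = random.Random(seed)  # fixed seed so the draw can be reproduced
    strata = defaultdict(list)
    for outlet in outlets:
        strata[stratum_of(outlet)].append(outlet)
    chosen = []
    for key in sorted(strata):
        members = strata[key]
        chosen.extend(rng.sample(members, min(per_stratum, len(members))))
    return chosen


def gradient_sample(outlets, metric, step, start=0):
    """Rank outlets by a competition metric and take every `step`-th one."""
    ranked = sorted(outlets, key=metric)
    return ranked[start::step]


# Hypothetical outlets: (id, km to nearest other-Party outlet, area type)
outlets = [
    ("S01", 0.3, "urban"), ("S02", 1.8, "rural"), ("S03", 0.9, "urban"),
    ("S04", 4.2, "rural"), ("S05", 0.5, "london"), ("S06", 2.6, "urban"),
    ("S07", 1.1, "london"), ("S08", 3.3, "rural"), ("S09", 0.7, "urban"),
]
by_area = stratified_sample(outlets, stratum_of=lambda o: o[2], per_stratum=1)
by_gradient = gradient_sample(outlets, metric=lambda o: o[1], step=3)
print([o[0] for o in by_gradient])  # ['S01', 'S03', 'S06']
```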

Please note that the same issue of selecting a subset of outlets or areas to survey may arise while using other types of sample source, but we mention it specifically in relation to intercepting customers at stores because having a large number of outlets would make this particular type of survey prohibitively expensive.

It is important that the method employed is applied objectively, avoiding cherry-picking of outlets unless this is a clearly stated part of the interpretation of the results (for example choosing a subset of outlets most likely to create competition issues with the aim of showing that if the Parties are not close competitors in these outlets then they are unlikely to be anywhere else).

In exit surveys, it is important that interviewers approach potential respondents at random (rather than target those who they perceive to be more likely to take part). Sometimes, additional rules may need to be set to ensure that customers selected for interview at the outlet are representative of the population of customers at that outlet. For example, the survey design may require interview quotas if there are known characteristics of the customer population that should be reflected in the sample, or interviews may need to be carried out on specified days and at specified times of day to reflect the known footfall of customers using an outlet.
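Where interviews are spread over days and times to reflect known footfall, the split of a fixed number of interviews can be computed as below. This is a minimal sketch that assumes footfall counts per time slot are available (for example from the Parties’ own store data); the slot names and counts are hypothetical, and largest-remainder rounding is simply one convenient way of making the quotas add up exactly.

```python
import math


def allocate_by_footfall(total: int, footfall: dict[str, int]) -> dict[str, int]:
    """Split `total` interviews across time slots in proportion to footfall.

    Largest-remainder rounding makes the quotas sum exactly to `total`.
    """
    grand = sum(footfall.values())
    raw = {slot: total * count / grand for slot, count in footfall.items()}
    quotas = {slot: math.floor(share) for slot, share in raw.items()}
    shortfall = total - sum(quotas.values())
    # Hand the leftover interviews to the slots with the largest fractional parts.
    by_remainder = sorted(raw, key=lambda s: raw[s] - quotas[s], reverse=True)
    for slot in by_remainder[:shortfall]:
        quotas[slot] += 1
    return quotas


# Hypothetical weekly footfall counts for one outlet.
footfall = {"weekday am": 1200, "weekday pm": 2100, "saturday": 2700, "sunday": 1000}
print(allocate_by_footfall(100, footfall))
# {'weekday am': 17, 'weekday pm': 30, 'saturday': 39, 'sunday': 14}
```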

Customer lists provided by the Parties

Where they exist, customer lists supplied by the Parties may be used. Care must be taken to ensure that the list(s) match the target population(s) as closely as possible. Any under-coverage (particularly the exclusion of specific sub-groups of customers) or over-coverage should be recorded, and consideration given to the assumptions needed to draw inferences from the survey population about the target population [for example Cineworld/City Screen, where assumptions about under-coverage were tested with a small validation survey].

In many merger cases, all eligible customers in the list are included in the sample. However, if the number of customers in the list is very large, then a sample can be drawn from it at random. There may be other information about customers (for example demographic or categorisation characteristics) that may be expected to have an influence on their behaviours or attitudes with respect to the surveyed market. If so, the sample should have broadly the same composition by those characteristics as does the population. This may require stratification of the sample list before drawing the sample and/or interview quotas or post-stratification weighting to ensure that the achieved interviews are representative.
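Post-stratification weighting can be illustrated with a single characteristic. In this sketch each respondent’s weight is the population share of their group divided by that group’s share of the achieved interviews, so the weighted sample matches the population profile. The function name, groups and shares are hypothetical; real applications often weight on several characteristics at once (for example by raking), which this sketch does not attempt.

```python
from collections import Counter


def post_stratification_weights(groups, population_shares):
    """Cell weights: population share of a respondent's group divided by the
    group's share of achieved interviews. The weights average to 1."""
    n = len(groups)
    counts = Counter(groups)
    empty = set(population_shares) - set(counts)
    if empty:
        raise ValueError(f"no respondents in cells: {sorted(empty)}")
    return [population_shares[g] * n / counts[g] for g in groups]


# Hypothetical: the Parties' list shows 45% frequent and 55% occasional
# customers, but frequent customers were more likely to respond.
groups = ["frequent"] * 60 + ["occasional"] * 40
weights = post_stratification_weights(groups, {"frequent": 0.45, "occasional": 0.55})
print(round(weights[0], 3), round(weights[-1], 3))  # 0.75 1.375
```

After weighting, the 60 frequent customers account for 60 × 0.75 = 45 of the 100 weighted interviews, matching the assumed population share.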

Where there are specific sub-populations of interest, the sample list should be stratified by those sub-populations to ensure that a sufficient number of respondents for each is obtained. Over-sampling of a sub-population may be necessary to achieve this.
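Over-sampling a small sub-population gives its members a higher chance of selection, so estimates for the whole population need design weights (stratum population size divided by stratum sample size) to undo the imbalance. The sketch below guarantees every stratum a minimum number of sampled records and shares the rest in proportion to stratum size. The allocation rule, function name and list sizes are illustrative assumptions rather than a prescribed method, and the minimum of 300 records is a hypothetical figure that would in practice be set with the expected response rate in mind.

```python
def oversample_allocation(pop_sizes: dict[str, int], total: int, minimum: int):
    """Allocate `total` sample records across strata (all sizes assumed > 0).

    Each stratum first receives `minimum` records (or all of them, if fewer);
    the remainder is shared in proportion to stratum size, rounded down, so
    the allocation may fall slightly short of `total`.
    Returns (allocation, design_weights), where design weight = N_h / n_h.
    """
    alloc = {h: min(size, minimum) for h, size in pop_sizes.items()}
    remainder = total - sum(alloc.values())
    if remainder < 0:
        raise ValueError("total is too small to give every stratum its minimum")
    grand = sum(pop_sizes.values())
    for h, size in pop_sizes.items():
        alloc[h] = min(size, alloc[h] + remainder * size // grand)
    weights = {h: pop_sizes[h] / alloc[h] for h in pop_sizes}
    return alloc, weights


# Hypothetical list: 18,000 Party A customers and 2,000 Party B customers.
alloc, weights = oversample_allocation({"Party A": 18000, "Party B": 2000}, total=1000, minimum=300)
print(alloc)  # {'Party A': 660, 'Party B': 340}
```

Under purely proportional allocation Party B would receive only 100 of the 1,000 records; the design weights (about 27.3 for Party A and 5.9 for Party B) restore each Party’s true share when the groups are combined.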

While not exhaustive, the list below describes the methodological options typically considered in merger cases when interviewing from customer lists. Judgement is required as to which is the best approach, as there are advantages and disadvantages to each.

- Telephone survey: The response rate from a telephone survey can be higher than from an online survey, reducing the risks of non-response bias. This is particularly true when surveying businesses where there is the added advantage of being able to ensure that an appropriate person within the business is responding. Interaction with an interviewer also provides more opportunity to ensure that questions and response item lists are communicated in full and understood as intended. However, telephone surveys can be more expensive and time-consuming to conduct compared with other research methods and their usefulness depends upon having a good starting list of customer contact details.

- Online survey (email or SMS invitation): Online surveys tend to be cheaper and faster to undertake, and may be a more natural method in some sectors, for example technology and online media, where the customer base is likely to be more responsive to an email survey. If the list of customer email addresses is very long (for example tens of thousands), then a large-scale online survey may be possible. In some circumstances, this can overcome the problem (discussed above) of needing to select a sample of outlets to survey. However, response rates to online surveys are often low, and the quality of responses is often not as high as when the respondent is interacting with an interviewer face-to-face or by telephone.

- A combination of the above (mixed mode): A mixed interview method might be considered where, for example, the customer list contains both email and telephone contact details for each person and a follow-up telephone interview can be attempted with anyone who does not respond to the initial email invitation to participate in an online survey. Alternatively, where it is possible to contact some of the sample by email but not telephone, and others by telephone but not email, a mixed method approach may be appropriate.

However, particular care is needed in using mixed method approaches. Any solution which involves a mix of interviewer-administered (for example, telephone) and self-completion (for example, online) survey methods can be biased by modal effects, ie results differ between the 2 methods simply because of the method of interviewing. Potential modal effects can be mitigated by ensuring that questions are asked in exactly the same way across each method. For example, if a question in a telephone survey is asked with an open-ended spontaneous response format, the online survey question should be asked in the same way.

This said, there are potential modal effects that may not be eliminated entirely even with the best questionnaire design. For example, in telephone interviewing the interviewer is typically instructed to prompt and probe respondents to capture full and considered responses, whereas there is no such parallel in an online survey. This helps to explain why the number of answers given to a multiple-response question (for example ‘which brands have you used in the last 3 months?’) tends to be higher in a telephone survey than in an online survey.

Regardless of interview method, it is important that no systematic difference in response by customer type ensues. If one type of customer is more likely to respond to the survey than another, the achieved sample will misrepresent the population. So far as possible, therefore, the survey design should include strategies to maximise the response rate and to minimise the risk of significant non-response bias.
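Where the sample list records a characteristic for every customer, response rates can be compared across customer types once fieldwork is complete, which gives a direct check on one source of non-response bias. The sketch assumes each issued record carries a customer-type label; the function name, labels and counts are hypothetical. Markedly different rates do not by themselves prove that answers are biased, but they show that the achieved sample over-represents some groups and that weighting may be needed.

```python
from collections import Counter


def response_rates(issued_types, responded_types):
    """Response rate per customer type.

    issued_types: customer type of every record issued to fieldwork.
    responded_types: customer type of every record yielding a completed interview.
    """
    issued = Counter(issued_types)
    responded = Counter(responded_types)  # missing types count as 0
    unknown = set(responded) - set(issued)
    if unknown:
        raise ValueError(f"responses from types never issued: {sorted(unknown)}")
    return {t: responded[t] / issued[t] for t in issued}


# Hypothetical list: 200 business and 800 consumer records issued.
issued = ["business"] * 200 + ["consumer"] * 800
responded = ["business"] * 30 + ["consumer"] * 200
print(response_rates(issued, responded))  # {'business': 0.15, 'consumer': 0.25}
```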

Where time permits for a telephone survey, and the appropriate contact details are held, a pre-notification letter or email outlining the purpose of the survey is likely to increase the response rate, as is a carefully worded survey introduction that explains the purpose of the research.

For an online survey, again where time permits, it is usual to send a reminder to those customers who have not responded to the initial survey invitation within the first few days of fieldwork. Ideally, the fieldwork period should span at least one weekend, so that the opportunity for ‘time poor’ customers to respond is maximised.
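The timing points above can be checked mechanically when planning an online survey. The sketch below, with hypothetical names, a hypothetical start date and a reminder assumed to go out three days after launch, reports whether a proposed fieldwork window contains both a Saturday and a Sunday.

```python
from datetime import date, timedelta
from typing import NamedTuple


class FieldworkPlan(NamedTuple):
    end: date
    reminder: date
    spans_weekend: bool


def plan_fieldwork(start: date, days: int, reminder_after: int = 3) -> FieldworkPlan:
    """Summarise an online fieldwork window of `days` calendar days."""
    if days < 1 or not 0 < reminder_after < days:
        raise ValueError("reminder must fall inside the fieldwork window")
    window = [start + timedelta(days=i) for i in range(days)]
    weekdays = {d.weekday() for d in window}  # Monday is 0, Sunday is 6
    return FieldworkPlan(
        end=window[-1],
        reminder=start + timedelta(days=reminder_after),
        spans_weekend={5, 6} <= weekdays,  # window includes a Saturday and a Sunday
    )


# Hypothetical launch on Monday 4 June 2018.
print(plan_fieldwork(date(2018, 6, 4), days=5).spans_weekend)  # False
print(plan_fieldwork(date(2018, 6, 4), days=7))
```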

Where there are no customer lists of sufficient quality, external lists from other reputable sources (for example Dun & Bradstreet for businesses) may be considered. Appropriate screening will be required to ensure that only genuine customers of the Parties are recruited, and it will be even more important to assess the extent to which the lists represent the target population.

Free-finding customers

Where no customer or reputable external lists exist, free-find sampling methods are possible (for example telephone random digit dialling or face-to-face omnibus surveys) but they can become expensive to use if the proportion of eligible individuals in the general population is low.
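The cost point follows from a simple calculation: the number of people who must be screened grows in inverse proportion to the incidence of eligible customers. In the hypothetical sketch below, incidence is the assumed share of the general population who are customers of the Parties and cooperation is the assumed share of eligible people who complete the interview; both figures are illustrative.

```python
import math


def screening_contacts_needed(target_interviews: int, incidence: float, cooperation: float) -> int:
    """Expected number of people to screen to achieve the target interviews."""
    if not (0 < incidence <= 1 and 0 < cooperation <= 1):
        raise ValueError("rates must be in (0, 1]")
    return math.ceil(target_interviews / (incidence * cooperation))


# Hypothetical: 500 interviews and 60% cooperation, at two levels of incidence.
for incidence in (0.20, 0.04):
    print(incidence, screening_contacts_needed(500, incidence, 0.6))
# 4,167 contacts at 20% incidence, but 20,834 at 4% incidence
```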

These are well-established research methods used within the research industry. However, it is important to ensure that the recruitment approach used with these methods is robust, with proper rules for the selection of households and individuals within them. As with external list sources, appropriate screening will be required to ensure that only genuine customers of the Parties are recruited.

Telephone numbers for random digit dialling should include mobile-only households, noting the increasing prevalence of households without a landline in the UK.

More problematic sources: street recruitment and online panels

Some customer sources that are used in commercial research are generally not considered sufficiently robust by the CMA for merger cases. In particular, we advise against recruiting customers:

- on the street

- from panels with non-random samples (ie most online panels)

On-street recruitment is likely to generate sample bias. For example, interviewers may not approach potential respondents at random as they should (tending to target those they perceive to be more ‘willing’ or likely to take part in a survey instead). Time and place of interviewing may also have a bearing on the type of people who are in the vicinity of the interviewer.

Sample bias is also a concern when respondents are drawn from a panel, in particular from an online panel, where sample recruitment does not rely on randomisation methods. Whilst a panel can be made to look like a random, representative cross-section of consumers in terms of its demographic profile, the characteristics of people who join a panel may be very different from other consumers. For example, evidence in the research literature suggests that those who join an online panel spend more time on the internet and engage more actively than other consumers in searching for better deals online. For a merger inquiry where channel substitution issues can be important, this could be a flaw. The CMA tends to place less evidential weight on surveys involving customer recruitment from panels, though each case is treated on its individual merits.

If panel sources are used, transparency and rigour of panel recruitment and data weighting methods will be factors in the CMA’s evaluation of the survey results.

Sample size

In the surveys it commissions as part of a phase 2 merger inquiry, the CMA aims (as a general rule) to achieve a minimum of 100 completed interviews with any pre-defined group of interest for rigorous analysis (for example if analysis is required at an individual outlet level, a minimum of 100 interviews per outlet is needed). If there are other pre-defined sub-populations of interest within a more general population of customers, then the same threshold applies.

The target of 100 is not always met. Below this threshold, the CMA puts less reliance on statistical inferences about corresponding populations and will interpret and report results in a way that cannot be automatically applied to the whole population – for example, “23 of the 61 respondents who were customers of Party A said they would divert to Party B”, not “38% … said …”.
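The reporting rule above can be sketched as a small helper that switches from percentages to raw counts when the base falls below 100. This is an illustration only: the function name `report_share` and the rounding to whole percentages (halves rounded up) are assumptions, not CMA tooling.

```python
MIN_BASE = 100  # general threshold for robust inference about a pre-defined group


def report_share(count: int, base: int, label: str) -> str:
    """Describe a finding as a percentage only when the base is at least MIN_BASE.

    Below the threshold, the raw count and base are reported instead, so the
    result is not read as applying automatically to the whole population.
    """
    if base <= 0 or not 0 <= count <= base:
        raise ValueError("need 0 <= count <= base and base > 0")
    if base >= MIN_BASE:
        # Integer arithmetic rounds to the nearest whole percentage, halves up.
        pct = (200 * count + base) // (2 * base)
        return f"{pct}% of {label}"
    return f"{count} of the {base} {label}"
```

For example, `report_share(23, 61, "respondents who were customers of Party A")` gives "23 of the 61 respondents who were customers of Party A", matching the wording recommended in the text.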

In some cases, survey analysis might retrospectively reveal other groups with particular characteristics of interest. It is difficult to design a survey to ensure a sufficient number of interviews within all potential groups of interest, as these may only become evident at the analysis stage. The sample size requirements should be considered at the survey design stage, taking into account the implications of the likely response rates on the resulting numbers of interviews. Weighting a survey dataset reduces effective sample sizes, sometimes very considerably. It may be the case that the unweighted sample is above the threshold of 100, but the effective sample size of the weighted sample is below 100, in which case care should be taken to present results appropriately.
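One widely used way to quantify how much weighting reduces the effective sample size is Kish's approximation, n_eff = (Σw)² / Σw², where w are the respondent weights. The guidance does not prescribe a particular formula, so treat this as an assumed illustration rather than the CMA's method.

```python
from typing import Sequence


def effective_sample_size(weights: Sequence[float]) -> float:
    """Kish approximation: (sum of weights)^2 / (sum of squared weights)."""
    if not weights or any(w < 0 for w in weights):
        raise ValueError("weights must be a non-empty sequence of non-negative numbers")
    total = sum(weights)
    total_sq = sum(w * w for w in weights)
    if total_sq == 0:
        raise ValueError("at least one weight must be positive")
    return total * total / total_sq
```

For example, 120 interviews in which half the respondents carry a weight of 0.5 and half a weight of 2.0 give an effective sample size of about 88 (150² / 255): below the threshold of 100, even though the unweighted sample exceeds it. With equal weights the effective sample size equals the number of interviews.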

Survey validation across modes

Where there is concern about potential for sample bias with a chosen method, a parallel validation survey using another research method may provide evidence as to whether or not such bias exists. For example, if the main survey is conducted online, a telephone survey conducted with a smaller sample, or within a specific sub-group, may be helpful in validating the results of the main survey[footnote 8].

Incentives

There is no hard-and-fast rule about whether to use a respondent incentive (for example a gift voucher or entry into a prize draw) to increase the response rate to a merger inquiry survey. The CMA usually has no objection to their use, particularly where a low response rate is reasonably expected and would be a concern.

Advance letters/emails and introductions to respondents

Care should be taken when drafting materials such as pre-notification letters/emails and survey introductions to ensure that nothing in the wording leads one type of customer, with a particular view on the subject, to take part disproportionately compared with another type of customer with a systematically different view.

Advance letters/emails should explain the purpose of the survey and how it will be conducted. Importantly, though, to avoid framing effects there should normally be no mention of a merger inquiry: the survey’s purpose should be described as seeking customer views more generally.

In addition, such letters/emails will normally:

- be on agency or commissioning organisation “letterhead” (as appropriate) signed by an appropriate authority

- be kept short and focused purely on the survey

- explain how the customer will be contacted, and when

- include contact details (telephone and/or email) for potential respondents to use if they wish to opt out of the survey

When designing introduction scripts for an interviewer-administered customer survey, an appropriate context needs to be established for the questions being put to respondents, so that respondents know what is being asked of them and why. Again, though, there normally should be no mention of a merger inquiry, and other information provided about the research should not be in any way pre-emptive of the survey questions.

Introductions should be delivered clearly in understandable blocks of plain English, but it is good practice to avoid long introductions which may serve to discourage survey participation. For interviewer-administered surveys, a useful technique to keep introductions short is to add scripts that can be used at the interviewer’s discretion, to help clarify the task and reassure respondents. For example:

- “This survey is purely for research purposes; no attempt will be made to sell you anything either during or after the survey.”

- “Everything you say is confidential and no responses will be attributed to you individually.”

- “Your views are important; this research is being used to find out what people like you think about …”

- “This survey will take about x minutes to complete.”

- “This survey is being conducted according to the Market Research Society code of conduct.”

- “You should have received a letter of introduction about the survey a week or so ago.” (Here, it is also helpful if interviewers have a copy of the letter to hand, which can be posted/emailed/read out to the respondent as appropriate.)

In interviewer-administered surveys, respondents should have an opportunity to ask questions of clarification before the main part of the interview begins. However, it is important that the interviewer adheres to the script provided in the survey introduction, and uses the reassurances exactly as written, to avoid any unintentional bias in respondent recruitment.

Interviewer briefing and monitoring

Strict adherence to the questionnaire script during interviewer-administered surveys is a key principle for merger inquiry research. Interviewers must follow interviewer instructions, including reading the questionnaire script verbatim, and not attempting to paraphrase anything. This can be difficult to achieve in practice, and based on its experience the CMA has come to the view that the following is the best way of ensuring that interviewers adhere to this principle.

Where questionnaires are interviewer-administered, either by telephone or face-to-face, there should be a full briefing of all interviewers scheduled to work on the survey before fieldwork starts. The purpose of the briefing is to ensure that all interviewers are familiar with the questionnaire script and routing, understand when to read out pre-codes, and prompt or probe responses as required.

Ideally, all field managers, supervisors and interviewers should be briefed directly by a member of the agency executive team. This is common practice for commercial telephone surveys, where interviewers usually work together in one central location, but is less common for face-to-face surveys, where interviewers may be geographically dispersed. However, a personal briefing from a member of the agency executive team helps to ensure that interviewers understand and follow all the correct survey procedures[footnote 9]. We recognise that a telephone briefing of interviewers may be more practical and cost-effective than asking interviewers to travel to a central location.

Normally, the briefing sessions will cover:

- a short background to the inquiry, highlighting the survey’s importance and emphasising that the data will be subject to intense scrutiny, so the requirement is for the highest possible standards of fieldwork

- the population of interest for the survey and the screening questions

- where the sample has been sourced from, and how to answer questions from respondents about how their personal details have been obtained (if applicable)

- the importance of screening properly, so that only eligible individuals are interviewed

- the importance of a high response rate

- the importance of, and rationale for, complete adherence to the questionnaire script

- whether each survey question allows one response (single code) or multiple responses (multi-code)

- at each question, whether potential response options should be read out (prompted) or whether responses should be captured spontaneously and probed to pre-codes

- the use of any prompt material (for example maps, showcards, product descriptions)

- routing/filtering protocols

- the importance, where applicable, of interviewing at the correct times and in the right places

A separate written briefing note should also be given to interviewers working on the project. This should include all the instructions from the briefing session as well as a copy of the questionnaire with all routing instructions shown.

Research that is going to provide evidence for a merger inquiry requires particular attention to detail that often goes over-and-above the standards for commercial research, and this should be emphasised in both the verbal and written briefings.

A full and comprehensive briefing will mitigate the risk of poor quality fieldwork. However, it will not eliminate the risk entirely, and it is important that interviewing is monitored rigorously, with the agency executive team taking a keen interest in how the interviewing works in practice.

Good practice is for the agency project executive who conducted the briefing to listen to (telephone)/attend and observe (face-to-face) a selection of the interviews initially conducted post-briefing. Regardless of how well the questionnaire has been piloted (see Cognitive testing/Piloting), a number of details may need to be ironed out after the survey ‘goes live’. Instructions and a survey questionnaire can be implemented in unexpected ways when entrusted to interviewers, and the reactions, interpretations and answers of respondents can differ from expectation.

Once mainstage fieldwork is fully underway, it is also good practice for the agency project executives to continue to monitor a proportion of interviews. Monitoring of interviewer performance in the field for face-to-face surveys is time-consuming (as it requires agency project executives and field managers/supervisors to travel with interviewers) but the CMA’s experience is that it plays an important role in ensuring high standards of interviewing are maintained.

As a result of monitoring, questionnaire amendments, revised interviewer instructions and refresher briefings may be required (especially for any interviewers who fail to reach and maintain the required standards). Any adjustments needed should be documented and agreed with the client. If there is a systematic problem with the way that some interviewers have conducted interviews, these individuals should be replaced in the fieldwork team and new interviews conducted to replace any erroneous ones.

Telephone interviews are normally audio recorded as part of standard quality control procedures (although permission is required from the respondent to do so). These should be made available for scrutiny by the agency project executive and, if necessary, the client during the fieldwork period. Audio recording is less common for face-to-face surveys, but might be requested (by the agency executive team and/or the client) if felt necessary and the technology is available (subject to the same respondent permissions as for telephone).

The CMA generally likes to be given an opportunity to attend interviewer briefings and observe or listen to some fieldwork. Where possible our preference is to choose interviewing points and which interviewers to monitor for ourselves rather than the agency selecting them for us. All of this is subject to respondents being made aware that their interview is being recorded, or giving consent if the interview is being conducted face-to-face. This needs some prior planning and we encourage early communication with the CMA to facilitate it.

Where Parties are able to demonstrate that a survey has been conducted to a high standard, and show an understanding of how the survey has worked in practice in the field, this will assist the CMA in assessing the evidential weight of the data generated.

Cognitive testing/Piloting

Where time allows, the soundness of any research design and questionnaire should be tested before the ‘live’ survey begins by conducting, monitoring and evaluating cognitive interviews and/or a survey pilot.

Undertaking a small number of cognitive interviews is often an effective way of identifying potential problems with a questionnaire. Usually, cognitive testing involves retrospective interviewing, where the researcher (usually one of the agency executive team responsible for the survey) – after conducting a full interview with an eligible respondent – then works back through the questionnaire asking them about their comprehension and interpretation of questions and discussing possible improvements[footnote 10]. Given the time involved in doing this, respondents are often offered a small incentive to participate.

Pilots are mini-versions of the full survey process, including and therefore testing the interviewer briefing, respondent contact/screening process and the questionnaire with customers drawn from the population of interest.

Members of the agency executive team responsible for the survey should be closely involved in the piloting process. This means conducting at least some of the pilot interviews themselves, or listening in and taking notes directly.

The extent of the pilot will depend upon the complexity of the survey design and the sensitivity or difficulty of the subject matter. Good practice involves the formal recruitment, interview and debrief of a number of pilot respondents, followed by a full design review.

Where there are particular sub-populations of interest, the pilot should ideally cover each of them. As a general rule, the CMA recommends conducting at least 10 pilot interviews, with a minimum of 2 from each sub-population of interest. However, where time or sample is limited, the scale of the pilot may have to be reduced.
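The rule of thumb above (at least 10 pilot interviews, at least 2 per sub-population) can be turned into a simple allocation. This is a hypothetical sketch: spreading the remaining interviews round-robin across sub-populations is an assumption, and in practice the split would reflect which groups are hardest to reach or most complex to interview.

```python
def pilot_allocation(subgroups: list[str], total: int = 10, per_group_min: int = 2) -> dict[str, int]:
    """Allocate pilot interviews: per_group_min per sub-population, and at least `total` overall."""
    if not subgroups or len(set(subgroups)) != len(subgroups):
        raise ValueError("need a non-empty list of distinct sub-populations")
    alloc = {g: per_group_min for g in subgroups}
    # If the minimums already reach the overall target, nothing remains to allocate.
    remaining = max(total - per_group_min * len(subgroups), 0)
    i = 0
    while remaining > 0:  # spread any remaining interviews round-robin
        alloc[subgroups[i % len(subgroups)]] += 1
        remaining -= 1
        i += 1
    return alloc
```

With 3 sub-populations this gives 4, 3 and 3 interviews (10 in total); with 6 sub-populations the per-group minimum alone requires 12.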

Parties should note that it is risky to put a survey into the field without proper piloting, and it is better to allow time in the project schedule to incorporate a full pilot rather than rushing the survey set-up and finding problems with survey quality at a later stage. However, the CMA recognises that time constraints sometimes make a more limited pilot necessary. In these circumstances the risks can be partly mitigated by testing a paper version of the questionnaire ‘in-house’ by interviewing colleagues, friends or family. The survey can also be ‘soft launched’, ie only a few interviews conducted on the first day or 2 of fieldwork with careful monitoring of how it is working, so that any mistakes can quickly be identified and rectified. If changes are made, it may be necessary to replace some or all of the interviews conducted beforehand.

Questionnaire

Introduction

While there is a well-developed body of good practice in questionnaire design for social research, experience has shown that merger inquiry research requires particular attention to specific (and sometimes small) details to help obtain reliable and valid customer survey evidence. Any bias in response caused by imprecise or leading question wording, or ordering of the questions, can weaken the evidential value of a survey.

Structure

We start by describing an appropriate structure for a merger inquiry questionnaire. Responses to questions in each of these areas will provide key data for the exploration of competition in the market and the potential impact of a merger on customers’ choices.

It is good practice to ask easily answered questions on matter-of-fact topics at the start of a survey to ‘warm up’ respondents, followed by matters of behaviour, then preferences and reasons for choice, and then responses to hypothetical questions. The best questionnaires flow naturally for the respondent, enabling them to give a narrative of their behaviour in the market of interest. Typically, a merger inquiry questionnaire might be structured to include the following sections:

- an introduction inviting potential respondents to take part in the survey

- screening questions (ie questions that establish the respondent’s eligibility to take part in the survey[footnote 11])

- general purchasing behaviour, for example nature of purchase(s), suppliers, frequency of purchases, channels used

- influences on purchasing behaviour/choice attributes

- geography of most recent purchase, for example distance travelled/time taken to get to purchase point, departure point, travel modes used, whether main reason for visit/journey

- aspects of most recent purchase, for example (as appropriate) what was purchased, when, who with, how much was spent, how many items

- response to hypothetical change in a Party’s offering

- respondent demographics (if not covered during the screening of respondents)

It is important to carefully consider the order of individual questions within particular questionnaire sections as well, to avoid influencing answers to later questions by earlier ones within the section.

Questions should be introduced in such a way that clearly states the context in which they are to be answered and reminds customers of this, as necessary. Linking phrases such as ‘Still thinking about the recent purchase you made …’ will be useful in this regard. This, and a structure such as the one above, should be used to help the respondent return to the mindset of their most recent purchase before answering the diversion questions.

Care should be taken not to burden the respondent with a survey that is too long. The quality of responses will deteriorate if the questionnaire is too detailed and time-consuming to answer. Adherence to the structure above will help in designing a questionnaire that is succinct and relevant both to the customer and to the merger analysis. Ideally, the questionnaire should take no more than 10 to 15 minutes of a customer’s time to answer, although in some circumstances (for example store exit interviews) a shorter questionnaire may be necessary.

Language

When designing a questionnaire it is important to use appropriate language that avoids ambiguity or confusion. Wording should be in plain English, to reflect a wide range of language comprehension skills (reading, speaking and/or listening).

In surveys of the general population, technical terms should be used only where these are widely used and understood or – if not widely used/understood but their use is unavoidable – carefully explained so that they are understood in the same way by all respondents. However, if there is any risk that they may be interpreted differently by respondents even with an explanation, they should not be used at all. In surveys of business audiences, the use of technical terms may be more appropriate, but care should still be taken to keep the wording as straightforward as possible.

There needs to be consistency in interpretation of the survey questions by respondents to ensure that the views they express are based on a common understanding of the questions being asked. Any scope for ambiguity or confusion in the phrasing of a customer survey question is likely to reduce its evidential weight. However, there is sometimes a fine balance to be struck between having sufficient detail in a question to avoid ambiguity and it becoming too long and difficult to remember. Where such tension exists, it is often better to split a long question into 2 (or more) shorter questions.

In addition, the questionnaire must not influence customers to give particular answers: it must not lead them to express an opinion or fact that is not a proper representation of their views or behaviours. It is important, therefore, to provide a sufficient range of response options at all questions so that customer views are represented properly.

A question that is presented in a way that leads customers to one answer in preference to another (irrespective of their actual view or behaviour) constitutes bias, and is likely to be of limited evidential value as a result. Some potential sources of bias that should be considered when drafting customer survey questions include:

- Acquiescence bias, where the customer thinks they should agree with a statement included in the question and therefore does so. For example, ‘Have you been to the dentist in the last year?’ contains an acquiescence bias to the response ‘Yes’. A better, more neutral question would be: ‘When, if at all, did you last go to the dentist?’

- Restrictive bias, where the question leads the customer to think only of certain options. For example, asking ‘If you had known before you went there that this branch of X was closed for refurbishment for one year, what would you have done instead?’ may cause respondents to discount shopping online as an alternative source of supply. Explicitly encouraging respondents in the question wording to consider all options reduces this risk, for example: ‘Please imagine that you had known before you went there that this branch of X was closed for refurbishment for one year. Thinking of all the options open to you, what would you have done instead?’

- Hypothetical bias, where a customer may indicate a willingness to spend money or change behaviour which does not reflect their likely real response to the situation described[footnote 12].

- Inertia bias, where a customer over-states their likely reaction to a change in the market, for example by not taking into account switching costs, inconvenience, uncertainty of information, etc.

Question types

Selecting the correct question type(s) is an essential part of survey design, and the type(s) of question that can be used will be influenced by survey mode, typically whether this is:

- an interviewer-administered face-to-face interview, using paper and pen or computer-assisted personal interviewing (CAPI);

- an interviewer-administered telephone (CATI) interview;

- a scripted online questionnaire.

Pre-coded (closed) and open questions

Data collection and analysis are often facilitated by using questions where the most likely answers are listed in the questionnaire (closed questions) rather than leaving the customer or interviewer to write in the response (open questions). For interviewer-administered surveys, closed questions can be asked either as prompted (where the response codes are read out or shown to the customer) or as unprompted/spontaneous (where the interviewer codes the response from what the customer says, often probing to clarify what the customer means and how the response fits into the pre-codes on the questionnaire)[footnote 13].

However, care should be taken in the drafting of pre-coded responses. As an over-riding principle, the codes must cover what are likely to be the most frequent survey responses. Then:

- If potential responses are to be prompted (read out or shown to customers), the list should contain responses that can be easily understood and (if not shown) remembered by a typical customer (usually 6-8 possible answers at most).

- If (for an interviewer-administered survey) the pre-coded question is designed to collect responses in a spontaneous (unprompted) fashion, a longer list is feasible, but the response codes should be presented to the interviewer in a logical fashion, ideally with the most likely frequent response codes first on the list, alphabetically or in groups of related responses, and they should not extend over more than one page or screen.

Response codes should be drafted so that they are easily understood (by both respondent and interviewer), so good practice is to avoid any with too many words that make it difficult to interpret their meaning (and how they are differentiated from other codes).

When using pre-coded response questions in an interviewer-administered survey, it is important that there is a clear instruction on the questionnaire to say whether the list is to be read out (prompted) or not (spontaneous). If using a spontaneous approach, make clear in the instruction the extent to which interviewers should prompt for further answers or probe to clarify whether the response fits one pre-code or another. (It is also helpful to remind interviewers that they should not allow respondents to read the pre-codes over their shoulder on the page or screen.) Standard practice with spontaneous pre-coded questions is to prompt the customer (for example ‘Why else?’, ‘What else?’, ‘Anything else?’) until he/she has nothing further to add, and to code the first mention separately from all other mentions. However, there may be occasions when the first ‘top of mind’ response is of most interest, in which case further prompting may be of less value.
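A minimal sketch of how a CATI or CAPI script might store answers to a spontaneous, multi-code question so that the first mention is kept separate from all other mentions. The class name, fields and the choice to reject anything outside the pre-code list are hypothetical; real scripting packages have their own conventions.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class SpontaneousAnswer:
    """Answers to one unprompted, multi-code question (hypothetical structure)."""
    precodes: frozenset[str]
    first_mention: Optional[str] = None
    other_mentions: list[str] = field(default_factory=list)

    def record(self, code: str) -> None:
        # The interviewer probes until the response fits a pre-code.
        if code not in self.precodes:
            raise ValueError(f"{code!r} is not a pre-code for this question")
        if self.first_mention is None:
            self.first_mention = code  # 'top of mind' response
        elif code != self.first_mention and code not in self.other_mentions:
            self.other_mentions.append(code)  # later mentions, de-duplicated
```

For example, if a respondent mentions location, then price (after ‘What else?’), then location again, `first_mention` is "Location" and `other_mentions` is `["Price"]`.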

Some surveys are designed with the inclusion of fully open-ended response questions, where there are no pre-codes and interviewers write in the answers given by the customer, or the customers themselves write in their answer. Fully unstructured responses can be highly informative, but this approach is not often used in merger inquiry surveys because open-ended questions can be time-consuming to ask and costly to analyse.

Scalar responses

Another often-used question technique in customer surveys is to capture responses via semantic or numeric scales. A semantic scale is labelled either at the end points or at every point on the scale (for example Strongly agree, Tend to agree, Neither agree nor disagree, Tend to disagree, Strongly disagree), while a numeric scale uses numbers as labels. The CMA’s preference is to use semantic rather than numeric scales, because the former is easier to interpret by both respondent and analyst.

There is no standardised semantic scale approach used in merger cases, although bipolar scales normally include a neutral mid-point and allow customers to give ‘Don’t know’ as an answer. However, in some cases it may be more appropriate to use an unbalanced scale without a neutral mid-point to unpick differentiation in customer attitudes where there is a natural tendency for customers to answer in a similar fashion. For example, importance scales are often used to identify the key factors that drive consumer choice in a market, by reading out a list of factors and asking customers to rate each of them in turn in terms of importance. Typically some customers will rate all factors as ‘important’ when presented with this task, and so it can be useful to have more granular distinctions at the ‘important’ end of the scale (such as ‘essential’, ‘very important’, ‘fairly important’) to help identify which are the most important factors. Alternatively, respondents could be asked to choose and rank, for example, the 3 attributes that are most important to them.
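The two approaches described above can be tabulated quite simply. In this sketch, ‘Essential’, ‘Very important’ and ‘Fairly important’ come from the text, while ‘Not very important’ and ‘Don't know’ are assumed additional codes. Including ‘Don't know’ in the base is also an assumption; the function names are illustrative only.

```python
from collections import Counter

# Unbalanced importance scale: finer gradations at the 'important' end.
SCALE = ("Essential", "Very important", "Fairly important", "Not very important", "Don't know")


def essential_share(ratings: list[dict[str, str]], factor: str) -> float:
    """Share of respondents rating `factor` as 'Essential' ('Don't know' kept in the base)."""
    if not ratings:
        raise ValueError("no ratings supplied")
    for r in ratings:
        if r[factor] not in SCALE:
            raise ValueError(f"unexpected code {r[factor]!r}")
    return sum(r[factor] == "Essential" for r in ratings) / len(ratings)


def top3_counts(rankings: list[list[str]]) -> Counter:
    """Count how often each attribute appears among respondents' 3 chosen attributes."""
    counts: Counter = Counter()
    for choice in rankings:
        if len(choice) != 3 or len(set(choice)) != 3:
            raise ValueError("each respondent must choose 3 distinct attributes")
        counts.update(choice)
    return counts
```

Comparing factors on the ‘Essential’ share, or on how often they appear in respondents' top 3, helps separate them when most customers rate everything as important.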

Questionnaire design for different modes

Different modes have particular strengths and weaknesses in terms of the way in which questions can be designed and presented to customers. These should be taken into account when deciding on the appropriate survey mode. For example:

- In CAPI, CATI and scripted online questionnaires (where the interviewing mode facilitates ‘automated’ or pre-scripted randomisation), it is good practice to vary the order in which item lists are read out to or displayed to customers when it is appropriate to do so (ie because possible answers are not in any way hierarchical), and to automatically reverse response scales for half the sample[footnote 14];

- CAPI, CATI and online self-completion modes also allow complex, conditional routing/filtering to be built into the questionnaire. Necessarily, the routing in paper and pen questionnaires must be simpler, but there remains a risk that customers will not answer questions they should, and answer questions that they should not. Clear interviewer instructions and a comprehensive briefing can help reduce this risk;

- In interviewer-administered surveys, valid responses such as “Don’t know” and “Not applicable” can be captured as spontaneous answers (ie without being read out). However, in online surveys, such answers are effectively prompted for all customers and this may increase the frequency with which they are selected;

- In interviewer-administered face-to-face interviews, a showcard can be used to minimise the amount of information that customers must retain to be able to give an answer. However, in a telephone survey, customers may be required to absorb/remember a considerable amount of detail before making their response which is why it is important that item lists are limited in length;

- Face-to-face and online questionnaires can include stimulus content (for example logos, fascia images) in a way that a telephone survey cannot.
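The randomisation described in the first point can be sketched as follows: item order is shuffled per respondent, and the agreement scale is reversed for alternate respondents so that half the sample sees it in each direction. Seeding by respondent ID (so each respondent's presentation can be reproduced at analysis) and splitting by ID parity are assumptions; a scripting package may randomise differently.

```python
import random

SCALE = ["Strongly agree", "Tend to agree", "Neither agree nor disagree",
         "Tend to disagree", "Strongly disagree"]


def presentation_for(respondent_id: int, items: list[str], seed: int = 2018) -> tuple[list[str], list[str]]:
    """Return (item order, scale order) for one respondent.

    Shuffle items only where the list is not hierarchical. Answers should be
    stored by label, not by screen position, so reversed scales code correctly.
    """
    rng = random.Random(f"{seed}-{respondent_id}")  # reproducible per respondent
    order = items[:]
    rng.shuffle(order)
    # Odd IDs see the reversed scale, so sequential IDs split the sample in half.
    scale = SCALE[::-1] if respondent_id % 2 else SCALE[:]
    return order, scale
```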

Content

Screening questions

In general, screening questions will be necessary at the start of a customer survey interview to ensure that only those within the population of interest are included in its scope. Occasionally, this will be all potential customers or businesses within the market[footnote 15], but is usually only the customers of one or both of the Parties.

Where customers are free-found using a random recruitment method (for example a face-to-face omnibus), the screening section may include a question(s) on previous purchasing behaviour to establish whether they are the customer of a merger Party. Importantly, such a question should not lead the customer to the identification of the other merger Party, as this may bias subsequent responses. Good practice is to ask for responses spontaneously or from a prompted list that includes all potential suppliers, as appropriate.

Screening questions are often used to ensure that the respondent was personally involved in the purchase decision. For example, a customer may have seen a film at a particular cinema but a friend or family member chose the cinema and booked the tickets. Timing of last purchase is also important. If the last purchase was a long time ago, then respondent recall may be a problem. Much depends on the product or service being purchased; recall is likely to be better regarding the purchase of laser eye surgery than about a visit to a convenience store. Piloting the survey can help to test recall and set a limit on how recently a relevant purchase needs to have been made to be eligible for the survey.
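The screening criteria discussed above can be expressed as a simple eligibility check. This is a hypothetical sketch: the field names are illustrative, and `max_recall_days` stands for whatever recency limit piloting suggests for the product in question.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class ScreenerAnswers:
    suppliers_used: set[str]  # captured spontaneously or from a prompted list of all suppliers
    chose_personally: bool    # respondent was personally involved in the purchase decision
    last_purchase: date


def is_eligible(a: ScreenerAnswers, parties: set[str], today: date, max_recall_days: int) -> bool:
    """Eligible if a customer of a Party, personally involved, and recent enough to recall."""
    if not a.suppliers_used & parties:
        return False
    if not a.chose_personally:
        return False
    return today - a.last_purchase <= timedelta(days=max_recall_days)
```

Capturing `suppliers_used` from a list of all suppliers, rather than asking about the Parties by name, avoids leading the respondent towards the other merger Party.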

In many research surveys, screening questions are added to exclude customers who may have an informed/expert or vested interest in the subject because of their employment or personal connections, on the grounds that this may lead them to purposefully bias their responses in a particular way. The CMA’s general view is that all members of the population of interest should be included within the eligible sample and any such questions should be crafted to exclude as few people as possible. For example, it is not our usual practice to exclude people working in the market research industry or journalism from responding to merger surveys. In the surveys we commission, the CMA would normally include customers who have opted out of marketing communications or who have been flagged as recent participants in other market research.

Customer demography

Demographic questions may be asked after the screening questions (by way of easy introduction to the survey) or right at the end of the interview. The latter approach is usually preferable when the information requested may be sensitive, for example respondent income. Where the survey sample is taken from a customer list/database and already includes key demographic information about potential respondents, it is better (where possible) to take these ‘answers’ from the database and not waste interviewing time to recapture them, unless there is a reason to believe that verification is desirable and their importance merits it.

Typical demographic information collected is:

- sex (may be observed, not asked)

- (if not already covered during the screening) age (ideally the customer’s specific age, only asking them to indicate an age band if this is refused)

- working status

- highest educational qualification

We would not generally recommend asking a detailed social grade question in a merger inquiry survey: collecting sufficient information to enable an accurate classification takes time that is better spent on questions that are typically of more interest.

Previous purchase behaviour/consideration of other suppliers

Responses to hypothetical questions about what customers would do in the event of a change in a Party’s offering should always be assessed in the context of other evidence about the customer and a general understanding of consumer behaviour in the market as gained from the survey. Questions on previous purchase behaviour provide this contextual understanding. Similarly, collecting information about whether customers have considered other suppliers in the market in the past, and whether they have actively searched out information about other suppliers, may help to give a better understanding of consumer behaviour and whether customers are actively engaged in the market.

Typical questions:

- brands purchased in the last day/week/month/year etc (depending upon typical purchase frequency)[footnote 16]

- frequency with which brands are purchased

- purchase channels used (bricks-and-mortar outlet, online, telephone etc)

- whether the customer has considered purchasing from another supplier(s) and/or been approached by another supplier(s)

- whether the customer has searched for information about another supplier(s) or about the supplier they purchased from, and if so where and for how long they searched for information

Choice attributes; purchase decision

Understanding why customers choose to buy from one supplier rather than another enables the identification of key drivers of consumer choice and helps us to draw inferences about how suppliers compete in the market. It also gives respondents an opportunity to think about the factors that are important to them, and makes it more likely that they will give a considered response to the subsequent diversion question(s).

Two different question approaches are commonly used to understand what drives customer choice:

- a choice attribute question that asks the customer to identify the most important reason(s) for choosing one product/service or supplier over another

- attribute importance questions (with a scalar response for each of several attributes) that ask how important each attribute is to the customer

A choice attribute question may be asked either as a spontaneous (unprompted) question or as a prompted question. The advantage of asking reason(s) for choice spontaneously is that it captures the ‘top of mind’ differentiators; the disadvantage is that one or 2 attributes may dominate (price, for example) and then there is less evidence about the importance of other factors. The question may be asked just to capture the single most important reason or alternatively all important reasons (although here it is advisable to capture the first mention separately to help identify the key reason for choice). An option to capture and code ‘other’ responses is usually included.
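
Where all spontaneous reasons are recorded, the first mention can be tabulated separately from ‘any mention’. A minimal Python sketch, assuming each respondent’s coded reasons are stored in the order mentioned and that results are unweighted; the function name and the reason codes shown are hypothetical.

```python
from collections import Counter


def tabulate_reasons(responses: list[list[str]]) -> tuple[dict[str, float], dict[str, float]]:
    """Return (first-mention shares, any-mention shares) of coded reasons.

    `responses` holds, for each respondent, the coded reasons for choice
    in the order they were mentioned. Respondents giving no reason are
    excluded from the base. Shares are unweighted.
    """
    answered = [r for r in responses if r]
    base = len(answered)
    first = Counter(r[0] for r in answered)
    # Count each reason at most once per respondent for 'any mention'
    any_mention = Counter(code for r in answered for code in set(r))
    return ({code: n / base for code, n in first.items()},
            {code: n / base for code, n in any_mention.items()})


responses = [["price", "location"], ["location"],
             ["price", "range"], ["parking", "price"]]
first_share, any_share = tabulate_reasons(responses)
print(first_share["price"], any_share["price"])  # 0.5 0.75
```

Any-mention shares can sum to more than 100% because respondents may give several reasons, which is why the first mention is the cleaner measure of the key reason for choice.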

Attribute importance questions should be prompted on a one-by-one basis, using a scale which is semantically defined. Here, the CMA often uses an Essential/Very important/Fairly important/Not important/Don’t know scale because in our experience it generates results that discriminate effectively between attributes.
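
Responses on the semantically defined scale can then be summarised attribute by attribute. The Python sketch below (unweighted) computes the distribution across the five scale points for each attribute and ranks attributes by the share rating them ‘Essential’; that ranking rule, the function name and the attribute names are illustrative assumptions rather than a prescribed CMA summary.

```python
from collections import Counter

# Scale points as described in the text (straight apostrophe used in code)
SCALE = ("Essential", "Very important", "Fairly important",
         "Not important", "Don't know")


def importance_profile(ratings: dict[str, list[str]]) -> dict[str, dict[str, float]]:
    """Share of respondents choosing each scale point, per attribute."""
    profile = {}
    for attribute, answers in ratings.items():
        unknown = set(answers) - set(SCALE)
        if unknown:
            raise ValueError(f"unexpected scale points for {attribute}: {unknown}")
        if not answers:
            continue  # no base for this attribute
        counts = Counter(answers)
        profile[attribute] = {point: counts[point] / len(answers) for point in SCALE}
    return profile


ratings = {
    "price": ["Essential", "Very important", "Essential", "Not important"],
    "staff friendliness": ["Fairly important", "Not important",
                           "Very important", "Don't know"],
}
profile = importance_profile(ratings)
ranked = sorted(profile, key=lambda a: profile[a]["Essential"], reverse=True)
print(ranked)  # ['price', 'staff friendliness']
```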

It is usually inadvisable to include both a prompted choice attribute question and attribute importance questions in the same survey, as this combination may introduce respondent fatigue. Instead, it will usually be better to ask reason(s) for choice spontaneously and then the prompted attribute importance questions (in that order so that spontaneous responses are captured first)[footnote 17].

The choice attribute question is usually the most informative in discerning parameters of competition from a customer perspective because it differentiates the Parties’ offerings. Consequently, it is often (although not always) the more relevant question in a merger case compared with attribute importance questions. (The latter quantify the importance of component parts of the Parties’ offerings but those revealed as most important are frequently common to both Parties[footnote 18]). For a fuller discussion of the interpretation of these types of question, illustrated with an example, see the hospital merger case study in Illustrations.

Discrete choice; conjoint analysis

There are other well-established question approaches that use modelling techniques to understand the importance to customers of various attributes in the purchase decision (for example Choice Based Conjoint (CBC) or other forms of discrete choice analysis). These tend not to be used extensively in merger inquiry research due to time constraints – there is often insufficient time to design and test conjoint survey instruments extensively before fieldwork, to administer the necessary questions during the interview, or to undertake the modelling prior to presentation of results[footnote 19].

‘Geography’ of local competition

The collection of details about where a product or service was purchased, how the customer travelled to the purchase point, how long it took, and where they travelled from (their departure point), can be useful information to help identify the geographic scope of the competitive constraints on the Parties’ products or services. Clearly, this is not relevant for purchases made online.

Typical questions are:

- where the customer travelled from to get to the purchase point (home, workplace, somewhere else)

- travel mode(s) used

- time taken to travel/distance travelled to purchase point

- whether the visit was the only/main reason for making the trip, or not the main reason

This information can sometimes be used to map customers in terms of their proximity to a particular purchase outlet, and to define the catchment area for a particular outlet[footnote 20].
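
One way of turning the travel data into a catchment area is to find the distance (or travel time) within which a given share of an outlet’s surveyed customers travelled. A minimal Python sketch, assuming unweighted responses; the function name is hypothetical and the 80% default threshold is an assumed parameter for illustration, not taken from this guidance.

```python
import math


def catchment_radius(distances_km: list[float], share: float = 0.8) -> float:
    """Smallest observed distance within which at least `share` of
    surveyed customers of one outlet travelled (unweighted)."""
    if not distances_km:
        raise ValueError("no distances supplied")
    if not 0 < share <= 1:
        raise ValueError("share must be in (0, 1]")
    ordered = sorted(distances_km)
    # Number of customers the catchment must cover; round() guards against
    # floating-point noise (e.g. 8.000000000000002) before taking ceil.
    needed = max(1, math.ceil(round(share * len(ordered), 9)))
    return ordered[needed - 1]


distances = [0.5, 1.2, 2.0, 2.5, 3.1, 3.3, 4.0, 4.8, 7.5, 12.0]
print(catchment_radius(distances))  # 4.8
```

The same function works unchanged on travel times in minutes.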

The phrasing of these questions needs to be considered carefully to ensure they are meaningful to all customers in different purchase circumstances. For example, if the purchase is made on impulse or planned as part of a more general shopping or commuting trip (rather than being the sole or main reason for the trip), the question may need to focus on the travel from the relevant local point to the outlet, rather than from the home or workplace[footnote 21].

Diversion

In many merger cases, the main objective of the survey is to assess the closeness of competition between the Parties and their competitors. A key element of this assessment is the inclusion of a suite of questions asking customers what they would have done under various hypothetical scenarios on a previous purchase occasion from one of the Parties. The most common of these scenarios is that a given product/service/supplier (or a given supplier’s outlet or website) was not available (forced diversion)[footnote 22], or a product/service was offered at a higher price (price diversion).

As indicated before, these questions should normally be asked in relation to the last purchase occasion, to put them in a specific and meaningful context. Thus, a price diversion question may take the form: “Thinking about [your most recent purchase from x], what would you have done if the price of this product/service had gone up by £1?”[footnote 23]

Conceptually, we are trying to measure the extent to which sales revenues lost through a deterioration in an aspect of one merger Party’s offerings would be internalised as a result of the merger, because some customers would choose to divert some or all of their expenditure to purchases from the other merger Party. In more technical language, we are using a hypothetical question to capture the stated next best alternatives/substitutes of ‘marginal’ customers: customers whose demand is elastic in regard to the dimension of the offering that is being varied under the hypothetical scenario presented in the diversion question. This is usually a small increment in price, but can be a change in some other aspect such as frequency of service in transport markets.
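
In the notation commonly used in merger analysis (not used elsewhere in this document), the quantity described above is the diversion ratio from Party A to Party B: the fraction of the sales lost by A, following the deterioration in its offering, that are recaptured by B. Stated in terms of sales volumes (a revenue-based version is analogous):

```latex
D_{AB} = \frac{\Delta Q_B}{-\Delta Q_A}, \qquad \Delta Q_A < 0
```

Here ΔQ_A is the change in A’s sales and ΔQ_B is the resulting change in B’s sales. For example, if A loses 100 units and B gains 30 of them, D_AB = 30/100 = 0.3.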

In most circumstances only a small proportion of customers will be marginal in the sense described above. Consequently, the sample of marginal customers for whom we have diversion responses is likely to be too small to provide estimates of sufficient precision for robust analysis. To overcome this, we ask the forced diversion question, which removes a Party’s offering altogether so that all customers are asked what they would have done instead. When interpreting the findings, it is then necessary to assume that the distribution of their responses is the same as it would be for marginal customers. Note that this is equivalent to assuming that the diversion behaviour of marginal and non-marginal (“inframarginal”) customers is the same[footnote 24].
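
Under that assumption, diversion ratios can be estimated directly from the forced diversion responses as the share of a Party’s customers naming each alternative. A minimal Python sketch, assuming each respondent’s answer has already been coded to a single alternative (including ‘not purchase’) and that ‘don’t know’ answers are dropped from the base; the labels, the function name and that treatment of ‘don’t know’ are illustrative assumptions, and survey weights, where used, are ignored here.

```python
from collections import Counter


def diversion_ratios(alternatives: list[str],
                     exclude: frozenset[str] = frozenset({"don't know"})) -> dict[str, float]:
    """Share of forced-diversion respondents naming each alternative.

    Each entry is one respondent's coded answer to 'what would you have
    done instead?'. Answers in `exclude` are dropped from the base.
    Unweighted.
    """
    valid = [a for a in alternatives if a not in exclude]
    if not valid:
        raise ValueError("no valid diversion responses")
    counts = Counter(valid)
    return {alt: n / len(valid) for alt, n in counts.items()}


# Hypothetical responses from customers of Party A
answers = (["Party B"] * 3 + ["Rival C"] * 2 + ["not purchase"] * 2
           + ["Rival D"] + ["don't know"] * 2)
ratios = diversion_ratios(answers)
print(ratios["Party B"])  # 0.375
```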

In cases where both price diversion and forced diversion questions are asked of all customers, the CMA has found that the order in which the questions are asked makes little difference to the results, ie it does not matter whether the forced diversion question is asked before or after the price diversion question. However, it is usually more natural to ask the price diversion question first, as this will help to identify marginal customers in response to a specified price increase. It is also a more logical question sequence for respondents, particularly those who are inframarginal customers.

When asking customers about their response to price increases, it is usually better to frame questions in terms of absolute amounts (for example in pence or £s) based on the actual price recently paid for a product or service, or on a typical price. Information may need to be collected in the survey about the actual price paid, and then a calculation made of the new amount after, say, a 5% price increase (this can be done automatically in a computer-assisted interviewing script). We try to avoid presenting a price increase to consumers in the general population as a percentage (for example “What would you do in the event of a 5% increase in the price you paid?”), because they may find it difficult to work out what a percentage increase means in monetary terms. This is less of a concern for business respondents.
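
The calculation described above can be scripted so that each respondent is presented with a price rise in pounds and pence based on the price they reported. A minimal Python sketch using the standard `decimal` module; the function names, the 5% default, rounding to the nearest penny (half up) and the question wording are assumptions for illustration only.

```python
from decimal import Decimal, ROUND_HALF_UP

PENNY = Decimal("0.01")


def price_rise(price_paid: Decimal, pct: Decimal = Decimal("5")) -> tuple[Decimal, Decimal]:
    """Return (increase, new price) in pounds, rounded to the nearest penny."""
    increase = (price_paid * pct / 100).quantize(PENNY, rounding=ROUND_HALF_UP)
    return increase, price_paid + increase


def as_money(amount: Decimal) -> str:
    """Format amounts under £1 in pence, otherwise in pounds."""
    if amount < 1:
        return f"{int(amount * 100)}p"
    return f"£{amount:.2f}"


increase, new_price = price_rise(Decimal("8.50"))
# Hypothetical question wording generated for this respondent
print(f"What would you have done if the price had gone up by "
      f"{as_money(increase)}, to {as_money(new_price)}?")
# What would you have done if the price had gone up by 43p, to £8.93?
```

Using `Decimal` rather than floating point keeps the pence exact, so the amount read out matches the intended percentage rise.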

Questions about diversion options should be designed and tested to ensure they cover all possibilities. The exact wording will depend upon the range of options specific to a given merger situation, but as a general rule the initial question should ask about hypothetical behaviour at the highest level, for example would the customer (a) not ‘purchase’ or (b) ‘purchase’ or (c) don’t know[footnote 25]. Those who say ‘purchase’ should then be asked a follow-up question, ie what product/service/supplier[footnote 26] (or supplier’s outlet or website) would they substitute.
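
The two-stage structure can be expressed as routing logic in the interviewing script, with the follow-up asked only of those who say they would still purchase. A minimal Python sketch; the answer labels and the function name are hypothetical.

```python
from typing import Optional

TOP_LEVEL = ("not purchase", "purchase", "don't know")


def diversion_outcome(top_level: str, substitute: Optional[str] = None) -> str:
    """Combine the top-level answer and any follow-up into one outcome code.

    Only respondents answering 'purchase' are routed to the follow-up
    question naming the substitute product/service/supplier.
    """
    if top_level not in TOP_LEVEL:
        raise ValueError(f"unexpected top-level answer: {top_level!r}")
    if top_level == "purchase":
        if not substitute:
            raise ValueError("'purchase' requires a follow-up substitute")
        return substitute
    if substitute is not None:
        raise ValueError("follow-up is asked only of those who would purchase")
    return top_level


print(diversion_outcome("purchase", "Supplier B"))  # Supplier B
print(diversion_outcome("not purchase"))           # not purchase
```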

When framing the follow-up question, consideration should be given to allowing a spontaneous (unprompted) answer, which avoids the risk that a non-exhaustive showcard or read-out list of options introduces bias (although there is no hard-and-fast rule here, and prompted lists are usually needed in online surveys). However, care is required with this approach: it requires that no particular products/services/suppliers (or suppliers’ outlets or websites) have been mentioned in prompted questions earlier in the survey, and interviewers must probe carefully to identify the correct product/service/supplier (or supplier’s outlet or website) where they are recording responses against a pre-code rather than capturing verbatim what the respondent says.

The interviewer can be given lists and maps to help validate responses to unprompted questions. However, it is important to brief interviewers not to show these materials to the respondent when first asking the question. Experience suggests that many respondents struggle to read maps, so while maps may be a useful aid for the interviewer, interviews should not depend on them.

Where it might be difficult to collect sufficiently accurate details of the substitute that respondents have in mind, it may be better to prompt the customer with a showcard or read-out list. This style of question is more appropriate when there is a limited number of alternatives in the market, or where precise outlet location details are required. If a short prompted list is used, the order in which alternatives are listed can be randomised. Otherwise, the list should be ordered systematically, for example alphabetically by brand/supplier fascia or name (and location, if applicable), or by product/service name, to avoid introducing order-effect bias (and, if appropriate, the alphabetical start point could be rotated between interviews).
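
Both ordering options can be generated per interview by the interviewing script. A minimal Python sketch; the function name and outlet names are hypothetical, and using the interview number to set the rotated start point is an assumption for illustration.

```python
import random
from typing import Optional


def prompted_list(options: list[str], interview_number: int,
                  randomise: bool, rng: Optional[random.Random] = None) -> list[str]:
    """Order a prompted list for one interview.

    Short lists are shuffled; otherwise options are sorted alphabetically
    (case-insensitively) and the start point is rotated by interview number.
    The input list is never modified.
    """
    if not options:
        return []
    if randomise:
        shuffled = list(options)
        (rng or random.Random()).shuffle(shuffled)
        return shuffled
    ordered = sorted(options, key=str.casefold)
    start = interview_number % len(ordered)
    return ordered[start:] + ordered[:start]


outlets = ["Outlet D", "Outlet A", "Outlet C", "Outlet B"]
print(prompted_list(outlets, 0, randomise=False))
# ['Outlet A', 'Outlet B', 'Outlet C', 'Outlet D']
print(prompted_list(outlets, 1, randomise=False))
# ['Outlet B', 'Outlet C', 'Outlet D', 'Outlet A']
```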

In some merger cases, attempts have been made to investigate the effect of hypothetical non-price changes such as reductions in quality. This is a difficult (but not impossible) survey task. The challenge is to find a quality measure where a hypothetical deterioration has a precise meaning to all customers. Harder measures such as ‘waiting times for hospital appointments’ are better in this context than softer measures like ‘friendliness of staff’. As with price diversion, any question asking for a response to a hypothetical deterioration should be based on the actual product or service delivered on the last or most typical purchase occasion.

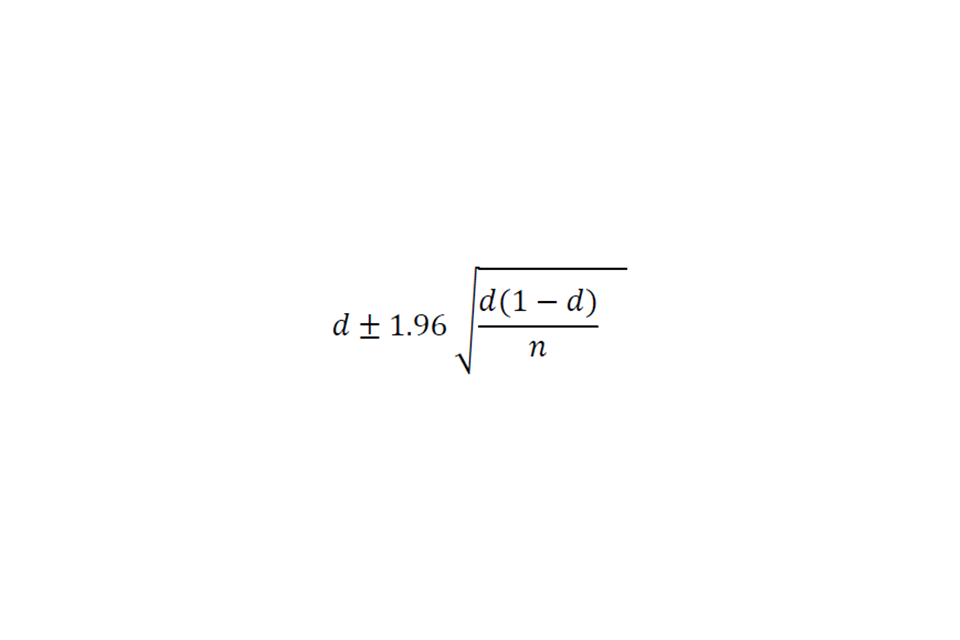

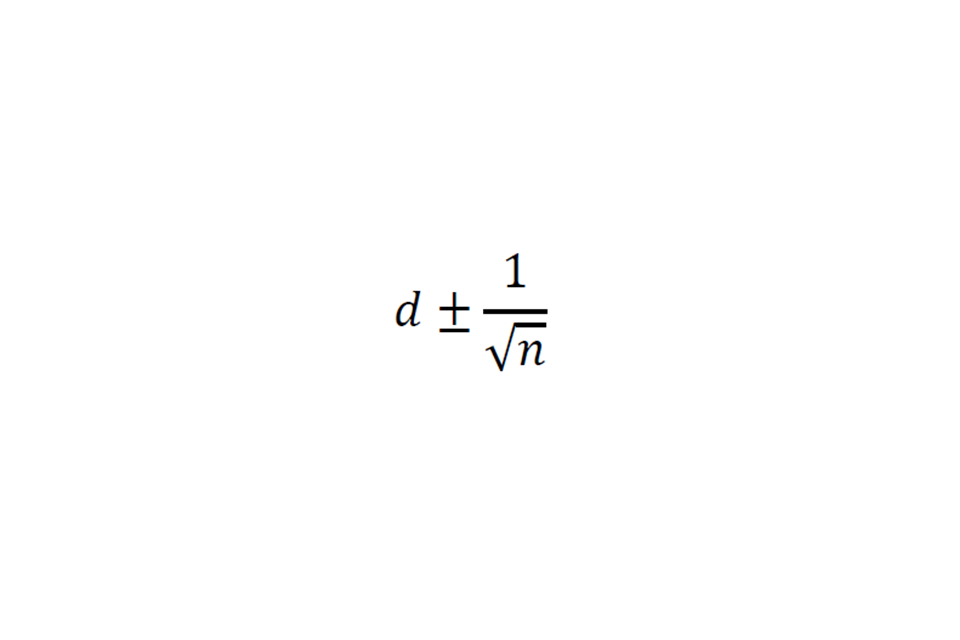

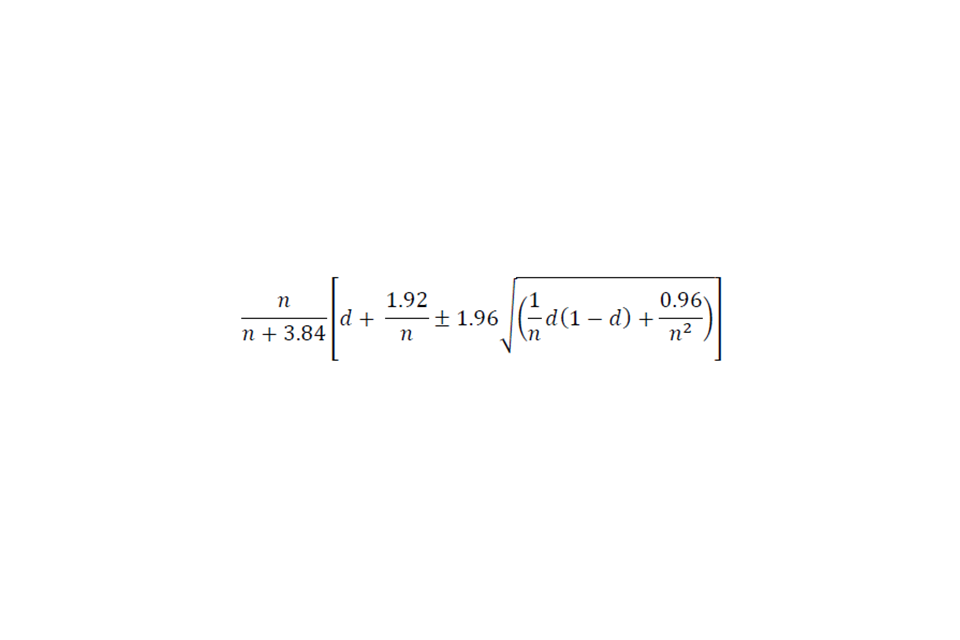

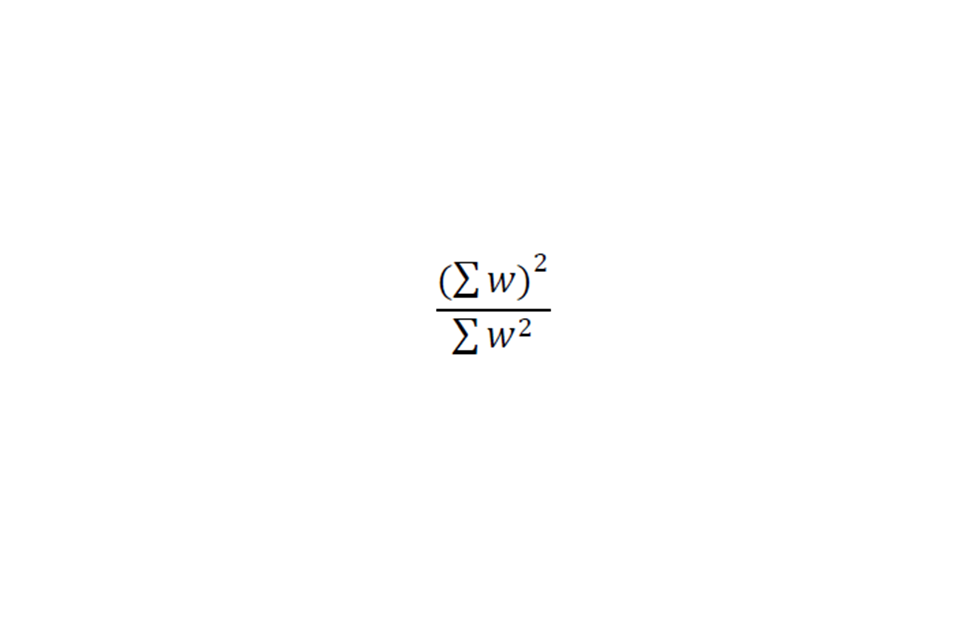

Diversion questions need to be worded in such a way that the customer puts themselves in the mindset of the original purchase decision. This purchase may have been planned or on impulse and it is sometimes appropriate to have different question wording to cover each of these situations.