Use of Multi-Criteria Decision Analysis in options appraisal of economic cases

Updated 16 May 2024

A step-by-step guide for analysts and policymakers to be read as a supplement to the Green Book (Chapter 4: Generating options and longlist appraisal)[footnote 1]

This note provides a brief guide for analysts and policymakers who wish to apply Multi-Criteria Decision Analysis (MCDA) in policy development as per the Green Book guidelines. It discusses when it is appropriate to use MCDA in options appraisal, the general approach to follow, key points to consider, and common pitfalls to be aware of.

However, it is not intended to be a detailed practitioner guide for MCDA, so it describes only a ‘light touch’ version of the technique for illustrative purposes. A more general practitioners’ guide[footnote 2] discusses the full approach in more detail and outlines the conditions in which it would be appropriate to use, such as at pre-appraisal stage when there is a need to understand which factors influence benefit before specific options have been identified[footnote 3].

1. What is MCDA?

MCDA is an analytic method used to select from or rank a set of choices where these can be assessed against delivery on a range of criteria or performance objectives – such as those to be found during the policy appraisal process.

MCDA provides a clear rational structure for these decisions to be taken - most importantly it allows for detailed sensitivity analysis of how option preferences can be affected, not just by changes in the relative importance of one criterion over another but also by how significant the difference is between the best and worst performing choices in each criterion.

This process is referred to as ‘swing weighting’. It is the critical element that allows trade-off in performance between options to be fully explored with a group of subject matter experts and decision makers, illustrating how robust the final selection would be under different scenarios.

2. When can MCDA be used?

MCDA is a technique that helps decision-makers make rational choices between alternative options when these are required to achieve multiple specific objectives. It is particularly effective when there is a mix of qualitative and quantitative criteria that are not directly comparable against one another and when the perspectives of multiple stakeholders may need to be considered.

For example, if looking to rent an apartment one may use several criteria to assess which is the best option, some of which will be quantitative (price, size, energy costs etc) and others qualitative or judgemental (such as layout, décor, convenience for amenities and so on). An MCDA process would allow option performance against these different criteria to be compared against a common scale to make transparent what the trade-offs would be in selecting one choice over another.

3. What is the Green Book advice on use of MCDA?

The Green Book recommends using MCDA to support identification and ranking of choices during the longlist appraisal stage when the standard process is insufficient to construct a preferred way forward to take to shortlist stage. By including the findings from the MCDA exercise in the final business case, analysts would then have a transparent record of the evidence and assumptions used to derive the shortlist, and reviewers would be assured that all options have been properly and fairly considered against policy objectives.

However, analysts should be aware that this method can be time and resource intensive so should only be used when specifically required, in a proportionate way and with the assistance of facilitators who have professional Operational Research MCDA competence and who are also trained and accredited in HM Treasury methodology. It is not to be applied to analyse options at the shortlist stage itself – instead either cost-benefit or cost-effectiveness analysis must be used at this point.

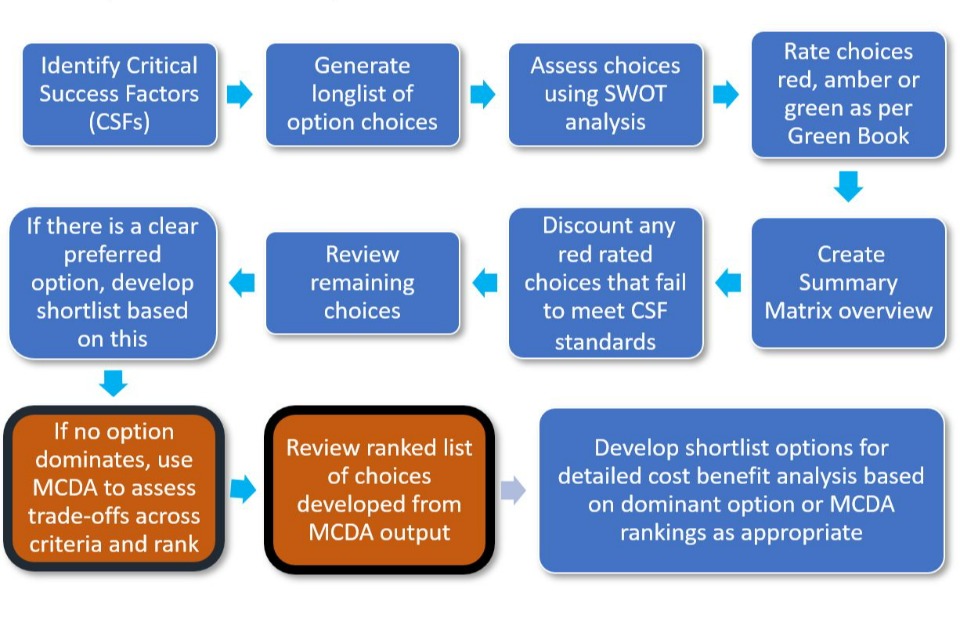

HMT Green Book guidance on development of an economic case advises analysts to firstly construct a wide range of component choices as building blocks from which a shortlist of complete alternative options can be constructed to achieve a policy goal. These choices are reviewed using an options framework filter against defined critical success factors (CSFs) with performance being rated on a Red-Amber-Green (RAG) scale.

Using the filter, any red-rated option choices will by definition fail to meet the minimum threshold for performance so would be dismissed from further consideration. From the remaining choices it may be clear there is a set that dominates such that it is the only combination to be green-rated across all CSFs – and this would be identified as the preferred way forward for the shortlist. Other option combinations might also be viable (but inferior) and also taken forward to the shortlist stage, if appropriate.
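The filtering logic described above can be sketched in a few lines of Python. This is a minimal illustration only and not part of the Green Book process itself: the choice names, CSF names and ratings are hypothetical, as are the function names.

```python
# Hypothetical RAG ratings for three option choices against three CSFs.
# Names and ratings are invented purely for illustration.
ratings = {
    "Choice 1": {"CSF 1": "green", "CSF 2": "green", "CSF 3": "green"},
    "Choice 2": {"CSF 1": "amber", "CSF 2": "green", "CSF 3": "green"},
    "Choice 3": {"CSF 1": "green", "CSF 2": "red", "CSF 3": "amber"},
}


def filter_choices(ratings):
    """Drop any choice rated red against at least one CSF."""
    return {
        name: by_csf
        for name, by_csf in ratings.items()
        if "red" not in by_csf.values()
    }


def all_green(ratings):
    """Return the choices rated green against every CSF."""
    return [
        name
        for name, by_csf in ratings.items()
        if all(rating == "green" for rating in by_csf.values())
    ]


remaining = filter_choices(ratings)
print(sorted(remaining))     # choices carried forward after the filter
print(all_green(remaining))  # candidates for the preferred way forward
```

If exactly one choice in each category comes through as green across all CSFs, the preferred way forward is likely to be clear. Where several amber and green choices remain with no obvious winner, that is the situation in which MCDA may be appropriate.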

But if there were complex technical trade-offs, particularly concerning service scope and service solution, then it would be appropriate to use MCDA to support identification and preference ranking of choices, but not to produce complete solutions directly[footnote 4].

Flowchart of Green Book Appraisal Process

1. Identify critical success factors (CSFs)
2. Generate longlist of option choices
3. Assess choices using SWOT analysis
4. Rate choices red, amber or green as per Green Book
5. Create Summary Matrix overview
6. Discount any red rated choices that fail to meet CSF standards
7. If there is a clear preferred option, develop shortlist based on this
8. If no option dominates, use MCDA to assess trade-offs across criteria and rank
9. Review ranked list of choices developed from MCDA output
10. Develop shortlist options for detailed cost-benefit analysis based on dominant option or MCDA rankings as appropriate

Notes: MCDA should not be confused with simple scoring and weighting as that lacks the capability to fully examine the trade-offs in performance as described above and is NOT compliant with the latest Green Book advice due to its “lack of transparency and objectivity” (see Green Book para. 4.44, page 37)

As per the Green Book advice, MCDA should not be used as a substitute for cost-benefit analysis when appraising options at the shortlist stage. It is only to be used for longlist appraisal.

As per any other evidence-based analysis, the MCDA process must follow the Aqua Book guidance.

4. Steps for undertaking a robust and valid MCDA analysis

4.1 Step 1 – Identify relevant criteria to underpin the CSFs

The first step is to identify a set of performance criteria against which each choice can be compared – these should be derived from the existing set of critical success factors already developed for the options framework longlist stage in consultation with experts and key stakeholders (as explained in the Green Book, page 31 onwards).

There should be sufficient criteria developed to cover all key performance aspects within each individual CSF; that is, there can be more than one per CSF, if necessary, to fully reflect the extent to which options differ from each other in ways that matter to the stakeholders. But note the more criteria are added, the greater the effort that will be required to complete the process.

Criteria should be measurable, in either qualitative or quantitative terms, so that the comparative performance of options against them can be assessed. The form of measurement can be tailored to whatever is most appropriate to the specific circumstances – for example:

- quantifiable using a natural scale of measurement (such as average fuel consumption figures)

- measurable against a defined set of levels on a bespoke constructed scale (Technology Readiness Levels or NCAP crash safety standards for instance)

- direct rating, where there is no defined constructed scale to help with scoring and options are instead compared directly (using pairwise comparisons, for instance)

Criteria should be mutually independent, without a causal relationship that makes performance against one criterion directly influence performance on another. Such a relationship will typically show up as correlation in the scores. However, since correlation does not imply causation, correlated criteria should not be eliminated unless a causal link is clear.

In more complex exercises it is possible that these inter-relationships will only emerge from discussion with stakeholders; especially if an attempt to derive scores against one category receives a response that “it depends on” another.
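As a rough aid to the independence check described above, the sketch below flags pairs of criteria whose scores are strongly correlated. It is an illustration under stated assumptions: the scores are hypothetical, the 0.8 threshold is arbitrary, and the function names are invented. With only a handful of options any correlation is weak evidence, so a flagged pair is a prompt for discussion with stakeholders and not a reason to drop a criterion.

```python
from itertools import combinations
from math import sqrt

# Hypothetical 0-100 scores, one value per option, for three criteria.
scores = {
    "Criterion X": [100, 0, 50, 70],
    "Criterion Y": [100, 0, 40, 80],
    "Criterion Z": [50, 100, 0, 50],
}


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length score lists.

    Assumes each list contains at least two distinct values, which holds
    when the best option scores 100 and the worst scores 0.
    """
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def flag_correlated(scores, threshold=0.8):
    """List criterion pairs whose absolute correlation meets the threshold."""
    flagged = []
    for a, b in combinations(scores, 2):
        r = pearson(scores[a], scores[b])
        if abs(r) >= threshold:
            flagged.append((a, b, round(r, 2)))
    return flagged


for pair in flag_correlated(scores):
    print(pair)  # pairs to raise with stakeholders, not to drop automatically
```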

Particular care should be given to phrasing the definitions of qualitative criteria in such a way that makes it easier to consider the relative merits of each option against them – for example “The extent to which the option unleashes innovation in the UK economy in the next X years…”

The key question that should be borne in mind when defining all these criteria is how they demonstrate the extent to which the available choices differ from each other in ways that matter to you and other stakeholders, and the extent to which each option does something useful.

Note: It is essential to validate the developed set of criteria in consultation with experts and stakeholders before proceeding to the next phase of the MCDA process. Without this assurance it will not be possible to have the necessary confidence in the validity of the final outputs for the shortlist stage. It may take more than one pass or iteration at this stage to achieve this.

Step 1 example:

Policy options to boost innovation in high-end manufacturing are being considered using the options framework filter as per Green Book advice. No combination of option choices dominates against the CSFs, so an MCDA exercise is set up. For each CSF at least one criterion is required, against which choices can be compared on performance.

Criteria are developed in close consultation with stakeholders and include the extent of R&D benefit that would accrue, the impact on long term jobs, level of protection of export markets and so on.

All key performance metrics are covered and the criteria are mutually independent, so performance on one criterion does not depend on that for another.

4.2 Step 2: Assess the performance of options against the criteria

The next step is to invite key stakeholders and policy experts to assess the performance of each option choice with respect to each of the defined qualitative criteria. This will usually be done in a workshop setting, although to save time the initial scores can be returned in a write round exercise so long as all concerned have a clear understanding of requirements. Either approach requires proportionate support from an experienced MCDA practitioner – this may include advising on the approach to the workshop or help in facilitating the process depending on the scale of the exercise (contact your local Operational Research analyst or HoP for initial advice).

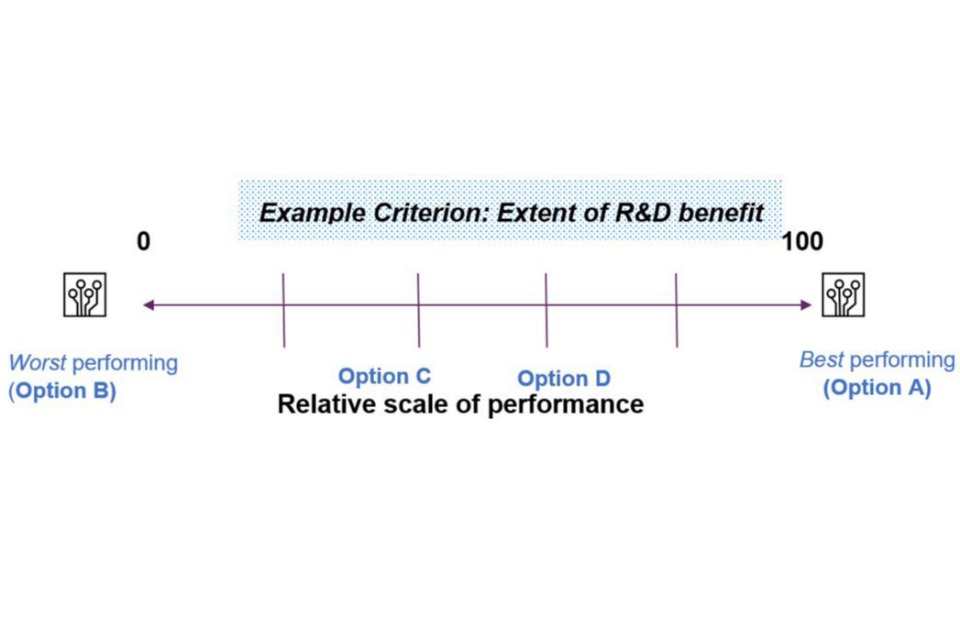

Although there are some differences in approach at this stage of an MCDA, usually comparing options on qualitative criteria requires a scale to be developed that encompasses the best performing or most preferred, and worst performing or least preferred option in each instance. These become the reference points against which all the remaining options are compared e.g. a third option could be judged to be halfway in performance or preference between best and worst. Either at this point or later, these preferences can be translated into relative scores, with the best allocated an arbitrary score of 100 and the worst a score of 0, while other options fall in-between.

Where option performance or desirability against a criterion can be expressed in quantitative terms then a similar scale can be applied using the same principles but based on the best available estimates of delivery for each. Again, these scores can be rescaled linearly without the need for additional stakeholder input so the option with the best performance/desirability is assigned a score of 100 and the worst a score of 0. Such measures may be either continuous (in terms of capacity, outputs etc) or be on a discrete scale (Technology Readiness Levels for example).

Notes: Individual qualitative scores returned in a write round exercise should be checked for obvious errors before proceeding. Similarly, data sources used for quantitative criteria need to be validated for consistency across different options.

In the ‘light touch’ MCDA approach we assume a straightforward linear relationship when scoring quantitative performance against desirability. More sophisticated value functions would be developed in the full approach to take account of issues such as (for example) diminishing returns.
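The linear rescaling used in the ‘light touch’ approach can be sketched as follows. This is a minimal example assuming a straightforward linear value function as described above. The cost figures, the function name and the `higher_is_better` parameter are hypothetical.

```python
def rescale_linear(values, higher_is_better=True):
    """Map raw estimates onto a 0-100 scale: best option 100, worst option 0."""
    lo, hi = min(values.values()), max(values.values())
    if hi == lo:
        # No swing at all: this criterion cannot differentiate the options.
        raise ValueError("all options perform identically on this criterion")
    scaled = {}
    for option, raw in values.items():
        share = (raw - lo) / (hi - lo)
        if not higher_is_better:
            share = 1 - share  # e.g. costs, where a lower figure is preferred
        scaled[option] = round(100 * share, 1)
    return scaled


# Hypothetical annual running costs (lower is better).
costs = {"Option A": 120, "Option B": 200, "Option C": 168, "Option D": 152}
print(rescale_linear(costs, higher_is_better=False))
```

In the full approach a non-linear value function (for instance one reflecting diminishing returns) would replace the linear `share` calculation.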

Step 2 example:

Say Option ‘A’ is judged the most effective on a given criterion and is scored at 100, while Option ‘B’ is judged the least effective and is scored at 0. The remaining Options ‘C’ and ‘D’ are scored in comparison with these reference points based on how well (or badly) they achieve the same goal. The process is then repeated for any further options on that criterion, and then for each of the other criteria.

4.3 Step 3: Consolidate and summarise scores for each option and sense check results with stakeholders

At this point scores must be reviewed in a moderated workshop called a Decision Conference facilitated by an experienced MCDA practitioner. This gives an opportunity for the stakeholder group to discuss and reach a consensus on individual scores - in particular, by focusing on any obvious outliers or disagreements, resolving these wherever possible and so allowing a shared understanding of the merits of each option among the group.

If disagreements cannot be easily resolved, then the decision conference should record the reasons why and test out the practical effects of such differences later during sensitivity analysis of results.

Scores can then be consolidated and summarised for presentation and reflection back to the group using available proprietary software or bespoke spreadsheets as appropriate.

Once the set of scores is complete it may be possible at this point to disregard or dismiss one or more options from shortlist consideration based on the principle of Dominance. For example, an option that is considered the least effective across every individual criterion would always come last in preference ranking no matter what further analysis was carried out. Therefore, it can be dropped from further consideration.
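A dominance check of this kind can be automated. The sketch below uses a slightly more general pairwise test than the one described above, which is an assumption on our part: an option is flagged if some other option scores at least as well on every criterion and strictly better on at least one. Such an option can never outrank the option that dominates it, whatever non-negative weights are later applied. The scores are illustrative and match the Step 4 example table. The function names are hypothetical.

```python
# Illustrative 0-100 scores per option, in criterion order 1 to 4.
scores = {
    "Option A": [100, 75, 75, 100],
    "Option B": [0, 20, 0, 0],
    "Option C": [80, 100, 40, 30],
    "Option D": [60, 0, 100, 50],
}


def dominates(a, b):
    """True if a scores at least as well as b everywhere and better somewhere."""
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))


def dominated_options(scores):
    """Map each dominated option to the list of options that dominate it."""
    result = {}
    for name, row in scores.items():
        better = [
            other
            for other, other_row in scores.items()
            if other != name and dominates(other_row, row)
        ]
        if better:
            result[name] = better
    return result


print(dominated_options(scores))
```

Here Option B is flagged as dominated by both Option A and Option C, even though it is not the lowest scorer on Criterion 2.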

Note: At least a half day slot should be earmarked for anything beyond the simplest Decision Conference exercise.

Step 3 example:

| List of options | Criterion 1 | Criterion 2 | Criterion 3 | Criterion 4 |
|---|---|---|---|---|
| Option A | 100 | 75 | 75 | 100 |
| Option B | 0 | 20 | 0 | 0 |
| Option C | 80 | 100 | 40 | 30 |
| Option D | 60 | 0 | 100 | 50 |

Here is an example of a completed set of scores for a set of options against defined criteria. These would be collected from individual stakeholders then consolidated in an Excel spreadsheet or bespoke software.

4.4 Step 4: Derive a ranked list of options by assigning relative weights to the criteria

Given the full set of scores collated in Step 3, it should now be possible to compare results and generate a ranked shortlist of options measured by overall benefit or performance against the criteria and CSFs. However, the individual performance/desirability scales for each criterion cannot be directly combined because a unit on one scale does not necessarily equal a unit on another. So instead, MCDA uses a process known as ‘swing weighting’ to derive weights for the criteria, taking account of:

- the relative difference between the best and worst performing options on each criterion; and

- how important stakeholders consider that difference to be in relation to the desired outcome

As a result, the weight of a criterion reflects not just the range of difference in the options (the size of the ‘swing’ between the best and worst performers) but how much that difference matters in practice to the stakeholders in terms of delivery.

It is important to stress that weights for criteria must not be derived from their relative importance alone (or simple weighting). For example, if an MCDA exercise was conducted on purchasing a family car, vehicle safety might be a key criterion to include in the overall assessment. In isolation it would rightly be considered a highly important factor and assigned a significant weight. But if the analysis showed that all the cars being reviewed scored about the same in safety terms – so the ‘swing’ between best and worst performers was negligible – then this criterion becomes much less important to the decision makers in differentiating between the choices available and therefore the applied weight for this should be set much lower than for other factors.

There are various techniques that can be used in a Decision Conference to derive the ‘swing weights’ for criteria. Most commonly, participants will be asked to first identify which criterion has the biggest, most significant swing in performance. This is given an arbitrary weight of 100, setting a standard against which all the remaining criteria are compared, usually in a pairwise approach. The ‘swing’ of each remaining criterion would be reviewed in turn compared to the standard and weighted accordingly – for example if a criterion were judged to have half the degree of ‘swing’ in performance or desirability as the standard, it would be assigned a weight of 50.

The process continues until the workshop has weighted all criteria. As with option scoring, the facilitator will seek a consensus from the stakeholders on the criteria weights, resolving differences where possible with any that remain noted for further review during sensitivity analysis.
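Once raw swing weights have been agreed, they are often normalised so that they sum to 100%. The text does not prescribe this step, so the sketch below is an assumption about one common convention. The raw judgements are hypothetical, chosen so that the result matches the percentage weights used in the Step 4 example (25, 50, 10 and 15).

```python
def normalise_weights(raw_swing_weights):
    """Convert raw swing weights (largest swing = 100) into percentages."""
    total = sum(raw_swing_weights.values())
    return {
        criterion: 100 * weight / total
        for criterion, weight in raw_swing_weights.items()
    }


# Hypothetical workshop judgements: Criterion 2 has the most significant
# swing and is set at 100; the other criteria are weighted relative to it.
raw = {
    "Criterion 1": 50,
    "Criterion 2": 100,
    "Criterion 3": 20,
    "Criterion 4": 30,
}
print(normalise_weights(raw))
```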

Once validated across all criteria, these weights can now be multiplied by the option scores to produce a performance matrix and overall weighted score for each option which can translate directly into a preference list. The top-level ordering of options is provided by the weighted average of all the performance scores. These total scores give an indication of how much better one option is than another – hence the highest scoring option would be considered the preferred way forward.

Note: Before proceeding to the next step, there should be a ‘sense check’ that the general order of options presented, including the preferred way forward, is in line with the expectations of the group given the scores they awarded. The facilitator will explain the contribution each criterion has made to the final weighted score for each option (these can usually be illustrated automatically via the software used to drive the exercise).

Step 4 example: (for illustrative purposes only)

| List of options | Criterion 1 | Criterion 2 | Criterion 3 | Criterion 4 | Total score | Ranking |
|---|---|---|---|---|---|---|
| Option A | 100 | 75 | 75 | 100 | 85 | 1st |
| Option B | 0 | 20 | 0 | 0 | 10 | 4th |
| Option C | 80 | 100 | 40 | 30 | 78.5 | 2nd |
| Option D | 60 | 0 | 100 | 50 | 32.5 | 3rd |
| Weights % | 25 | 50 | 10 | 15 | | |

This is an example of how weights across criteria can be applied to produce a final set of scores for each option and hence a relative ranking of preference. In this case the scores for Criterion 2 had been judged to have the most significant swing in performance from best to worst option; followed by Criterion 1 being half as important, while 3 and 4 are some way behind. By applying the percentage weights to the consolidated scores and summing, a total weighted score for each option is produced. This allows the options to be ranked.

Here Option A is ranked 1st after all the scores have been calculated.
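The arithmetic behind the table can be reproduced with a short script. This is a sketch of the weighted-sum calculation only, using the scores and percentage weights from the example. The function names are hypothetical and a real exercise would normally rely on the MCDA software or spreadsheet used in the Decision Conference.

```python
# Scores per option in criterion order 1 to 4, as in the example table.
scores = {
    "Option A": [100, 75, 75, 100],
    "Option B": [0, 20, 0, 0],
    "Option C": [80, 100, 40, 30],
    "Option D": [60, 0, 100, 50],
}
weights_pct = [25, 50, 10, 15]  # percentage weights, summing to 100


def weighted_totals(scores, weights_pct):
    """Total score per option: sum of score x weight, with weights in percent."""
    return {
        option: sum(s * w for s, w in zip(row, weights_pct)) / 100
        for option, row in scores.items()
    }


def rank(totals):
    """Options ordered from highest to lowest total score."""
    return sorted(totals, key=totals.get, reverse=True)


totals = weighted_totals(scores, weights_pct)
print(totals)
print(rank(totals))
```

For Option C, for instance, the total is (80 × 25 + 100 × 50 + 40 × 10 + 30 × 15) / 100 = 78.5.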

4.5 Step 5: Conduct sensitivity analysis on initial results

The final step of the main MCDA process involves a sensitivity analysis to understand how the ordering of the options examined may change under different scoring or weighting assumptions. This will demonstrate to the group how robust the overall ranking is and provides an opportunity to test out any specific areas of disagreement previously raised and the extent to which these have a practical effect on the results.

If the group is satisfied at this point, then the process can conclude. A summary of the outcome can be included in the main business case with full details included as an annex.

If results are inconclusive then it may be necessary to conduct further workshops either formally or informally.

Note: The focus here should be on stress testing the extent to which the top ranked option or options remain the same under different scenarios. If the results are stable with one option tending to dominate, then this gives confidence in choosing which to take as the preferred way forward.

Step 5 example (for illustrative purposes only)

| List of options | Criterion 1 | Criterion 2 | Criterion 3 | Criterion 4 | Revised score | Original Ranking | Revised Ranking |
|---|---|---|---|---|---|---|---|
| Option A | 100 | 75 | 75 | 100 | 82.5 | [1st] | 2nd |
| Option B | 0 | 20 | 0 | 0 | 12 | 4th | 4th |
| Option C | 80 | 100 | 40 | 30 | 83 | 2nd | [1st] |
| Option D | 60 | 0 | 100 | 50 | 27 | 3rd | 3rd |
| Original Weights % | 25 | 50 | 10 | 15 | | | |
| Revised Weights % | 20 | 60 | 10 | 10 | | | |

In this example the revision in weighting leads to a preference switch between options A and C (although the difference in scores is marginal). Further stress testing can be carried out to fully explore the impact of other changes in weighting assumptions with the group.
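A simple way to carry out this kind of stress test is to recompute the ranking under alternative weight sets. The sketch below does this for the original and revised weights in the example, then sweeps the Criterion 2 weight across a range. The sweep scales the other weights proportionally, which is an assumption made for illustration and is not how the revised weights in the table were derived. Function names are hypothetical.

```python
# Scores per option in criterion order 1 to 4, as in the example table.
scores = {
    "Option A": [100, 75, 75, 100],
    "Option B": [0, 20, 0, 0],
    "Option C": [80, 100, 40, 30],
    "Option D": [60, 0, 100, 50],
}
original = [25, 50, 10, 15]
revised = [20, 60, 10, 10]


def ranking(weights_pct):
    """Return (options ordered best first, total score per option)."""
    totals = {
        option: sum(s * w for s, w in zip(row, weights_pct)) / 100
        for option, row in scores.items()
    }
    return sorted(totals, key=totals.get, reverse=True), totals


def sweep_criterion_2(base, steps=range(40, 81, 5)):
    """Top-ranked option as the Criterion 2 weight varies.

    The remaining weights keep their relative proportions (an assumption).
    """
    others = sum(base) - base[1]
    top = {}
    for w2 in steps:
        scaled = [w * (100 - w2) / others for w in base]
        scaled[1] = w2
        top[w2] = ranking(scaled)[0][0]
    return top


for label, weights in (("original", original), ("revised", revised)):
    order, totals = ranking(weights)
    print(label, order, totals)

print(sweep_criterion_2(original))
```

Under these assumptions the preference switches from Option A to Option C once the weight on Criterion 2 rises somewhat above 60%, which indicates how sensitive the top ranking is to that one judgement.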

Overall, it appears that Option A performs slightly better than Option C, while Options B and D lag well behind. But it is notable that Option C performs best on Criterion 2, which the stakeholder group weighted as having the most important or significant ‘swing’ from best to worst. It may be necessary for further stakeholder discussions to decide which of A and C to take forward to the shortlist (if not both) dependent on what trade-offs are considered most important to them.

But most importantly there is now clear evidence to draw on when presenting the final decision in the business case for review.

[footnote 1] See The Green Book: appraisal and evaluation in central government.

[footnote 2] See: An Introductory Guide to Multi-Criteria Decision Analysis (MCDA).

[footnote 3] Other conditions include where multiple (say 10 or more) factors have been identified; a hierarchy of relevant criteria and sub-criteria can be constructed; or where relative preference for criteria does not track linearly with performance (diminishing returns for instance).

[footnote 4] These are produced in a workshop by selecting alternative combinations of choices using the process specified by the Green Book as above. For more information on development of the longlist and use of CSFs, please consult paragraphs 4.27 onwards of the Green Book.