FCDO annual evaluation report 2021 to 2022

Published 6 October 2022

1. Introduction

We know how well things work because of evidence. Evaluation is the specific evidence that tells us what works, how and why, so as to maximise the value of taxpayers’ money and better achieve our objectives. It identifies good practice, improves our performance, and helps to target resources on activities that will have the most impact on the ground [footnote 1].

This Annual Report presents an overview of evaluation in the Foreign, Commonwealth and Development Office (FCDO) from April 2021 to March 2022. Over this period, we developed an Evaluation Policy setting out principles and standards for evaluation, and an Evaluation Strategy which sets out key outcomes to advance and strengthen the practice, quality and use of evaluation.

In this report, we outline the work of the FCDO Evaluation Unit (section 2), including our work on central thematic evaluations, evidence-informed influencing, and our support to the Centre of Excellence for Development Impact and Learning (CEDIL). In section 3, we provide an overview of evaluation across the business during this period and our ongoing efforts to ensure quality and rigour.

2. Central evaluation service

The Evaluation Unit works to support evaluation across the FCDO. It is part of the Economics and Evaluation Directorate in FCDO, led by the Chief Economist. As of March 2022, the unit comprised 7 Evaluation Advisors, 1 Data and Insights lead and 3 programme managers. Its focus is to deliver the Evaluation Strategy by ensuring that:

- Strategic evaluation evidence is produced and used in strategy, policy and programming

- Evaluation evidence is systematic and objective

- Learning from evaluations is shared and used in decision-making

- FCDO has an evaluative culture, the right evaluation expertise and capability

2.1 Thematic evaluations

One key route to deliver the first outcome of the strategy is through commissioning and delivering thematic and portfolio evaluations. These evaluations draw evidence from across several projects, programmes or activities to answer strategic and cross-cutting questions which will inform policymaking. In 2021 to 2022, the Evaluation Unit commissioned 2 thematic evaluations:

Climate smart agriculture

The Evaluation Unit commissioned a synthesis of 13 evaluations of FCDO Climate Smart Agriculture (CSA) programmes to learn how we can better reduce smallholder farmers’ vulnerability to climate variability and shocks. The review found that CSA interventions were more likely to be successful: (a) if they resulted in improvements in profit and productivity for farmers; (b) if they involved government and private sector stakeholders alongside farmers; or (c) if they were supported by temporary subsidies and/or long-term private sector investment to ensure synergies between production, adaptation, and mitigation. Future CSA programmes could enhance their impact through using carbon finance, such as climate or environmental service credits. Lastly, the report highlights the importance of beneficiary engagement embedded into robust monitoring and learning approaches, and the need for evaluation to improve the evidence base on approaches to building resilience and sustainability of adoption. Read the report here.

Nutrition and food security data in complex operating environments

FCDO and other international donors are investing in the development of new tools and approaches to collect and analyse data to assess humanitarian support needs. These tools need to address the political, technical, and operational barriers that limit the collection, analysis and sharing of timely, complete information on affected populations as a humanitarian crisis evolves. During this review period, the Evaluation Unit, in collaboration with the Humanitarian Policy and Protracted Crisis Policy Group (HPPCP), commissioned a thematic evaluation to synthesise existing evidence on best practice across the humanitarian sector, and to evaluate data collection and analysis approaches in six case study countries. We expect to publish the evaluation in autumn 2022.

2.2 Evidence-based influencing

Building on existing expertise and capabilities, this workstream supports the development of FCDO influencing. There are four strands of work within this area: clarifying and communicating the definitions of influencing and what we need to measure; developing staff capabilities on influencing; measuring influencing results and impact; and using the evidence base to better influence strategy, policy and programming in the short and long term. The Evaluation Unit convenes an FCDO Measuring Influencing Working Group and an HMG Influencing Working Group. Internal guidance on integrating influencing into monitoring and evaluation frameworks has been produced, and wide-ranging technical advice has been provided to staff across the organisation in high priority areas. Further work to understand the evidence base is feeding into training to build staff influencing capabilities, including on measuring and evaluating influencing, for anticipated rollout in the next reporting period.

2.3 Central programmes

The Centre of Excellence for Development Impact and Learning (CEDIL) programme [footnote 2] is developing new rigorous methodologies for hard-to-measure problems. Over this reporting period, the programme has:

- completed an innovative evidence synthesis of how and when “Teaching at the Right Level” is most effective. Under this approach, teaching is targeted at different children in the class depending on their current level of capability, thus ensuring all children in the class have a chance to learn, not just the most able. The research used novel Bayesian methods. It found that the approach was effective when implemented by teachers but three times more effective when implemented by volunteers [footnote 3], and that the degree to which interventions are delivered as planned matters strongly to effectiveness. Neither the country and region, nor the baseline level of learning in the classroom, had much impact on effectiveness, meaning the approach could be applied widely across lower income countries. The research was used to help design an intervention that supported 25,000 children in Africa and South Asia to catch up with learning lost during the COVID-19 pandemic. It also influenced global thinking, with findings reflected in the World Bank’s Global Education Evidence Advisory Panel review of Cost Effective Approaches to Improving Global Learning

- piloted new methods for making evidence more accessible to policy makers and practitioners. For example, CEDIL funded a first-of-its-kind Disability Evidence Portal which has been expanded and scaled up by a philanthropic foundation. The CEDIL-funded Uganda Evidence Gap Map has increased the use of evidence in Uganda, and the approach has been replicated in another country

- produced a working paper and methods guidance surveying evaluation methods for complex interventions, highlighting underutilised approaches with high potential

- shared the learning and innovation from CEDIL worldwide, including through social media, blogs, methods briefs and a virtual conference, whose sessions have had over 500 views [footnote 4]

CEDIL’s website has over 25 papers and 50 seminars available for all to use.

The Evaluation Unit’s programme with the Development Impact Evaluation (DIME) Department at the World Bank, the Fund for Impact Evaluation [footnote 5], came to an end this year, though the relationship between DIME and FCDO continues. The programme was successful at developing rigorous impact evaluations in underserved areas of international development – such as migration, livelihoods in conflict, or refugees’ economic development – and improving their use by donors, government and practitioners. As well as continuing to support 162 impact evaluations to their completion across six regions, the programme pivoted to provide extra support to FCDO programmes during the pandemic. This included adapting phone-based surveys to include questions about the impact of COVID-19 in the Democratic Republic of Congo and supporting evidence generation for behaviour change interventions in Tanzania. DIME also pioneered research on social cohesion through social media monitoring.

3. Evaluation across the organisation

3.1 FCDO evaluation in numbers

Twenty-four evaluations were reported as completed or had products published in 2021 to 2022 [footnote 6], of which nearly all were FCDO led.

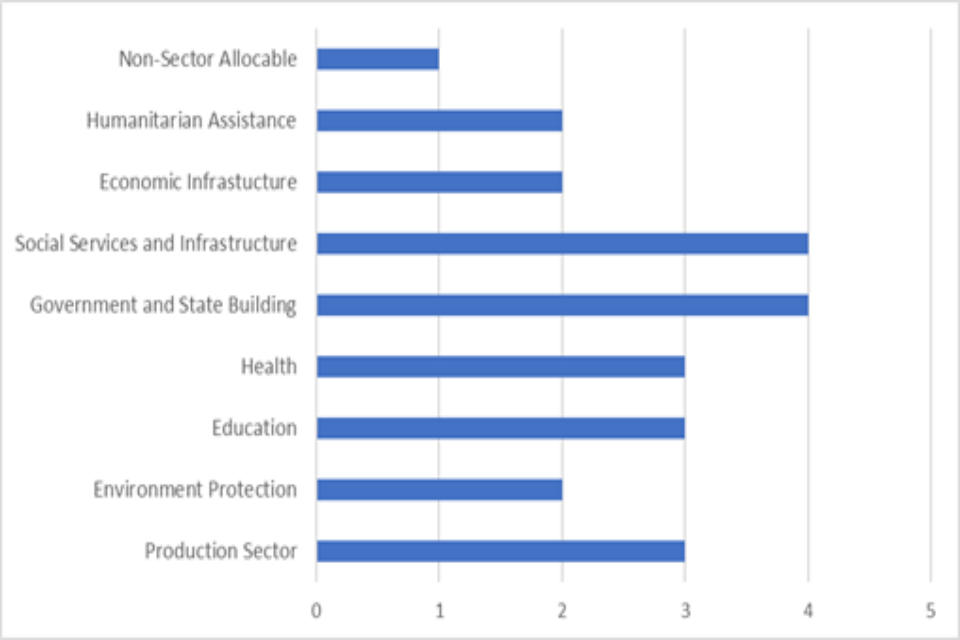

There were 4 evaluations published in each of the broad sectors ‘Government and State Building’ and ‘Social Services and Infrastructure’ [footnote 7]. Health, Education and the Production Sector each published 3.

Figure 1: Number of evaluations by sector

As illustrated in Figure 2, evaluations were spread across both Africa and Asia, with a particular concentration in Africa. Individual countries included: Armenia, Bangladesh, Benin, Cameroon, Côte d’Ivoire, Democratic Republic of the Congo, Ethiopia, Gabon, Ghana, Indonesia, Kenya, Liberia, Madagascar, Malawi, Mozambique, Pakistan, Rwanda, Sierra Leone, Somalia, Sudan, United Republic of Tanzania, Zimbabwe.

Figure 2: Geographic distribution of evaluations [footnote 8]

3.2 Ensuring quality

FCDO is committed to ensuring rigour and quality in all our evaluations. For evaluations related to former DFID spend, it remained a requirement during the review period for all evaluations to go through our Evaluation Quality Assurance and Learning Service (EQUALS). This service, as well as offering technical assistance, provides independent quality assurance of all evaluation products. Each product is assessed against a template with criteria covering a range of areas such as independence, stakeholder engagement, ethics and safeguarding, as well as methodological rigour.

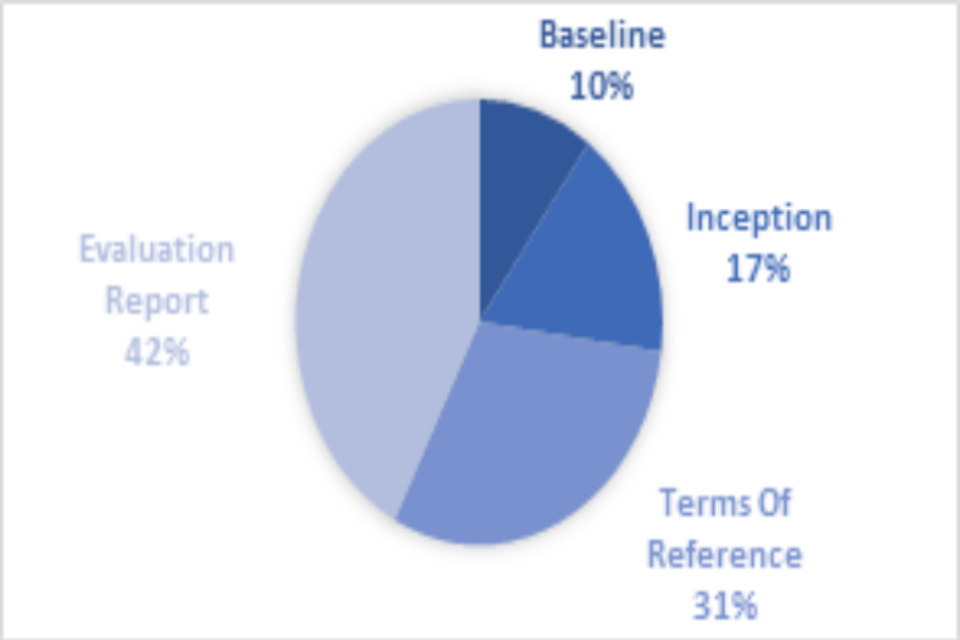

During this review period, a total of 60 requests to quality assure evaluation products [footnote 9] were raised by FCDO, including 7 re-reviews. Figure 3 outlines the type of products that were sent to EQUALS for review. Products are rated excellent, good, fair or unsatisfactory. Products are improved based on qualitative feedback before publication, with those rated unsatisfactory having to undergo re-review following improvements. From April 2021 to March 2022, 83% of products quality assured through EQUALS [footnote 10] met the minimum quality criteria on their first review.

Figure 3: EQUALS Quality Assurance request type as a percentage, April 2021 to March 2022

The service is also open to other ODA spending government departments. In this review period, the service has been utilised by the Department of Health and Social Care and the Department for Business, Energy & Industrial Strategy. Since 2016, other departments including the Department for Environment, Food and Rural Affairs, the Home Office, the Cabinet Office and, pre-merger, the Foreign and Commonwealth Office have also made use of the service.

3.3 Evaluation impact: case studies

Annex 2 provides case study examples of how evaluations have influenced decision making within and beyond the FCDO [footnote 11]. These are:

- Innovative Ventures and Technologies for Development - INVENT Global, India: a theory-based performance evaluation

- Punjab Education Sector Programme II: a performance evaluation that is helping to shape education reform programmes

- Development Impact Bonds: a randomised controlled trial (RCT) and contribution analysis evaluation

- delivering emergency COVID-19 cash transfers in Kenya: an impact and process evaluation being used to inform priority areas of action for future shock responsive systems

4. Looking forward: building an evaluation system for FCDO

Over the coming year, we will focus on the implementation of our 3-year Evaluation Strategy. Each of the 4 outcomes has a workplan with a series of activities and milestones that will enable progress towards meeting the outcomes.

These workplans and milestones will be reviewed annually.

4.1 Strategic evaluation evidence is produced and used in strategy, policy and programming

We seek to ensure relevant, timely, high-quality evaluation evidence is produced and used in areas of strategic importance for FCDO, HMG and international partners. As part of this, we will revisit our approach to assessing evaluation plans in high-value business cases to ensure that plans are proportionate and robust. We will undertake a series of Evaluation Portfolio Assessments, a process to generate recommendations on areas where evaluations should and could be conducted. These Evaluation Portfolio Assessments will identify where evaluation could generate insights for high-level decision-making, particularly in areas that might otherwise have been missed. We will continue to deliver demand-responsive thematic evaluation that reflects the evidence needs of the organisation as we deliver against our international priorities. We will also develop new mechanisms to provide financial and technical support for experimental impact evaluations for high priority FCDO activities.

4.2 Evaluation evidence is systematic and objective

It is vital that users have confidence in the findings generated from FCDO evaluations. Activities under this outcome include the implementation of the FCDO Evaluation Policy, which sets clear principles and minimum standards for all evaluations. Over the coming year, Evaluation Quality Assurance and Learning Service 2 (EQUALS 2) will be fully embedded and provide a professional and responsive quality assurance service that can support the full breadth of FCDO development and diplomatic activities. We will also develop a new Global Monitoring and Evaluation Framework Agreement, providing efficient access to pre-qualified suppliers. Finally, the Evaluation Unit will continue to develop and share guidance, templates and best practice to staff across the organisation.

4.3 Learning from evaluations is shared and used in decision-making

We will implement a range of actions to improve the accessibility and use of evaluation findings. This includes improving our internal management information on evaluations to strengthen our knowledge sharing and collaboration across teams. We will generate ‘evaluation insights’, learning documents which synthesise evaluative evidence. We will also work on our approaches to communications, developing mechanisms to share lessons and raising the visibility of evaluation findings, to ensure learning internally and with partners across HMG and globally.

4.4 FCDO has an evaluative culture, the right evaluation expertise and capability

This objective seeks to develop an FCDO that is sufficiently resourced with skilled advisers possessing up-to-date knowledge of evaluation, and in which minimum standards of evaluation literacy are mainstreamed across the organisation. We will develop capability through skills and training, drawing on our membership of the cross-government network of Government Social Research professionals.

Annex 1: List of evaluation reports published 2021 to 2022

Note that this is a list of reports published during this timeframe, not of evaluations completed. There may be delays in the publication of some evaluations, meaning that the list below includes evaluations that were completed before 2020 to 2021, and does not include some which were completed during this period but are pending publication.

| Project code | Project title |
|---|---|
| 202745 | Investments in Forests and Sustainable Land Use |
| 203429 | Zimbabwe Livelihoods and Food Security Programme |
| 203491 | Support to Bangladesh’s National Urban Poverty Reduction Programme (NUPRP) |
| 204242 | Skills for Employment |
| 204290 | Productive Safety Net Programme Phase 4 |
| 204369 | Corridors for Growth |
| 204399 | Skills Development Programme |
| 204564 | Power of Nutrition Financing Facility |
| 205128 | Somalia Humanitarian and Resilience Programme (SHARP) 2018-2022 |
| 205143 | Support to Refugees and Migration programme in Ethiopia |
| 300043 | Violence against Women and Girls: Prevention and Response |
| 300339 | Humanitarian Reform of the United Nations through Core Funding (2017-2020) |
| 202708 | Global Health Support Programme |
| 300288 | Overseas Development Institute (ODI) Fellowships Scheme Accountable Grant 2015-2020 |
| 202596 | Private Enterprise Programme Ethiopia |
| 300589 | African Union Support Programme |
| 204023 | Supporting Nutrition in Pakistan (SNIP) |
| 203878 | Support to the 2018 electoral cycle in Sierra Leone |
| 300627 | Good Governance Fund: Supporting Economic and Governance Reform in Armenia |
| 202697 | Punjab Education Support Programme II |
| 300143 | Hunger Safety Net Programme (HSNP Phase 3) |
| 202495 | Enterprise and Assets Growth Programme |
| 203473 | Productive Social Safety Net Programme |
| 300196 | Responding to Protracted Crisis in Sudan: Humanitarian Reform, Assistance & Resilience Programme |

Annex 2: Case studies

Innovative Ventures and Technologies for Development INVENT Global, India

Evaluation type: Performance Evaluation

Publication date: March 2021

Full report: https://iati.fcdo.gov.uk/iati_documents/59915608.odt

Management response: https://iati.fcdo.gov.uk/iati_documents/61262319.odt

The programme

The Innovative Ventures and Technologies for Development (INVENT) programme was a £37m programme funded by the UK Government, working with Indian institutions on innovation. The programme sought to encourage innovation amongst the private sector through the provision of investment capital and business development services, worth £18.8m, to innovative partnerships (‘enterprises’) in low-income states in India (INVENT Domestic) and £11m for enterprises in developing countries (INVENT Global). The evaluation covered the activities of INVENT Global, which tested the effectiveness of three funding instruments as mechanisms to spread India’s affordable innovation for developmental impact in low-income populations. These were:

- Millennium Alliance (MA): a structured challenge fund with broad sectoral approach, managed by the Federation of Indian Chambers of Commerce & Industry (FICCI). FCDO funds focused on health and agriculture, supporting 12 enterprises

- Grand Challenges (GC): managed by IKP Knowledge Park, GC focused on solutions to improve compliance with and adherence to tuberculosis treatment, through 3 enterprises supported by FCDO funds

- Connect to Grow (C2G): implemented by IMC Worldwide, this was a demand-led market facility focused on the health and agri-food sectors with more than 20 enterprises

The evaluation

The objectives of the performance evaluation were to: (i) assess, compare and learn from the three main instruments being tested by INVENT Global; (ii) assess the extent to which INVENT Global had delivered against objectives; (iii) strengthen overall partnerships between India and other countries on innovation; and (iv) demonstrate that Indian innovations can be piloted. The performance evaluation took a theory-based approach in which hypotheses of transformational change were established, tested and linked to learning cycles. The evaluation took a sample of 11 (pilot) innovation enterprises for assessment from the 35 funded by FCDO.

The evaluation found that, overall, the theory of change underpinning the INVENT programme remained relevant and valid. INVENT significantly reduced innovation and solution exploration costs. FCDO’s role in supporting innovation transfers across countries was significant, if not unique, and FCDO was both opportunistic and strategic in engaging with the three instruments, leveraging existing platforms and organisations. Innovation transfer through systematic and institutionalised facilitation was found to be a crucial ingredient for success. The evaluation also examined value for money (VfM) and found positive potential return in some areas, for example within the Millennium Alliance (MA) component of the programme. However, the value add of other mechanisms was less clear, especially the Connect to Grow (C2G) component. The C2G component brought in high levels of expertise, documentation and monitoring; however, the operating model limited its ability to meet the ongoing requirements of enabling enterprises, engaging with the ecosystem and following an adaptive learning process.

The impact: how recommendations were used

The team acted on the evaluation recommendations to conclude the C2G component, reconsider the Grand Challenge, and continue the Millennium Alliance.

On the back of this programme, a newer and bigger programme called Global Innovations Partnership was designed, incorporating many lessons from the evaluation. The evaluation found that capital is critical in social ventures: the new programme therefore has three arms of capital funding at different amounts. The evaluation also recommended substantial improvement in the M&E system: the new programme will have a separate Monitoring and Evaluation Impact facility with about 5% of the programme spend budgeted, and with shorter learning cycles to enable better flexibility. Finally, the evaluation found that philanthropic capital can start the expansion in a new country and then patient capital by private and commercial investors can follow: the new programme is a 14-year programme with patient capital, combining grants and capital.

The Punjab Education Sector Programme (PESP II)

Evaluation type: Performance Evaluation

Publication date: October 2021

Full report: https://iati.fcdo.gov.uk/iati_documents/90000014.pdf

Management response: https://iati.fcdo.gov.uk/iati_documents/90000013.pdf

The programme

The Punjab Education Sector Programme (PESP II) ran from January 2013 to March 2022, with the aim of supporting the Government of Punjab (GoPb) to reform and transform delivery of education in Punjab, providing equitable access to better quality education across the province. The programme budget was £437.1 million, channelled through the Government of Punjab, the World Bank, the private sector and local civil society organisations. It had 6 thematic outputs delivered through 16 components throughout the programme lifecycle:

- strong school leadership and accountability

- better teacher performance and better teaching

- high quality school infrastructure

- improved access to schools especially in priority districts

- top political leadership engagement on education reforms

- high quality technical assistance to government stakeholders

It supported activities in all 36 districts of Punjab, but with a particular focus on districts with greater need for reforms.

The evaluation

The performance evaluation of PESP II, carried out from August 2017 to March 2020, was based on a conceptual framework derived from the 2018 World Development Report, which is itself based on a comprehensive review of global evidence for assessing the effectiveness and functionality of systems of education. This framework identifies four key school-level ingredients for learning: prepared learners; effective teaching; learning-focused inputs; and skilled management and governance.

The evaluation focused on 2 levels of evaluation questions (EQs):

- Level One EQs relate to understanding the performance of the education system in Punjab over the period of the PESP II programme, and the factors that have determined this performance

- Level Two EQs relate to understanding the contribution of the PESP II project components (individually and collectively) to the progress tracked and analysed by the Level One EQs

The evaluation found that the programme was well designed to support structural and system changes and helped to increase access to education and learning outcomes with a focus on inclusion and gender. The most successful components were around access to education through public private partnership and the programme’s roadmap approach to meet high level results on participation rates and learning outcomes.

The impact: how recommendations were used

The evaluation findings were shared widely through relevant networks and teams within FCDO, the Government of Punjab (GoPb) and development partners. The findings were used in discussions about FCDO’s approach to education reforms in Punjab and nationwide, and to help develop a new programme, the Girls and Out of School Action for Learning (GOAL) programme for Pakistan.

Progress can already be seen on some specific responses to the evaluation’s recommendations. For example: the GoPb is currently developing its education policy with a focus on gender, equity and inclusion (recommendation 1); decentralisation has already taken place in the education department; and there is a continued focus on capacity building (recommendation 8). In response to challenges posed by COVID-19, FCDO and GoPb collaborated to initiate and implement the Taleem-Ghar initiative (a type of online learning) and a new Ed-Tech policy which supported provision of education through electronic media (recommendation 9). In the successor programme, GOAL, FCDO has incorporated a stronger focus on the enrolment of marginalised children (mainly girls and religious minorities), teaching quality, performance and accountability, improved data collection, and collaboration with FCDO’s Data and Research Evidence programme (recommendation 3).

The lessons learned from the programme evaluation will continue to shape FCDO’s future thinking and actions for education reform programmes across the world.

Development Impact Bonds

Evaluation type: Qualitative, contribution analysis

Publication date: February 2021

Full report: https://iati.fcdo.gov.uk/iati_documents/60354735.pdf

Management response: https://iati.fcdo.gov.uk/iati_documents/90000274.pdf

The programme

A Development Impact Bond (DIB) is a mechanism for drawing external finance into payment-by-results (PbR) projects. In a DIB, an outcomes funder (for example, a donor, philanthropic fund or government) commits to paying for development results if and when they are achieved. A service provider delivers an intervention intended to yield the pre-defined results, supported by a third-party investor who finances the upfront costs of delivery. If the programme is successful, the outcomes funders repay the investors their original investment, and the repayment may include a financial return. The FCDO’s DIB pilot programme runs from June 2017 to March 2023 and funds 3 innovative schemes:

- Humanitarian Impact Bonds (HIB) for the building of three new physical rehabilitation centres in Mali, Nigeria and the Democratic Republic of Congo (DRC)

- Micro-Enterprise Poverty Graduation Impact Bonds raising the incomes of the extreme poor through developing micro businesses

- Quality Education India which aims to improve education outcomes for primary school aged children in India
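The payment logic of a DIB described above can be sketched in a few lines of Python. This is a deliberately simplified, hypothetical model rather than the terms of any FCDO-funded bond: it assumes a single all-or-nothing outcome, and the class name, function name, investment amount and return rate are all illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class ImpactBond:
    """Hypothetical, simplified model of a single-outcome impact bond."""
    upfront_investment: float  # paid by the investor to fund delivery
    agreed_return_rate: float  # illustrative assumption, e.g. 0.05 for 5%


def outcomes_funder_payment(bond: ImpactBond, results_achieved: bool) -> float:
    """Amount the outcomes funder pays the investor after verification."""
    if not results_achieved:
        # No verified results: the outcomes funder pays nothing and the
        # investor bears the loss of the upfront investment.
        return 0.0
    # Verified results: original investment plus the agreed return.
    return bond.upfront_investment * (1 + bond.agreed_return_rate)


# Illustrative figures only, not taken from the DIB pilot programme.
bond = ImpactBond(upfront_investment=1_000_000, agreed_return_rate=0.05)
print(outcomes_funder_payment(bond, results_achieved=True))
print(outcomes_funder_payment(bond, results_achieved=False))
```

The sketch makes the transfer of risk explicit: the outcomes funder only pays when results are verified, which is why independent evidence of impact matters for pay-out decisions. Real impact bonds may pay out in stages or in proportion to the results achieved.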

The evaluation

Evaluation has been critical to both implementation and learning from the programme. Randomised Controlled Trials (RCTs) were used to ascertain that results were additional to business as usual. In addition, a programme-level evaluation focused on drawing out lessons on the overall effect of using a DIB instead of a grant or other payment-by-results mechanism.

Results of the first DIB evaluation were recently published covering the Village Enterprise poverty graduation program in Kenya and Uganda (running between November 2017 and December 2020). The RCT found a positive and statistically significant impact on monthly consumption for households and net assets which provided the basis for a pay-out.

The programme-level evaluation found that DIBs can cultivate a stronger outcome-focussed culture and increased performance management by delivery partners, which in turn can lead to more and better-quality outcomes being achieved for higher numbers of programme beneficiaries. This can have a positive effect on motivation, but carries a risk of overwhelming organisations and staff, which could affect morale. Collaboration between stakeholders was also strengthened through the impact bond partnership. While some of these effects were also found in the comparator sites, DIB participants believe they are stronger for the DIB programmes.

The impact: how recommendations were used

The DIBs Pilot programme team relied on the evaluation findings in their decisions, for example:

1) using robust RCT evidence to discern impact in order to provide a basis for pay-outs;

2) using the evidence to inform FCDO’s future policy direction on outcome-based financing.

Based on positive indications of the efficiency and effectiveness of the impact bond model, a significantly scaled up programme was launched in 2020 to develop the outcomes-based financing ecosystem. The Pioneer Outcomes Fund programme seeks to build on learning from the DIBs pilot to overcome common limitations of the model and barriers to scale. As a result, the team have focused attention on setting up pooled outcome funds involving multiple donors (including domestic governments) to mobilise outcomes funding at a much larger scale, and on building the capacity of market players, including investors and service providers, to develop and deliver outcomes-based programmes. The team also rely on the evidence from the evaluation to provide technical advice and assistance internally to country offices and thematic teams across the FCDO who are looking to integrate outcomes-based approaches into their programmes.

Delivering emergency COVID-19 cash transfers in Kenya

Evaluation type: Impact evaluation and process review

Publication date: December 2021

Full report: https://iati.fcdo.gov.uk/iati_documents/90000186.pdf

Management response: https://iati.fcdo.gov.uk/iati_documents/90000188.pdf

The programme

In response to the growing COVID-19 crisis in Kenya, the UK government supported a three-month cash transfer (CT) of 4,000 Kenyan shillings (approximately £27) per month to 52,700 vulnerable people living in the informal settlements in Nairobi and Mombasa. The programme, which started in October 2020, was implemented by a consortium led by GiveDirectly, with support from community-based organisations operating within the informal settlements. The monthly stipend was paid using mobile money, and remote targeting methods were used to identify beneficiaries. The programme’s aim was to support beneficiaries to buy food or meet other high-priority needs - such as purchasing water, paying for medical care, or making rent payments - as well as to reduce the use of negative coping strategies (for example, selling assets or borrowing money).
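As a rough illustration of scale, the transfer values quoted above can be multiplied out. The short Python sketch below assumes that every one of the 52,700 recipients received all three monthly payments, and it ignores delivery and administration costs. The resulting totals are simple arithmetic on the figures in the text, not amounts reported by the programme or the evaluation.

```python
# Figures taken from the programme description above.
MONTHLY_TRANSFER_KES = 4_000
MONTHLY_TRANSFER_GBP = 27  # approximate sterling equivalent given in the text
MONTHS = 3
RECIPIENTS = 52_700

# Assumption: every recipient receives all three monthly payments.
per_person_kes = MONTHLY_TRANSFER_KES * MONTHS
per_person_gbp = MONTHLY_TRANSFER_GBP * MONTHS
total_kes = per_person_kes * RECIPIENTS
total_gbp = per_person_gbp * RECIPIENTS

print(f"Per person: KES {per_person_kes:,} (approx. £{per_person_gbp:,})")
print(f"All recipients: KES {total_kes:,} (approx. £{total_gbp:,})")
```

Under these assumptions each recipient would receive KES 12,000 (about £81), and the nominal value of all transfers would be KES 632.4 million (about £4.3 million).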

The evaluation

The objective of the evaluation was to determine whether, and to what extent, the support funded by the UK through emergency COVID-19 CT had a positive effect on the targeted beneficiaries in the informal settlements where it was implemented. The evaluation also sought to provide insights on implementation and operational elements that were adopted in the design and delivery of the intervention. The evaluation was structured around two separate components - an impact evaluation and a process review - and drew on a mixed methods research framework.

Overall, the impact evaluation found that the COVID-19 CT had a discernible positive impact on food security and reduced the use of negative coping strategies. Beneficiaries’ employment status and related income sources also improved, and some of these improvements appear to be associated with the CT.

With regards to implementation, the process review found that:

- the COVID-19 CT was relevant for the target population and the amount and timing of the CT were also appropriate, although the delay to implementation may have weakened some of its effectiveness

- the targeting approach used was appropriate in the context of the crisis, but the loosely defined targeting criteria and provision of low-quality data led to high levels of rejection from the programme which negatively affected equity

- the payment mechanism based on mobile money transfers was effective in delivering cash relatively easily, cheaply, quickly, and directly to those in need, while minimising opportunities for corruption; however, some of the most vulnerable were excluded due to a lack of access to the mobile technology

- coordination on specific aspects of CT implementation between NGOs and government stakeholders remains weak; more could be done to develop existing social protection systems within the Government of Kenya to enhance coordination and improve future shock responses

The impact: how recommendations were used

The evaluation has provided a number of wider policy implications, which are useful for all stakeholders involved in the COVID-19 CT – including the Government of Kenya, FCDO and other development partners, GiveDirectly and their partners or other NGOs – to improve the design and implementation of shock-responsive CTs within the context of Kenya’s social protection system. The evaluation results have been shared with the joint Government of Kenya/Development Partner sectoral working group for social protection and will inform priority areas of action to strengthen future shock responsiveness of the system and support Kenya to ensure that necessary arrangements are in place in advance of a crisis. Broader lessons include:

- the use of an emergency cash transfer (CT) is an appropriate tool to deal with the most severe consequences of large, sudden, and long-lasting shocks such as the COVID-19 pandemic

- effective use of social protection to respond to shocks requires ex-ante preparation in order to facilitate swift and efficient action at the onset of a crisis

- non-government actors need support and coordination from the government

- mobile technology, such as M-PESA (mobile money), can provide an efficient and effective payments system

- [footnote 1] FCDO Evaluation Strategy, July 2022 https://assets.publishing.service.gov.uk/government/uploads/system/uploads/attachment_data/file/1087411/FCDO_Evaluation_Strategy_June_2022.pdf

- [footnote 3] The research team highlighted that further research would be needed to understand what it is about volunteers versus teachers that makes the difference.

- [footnote 4] Conference sessions available to view here: https://cedilprogramme.org/cedil-2022-conference

- [footnote 6] Based on data from Aid Management Platform (AMP), Procurement and Commercial Department and EQUALS.

- [footnote 7] This figure relates to the primary sectoral focal area as defined by the OECD DAC, though some evaluations cover more than one sector. http://www.oecd.org/dac/stats/methodology.htm

- [footnote 8] Several evaluations were non-specific in terms of country focus or categorised as ‘Global’. These, and those classified as regional evaluations, are not represented in Figure 2. Benefiting locations for each evaluation can be found on the relevant programme devtracker page.

- [footnote 9] Includes Terms of Reference, baseline, inception reports, mid-term, and end-term evaluation reports.

- [footnote 10] Data excludes Prosperity Fund products.

- [footnote 11] Some of these evaluations were published in Financial Year 20-21 but have been included here as we have more information on their impact.