Online standardisation of assessors: effective practice identified through research

Published 9 January 2020

Applies to England

1. Introduction

1.1 This document

This document sets out the key elements of effective practice identified in our research, published in 2018, into the processes involved in online standardisation of assessors. Standardisation prepares assessors for marking candidates’ responses to assessment questions.

1.2 Scope

This summary of effective practice identified in our research applies to the training of assessors after they have been recruited and before they carry out live marking of candidate responses. The quality assurance of marking once assessors are marking live candidate responses is outside the scope of this summary.

1.3 Context

Condition H1.1 of our General Conditions of Recognition states the following:

For each qualification which it makes available, an awarding organisation must have in place effective arrangements to ensure that, as far as possible, the criteria against which Learners’ performance will be differentiated are:

a) understood by Assessors and accurately applied, and

b) applied consistently by Assessors, regardless of the identity of the Assessor, Learner or Centre

In Condition J1.8 we define an Assessor as follows:

A person who undertakes marking or the review of marking. This involves using a particular set of criteria to make judgements as to the level of attainment a Learner has demonstrated in an assessment.

Our rules for GCSE, GCE, Project and Technical Qualifications include specific requirements for assessors to be provided with training before carrying out live marking.

The training of assessors is sometimes called standardisation. This can be in the form of face to face training or can take place remotely. Online standardisation is one approach an awarding organisation may take. It involves standardising assessors remotely using technology.

Ofqual carried out a project aimed at gaining a deeper understanding of the processes involved in online standardisation and identifying practices that could be implemented to improve the experience and performance of assessors. We reported our research findings into online standardisation in November 2018.

We invited comments on the key elements of effective practice identified in the research from awarding organisations who currently operate online standardisation. Their feedback has been incorporated in the content set out below where appropriate.

A glossary of key terms relevant to the practice of online standardisation is included at the end of the document.

2. Four phases of online standardisation

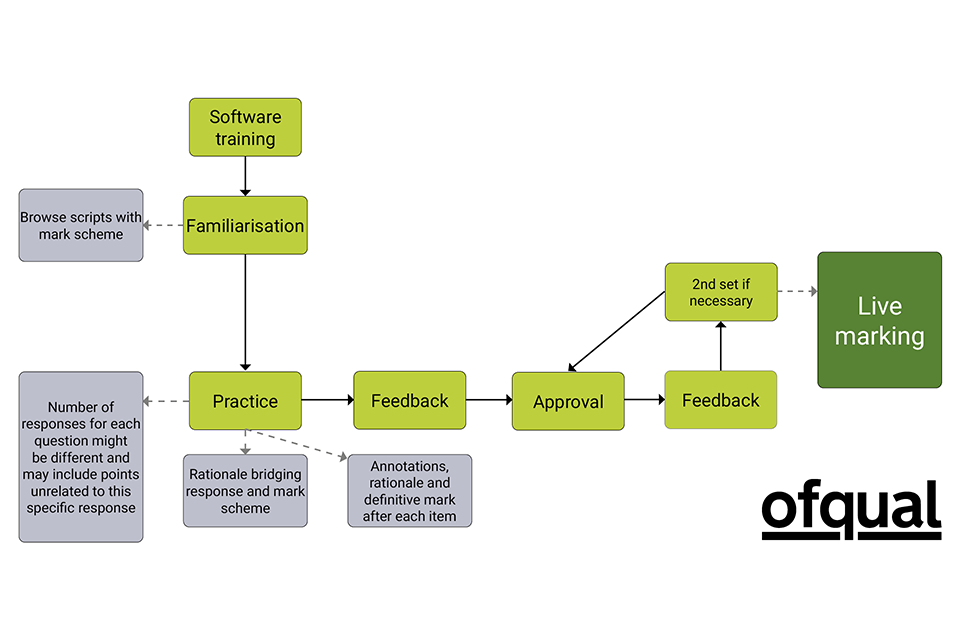

Our 2018 research identified that effective models of online standardisation include the four distinct phases set out below.

2.1 Training to use technology for standardisation

Purpose:

The assessor understands the technology and how to use it in order to get the most out of the standardisation activities and to maximise their learning prior to live marking.

Key elements:

- The awarding organisation provides instruction and orientation to the assessor on the use of the online standardisation technology.

- The assessor practises using the technology and all aspects of its functionality.

- The awarding organisation considers whether any adjustments need to be made to allow the assessor to use the technology effectively, including any reasonable adjustments required to meet the needs of disabled assessors.

2.2 Familiarisation

Purpose:

The assessor gains familiarity with the questions, the nature of students’ responses and the criteria against which responses are to be judged (the ‘mark scheme’).

Key elements:

- The assessor reviews the mark scheme and browses actual responses (scripts or items).

- Neither a definitive mark nor a rationale for the definitive mark is made available to the assessor at this stage.

2.3 Practice

Purpose:

- This is the main training phase. Assessors learn how to apply the mark scheme accurately through repeated iterations of applying the mark scheme to responses and receiving feedback.

- Through automated and personal feedback, the assessor learns where they have and have not awarded the definitive mark (or a mark within tolerance of the definitive mark) and how their basis for marking judgements does or does not accord with the rationale for the definitive mark. The assessor should also be able to learn when they have given the same mark as the definitive mark, but on a basis other than was intended (as set out in the rationale).

Key elements:

- The assessor receives a mark scheme and logs into their marking system to mark practice scripts or items.

- The assessor marks an appropriate number of responses. The appropriate number will depend mainly on the question or response type. The greater the anticipated variety or range of potential responses, the greater the number of practice items or scripts required. For example, for more constrained items (usually with shorter responses) a small number may be appropriate, whereas for longer and more complex responses, a greater number is likely to be appropriate to ensure assessors have been trained on applying the mark scheme to a range of responses of different quality and differing natures.

- The practice responses marked during this phase exemplify the mark scheme content, criteria and principles.

- The assessor submits marks and, in response, sees automated feedback. This includes annotation overlay on the scripts identifying the location/rationale of the definitive mark points, as well as a written rationale explaining why the marks were awarded [see section 3.1].

- The assessor receives personal feedback from their team leader [see section 3.2]. As well as helping the assessor identify how to improve their own marking, this feedback also reinforces good quality of marking more generally.

2.4 Approval

This stage is sometimes referred to as ‘qualification’ by awarding organisations.

Purpose:

The assessor demonstrates required competence in the application of the mark scheme in order to be permitted to progress to live marking.

Key elements:

- The awarding organisation sets the criteria an assessor must meet in order to be approved to progress to live marking, and may apply an appropriate tolerance which reflects the nature of the marking.

- The approval criteria set by the awarding organisation are consistently applied to the assessor cohort.

- The assessor marks a ‘test’ set of responses, in order to test the assessor’s competence in the accurate application of the mark scheme.

- If the assessor submits marks in line with (or where appropriate, within agreed tolerance of) the definitive marks, they may proceed to live marking.

- The assessor receives personal feedback on their performance after marking the test set of responses. This is important because, as with the practice phase, it is possible for the assessor to give the same mark as the definitive mark, but on a different, or even wrong, basis. It is important to identify this, because awarding marks on the wrong basis will be problematic in live marking.

- The awarding organisation may choose to allow an assessor more than one attempt to pass the approval phase.

- The awarding organisation may choose to require daily approval of assessors for live marking where they consider this is appropriate.
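As an illustration only, the mark comparison in the approval phase could be sketched as below. The function names, the single tolerance value and the rule that every test response must fall within tolerance are assumptions made for this example; each awarding organisation sets its own approval criteria. A mark within tolerance does not show that it was awarded on the intended basis, which is why personal feedback remains part of this phase.

```python
def within_tolerance(assessor_mark, definitive_mark, tolerance=0):
    """Return True if the assessor's mark is within tolerance of the definitive mark."""
    return abs(assessor_mark - definitive_mark) <= tolerance


def may_proceed_to_live_marking(assessor_marks, definitive_marks, tolerance=0):
    """Hypothetical approval rule: every test response must be within tolerance.

    A real awarding organisation may set different criteria, for example
    a different tolerance for each item.
    """
    if len(assessor_marks) != len(definitive_marks):
        raise ValueError("Each test response needs one assessor mark and one definitive mark")
    return all(
        within_tolerance(given, definitive, tolerance)
        for given, definitive in zip(assessor_marks, definitive_marks)
    )


# Made-up marks for a test set of three responses
definitive = [3, 5, 12]
assessor = [3, 4, 13]

print(may_proceed_to_live_marking(assessor, definitive, tolerance=1))  # True
print(may_proceed_to_live_marking(assessor, definitive, tolerance=0))  # False
```

A tolerance of 0 corresponds to items where the only acceptable mark is the definitive mark.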

3. Feedback - effective practice

3.1 Automated feedback during the practice phase

Our research found that effective online standardisation included feedback that is software facilitated, in the form of a clearly written rationale of how marks have been awarded and through annotation overlay. Key elements of effective practice identified are set out below.

- Automated feedback is available for all responses, immediately after a mark is submitted. For a question with multiple sub-sections, it may be appropriate for the feedback to appear when the assessor has marked all sections of the question. Providing automated feedback only at the end of a script may not be as effective as providing it after each question.

- Feedback includes the definitive mark and a rationale explaining how that mark was arrived at. The rationale may be of greater importance for extended responses.

- Assessors are required to review the feedback regardless of whether their given mark matched the definitive mark. This is important because it is possible that the assessor gave the same mark as the definitive mark, but on the wrong basis.

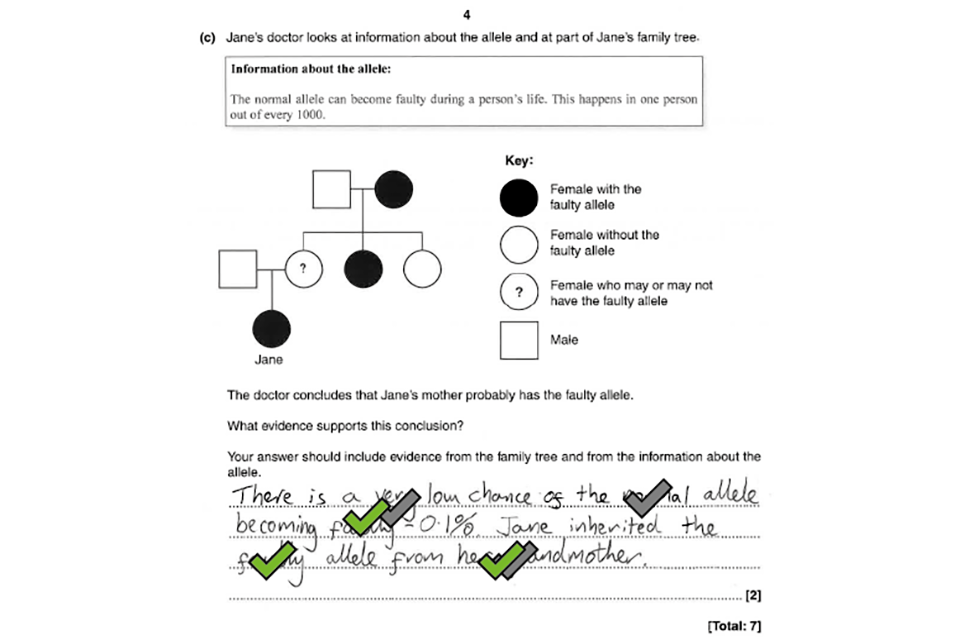

- Annotation overlay is a good, simple way to make complex messages easy to understand and to see where there is agreement/disagreement between definitive marks awarded and assessor marks awarded.

In the example below, a points-based mark scheme, the definitive marks are illustrated by green ticks, and the assessor’s marks by grey ticks. You can see that the assessor has awarded three marks, but one mark has been awarded incorrectly as illustrated by the green tick being in a different position.

- Automated feedback is easy to access in terms of number of clicks and visibility of icons and text. The system presents the script responses, the annotation overlay and rationale in a single view.

In the example below the assessor has awarded only two marks but should have awarded three, as illustrated by the three green ticks. This example also includes a rationale for the marks awarded in the box labelled ‘definitive comment’.

- It may be useful for the software to facilitate easy access to the relevant part of the mark scheme.
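The comparison that annotation overlay presents can be sketched, purely as an illustration, as a set comparison for a points-based mark scheme. The mark point labels (`P1`, `P2` and so on) and the function name are hypothetical; real marking software will represent annotations differently.

```python
def compare_mark_points(assessor_points, definitive_points):
    """Compare where the assessor awarded marks with where the definitive marks sit.

    Each argument is a collection of hypothetical mark point labels,
    one label per mark awarded.
    """
    assessor = set(assessor_points)
    definitive = set(definitive_points)
    return {
        "agreed": sorted(assessor & definitive),
        "missed": sorted(definitive - assessor),
        "awarded_in_error": sorted(assessor - definitive),
        # Same total mark as the definitive mark
        "marks_match": len(assessor) == len(definitive),
        # Same total mark AND awarded for the same points
        "basis_matches": assessor == definitive,
    }


# The assessor awards three marks, but one is in the wrong place
feedback = compare_mark_points({"P1", "P2", "P4"}, {"P1", "P2", "P3"})
print(feedback["marks_match"])    # True
print(feedback["basis_matches"])  # False
```

Here the totals agree but the basis does not. This is the case the guidance highlights, where an assessor gives the same mark as the definitive mark on a basis other than the one intended.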

3.2 Personal feedback

Our research showed that effective online standardisation included personal feedback, in addition to automated feedback. Key elements of effective practice identified are set out below.

- The feedback is bespoke and specific, covering how the assessor marked particular responses and referring to specifics of the mark scheme. Bespoke feedback is more useful than generic feedback.

- Feedback is timely – ideally within 24 hours so that the assessor can easily recall their reasoning for the mark given.

- Verbal communication: a discussion by telephone or video conferencing is useful because it provides the assessor with an opportunity to raise concerns or queries.

- Written communication: email or other form of written communication (e.g. message facilitated by the software) may also be useful as it provides a written record for reference.

- A combination of both verbal and written communication is likely to be the best approach.

- The assessor is encouraged to seek additional feedback if particular aspects of the mark scheme and/or its application are not well understood.

- Feedback is provided as a minimum between the practice and approval phases. It is also helpful if feedback is provided during the practice phase and after the approval phase.

4. Glossary

A glossary of key terms used in this publication.

Annotation overlay: this is a form of automated feedback that presents graphically where the assessor has awarded marks compared with where the definitive marks are awarded.

Definitive mark: the mark awarded by the principal assessor, which in a hierarchical marking system is usually considered to be the most appropriate mark. In points-based marking, it is likely that the definitive mark is the only possible correct mark (ie no other mark would reflect an acceptable application of the mark scheme). In more holistic marking (levels-based marking), it is possible there is a small range of acceptable marks which also reflect an acceptable application of the mark scheme.

Scripts: candidate responses on a question paper. Assessors may mark whole scripts or individual items from scripts.

Standardisation: training of assessors to apply the marking standard consistently to candidate responses.

Rationale: explanation of how and why marks are awarded.

Tolerance: this is a numerical expression or approximation of acceptable mark variation from the definitive mark. For some marked items, a tolerance of 0 is appropriate where the only acceptable mark is the definitive mark.

5. Typical process

This flowchart illustrates a typical process as described above.